Improving Teleoperator Efficiency Using Position–Rate Hybrid Controllers and Task Decomposition

Abstract

1. Introduction

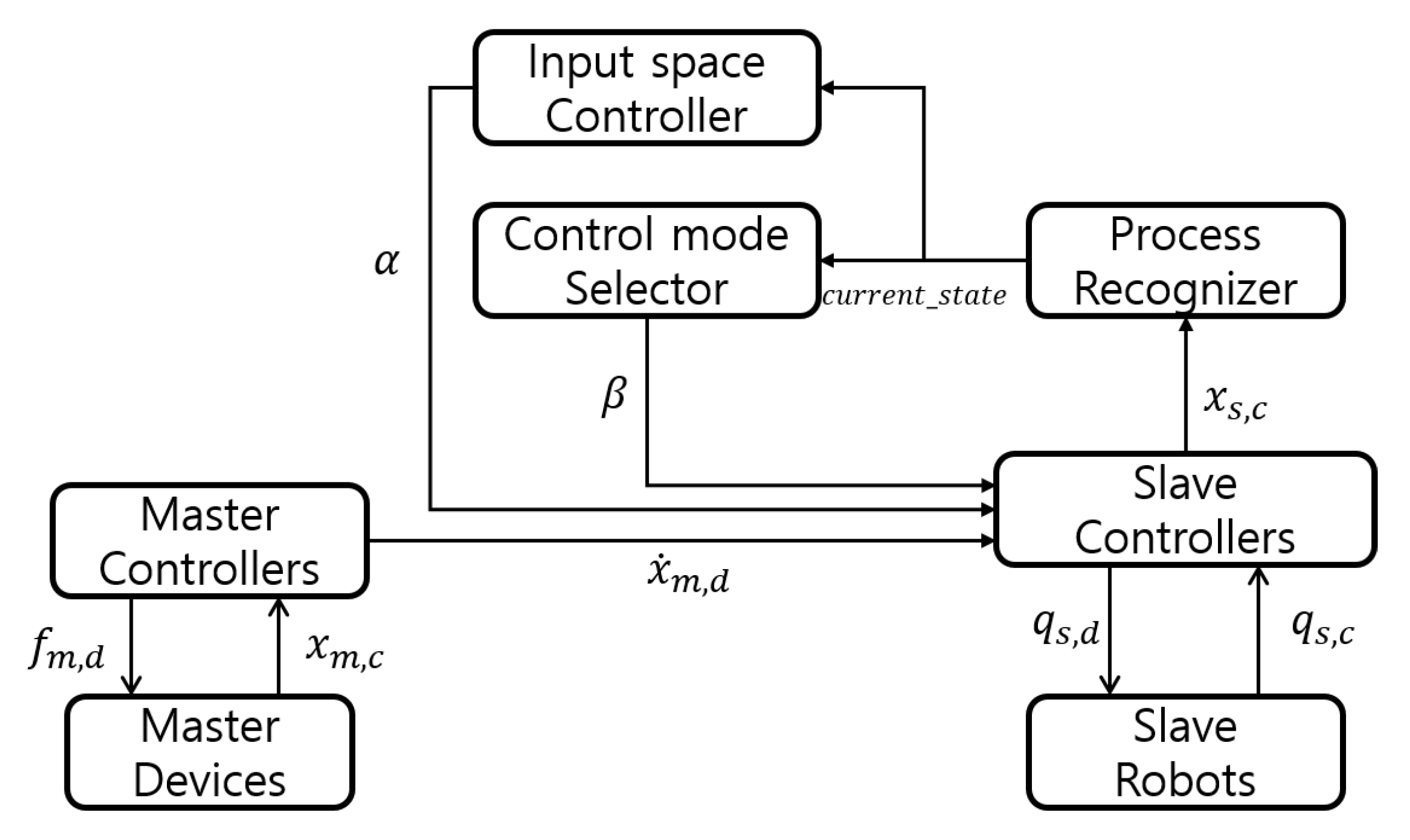

2. Control Method

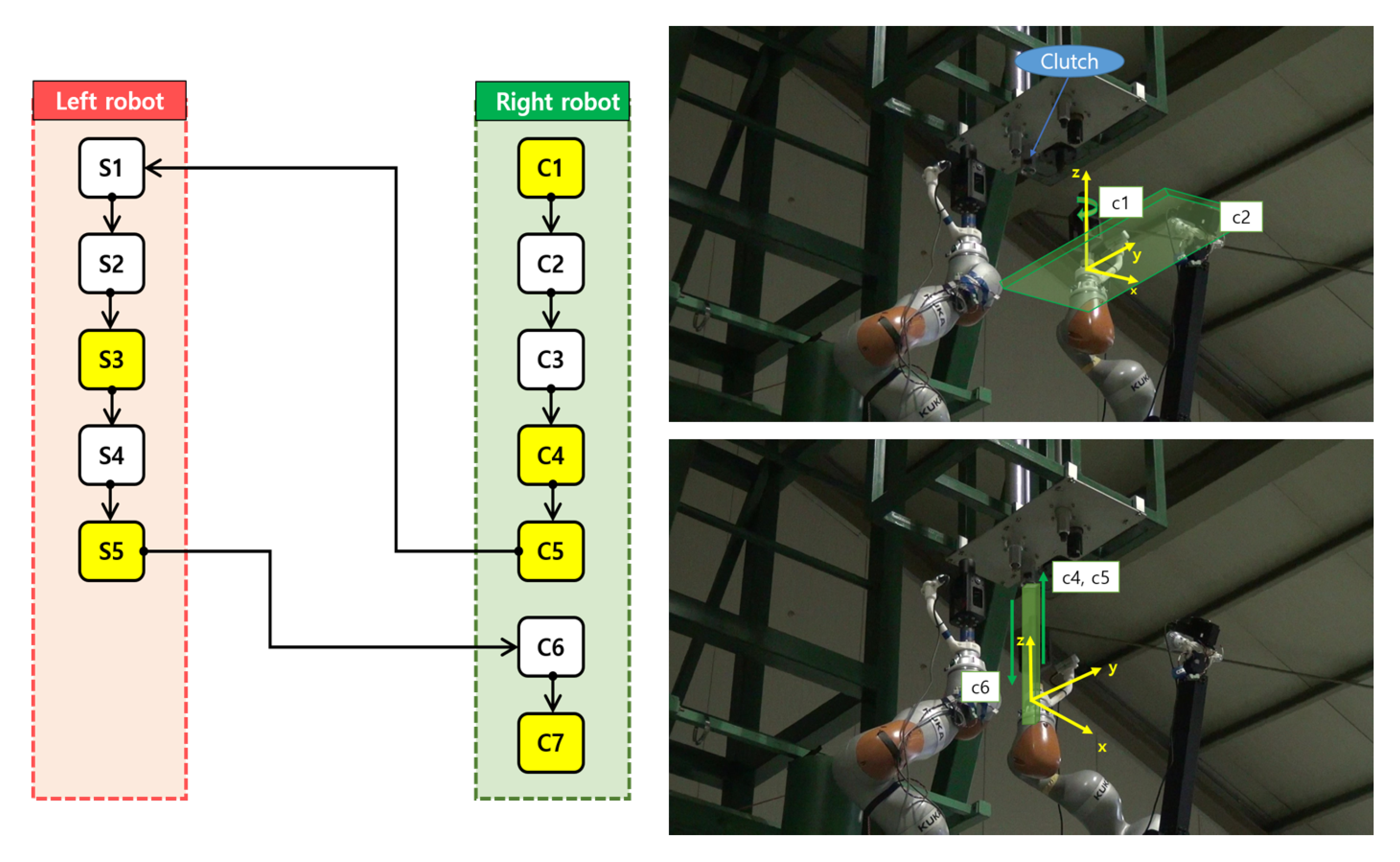

2.1. Madmofem Task

- C1: Align the rotation axis of the right robot and the clutch.

- C2: Align the x- and y-axes of the right robot and the clutch.

- C3: Move the right robot in the z-axis direction to approach the clutch.

- C4: Insert the tool into the clutch through fine manipulation of the right robot.

- C5: Standby.

- S1: Align the x- and y-axes of the left robot and the shaft.

- S2: Move the left robot in the z-axis direction to approach the shaft.

- S3: Insert the tool into the shaft through fine manipulation of the left robot.

- S4: Remove the tool from the shaft with the left robot.

- S5: Standby.

- C6: Remove the tool from the clutch with the right robot.

- C7: Task finished.
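The subtask list above reads as a fixed sequence that switches from the right robot (C steps) to the left robot (S steps) and back. As a minimal sketch, the order can be written as a lookup table. The names `SUBTASKS`, `next_subtask`, and `arm_for` are hypothetical, and mapping every C label to the right robot and every S label to the left robot (including the standby and finished steps) is an assumption drawn from the wording of the list, not something stated elsewhere in the text.

```python
from typing import Optional

# Subtask labels and descriptions, in the order they appear in the list above.
SUBTASKS = [
    ("C1", "Align the rotation axis of the right robot and the clutch"),
    ("C2", "Align the x- and y-axes of the right robot and the clutch"),
    ("C3", "Move the right robot in the z-axis direction to approach the clutch"),
    ("C4", "Insert the tool into the clutch (fine manipulation, right robot)"),
    ("C5", "Standby"),
    ("S1", "Align the x- and y-axes of the left robot and the shaft"),
    ("S2", "Move the left robot in the z-axis direction to approach the shaft"),
    ("S3", "Insert the tool into the shaft (fine manipulation, left robot)"),
    ("S4", "Remove the tool from the shaft with the left robot"),
    ("S5", "Standby"),
    ("C6", "Remove the tool from the clutch with the right robot"),
    ("C7", "Task finished"),
]

LABELS = [label for label, _ in SUBTASKS]


def next_subtask(label: str) -> Optional[str]:
    """Return the label that follows `label`, or None after the last step."""
    i = LABELS.index(label)  # raises ValueError for an unknown label
    return LABELS[i + 1] if i + 1 < len(LABELS) else None


def arm_for(label: str) -> str:
    """Assumed mapping: C steps use the right robot, S steps the left robot."""
    return "right" if label.startswith("C") else "left"
```

For example, `next_subtask("C5")` gives `"S1"`, which is the point where control passes from the clutch steps to the shaft steps, and `next_subtask("S5")` gives `"C6"`, where it passes back.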

2.2. Position Control Mode

2.3. Rate-Control Mode

2.4. Position-Rate Hybrid Teleoperation Control

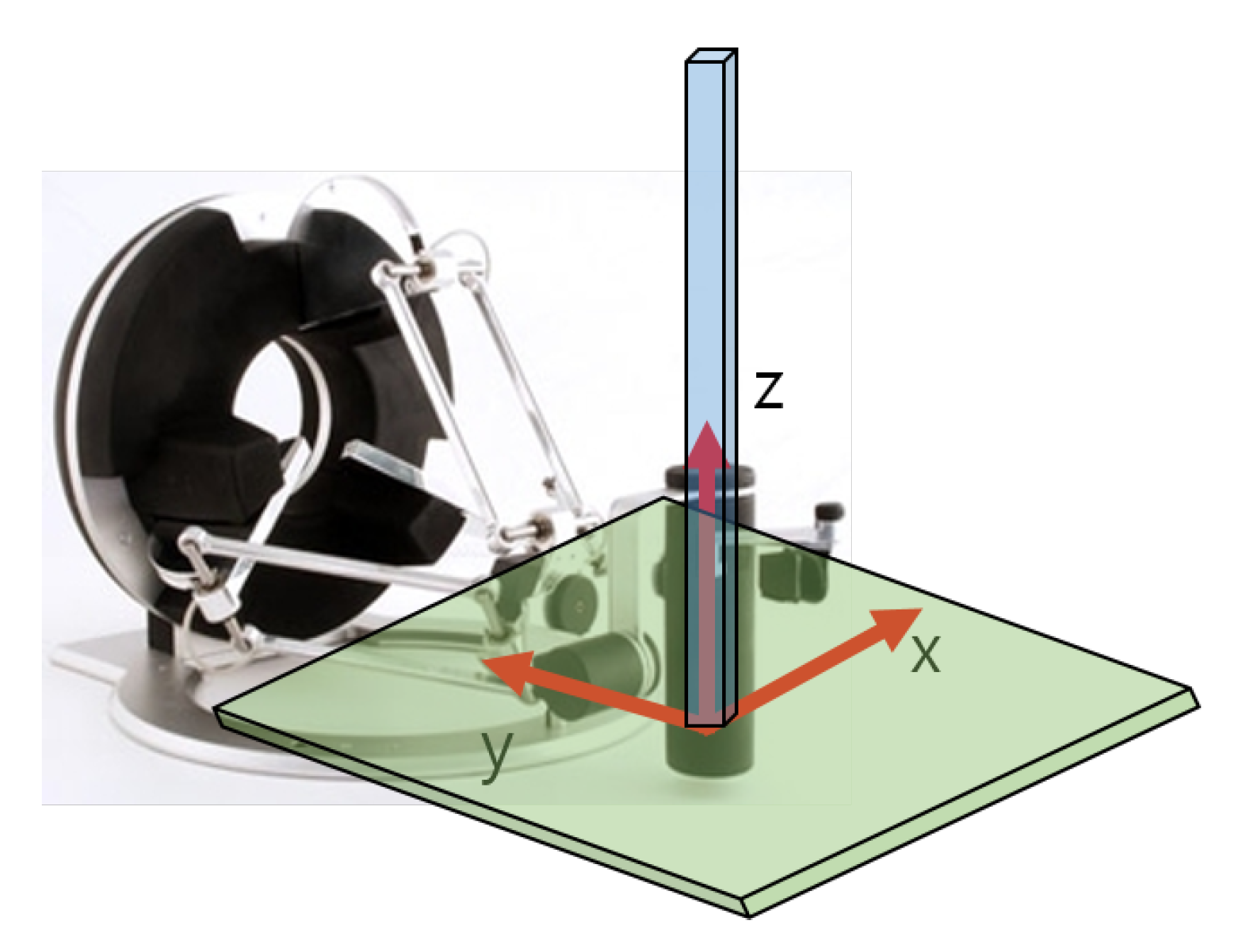

2.5. Minimizing the Operating Degree of Freedom

3. Experiments

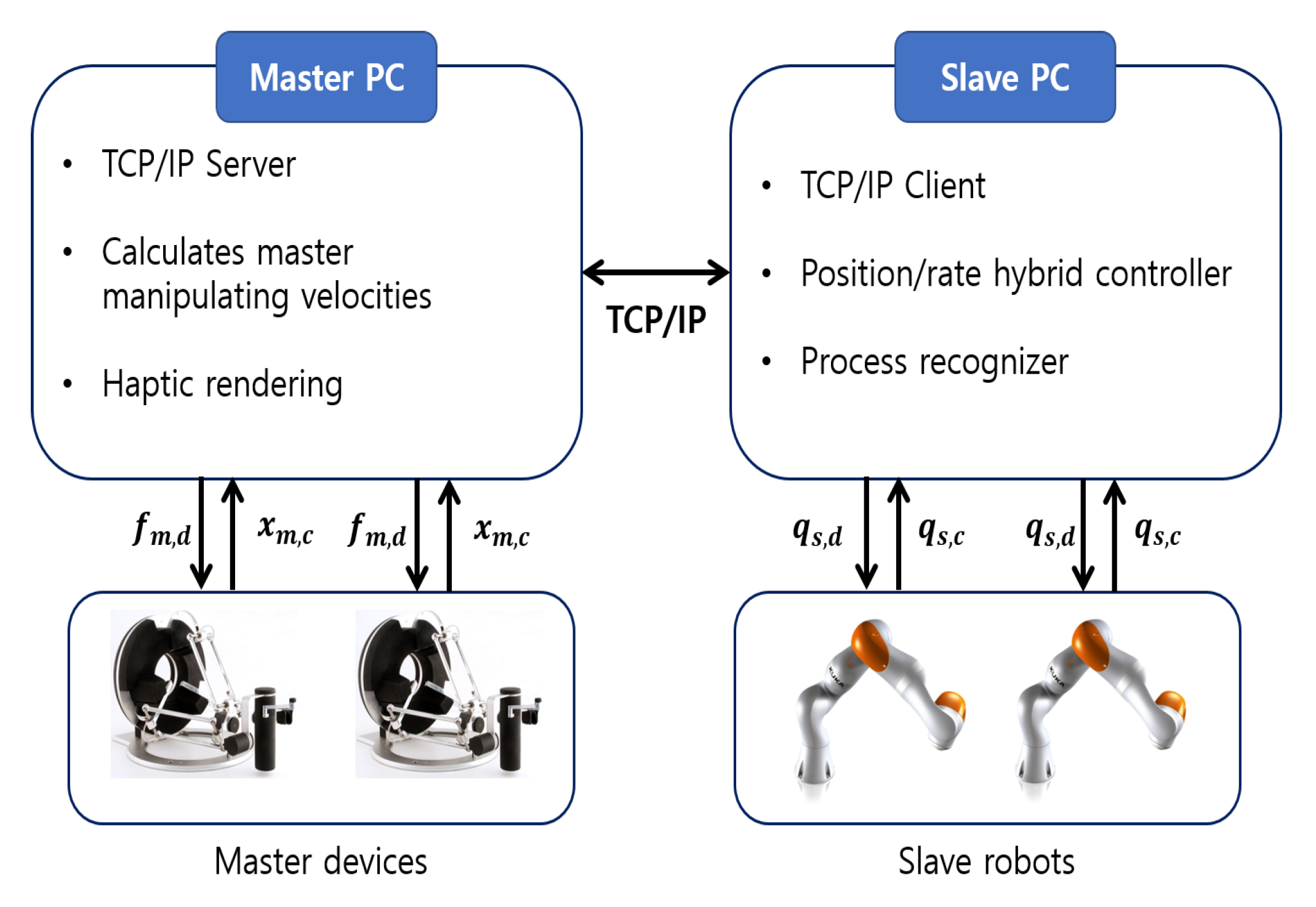

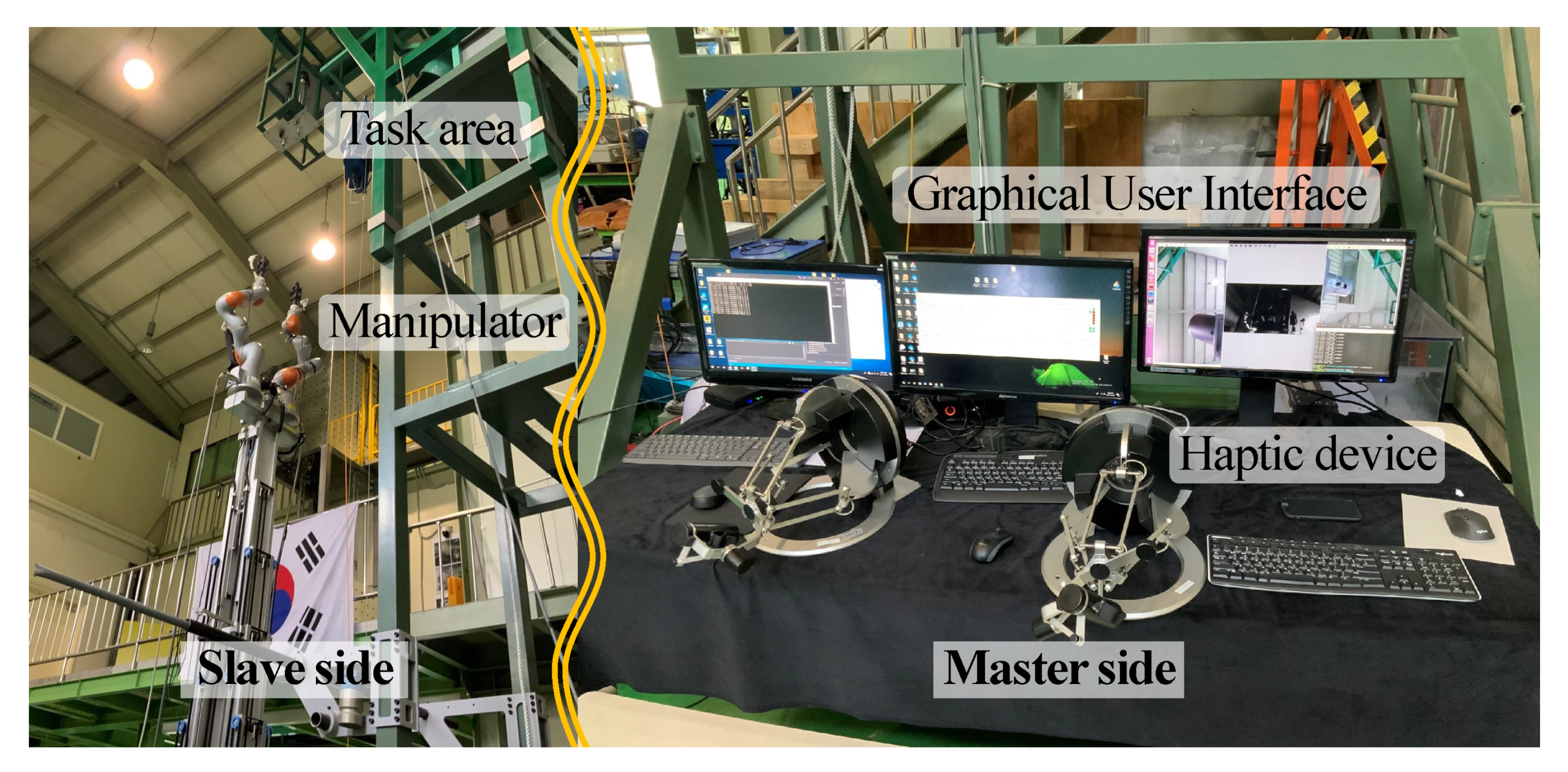

3.1. Experimental Setup

3.2. Scenario

4. Results

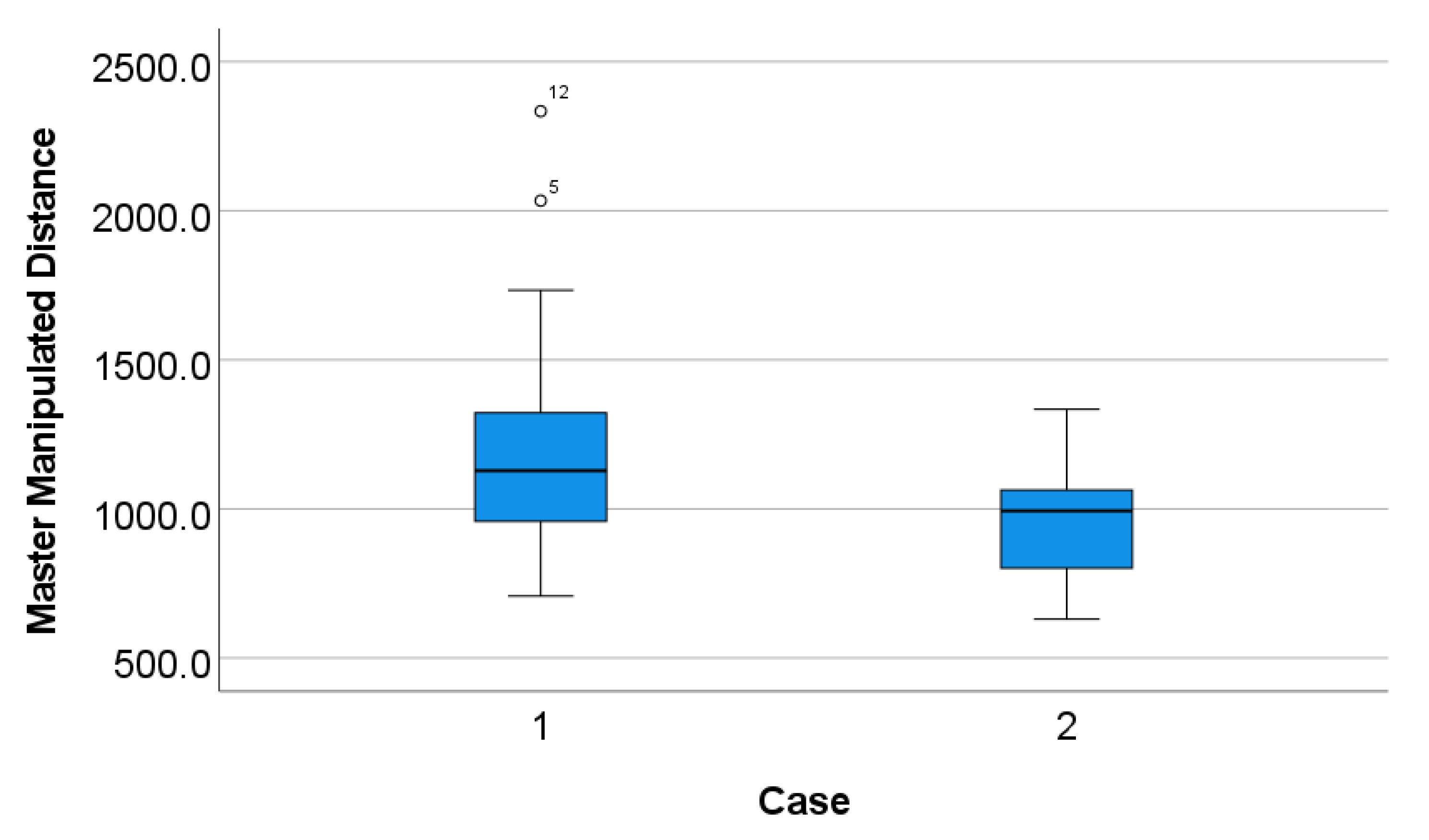

4.1. Manipulated Distance

4.2. Completion Time

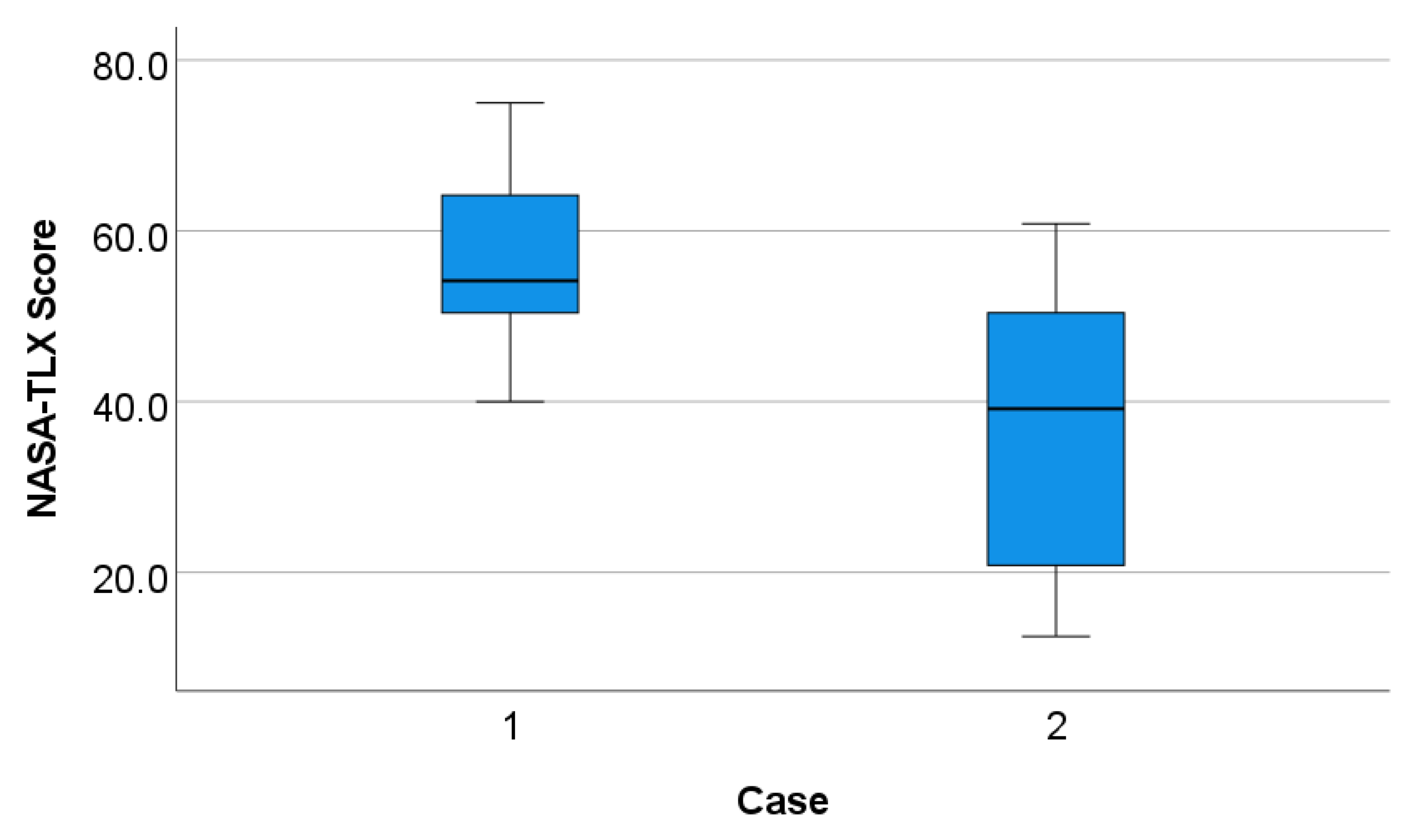

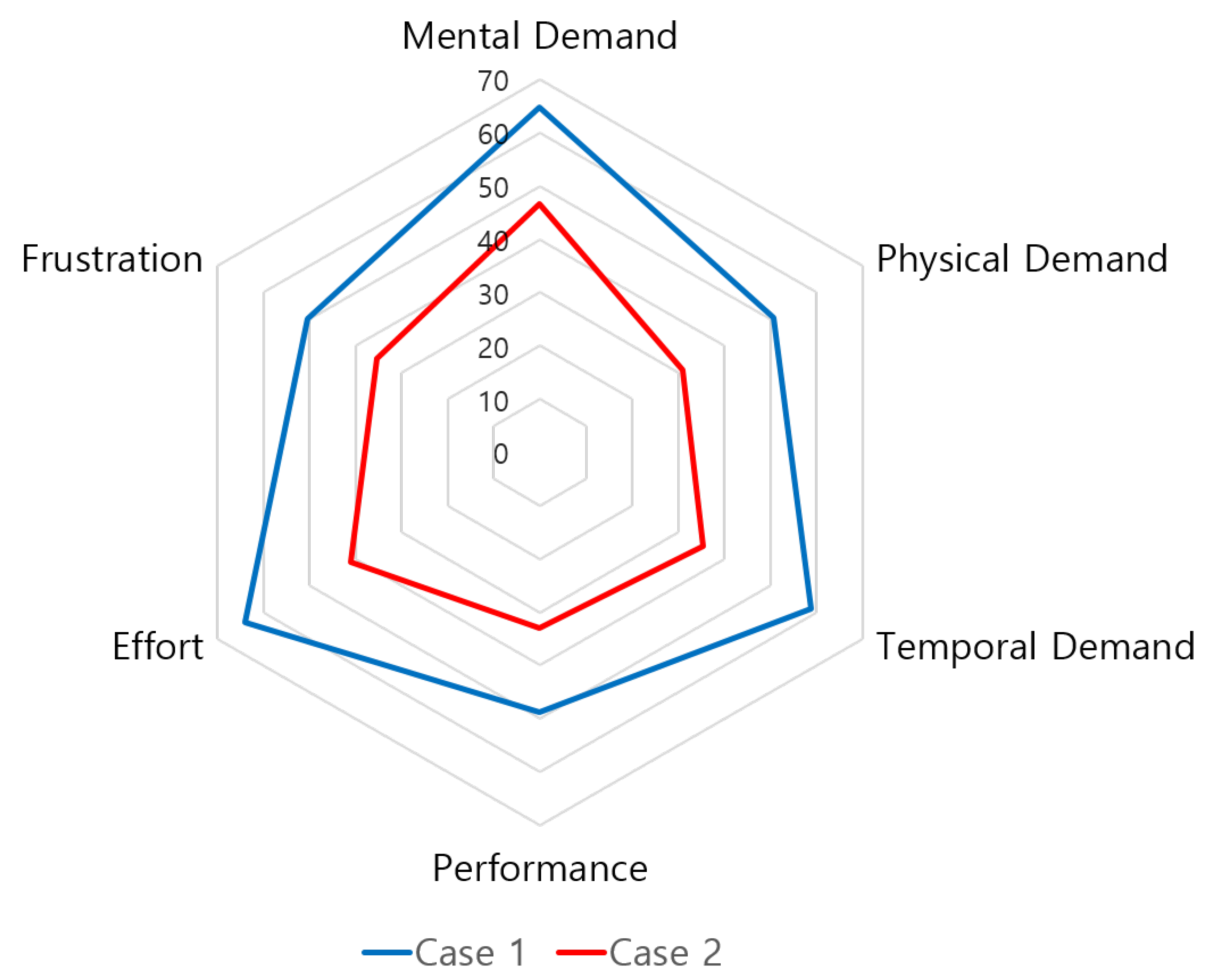

4.3. NASA-TLX

5. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest

| C1 | 0 | 0 | 0 | 0 | 0 | 1 | 1 |

| C2 | 1 | 1 | 0 | 0 | 0 | 0 | 0 |

| C3 | 0 | 0 | 1 | 0 | 0 | 0 | 0 |

| C4 | 1 | 1 | 1 | 0 | 0 | 0 | 1 |

| C5 | 0 | 0 | 0 | 0 | 0 | 0 | 1 |

| C6 | 0 | 0 | 1 | 0 | 0 | 0 | 0 |

| C7 | 0 | 0 | 0 | 0 | 0 | 0 | 1 |

| S1 | 1 | 1 | 0 | 0 | 0 | 0 | 0 |

| S2 | 0 | 0 | 1 | 0 | 0 | 0 | 0 |

| S3 | 1 | 1 | 1 | 0 | 0 | 0 | 1 |

| S4 | 0 | 0 | 1 | 0 | 0 | 0 | 0 |

| S5 | 0 | 0 | 0 | 0 | 0 | 0 | 1 |
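One way to read the table above is as a per-subtask mask over seven input channels. The sketch below copies the rows verbatim and zeroes every channel whose flag is 0. Treating 1 as "channel enabled" and 0 as "channel locked" is an assumption suggested by the title of Section 2.5, the column meanings are not given in this text and are left unnamed, and `DOF_MASK`, `apply_mask`, and `active_count` are hypothetical names.

```python
from typing import Sequence, Tuple

# Rows copied from the table above: seven 0/1 flags per subtask.
# The meaning of each column is not stated here, so they stay unnamed.
DOF_MASK = {
    "C1": (0, 0, 0, 0, 0, 1, 1),
    "C2": (1, 1, 0, 0, 0, 0, 0),
    "C3": (0, 0, 1, 0, 0, 0, 0),
    "C4": (1, 1, 1, 0, 0, 0, 1),
    "C5": (0, 0, 0, 0, 0, 0, 1),
    "C6": (0, 0, 1, 0, 0, 0, 0),
    "C7": (0, 0, 0, 0, 0, 0, 1),
    "S1": (1, 1, 0, 0, 0, 0, 0),
    "S2": (0, 0, 1, 0, 0, 0, 0),
    "S3": (1, 1, 1, 0, 0, 0, 1),
    "S4": (0, 0, 1, 0, 0, 0, 0),
    "S5": (0, 0, 0, 0, 0, 0, 1),
}


def apply_mask(label: str, command: Sequence[float]) -> Tuple[float, ...]:
    """Zero every channel of `command` whose flag is 0 for this subtask.

    Assumption: a flag of 1 means the operator's input on that channel is
    passed through, and 0 means the channel is held fixed.
    """
    mask = DOF_MASK[label]
    if len(command) != len(mask):
        raise ValueError(f"expected {len(mask)} channels, got {len(command)}")
    return tuple(c * m for c, m in zip(command, mask))


def active_count(label: str) -> int:
    """Number of channels flagged 1 for this subtask."""
    return sum(DOF_MASK[label])
```

Under this reading, `active_count("C3")` is 1 and `active_count("C4")` is 4, so the approach steps expose a single channel while the insertion steps expose the most.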

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Han, J.; Yang, G.-H. Improving Teleoperator Efficiency Using Position–Rate Hybrid Controllers and Task Decomposition. Appl. Sci. 2022, 12, 9672. https://doi.org/10.3390/app12199672