Intelligent Educational Evaluation of Research Performance between Digital Library and Open Government Data

Abstract

1. Introduction

2. Literature Review

2.1. Research Performance

2.2. THE Ranking

2.3. Intelligent Educational Database

2.4. BCG Matrix

3. Methodology

3.1. Research Variables and Procedure

3.2. Benchmark Samples

3.3. Analysis Methods

4. Results

4.1. Research Indicators in the THE Ranking

4.2. Productivity and Research Income

4.3. Correlation

4.4. Topic Cluster

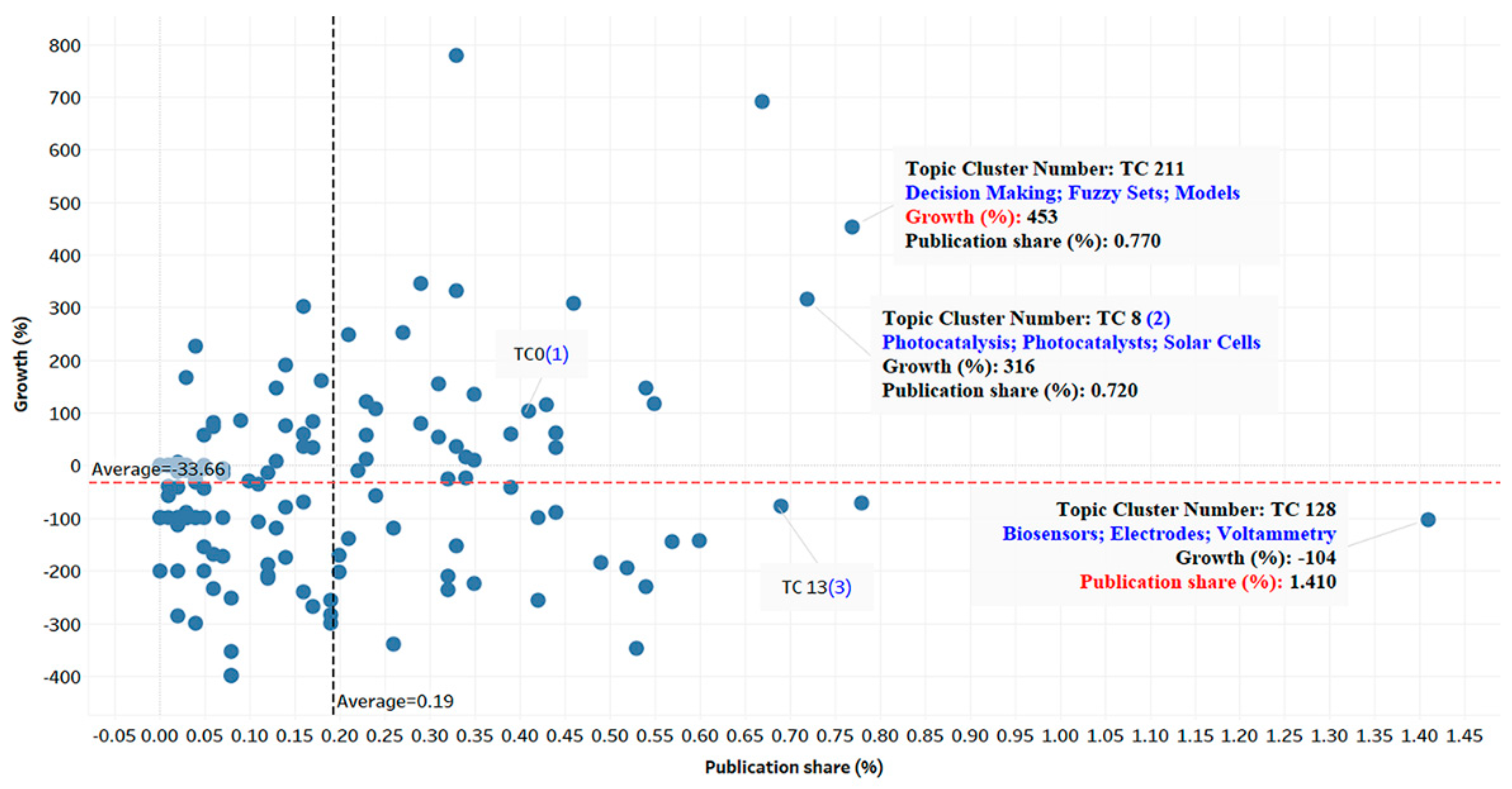

4.5. BCG Matrix Analysis

5. Discussion

5.1. Productivity

5.2. Collaboration and Internationalization

5.3. BCG Matrix of Topic Cluster

5.4. Practical Implications and Further Research

6. Conclusions

Author Contributions

Funding

Conflicts of Interest


| THE Indicator | MOE and SciVal Items |
|---|---|
| Teaching (the learning environment) | Reputation; Staff-to-student ratio; Institutional income |
| Research (volume, income, and reputation) | No. of publications (SciVal) *; No. of citations of the superior subject (SciVal) *; Research income (MOE) * |
| International outlook (staff, students, research) | Proportion of international students; Proportion of international staff; Proportion of publications with international collaboration (SciVal) * |
| Industry income (knowledge transfer) | Institutional income from industry and commerce / academic staff |

| School Name | Publications | Citations | Collaboration | Internationalization |
|---|---|---|---|---|
| N1 | 4175 | 24,136 | 4103 | 1370 |
| N2 | 3564 | 20,301 | 3461 | 1074 |
| N5 | 2235 | 8270 | 2131 | 532 |
| N4 | 1455 | 4971 | 1382 | 341 |
| P2 | 1134 | 3097 | 1075 | 276 |
| P1 | 1085 | 6787 | 1053 | 309 |
| P3 | 773 | 2660 | 744 | 110 |
| N3 | 661 | 2088 | 616 | 126 |

| School Name | Publications | HR | PH Ratio (Publications/HR) | Research Income (NT Dollars) |
|---|---|---|---|---|
| P1 | 355 | 681 | 0.521 | 102,228,451 |
| N1 | 1333 | 5882 | 0.227 | 497,164,554 |
| P2 | 349 | 1556 | 0.224 | 45,825,000 |
| N2 | 1190 | 5552 | 0.214 | 304,882,112 |
| N3 | 206 | 1164 | 0.177 | 88,433,709 |
| N4 | 466 | 3529 | 0.132 | 140,922,212 |
| P3 | 244 | 1884 | 0.130 | 81,962,999 |
| N5 | 691 | 5991 | 0.115 | 239,032,328 |
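The PH Ratio column appears to equal publications divided by HR. That relationship is inferred from the figures (every row matches to three decimals) rather than stated in the text, and HR itself is not defined here. A minimal Python sketch, assuming that definition, recomputes the ratio and the table's ordering from the transcribed values; the names `SCHOOLS`, `REPORTED`, `ph_ratio`, and `rank_by_ph_ratio` are hypothetical and introduced only for illustration.

```python
# (publications, HR) per school, transcribed from the table above.
# Assumption: PH Ratio = publications / HR. This matches every row to three
# decimals but is inferred from the numbers, not stated by the authors.
SCHOOLS = {
    "P1": (355, 681),
    "N1": (1333, 5882),
    "P2": (349, 1556),
    "N2": (1190, 5552),
    "N3": (206, 1164),
    "N4": (466, 3529),
    "P3": (244, 1884),
    "N5": (691, 5991),
}

# PH Ratio values as printed in the table, for comparison.
REPORTED = {"P1": 0.521, "N1": 0.227, "P2": 0.224, "N2": 0.214,
            "N3": 0.177, "N4": 0.132, "P3": 0.130, "N5": 0.115}


def ph_ratio(publications: int, hr: int) -> float:
    """Return publications per HR unit, rounded to three decimals."""
    if hr <= 0:
        raise ValueError("HR must be positive")
    return round(publications / hr, 3)


def rank_by_ph_ratio(schools: dict) -> list:
    """Return school codes sorted by PH ratio, highest first."""
    return sorted(schools, key=lambda code: ph_ratio(*schools[code]), reverse=True)


if __name__ == "__main__":
    for code in rank_by_ph_ratio(SCHOOLS):
        print(code, ph_ratio(*SCHOOLS[code]), REPORTED[code])
```

Because the ratio normalizes output by HR, P1 ranks first even though it has far fewer publications than N1 or N2.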

| | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|
| 1 | 1 | | | | |
| 2 | 0.904 ** | 1 | | | |
| 3 | 0.834 ** | 0.980 ** | 1 | | |
| 4 | 0.883 ** | 0.983 ** | 0.989 ** | 1 | |
| 5 | 0.834 ** | 0.832 ** | 0.822 ** | 0.847 ** | 1 |

| Variable | Coding | Mean | Frequency | SD |
|---|---|---|---|---|
| Topic | TC 0 | 66.00 | 8 | 62.108 |
| | TC 8 | 61.88 | 8 | 55.573 |
| | TC 13 | 54.25 | 8 | 42.948 |
| | TC 30 | 46.13 | 8 | 52.684 |
| | TC 128 | 38.86 | 7 | 71.627 |
| School | N1 | 19.66 | 112 | 29.894 |
| | N2 | 17.50 | 114 | 29.616 |
| | N5 | 9.03 | 117 | 12.515 |
| | N4 | 8.56 | 82 | 11.403 |
| | P2 | 7.66 | 73 | 12.371 |
| | P1 | 7.23 | 81 | 13.524 |
| | N3 | 5.14 | 58 | 6.309 |
| | P3 | 4.13 | 82 | 4.949 |
| Type | National | 12.95 | 483 | 22.481 |
| | Private | 6.29 | 236 | 10.959 |
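The School and Type rows describe the same 719 observations. Assuming that schools coded N are national and those coded P are private (an inference consistent with the frequencies, since 112 + 114 + 117 + 82 + 58 = 483 and 73 + 81 + 82 = 236), the type means can be recovered as frequency-weighted averages of the school means. The sketch below is illustrative only; `SCHOOL_STATS`, `pooled_mean`, and `pooled_by_type` are hypothetical names, and because it starts from rounded school means it reproduces the reported type means only to two decimals.

```python
# (mean, frequency) per school, transcribed from the table above.
SCHOOL_STATS = {
    "N1": (19.66, 112), "N2": (17.50, 114), "N5": (9.03, 117),
    "N4": (8.56, 82), "P2": (7.66, 73), "P1": (7.23, 81),
    "N3": (5.14, 58), "P3": (4.13, 82),
}


def pooled_mean(stats):
    """Frequency-weighted mean of group means; returns (mean, total_n)."""
    total_n = sum(n for _, n in stats)
    weighted = sum(mean * n for mean, n in stats)
    return weighted / total_n, total_n


def pooled_by_type(school_stats):
    """Group schools by code prefix and pool their means.

    Assumption: codes starting with 'N' are national and all others
    are private; this labeling is inferred, not stated in the text.
    """
    groups = {"National": [], "Private": []}
    for code, stats in school_stats.items():
        key = "National" if code.startswith("N") else "Private"
        groups[key].append(stats)
    return {name: pooled_mean(members) for name, members in groups.items()}


if __name__ == "__main__":
    for name, (mean, n) in pooled_by_type(SCHOOL_STATS).items():
        print(f"{name}: mean={mean:.2f}, n={n}")
```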

| | | SS | df | MS | F | Sig. |
|---|---|---|---|---|---|---|
| output ∗ topic | Between | 112,715.706 | 144 | 782.748 | 2.704 | 0.000 |
| | Within | 166,132.625 | 574 | 289.430 | | |
| | Total | 278,848.331 | 718 | | | |
| output ∗ school | Between | 21,939.263 | 7 | 3134.180 | 8.674 | 0.000 |
| | Within | 256,909.068 | 711 | 361.335 | | |
| | Total | 278,848.331 | 718 | | | |
| output ∗ type | Between | 7032.218 | 1 | 7032.218 | 18.550 | 0.000 |
| | Within | 271,816.113 | 717 | 379.102 | | |
| | Total | 278,848.331 | 718 | | | |
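Each model in the ANOVA table follows the standard one-way decomposition: MS = SS/df, F = MS_between/MS_within, and SS_between + SS_within = SS_total (278,848.331 in all three models, with 718 total degrees of freedom for 719 observations). A minimal Python sketch that recomputes these quantities from the transcribed SS and df values follows; `AnovaRow`, `FACTORS`, and `ss_between_from_groups` are hypothetical names. It also recomputes SS_between for institution type from the national and private means and frequencies in the descriptive table, using SS_between = Σ n_g (mean_g − grand mean)².

```python
from dataclasses import dataclass


@dataclass
class AnovaRow:
    """Between/within sums of squares and degrees of freedom for one factor."""
    ss_between: float
    df_between: int
    ss_within: float
    df_within: int

    @property
    def ms_between(self) -> float:
        return self.ss_between / self.df_between

    @property
    def ms_within(self) -> float:
        return self.ss_within / self.df_within

    @property
    def f_ratio(self) -> float:
        # One-way ANOVA: F = MS_between / MS_within
        return self.ms_between / self.ms_within

    @property
    def ss_total(self) -> float:
        return self.ss_between + self.ss_within


# SS and df transcribed from the table above.
FACTORS = {
    "topic": AnovaRow(112_715.706, 144, 166_132.625, 574),
    "school": AnovaRow(21_939.263, 7, 256_909.068, 711),
    "type": AnovaRow(7_032.218, 1, 271_816.113, 717),
}


def ss_between_from_groups(groups):
    """SS_between = sum of n_g * (mean_g - grand_mean)^2 over (mean, n) pairs."""
    total_n = sum(n for _, n in groups)
    grand = sum(m * n for m, n in groups) / total_n
    return sum(n * (m - grand) ** 2 for m, n in groups)


if __name__ == "__main__":
    for name, row in FACTORS.items():
        print(f"{name}: MS_b={row.ms_between:.3f}, MS_w={row.ms_within:.3f}, "
              f"F={row.f_ratio:.3f}, SS_total={row.ss_total:.3f}")
    # National and private (mean, frequency) pairs from the descriptive table.
    print(ss_between_from_groups([(12.95, 483), (6.29, 236)]))
```

With the rounded means, the type-level SS_between comes out at roughly 7032, close to the reported 7032.218; the small gap reflects rounding in the published means.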

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Cheng, T.-M.; Hou, H.-Y. Intelligent Educational Evaluation of Research Performance between Digital Library and Open Government Data. Appl. Sci. 2022, 12, 791. https://doi.org/10.3390/app12020791
