Abstract

Deep learning based on neural networks has been widely used in image recognition, speech recognition, natural language processing, automatic driving, and other fields and has made breakthrough progress. FPGA stands out in the field of accelerated deep learning with its advantages such as flexible architecture and logic units, high energy efficiency ratio, strong compatibility, and low delay. In order to track the latest research results of neural network optimization technology based on FPGA in time and to keep abreast of current research hotspots and application fields, the related technologies and research contents are reviewed. This paper introduces the development history and application fields of some representative neural networks and points out the importance of studying deep learning technology, as well as the reasons and advantages of using FPGA to accelerate deep learning. Several common neural network models are introduced. Moreover, this paper reviews the current mainstream FPGA-based neural network acceleration technology, method, accelerator, and acceleration framework design and the latest research status, pointing out the current FPGA-based neural network application facing difficulties and the corresponding solutions, as well as prospecting the future research directions. We hope that this work can provide insightful research ideas for the researchers engaged in the field of neural network acceleration based on FPGA.

1. Introduction

With the rise of the short video industry and the advent of the era of big data and the Internet of Things (IoTs), the data created by people in recent years has shown a blowout growth, providing a solid data foundation for the development of artificial intelligence (AI). As the core technology and research direction for realizing artificial intelligence, deep learning based on neural networks has achieved good results in many fields, such as speech recognition [1,2,3], image processing [4,5,6], and natural language processing [7,8,9]. Common platforms to accelerate deep learning include central processing unit (CPU), graphics processing unit (GPU), field-programmable gate array (FPGA), and application-specific integrated circuit (ASIC).

Among them, the CPU adopts Von Neumann architecture and the program execution in the field of deep learning of artificial intelligence is less, while the computational demand for data is relatively large. Therefore, the implementation of AI algorithms by CPU has a natural structural limitation—that is, the CPU spends a large amount of time reading and analyzing data or instructions. In general, it is not possible to achieve unlimited improvement of instruction execution speed by increasing CPU frequency and memory bandwidth without limit.

The ASIC special purpose chip has the advantages of high throughput, low latency, and low power consumption. Compared with FPGA implementation of the same process, ASIC can achieve 5~10 times the computation acceleration, and the cost of ASIC will be greatly reduced after mass production. But, deep learning computing tasks are flexible, each algorithm’s needs can be implemented effectively through slightly different dedicated hardware architecture, and there is a high cost of research and development of ASIC and flow, with the cycle being long, yet the most important thing to note is that is logic cannot cause dynamic adjustment, which means that the custom accelerator chips (such as ASIC) must make a large amount of compromise in order to act as a sort of universal accelerator.

At a high level, this means that system designers face two choices. One is to select a heterogeneous system and load a large number of ASIC accelerator chips into the system to deal with various types of problems. Or, one can choose a single chip to handle as many types of algorithms as possible. The FPGA scheme falls into the latter category because FPGA provides unlimited reconfigurable logic, whereas FPGA can update the logic function in only a few hundred milliseconds, and we can design accelerators for each algorithm. The only compromise is that the accelerators involve programmable logic rather than a hardened gate, but this also means that we can take advantage of the flexibility of FPGA to help us in saving development costs even more.

At present, artificial intelligence computing requirements represented by deep learning mainly use GPU, FPGA, and other existing general chips that are suitable for parallel computing in order to achieve acceleration. Due to the characteristics of high parallelism, high frequency, and high bandwidth, GPU can parallelize the operation and greatly shorten the operation time of the model. Due to its powerful computing ability, it is mainly used to deal with large-scale computing tasks at present. GPU-accelerated deep learning algorithms have been widely used and have achieved remarkable results.

Compared with FPGA, the peak performance of GPU (10 TFLOPS, floating point operation per second) is much higher than that of FPGA (<1 TFLOPS), and thus GPU is a good choice for deep learning algorithms in terms of accelerating performance. However, this is only the case when the power consumption is not considered. Because the power consumption of GPU is very high, sometimes tens of hundreds of times that of CPU and FPGA, the high energy consumption limits it to being used in high-performance computing clusters. If it is used on edge devices, the performance of GPU will be greatly sacrificed.

However, the DDR (double data rate) bandwidth, frequency, and number of computing units (low-end chips) of FPGA are not as high as GPU. For on-chip memory, FPGA has larger computing capacity; moreover, on-chip memory is crucial for reducing latency in applications such as deep learning. Accessing external memory such as DDR consumes more energy than the chip itself for computing. Therefore, the larger capacity of on-chip cache reduces the memory bottleneck caused by external memory reading and also reduces the power consumption and cost required by high-memory bandwidth. The large capacity of on-chip memory and flexible configuration capability of FPGA reduces the read and write of external DDR, while GPU requires the support of an external processor during operation. The addition of external hardware resources greatly reduces the data processing speed. Moreover, FPGA’s powerful raw data computing power and reconfigurability allow it to process arbitrary precision data, but GPU’s data processing is limited by the development platform. Therefore, in this context, FPGA seems to be a very ideal choice. In addition, compared with GPU, FPGA not only has the characteristics of data parallel, but also has the characteristics of pipeline parallel, and thus for pipelined computing tasks, FPGA has a natural advantage in delay. On the basis of these advantages, FPGA stands out in the field of accelerated deep learning.

In the previous work, the application, acceleration method and accelerator design of neural networks such as CNN (convolutional neural network), RNN (recurrent neural network), and GAN (generative adversarial network) based on FPGA were described in detail, and the research hotspots of industrial application combined with FPGA and deep neural networks were fully investigated. They have made relevant contributions to the application and development of FPGA in neural networks [10,11,12,13,14]. It is worth noting that the analysis of the acceleration effect of different acceleration techniques on different neural networks is less involved in their work. Motivated by the current research progress, this paper reviews the latest optimization technology and application schemes of various neural networks based on FPGA, compares the performance effect of different acceleration technologies on different neural networks, and analyzes and summarizes of potential research prospects.

Compared with the previous work, the contributions of this paper are as follows:

- (1)

- The development history of neural networks is divided into five stages and presented in the form of tables, so that readers can understand the development history of neural networks more intuitively.

- (2)

- Study of the optimization technology of various neural networks based on FPGA, and introducing the application scenarios of various technologies and the latest research results of various technologies.

- (3)

- Introducing the latest application achievements of CNN, RNN, and GAN in the field of FPGA acceleration, analyzing the performance achieved by deploying different neural networks using different optimization technologies on different FPGA platforms, and finding that the use of Winograd and other convolutional optimization technologies can bring about huge performance gains. The reasons for this phenomenon are analyzed.

- (4)

- The future research directions of neural network acceleration based on FPGA are pointed out, and the application of FPGA accelerated neural network is prospected.

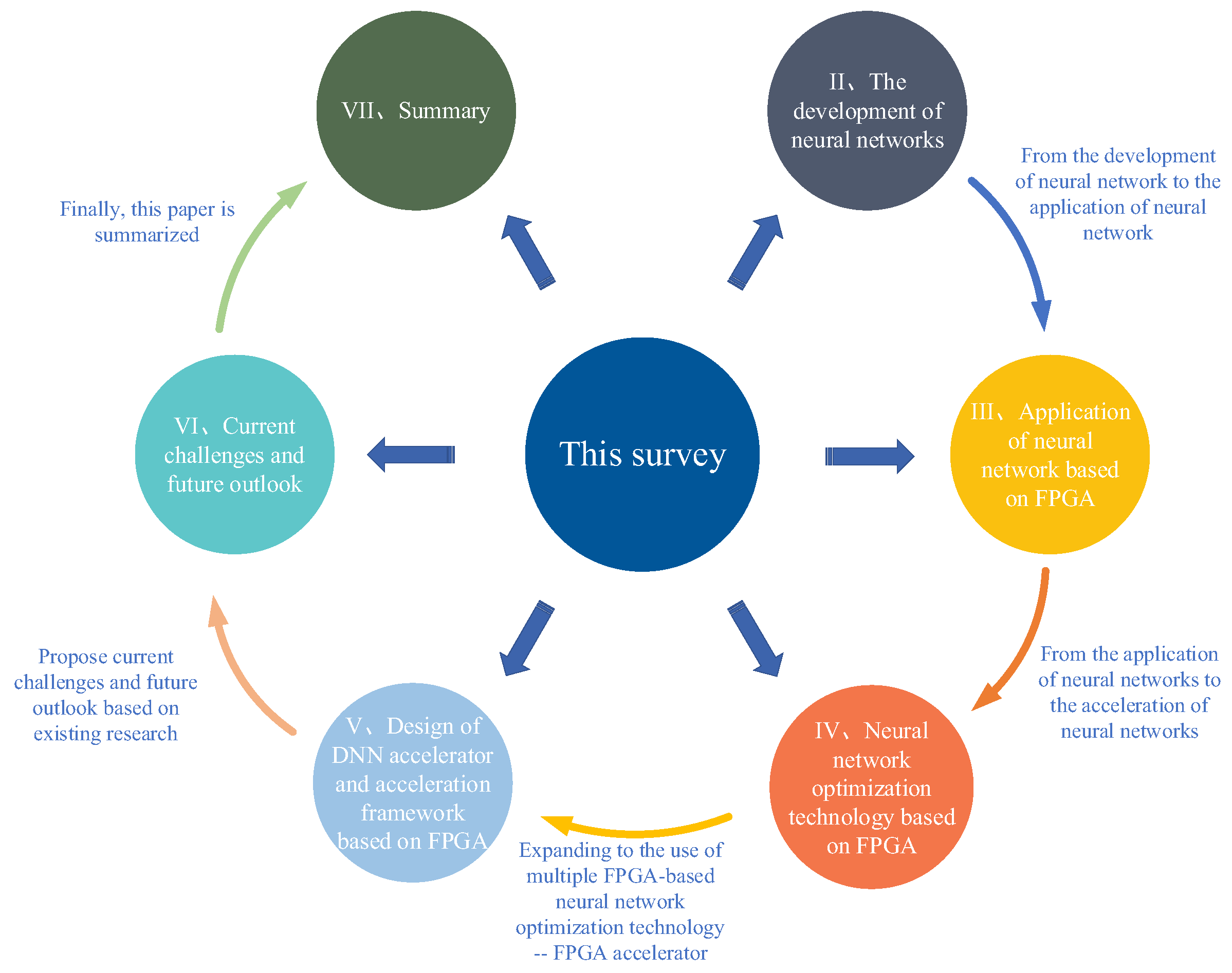

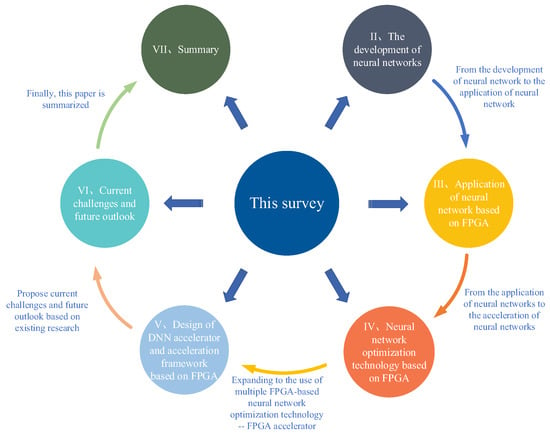

The remainder of this paper is organized as follows: In Section 2, the history of neural networks is clearly shown in the form of a table. The history of neural networks is divided into five stages, being convenient for readers to comb and study. In Section 3, some common neural networks are introduced, and their applications based on FPGA and their effects are described. In Section 4, the acceleration technology of various neural networks based on FPGA is introduced, its advantages and disadvantages and application scenarios are pointed out, and the latest research status and achievements are described. In Section 5, this paper describes the FPGA-based neural network accelerator and the acceleration framework in detail. These accelerators are often the comprehensive application of the acceleration technology described in Section 4, and they are compared with and summarized in terms of the performance of different neural networks deployed on different FPGA platforms using different acceleration technologies. In Section 6, the current difficulties in the application of FPGA-based neural networks are pointed out, and the future research directions are prospected. Finally, the paper is summarized in Section 7. The structural layout of the article is illustrated in Figure 1.

Figure 1.

The structural layout of this paper.

2. The Development of Neural Networks

As shown in Table 1, the development process of neural networks can be roughly divided into five stages: the proposal of the model, the stagnation period, the rise of the back propagation algorithm, the confusion period, and the rise of deep learning.

Table 1.

Development history of neural networks.

The period of 1943–1958 is considered as the model proposal phase, and the perceptron wave proposed in 1958 continued for about 10 years. With increasingly more scholars entering into this direction of research, some scholars gradually found the limitations of perceptron model. With the publication of the Perceptron by M. Minsky in 1969, the neural network was pushed to the bottom directly, leading to the “stagnation period” of neural networks from 1969 to 1980.

After 1980, increasingly more scholars paid attention to the back propagation algorithm, which reopened the thinking for researchers and launched another spring for the development of neural networks.

Then, for a long period of time, scholars did not make breakthrough results, only working on the basis of existing research. In the mid-1990s, statistical learning theory and the machine learning model represented by the support vector machine began to rise. In contrast, the theoretical basis of neural networks was not clear, and optimization difficulties, poor interpretability, and other shortcomings became more prominent; thus, neural network research fell into a low tide again.

Until 2006, Professor Geoffrey Hinton, a neural network expert at the University of Toronto, and his students formally proposed the concept of deep learning. They proposed the deep belief network model in their published paper. Hinton et al. [26] found that the multi-layer feedforward neural network could be pre-trained layer by layer. In other words, the unsupervised pre-training method is used to improve the initial value of the network weights, and then the weights are fine-tuned. This model began the research boom of deep neural networks and opened the prelude to the research and application of deep learning.

At present, common deep learning models include deep neural networks (DNNs), convolutional neural networks (CNNs), recurrent neural networks (RNNs), and generative adversarial networks (GANs), among others.

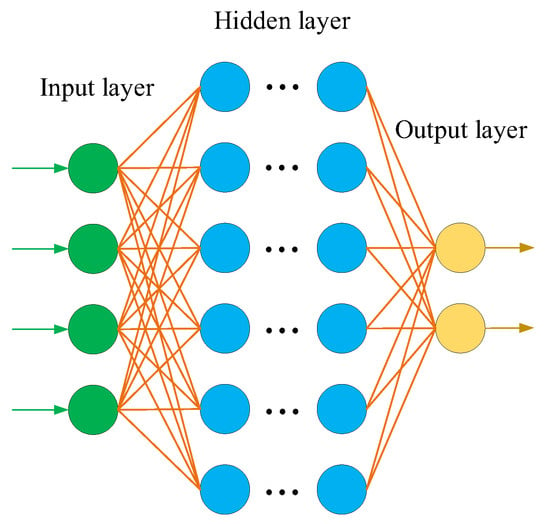

2.1. Deep Neural Network (DNN)

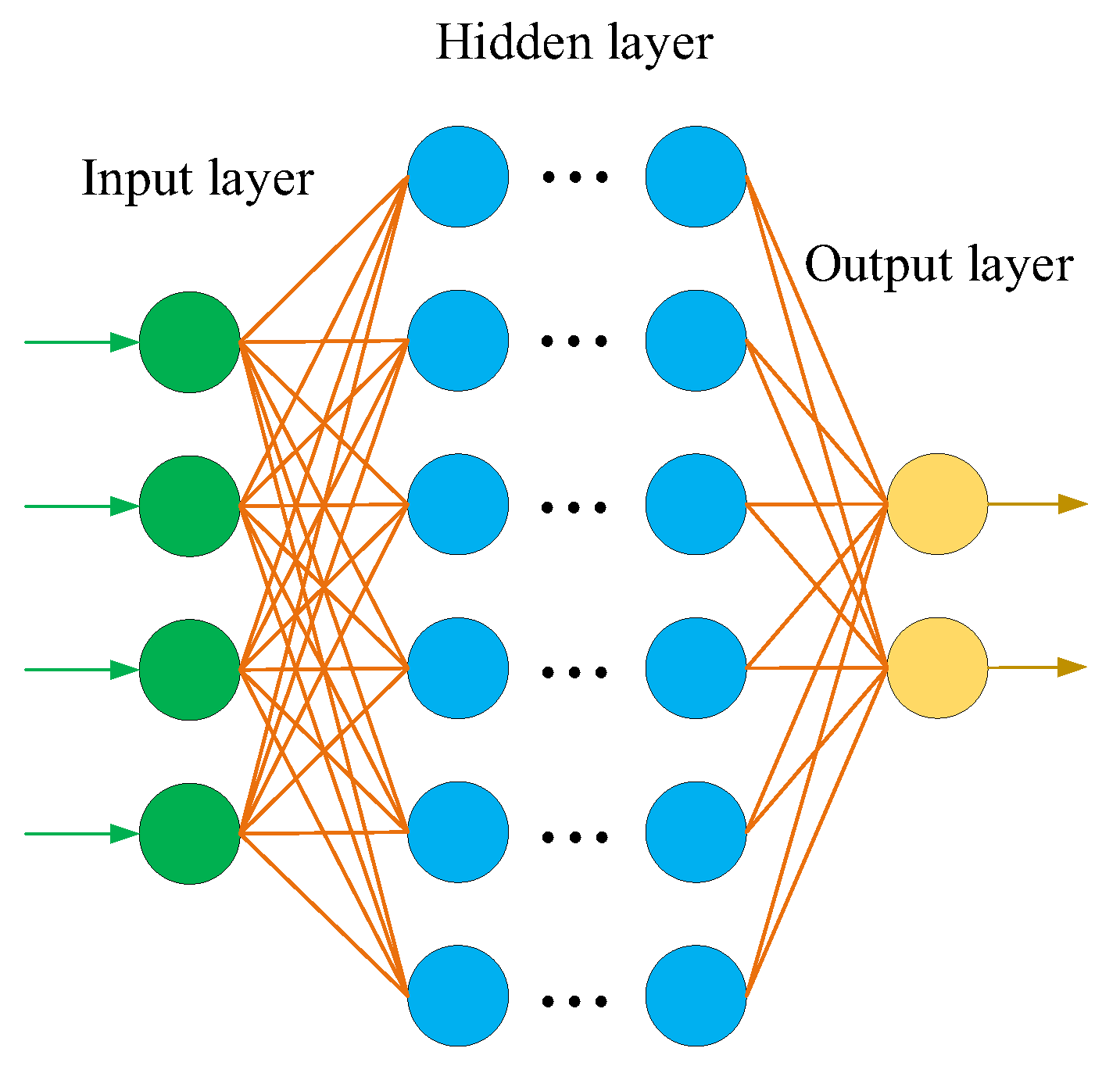

Since a single-layer perceptron cannot solve linear inseparability problems, it cannot be used in industry. Scholars have expanded and improved the perceptron model by increasing the number of hidden layers and corresponding nodes to enhance the expression ability of the model, and thus the deep neural network (DNN) was created. The DNN is sometimes called a multi-layer perceptron (MLP). The emergence of the DNN overcomes the low performance of a single-layer perceptron. According to the position of different layers in the DNN, the neural network layers inside the DNN can be divided into three types: the input layer, hidden layer, and output layer, as shown in Figure 2. Generally speaking, the first layer is the input layer, the last layer is the output layer, and the middle layers are all hidden layers. Layer to layer is fully connected, that is, any neuron in layer n must be connected with any neuron in layer n + 1. Although the DNN appears complicated, from a small local model, it is the same as the perceptron, namely, a linear relation z = ∑Wi Xi + b plus an activation function σ(z).

Figure 2.

Typical model of a DNN.

With the deepening of the layers of neural networks, the phenomena of overfitting, gradient explosion, and gradient disappearance has become increasingly more serious, and the optimization function is increasingly more likely to fall into the local optimal solution. In order to overcome this problem, scholars propose convolutional neural networks (CNN) based on the receptive field mechanism in biology.

2.2. Convolutional Neural Network (CNN)

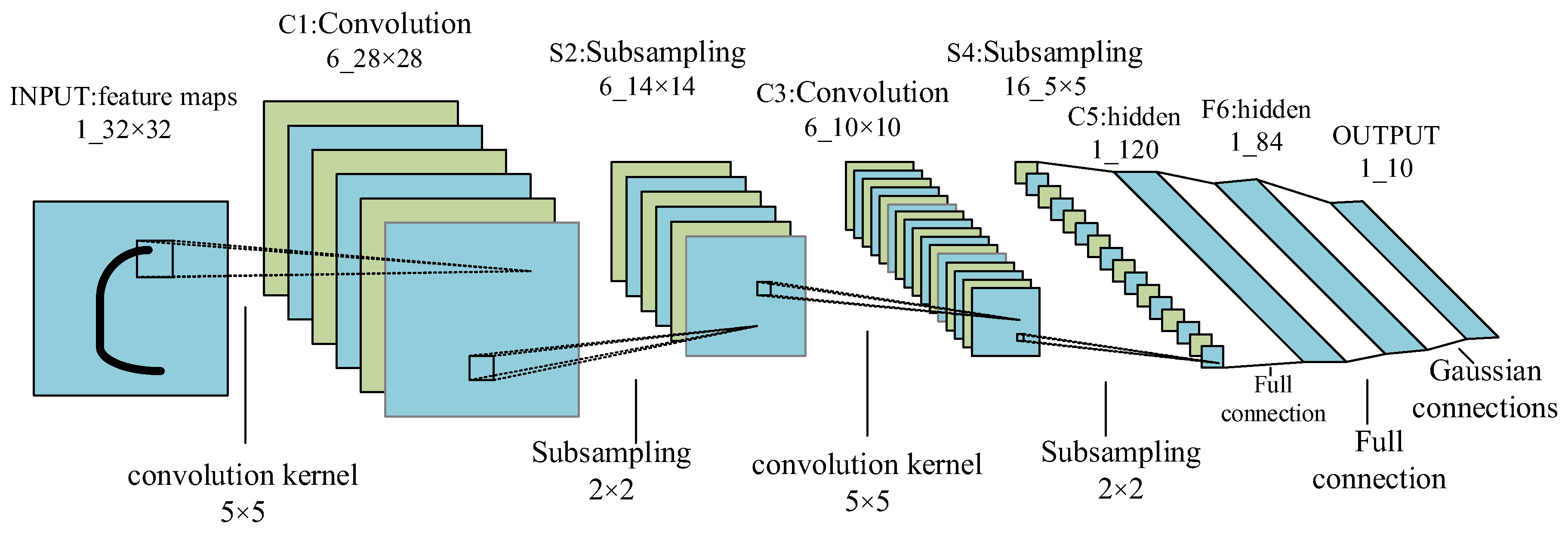

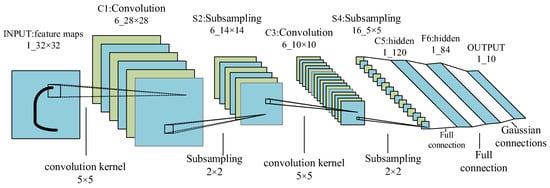

The CNN model was developed from the early artificial neural network. On the basis of the research of Hub et al. on the cells in the visual cortex of cats, the CNN model is a specially designed artificial neural network with multiple hidden layers through the biomimetic brain skin layer. Convolution operation is used to solve the disadvantages of large computation and loss of structure information of artificial neural networks. In 1982, Fukushima et al. [21] proposed the concept of Neocognitron to simulate human visual cognitive function, with it being considered to be the starting point of CNNs. In 1989, LeCun et al. [25] built the original Le-Net model, which included a convolutional layer and a fully connected layer. In 1998, LeCun et al. improved and proposed the classical Lenet-5 model, which better solved the problem of handwritten digit recognition.

The birth of LeNet-5 established the basic embryonic form of CNN, which is composed of a convolution layer, a pooling layer, an activation function, and a fully connected layer connected in a certain number of sequential connections, as shown in Figure 3. The CNN is mainly applied to image classification [39,40,41], object detection [42,43], and semantic segmentation [44,45,46], as well as in other fields. The most common algorithms are YOLO and R-CNN, among which YOLO has a faster recognition speed due to the characteristics of the algorithm. It has been upgraded to V7. R-CNN’s target location search and identification algorithm are slightly different from those of YOLO. Although the speed is slower than that of YOLO, the accuracy rate is higher than that of YOLO.

Figure 3.

The Lenet-5 model.

A convolution neural network with local awareness and parameters share two characteristics, local awareness, namely, the convolution neural network proposed that each neuron need not sense all pixels in the image and local pixels in an image-only perception; then, at a higher level, the information of these local pixels merge, and all the information of the image is obtained. The neural units of different layers are connected locally, that is, the neural units of each layer are only connected with part of the neural units of the previous layer. Each neural unit responds only to the area within the receptive field and does not consider the area outside the receptive field at all. Such a local connection pattern ensures that the learned convolution has the strongest response to the spatial local pattern of the input. The structure of the weight-sharing network makes it more similar to a biological neural network, which reduces the complexity of the network model and reduces the number of weights. This network structure is highly invariant to translation, scaling, tilting, or other forms of deformation. In addition, the convolutional neural network adopts the original image as input, being able to effectively learn the corresponding features from a large number of samples and to avoid the complex feature extraction process.

Since the convolutional neural network can directly process two-dimensional images, it has been widely used in image processing, and many research achievements have been made. The network extracts more abstract features from the original images through simple nonlinear models and only requires a small amount of human involvement in the whole process. However, as each layer of signals can only propagate in one direction and the sample processing is independent of each other at each moment, neither the DNN nor CNN can model the changes in time series. However, in natural language processing, speech recognition, handwriting recognition, and other fields, the chronological order of samples is very critical, and neither DNN nor CNN can deal with these scenarios. Thus, the recurrent neural network (RNN) came into being.

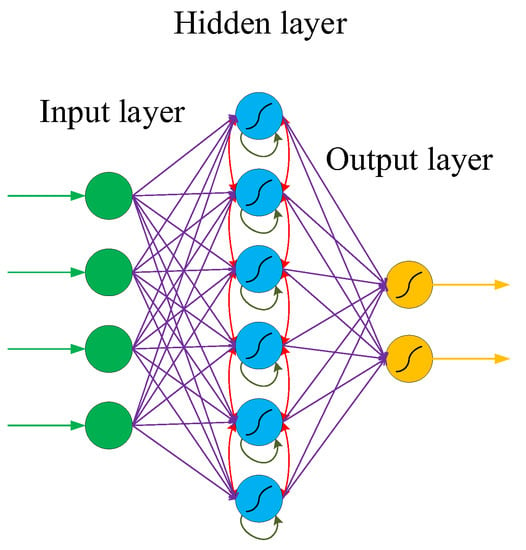

2.3. Recurrent Neural Network (RNN)

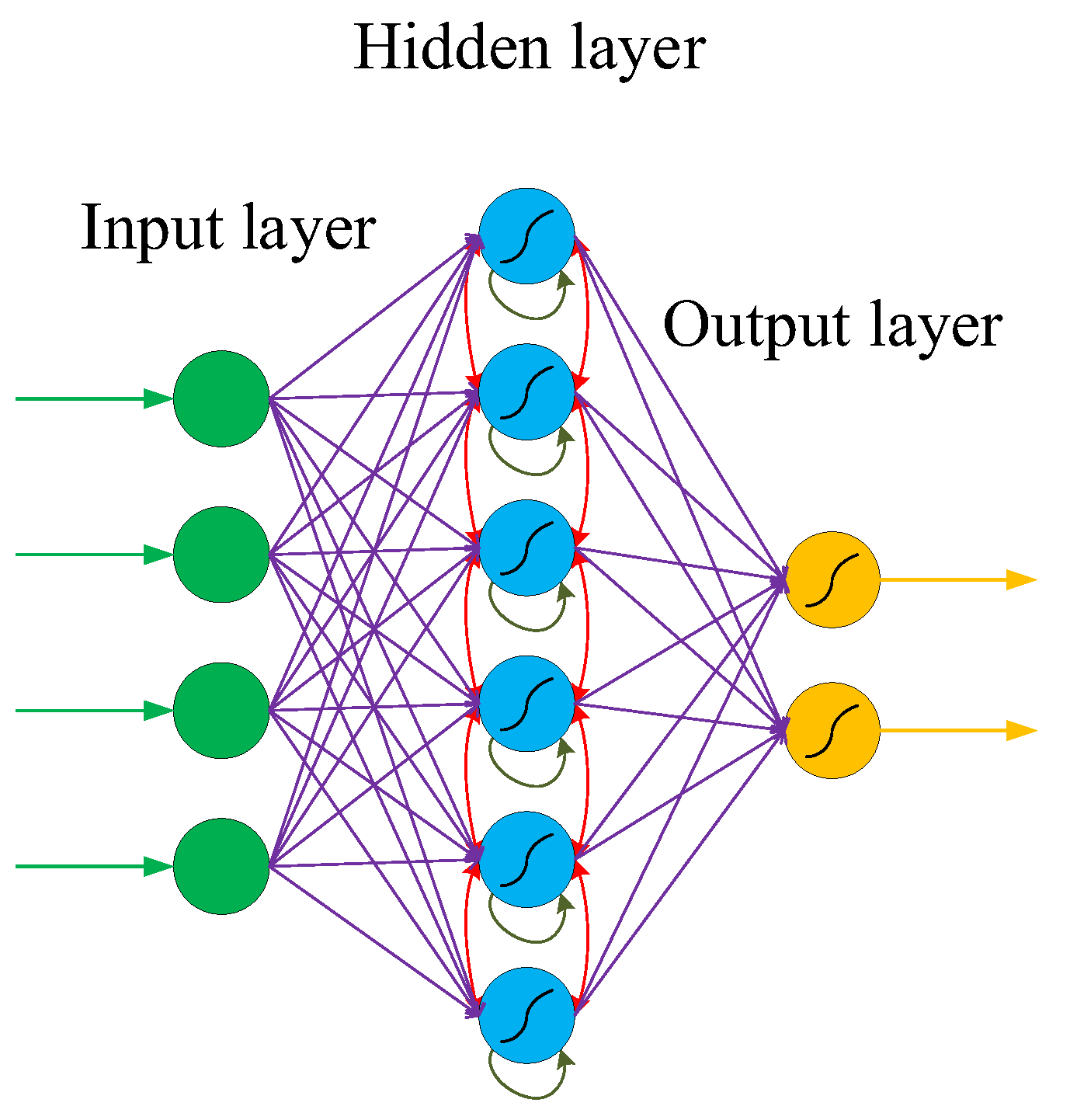

Different from CNN, RNN introduces the dimension of “time”, which is suitable for processing time-series-type data. Because the network itself has a memory ability, it can learn data types with correlation before and after. Recurrent neural networks have a strong model fitting ability for serialized data and are widely used in the field of natural language processing (NLP), including image classification, image acquisition, machine translation, video processing, sentiment analysis, and text similarity calculation. The specific structure is as follows: the recurrent neural network will store and remember the previous information in the hidden layer and then input it into the current calculation of the hidden layer unit.

Figure 4 shows the typical structure of an RNN, which is similar to but different from the traditional deep neural network (DNN). The similarity lies in that the network models of DNN and RNN are fully connected from the input layer to the hidden layer and then to the output layer, and the network propagation is also sequential. The difference is that the internal nodes of the hidden layer of the RNN are no longer independent of each other but have messages passing to each other. The input of the hidden layer can be composed of the output of the input layer and the output of the hidden layer at a previous time, which indicates that the nodes in the hidden layer are self-connected. It can also be composed of the output of the input layer, the output of the hidden layer at the previous moment, and the state of the previous hidden layer, which indicates that the nodes in the hidden layer are not only self-connected but also interconnected.

Figure 4.

Typical structure of an RNN.

Although the RNN solves the problems that the CNN cannot handle, it still has some shortcomings. Therefore, there are many deformed networks of RNN, among which one of the most commonly used networks is the long short-term network (LSTM). The input data of such networks is not limited to images or text, and the problem solved is not limited to translation or text comprehension. Numerical data can also be analyzed using the LSTM. For example, in predictive maintenance applications of factory machines, LSTM can be used to analyze machine vibration signals to predict whether the machine is faulty. In medicine, the LSTM can help in reading through thousands of pieces of literature and can find information related to specific cancers, such as tumor location, tumor size, number of stages, and even treatment policy or survival rate. It can also be combined with image recognition to provide keywords of lesions in order to assist doctors in writing pathological reports.

In addition to the DNN, CNN, and RNN, there is also an emerging network called reinforcement learning, among which the generative adversarial network (GAN) is a distinctive network.

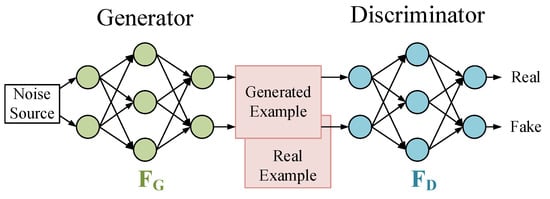

2.4. Generative Adversarial Network (GAN)

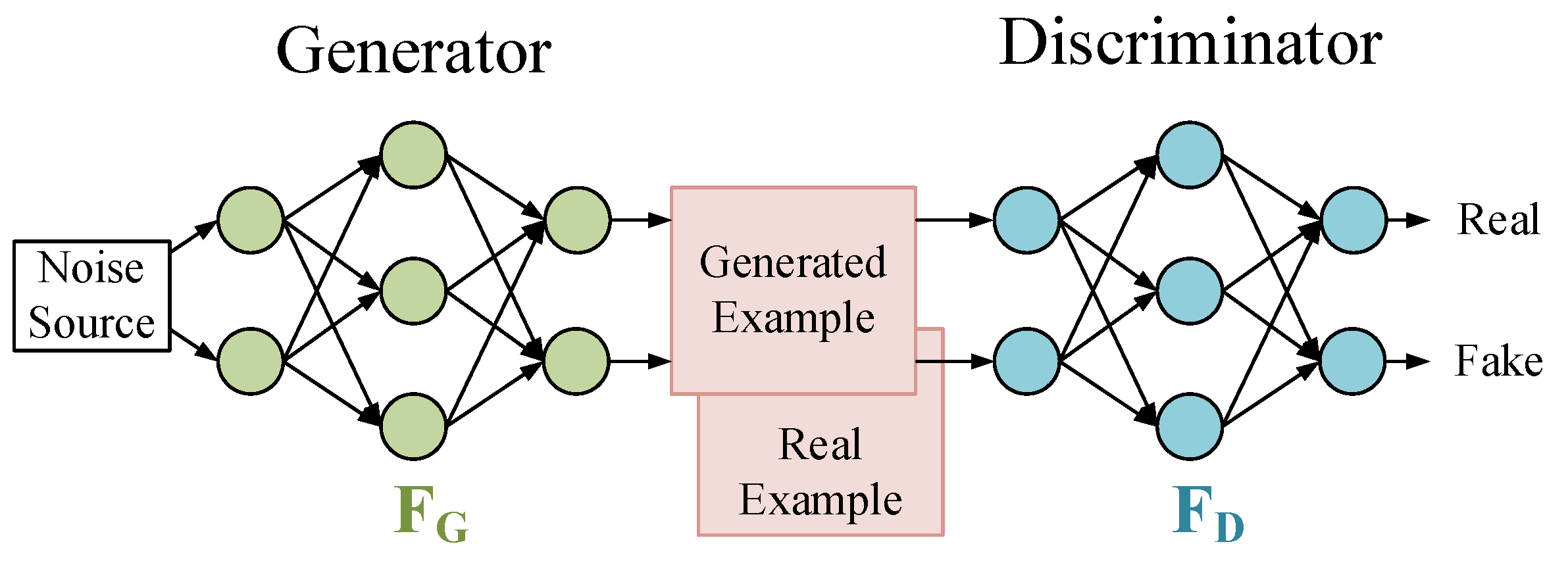

The GAN (generative adversarial network) is a machine learning model designed by Goodfellow et al. [30] in 2014. Inspired by the zero-sum game in game theory, this model views the generation problem as a competition between generators and discriminators. The generative adversarial network consists of a generator and a discriminator. The generator generates data by learning, and the discriminator determines whether the input data are real data or generated data. After several iterations, the ability of the generator and discriminator is constantly improved, and finally the generated data are infinitely close to the real data so that the discriminator cannot judge whether they are true or false. The GAN is shown in Figure 5.

Figure 5.

Typical structure of a GAN.

The training of the network can be equivalent to the minimax problem of the objective function. That is, let the discriminator maximize the accuracy of distinguishing real data from forged data, and at the same time minimize the probability that the data generated by the generator will be discovered by the discriminator. During training, one of them (the discriminator or generator) is fixed, the parameters of the other model are updated, and the model that can estimate the distribution of sample data is generated by alternating iterations. In GAN, the two networks compete with each other, eventually reaching a balance wherein the generating network can generate data, while the discriminating network can hardly distinguish it from the actual image.

In recent years, with the continuous development of neural networks, the combination of FPGA and neural networks has become increasingly closer, being used in various fields and having achieved certain results.

3. Application of Neural Networks Based on FPGA

The largest proportion of FPGA applications is in the field of communication, often used in large flow data transmission, digital signal processing, and other occurrences. With the continuous development of neural networks, the application field of FPGA has expanded from the original communication to a wider range of fields, such as national defense, the military industry, the aerospace industry, industrial control, security monitoring, intelligent medical treatment, and intelligent terminals, and increasingly more intelligent products have been derived from them.

In the academic community, the application of the combination of FPGA and neural networks has attracted increasingly more attention, and the optimal design of neural networks based on FPGA has also become a research hotspot. Scholars have also conducted much research and have obtained some results. We introduce the current research results of the combination of FPGA and neural networks in terms of three aspects: the application of FPGA-based CNN, the application of FPGA-based RNN, and the application of FPGA-based GAN, and analyzed the directions for improvement of these studies.

3.1. Application of CNNs Based on FPGA

In terms of intelligent medical treatment, in November 2019, Serkan Sağlam et al. [47] deployed a CNN on FPGA to classify malaria disease cells and achieved 94.76% accuracy. In 2020, Qiang Zhang et al. [48] adopted a CPU + FPGA heterogeneous system to realize CNN classification of heart sound sample data, with an accuracy of 86%. The fastest time to classify 200 heart sound samples was 0.77 s, which was used in the machine of primary hospital to assist in the initial diagnosis of congenital heart disease. In 2021, Jiying Zhu et al. [49] proposed a computed tomography (CT) diagnostic image recognition of cholangiocarcinoma that was based on FPGA and a neural network, showing an excellent classification performance on liver dynamic CT images. In September of the same year, Siyu Xiong et al. [50] used an FPGA-based CNN to speed up the detection and segmentation of 3D brain tumors, providing a new direction for the improvement of automatic segmentation of brain tumors. In 2022, H. Liu et al. [51] designed an FPGA-based multi-task recurrent neural network gesture recognition and motion assessment upper limb rehabilitation device, making it unnecessary for patients with upper limb movement disorders to spend a large amount of time in the hospital for upper limb rehabilitation training. Using this device can help patients to complete the same professional upper limb rehabilitation training at home in the same way as in the hospital, helping the patients in reducing the burden of medical expenses and reducing the investment of a large number of medical resources. The intelligent rehabilitation system adopts a high-level synthesis design of HLS, and a convolutional recurrent neural network (C-RNN) is deployed on Zynq ZCU104 FPGA. The experiments showed that the system can recognize the unnatural features (such as tremor or limited flexion and extension) in patients’ dynamic movements and upper limb movements with an accuracy of more than 99%. However, the system does not optimize the convolution operation in the network, nor does it optimize the memory access of parameters.

In terms of national defense, in 2020, Cihang Wang et al. [52] found that a large number of high-precision and high-resolution remote sensing images were used in civil economic construction and military national defense, and thus they proposed a scheme of real-time processing of remote sensing images that was based on a convolutional neural network on the FPGA platform. This scheme optimized CNN on FPGA from two aspects: spatial parallel and temporal parallel. By adding pipeline design and using the ideas of module reuse and data reuse, the pressure of the data cache was reduced, and the resource utilization of FPGA was increased. Compared with other schemes, the proposed scheme greatly improved the recognition speed of remote sensing images, reduced the power consumption, and achieved 97.8% recognition accuracy. Although the scheme uses many speedup techniques, it does not optimize the convolution operation, which has the most significant performance improvement. In the next step, the traditional CNN convolution operation can be transformed into a Winograd fast convolution operation, so as to reduce the use of multiplication and accumulation operation, and thus improving the model operation rate and reducing the resource occupancy.

In the same year, Buyue Qin et al. [53] proposed a special processor for key point detection of aircraft that was based on FPGA and deployed a VGG-19 deep neural network (DNN) to speed up the detection process of enemy aircraft, so as to detect enemy aircraft in the first time and avoid the danger of being attacked. The design was implemented on Xilinx Virtex-7 VC709 FPGA at 150 MHz (Mega Hertz) using HLS high-level synthesis, fixed-point quantization, on-chip data buffering, and FIFO (first in first out) optimization methods. Compared to the Intel I7-8700K (@ 3.7 GHz, Giga Hertz) processor, the former is 2.95 times the throughput of the latter and 17.75 times the performance power ratio. Although the processor uses HLS high-level synthesis to simplify the difficulty of network deployment on FPGA, HLS causes individuals to focus on the design and to pay less attention to the specific implementation of the bottom layer by integrating other languages such as C/C++ (the C/C++ programming language) into the HDL (hardware description language). Compared with the implementation of VHDL (very high-speed integrated circuit hardware description language) or Verilog (Verilog HDL), the optimization objective of the algorithm is not the actual on-board objective, and thus the final implementation often fails to meet the timing or power constraints.

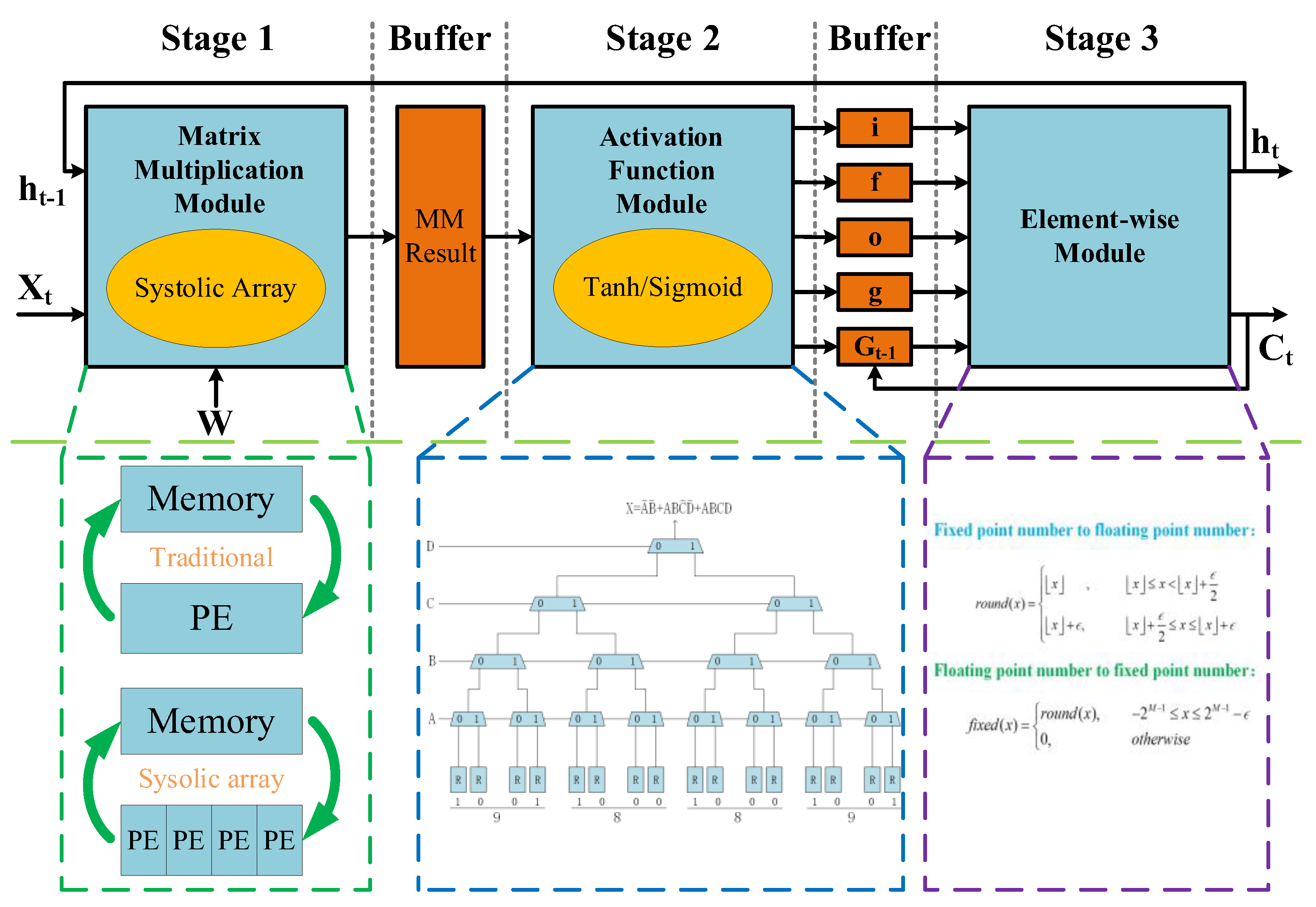

3.2. Application of RNNs Based on FPGA

At present, in addition to the application of deep neural networks into the fields of image and video, an FPGA-based speech recognition system has also become a research hotspot. Among them, the commonly used speech recognition model is the RNN and its variant LSTM. Due to its huge market demand, speech recognition develops rapidly. In intelligent speech recognition products, in order to ensure certain flexibility and mobility, the speech recognition model is usually deployed on FPGA to meet the needs of intelligence and production landing.

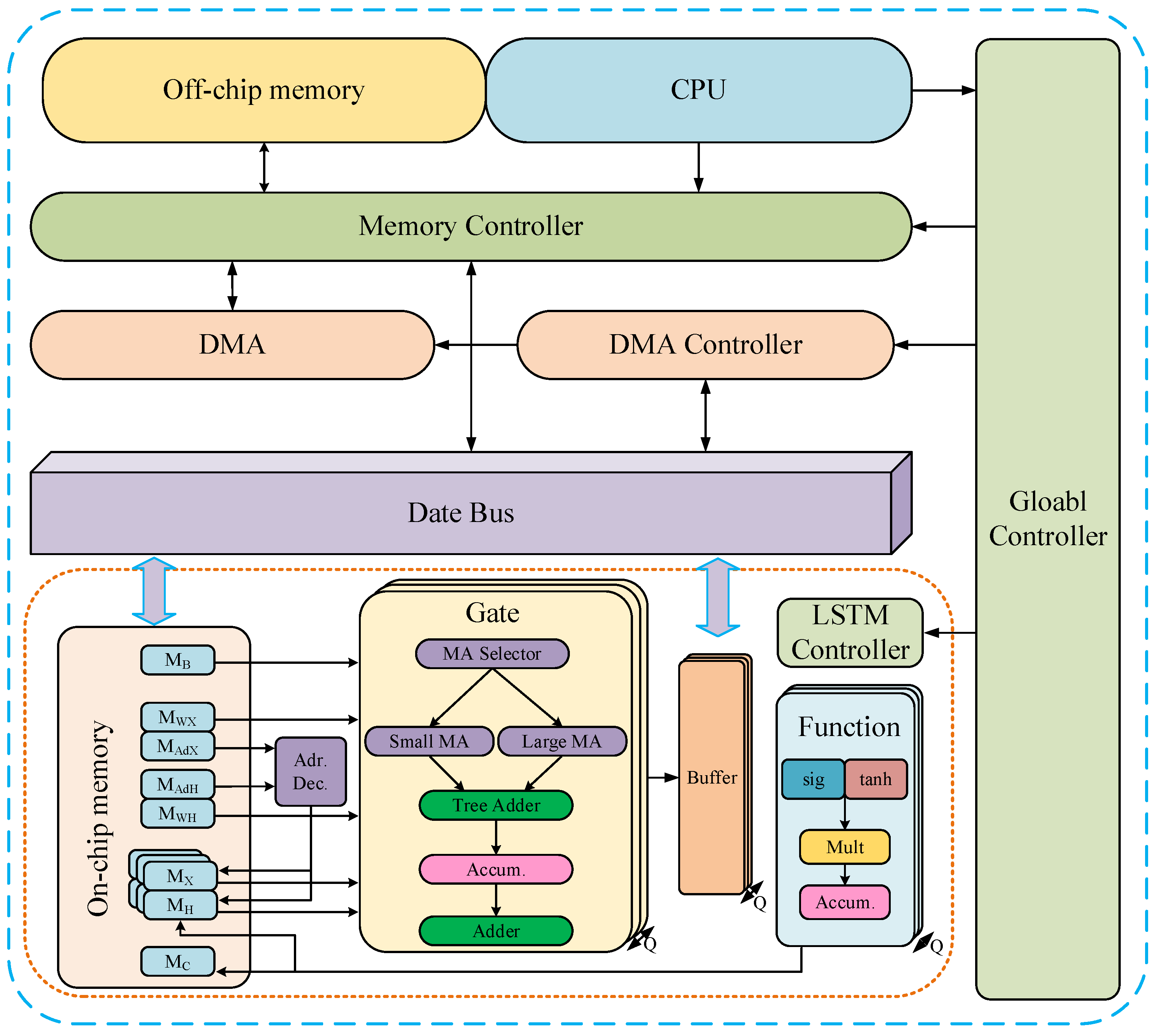

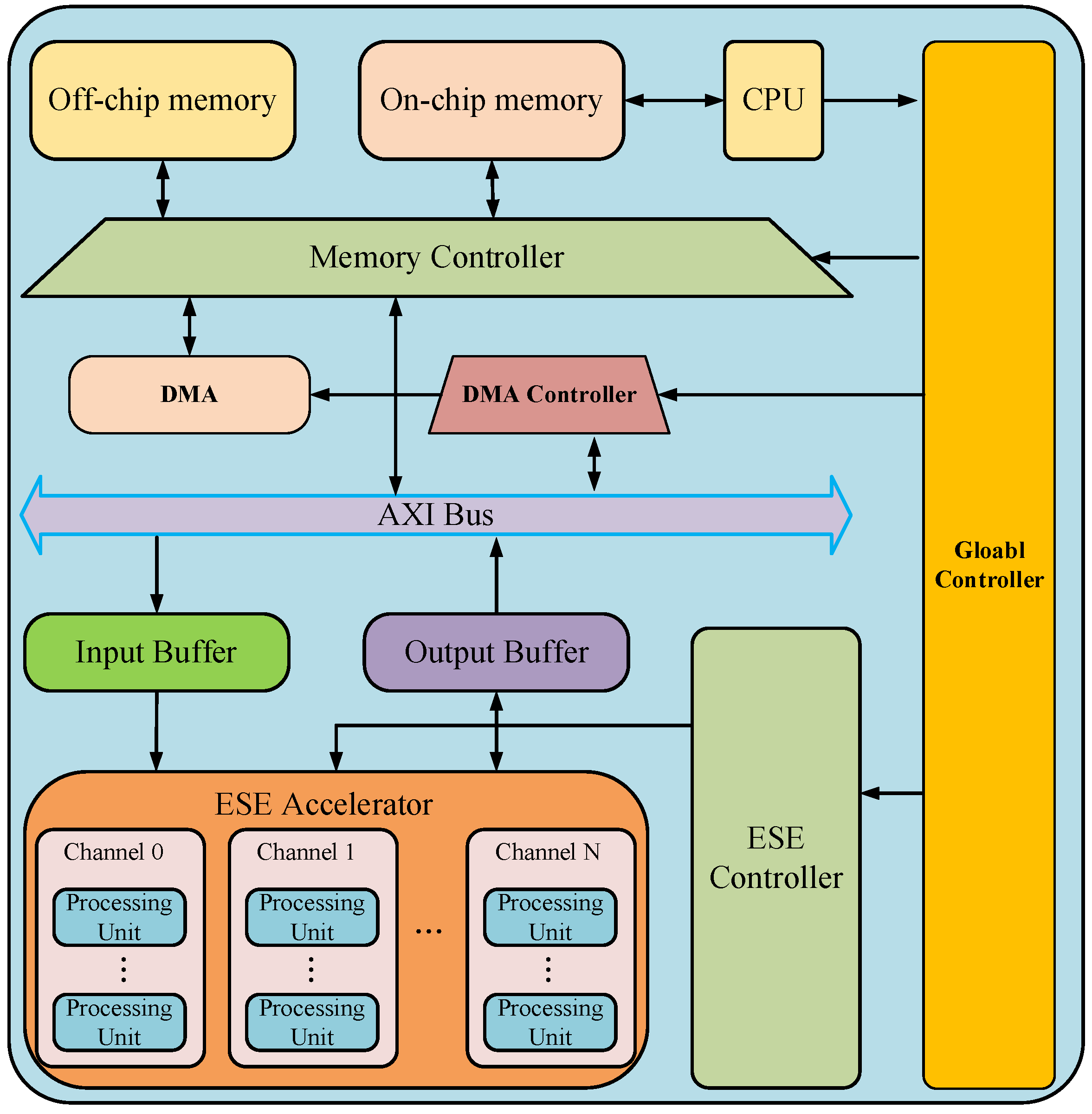

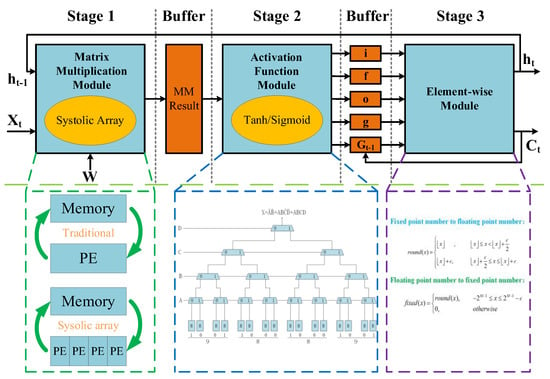

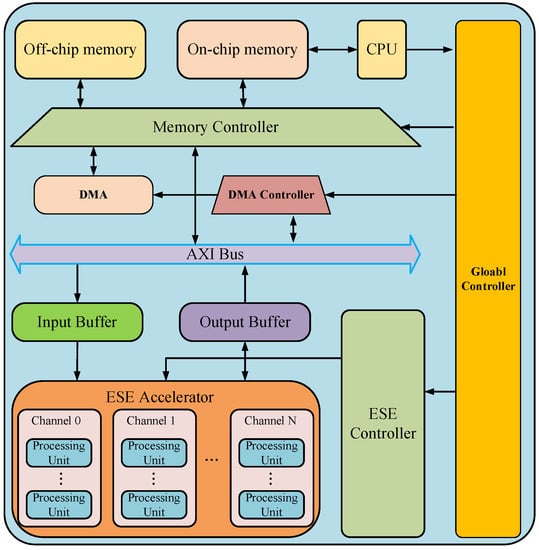

In 2016, the work of J. C. Ferreira and J. Fonseca et al. [54] was considered as one of the earliest works to implement the LSTM network on the FPGA hardware platform, which focused people’s attention from the software implementation of LSTM to FPGA hardware. In 2017, Y. Guan et al. [55] implemented the LSTM network using special hardware IP containing devices such as data scheduler, FPGA, AXI4Lite (one of the Advanced eXtensible Interfaces) bus, and optimized computational performance and communication requirements in order to accelerate inference. In the same year, Y. Zhang et al. conducted two works. First, they proposed an energy-efficient optimization structure based on tiling vector multiplication, binary addition tree, overlap computation, and data access methods [56]. The second further developed on the basis of the first work and improved the performance by adding sparse LSTM layers that occupy less resources [57]. Song Han et al. [58] proposed the ESE (efficient speech recognition engine), a sparse LSTM efficient speech recognition engine based on FPGA, which uses a load-balancing sensing pruning method. The proposed method can compress the size of the LSTM model by a factor of 20 (10 times pruning, 2 times quantization) with negligible loss of prediction accuracy. They also proposed a scheduler that encodes and partitions the compression model into multiple parallel PE and schedule complex LSTM data streams. Compared with the peak performance of the uncompressed LSTM model of 2.52 GOPS (Giga Operations Per Second), the ESE engine reached the peak performance of 282 GOPS on a Xilinx XCKU060 FPGA with 200 MHz operating frequency. ESE, evaluated in the LSTM speech recognition benchmark, was 43 and 3 times faster than the Intel Core I7 5930K CPU and Pascal Titan X GPU implementations, respectively, and was 40 and 11.5 times more energy efficient, respectively.

In 2018, Zhe Li et al. [59] summarized two previous works on the FPGA implementation of the LSTM RNN inference stage on the basis of model compression. One work found that the network structure became irregular after weight pruning. Another involved adding a cyclic matrix to the RNN to represent the weight matrix in order to achieve model compression and acceleration while reducing the influence of irregular networks. On this basis, an efficient RNN framework for automatic speech recognition based on FPGA was proposed, called E-RNN. Compared with the previous work, E-RNN achieved a maximum energy efficiency improvement of 37.4 times, which was more than two times that of the latter work under the same accuracy. In 2019, Yong Zheng et al. [60] also used the pruning operation to compress the LSTM model, which was different from the work of Zhe Li et al. [59] in that they used the permutation block diagonal mask matrix to pry the model. The structured sparse features were created, and the normalized linear quantization method was used to quantify the weight and activation function, so as to achieve the purpose of hardware friendliness. However, compared with similar jobs, the acceleration effect was not very significant.

In 2020, Yuxi Sun et al. [61] adopted the idea of model partitioning. Unlike Song Han et al. [58], who coded the compression model and divided it into multiple parallel PEs for processing, they extended the reasoning of deep RNN by dividing a large model into FPGA clusters. The whole FPGA cluster shared an RNN model, and thus each FPGA processed only a part of the large RNN model, which reduced the processing burden of FPGA. The parallelism of the FPGA cluster and the time dependence of RNN made the delay basically unchanged when the number of RNN layers increased. Compared to Intel CPU, 31 times and 61 times speedup were achieved for single-layer and four-layer RNNS, respectively.

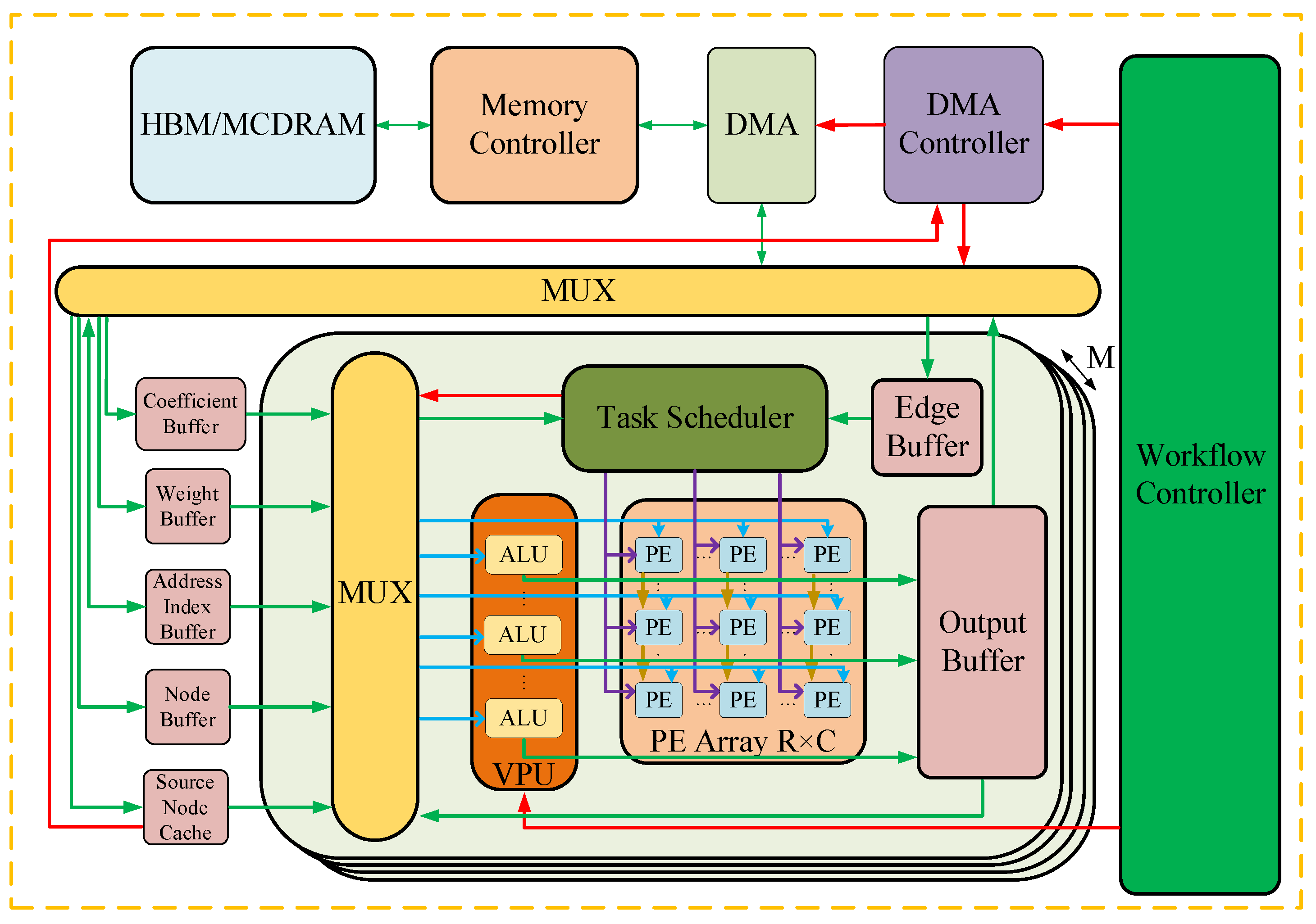

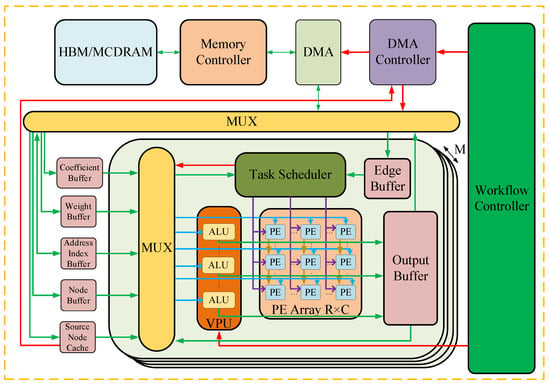

In December of the same year, Chang Gao et al. [62] proposed a lightweight gated recursive unit (GRU)-based RNN accelerator, called EDGERDNN, which was optimized for low-latency edge RNN inference with batch size 1. EDGERDRNN used a delta network algorithm inspired by pulsed neural networks in order to exploit the temporal sparsity in RNN. Sparse updates reduced distributed RAM (DRAM) weight and memory access by a factor of 10, and reduced latency, resulting in an average effective throughput of 20.2 GOP/s for batch size 1. In 2021, Jinwon Kim et al. [63] implemented an efficient and reconfigurable RNN inference processor AERO on a resource-limited Intel Cyclone V FPGA on the basis of the instruction set architecture that specializes in processing the raw vector operations that constitute the data stream of RNN models. The vector processing unit (VPU) based on the approximation multiplier was used to complete the approximate calculation in order to reduce the resource usage. Finally, AERO achieved a resource utilization of 1.28 MOP/s/LUT. As the authors stated, the next step can be achieved by the multi-core advantage of FPGA clusters in order to achieve higher reasoning speed.

In June 2022, Jianfei Jiang et al. [64] proposed a subgraph segmentation scheme based on the CPU-FPGA heterogeneous acceleration system and the CNN-RNN hybrid neural network. The Winograd algorithm was applied to accelerate the CNN. Fixed-point quantization, cyclic tiling, and piecewise linear approximation of activation function were used to reduce hardware resource usage and to achieve high parallelization in order to achieve RNN acceleration. The connectionist text proposal network (CTPN) was used for testing on an Intel Xeon 4116 CPU and Arria10 GX1150 FPGA, and the throughput reached 1223.53 GOP/s. Almost at the same time, Chang Gao et al. [65] proposed a new LSTM accelerator called “Spartus” that was again based on spatiotemporal sparsity, with it utilizing spatiotemporal sparsity in order to achieve an ultra-low delay inference. Different from the pruning method used by Zhe Li [59] and Yong Zheng et al. [60], they used a new structured pruning method of column balancing target dropout (CBTD) in order to induce spatial sparsity, which generates structured sparse weight matrices for balanced workloads and reduces the weight of memory access and related arithmetic operations. The throughput of 9.4 TOp/s and energy efficiency of 1.1 Top/J were achieved on a Xilinx Zynq 7100 FPGA with an operating frequency of 200 MHz.

3.3. Application of GANs Based on FPGA

In 2018, Amir Yazdanbakhsh et al. [66] found that generators and discriminators in GANs use convolution operators differently. The discriminator uses the normal convolution operator, while the generator uses the transposed convolution operator. They found that due to the algorithmic nature of transposed convolution and the inherent irregularity in its computation, the use of conventional convolution accelerators for GAN leads to inefficiency and underutilization of resources. To solve these problems, FlexiGAN was designed, an end-to-end solution that generates optimized synthesizable FPGA accelerators according to the advanced GAN specification. The architecture takes advantage of MIMD (multiple instructions stream multiple data stream) and SIMD (single instruction multiple data) execution models in order to avoid inefficient operations while significantly reducing on-chip memory usage. The experimental results showed that FlexiGAN produced an accelerator with an average performance of 2.2 times that of the optimized conventional accelerator. The accelerator delivered an average of 2.6 times better performance per watt compared to the Titan X GPU. Along the same lines as Amir Yazdanbakhsh et al. [66], Jung-Woo Chang et al. [67] found that the GAN created impressive data mainly through a new type of operator called deconvolution or transposed convolution. On this basis, a Winograd-based deconvolution accelerator wsa proposed, which greatly reduces the use of the multiplication–accumulation operation and improves the operation speed of the model. The acceleration effect was 1.78~8.38 times of the fastest model at that time. However, as was the case for Amir Yazdanbakhsh et al., they only optimized the convolution operation in GANs, but did not optimize the GAN model framework.

In the study of infrared image colorization, in order to obtain more realistic and detailed colorization results, in 2019, Xingping Shi et al. [68] improved the generator based on Unet, designed a discriminator for deconvolution optimization, proposed a DenseUnet GAN structure, and added a variety of loss functions in order to optimize the colorization results. The data were preprocessed, and the face localization neural network used in preprocessing datasets was accelerated by FPGA. It achieved better results than other image coloring methods on large public datasets.

In terms of image reconstruction research, Dimitrios Danopoulos et al. [69] first used GAN to implement image reconstruction application on FPGA in 2021. Compared with CPU and GPU platforms, generator models trained with specific hardware optimizations can reconstruct images with high quality, minimum latency, and the lowest power consumption.

In terms of hardware implementation of GAN, in 2021, Yue Liu et al. [70] proposed a hardware scheme of a generative adversarial network based on FPGA, which effectively meets the requirements of dense data communication, frequent memory access, and complex data operation in generative adversarial networks. However, the parameters of GANs are not optimized by the acceleration design such as ping-pong cache, and thus there is a large amount of room for improvement in this research.

4. Neural Network Optimization Technology Based on FPGA

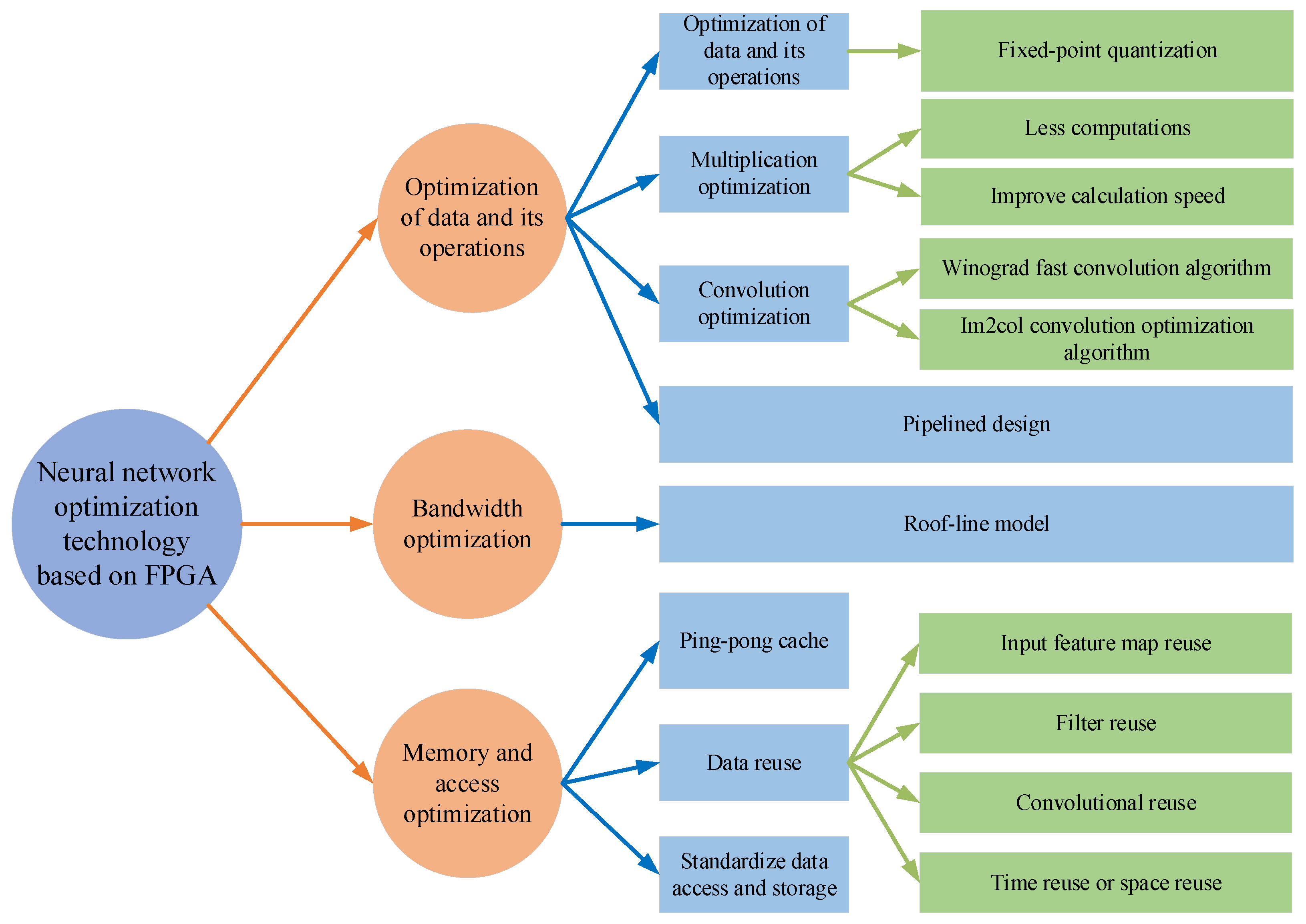

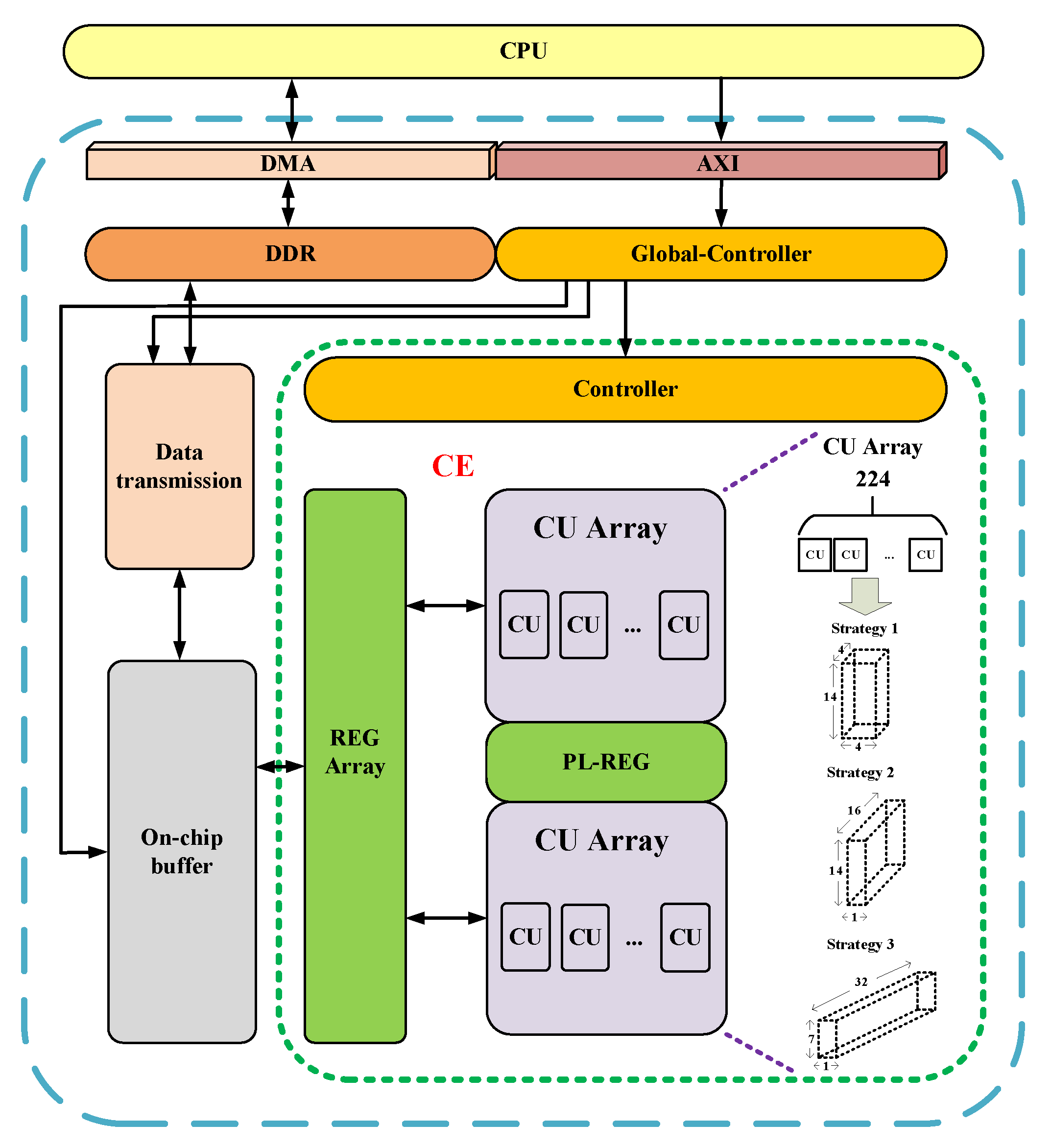

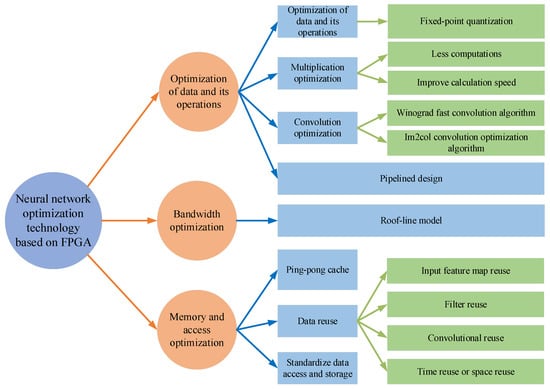

With the rapid development of artificial intelligence, the number of layers and nodes of neural network models is increasing, and the complexity of the models is also increasing. Deep learning and neural networks have put forward more stringent requirements on the computing ability of hardware. On the basis of the advantages of FPGA mentioned in the introduction, increasingly more scholars are choosing to use FPGA to complete the deployment of neural networks. As shown in Figure 6, according to different design concepts and requirements, FPGA-based neural network optimization technology can be roughly divided into optimization for data and operation, optimization for bandwidth, and optimization for memory and access, among others, which are introduced in detail below.

Figure 6.

Neural network optimization technology based on FPGA.(fixed-point quantization [71,72,73,74,75,76,77,78], less computations [79,80,81], improve calculation speed [82,83,84,85], Winograd fast convolution algorithm [86,87,88,89,90,91], Im2col convolution optimization algorithm [92,93,94,95,96,97], pipelined design [98,99,100,101,102], Roof-line model [103,104,105], ping-pong cache [106,107,108,109], input feature map reuse [110,111], filter reuse [111,112], convolutional reuse [110,111,112], time reuse or space reuse [111], standardize data access and storage [113,114,115]).

4.1. Optimization of Data and Its Operations

In the aspect of optimization of data and its operation, scholars have made many attempts and achieved certain results. Aiming at the data itself, a method to reduce the data accuracy and computational complexity is usually used. For example, fixed-point quantization is used to reduce the computational complexity with an acceptable loss of data accuracy, so as to improve the computational speed. In terms of operation, the optimization is realized by reducing the computation times of multiplication and increasing the computation speed. The commonly used optimization methods include addition calculation instead of multiplication calculation, the Winograd fast convolution algorithm, and the Im2col convolution acceleration algorithm, among others.

4.1.1. Fixed-Point Quantitative

Since the bit width of the number involved in the operation is finite and constant in FPGA calculations, the floating-point decimal number involved in the operation needs to be fixed to limit the bit width of a floating-point decimal to the range of bit width allowed by FPGA. Floating-point decimals mean that the decimal point position is not fixed, and fixed-point decimals mean that the decimal point position is fixed. Fixed-point quantization is the use of finite digits to represent infinite precision numbers, that is, the quantization function maps the full precision numbers (the activation parameters, weight parameters, and even gradient values) to a finite integer space. Fixed-point quantization can greatly reduce the memory space of each parameter and the computational complexity within the acceptable accuracy loss range, so as to achieve neural network acceleration.

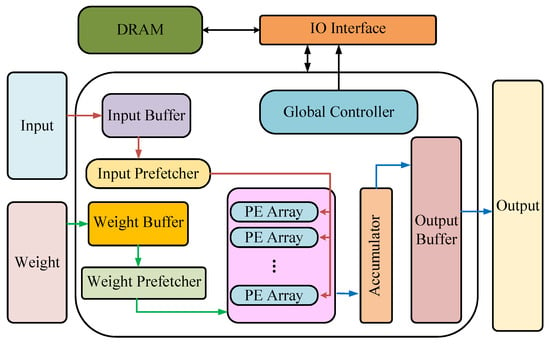

In 2011, Vincent Vanhoucke first proposed the linear fixed-point 8-bit quantization technology, which was initially used in X86 CPU, greatly reducing the computational cost, with it then being slowly applied to FPGA [71]. In March 2020, Shiguang Zhang et al. [72] proposed a reconfigurable CNN accelerator with an AXI bus based on advanced RISC machine (ARM) + FPGA architecture. The accelerator receives the configuration signal sent by the ARM. The calculation in the reasoning process of different CNN layers is completed by time-sharing, and the data movement of the convolutional layer and pooling layer is reduced by combining convolutional and pooling operations; moreover, the number of access times of off-chip memory is reduced. At the same time, fixed-point quantization is used to convert floating-point numbers into 16-bit dynamic fixed-point format, which improves the computational performance. The peak performance of 289 GOPS is achieved on a Xilinx ZCU102 FPGA.

In June of the same year, Zongling Li et al. [73] designed a CNN weight parameter quantization method suitable for FPGA, different from the direct quantization operation in the literature [72]. They transformed the weight parameter into logarithm base 2, which greatly reduced the quantization bit width, improved the quantization efficiency, and reduced the delay. Using a shift operation instead of a convolution multiplication operation saves a large number of computational resources. In December of the same year, Sung-en Chang et al. [74] first proposed a hardware-friendly quantization method named Sum-of-Power-of-2 (SP2), and on the basis of this method proposed a mixed scheme quantization (MSQ) combining SP2 and fixed-point quantization methods. By combining these two schemes, a better match with the weight distribution is achieved, accomplishing the effect of maintaining or even improving the accuracy.

In 2021, consistent with X. Zhao’s view of whole-integer quantization [75], Zhenshan Bao et al. [76] proposed an effective quantization method that was based on hardware implementation, a learnable parameter soft clipping fully integer quantization (LSFQ). Different from previous studies that only quantified weight parameters, input and output data, etc., their study quantified the entire neural network as integers and automatically optimized the quantized parameters in order to minimize losses through backpropagation. Then, the batch norm layer and convolution layer were fused to further quantify the deviation and quantization step. In the same year, Xiaodong Zhao et al. [77] optimized the YOLOv3 network structure through pruning and the Int8 (Integer8) quantization algorithm with the trade-off between speed and accuracy. Good acceleration was achieved with limited and acceptable loss of accuracy. Some scholars proposed the use of the quantization algorithm to map matrix elements participating in matrix multiplication operations from single-precision floating-point data to half-precision floating-point data. Under the condition of ensuring the accuracy of the algorithm model, the consumption of on-chip storage resources on FPGA is greatly saved and the computing speed is improved [78].

4.1.2. Multiplication Optimization

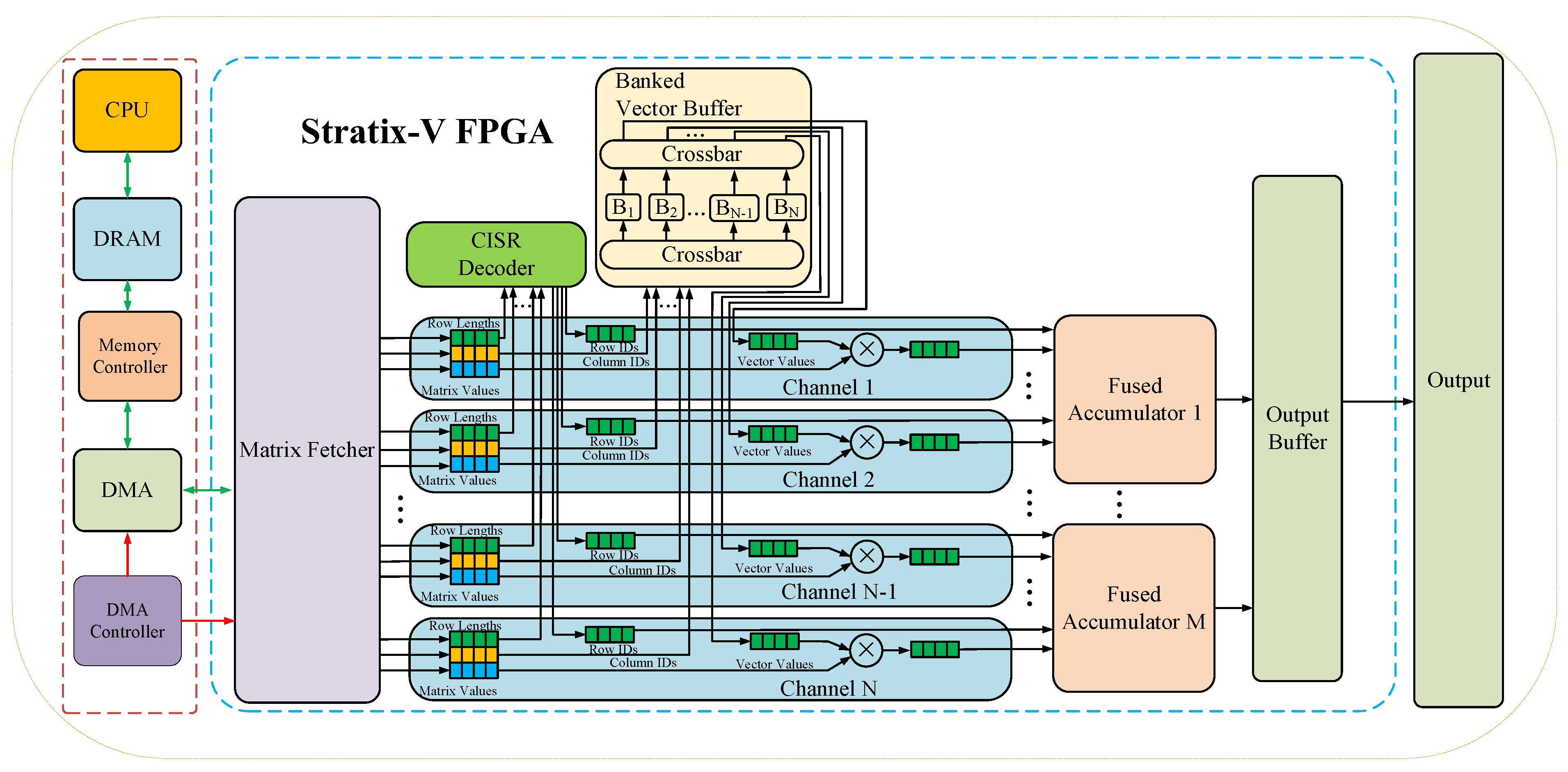

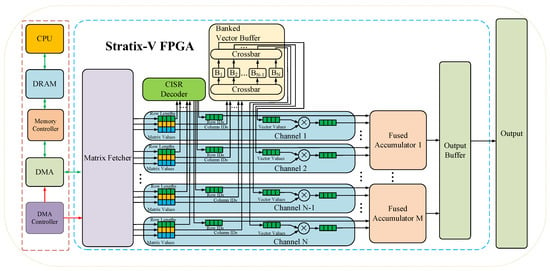

Matrix operations mainly appear in the training and forward computation of neural networks and they occupy a dominant position; thus, it is of great significance to accelerate matrix operation. The optimization of matrix multiplication can be achieved by reducing the number of multiplication computations and increasing the computation speed. Matrix operations often have remarkable parallelism. Scholars usually use the characteristics of FPGA parallel computing to optimize matrix operations. In 2006, D. K. Iakovidis et al. [82] designed an FPGA structure capable of performing fast parallel co-occurrence matrix computation in grayscale images. The symmetries and sparsity of a co-occurrence matrix were used to achieve shorter processing time and less FPGA resource occupation. In 2014, Jeremy Fowers et al. [79] designed an FPGA accelerator with high memory bandwidth for Sparse matrix–vector multiplication.

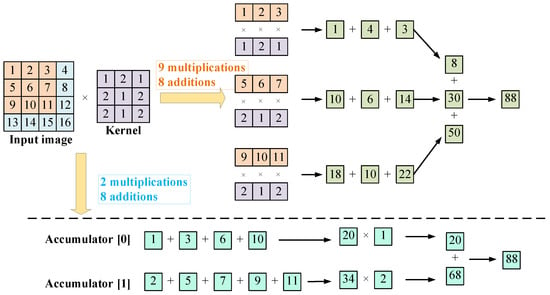

As shown in Figure 7, the architecture uses specialized compressed interleave sparse row (CISR) coding to efficiently process multiple rows of the matrix in parallel, combined with a caching design that eliminates the replication of the buffer carrier and enables larger vectors to be stored on the chip. The design maximizes the bandwidth utilization by organizing the data from memory into parallel channels, which can keep the hardware complexity low while greatly improving the parallelism and speed of data processing.

Figure 7.

Neural network optimization technology based on FPGA.

In 2016, Eriko Nurvitadhi et al. [80] used XNOR Gate to replace the multiplier in FPGA in order to reduce the computational difficulty and improve the computational efficiency from the perspective of the multiplier occupying more resources. In 2019, Asgar Abbaszadeh et al. [83] proposed a universal square matrix computing unit that was based on cyclic matrix structure and finally tested a 500 × 500 matrix on an FPGA with an operating frequency of 346 MHz, achieving a throughput of 173 GOPS. In 2020, S. Kala and S. Nalesh [84] proposed an efficient CNN accelerator that was based on block Winograd GEMM (general matrix multiplication) architecture. Using blocking technology to improve bandwidth and storage efficiency, the ResNet-18 CNN model was implemented on XC7VX690T FPGA. Running at a clock frequency of 200MHz, the average throughput was 383 GOPS, which was a significant improvement in comparison to the work of Asgar Abbaszadeh et al. In the same year, Ankit Gupta et al. [81] made a tradeoff between accuracy and performance and proposed a new approximate matrix multiplier structure, which greatly improved the speed of matrix multiplication by introducing a negligible error amount and an approximate multiplication operation.

In 2022, Shin-Haeng Kang et al. [85] implemented the RNN-T (RNN-Transducer) inference accelerator on FPGA for the first time on the basis of Samsung high-bandwidth memory–processing in memory (HBM-PIM) technology. By placing the DRAM-optimized AI engine in each memory bank (storage subunit), the processing power was brought directly to the location of the data storage, which enabled parallel processing and minimized data movement. The HBM internal bandwidth was utilized to significantly reduce the execution time of matrix multiplication, thus achieving acceleration.

4.1.3. Convolution Optimization

For the optimization of convolution algorithms, scholars have provided the following two ideas. One is the Winograd fast convolution algorithm, which speeds up convolution by increasing addition and reducing multiplication. Another is the Im2col convolution optimization algorithm that sacrifices storage space in order to improve the speed of the convolution operation. This is described in detail below.

- (1)

- Winograd fast convolution algorithm

The Winograd Fast Convolutional algorithm was first proposed by Shmuel Winograd in his paper “Fast Algorithms for Convolutional Neural Networks” in 1980, but it did not cause much sensation at that time. In the 2016 CVPR conference, Lavin et al. [86] proposed the use of the Winograd fast convolution algorithm to accelerate the convolution operation. Since then, the Winograd algorithm has been widely used to accelerate the convolution algorithm.

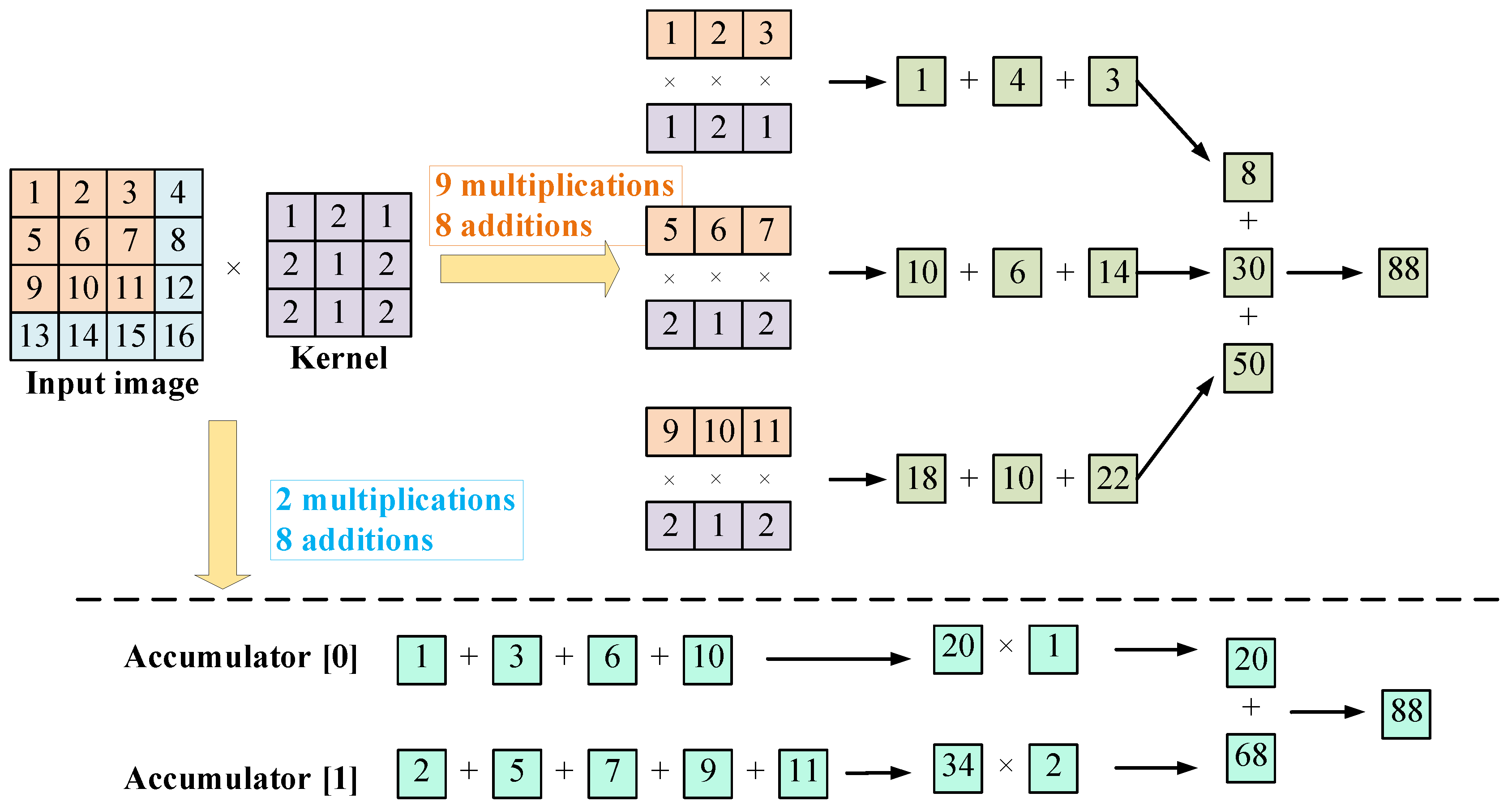

The Winograd algorithm can accelerate the convolution operation because it uses more addition to reduce multiplication calculation, thus reducing the amount of calculations, using less FPGA resources, and improving operation speed, not as well as when FFT (fast Fourier transform) is introduced into the plural. But with the premise being, in the processor, the clock cycle of the calculation of the multiplication are longer than the clock cycles of the addition.

Taking the one-dimensional convolution operation as an example, the input signal as d = [d0 d1 d2 d3] T, and the convolution kernel as g = [g0 g1 g2] T, then the convolution can be written as the following matrix multiplication form:

For general matrix multiplication, six multiplications and four additions are required, as follows: r0 = d0g0 + d1g1 + d2g2, r1 = d1g0 + d2g1 + d3g2. However, the matrix transformed by the input signal in the convolution operation is not an arbitrary matrix, in which a large number of repetitive elements are regularly distributed, such as d1 and d2 in row 1 and row 2. The problem domain of the matrix multiplication transformed by convolution is smaller than that of the general matrix multiplication, which makes it possible to optimize. After conversion using a Winograd algorithm:

Among them, the m1 = (d0 − d2) g0, m2 = (d1 + d2) (g0 + g1 + g2)/2, m3 = (d2 − d1) (g0 − g1 + g2)/2, m4 = (d1 − d3) g2. To calculate r0 = m1 + m2 + m3, r1 = m2 − m3 − m4, the number of operations required are four additions (subtraction) on the input signal d and four multiplications and four additions on the output m, respectively. In the inference stage of the neural network, the elements on the convolution kernel are fixed, and therefore the operation on g can be calculated well in advance. In the prediction stage, it only needs to be calculated once, which can be ignored. Therefore, the total number of operations required is the sum of the operation times on d and m, namely, four times of multiplication and eight times of addition. Compared with the direct operation of six multiplications and four additions, the number of multiplications decreases, and the number of additions increases. In FPGA, the multiplication operation is much slower than the addition operation, and it will occupy more resources. By reducing multiplication times and adding a small amount of addition, the operation speed can be improved.

It is worth noting that the Winograd algorithm is not a panacea. As the number of additions increases, additional transform computation and transform matrix storage are required. As the size of convolution kernel and tile increases, the cost of addition, transform, and storage needs to be considered. Moreover, the larger the tile, the larger the transform matrix, and the loss of calculation accuracy will further increase. Therefore, the general Winograd algorithm is only suitable for small convolution kernels and tiles.

In FPGA, the neural network optimization based on the Winograd algorithm also has a large number of research achievements. In 2018, Liqiang Lu et al. [87] proposed an efficient sparse Winograd convolutional neural network accelerator (SpWA) that was based on FPGA. Using the Winograd fast convolution algorithm, transforming feature maps to specific domains to reduce algorithm complexity, and compressing CNN models by pruning unimportant connections were able to reduce storage and arithmetic complexity. On the Xilinx ZC706 FPGA platform, at least 2.9× speedup was achieved compared to previous work. In 2019, Kala S. et al. [88] combined the Winograd algorithm and GEMM on FPGA to speed up the reasoning of AlexNet. In 2020, Chun Bao et al. [89] implemented the YOLO target detection model based on the Winograd algorithm under PYNQ (Python productivity for Zynq) architecture, which was applied in order to accelerate edge computing and greatly reduced the resource usage of FPGA and the power consumption of the model. In November of the same year, Xuan Wang et al. [90] systematically analyzed the challenges of simultaneously supporting the Winograd algorithm, weight sparsity, and activation sparsity. An efficient encoding scheme was proposed to minimize the effect of activation sparsity, and a new decentralized computing-aggregation method was proposed to deal with the irregularity of sparse data. It was deployed and evaluated on ZedBoard, ZC706, and VCU108 platforms and achieved the highest energy efficiency and DSP (digital signal processing) efficiency at the time. In 2022, Bin Li et al. [91] combined the Winograd algorithm with fusion strategy, reduced the amount of data movement and the number of accesses to off-chip memory, and improved the overall performance of the accelerator. On the U280 FPGA board, the mean average precision (mAP) decreased by 0.96% after neural network quantization, and the performance reached 249.65 GOP/s, which was 4.4 times Xilinx’s official parameters.

- (2)

- Im2col convolution optimization algorithm

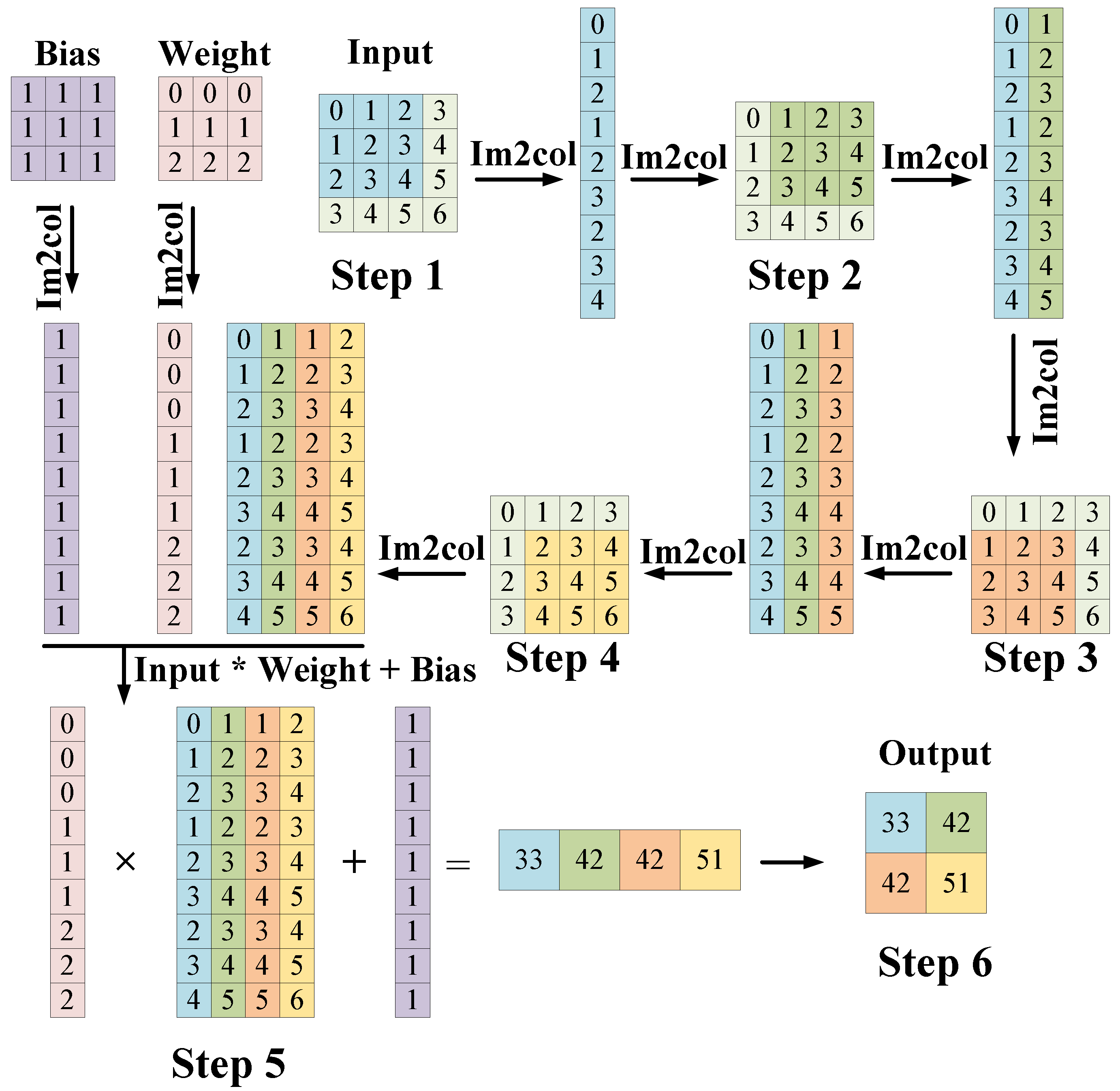

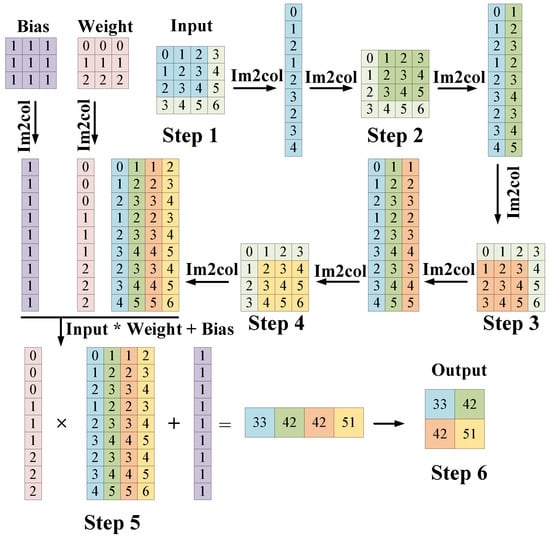

The Im2col convolution optimization algorithm is different from the Winograd algorithm, which uses more addition operations instead of multiplication operations in order to occupy less resources in order to improve the operation speed. The Im2col convolution optimization algorithm adopts the idea of exchanging space for the improvement of computation speed. By converting the receptive field of convolution kernel into a row (column) for storage, the memory access time is reduced, and the operation speed is accelerated.

Because matrix multiplication involves nothing more than the dot product of the corresponding position, it only takes one traversal for both matrices to be multiplied, and thus matrix multiplication is very fast compared to convolution. From the perspective of matrix multiplication, the convolution operation is actually the process of circular matrix multiplication with the template moving. Although the image of each multiplication is locally different, the template remains the same. Every time one adds a template and a local dot product, one multiplies rows and columns in matrix multiplication.

As shown in Figure 8, using the Im2col convolution optimization algorithm as an example, by converting each local multiplication and accumulation process into the multiplication of a row and a column of two matrices, the whole convolution process can be converted into a matrix multiplication process, and the convolution speed can be greatly improved. At the same time, it is worth noting that just because of the action mechanism of Im2col convolution optimization algorithm, the number of elements after Im2col expansion will be more than the number of elements of the original block. Therefore, optimizing the convolution operation using Im2col consumes more memory. Therefore, when the Im2col convolution optimization algorithm is used, memory optimization technology is often used together.

Figure 8.

An example of the Im2col convolution optimization algorithm.

In terms of FPGA-based neural network Im2col convolution optimization, in 2017, Feixue Tang et al. [92] used the Im2col algorithm to optimize the convolution algorithm and then converted the optimized convolution algorithm into a matrix operation, so as to improve the operation speed. In 2020, Feng Yu et al. [93] combined the quantization method with the Im2col algorithm for visual tasks and designed a dedicated data-stream convolution acceleration under the heterogeneous CPU-FPGA platform PYNQ, including the changed data-stream order and Im2col for convolution. Compared with ARM CPU, 120× acceleration was achieved on FPGA operating at 250 MHz.

In 2021, Tian Ye et al. [94] applied the Im2col algorithm to the FPGA accelerator of homomorphic encryption of private data in order to optimize convolution, which greatly reduced the delay of the convolution algorithm. In October of the same year, Hongbo Zhang et al. [95] combined the Im2col algorithm with pipeline design and applied it to the lightweight object detection network YOLOV3-Tiny, achieving 24.32 GOPS throughput and 3.36 W power consumption on the Zedboard FPGA platform.

In 2022, Chunhua Xiao et al. [96] proposed a smart data stream transformation method (SDST), which is similar to but different from the Im2col algorithm. Different from the Im2col algorithm, with the improvement of the peak performance of the GEMM kernel, the overhead caused by the Im2col algorithm, which converts convolution to matrix multiplication, becomes obvious. SDST divides the input data into conflict-free streams on the basis of the local property of data redundancy, which reduces the external storage of data and keeps the continuity of data. A prototype system based on SDST was implemented on Xilinx ZC706 APSoC (all programmable system-on-chip), and the actual performance of accelerated CNN was evaluated on it. The results showed that SDST was able to significantly reduce the overhead associated with the explicit data conversion of convolutional inputs at each layer. In March of the same year, Bahadır Özkılbaç et al. [97] combined a fixed-point quantization technique with the Im2col algorithm in order to optimize convolution, reduce temporary memory size and storage delay, perform the application of digital classification of the same CNN on acceleration hardware in the ARM processor and FPGA, and observe the delay time. The results show that the digital classification application executed in the FPGA was 30 times faster than that executed in the ARM processor.

4.1.4. Pipelined Design

Pipelined design is a method of systematically dividing combinational logic, inserting registers between the parts (hierarchies), and temporarily storing intermediate data. The purpose is to decompose a large operation into a number of small operations. Each small operation takes less time, shortens the length of the path that a given signal must pass in a clock cycle, and improves the frequency of operations; moreover, small operations can be executed in parallel and can improve the data throughput.

The design method of Pipeline can greatly improve the working speed of the system. This is a typical design approach that converts “area” into “velocity”. The “area” here mainly refers to the number of FPGA logic resources occupied by the design, which is measured by the consumed flip-flop (FF) and look-up table (LUT). “Speed” refers to the highest frequency that can be achieved while running stably on the chip. The two indexes of area and speed always run through the design of FPGA, which is the final standard of design quality evaluation. This method can be widely used in all kinds of designs, especially the design of large systems with higher speed requirements. Although pipelining can increase the use of resources, it can reduce the propagation delay between registers and ensure that the system maintains a high system clock speed. In the convolutional layer of deep neural networks, when two adjacent convolutional iterations are performed and there is no data dependency, pipelining allows for the next convolutional operation to start before the current operation is completely finished, improving the computational power, with pipelining having become a necessary operation for most computational engine designs. In practical application, considering the use of resources and the requirements of speed, the series of pipelines can be selected according to the actual situation in order to meet the design needs.

In terms of using pipeline design to accelerate neural networks, pipeline design is usually not used alone, but instead used in conjunction with other techniques. In 2017, Zhiqiang Liu et al. [98] used a pipeline design together with the space exploration method to maximize the throughput of a network model, optimizing and evaluating three representative CNNs (LeNet, AlexNet, and VGG-S) on a Xilinx VC709 board. The results show that the performances of 424.7 GOPS/s, 445.6 GOPS/s, and 473.4 GOPS/s, respectively, were clearly higher than in previous work.

In 2019, Yu Xing et al. [99] proposed an optimizer that integrates graphics, loops, and data layout, as well as DNNVM, a full-stack compiler for an assembler. In the framework of a compiler, the CNN model is transformed into a directed acyclic graph (XGraph). The pipeline design, data layout optimization, and operation fusion technology are applied to XGraph, and lower FPGA hardware resource consumption is realized on VGG and Residual Network 50 (ResNet50) neural networks. On GoogLeNet with operation fusion, a maximum 1.26× speedup was achieved compared to the original implementation. In April of the same year, Wei Wang et al. [100] combined a pipeline design with a ping-pong cache and optimized Sigmoid activation function through a piecewise fitting method combining the look-up table and polynomial. On a Xilinx virtex-7 FPGA with 150 MHz operating frequency, the computational performance was improved from 15.87 GOPS to 20.62 GOPS, and the recognition accuracy was 98.81% on a MNIST dataset.

In 2021, Dong Wen et al. [101] used the CNN for acoustic tasks and combined fixed-point quantization, space exploration methods similar to the roof-line model, and pipeline design in order to achieve higher throughput of the designed FPGA accelerator. In 2022, Varadharajan et al. [102] proposed pipelined stochastic adaptive distributed architectures (P-SCADAs) by combining pipelined design and adaptive technology in the LSTM network, which improved FPGA performance and saved FPGA resource consumption and power consumption.

4.2. Bandwidth Optimization

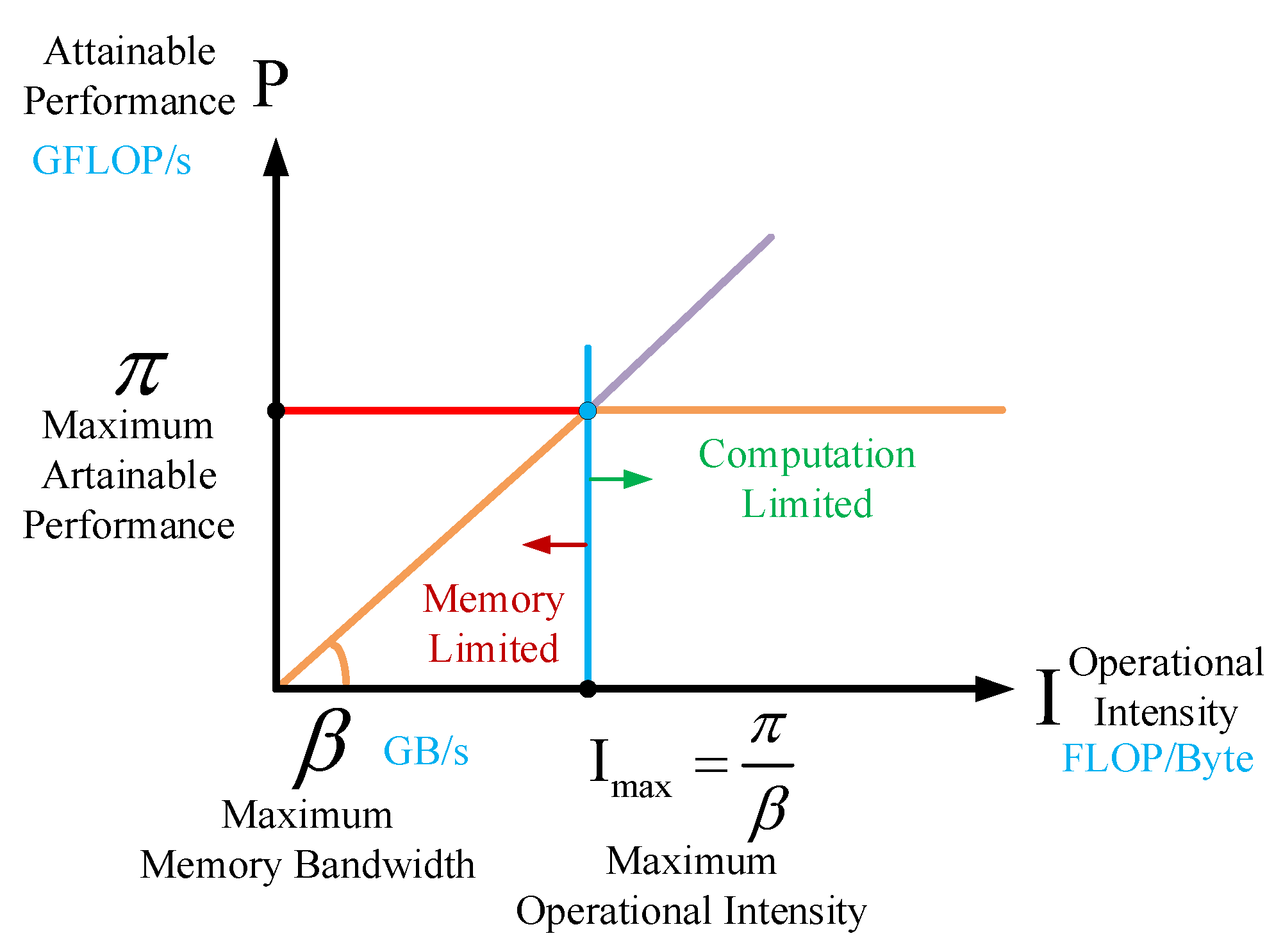

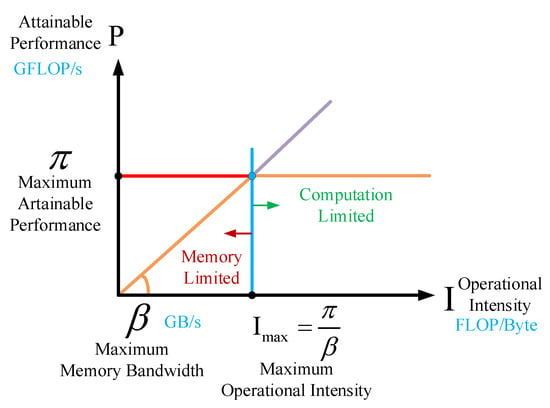

In the deployment of neural networks, all kinds of neural networks must rely on specific computing platforms (such as CPU/GPU/ASIC/FPGA) in order to complete the corresponding algorithms and functions. At this point, the “level of compatibility” between the model and the computing platform will determine the actual performance of the model. Samuel Williams et al. [103] proposed an easy-to-understand visual performance model, the roof-line model, and proposed a quantitative analysis method using operational intensity. The formula showcasing that the model can reach the upper limit of the theoretical calculation performance on the computing platform is provided. It offers insights for programmers and architects in order to improve the parallelism of floating-point computing on software and hardware platforms and is widely used by scholars to evaluate their designs on various hardware platforms to achieve better design results.

The roof-line model is used to measure the maximum floating point computing speed that the model can achieve within the limits of a computing platform. Computing power and bandwidth are usually used to measure performance on computing platforms. Computing power is also known as the platform performance ceiling, which refers to the number of floating pointed operations per second that can be completed by a computing platform at its best, in terms of FLOP/s or GLOP/s. Bandwidth is the maximum bandwidth of a computing platform. It refers to the amount of memory exchanged per second (byte/s or GB/s) that can be completed by a computing platform at its best. Correspondingly, the computing intensity limit Imax is the computing power divided by the bandwidth. It describes the maximum number of calculations per unit of memory exchanged on the computing platform in terms of FLOP/byte. The force calculation formula of the roof-line model is as follows:

As shown in Figure 9, the so-called “roof-line” refers to the “roof” shape determined by the two parameters of computing power and bandwidth upper limit of the computing platform. The green line segment represents the height of the “roof” determined by the computing power of the computing platform, and the red line segment represents the slope of the “eaves” determined by the bandwidth of the computing platform.

Figure 9.

Roof-line model.

As can be seen from the figure, the roof-line model is divided into two areas, namely, the computation limited area and the memory-limited area. In addition, we can find three rules from Figure 9:

- (1)

- When the computational intensity of the model I is less than the upper limit of the computational intensity of the computing platform Imax. Since the model is in the “eaves” interval at this time, the theoretical performance P of the model is completely determined by the upper bandwidth limit of the computing platform (the slope of the eaves) and the computational strength I of the model itself. Therefore, the model is said to be in a memory-limited state at this time. It can be seen that under the premise that the model is in the bandwidth bottleneck, the larger the bandwidth of the computing platform (the steeper the eaves), or the larger the computational intensity I of the model, the greater the linear increase in the theoretical performance P of the model.

- (2)

- When the computational intensity of the model I is greater than the upper limit of the computational intensity of the computing platform Imax. In the current computing platform, the model is in the compute-limited state, that is, the theoretical performance P of the model is limited by the computing power of the computing platform and can no longer be proportional to the computing strength I.

- (3)

- No matter how large the computational intensity of the model is, its theoretical performance P can only be equal to the computational power of the computing platform at most.

It can be seen that increasing the bandwidth ceiling or reducing the bandwidth requirement of the system can improve the performance of the system and thus accelerate it. Specifically, the bandwidth requirement of the system can be reduced by the techniques of data quantization and matrix calculation (described in detail in Section 4.1), and the upper limit of the bandwidth can be increased by data reuse and optimization of data access (described in detail in Section 4.3).

In recent years, many application achievements based on the roof-line model are also involved in the optimization design of neural networks that are based on FPGA. In 2020, Marco Siracusa et al. [104] focused on the optimization of high-level synthesis (HLS). They found that although advanced synthesis (HLS) provides a convenient way to write FPGA code in a general high-level language, it requires a large amount of effort and expertise to optimize the final FPGA design of the underlying hardware, and therefore they proposed a semi-automatic performance optimization method that was based on the FPGA-based hierarchical roof line model. By combining the FPGA roof-line model with the Design Space Exploration (DSE) engine, this method is able to guide the optimization of memory limit and can automatically optimize the design of a calculation limit, and thus the workload is greatly reduced, and a certain acceleration performance can be obtained. In 2021, Enrico Calore et al. [105] optimized the neural network compiler by pipeline design and used the roof-line model to explore the balance between computational throughput and bandwidth ceiling so that FPGA could perform better.

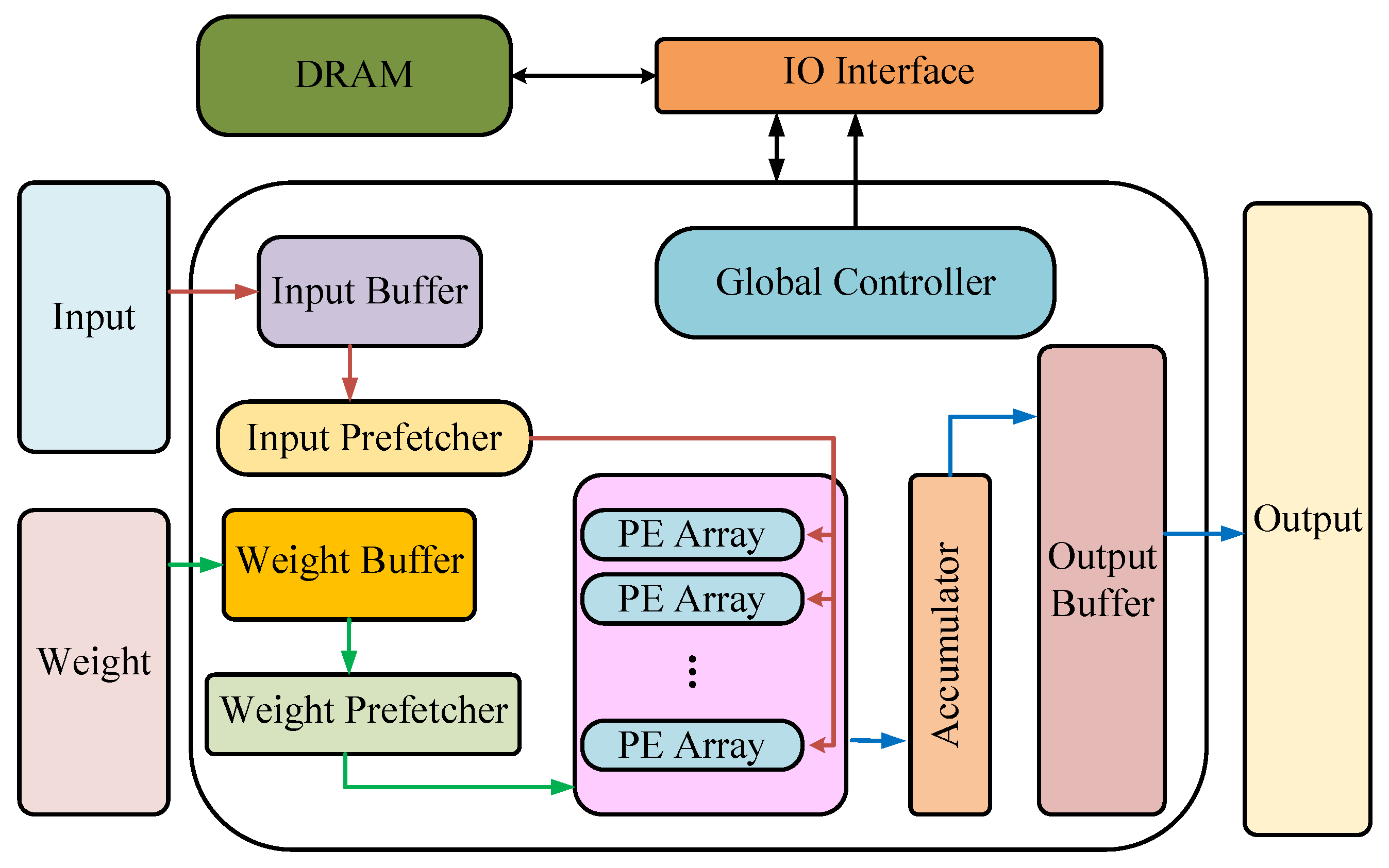

4.3. Memory and Access Optimization

Data storage and access is an essential part of neural networks. A large amount of data will occupy limited memory space. At the same time, a large amount of memory access will greatly increase the execution time of network models and reduce the computational efficiency. In the aspect of memory and access optimization, ping-pong cache, data reuse, standard data access, and so on are usually used for optimization.

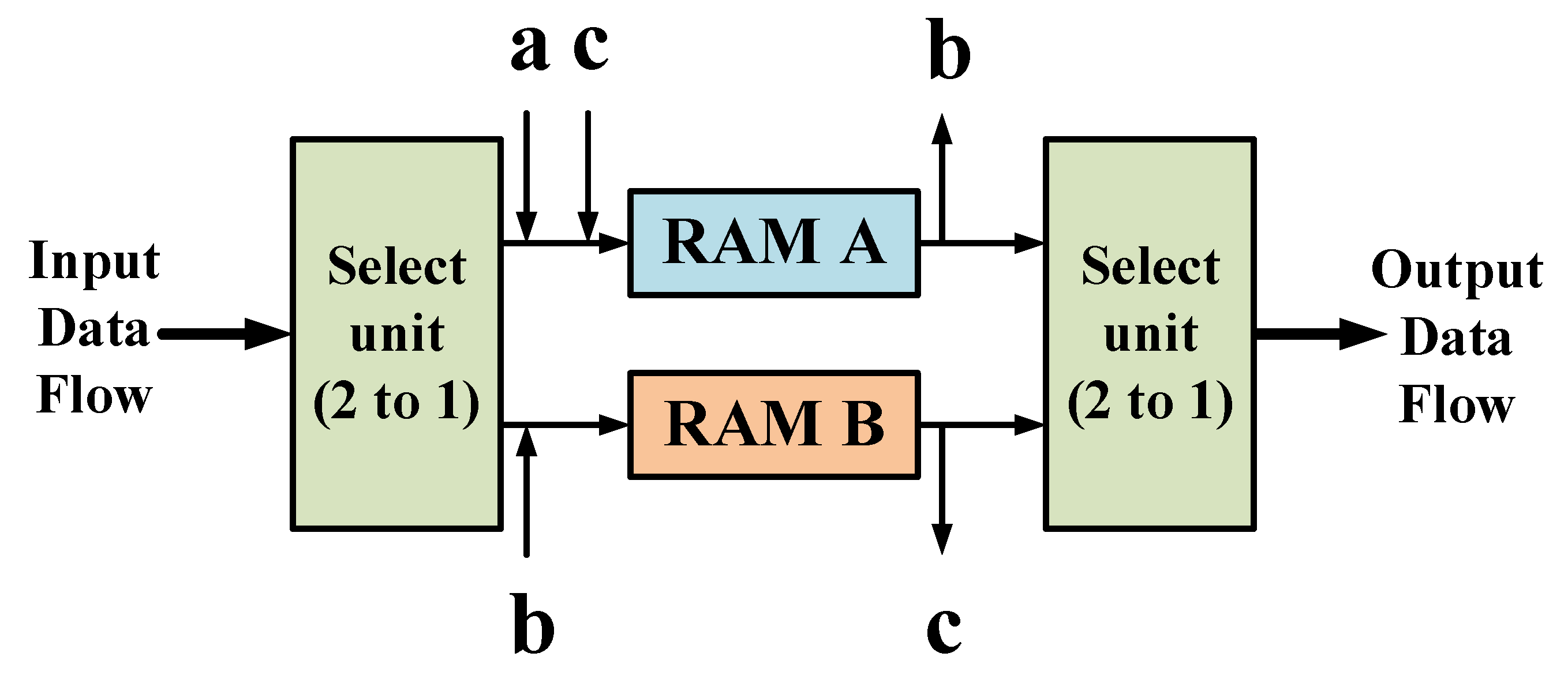

4.3.1. Ping-Pong Cache

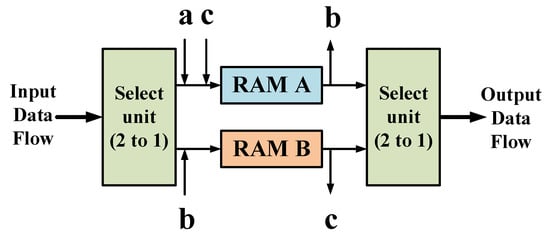

The ping-pong cache is a commonly used data flow control technique, which is used to allocate the input data flow to two random access memory (RAM) buffers in equal time through the input data selection unit and realize the flow transmission of data by switching between the two RAM reads and writes.

As shown in Figure 10, a~c describes the complete operation process of completing the cache of data in RAM A and B and the output of data. Each letter represents the input and output of data at the same time, the inward arrow represents the cache of data, and the outward arrow represents the output data. The specific caching process is as follows: The input data stream is allocated to two data buffers in equal time through the input data selection unit, and the data buffer module is generally RAM. In the first buffer cycle, the input data stream is cached to the data buffer module RAM A. In the second buffer cycle, the input data stream is cached to the data buffer module RAM B by switching the input data selection unit, and the first cycle data cached by RAM A is transmitted to the output data selection unit. In the third buffer cycle, the input data stream is cached to RAM A, and the data cached by RAM B in the second cycle is passed to the output data selection unit through another switch of the input data selection unit.

Figure 10.

Ping-pong cache description.

In this regard, many scholars also put forward their neural network optimization scheme on the basis of this technology. In 2019, Yingxu Feng et al. [106] applied the ping-pong cache to the optimization of the pulse compression algorithm in open computing language (OpenCL)-based FPGA, which plays an important role in a modern radar signal processing system. By using the ping-pong cache of data between the dual cache matched filter and the Inverse fast Fourier transform (IFFT), 2.89 times speedup was achieved on the Arria 10 GX1150 FPGA compared to the traditional method. In 2020, Xinkai Di et al. [107] deployed GAN on FPGA and optimized it with Winograd transformation and ping-pong cache technology in order to achieve an average performance of 639.2 GOPS on Xilinx ZCU102. In the same year, Xiuli Yu et al. [108] implemented the deployment of the target detection and tracking algorithm on Cyclone IV FPGA of ALTERA Company, completed the filtering and color conversion preprocessing of the collected images, and used the ping-pong cache to solve the frame interleave problem of image data, improve the speed and real-time performance of image processing, and reduce the energy consumption. It met the requirements of miniaturization, integration, real time, and intelligent development of a visual processing system. In 2022, Tian-Yang Li et al. [109] studied the preprocessing of Joint Photographic Experts Group (JPEG) images; optimized the inverse discrete cosine transform (IDCT) algorithm, which is time-consuming in JPEG decoding; and used the ping-pong cache to store the transition matrix. The throughput of 875.67 FPS (frame per second) and energy efficiency of 0.014 J/F were achieved on a Xilinx XCZU7EV.

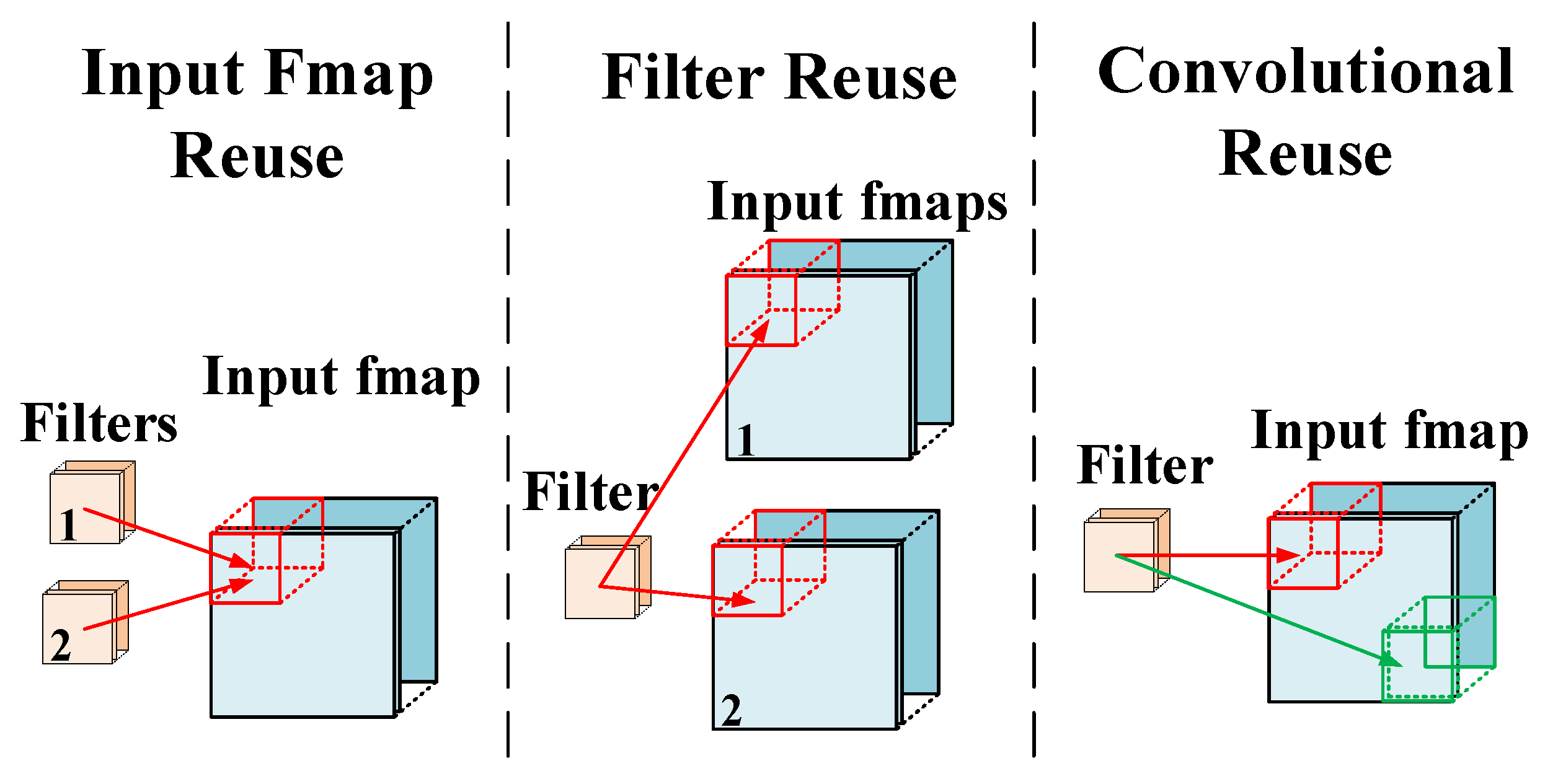

4.3.2. Data Reuse

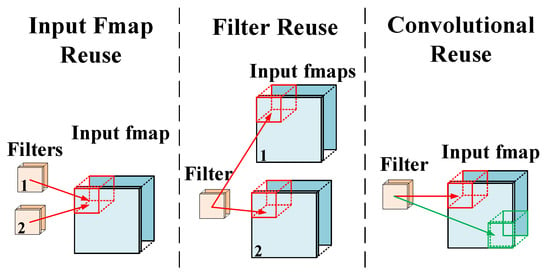

Data reuse is simply the reuse of data. As can be seen from the roof-line model, when the data reuse rate was low, bandwidth became the bottleneck affecting performance, and the computing resources of FPGA were not fully utilized. Therefore, through data reuse, the actual application bandwidth of memory is greater than the theoretical bandwidth, which increases the upper limit of the bandwidth and also reduces the storage pressure of memory and the amount of data cache and data exchange, so as to reduce the unnecessary time spent.

As shown in Figure 11, in the data operation of neural networks, data reuse is usually divided into input feature map reuse, filter reuse, and convolutional reuse. An input feature map reuse is the reuse of the same input and replacing it with the next input after all the convolution kernels are computed. A filter reuse means that if there is a batch of inputs, the same convolution kernel for the batch is reused and the data are replaced after all the inputs in the batch are calculated. Convolutional reuse uses the natural calculation mode in the convolutional layer and uses the same convolution kernel to calculate the output of different input map positions. In general, these three data reuse modes reuse data at different stages in order to achieve the purpose of increasing performance. Of course, data reuse can also be divided by time reuse and space reuse. For example, if the data in a small buffer is repeatedly used in multiple calculations, it is called time reuse. If the same data are broadcast to multiple PEs for simultaneous calculation, it is called space reuse.

Figure 11.

Common data reuse modes.

In the FPGA-based deep neural network acceleration experiments, a large part of the acceleration was based on data reuse to increase the bandwidth upper limit and reduce the amount of data cache and data exchange. For example, in 2020, Gianmarco Dinelli et al. [112] deployed the scheduling algorithm and data reuse system MEM-OPT on a Xilinx XC7Z020 FPGA and evaluated it on LeNet-5, MobileNet, VGG-16, and other CNN networks. The total on-chip memory required to store input feature maps and cumulative output results was reduced by 80% compared to the traditional scheme. In 2021, Hui Zhang et al. [110] proposed an adaptive channel chunking strategy that reduced the size of on-chip memory and access to external memory, achieving better energy efficiency and utilizing multiplexing data and registering configuration in order to improve throughput and enhance accelerator versatility. In 2022, Xuan-Quang Nguyen et al. [111] applied the data reuse technology that were based on time and space to the convolution operation in order to improve data utilization, reduce memory occupancy, reduce latency, and improve computational throughput. The same convolution calculation was about 2.78 times faster than the Intel Core i7-9750H CPU and 15.69 times faster than the ARM Cortex-A53.

4.3.3. Standardized Data Access and Storage

A large amount of data operation and frequent data access are the problems that neural networks must encounter when they are deployed on portable systems such as FPGA. In the optimization design of neural networks that are based on FPGA, FPGA is usually used as a coprocessor, that is, the CPU writes the instructions to the memory, and then the FPGA reads and executes the instructions from the memory unit, and following this, writes the calculation results to the memory. Therefore, by standardizing data access, the read and write efficiency of data can be improved, and the actual bandwidth upper limit can be increased.

In the aspect of standardizing data access, scholars often cut the feature map into small data blocks stored in discontinuous addresses for feature mapping to standardize data access patterns. Takaaki Miyajima et al. [113] standardized data access by partitioning storage space and separately accessing sub-modules of each memory space in order to improve effective memory bandwidth. Bingyi Zhang et al. [114] matched the limited memory space on FPGA by data partitioning, eliminated edge connections of high nodes by merging common adjacent nodes, and normalized data access by reordering densely connected neighborhoods effectively, which increased data reuse and improved storage efficiency. Some scholars have used custom data access paths in order to ensure the timely provision of parameters and intermediate data in model inference, as well as to make full use of local memory in order to improve inference efficiency and reduce the amount of external memory access, thus improving bandwidth utilization [115].

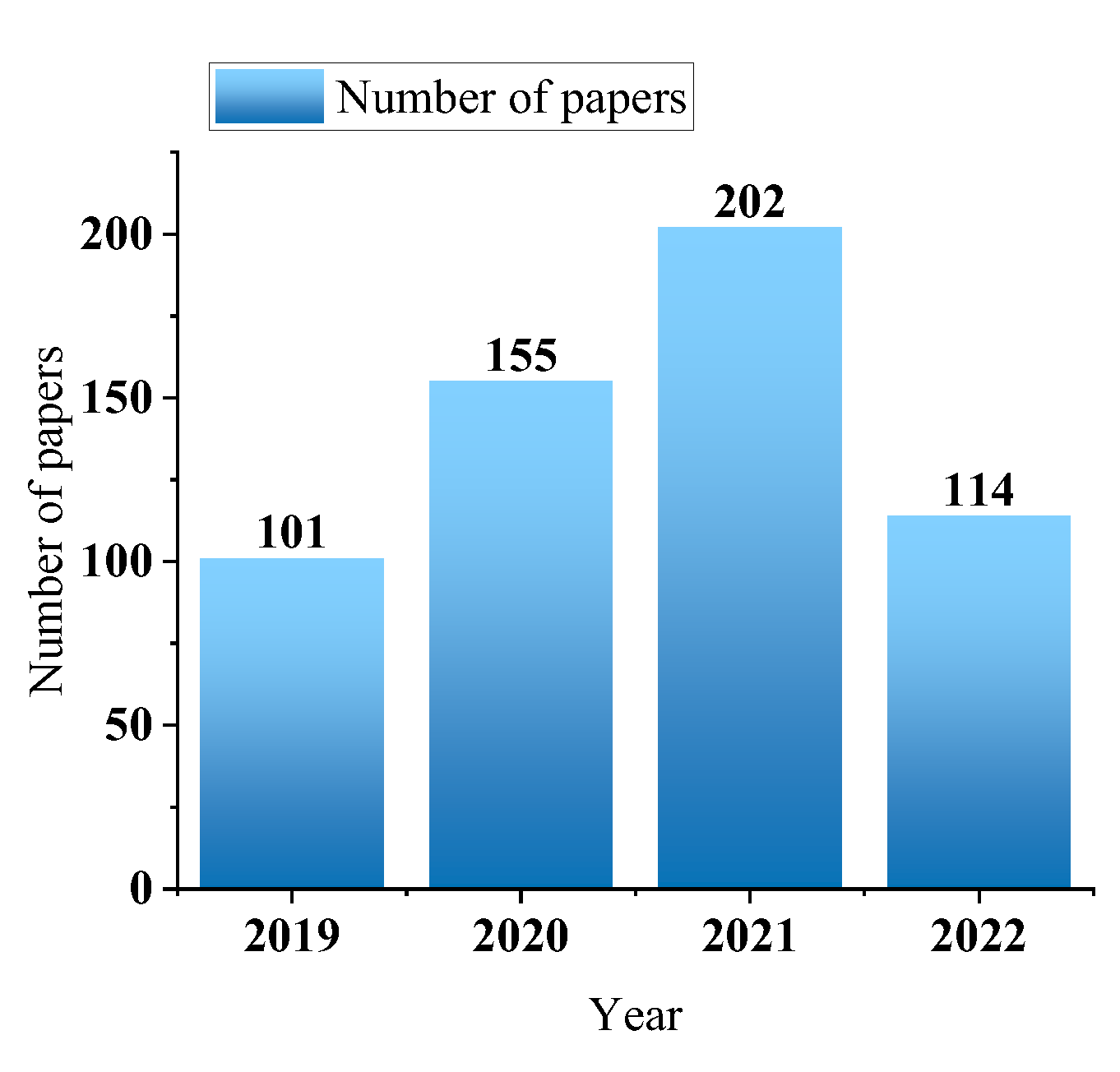

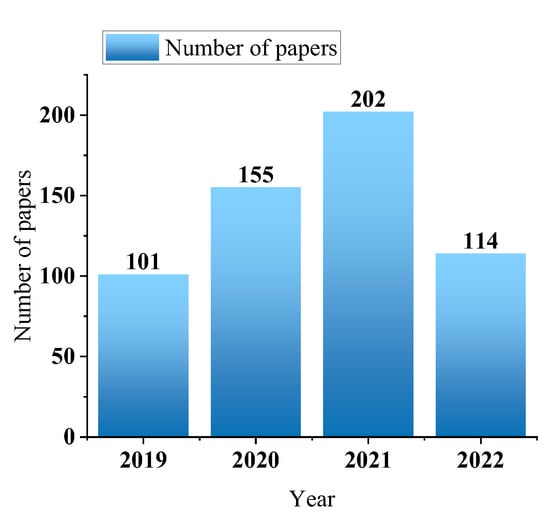

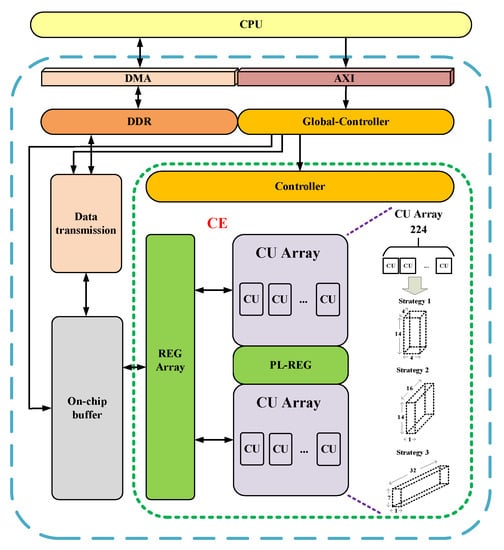

5. Design of the DNN Accelerator and Acceleration Framework Based on FPGA

In the optimization design of neural networks that are based on FPGA, some FPGA synthesis tools are generally used. The existing synthesis tools (HLS, OpenCL, etc.) that are highly suitable for FPGA greatly reduce the design and deployment time of neural networks, and the hardware-level design (such as RTL, register transfer level) can improve the efficiency and achieve a better acceleration effect. However, with the continuous development of neural networks, its deployment on FPGA has gradually become the focus of researchers. This further accelerates the emergence of more accelerators and acceleration frameworks for neural network deployment on FPGA. This is because with the acceleration of the specific neural network model, the idea is the most direct, and the design purpose is also the clearest. These accelerators are often hardware designs for the comprehensive application of the various acceleration techniques described above. When used in specific situations, such accelerators usually only need to fine-tune the program or parameters to be used, which is very convenient [13]. Figure 12 shows the number trend of relevant papers retrieved by the FPGA-based neural network accelerator on Web of Science by August 2022. The average number of papers published each year is about 140. It can be seen that the research on the FPGA accelerated neural network has attracted increasingly more attention in recent years, which is introduced below.

Figure 12.

The most recent number of papers on the FPGA neural network accelerator in Web of Science.

5.1. FPGA Accelerator Design for Different Neural Networks