1. Introduction and Related Studies

A brain–computer interface (BCI) allows the brain to communicate with external machines by recording the brain's electrical activity and translating it into commands for external devices. Vidal first used the term “brain–computer interface” in [1]. The first devices were implanted in humans in the late 1990s, following years of animal testing [2]. A growing number of research and clinical studies have since examined the BCI as one of the best methods for assisting individuals with motor paralysis caused by stroke, spinal cord injury, cerebral palsy, or amyotrophic lateral sclerosis [3].

A BCI can replace useful functions for people with disabilities caused by neuromuscular disorders. A BCI is a computer-based system that captures EEG signals and converts them into commands that are sent to an output device to perform the desired action [4]. In contrast to other devices, it is completely non-invasive [5], cost-effective, and versatile enough to address a wide array of needs, including those associated with prosthetics. To make the signal useful, the desired information is extracted from it and converted into active functions, which operate the microcontroller [6,7]. Based on the required input, the microcontroller determines the degree of freedom of the actuators, and the robotic arm acts according to the received signal. Moreover, electrical impulses are transmitted between neurons in the brain, even during sleep, and are measured by EEG. The EEG signals are recorded through a single electrode that uses the local field potential (LFP) to measure them [7]. LFPs are transient electrical signals generated in the brain and other tissues when the electrical activity of individual neurons is summed and synchronized [7]. The placement of electrodes is critical to this design. Typically, electrodes made of conductors such as gold or silver chloride are used. A headset measures brain activity through the scalp and translates the EEG data into movement patterns. The electrodes AF3, F7, F3, FC5, T7, P7, O1, O2, P8, T8, FC6, F4, F8, and AF4 are calibrated on this 14-channel hardware. Odd-numbered electrodes are located over the left hemisphere, while even-numbered electrodes are located over the right [8]. On this basis, the maximum number of degrees of freedom (DOF) is identified [9]. The code is implemented in a Jupyter Notebook, an interactive development environment for Python. Classification is performed using machine learning and artificial intelligence techniques. Machine learning aims to understand the structure of data and to build models from it that are useful and comprehensible to humans.
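The odd/even naming convention described above can be sketched in a few lines of Python. This is only an illustration: the function name `hemisphere` and the handling of unnumbered labels are assumptions made here, not part of the original implementation.

```python
def hemisphere(label: str) -> str:
    """Return 'left', 'right', or 'midline' for an electrode label such as 'F7'."""
    digits = "".join(ch for ch in label if ch.isdigit())
    if not digits:
        # Assumption: labels without a number (e.g. 'Cz') sit on the midline.
        return "midline"
    # Odd numbers are over the left hemisphere, even numbers over the right.
    return "left" if int(digits) % 2 == 1 else "right"


# A few of the electrode labels named in the text.
sample = ["AF3", "F7", "FC5", "O1", "O2", "FC6", "F4", "AF4"]

by_side = {"left": [], "right": []}
for name in sample:
    by_side[hemisphere(name)].append(name)

print(by_side)
```

Grouping the channels this way makes it easy to check later which hemisphere a given feature column comes from.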

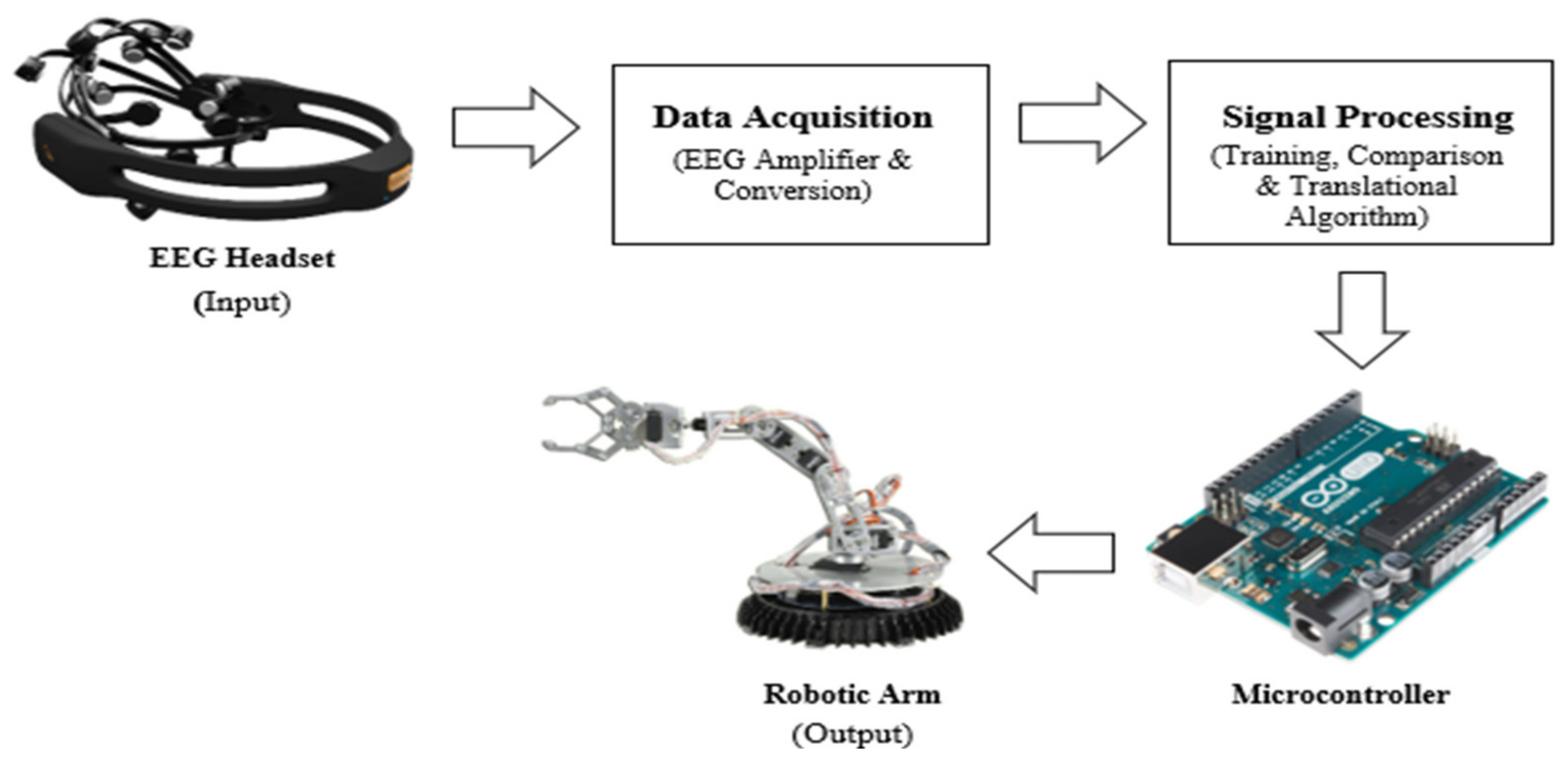

Figure 1 gives an overview of the proposed system, a highly versatile robotic arm controlled by reading brain signals. The degrees of freedom are the most important aspect of this brain-controlled arm; each corresponds to a joint, i.e., a point at which the arm can bend, rotate, or translate. A joint can perform two kinds of motion: rotation and translation. A link is a connector that joins two actuators.

This BCI-based study shows how to actuate a robotic arm with device commands derived from EEG signals. We use an Emotiv headset to acquire EEG signals from the brain, which are then used to drive the actuators of the robotic arm through artificial intelligence and machine learning techniques.

Prior work in this area has proposed different methods for controlling different objects. We discuss the most relevant studies here. The author in [10] proposed an attention mechanism for the classification of motor imagery (MI) signals. Because MI signals have dynamic properties, the attention mechanism can extract spatial context features. This model is reported to be more accurate than current best practices that use convolution with varying filter sizes to extract features from MI signals in various spatial contexts. The authors in [11] used an inception approach and report its accuracy on the BCI challenge dataset. Further, a variety of deep learning methods have been developed in the literature to classify MI signals [8,9,10,11,12]. In [13], a CNN uses spatial features to classify EEG signals and also shows relatively good results.

In [14,15,16,17], EEG data were fed directly into long short-term memory (LSTM) models. The authors in [18] developed a BCI application in LabVIEW in which a robotic hand is controlled by voluntary eye blinks, which are picked up by the sensor embedded in the NeuroSky MindWave Mobile headset. Another study proposed the design of a wheelchair controlled by EEG signals [19]. The authors constructed an electric wheelchair that can be controlled directly by the brain of the disabled person. An Emotiv EPOC headset was used to acquire the EEG signals, and the Arduino IDE was used for programming. Their program reads the EEG data from the Emotiv EPOC headset and processes them into mental commands. In [20], the authors used a headset but did not apply any classification algorithm to the signals, and they did not achieve an accuracy of more than 60%. The criterion for the total number of users is also not met in that proposal. In [21,22,23,24,25,26,27,28,29], the authors implemented an improved version of the BCI process, using a headset together with machine learning algorithms in Python. Their proposals are validated by results that achieve an accuracy of more than 60%, and the product is also used by most r[…]s. In [30,31,32,33,34,35], the authors used a headset for signal acquisition and implemented neural networks and fuzzy logic to achieve an accuracy of more than 60%, and the product was used by more than two users. However, as in [36], no classification algorithms are employed and no programming framework is used.

In the proposed work, we use the Emotiv EPOC headset for signal acquisition to predict a reliable bandwidth for the BCI process and to provide exact placements of EEG electrodes for verifying different arm movements. We apply several classification algorithms, i.e., Random Forest, KNN, Gradient Boosting, Logistic Regression, SVM, and Decision Tree, to data from four different users. The proposal is validated by results that achieve an accuracy of more than 60%.

Table 1 compares the related studies with the proposed work. In this paper, classification is performed using various machine learning and artificial intelligence techniques, and a comparative analysis of all techniques is provided to identify the one with the highest accuracy. Afterward, the robotic arm is controlled by a microcontroller using input from the brain.

A further study proposed a new authentication approach for Internet of Things (IoT) devices. This method is based entirely on EEG signals and hand gestures. For signal acquisition, the authors also used the low-cost NeuroSky MindWave headset. The approach relies on choosing adaptive thresholds of the attention and meditation modes for the authentication key. The authentication process for hand gestures is controlled using a camera. The results demonstrated the usability of authentication with EEG signals, with an accuracy of 92%, an efficiency of 93%, and acceptable user satisfaction. The results also showed that passwords produced with the proposed system are stronger than those entered on a traditional keyboard. The proposed authentication method is also resistant to target impersonation and physical observation.

3. Results and Discussion

The results of the project are divided into the best-fit classification algorithm, the individual results of the best-fit classifiers, and several insights and findings related to medical science.

The results are promising for BCI and artificial intelligence. The system aims to predict left or right movement using the highest-scoring algorithm. One user's case is taken as a reference for all of the users.

Figure 5 is a graphical representation of the classifier scores that helps identify the best-recommended algorithms. The chart in Figure 5 is a bar chart built with matplotlib that illustrates the accuracies of the models, i.e., 52.60%, 60.07%, 50.69%, 46.35%, 74.13%, 64.24%, and 72.74% for lg, knn, svm, nb, gb, tree, and rf, respectively.

The foremost algorithms to deploy are Random Forest (rf), Gradient Boosting (gb), and Decision Tree (tree). The models are prioritized by their scores, where the score is the accuracy with which a model predicts the correct target on input data it has not seen before. A pictorial representation of the recommendation levels of these models is shown in Figure 6.
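The prioritization by score can be reproduced directly from the accuracies reported for Figure 5. The sketch below is illustrative only; the helper name `top_models` is an assumption and not taken from the original notebook.

```python
# Accuracies (in percent) exactly as reported for Figure 5.
scores = {
    "lg": 52.60,
    "knn": 60.07,
    "svm": 50.69,
    "nb": 46.35,
    "gb": 74.13,
    "tree": 64.24,
    "rf": 72.74,
}


def top_models(model_scores, k=3):
    """Return the k (name, accuracy) pairs with the highest accuracy."""
    ordered = sorted(model_scores.items(), key=lambda item: item[1], reverse=True)
    return ordered[:k]


ranking = top_models(scores)
for name, acc in ranking:
    print(f"{name}: {acc:.2f}%")
```

With these figures, the three highest-scoring models are gb, rf, and tree, which are the three classifiers examined in more detail in the following subsection.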

3.1. Confusion Matrix and Classification Reports of Best-Fit Classifiers

A confusion matrix compares the true class labels with the labels predicted by a classification algorithm. The classification report summarizes the key metrics that can be derived from the output of a classifier. The confusion matrices are shown in Figure 7, Figure 8 and Figure 9, with the corresponding reports in Table 5, Table 6 and Table 7, for the Random Forest, Decision Tree, and Gradient Boosting classifiers, respectively.
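To make the relationship between a confusion matrix and the precision, recall, and accuracy values in a classification report concrete, the following is a minimal pure-Python sketch. The labels in `y_true` and `y_pred` are invented for illustration and are not the study's data; the function names are likewise assumptions.

```python
def confusion_matrix(y_true, y_pred, n_classes):
    """Build a matrix whose rows index the true class and columns the predicted class."""
    matrix = [[0] * n_classes for _ in range(n_classes)]
    for truth, pred in zip(y_true, y_pred):
        matrix[truth][pred] += 1
    return matrix


def precision_recall(matrix, cls):
    """Precision = TP / column sum; recall = TP / row sum."""
    true_pos = matrix[cls][cls]
    predicted_as_cls = sum(row[cls] for row in matrix)
    actually_cls = sum(matrix[cls])
    precision = true_pos / predicted_as_cls if predicted_as_cls else 0.0
    recall = true_pos / actually_cls if actually_cls else 0.0
    return precision, recall


def accuracy(matrix):
    """Fraction of all samples that lie on the diagonal."""
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    total = sum(sum(row) for row in matrix)
    return correct / total if total else 0.0


# Toy labels for three classes (0, 1, 2), invented for illustration only.
y_true = [0, 0, 0, 1, 1, 1, 2, 2, 2, 2]
y_pred = [0, 0, 1, 1, 1, 2, 2, 2, 2, 0]

cm = confusion_matrix(y_true, y_pred, 3)
for cls in range(3):
    p, r = precision_recall(cm, cls)
    print(f"class {cls}: precision={p:.2f}, recall={r:.2f}")
print(f"accuracy={accuracy(cm):.2f}")
```

The diagonal entries of the matrix are the counts of correctly predicted samples per class, which is what the figures report for each classifier.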

Figure 7 shows that the Random Forest classifier correctly predicted classes 0, 1, and 2 approximately 1.5 × 10², 1.4 × 10², and 1.5 × 10² times, respectively. Moreover, Table 5 lists the precision and recall of the classifier, i.e., (0.74, 0.80), (0.72, 0.76), and (0.83, 0.76) for classes 0, 1, and 2, respectively; the overall accuracy of Random Forest is 76%.

Figure 8 shows that the Decision Tree classifier correctly predicted classes 0, 1, and 2 approximately 1.2 × 10², 1.3 × 10², and 1.2 × 10² times, respectively. Table 6 lists the precision and recall of the classifier, i.e., (0.64, 0.64), (0.63, 0.69), and (0.66, 0.61) for classes 0, 1, and 2, respectively. The overall accuracy of the Decision Tree is 64%.

Figure 9 shows that the Gradient Boosting classifier correctly predicted each of the classes 0, 1, and 2 approximately 1.4 × 10² times. Table 7 lists the precision and recall of the classifier, i.e., (0.72, 0.79), (0.69, 0.75), and (0.80, 0.68) for classes 0, 1, and 2, respectively. The overall accuracy of Gradient Boosting is 74%. This result covers the major aspects of the BCI and its analysis.

Figure 10 shows the correlation of electrode “F4”, placed on the right side of the brain, with six other electrodes placed on different sides of the brain. The maximum density of the left movement, represented as class 0, is obtained when “F4” is correlated with FC5, F7, FC6, F3, and F8, in decreasing order. Plotting “F4” against itself yields a linear scatter plot because the data points overlap. This indicates that the most precise data points for the left movement are obtained by pairing the F4 electrode with its most highly correlated electrodes, as listed above.

Figure 10 also maps the “F4” electrode against the other electrodes to represent the classification of the features in the specified bandwidth; this study therefore shows that the movement of the left arm is generated in this region of bandwidth. This result suggests that different human brains use different neuronal algorithms. Different people have different activation bandwidths; therefore, the dominant frequencies may differ for each individual. However, the positions of the electrodes from which the data are acquired are always the same.
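One way to put a number on how strongly two electrode channels move together is the Pearson correlation coefficient. The text describes the relationship through scatter plots rather than a specific coefficient, so the sketch below swaps in Pearson correlation as an illustrative stand-in; the readings are made-up values, not recorded EEG data.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    spread_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (spread_x * spread_y)


# Hypothetical channel readings, invented for illustration only.
readings = {
    "F4": [4.1, 4.3, 4.0, 4.6, 4.8, 4.4],
    "FC5": [4.0, 4.25, 3.95, 4.5, 4.75, 4.35],
    "F8": [4.5, 4.1, 4.4, 4.2, 4.3, 4.6],
}

# Rank the other channels by their correlation with F4, highest first.
ranked = sorted(
    ((name, pearson(readings["F4"], values))
     for name, values in readings.items() if name != "F4"),
    key=lambda pair: pair[1],
    reverse=True,
)

# A channel compared with itself is perfectly correlated, which is why
# plotting an electrode against itself gives a straight line.
self_corr = pearson(readings["F4"], readings["F4"])

for name, value in ranked:
    print(f"F4 vs {name}: {value:+.3f}")
```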

The movement of the right hand is demonstrated using electrode “F7”, placed on the left side of the brain, as presented in Figure 11. The maximal density of the right movement, represented as class 1, is obtained when “F7” is correlated with FC5, F4, FC6, F3, and F8, in decreasing order. Plotting “F7” against itself again yields a linear scatter plot because the data points overlap.

Figure 11 shows that pairing the F7 electrode with its most highly correlated electrodes, as mentioned above, gives the most precise data points for the right movement. An electrode placed on the left side of the brain controls the right arm, with different activation bandwidth levels, and vice versa.

3.2. Working of Actuators Based on Predictions

The robotic arm, named “Stacy”, moves in a unidirectional manner. The system acquires data from the dataset and feeds them to the machine learning algorithm. The qualitative outcomes (move left, move right, and no movement) are derived from the numeric class values through pyFirmata and Python programming: if the prediction is 0, the servo motor moves 90 degrees to the left of the reset position, whereas if the prediction is 1, the servo moves 90 degrees to the right of the reset position.

Figure 12 shows the actuator driving code, which resets the robotic arm and translates the predicted output into controller-readable commands that run the system with a programmed delay of a few milliseconds.
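The mapping from predicted class to servo command can be sketched as follows. This is not the code from Figure 12: the reset angle of 90 degrees, the convention that "left" means a smaller angle, the treatment of class 2 as "no movement", and all names are assumptions made for illustration. The servo is abstracted as a `write_angle` callable so the sketch runs without hardware.

```python
RESET_ANGLE = 90  # assumption: neutral position of a 0-180 degree servo

# 0 = left and 1 = right follow the text; 2 = no movement is an assumption.
# Treating "left" as a smaller angle is also an assumed convention.
OFFSETS = {0: -90, 1: +90, 2: 0}


def target_angle(prediction: int) -> int:
    """Translate a predicted class into an absolute servo angle."""
    if prediction not in OFFSETS:
        raise ValueError(f"unknown class: {prediction}")
    angle = RESET_ANGLE + OFFSETS[prediction]
    return max(0, min(180, angle))  # keep within the servo's range


def drive(predictions, write_angle, pause=lambda: None):
    """Reset the arm, then move it according to each prediction in turn."""
    for prediction in predictions:
        write_angle(RESET_ANGLE)
        pause()  # e.g. a short time.sleep(...) on real hardware
        write_angle(target_angle(prediction))
        pause()


# Dry run: collect the commands in a list instead of sending them to a board.
# With pyFirmata, write_angle could instead be the write method of a servo pin,
# e.g. board.get_pin('d:9:s').write, where pin 9 is a hypothetical choice.
sent = []
drive([0, 1, 2], sent.append)
print(sent)
```

Separating the class-to-angle mapping from the hardware call keeps the logic testable on a machine with no microcontroller attached.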

4. Conclusions

This work implements machine learning techniques to control the movement of a robotic arm using EEG signal information gathered through EEG electrodes. Different classifiers are applied to the extracted data to train the system and compute the accuracy. The EEG data were collected from four users so that the best-fit classification algorithms could be applied for precise and accurate predictions. The proposed non-invasive approach predicts a reliable bandwidth for the entire BCI process and provides an exact placement of EEG electrodes for verifying different arm movements. In this study, we used several classifiers, i.e., Random Forest, KNN, Gradient Boosting, Logistic Regression, SVM, and Decision Tree. The results of this deployment show that, among the three best-fit classifiers, Random Forest achieves the highest accuracy, 76%, while Decision Tree gives the lowest, 64%. The proposed prototype has great potential, as it can be extended to criminal investigations, autonomous vehicle control systems, the education of disabled students, and prosthetics.