An Intelligent Approach for Fair Assessment of Online Laboratory Examinations in Laboratory Learning Systems Based on Student’s Mouse Interaction Behavior

Abstract

:1. Introduction

2. Background and Related Work

3. The LLS Intelligent Fair Assessment Module

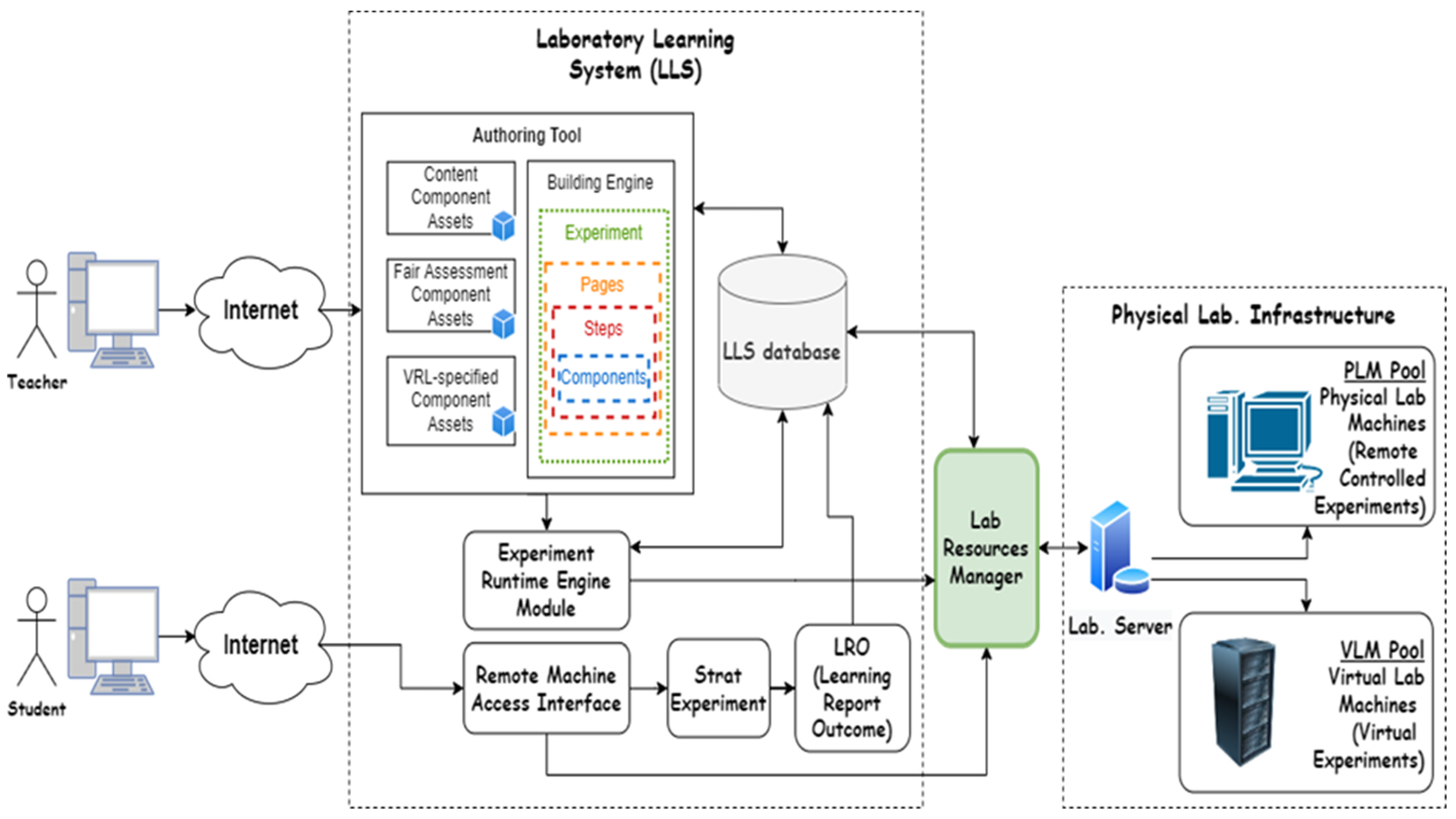

3.1. Overview of the LLS

3.2. The Proposed Fair Assessment Module

4. Experimental Setup

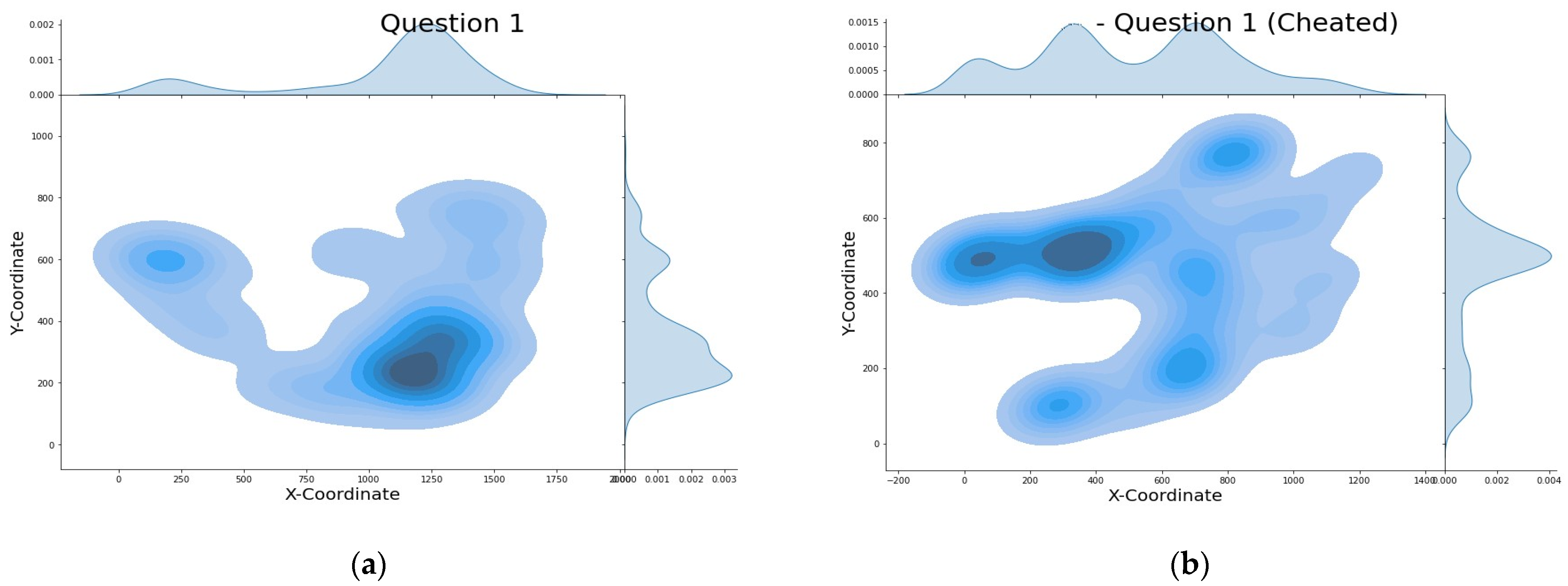

4.1. Raw Data of Mouse Dynamics Collected for VRL-Based Questions

4.2. Raw Data of Mouse Dynamics Collected for Static Questions

5. Evaluation Metrics and Results

5.1. Results for the First Test Scenario

5.2. Results for the Second Test Scenario

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Khtere, R.; Yousef, A.M.F. The professionalism of online teaching in Arab universities: Validation of faculty readiness. Educ. Technol. Soc. 2021, 24, 1–12. [Google Scholar]

- Liang, K.; Zhang, Y.; He, Y.; Zhou, Y.; Tan, W.; Li, X. Online Behavior Analysis-Based Student Profile for Intelligent E-Learning. J. Electr. Comput. Eng. 2017, 2017, 9720396. [Google Scholar] [CrossRef] [Green Version]

- Han, Q.; Su, J.; Zhao, Y. More Adaptive and Updatable: An Online Sparse Learning Method for Face Recognition. J. Electr. Comput. Eng. 2019. [Google Scholar] [CrossRef]

- Elmesalawy, M.M.; Atia, A.; Yousef, A.M.F.; El-Haleem, A.M.A.; Anany, M.G.; Elmosilhy, N.A.; Salama, A.I.; Hamdy, A.; El Zoghby, H.M.; El Din, E.S. AI-based Flexible Online Laboratory Learning System for Post-COVID-19 Era: Requirements and Design. In Proceedings of the 2021 International Mobile, Intelligent, and Ubiquitous Computing Conference (MIUCC), Cairo, Egypt, 26–27 March 2021; pp. 1–7. [Google Scholar] [CrossRef]

- Jain, A.; Ross, A.; Pankanti, S. Biometrics: A Tool for Information Security. IEEE Trans. Inf. Forensics Secur. 2006, 1, 125–143. [Google Scholar] [CrossRef] [Green Version]

- Milisavljevic, A.; Abate, F.; Le Bras, T.; Gosselin, B.; Mancas, M.; Doré-Mazars, K. Similarities and Differences Between Eye and Mouse Dynamics During Web Pages Exploration. Front. Psychol. 2021, 12, 554595. [Google Scholar] [CrossRef] [PubMed]

- Antal, M.; Egyed-Zsigmond, E. Intrusion detection using mouse dynamics. IET Biom. 2019, 8, 285–294. [Google Scholar] [CrossRef] [Green Version]

- Bours, F.P.; Fullu, C. A Login System Using Mouse Dynamics. In Proceedings of the 5th International Conference on Intelligent Information Hiding and Multimedia Signal Processing, Kyoto, Japan, 12–14 September 2009; pp. 1072–1077. [Google Scholar]

- Salman, O.A.; Hameed, S.M. User Authentication via Mouse Dynamics. Iraqi J. Sci. 2018, 59, 963–968. [Google Scholar]

- Zheng, N.; Paloski, A.; Wang, H. An efficient user verification system via mouse movements. In Proceedings of the ACM Conference on Computer and Communications Security, Chicago, IL, USA, 17–21 October 2011; pp. 139–150. [Google Scholar] [CrossRef]

- Jorgensen, Z.; Yu, T. On mouse dynamics as a behavioral biometric for authentication. In Proceedings of the 6th ACM Symposium on Information, Computer and Communication Security, Hong Kong, China, 22–24 March 2011; pp. 476–482. [Google Scholar] [CrossRef]

- Berezniker, A.V.; Kazachuk, M.A.; Mashechkin, I.V.; Petrovskiy, M.I.; Popov, I.S. User Behavior Authentication Based on Computer Mouse Dynamics. Mosc. Univ. Comput. Math. Cybern. 2021, 45, 135–147. [Google Scholar] [CrossRef]

- Earl, S.; Campbell, J.; Buckley, O. Identifying Soft Biometric Features from a Combination of Keystroke and Mouse Dynamics. In Advances in Human Factors in Robots, Unmanned Systems and Cybersecurity; AHFE 2021; Lecture Notes in Networks and Systems; Springer: Cham, Switzerland, 2021; pp. 184–190. [Google Scholar]

- Almalki, S.; Assery, N.; Roy, K. An Empirical Evaluation of Online Continuous Authentication and Anomaly Detection Using Mouse Clickstream Data Analysis. J. Appl. Sci. 2021, 11, 6083. [Google Scholar] [CrossRef]

- Krátky, P.; Chudá, D. Recognition of web users with the aid of biometric user model. J. Intell. Inf. Syst. 2018, 51, 621–646. [Google Scholar] [CrossRef]

- Trezise, K.; Ryan, T.; de Barba, P.; Kennedy, G. Detecting Contract Cheating Using Learning Analytics. J. Learn. Anal. 2019, 6, 90–104. [Google Scholar] [CrossRef]

- Bawarith, R.; Basuhail, A.; Fattouh, A.; Gamalel-Din, S. E-exam Cheating Detection System. Int. J. Adv. Comput. Sci. Appl. 2017, 8, 176–181. [Google Scholar] [CrossRef] [Green Version]

- Pepa, L.; Sabatelli, A.; Ciabattoni, L.; Monteriù, A.; Lamberti, F.; Morra, L. Stress Detection in Computer Users from Key-board and Mouse Dynamics. IEEE Trans. Consum. Electron. 2021, 67, 12–19. [Google Scholar] [CrossRef]

- Gamboa, H.; Fred, A. A behavioral biometric system based on human-computer interaction. SPIE Biom. Technol. Hum. Identif. 2004, 5404, 381–393. [Google Scholar] [CrossRef]

- Zheng, N.; Paloski, A.; Wang, H. An Efficient User Verification System Using Angle-Based Mouse Movement Biometrics. ACM Trans. Inf. Syst. Secur. 2016, 18, 1–27. [Google Scholar] [CrossRef]

- Siddiqui, N.; Dave, R.; Seliya, N. Continuous authentication using mouse movements, machine learning, and Minecraft. arXiv 2021, arXiv:2110.11080. [Google Scholar]

- Garabato, D.; Dafonte, C.; Santoveña, R.; Silvelo, A.; Nóvoa, F.J.; Manteiga, M. AI-based user authentication reinforcement by continuous extraction of behavioral interaction features. Neural Comput. Applic. 2022, 34, 11691–11705. [Google Scholar] [CrossRef]

- Sokout, H.; Purnama, F.; Mustafazada, A.N.; Usagawa, T. Identifying potential cheaters by tracking their behaviors through mouse activities. Proceeding of the 2020 IEEE International Conference on Teaching, Assessment, and Learning for Engineering, Takamatsu, Japan, 8–11 December 2020. [Google Scholar] [CrossRef]

- Li, H.; Xu, M.; Wang, Y.; Wei, H.; Qu, H. A visual analytics approach to facilitate the proctoring of online exams. In Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems, Yokohama, Japan, 8–13 May 2021; pp. 1–17. [Google Scholar]

- Available online: https://www.myrtille.io/ (accessed on 1 August 2022).

- Ahmed, A.A.E.; Traore, I. A New Biometric Technology Based on Mouse Dynamics. IEEE Trans. Dependable Secur. Comput. 2007, 4, 165–179. [Google Scholar] [CrossRef]

- Almalki, S.; Chatterjee, P.; Roy, K. Continuous Authentication Using Mouse Clickstream Data Analysis. In Security, Privacy, and Anonymity in Computation, Communication, and Storage; SpaCCS 2019; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2019; Volume 11637. [Google Scholar]

| Field | Description |

|---|---|

| Time Stamp | The elapsed time in seconds since the start of the session |

| Button | The current condition of the mouse buttons (e.g., Left) |

| State | Contains additional information about the button (e.g., Pressed) |

| X | X coordinates of the mouse cursor |

| Y | Y coordinates of the mouse cursor |

| Feature Name | Description | #Features |

|---|---|---|

| Horizontal_velocity | Measure the mean, standard deviation, maximum and minimum values of the horizontal velocity of the mouse events | 4 |

| Vertical_velocity | Measure the mean, standard deviation, maximum and minimum values of the vertical velocity of the mouse events | 4 |

| Velocity | Measure the mean, standard deviation, maximum and minimum values of the velocity of the mouse events | 4 |

| Acceleration | Measure the mean, standard deviation, maximum and minimum values of the acceleration of the mouse events | 4 |

| Jerk | Measure the mean, standard deviation, maximum and minimum values of the jerk of the mouse events | 4 |

| Angular_velocity | Measure the mean, standard deviation, maximum and minimum values of the angular velocity of the mouse events | 4 |

| Curvature | Measure the mean, standard deviation, maximum and minimum values of the curvature time series of the mouse events (i.e., curvature time series equals the ratio between the traveled distance and the change in angle) | 4 |

| Type | Type of mouse click, which may be MM or PC | 1 |

| Elapsed_time | Measure the elapsed time needed to perform a specific action | 1 |

| Path_length | Measure the length of the path from the starting point to the n points of a specific mouse action | 1 |

| Dist_end_to_end | Is the distance of the end-to-end line of an action | 1 |

| Direction | Measure the direction of the end-to-end line. As in Figure 3, if the angle is between 0° and 45°, so it is direction 1 [26] | 1 |

| Straightness | Measure the ratio between the Dist_end_to_end and the Path_length | 1 |

| Num_points | The number of points (mouse events) that exist in each mouse action. | 1 |

| Sum_of_angles | The sum of the angles for each mouse action | 1 |

| Largest_deviation | The largest deviation for each mouse action. | 1 |

| Sharp_angles | The number of sharp angles less than the threshold value, which equals 0.0005 | 1 |

| A_beg_time | Acceleration time at the beginning segment | 1 |

| Rows (Number of Actions) | Columns (Label + 39 Extracted Features + Start and Endpoint for Each Action) | Percentage | |

|---|---|---|---|

| Total | 3859 | 42 | 1.0 |

| Train | 2585 | 42 | 0.67 |

| Test | 1274 | 42 | 0.33 |

| MM | PC | DD | |

|---|---|---|---|

| Train | 561 (21%) | 1998 (77%) | 26 (1%) |

| Test | 272(21.1%) | 989 (77.6%) | 13 (1.3%) |

| Algorithms/Metrics | Accuracy | Precision | TPR | F1-Score | AUROC |

|---|---|---|---|---|---|

| KNN | 0.70 | 0.59 | 0.51 | 0.55 | 0.73 |

| SVC | 0.72 | 0.77 | 0.33 | 0.46 | 0.81 |

| Random Forest | 0.86 | 0.87 | 0.73 | 0.79 | 0.93 |

| Logistic Regression | 0.74 | 0.73 | 0.43 | 0.54 | 0.81 |

| XGBoost | 0.87 | 0.88 | 0.77 | 0.82 | 0.943 |

| LightGBM | 0.88 | 0.88 | 0.78 | 0.83 | 0.945 |

| Algorithms/Metrics | Accuracy | Precision | TPR | F1-Score | AUROC |

|---|---|---|---|---|---|

| KNN | 0.68 | 0.54 | 0.50 | 0.52 | 0.71 |

| SVC | 0.73 | 0.70 | 0.40 | 0.51 | 0.81 |

| Random Forest | 0.87 | 0.87 | 0.74 | 0.80 | 0.94 |

| Logistic Regression | 0.77 | 0.69 | 0.60 | 0.64 | 0.80 |

| XGBoost | 0.88 | 0.84 | 0.80 | 0.82 | 0.952 |

| LightGBM | 0.90 | 0.88 | 0.82 | 0.85 | 0.956 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Hassan Hosny, H.A.; Ibrahim, A.A.; Elmesalawy, M.M.; Abd El-Haleem, A.M. An Intelligent Approach for Fair Assessment of Online Laboratory Examinations in Laboratory Learning Systems Based on Student’s Mouse Interaction Behavior. Appl. Sci. 2022, 12, 11416. https://doi.org/10.3390/app122211416

Hassan Hosny HA, Ibrahim AA, Elmesalawy MM, Abd El-Haleem AM. An Intelligent Approach for Fair Assessment of Online Laboratory Examinations in Laboratory Learning Systems Based on Student’s Mouse Interaction Behavior. Applied Sciences. 2022; 12(22):11416. https://doi.org/10.3390/app122211416

Chicago/Turabian StyleHassan Hosny, Hadeer A., Abdulrahman A. Ibrahim, Mahmoud M. Elmesalawy, and Ahmed M. Abd El-Haleem. 2022. "An Intelligent Approach for Fair Assessment of Online Laboratory Examinations in Laboratory Learning Systems Based on Student’s Mouse Interaction Behavior" Applied Sciences 12, no. 22: 11416. https://doi.org/10.3390/app122211416