Burnt-Area Quick Mapping Method with Synthetic Aperture Radar Data

Abstract

1. Introduction

2. Materials and Methods

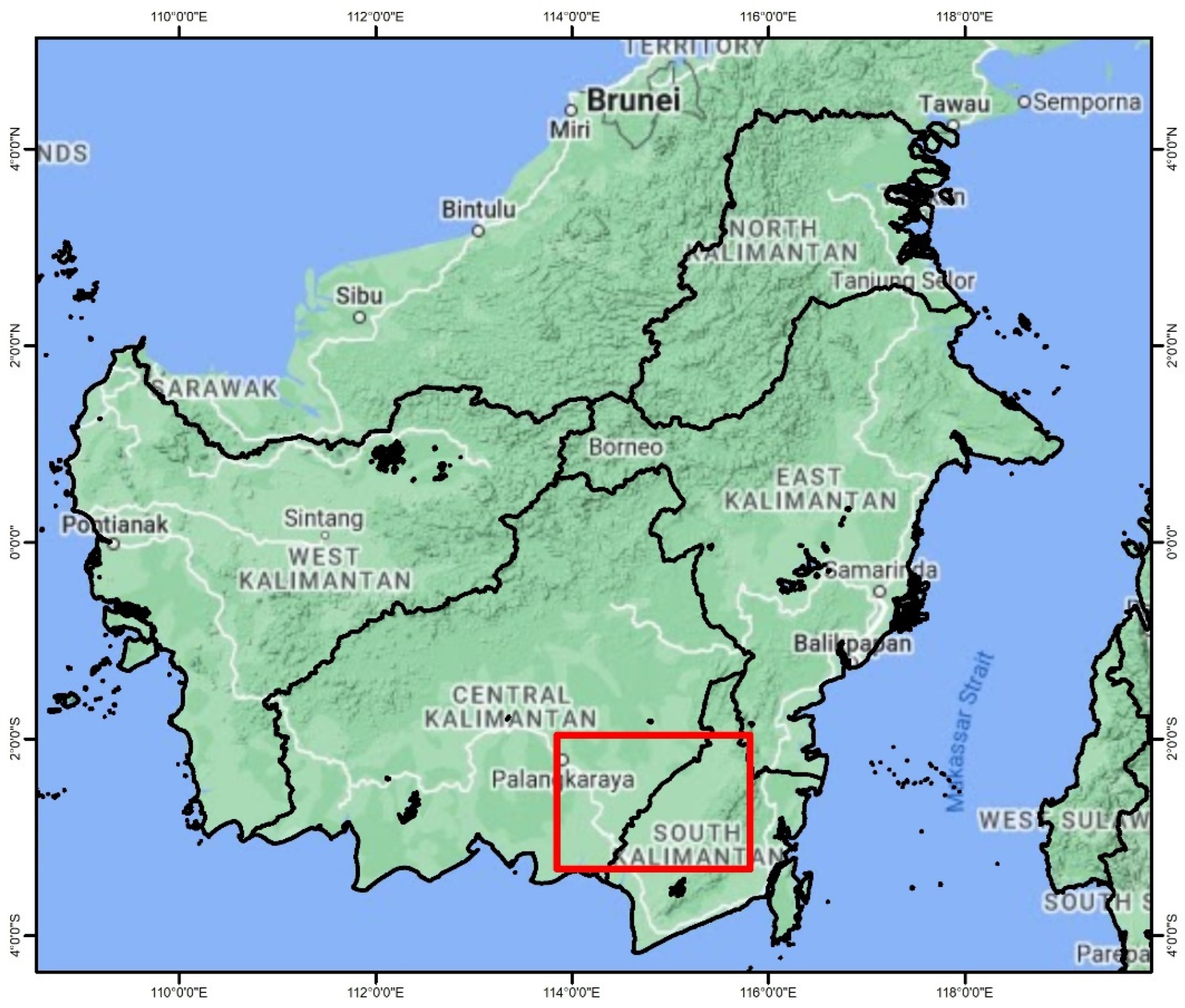

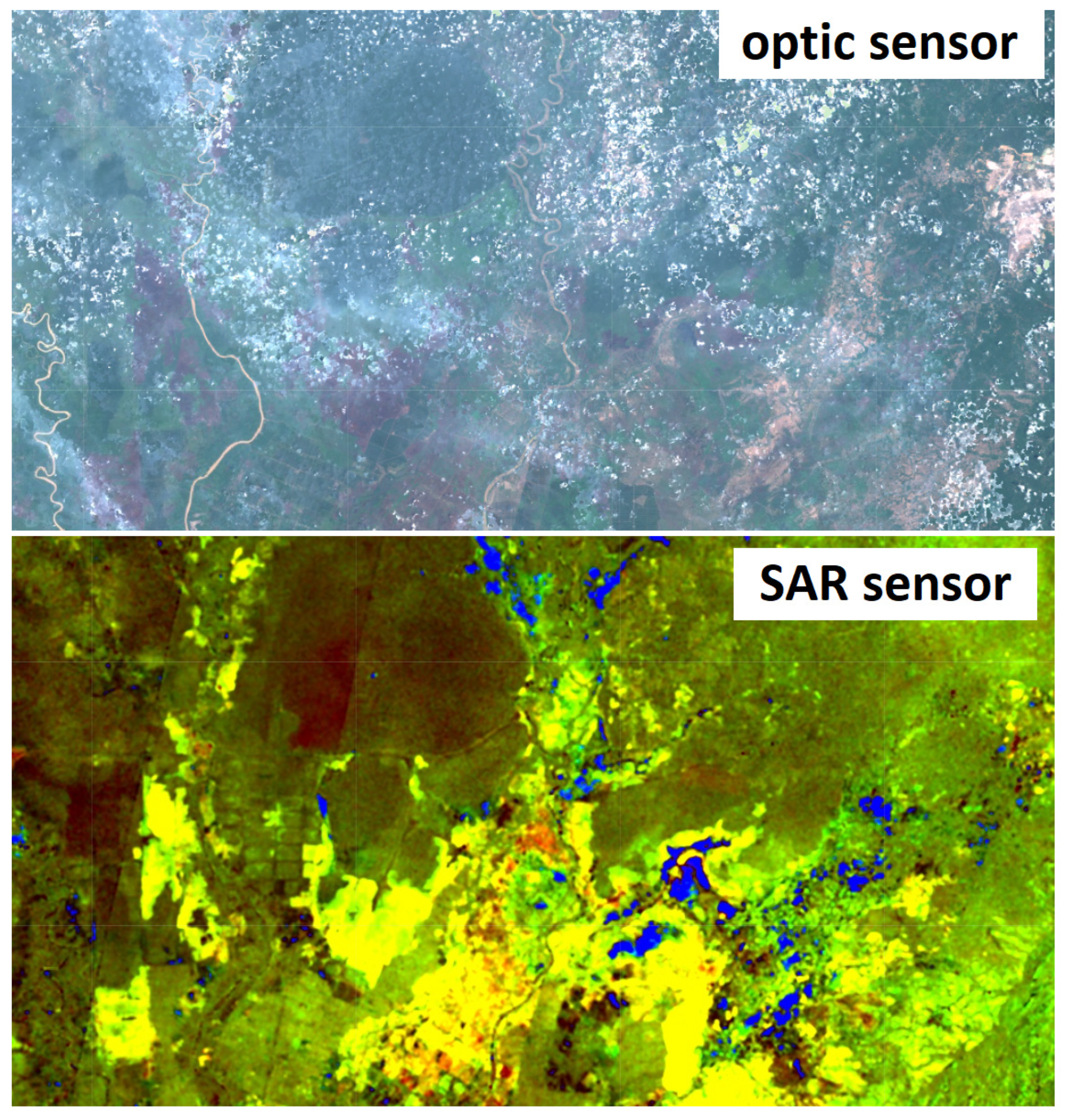

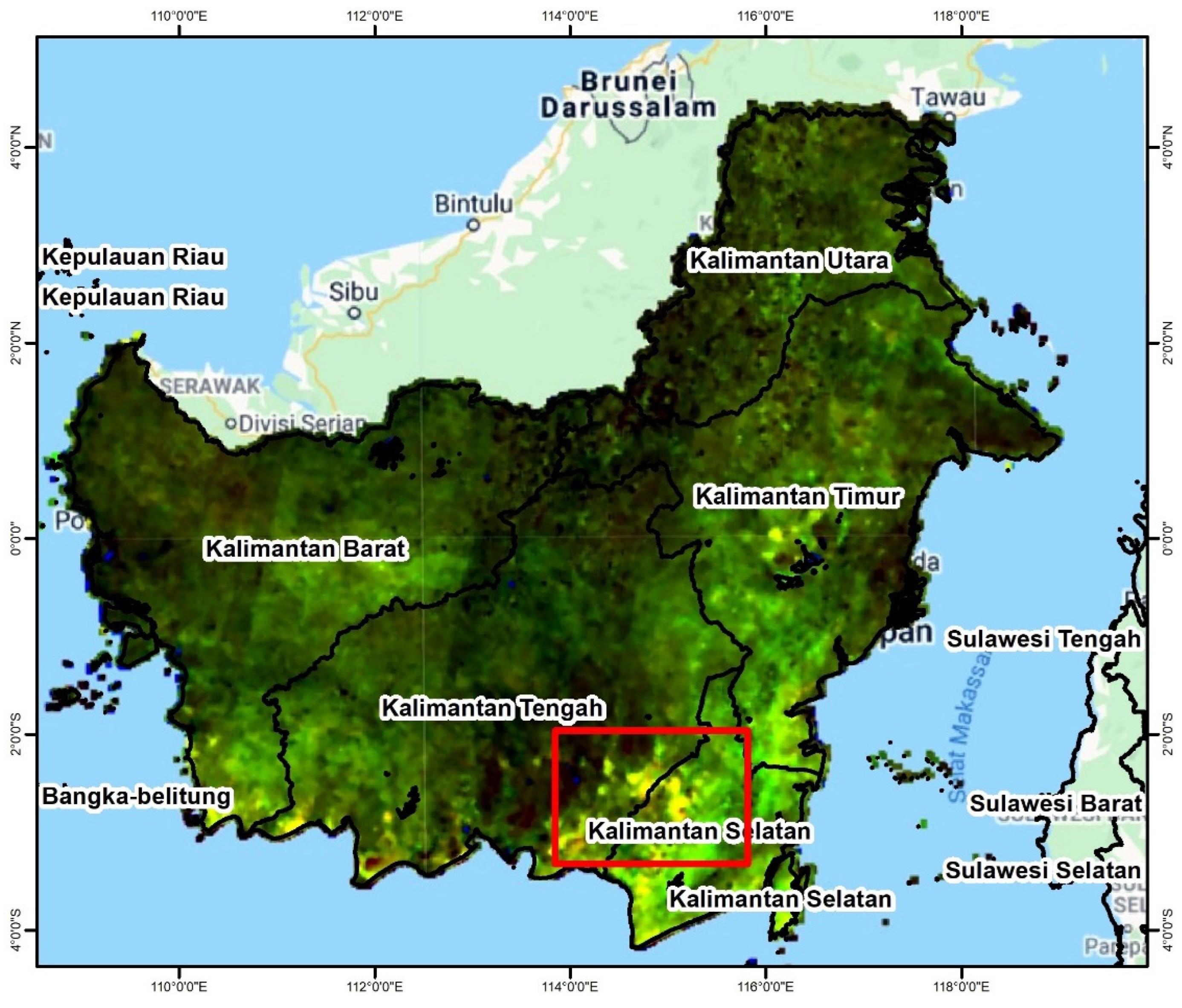

2.1. Location and Data

2.2. Related Method

2.3. Proposed Method

3. Results

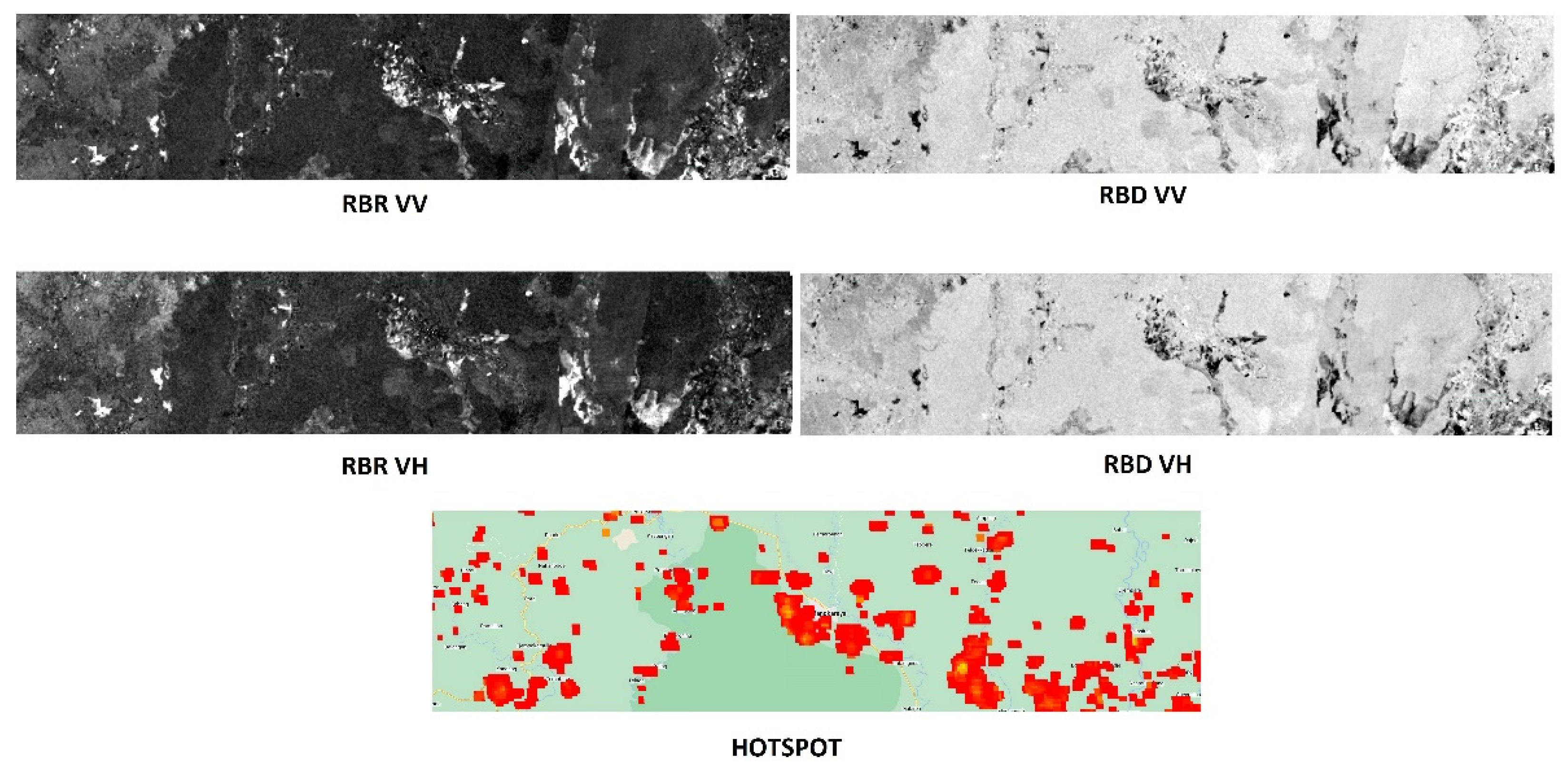

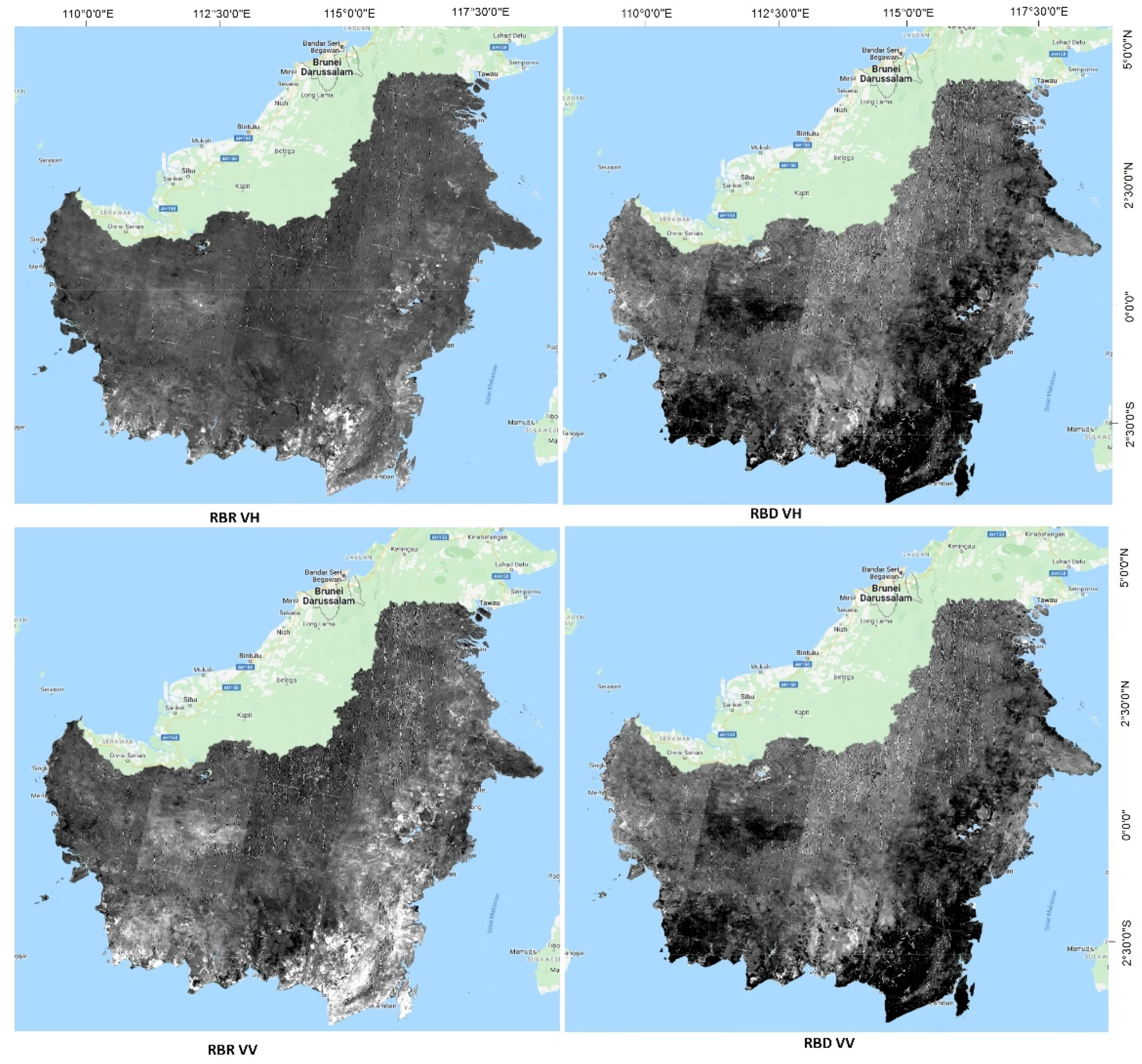

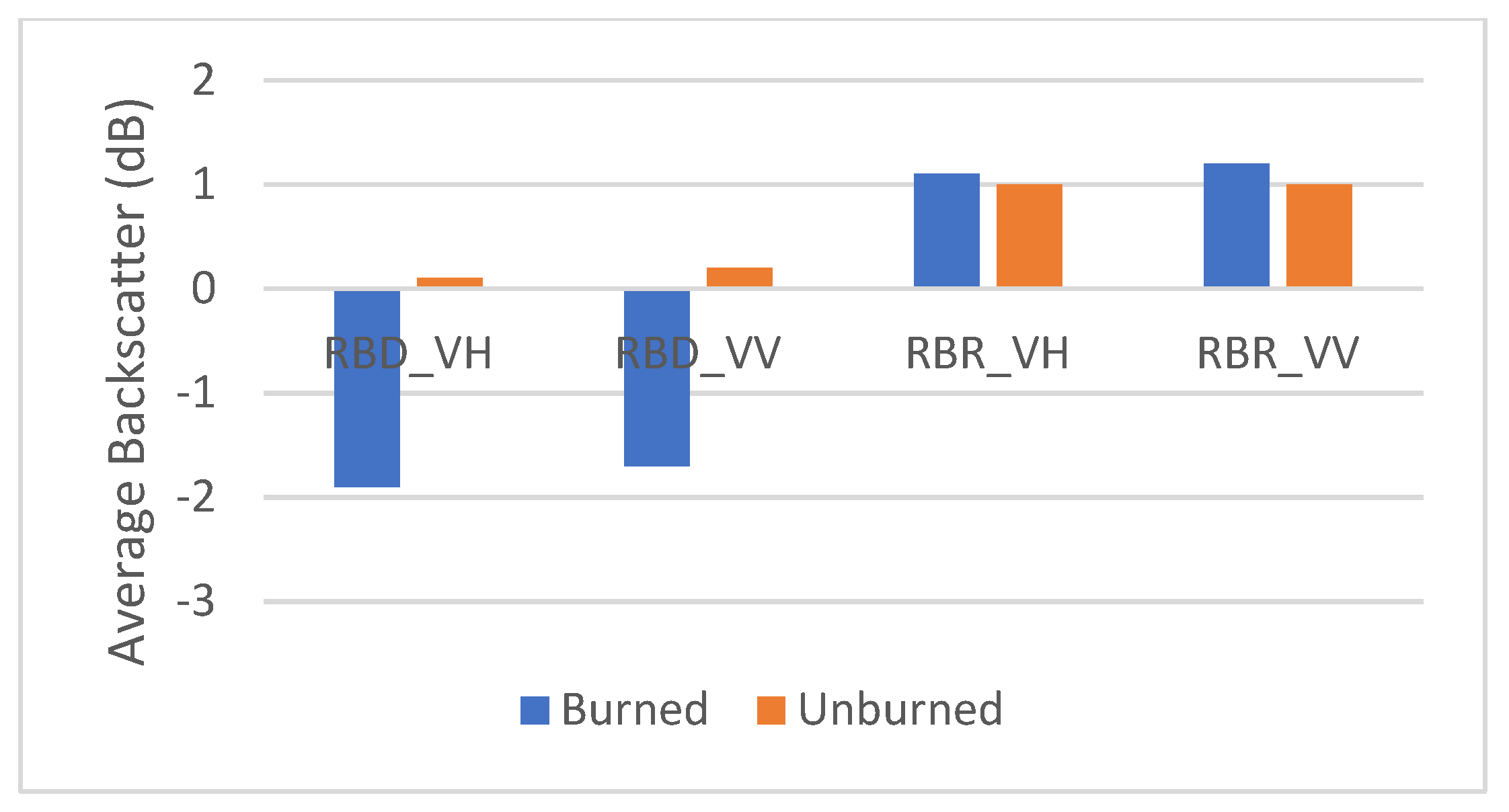

3.1. Radar Burn Ratio and the Difference for the Burnt Area Detection

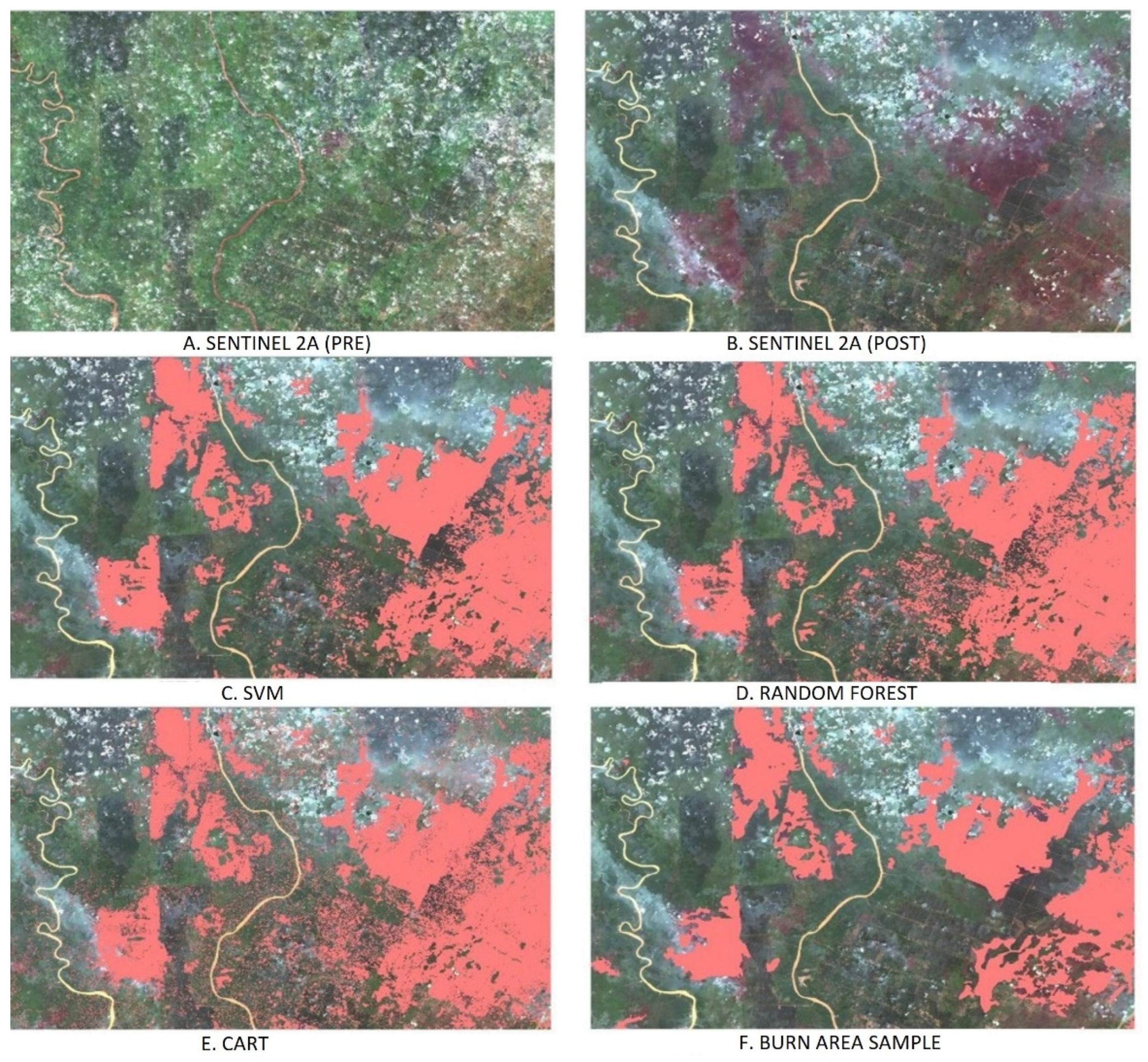

3.2. Burn Scar Mapping Machine-Learning Algorithms

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Method | Parameter | Value |
|---|---|---|
| CART | Leaf Node | 10 |
| Random Forest | Tree Number | 10 |
| Support Vector Machine | Gamma | 0.5 |
| Support Vector Machine | Cost | 10 |

| Classification Methods | SVM: Unburnt | SVM: Burnt | RF: Unburnt | RF: Burnt | CART: Unburnt | CART: Burnt | Field Data |
|---|---|---|---|---|---|---|---|
| Unburnt | 435,189 | 52,199 | 431,690 | 55,698 | 384,044 | 103,344 | 487,388 |
| Burnt | 7643 | 14,498 | 7360 | 14,781 | 6695 | 15,446 | 22,141 |
| Overall Accuracy | 88.26% | | 87.62% | | 78.40% | | |
| Process Time (Hours) | 1 | | 1 | | 2 | | 12 |
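
The overall accuracy row can be reproduced from the counts in the table. The sketch below assumes that rows are the field (reference) classes and columns are the classified classes, which is the reading under which each row sums to the Field Data total. The dictionary layout and the `overall_accuracy` helper are illustrative names, not part of the original study.

```python
# Confusion-matrix counts copied from the table above.
# Assumption: rows are field (reference) classes, columns are classified classes.
# Each tuple is (classified as unburnt, classified as burnt).
confusion = {
    "SVM": {"unburnt": (435_189, 52_199), "burnt": (7_643, 14_498)},
    "RF": {"unburnt": (431_690, 55_698), "burnt": (7_360, 14_781)},
    "CART": {"unburnt": (384_044, 103_344), "burnt": (6_695, 15_446)},
}


def overall_accuracy(matrix):
    """Return the fraction of pixels whose classified label matches the field label."""
    unburnt_as_unburnt, unburnt_as_burnt = matrix["unburnt"]
    burnt_as_unburnt, burnt_as_burnt = matrix["burnt"]
    correct = unburnt_as_unburnt + burnt_as_burnt
    total = unburnt_as_unburnt + unburnt_as_burnt + burnt_as_unburnt + burnt_as_burnt
    return correct / total


for method, matrix in confusion.items():
    print(f"{method}: {100 * overall_accuracy(matrix):.2f}%")
```

Under that assumption the printed percentages match the Overall Accuracy row (88.26%, 87.62%, 78.40%), each computed over the same 509,529 reference pixels.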

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Rokhmatuloh; Ardiansyah; Indratmoko, S.; Riyanto, I.; Margatama, L.; Arief, R. Burnt-Area Quick Mapping Method with Synthetic Aperture Radar Data. Appl. Sci. 2022, 12, 11922. https://doi.org/10.3390/app122311922