Development of an Ensembled Meta-Deep Learning Model for Semantic Road-Scene Segmentation in an Unstructured Environment

Abstract

1. Introduction

2. Related Work

3. Materials and Methods

3.1. Datasets

3.2. Methodology

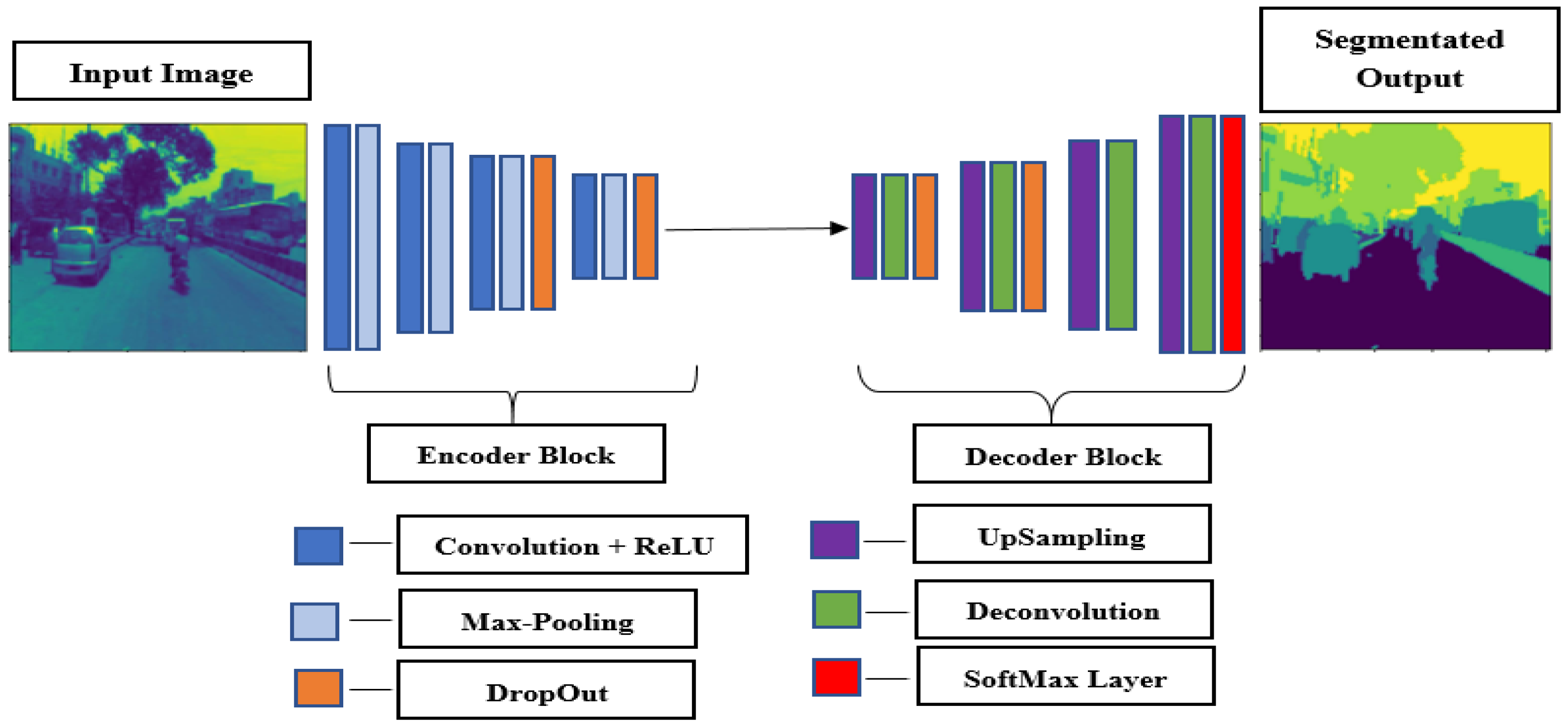

3.2.1. General Outline of the Proposed Method

3.2.2. General Outline of the Proposed Method

3.2.3. Stacking-Based Ensembling Methods

4. Evaluation Metrics

5. Experimental Evaluation

5.1. Implementation Details

5.2. Evaluation Results

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Class Names | Class Labels | Proportion of Pixels |
|---|---|---|
| Drivable area | Class 1 | 0.32 |
| Non-drivable | Class 2 | 0.02 |
| Living things | Class 3 | 0.01 |
| Vehicles (two-wheeler, auto-rickshaw, large vehicle) | Class 4 | 0.10 |
| Roadside objects (barrier) | Class 5 | 0.12 |
| Far objects (construction) | Class 6 | 0.28 |
| Sky | Class 7 | 0.15 |
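Each proportion above is the share of annotated pixels that carry a given class label. The following is a minimal sketch of that computation. It assumes that masks are 2-D grids of integer class IDs 1 to 7 and that any other ID is ignored. The `pixel_proportions` helper and the toy masks are hypothetical and are not taken from the paper.

```python
from collections import Counter
from typing import Dict, List, Sequence

# A mask is a 2-D grid (list of rows) of integer class IDs; IDs 1..7 follow
# the class table. Any other value (e.g. an "unlabelled" ID) is ignored.
Mask = Sequence[Sequence[int]]


def pixel_proportions(masks: List[Mask], num_classes: int = 7) -> Dict[int, float]:
    """Fraction of all labelled pixels, across every mask, that belong to each class."""
    counts: Counter = Counter()
    for mask in masks:
        for row in mask:
            counts.update(row)  # tally class IDs row by row
    total = sum(counts[c] for c in range(1, num_classes + 1))
    if total == 0:
        raise ValueError("no pixels with class IDs 1..num_classes were found")
    return {c: counts[c] / total for c in range(1, num_classes + 1)}


if __name__ == "__main__":
    # Two tiny hypothetical 2x4 masks, for illustration only.
    toy_masks = [
        [[1, 1, 7, 7], [1, 4, 6, 6]],
        [[1, 1, 5, 6], [3, 2, 6, 6]],
    ]
    for cls, share in pixel_proportions(toy_masks).items():
        print(f"Class {cls}: {share:.3f}")
```

On a real dataset, strongly unequal proportions (here, 0.01 for living things against 0.32 for the drivable area) are the usual motivation for reporting per-class scores rather than only pixel accuracy.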

| Decoder / Backbone | ResNet50 (1) | VGG19 (2) | InceptionV3 (3) | EfficientNetB7 (4) | MobileNetV2 (5) |
|---|---|---|---|---|---|
| U-Net (1) | M11 | M12 | M13 | M14 | M15 |
| LinkNet (2) | M21 | M22 | M23 | M24 | M25 |
| PSPNet (3) | M31 | M32 | M33 | M34 | M35 |
| FPN (4) | M41 | M42 | M43 | M44 | M45 |
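Each model ID M*ij* pairs decoder *i* with backbone *j*, which gives 4 × 5 = 20 candidate models. The sketch below enumerates that grid. The `build_model_grid` helper is hypothetical and only makes the naming scheme explicit.

```python
from itertools import product
from typing import Dict, Tuple

# Row and column order follow the table: decoder index i, backbone index j.
DECODERS = ["U-Net", "LinkNet", "PSPNet", "FPN"]
BACKBONES = ["ResNet50", "VGG19", "InceptionV3", "EfficientNetB7", "MobileNetV2"]


def build_model_grid() -> Dict[str, Tuple[str, str]]:
    """Map each model ID M{i}{j} to its (decoder, backbone) pair."""
    grid = {}
    for (i, decoder), (j, backbone) in product(
        enumerate(DECODERS, start=1), enumerate(BACKBONES, start=1)
    ):
        grid[f"M{i}{j}"] = (decoder, backbone)
    return grid


if __name__ == "__main__":
    grid = build_model_grid()
    print(len(grid))      # 20
    print(grid["M31"])    # ('PSPNet', 'ResNet50')
```

In practice, each pair would be instantiated from a segmentation library. For example, the Keras `segmentation_models` package provides `Unet`, `Linknet`, `PSPNet` and `FPN` constructors whose first argument is a backbone name such as `"resnet50"`. This section does not state which exact constructors were used.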

| | Predicted Positive | Predicted Negative |
|---|---|---|
| Ground Truth Positive | True Positive (TP) | False Negative (FN) |
| Ground Truth Negative | False Positive (FP) | True Negative (TN) |
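The IoU and F1 scores reported in the results tables can be computed from these confusion-matrix counts, treating one class at a time (one-vs-rest). The sketch below does this on flattened label masks. The function names are hypothetical, and scoring a class that is absent from both masks as 0.0 is an assumed convention.

```python
from typing import Dict, Sequence, Tuple


def confusion_counts(truth: Sequence[int], pred: Sequence[int], cls: int) -> Dict[str, int]:
    """One-vs-rest TP/FP/FN/TN counts for class `cls` over flattened label masks."""
    if len(truth) != len(pred):
        raise ValueError("truth and prediction must contain the same number of pixels")
    tp = fp = fn = tn = 0
    for t, p in zip(truth, pred):
        if t == cls and p == cls:
            tp += 1  # labelled cls, predicted cls
        elif p == cls:
            fp += 1  # predicted cls, labelled something else
        elif t == cls:
            fn += 1  # labelled cls, predicted something else
        else:
            tn += 1  # neither labelled nor predicted as cls
    return {"TP": tp, "FP": fp, "FN": fn, "TN": tn}


def iou_and_f1(counts: Dict[str, int]) -> Tuple[float, float]:
    """IoU = TP / (TP + FP + FN); F1 = 2TP / (2TP + FP + FN). TN is not used by either."""
    tp, fp, fn = counts["TP"], counts["FP"], counts["FN"]
    union = tp + fp + fn
    # Assumed convention: a class absent from both masks scores 0.0.
    iou = tp / union if union else 0.0
    f1 = 2 * tp / (2 * tp + fp + fn) if union else 0.0
    return iou, f1


if __name__ == "__main__":
    counts = confusion_counts([1, 1, 1, 2, 2, 3], [1, 1, 2, 2, 1, 3], cls=1)
    print(counts)              # {'TP': 2, 'FP': 1, 'FN': 1, 'TN': 2}
    print(iou_and_f1(counts))  # IoU 0.5, F1 about 0.667
```

For a single class computed from the same counts, F1 = 2·IoU / (1 + IoU), so F1 is never smaller than IoU. Per-class scores are then typically averaged over the classes to obtain a mean IoU or mean F1.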

Evaluation Scores of Models with Different Backbone Architectures

| Model / Backbone | VGG19 IoU | VGG19 F1 Score | ResNet50 IoU | ResNet50 F1 Score | EfficientNetB7 IoU | EfficientNetB7 F1 Score | InceptionV3 IoU | InceptionV3 F1 Score | MobileNetV2 IoU | MobileNetV2 F1 Score |
|---|---|---|---|---|---|---|---|---|---|---|
| U-Net | 0.5332 | 0.6532 | 0.6535 | 0.7667 | 0.6686 | 0.7002 | 0.6810 | 0.7053 | 0.6414 | 0.7623 |
| LinkNet | 0.5256 | 0.6400 | 0.5362 | 0.6388 | 0.6617 | 0.6728 | 0.6031 | 0.6457 | 0.5294 | 0.6234 |
| FPN | 0.6085 | 0.6500 | 0.5424 | 0.6978 | 0.5823 | 0.6964 | 0.5784 | 0.6874 | 0.5280 | 0.6547 |
| PSPNet | 0.6540 | 0.7088 | 0.5232 | 0.7284 | 0.5701 | 0.7003 | 0.5255 | 0.7665 | 0.4909 | 0.6832 |
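A weight-ensembled decoder needs one weight for each backbone variant. One simple option is to make each weight proportional to that variant's validation IoU. This rule is an assumption chosen for illustration, and the paper does not necessarily use it. The sketch applies the rule to the U-Net row of the table above. The `iou_weights` helper is hypothetical.

```python
from typing import Dict

# IoU of the U-Net decoder with each backbone, copied from the table above.
UNET_IOU: Dict[str, float] = {
    "VGG19": 0.5332,
    "ResNet50": 0.6535,
    "EfficientNetB7": 0.6686,
    "InceptionV3": 0.6810,
    "MobileNetV2": 0.6414,
}


def iou_weights(scores: Dict[str, float]) -> Dict[str, float]:
    """Hypothetical rule: weight each backbone in proportion to its score, normalised to sum to 1."""
    total = sum(scores.values())
    if total <= 0:
        raise ValueError("scores must have a positive sum")
    return {name: s / total for name, s in scores.items()}


if __name__ == "__main__":
    # Print backbones from highest to lowest weight.
    for name, w in sorted(iou_weights(UNET_IOU).items(), key=lambda kv: -kv[1]):
        print(f"{name:>15}: {w:.4f}")
```

Proportional weights stay close to uniform when the scores are similar. Sharper alternatives, such as a softmax over the scores or weights tuned on a validation split, are equally plausible.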

Evaluation Scores of Ensembled Methods

| Class / Model | U-Net Weight-Ensembled | LinkNet Weight-Ensembled | FPN Weight-Ensembled | PSPNet Weight-Ensembled |
|---|---|---|---|---|
| Class 1 (IoU) | 0.9215 | 0.8015 | 0.78 | 0.72 |
| Class 1 (F1 Score) | 0.75 | 0.652 | 0.69 | 0.725 |
| Class 2 | 0.6112 | 0.5702 | 0.55 | 0.45 |
| Class 3 | 0.6004 | 0.462 | 0.451 | 0.45 |
| Class 4 | 0.9018 | 0.852 | 0.752 | 0.88 |
| Class 5 | 0.7082 | 0.68 | 0.66 | 0.69 |
| Class 6 | 0.8815 | 0.856 | 0.725 | 0.80 |
| Class 7 | 0.7172 | 0.6812 | 0.66 | 0.69 |
| Mean score | 0.7312 | 0.7052 | 0.6552 | 0.6875 |
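The table does not specify how a weight-ensembled model fuses its members. A common scheme, assumed here, takes a weighted average of each model's per-pixel class probabilities and then labels every pixel with the arg-max class. A stacking ensemble would instead train a meta-learner on these outputs. The `weighted_ensemble` function and the toy probabilities below are hypothetical.

```python
from typing import List, Sequence

# prob_map[p][c]: probability one model assigns to class channel c at flattened pixel p.
ProbMap = Sequence[Sequence[float]]


def weighted_ensemble(prob_maps: Sequence[ProbMap], weights: Sequence[float]) -> List[int]:
    """Weighted average of per-model class probabilities, then per-pixel arg-max.

    Returns 1-based labels, so channel c maps to "Class c+1" as in the tables.
    """
    if not prob_maps or len(prob_maps) != len(weights):
        raise ValueError("need at least one model and exactly one weight per model")
    total_w = sum(weights)
    if total_w <= 0:
        raise ValueError("weights must have a positive sum")
    num_pixels = len(prob_maps[0])
    num_classes = len(prob_maps[0][0])
    labels = []
    for p in range(num_pixels):
        # Fused probability of each class at pixel p.
        fused = [
            sum(w * pm[p][c] for pm, w in zip(prob_maps, weights)) / total_w
            for c in range(num_classes)
        ]
        best = max(range(num_classes), key=fused.__getitem__)
        labels.append(best + 1)
    return labels


if __name__ == "__main__":
    # Two hypothetical models, two pixels, three classes.
    model_a = [[0.6, 0.3, 0.1], [0.2, 0.5, 0.3]]
    model_b = [[0.2, 0.7, 0.1], [0.1, 0.2, 0.7]]
    print(weighted_ensemble([model_a, model_b], [0.75, 0.25]))  # [1, 2]
```

Swapping the weights to `[0.25, 0.75]` changes the output to `[2, 3]`, which shows how the weights decide between conflicting members.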

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Sivanandham, S.; Gunaseelan, D.B. Development of an Ensembled Meta-Deep Learning Model for Semantic Road-Scene Segmentation in an Unstructured Environment. Appl. Sci. 2022, 12, 12214. https://doi.org/10.3390/app122312214
