An Extreme Learning Machine for the Simulation of Different Hysteretic Behaviors

Abstract

1. Introduction

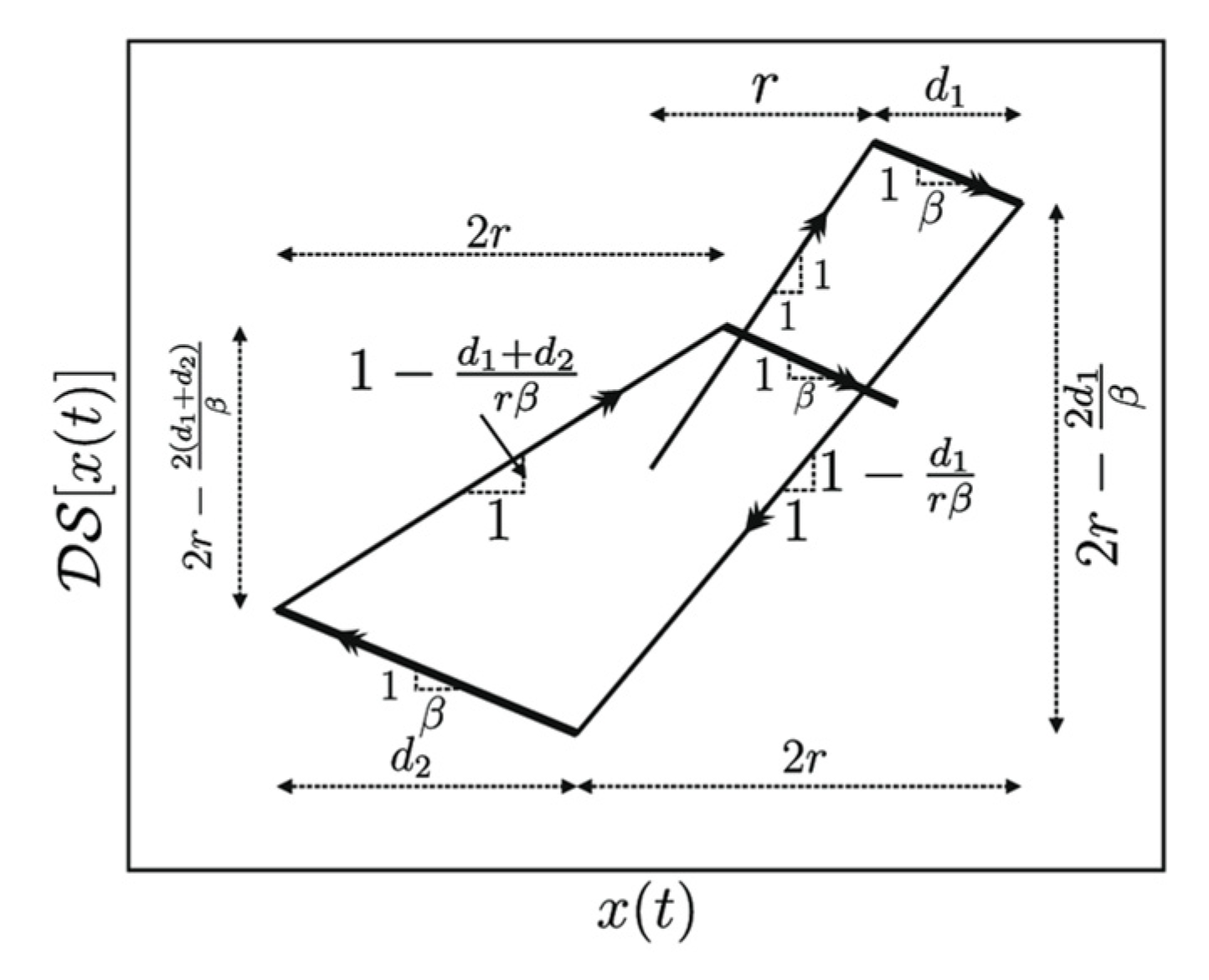

2. Deteriorating Stop Operator
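For orientation, the classical stop (elastic-perfectly-plastic) operator, which the deteriorating stop (DS) operator is assumed here to extend, can be written in discrete form as `s_k = min(r, max(-r, s_{k-1} + x_k - x_{k-1}))` for a threshold `r > 0`. The Python sketch below implements only this classical form. The function name `stop_operator` and the zero (virgin) initial state are illustrative assumptions, and no deterioration mechanism is included.

```python
def stop_operator(x, r, s0=0.0):
    """Classical (non-deteriorating) stop operator with threshold r > 0.

    x  : sequence of input samples (e.g. a strain or displacement history)
    r  : yield threshold; the output is confined to [-r, r]
    s0 : initial operator state (assumed zero, i.e. a virgin state)
    """
    out = []
    s = min(r, max(-r, s0))
    prev = x[0]
    for xk in x:
        # Elastic increment of unit slope, then clipping to the yield band [-r, r].
        s = min(r, max(-r, s + (xk - prev)))
        out.append(s)
        prev = xk
    return out
```

For example, `stop_operator([0, 1, 2, 3, 2, 1, 0], 1.5)` returns `[0.0, 1.0, 1.5, 1.5, 0.5, -0.5, -1.5]`: at x = 1 the output is 1.0 on loading but -0.5 on unloading, which is the rate-independent memory such an operator contributes to a hysteresis model.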

3. Extreme Learning Machine

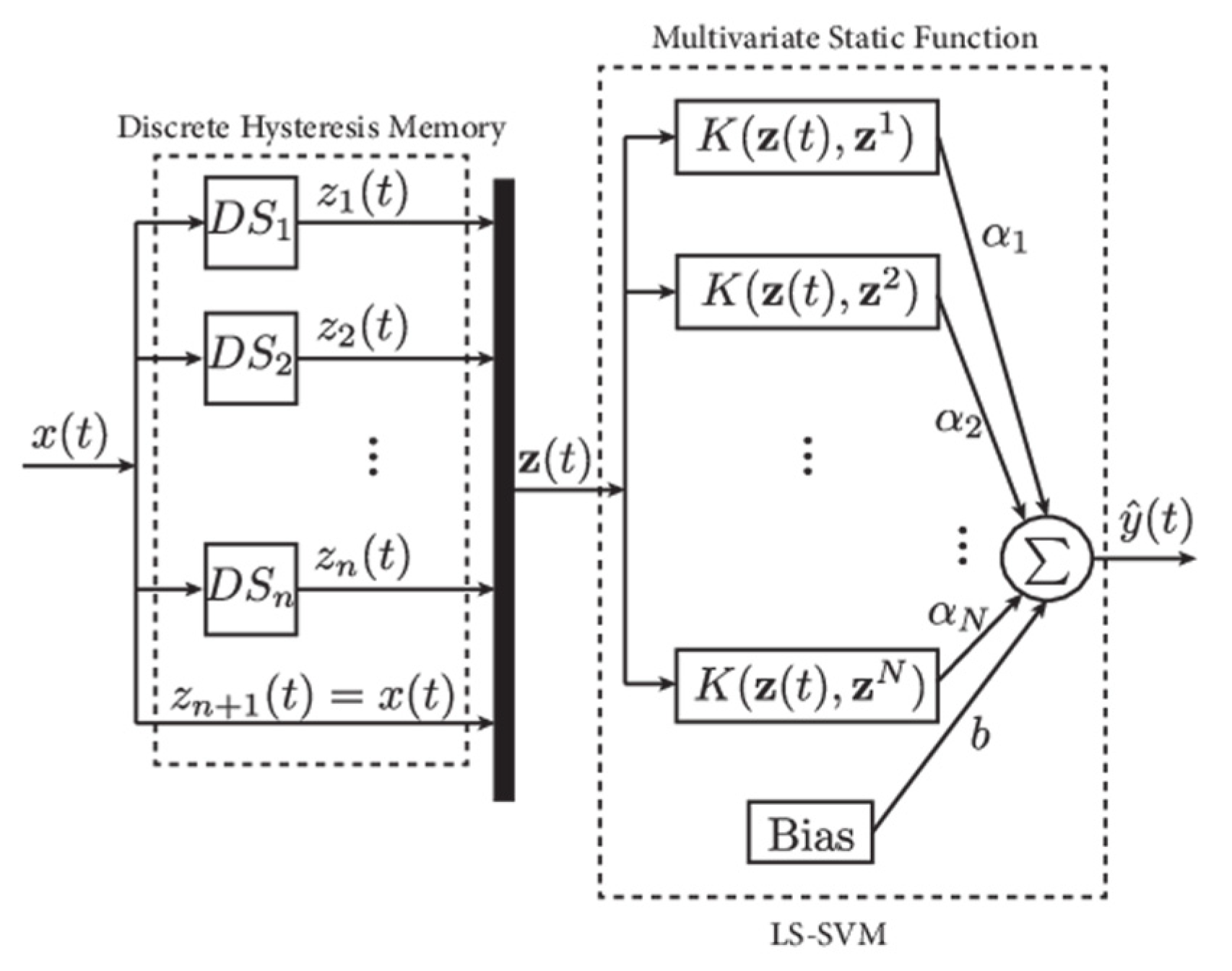

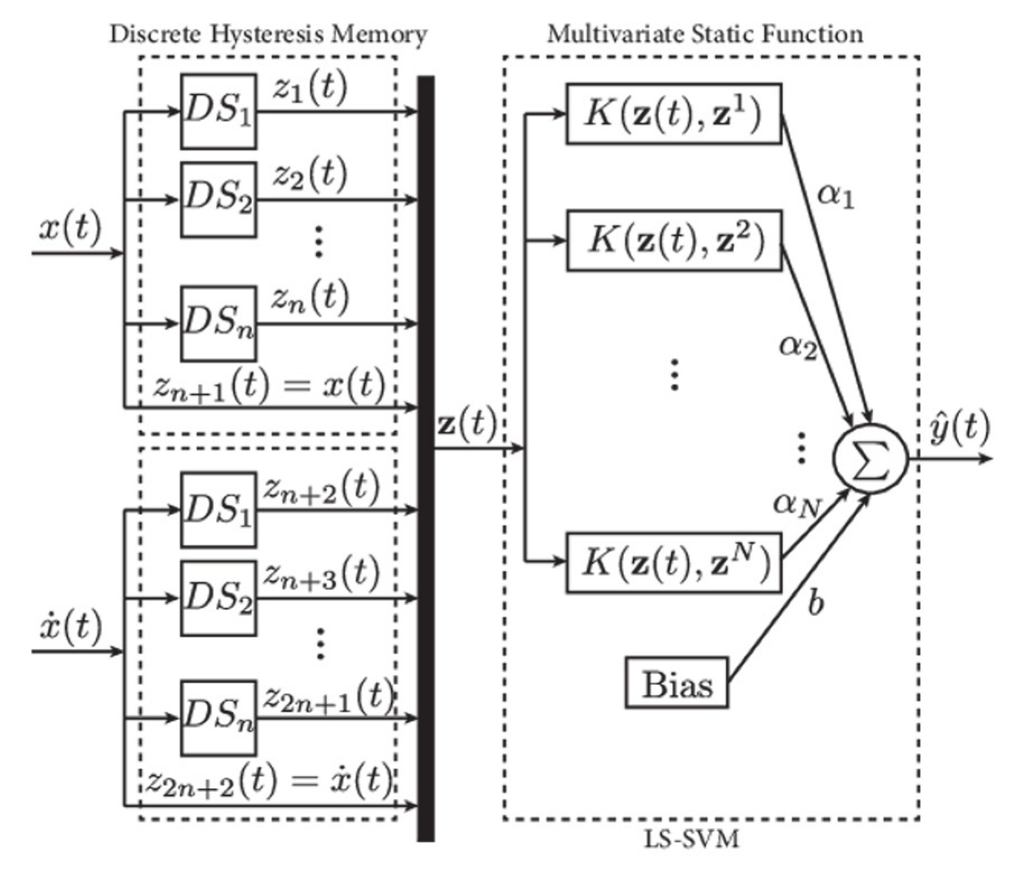
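An ELM draws its hidden-layer weights at random and fits only the output weights by linear least squares. The sketch below follows the regularized solution `beta = (I/C + H^T H)^(-1) H^T y` used by Huang et al. (2012) for regression. The tanh activation, the uniform range [-1, 1], the value of `C`, and the names `elm_fit`/`elm_predict` are assumptions for illustration; in this paper the hidden neurons are DS operators rather than static activations.

```python
import numpy as np

def elm_fit(X, y, n_hidden=50, C=1e6, seed=0):
    """Fit a single-hidden-layer ELM regressor.

    X : (n_samples, n_features) inputs; y : (n_samples,) targets.
    Only the output weights beta are learned; W and b stay random.
    """
    rng = np.random.default_rng(seed)
    W = rng.uniform(-1.0, 1.0, size=(X.shape[1], n_hidden))  # random input weights
    b = rng.uniform(-1.0, 1.0, size=n_hidden)                # random hidden biases
    H = np.tanh(X @ W + b)                                   # hidden-layer output matrix
    # Regularized least squares: (I/C + H^T H) beta = H^T y
    beta = np.linalg.solve(np.eye(n_hidden) / C + H.T @ H, H.T @ y)
    return W, b, beta

def elm_predict(model, X):
    """Evaluate the fitted ELM on new inputs X."""
    W, b, beta = model
    return np.tanh(X @ W + b) @ beta
```

Because `W` and `b` are never updated, training reduces to a single linear solve, which is what makes ELMs fast to train compared with gradient-based networks.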

4. Least-Squares Support Vector Machine
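In the standard LS-SVM regression formulation, the bias `b` and the support values `alpha` follow from one linear system, `[[0, 1^T], [1, Omega + I/gamma]] [b; alpha] = [0; y]` with `Omega_ij = K(x_i, x_j)`, and predictions are `f(x) = sum_i alpha_i K(x, x_i) + b`. The NumPy sketch below solves that system with an RBF kernel. The kernel choice, its width `sigma`, and the function names are assumptions, and the system is not claimed to be identical to this paper's Equation (8).

```python
import numpy as np

def rbf_kernel(A, B, sigma):
    """Gaussian (RBF) kernel matrix between the rows of A and the rows of B."""
    d2 = np.sum(A**2, 1)[:, None] + np.sum(B**2, 1)[None, :] - 2.0 * A @ B.T
    return np.exp(-d2 / (2.0 * sigma**2))

def lssvm_fit(X, y, gamma, sigma):
    """Solve the LS-SVM dual system; returns the bias b and support values alpha."""
    n = X.shape[0]
    A = np.zeros((n + 1, n + 1))
    A[0, 1:] = 1.0                                   # constraint: sum(alpha) = 0
    A[1:, 0] = 1.0                                   # bias column
    A[1:, 1:] = rbf_kernel(X, X, sigma) + np.eye(n) / gamma
    rhs = np.concatenate(([0.0], y))
    sol = np.linalg.solve(A, rhs)
    return sol[0], sol[1:]

def lssvm_predict(X_train, b, alpha, X_new, sigma):
    """f(x) = sum_i alpha_i K(x, x_i) + b for every row x of X_new."""
    return rbf_kernel(X_new, X_train, sigma) @ alpha + b
```

A useful check is that the training residuals equal `alpha / gamma`, which follows from the optimality condition `alpha_i = gamma * e_i`; a larger `gamma` therefore forces a closer fit to the training data.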

5. ELM-SVM Model

- Choose the appropriate number of DS neurons and the value of ;

- Set to and for rate-independent and rate-dependent memories, respectively;

- Randomly set the internal DS neuron parameters and based on Equations (12) and (13);

- Using the Nelder-Mead method, obtain the hyperparameters of the LS-SVM part by cross-validation. At each iteration of the Nelder-Mead method, the internal parameters of the LS-SVM, and , are determined using Equation (8).
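The four steps above can be sketched in Python under loudly stated simplifications: classical stop operators with random thresholds stand in for the DS neurons (their deterioration and the memory-type setting of the second step are omitted), the LS-SVM uses an RBF kernel, and SciPy's `minimize(method="Nelder-Mead")` searches over log10(gamma) and log10(sigma) to minimize a 5-fold cross-validation error. All function names, the threshold range, the fold count, and the search bounds are hypothetical choices, not the authors' settings.

```python
import numpy as np
from scipy.optimize import minimize

def stop_features(x, thresholds):
    """One column per neuron: classical stop operator outputs along x (virgin start)."""
    S = np.zeros((len(x), len(thresholds)))
    s = np.zeros(len(thresholds))
    for k in range(1, len(x)):
        s = np.clip(s + (x[k] - x[k - 1]), -thresholds, thresholds)
        S[k] = s
    return S

def rbf(A, B, sigma):
    d2 = np.sum(A**2, 1)[:, None] + np.sum(B**2, 1)[None, :] - 2.0 * A @ B.T
    return np.exp(-d2 / (2.0 * sigma**2))

def lssvm_fit(F, y, gamma, sigma):
    # Solve [[0, 1^T], [1, K + I/gamma]] [b; alpha] = [0; y]
    n = len(y)
    A = np.zeros((n + 1, n + 1))
    A[0, 1:] = A[1:, 0] = 1.0
    A[1:, 1:] = rbf(F, F, sigma) + np.eye(n) / gamma
    sol = np.linalg.solve(A, np.concatenate(([0.0], y)))
    return sol[0], sol[1:]

def lssvm_predict(F_train, b, alpha, F_new, sigma):
    return rbf(F_new, F_train, sigma) @ alpha + b

def to_hyper(log_params):
    # Hypothetical bounded search space; keeps the linear system well conditioned.
    gamma, sigma = 10.0 ** np.clip(log_params, -3.0, 6.0)
    return gamma, sigma

def cv_error(log_params, F, y, n_folds=5):
    """Mean validation MSE over contiguous folds for given log10(gamma), log10(sigma)."""
    gamma, sigma = to_hyper(log_params)
    idx = np.arange(len(y))
    err = 0.0
    for val in np.array_split(idx, n_folds):
        trn = np.setdiff1d(idx, val)
        b, alpha = lssvm_fit(F[trn], y[trn], gamma, sigma)
        err += np.mean((lssvm_predict(F[trn], b, alpha, F[val], sigma) - y[val]) ** 2)
    return err / n_folds

def train_elm_svm(x, y, n_neurons=10, seed=0):
    rng = np.random.default_rng(seed)
    # Step 3 (simplified): random internal neuron parameters, here just thresholds.
    thresholds = rng.uniform(0.05, 1.0, size=n_neurons)
    F = np.column_stack([x, stop_features(x, thresholds)])
    # Step 4: Nelder-Mead on the CV error; the LS-SVM is re-solved at every evaluation.
    res = minimize(cv_error, x0=np.array([1.0, 0.0]), args=(F, y),
                   method="Nelder-Mead", options={"maxiter": 80})
    gamma, sigma = to_hyper(res.x)
    b, alpha = lssvm_fit(F, y, gamma, sigma)
    return {"thresholds": thresholds, "F": F, "gamma": gamma, "sigma": sigma,
            "b": b, "alpha": alpha, "cv_mse": res.fun}
```

Each Nelder-Mead function evaluation refits the LS-SVM on every training fold, mirroring the fourth step, where the LS-SVM's internal parameters are recomputed at each iteration. Searching in log10 space is a common choice because gamma and sigma act multiplicatively.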

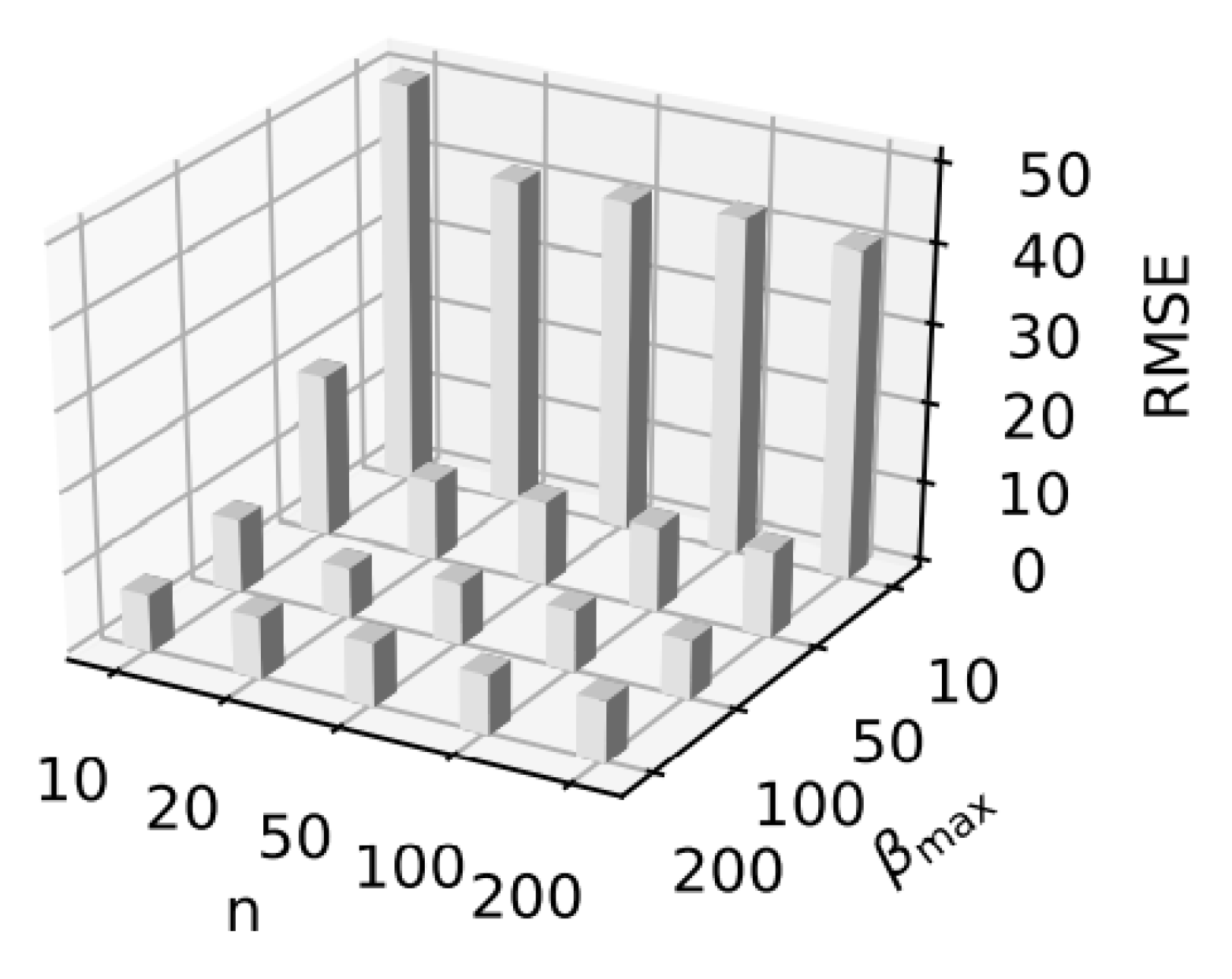

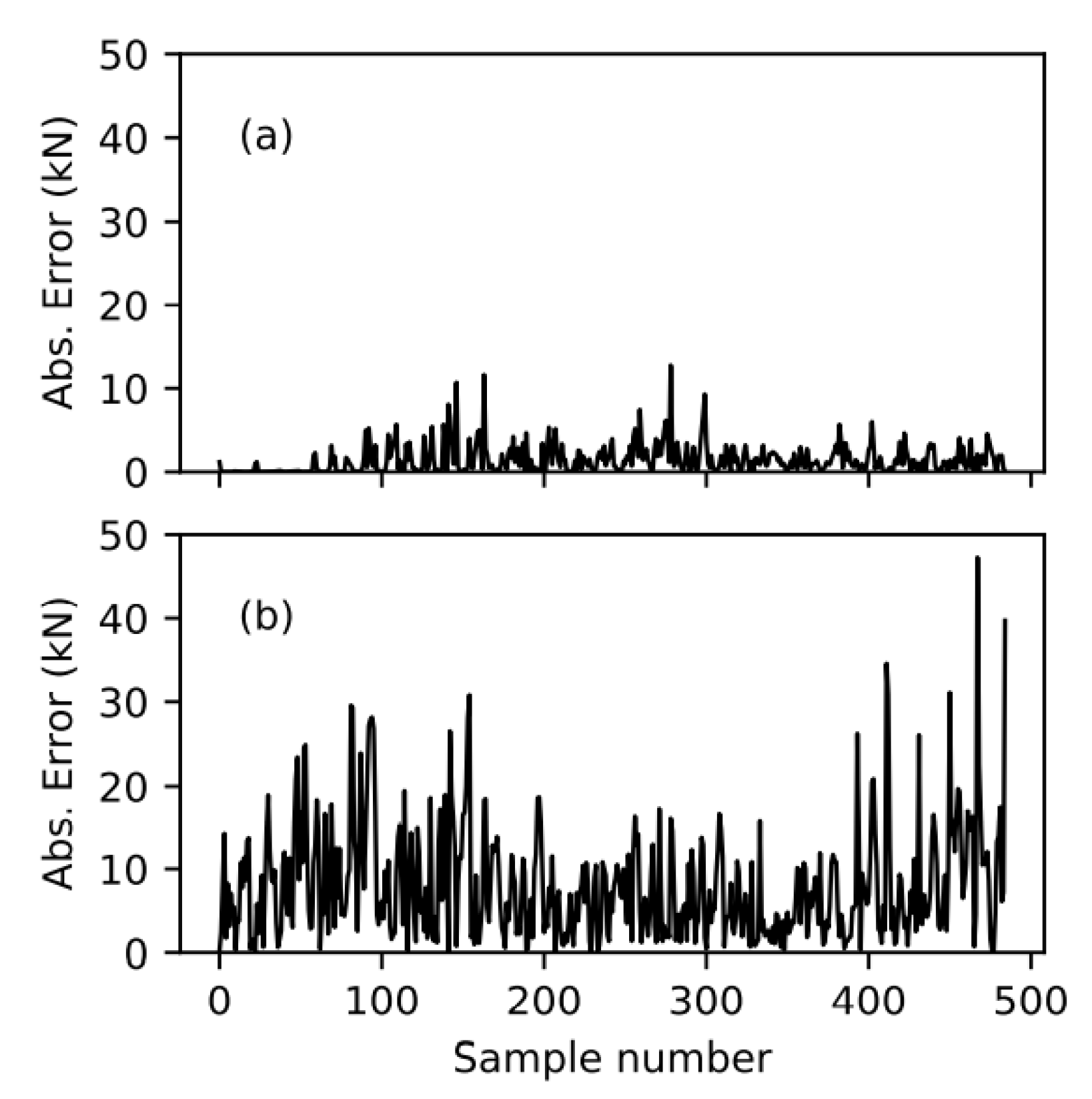

6. Assessment of the ELM-SVM Model

6.1. Symmetric, Non-Congruent, and Rate-Independent Hysteresis

6.2. Asymmetric Non-Congruent Rate-Independent Hysteresis

6.3. Asymmetric Non-Masing Rate-Independent Hysteresis

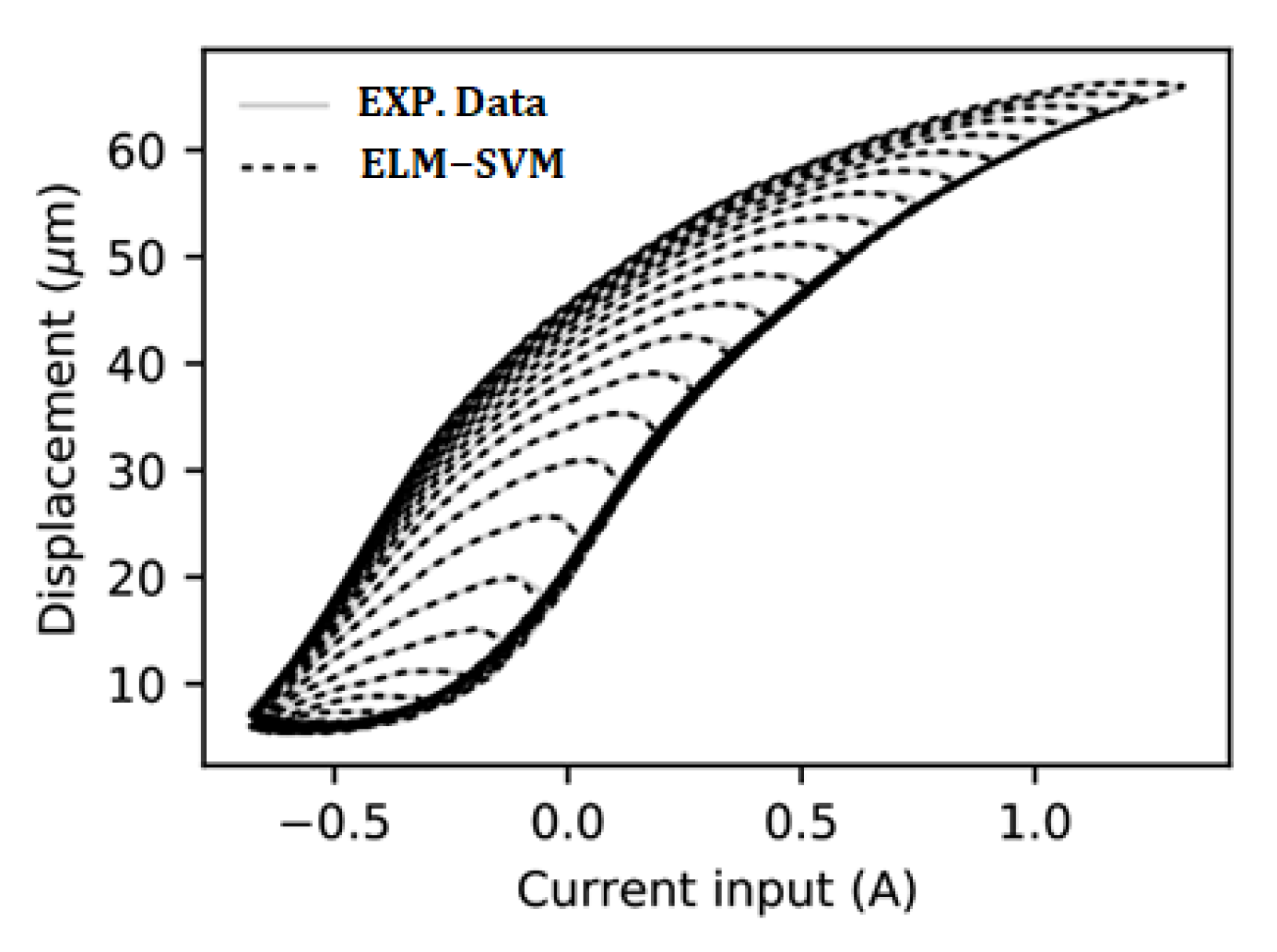

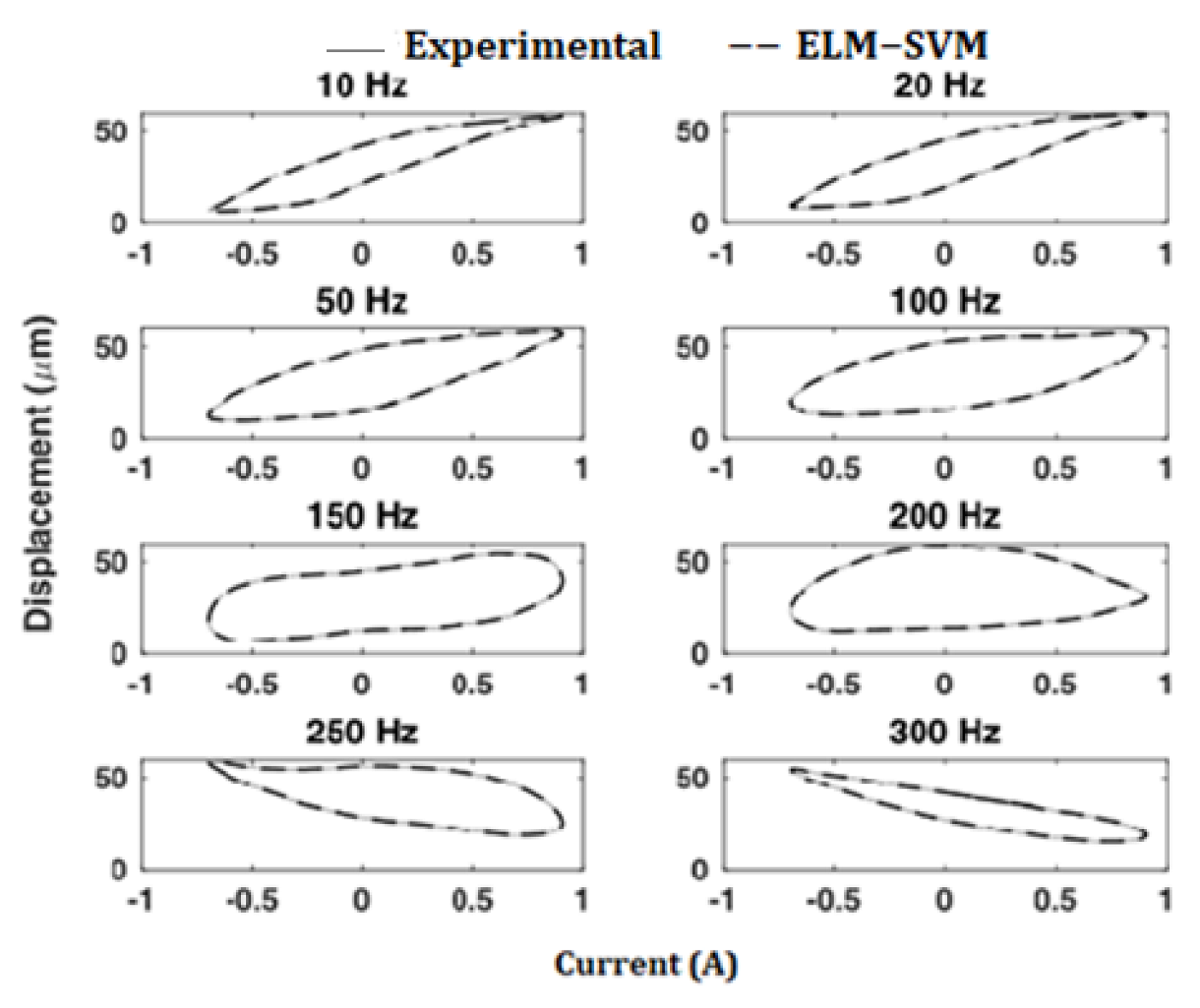

6.4. Symmetric Rate-Dependent Hysteresis

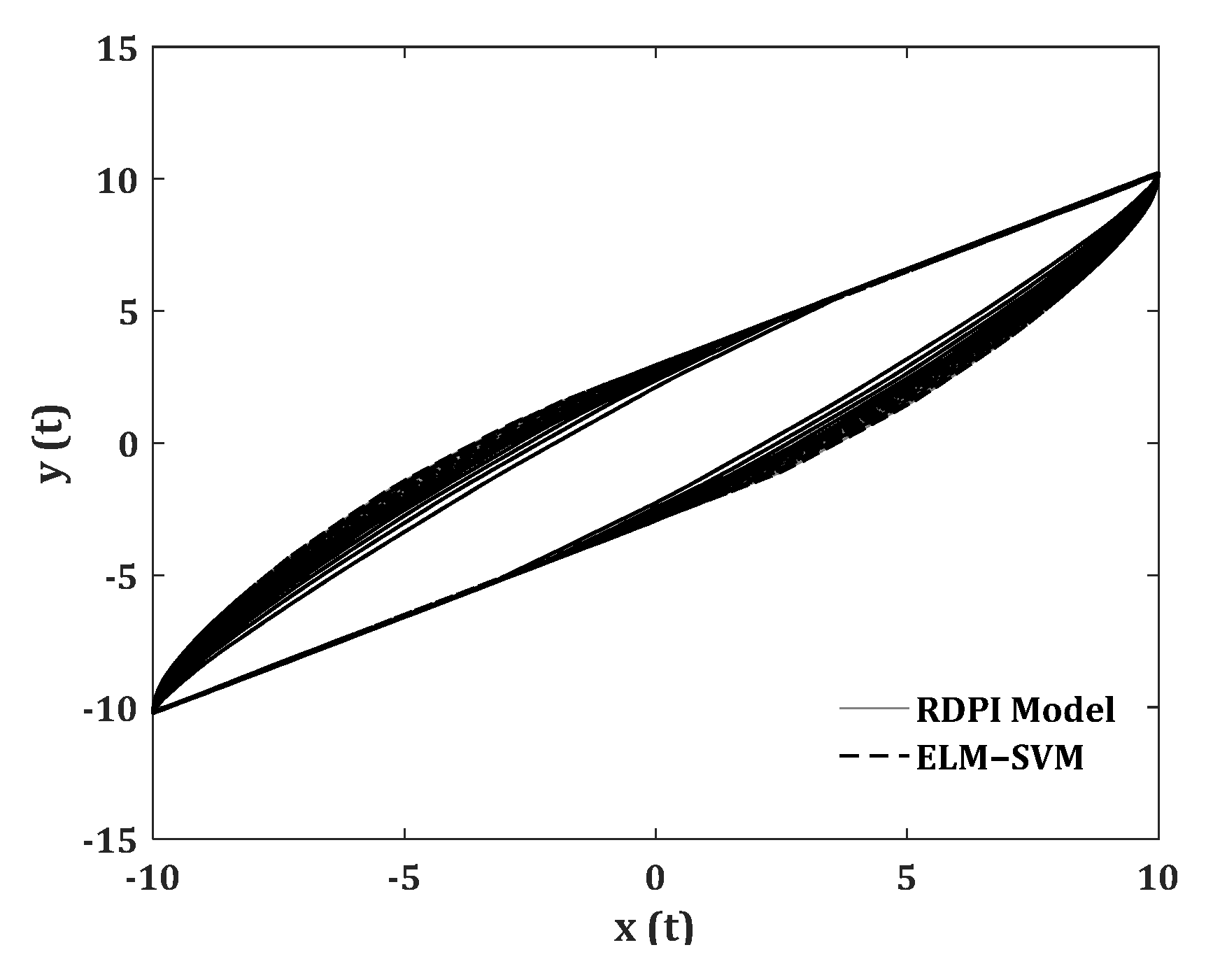

6.5. Asymmetric Rate-Dependent Non-Congruent Hysteresis

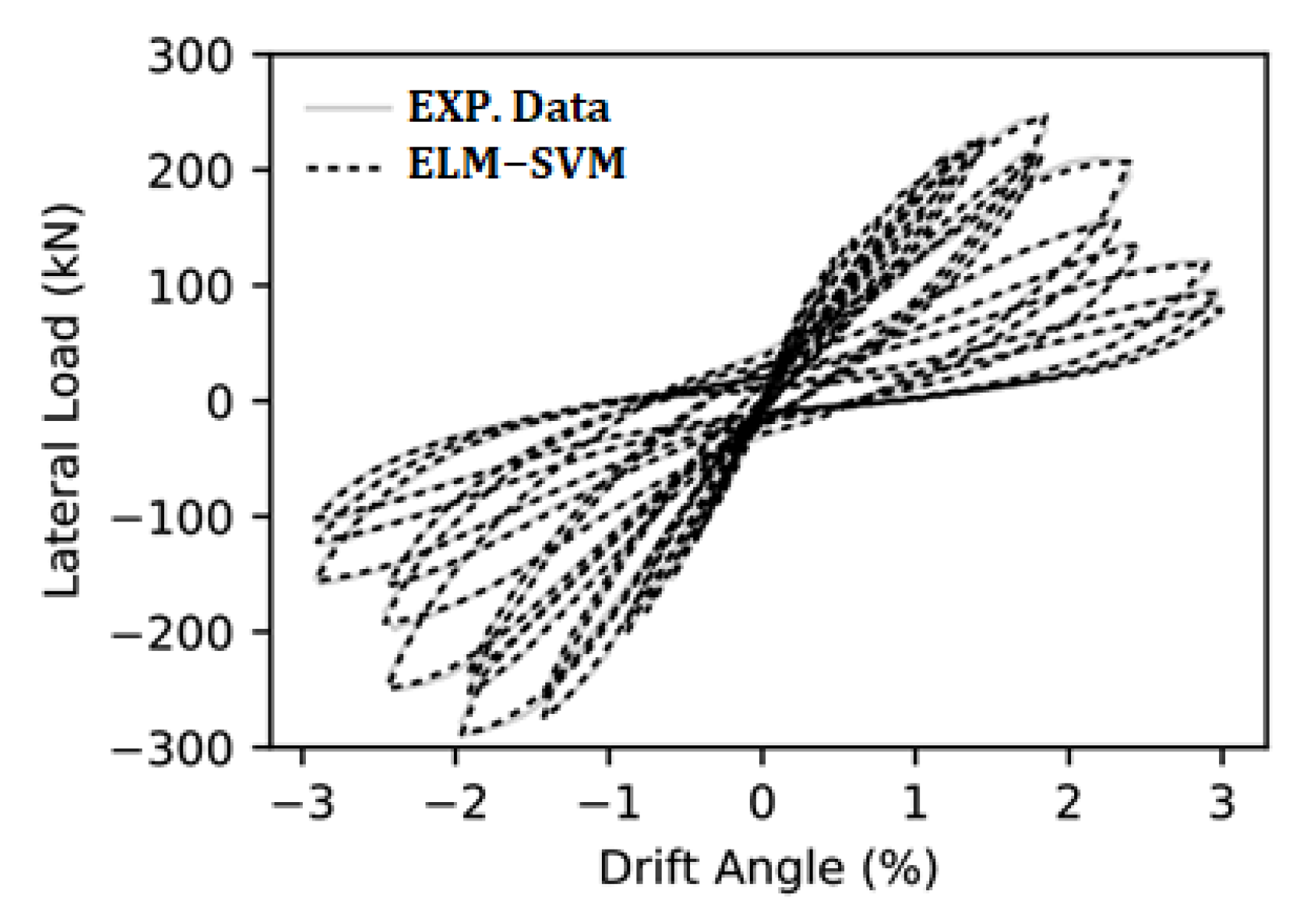

6.6. Rate-Dependent Hysteresis

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Farrokh, M.; Ghasemi, F.; Noori, M.; Wang, T.; Sarhosis, V. An Extreme Learning Machine for the Simulation of Different Hysteretic Behaviors. Appl. Sci. 2022, 12, 12424. https://doi.org/10.3390/app122312424
