Face Gender and Age Classification Based on Multi-Task, Multi-Instance and Multi-Scale Learning

Abstract

Featured Application

1. Introduction

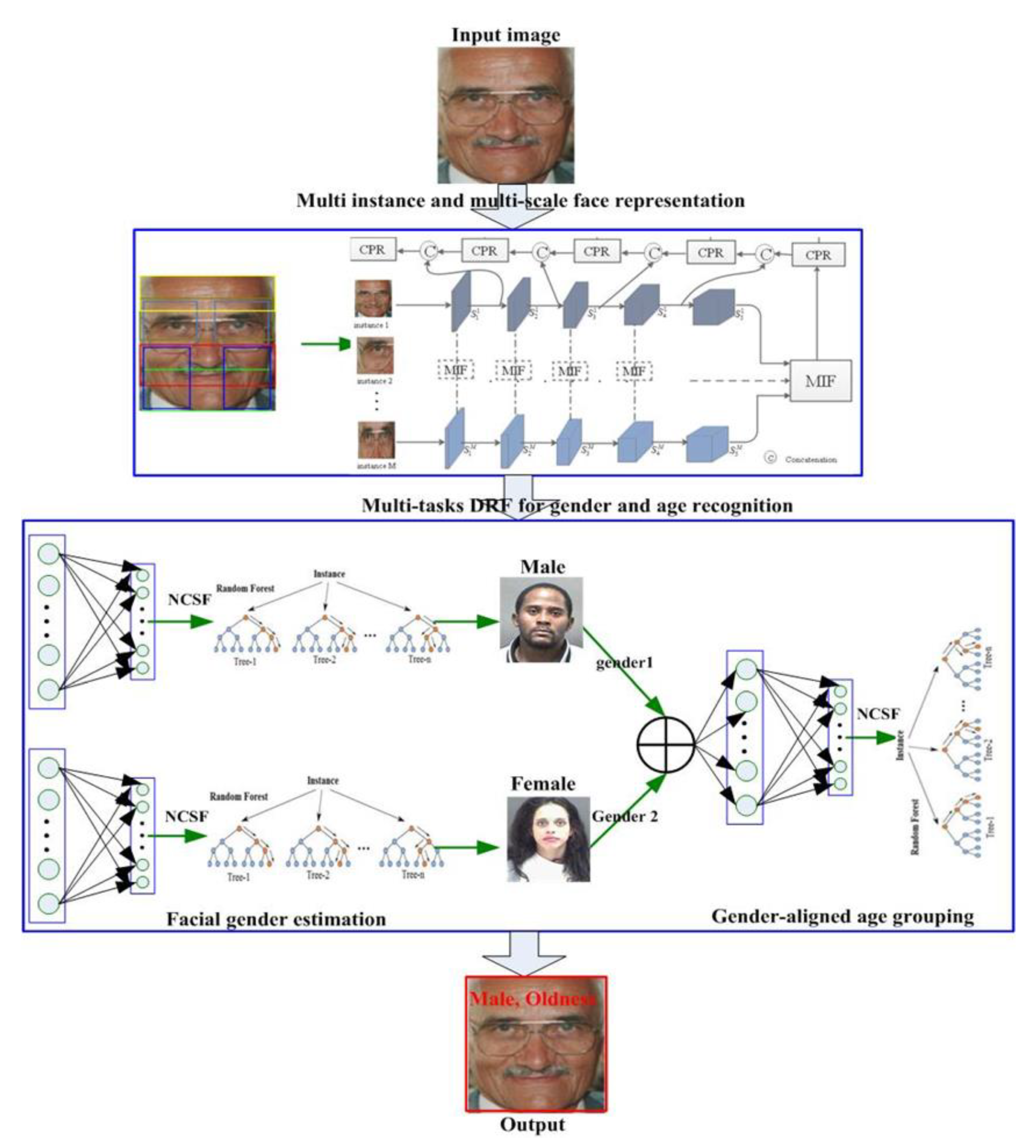

- We propose a multi-task random forest, enhanced by multi-instance and multi-scale learning, that performs gender and age classification jointly and combines the strengths of CNNs and RFs.

- We propose a multi-instance- and multi-scale-enhanced facial multi-task feature extraction model that mitigates intra-subject variations in faces caused by illumination, expression, pose and occlusion.

- We propose a gender-aligned conditional probabilistic learning model for facial age grouping to suppress inter-subject variations.
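One way to read the gender-aligned conditional model in the last contribution is as a marginalization of gender-specific age predictions over the predicted gender. The sketch below is only an illustration under that assumption; the function name `age_posterior` and the example probabilities are hypothetical and are not taken from the paper.

```python
import numpy as np

def age_posterior(p_gender, p_age_given_gender):
    """Combine a gender posterior with gender-specific age posteriors.

    p_gender: shape (2,), P(g | x) for g in {male, female}.
    p_age_given_gender: shape (2, K), row g holds P(age group | x, g).
    Returns shape (K,): P(age | x) = sum_g P(g | x) * P(age | x, g).
    """
    p_gender = np.asarray(p_gender, dtype=float)
    p_age_given_gender = np.asarray(p_age_given_gender, dtype=float)
    return p_gender @ p_age_given_gender

# Hypothetical classifier outputs for one face (assumed values).
p_gender = [0.9, 0.1]                      # P(male | x), P(female | x)
p_age_given_gender = [[0.7, 0.2, 0.1],     # age-group posterior if male
                      [0.2, 0.5, 0.3]]     # age-group posterior if female

p_age = age_posterior(p_gender, p_age_given_gender)
predicted_group = int(np.argmax(p_age))    # index of the most likely age group
```

Because each input row is a probability distribution, the combined vector also sums to one, so it can be used directly for the final age-group decision.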

- CNNs: Convolutional Neural Networks

- LBPs: Local Binary Patterns

- BIF: Biologically Inspired Feature

- SFPs: Spatially Flexible Patches

- SVM: Support Vector Machine

- SVR: Support Vector Regression

- CCA: Canonical Correlation Analysis

- PLS: Partial Least Squares

- RF: Random Forest

- DRF: Deep Random Forest

- SLFN: Single-hidden-Layer Feedforward Neural Network

- BP: Back Propagation

- MML: Multi-instance and Multi-scale Learning

- MMFL: Multi-scale Fusion Learning Network

- MIF: Multi-Instance Fusion

- GAP: Global Average Pooling

- FC: Fully Connected

- IRBs: Inverted Residual Blocks

- CPR: Compact Pyramid Refinement

- NCSF: Neurally Connected Split Function

2. Facial Gender and Age Classification Based on MML and DRF

2.1. Deep Feature Representation by MML

2.1.1. Facial Instance Selection

2.1.2. Multi-Instance Learning

2.1.3. Multi-Scale Integration Learning

2.2. DRF Model

3. Experimental Results

3.1. Datasets and Settings

3.2. Face Feature Extraction Experiments

3.3. Facial Gender and Age Recognition

- Facial Gender Estimation:

- Facial Age Grouping:

3.4. Facial Gender Alignment Analysis

4. Conclusions and Future Work

Author Contributions

Funding

Conflicts of Interest

References

1. Eidinger, E.; Enbar, R.; Hassner, T. Age and gender estimation of unfiltered faces. IEEE Trans. Inf. Forensics Secur. 2014, 9, 2170–2179.

2. Levi, G.; Hassner, T. Age and gender classification using convolutional neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Boston, MA, USA, 7–12 June 2015; pp. 34–42.

3. Wu, C.; Luo, C.; Xiong, N.; Zhang, W.; Kim, T.H. A greedy deep learning method for medical disease analysis. IEEE Access 2018, 6, 20021–20030.

4. Gupta, S.K.; Yesuf, S.H.; Nain, N. Real-Time Gender Recognition for Juvenile and Adult Faces. Comput. Intell. Neurosci. 2022, 2022, 1503188.

5. Sendik, O.; Keller, Y. DeepAge: Deep learning of face-based age estimation. Signal Process. Image Commun. 2019, 78, 368–375.

6. Guehairia, O.; Ouamane, A.; Dornaika, F.; Taleb-Ahmed, A. Feature fusion via Deep Random Forest for facial age estimation. Neural Netw. 2020, 130, 238–252.

7. Gupta, S.K.; Nain, N. Single attribute and multi attribute facial gender and age estimation. Multimed. Tools Appl. 2022, 1–23.

8. Dantcheva, A.; Elia, P.; Ross, A. What else does your biometric data reveal? A survey on soft biometrics. IEEE Trans. Inf. Forensics Secur. 2015, 11, 441–467.

9. Gunay, A.; Nabiyev, V.V. Automatic age classification with LBP. In Proceedings of the 2008 23rd International Symposium on Computer and Information Sciences, Istanbul, Turkey, 27–29 October 2008; pp. 1–4.

10. Gao, F.; Ai, H. Face age classification on consumer images with Gabor feature and fuzzy LDA method. In Proceedings of the International Conference on Biometrics, Alghero, Italy, 2–5 June 2009; Springer: Cham, Switzerland, 2009; pp. 132–141.

11. Guo, G.; Mu, G.; Fu, Y.; Huang, T.S. Human age estimation using bio-inspired features. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 112–119.

12. Yan, S.; Liu, M.; Huang, T.S. Extracting age information from local spatially flexible patches. In Proceedings of the 2008 IEEE International Conference on Acoustics, Speech and Signal Processing, Las Vegas, NV, USA, 31 March–4 April 2008; pp. 737–740.

13. Huang, H.; Wei, X.; Zhou, Y. An overview on twin support vector regression. Neurocomputing 2022, 490, 80–92.

14. Karthikeyan, V.; Priyadharsini, S.S. Adaptive boosted random forest-support vector machine based classification scheme for speaker identification. Appl. Soft Comput. 2022, 131, 109826.

15. Guo, G.; Mu, G. Joint estimation of age, gender and ethnicity: CCA vs. PLS. In Proceedings of the 2013 10th IEEE International Conference and Workshops on Automatic Face and Gesture Recognition (FG), Shanghai, China, 22–26 April 2013; pp. 1–6.

16. Guo, G.; Mu, G. Simultaneous dimensionality reduction and human age estimation via kernel partial least squares regression. In Proceedings of the CVPR 2011, Colorado Springs, CO, USA, 20–25 June 2011; pp. 657–664.

17. Greco, A.; Saggese, A.; Vento, M.; Vigilante, V. Effective training of convolutional neural networks for age estimation based on knowledge distillation. Neural Comput. Appl. 2021, 34, 21449–21464.

18. Adjabi, I.; Ouahabi, A.; Benzaoui, A.; Taleb-Ahmed, A. Past, present, and future of face recognition: A review. Electronics 2020, 9, 1188.

19. Wang, X.; Guo, R.; Kambhamettu, C. Deeply-learned feature for age estimation. In Proceedings of the 2015 IEEE Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 5–9 January 2015; pp. 534–541.

20. Zhang, K.; Gao, C.; Guo, L.; Sun, M.; Yuan, X.; Han, T.X.; Zhao, Z.; Li, B. Age group and gender estimation in the wild with deep RoR architecture. IEEE Access 2017, 5, 22492–22503.

21. Niu, X.X.; Suen, C.Y. A novel hybrid CNN–SVM classifier for recognizing handwritten digits. Pattern Recognit. 2012, 45, 1318–1325.

22. Liu, F.; Lin, G.; Shen, C. CRF learning with CNN features for image segmentation. Pattern Recognit. 2015, 48, 2983–2992.

23. Xie, G.S.; Zhang, X.Y.; Yan, S.; Liu, C.L. Hybrid CNN and dictionary-based models for scene recognition and domain adaptation. IEEE Trans. Circuits Syst. Video Technol. 2015, 27, 1263–1274.

24. Liu, Y.; Yuan, X.; Gong, X.; Xie, Z.; Fang, F.; Luo, Z. Conditional convolution neural network enhanced random forest for facial expression recognition. Pattern Recognit. 2018, 84, 251–261.

25. Lanitis, A.; Draganova, C.; Christodoulou, C. Comparing different classifiers for automatic age estimation. IEEE Trans. Syst. Man Cybern. Part B (Cybern.) 2004, 34, 621–628.

26. Ricanek, K.; Tesafaye, T. MORPH: A longitudinal image database of normal adult age-progression. In Proceedings of the 7th International Conference on Automatic Face and Gesture Recognition (FGR06), Southampton, UK, 10–12 April 2006; pp. 341–345.

27. Escalera, S.; Gonzalez, J.; Baró, X.; Pardo, P.; Fabian, J.; Oliu, M.; Escalante, H.J.; Huerta, I.; Guyon, I. ChaLearn looking at people 2015 new competitions: Age estimation and cultural event recognition. In Proceedings of the 2015 International Joint Conference on Neural Networks (IJCNN), Killarney, Ireland, 12–17 July 2015; pp. 1–8.

28. Wu, C.; Ju, B.; Wu, Y.; Lin, X.; Xiong, N.; Xu, G.; Li, H.; Liang, X. UAV autonomous target search based on deep reinforcement learning in complex disaster scene. IEEE Access 2019, 7, 117227–117245.

29. Fu, A.; Zhang, X.; Xiong, N.; Gao, Y.; Wang, H.; Zhang, J. VFL: A verifiable federated learning with privacy-preserving for big data in industrial IoT. IEEE Trans. Ind. Inform. 2022, 18, 3316–3326.

30. Kumar, P.; Kumar, R.; Srivastava, G.; Gupta, G.P.; Tripathi, R.; Gadekallu, T.R.; Xiong, N.N. PPSF: A privacy-preserving and secure framework using blockchain-based machine-learning for IoT-driven smart cities. IEEE Trans. Netw. Sci. Eng. 2021, 8, 2326–2341.

31. Chen, Y.; Zhou, L.; Pei, S.; Yu, Z.; Chen, Y.; Liu, X.; Du, J.; Xiong, N. KNN-BLOCK DBSCAN: Fast clustering for large-scale data. IEEE Trans. Syst. Man Cybern. Syst. 2019, 51, 3939–3953.

32. Zhao, J.; Huang, J.; Xiong, N. An effective exponential-based trust and reputation evaluation system in wireless sensor networks. IEEE Access 2019, 7, 33859–33869.

33. Xia, F.; Hao, R.; Li, J.; Xiong, N.; Yang, L.T.; Zhang, Y. Adaptive GTS allocation in IEEE 802.15.4 for real-time wireless sensor networks. J. Syst. Archit. 2013, 59, 1231–1242.

34. Yao, Y.; Xiong, N.; Park, J.H.; Ma, L.; Liu, J. Privacy-preserving max/min query in two-tiered wireless sensor networks. Comput. Math. Appl. 2013, 65, 1318–1325.

35. Gao, Y.; Xiang, X.; Xiong, N.; Huang, B.; Lee, H.J.; Alrifai, R.; Jiang, X.; Fang, Z. Human action monitoring for healthcare based on deep learning. IEEE Access 2018, 6, 52277–52285.

36. Cheng, H.; Xie, Z.; Shi, Y.; Xiong, N. Multi-step data prediction in wireless sensor networks based on one-dimensional CNN and bidirectional LSTM. IEEE Access 2019, 7, 117883–117896.

37. Saggu, G.S.; Gupta, K.; Mann, P.S. Efficient Classification for Age and Gender of Unconstrained Face Images. In Proceedings of the International Conference on Computational Intelligence and Emerging Power System, Ajmer, India, 9–10 March 2021; Springer: Singapore, 2022; pp. 13–24.

38. Yang, Z.; Zhang, H.; Sudjianto, A.; Zhang, A. An effective SteinGLM initialization scheme for training multi-layer feedforward sigmoidal neural networks. Neural Netw. 2021, 139, 149–157.

39. Dikananda, A.R.; Ali, I.; Fathurrohman; Rinaldi, R.A.; Iin. Genre e-sport gaming tournament classification using machine learning technique based on decision tree, Naive Bayes, and random forest algorithm. In IOP Conference Series: Materials Science and Engineering; IOP Publishing: Bristol, UK, 2021; Volume 1088, p. 012037.

40. Bai, J.; Li, Y.; Li, J.; Yang, X.; Jiang, Y.; Xia, S.T. Multinomial random forest. Pattern Recognit. 2022, 122, 108331.

41. Ge, Z.; Liu, S.; Wang, F.; Li, Z.; Sun, J. YOLOX: Exceeding YOLO series in 2021. arXiv 2021, arXiv:2107.08430.

42. Howard, A.; Sandler, M.; Chu, G.; Chen, L.C.; Chen, B.; Tan, M.; Wang, W.; Zhu, Y.; Pang, R.; Vasudevan, V.; et al. Searching for MobileNetV3. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1314–1324.

43. Wu, Y.H.; Liu, Y.; Xu, J.; Bian, J.W.; Gu, Y.C.; Cheng, M.M. MobileSal: Extremely efficient RGB-D salient object detection. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 10261–10269.

44. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

| | 0–2 | 4–6 | 8–13 | 15–20 | 25–32 | 38–43 | 48–53 | 60− | Total |
|---|---|---|---|---|---|---|---|---|---|
| Male | 745 | 928 | 934 | 734 | 2308 | 1294 | 392 | 442 | 8192 |
| Female | 682 | 1234 | 1360 | 919 | 2589 | 1056 | 433 | 427 | 9411 |
| Both | 1427 | 2162 | 2294 | 1653 | 4897 | 2350 | 825 | 869 | 17,603 |

| Features | SVM (Gender/Age) | DRF (Gender/Age) |
|---|---|---|
| MMFL | 92.35/55.24 | 93.48/63.72 |
| Gabor [10] | 82.61/42.72 | 82.45/48.62 |
| LBP [9] | 84.52/41.47 | 85.06/47.67 |
| BIF [11] | 83.48/44.06 | 83.67/50.61 |
| Plain CNN [2] | 86.83/50.75 | 87.14/55.32 |
| ResNet50 [44] | 88.21/51.58 | 89.84/58.05 |

| Methods | Accuracy on MORPH-II | Accuracy on Adience |
|---|---|---|
| plain CNN | 98.7 | 86.8 |
| RoR | 99.5 | 92.43 |
| CNN-ELM | 98.5 | 88.2 |
| Ours | 99.6 | 93.48 |

| Methods | Accuracy on MORPH-II | Accuracy on Adience |
|---|---|---|
| plain CNN | 89.15 | 50.7 |
| RoR | 94.86 | 62.34 |
| CNN-ELM | 92.58 | 52.3 |
| Ours | 96.14 | 63.72 |

| | Group1: 16–30 | Group2: 31–45 | Group3: 46–60+ |
|---|---|---|---|
| Group1: 16–30 | 97.8 | 1.4 | 0.8 |
| Group2: 31–45 | 1.8 | 96.6 | 1.6 |
| Group3: 46–60+ | 3.2 | 2.78 | 94.02 |
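As a quick consistency check on the three-group matrix above, assume each row is normalized to 100% over one true age group (an assumption, since the table carries no caption here; the rows do each sum to 100). The unweighted mean of the diagonal is then:

$$
\frac{97.8 + 96.6 + 94.02}{3} = \frac{288.42}{3} = 96.14
$$

This agrees with the 96.14 listed for "Ours" on MORPH-II in the accuracy table above, which suggests that the reported figure is a mean per-class accuracy.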

| | 0–2 | 4–6 | 8–13 | 15–20 | 25–32 | 38–43 | 48–53 | 60− |
|---|---|---|---|---|---|---|---|---|
| 0–2 | 66.90 | 24.35 | 8.50 | 0.25 | 0 | 0 | 0 | 0 |
| 4–6 | 21.44 | 63.03 | 14.36 | 1.17 | 0 | 0 | 0 | 0 |
| 8–13 | 2.57 | 15.36 | 62.97 | 18.76 | 0.34 | 0 | 0 | 0 |
| 15–20 | 0 | 0.79 | 16.56 | 64.20 | 15.97 | 2.48 | 0 | 0 |
| 25–32 | 0 | 0 | 0.74 | 13.73 | 65.35 | 19.15 | 1.03 | 0 |
| 38–43 | 0 | 0 | 0 | 0.8 | 18.38 | 61.78 | 17.46 | 1.58 |
| 48–53 | 0 | 0 | 0 | 1.82 | 3.46 | 15.28 | 60.29 | 19.15 |
| 60− | 0 | 0 | 0 | 0.44 | 4.53 | 10.63 | 19.16 | 65.24 |
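The eight-group matrix above can be summarized with a few lines of NumPy. The sketch below assumes rows are true age groups and columns are predictions, each row normalized to 100% (an assumption; the rows do sum to 100). It computes the mean per-class accuracy and the "one-off" accuracy often used on Adience, where a prediction in an adjacent age group also counts as correct. The one-off figure is derived here from the table and is not a result stated in this section; the helper name `one_off_accuracy` is hypothetical.

```python
import numpy as np

# Row-normalized confusion matrix (%) copied from the table above.
# Assumption: rows are true age groups, columns are predicted groups.
confusion = np.array([
    [66.90, 24.35,  8.50,  0.25,  0.00,  0.00,  0.00,  0.00],
    [21.44, 63.03, 14.36,  1.17,  0.00,  0.00,  0.00,  0.00],
    [ 2.57, 15.36, 62.97, 18.76,  0.34,  0.00,  0.00,  0.00],
    [ 0.00,  0.79, 16.56, 64.20, 15.97,  2.48,  0.00,  0.00],
    [ 0.00,  0.00,  0.74, 13.73, 65.35, 19.15,  1.03,  0.00],
    [ 0.00,  0.00,  0.00,  0.80, 18.38, 61.78, 17.46,  1.58],
    [ 0.00,  0.00,  0.00,  1.82,  3.46, 15.28, 60.29, 19.15],
    [ 0.00,  0.00,  0.00,  0.44,  4.53, 10.63, 19.16, 65.24],
])

# Mean per-class (exact) accuracy: average of the diagonal entries.
exact = np.trace(confusion) / confusion.shape[0]

def one_off_accuracy(cm):
    """Mean share of predictions within one age group of the true group."""
    idx = np.arange(cm.shape[0])
    near = np.abs(idx[:, None] - idx[None, :]) <= 1  # diagonal and neighbours
    return (cm * near).sum() / cm.shape[0]

one_off = one_off_accuracy(confusion)
print(f"{exact:.2f} {one_off:.2f}")  # prints "63.72 94.86"
```

The exact figure matches the 63.72 listed for the proposed method on Adience in the tables above, while the much higher one-off value reflects that most errors fall into neighbouring age groups.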

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Liao, H.; Yuan, L.; Wu, M.; Zhong, L.; Jin, G.; Xiong, N. Face Gender and Age Classification Based on Multi-Task, Multi-Instance and Multi-Scale Learning. Appl. Sci. 2022, 12, 12432. https://doi.org/10.3390/app122312432
