Smartphone-Based Photogrammetry Assessment in Comparison with a Compact Camera for Construction Management Applications

Abstract

Featured Application

1. Introduction

2. Materials and Methods

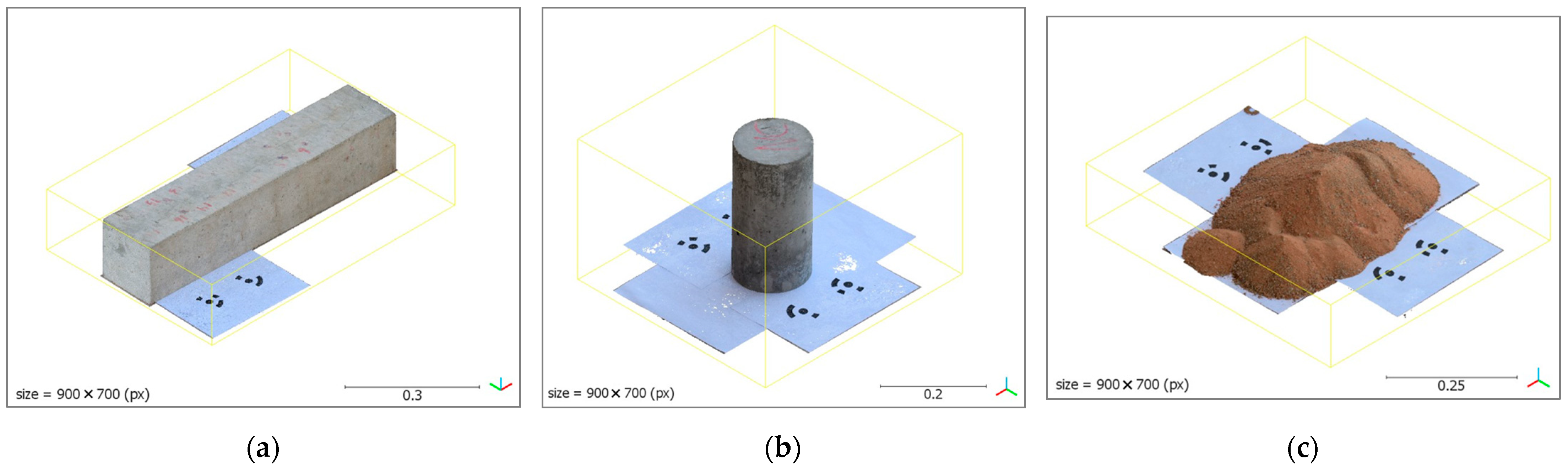

2.1. Data Acquisition

2.2. Image Processing

2.2.1. Features Detection and Matching

2.2.2. BBA and Camera Self-Calibration

2.2.3. Multi-View Stereo (MVS)

2.2.4. Meshing and Texture Mapping Algorithms

2.3. Scaling 3D Data

2.4. Extracting Geometrical Measurements

3. Results and Discussion

3.1. Lens Distortion

3.2. Images Alignment and 3D Reconstruction

- Number of projections: the total number of projections from all overlapping images that were used to compute and reconstruct the matched points. This number increases with the number of points successfully matched and reconstructed; the relationship between the two is captured by the tie multiplicity parameter.

- Tie multiplicity (i.e., image redundancy): the average number of projections, or images, that contribute to calculating a given 3D point. It can be estimated by the following ratio:

  $$\bar{m} = \frac{\sum_{i=1}^{N} n_i}{N}$$

  where $n_i$ is the number of projections used to reconstruct point $i$, and $N$ is the total number of reconstructed points (i.e., sparse cloud size). An average tie multiplicity of 2.396 indicates that, on average, 2.396 images were used to triangulate each 3D point in the bundle adjustment step. Higher multiplicity values suggest greater reliability of the computed 3D points, because the positional error of a 3D point tends to decrease as more images contribute to its construction. Nevertheless, if the reprojection error associated with a given image is high relative to the other contributing images, it will result in a higher positional error. Therefore, the tie multiplicity value by itself is not sufficient to judge the reliability of the computed 3D points.

- RMS reprojection error: the root mean square of the normalized reprojection error (d), illustrated in Figure 8. A tie point is reconstructed as a 3D point by triangulating its corresponding 2D points from all the images that share it. Once the 3D point is reconstructed, it is reprojected onto each image that contributed to its reconstruction. The reprojected position on an image does not perfectly match the actual position of the original 2D point on the same image. The Euclidean distance between the two positions (i.e., actual and reprojected) in the image plane is the reprojection error (d) in that image, Equation (8).
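The two quality metrics described above can be illustrated with a minimal Python sketch. It is not the authors' processing pipeline or the photogrammetry software's internal implementation: the function names and the toy input values are hypothetical, and the reprojection error is computed as a plain pixel distance, without the normalization mentioned in the text.

```python
import math


def tie_multiplicity(projections_per_point):
    """Average number of projections (images) per reconstructed 3D point.

    projections_per_point: one integer per 3D point, giving how many
    images contributed to that point (hypothetical input format).
    """
    return sum(projections_per_point) / len(projections_per_point)


def rms_reprojection_error(observed, reprojected):
    """Root mean square of Euclidean distances between observed 2D points
    and the reprojections of their 3D points, in pixels.

    Assumption: no normalization is applied to the distances.
    """
    squared = [
        (xo - xr) ** 2 + (yo - yr) ** 2
        for (xo, yo), (xr, yr) in zip(observed, reprojected)
    ]
    return math.sqrt(sum(squared) / len(squared))


# Hypothetical toy data, not taken from the study.
counts = [2, 3, 2, 4, 2]
observed = [(100.0, 200.0), (150.0, 80.0), (300.0, 40.0)]
reprojected = [(100.3, 200.4), (150.0, 80.0), (299.4, 40.8)]

print(tie_multiplicity(counts))
print(rms_reprojection_error(observed, reprojected))
```

Because the RMS squares each distance before averaging, a single image with a large reprojection error raises the result noticeably, which is consistent with the remark that multiplicity alone does not establish reliability.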

3.3. Sparse Point Cloud

3.4. Dense Point Cloud

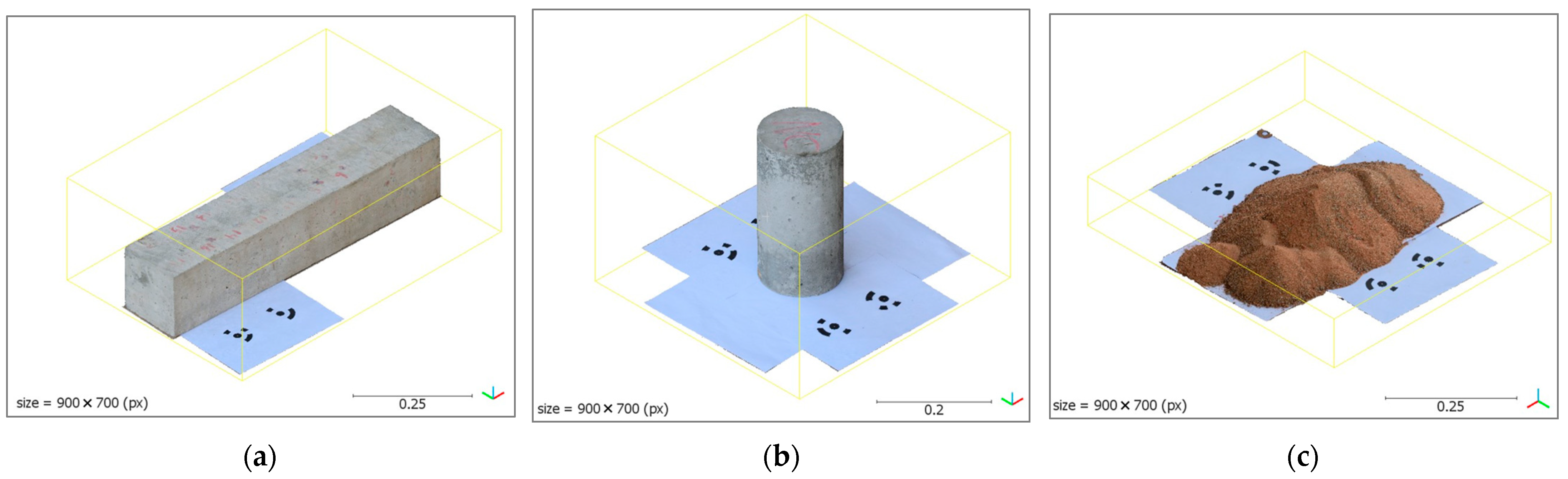

3.5. Polygonal and Textured Models

3.6. Scale Bars

3.7. Geometrical Measurements Extraction

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Specification | Digital Camera (Nikon-D 3300) | Smartphone (Huawei Mate 10 Lite) |
|---|---|---|
| Camera Model | D-3300 | RNE-L21 |
| Sensor Type | CMOS | BSI-CMOS |
| Sensor Size | APS-C (23.5 mm × 15.6 mm) | ~1/2.9″ (4.8 mm × 3.6 mm) |
| Camera Resolution | 24 MP, 6000 × 4000 (3:2) | Dual 16 MP + 2 MP, 4608 × 3456 (4:3) |
| Pixel Size | 4.04 μm | ~1.06 μm |
| Focal Length (35 mm equivalent) | 18–55 mm | 27 mm |
| Crop Factor | 1.43 | 6.75 |
| Native ISO | 100–12,800 | 50–3200 |
| Aperture | f/3.5–f/5.6 | f/1.6–f/2.2 |
| Image Format | RAW (NEF), JPEG | JPG |

| Specimen | Capturing Device | Number of Images | Image Quality | Covered Area (m²) |
|---|---|---|---|---|
| Square Beam | DC | 43 | 0.842 | 0.337 |
| Square Beam | SP | 43 | 0.894 | 0.474 |
| Cylinder | DC | 24 | 0.835 | 0.409 |
| Cylinder | SP | 24 | 0.886 | 0.546 |
| Sand Pile | DC | 40 | 0.862 | 0.442 |
| Sand Pile | SP | 40 | 0.893 | 0.504 |

DC: digital camera; SP: smartphone.

| Capturing Parameters | Digital Camera (Nikon-D 3300) | Smartphone (Huawei Mate 10 Lite) |
|---|---|---|
| Focal Length, mm (35 mm equivalent) | 35 (50) | 4 (27) |
| ISO | 400 | 50 |
| F-stop | f/8 | f/2.2 |
| Shutter Speed (s) | 1/250 | 1/215 |
| Image Format | RAW (NEF): TIFF-16bit | JPG |

| Component | Specification |
|---|---|
| Operating System | Windows 64 bit |
| RAM | 15.92 GB |
| CPU | Intel(R) Core(TM) i7-7700HQ CPU @ 2.80 GHz |
| GPUs | GeForce GTX 1050; Intel(R) HD Graphics 630 |

| Specimen | Capturing Device | N° of Points Detected | N° of Points Matched | N° of Projections | Average Multiplicity | RMSE (pix) |
|---|---|---|---|---|---|---|
| Square Beam | DC | 226,189 | 194,535 | 457,680 | 2.396 | 0.607 |
| Square Beam | SP | 199,752 | 84,572 | 210,912 | 2.472 | 1.2 |
| Cylinder | DC | 107,530 | 91,634 | 229,964 | 2.531 | 0.79 |
| Cylinder | SP | 129,469 | 82,189 | 232,109 | 2.768 | 1.4 |
| Sand Pile | DC | 132,618 | 115,289 | 273,129 | 2.466 | 0.656 |
| Sand Pile | SP | 143,124 | 78,321 | 211,129 | 2.561 | 1.17 |

| Specimen | Capturing Device | Cloud Size (N° of 3D Points) | Point Size (pix) | Point Color | Processing Time (min) | Memory Usage (GB) |
|---|---|---|---|---|---|---|
| Beam | DC | 194,535 | 4.57 | 3B, U16 | 5.62 | 2.01 |
| Beam | SP | 84,572 | 4.54 | 3B, U8 | 6.03 | 1.33 |
| Cylinder | DC | 91,634 | 4.86 | 3B, U16 | 3.83 | 1.67 |
| Cylinder | SP | 82,189 | 4.57 | 3B, U8 | 4.12 | 1.17 |
| Sand Pile | DC | 115,289 | 4.18 | 3B, U16 | 6.58 | 1.72 |
| Sand Pile | SP | 78,321 | 4.37 | 3B, U8 | 6.26 | 1.37 |

| Specimen | Capturing Device | Cloud Size (N° of Points) | N° of Depth Maps | Depth Maps: Time (min) | Depth Maps: Memory Usage (GB) | Dense Cloud: Time (min) | Dense Cloud: Memory Usage (GB) |
|---|---|---|---|---|---|---|---|
| Beam | DC | 17,756,572 | 43 | 7.85 | 3.26 | 8.97 | 4 |
| Beam | SP | 6,115,497 | 43 | 4.32 | 1.59 | 4.85 | 3.12 |
| Cylinder | DC | 14,542,288 | 24 | 4.18 | 3.01 | 2.83 | 3.55 |
| Cylinder | SP | 5,197,385 | 24 | 3.35 | 1.42 | 2.05 | 2.62 |
| Sand Pile | DC | 3,600,957 | 33 | 3.08 | 3.12 | 4.23 | 3.34 |
| Sand Pile | SP | 1,246,089 | 35 | 2.32 | 1.49 | 3.18 | 2.58 |

| Specimen | Capturing Device | N° of Faces | N° of Vertices | Processing Time (min) | Memory Usage (GB) |
|---|---|---|---|---|---|
| Beam | DC | 3,366,273 | 1,685,657 | 16.98 | 8.96 |
| Beam | SP | 1,025,557 | 514,162 | 7.43 | 5.61 |
| Cylinder | DC | 2,762,640 | 1,383,752 | 9.97 | 8.48 |
| Cylinder | SP | 1,039,477 | 521,347 | 3.18 | 3.06 |
| Sand Pile | DC | 240,062 | 121,109 | 6.64 | 7.24 |
| Sand Pile | SP | 78,999 | 40,619 | 2.56 | 2.57 |

| Specimen | Capturing Device | Texture Size (pix) | Color | UV Mapping: Time (min) | UV Mapping: Memory Usage (GB) | Texture Blending: Time (min) | Texture Blending: Memory Usage (GB) |
|---|---|---|---|---|---|---|---|
| Beam | DC | 4096 | 4B, U16 | 6.48 | 3.11 | 6.73 | 7.89 |
| Beam | SP | 4096 | 4B, U8 | 5.14 | 2.57 | 0.7 | 1.55 |
| Cylinder | DC | 4096 | 4B, U16 | 6.38 | 2.93 | 3.58 | 8.16 |
| Cylinder | SP | 4096 | 4B, U8 | 5.48 | 2.64 | 0.47 | 1.64 |
| Sand Pile | DC | 4096 | 4B, U16 | 5.23 | 2.56 | 2.79 | 7.95 |
| Sand Pile | SP | 4096 | 4B, U8 | 4.34 | 2.38 | 0.35 | 1.6 |

| Specimen | Capturing Device | Scale Bar 1 Error (cm) | Scale Bar 2 Error (cm) |
|---|---|---|---|
| Beam | DC | 0.0008 | −0.0004 |
| Beam | SP | −0.0037 | 0.0037 |
| Cylinder | DC | −0.0009 | 0.0012 |
| Cylinder | SP | −0.0053 | 0.0053 |
| Sand Pile | DC | 0.0011 | −0.0003 |
| Sand Pile | SP | 0.0026 | 0.0026 |

| Specimen | Capturing Device | Surface Area: Actual (cm²) | Surface Area: Estimated (cm²) | Surface Area: Error (%) | Volume: Actual (cm³) | Volume: Estimated (cm³) | Volume: Error (%) |
|---|---|---|---|---|---|---|---|
| Beam | DC | 5058.56 | 5104.76 | 0.91 | 17,466.62 | 17,723 | 1.47 |
| Beam | SP | 5058.56 | 5154.04 | 1.89 | 17,466.62 | 17,977 | 2.92 |
| Cylinder | DC | 1767.14 | 1774.97 | 0.44 | 5301.43 | 5282 | 0.37 |
| Cylinder | SP | 1767.14 | 1775.98 | 0.50 | 5301.43 | 5337 | 0.67 |
| Sand Pile | DC | – | 3507.68 | – | 5490 | 5362 | 2.33 |
| Sand Pile | SP | – | 3558.57 | – | 5490 | 5315 | 3.19 |
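The Error (%) columns in the table above appear consistent with an absolute relative error between the actual and estimated values. This is inferred from the tabulated numbers rather than from a formula stated in the text, so the short Python sketch below should be read as an illustration under that assumption; the function name `percent_error` is hypothetical.

```python
def percent_error(actual, estimated):
    """Absolute relative error of an estimate, expressed in percent.

    Assumption: error = |estimated - actual| / actual * 100.
    """
    return abs(estimated - actual) / actual * 100.0


# Values copied from the table (beam specimen, digital camera).
beam_area_error = percent_error(5058.56, 5104.76)     # surface area, cm^2
beam_volume_error = percent_error(17466.62, 17723.0)  # volume, cm^3

print(round(beam_area_error, 2), round(beam_volume_error, 2))  # 0.91 1.47
```

Applying the same function to the smartphone rows gives the larger errors listed for that device, for example the beam volume estimate of 17,977 cm³ against the actual 17,466.62 cm³.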

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Saif, W.; Alshibani, A. Smartphone-Based Photogrammetry Assessment in Comparison with a Compact Camera for Construction Management Applications. Appl. Sci. 2022, 12, 1053. https://doi.org/10.3390/app12031053
