Implementation of Robots Integration in Scaled Laboratory Environment for Factory Automation

Abstract

1. Introduction

2. State of the Art

2.1. Mobile Robots for Factory Automation

- Online computation and decision making, which are required to avoid unsafe situations and to let the algorithms run alongside other processes on the system without monopolizing CPU time.

- Ability to cope with dynamic environments, illumination changes, and repetitive environments.

- Low-drift odometry, which provides information about the robot's position when the robot cannot localize itself on the map. Until the SLAM algorithm localizes again, odometry drift should be minimized so that the system still receives accurate position information and navigation remains possible.
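The odometry fallback described in the last item can be sketched as follows. This is a minimal illustration, not the implementation used on the robot: the `Pose2D` type, the function names, and the first-order (Euler) integration of body-frame velocities are all assumptions made for the example.

```python
import math
from dataclasses import dataclass
from typing import Optional


@dataclass
class Pose2D:
    x: float      # metres, map frame
    y: float      # metres, map frame
    theta: float  # radians, map frame


def integrate_odometry(pose: Pose2D, vx: float, vy: float, wz: float, dt: float) -> Pose2D:
    """Dead-reckon one time step from body-frame velocities (vx, vy) and yaw rate wz."""
    cos_t, sin_t = math.cos(pose.theta), math.sin(pose.theta)
    new_theta = pose.theta + wz * dt
    return Pose2D(
        # Rotate the body-frame velocity into the map frame before integrating.
        x=pose.x + (vx * cos_t - vy * sin_t) * dt,
        y=pose.y + (vx * sin_t + vy * cos_t) * dt,
        # Wrap the heading to (-pi, pi].
        theta=math.atan2(math.sin(new_theta), math.cos(new_theta)),
    )


def update_pose(pose: Pose2D, slam_pose: Optional[Pose2D],
                vx: float, vy: float, wz: float, dt: float) -> Pose2D:
    """Prefer the SLAM estimate; fall back to odometry while localization is lost."""
    if slam_pose is not None:
        return slam_pose
    return integrate_odometry(pose, vx, vy, wz, dt)
```

Because every fallback step adds the odometry error to the previous estimate, the error grows for as long as `slam_pose` stays `None`, which is why low drift matters during localization outages.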

2.2. Industrial Robots and Tools

2.3. Integration of Cloud-Enabled Robot Systems

- Environment perception as the vital ability of a system to build knowledge about its surroundings. Collecting information about the structure of the environment and the location of obstacles gives robots the ability to predict their future states. The environment perception task usually involves infrared (IR) sensors, light detection and ranging (LiDAR) sensors, cameras, etc., and often fuses information from these devices.

- Localization as a capability of robots to estimate their position and orientation with respect to the environment.

- Navigation includes the previous two tasks and combines them with an effective planning system. Usually, this task is solved by engaging processes of map building and localization simultaneously, i.e., simultaneous localization and mapping (SLAM) [27].
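As a small illustration of how perception and localization combine, the sketch below projects planar range readings into the map frame using the current pose estimate. It assumes a 2D scan described by a start angle and a fixed angular step, and a sensor mounted at the robot origin; the function name and parameters are hypothetical and not taken from any package used in this work.

```python
import math
from typing import List, Sequence, Tuple


def scan_to_map_points(
    pose: Tuple[float, float, float],   # (x, y, theta) of the robot in the map frame
    ranges: Sequence[float],            # measured distances, one per beam, in metres
    angle_min: float,                   # angle of the first beam in the robot frame
    angle_increment: float,             # angular step between consecutive beams
    max_range: float,                   # readings at or beyond this are discarded
) -> List[Tuple[float, float]]:
    """Return obstacle points in the map frame for all valid beams."""
    x, y, theta = pose
    points = []
    for i, r in enumerate(ranges):
        # The chained comparison also rejects inf and NaN readings.
        if not (0.0 < r < max_range):
            continue
        beam_angle = theta + angle_min + i * angle_increment
        points.append((x + r * math.cos(beam_angle), y + r * math.sin(beam_angle)))
    return points
```

Points produced this way are only as accurate as the pose estimate, which is one reason mapping and localization are usually solved together.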

- With the increased computational power and storage space of the cloud infrastructure, computation-intensive tasks (computer vision, speech recognition, object recognition, etc.) can be performed in real time.

- The infrastructure can hold large datasets, such as global maps, so that an individual robot can navigate with improved safety and efficiency.

- Cloud (server) layer that holds the RoboEarth database containing a global world model with information about the objects, environments, actions, etc.

- Hardware-independent middle layer that serves as a bridge between global knowledge and robot-specific skills. This layer contains generic components as a part of the local robot’s software.

- The layer that represents the robot’s specific skills.

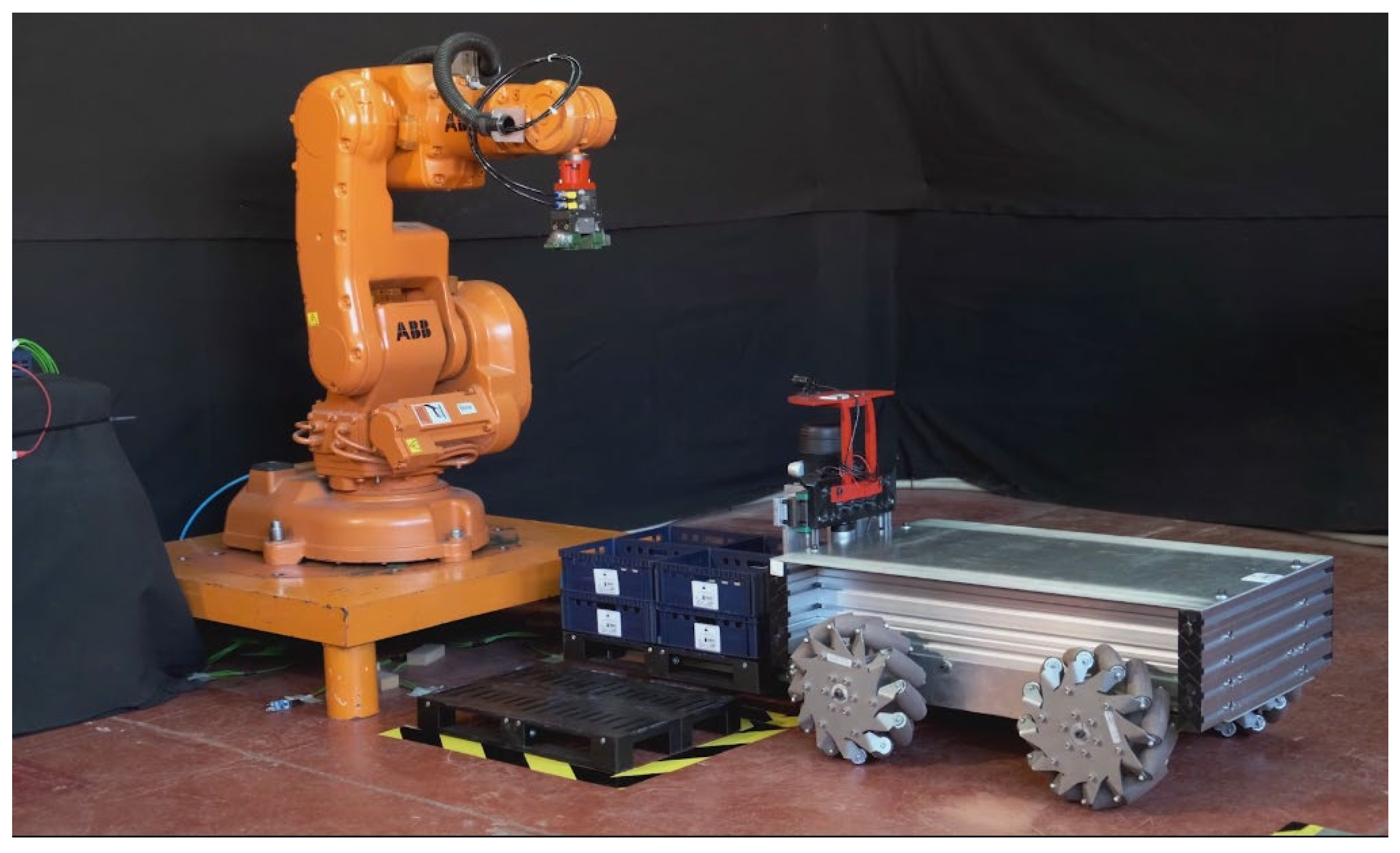

3. Mobile Robot and Industrial Robot Design

3.1. Hardware of Mobile Robot

3.2. Software of Mobile Robot

- The RTAB-SLAM ROS package provides the system (mobile robot) with both a map of the environment and the robot's localization.

- The TEB ROS package provides a path for a robot to follow, based on a map and odometry from the RTAB-SLAM package.

- The RoboClaw ROS package enables integration of the motor drivers with the rest of the ROS system.
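The data flow between these three packages can be pictured with a small in-process publish/subscribe sketch. It is not ROS code: the `Bus` class, the topic names `pose` and `cmd_vel`, and the proportional go-to-goal rule that stands in for the TEB planner are all assumptions chosen to keep the example self-contained.

```python
import math
from collections import defaultdict
from typing import Callable, List, Tuple


class Bus:
    """Tiny stand-in for topic-based messaging between nodes."""

    def __init__(self) -> None:
        self._subscribers = defaultdict(list)

    def subscribe(self, topic: str, callback: Callable) -> None:
        self._subscribers[topic].append(callback)

    def publish(self, topic: str, msg) -> None:
        for callback in self._subscribers[topic]:
            callback(msg)


def make_planner(bus: Bus, goal: Tuple[float, float], max_speed: float = 0.5) -> None:
    """Placeholder planner: turns each pose into a body-frame velocity command."""

    def on_pose(pose: Tuple[float, float, float]) -> None:
        x, y, theta = pose
        dx, dy = goal[0] - x, goal[1] - y
        dist = math.hypot(dx, dy)
        if dist < 1e-6:
            bus.publish("cmd_vel", (0.0, 0.0))  # goal reached, stop
            return
        speed = min(max_speed, dist)
        # Rotate the map-frame direction to the goal into the robot frame.
        vx = speed * (dx * math.cos(theta) + dy * math.sin(theta)) / dist
        vy = speed * (-dx * math.sin(theta) + dy * math.cos(theta)) / dist
        bus.publish("cmd_vel", (vx, vy))

    bus.subscribe("pose", on_pose)


def make_motor_driver(bus: Bus, sent: List[Tuple[float, float]]) -> None:
    """Placeholder motor driver: records the commands it would forward to the motors."""
    bus.subscribe("cmd_vel", sent.append)
```

In this picture, the localization component publishes on `pose`, the planner reacts with a velocity command, and the motor driver consumes that command, mirroring the chain of packages listed above.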

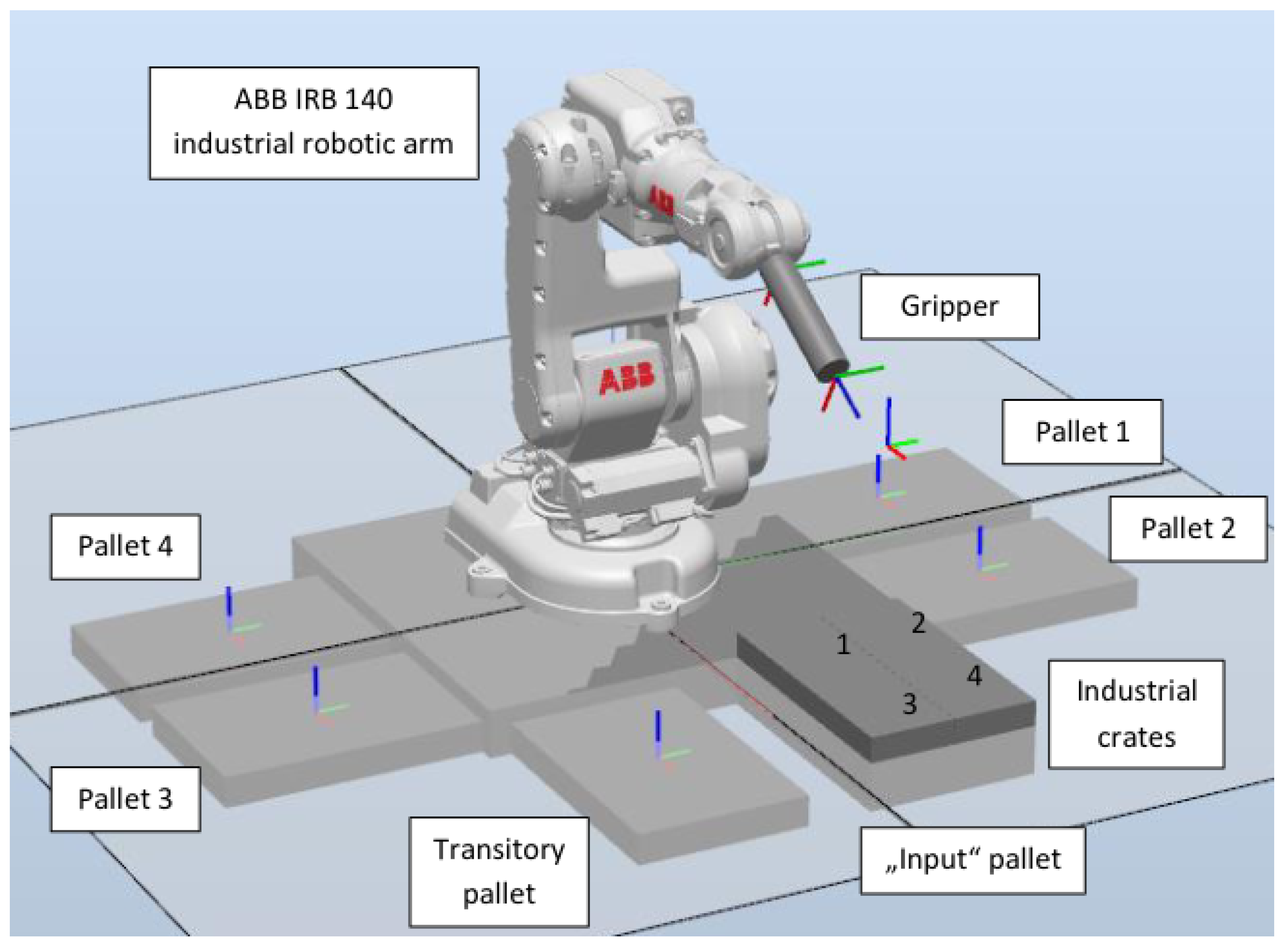

3.3. Industrial Robot and Environment Setup

3.4. Software of Industrial Robot

- abb_driver, which enables communication between a personal computer and the ABB IRC5 industrial robot controller for robot control. The exchanged messages contain information about the condition of the robot, such as the position of the robot’s wrists.

- paletizer, a package developed for sorting/palletizing industrial crates and for communicating with the rest of the system, e.g., OPIL, from which it receives palletization commands and to which it reports the state of the task.

- abb_irb140_unal, the package that provides the physical description of the robot, such as the URDF and SRDF files.
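The role of a palletizing module, receiving a command, placing crates, and reporting task state, can be sketched as below. This is a hypothetical illustration and not the paletizer package itself: the grid layout, the crate dimensions passed as parameters, the `TaskState` values, and the report format are assumptions.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Dict, List, Optional


class TaskState(Enum):
    IDLE = "idle"
    RUNNING = "running"
    DONE = "done"


@dataclass(frozen=True)
class Slot:
    x: float  # crate centre along the pallet length, metres
    y: float  # crate centre along the pallet width, metres
    z: float  # height of the crate's base above the pallet, metres


def pallet_slots(rows: int, cols: int, layers: int,
                 crate_l: float, crate_w: float, crate_h: float,
                 gap: float = 0.0) -> List[Slot]:
    """Enumerate placement positions layer by layer, row by row."""
    return [
        Slot(x=c * (crate_l + gap) + crate_l / 2,
             y=r * (crate_w + gap) + crate_w / 2,
             z=layer * crate_h)
        for layer in range(layers)
        for r in range(rows)
        for c in range(cols)
    ]


class Palletizer:
    def __init__(self, slots: List[Slot]) -> None:
        self._slots = list(slots)
        self._placed = 0
        self.state = TaskState.IDLE

    def start(self) -> None:
        """Handle an incoming palletization command."""
        self._placed = 0
        self.state = TaskState.RUNNING if self._slots else TaskState.DONE

    def place_next(self) -> Optional[Slot]:
        """Return the next target slot, or None if no task is running."""
        if self.state is not TaskState.RUNNING:
            return None
        slot = self._slots[self._placed]
        self._placed += 1
        if self._placed == len(self._slots):
            self.state = TaskState.DONE
        return slot

    def report(self) -> Dict[str, object]:
        """Status message that would be sent back to the coordinating system."""
        return {"state": self.state.value, "placed": self._placed, "total": len(self._slots)}
```

Filling lower layers first keeps each crate supported by the ones beneath it; a real module would additionally convert each slot into a robot target pose and handle motion failures.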

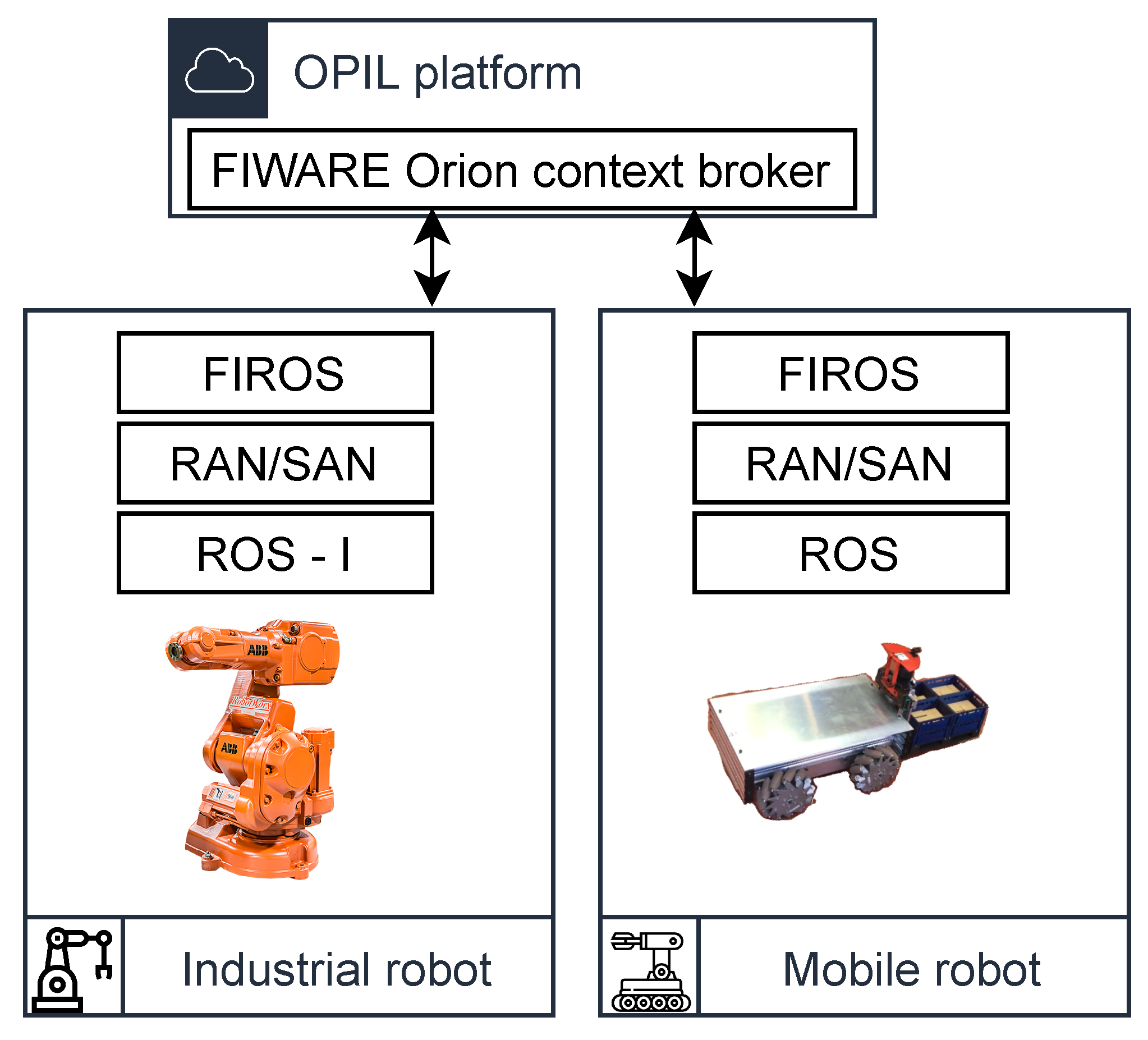

4. System Integration and Experiment

- OPIL server as a cloud infrastructure responsible for hosting the modules, such as the task planner, context management, HMI (human–machine interface), and SP (sensing and perception).

- Different nodes in the field, including mobile robots, AGVs, forklifts and sensors.

- Robotic agent nodes (RAN) as nodes responsible for dealing with the physical actors. In the OPIL world, these can be manipulation agents (intended for loading and unloading goods and products) or moving agents (intended for moving goods or products from one place to another).

- Human agent nodes (HAN) as nodes in charge of interfacing with humans.

- Sensor agent nodes (SAN), i.e., nodes that allow data transfer from various sensing sources to the cloud.
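The node taxonomy above can be captured in a small data model. The sketch below is illustrative only: the class names, the `busy` flag, and the first-free assignment rule are assumptions and do not reflect OPIL's actual interfaces or its task planner.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Optional


class NodeKind(Enum):
    RAN = "robotic agent node"
    HAN = "human agent node"
    SAN = "sensor agent node"


class AgentRole(Enum):
    MANIPULATION = "manipulation"  # loading and unloading goods
    MOVING = "moving"              # transporting goods between places


@dataclass
class AgentNode:
    name: str
    kind: NodeKind
    role: Optional[AgentRole] = None  # only meaningful for RAN nodes
    busy: bool = False


def assign(nodes: List[AgentNode], role: AgentRole) -> Optional[AgentNode]:
    """Reserve the first free robotic agent node with the requested role."""
    for node in nodes:
        if node.kind is NodeKind.RAN and node.role is role and not node.busy:
            node.busy = True
            return node
    return None
```

For example, with one mobile robot registered as a moving agent and one arm registered as a manipulation agent, a transport task would go to the mobile robot, and a second transport task would wait until that robot is free again.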

Algorithm 1. Goal and task reading.

Algorithm 2. Sorting/palletizing module realization.

Algorithm 3. Communication with OPIL module.

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Huang, S.; Dissanayake, G. Robot Localization: An Introduction. In Wiley Encyclopedia of Electrical and Electronics Engineering; John Wiley & Sons, Ltd.: Hoboken, NJ, USA, 2016; pp. 1–10.

- Wong, C.C.; Yeh, L.Y.; Liu, C.C.; Tsai, C.Y.; Aoyama, H. Manipulation Planning for Object Re-Orientation Based on Semantic Segmentation Keypoint Detection. Sensors 2021, 21, 2280.

- Rezende, A.M.; Gonçalves, V.M.; Pimenta, L.C. Safe coordination of robots in cyclic paths. ISA Trans. 2021, 109, 126–140.

- Pinkam, N.; Bonnet, F.; Chong, N.Y. Robot collaboration in warehouse. In Proceedings of the 2016 16th International Conference on Control, Automation and Systems (ICCAS), Gyeongju, Korea, 16–19 October 2016; pp. 269–272.

- Pires, J.N.; Costa, J.; d’Souza, F. Development of a solution for adding a collaborative robot to an industrial AGV. Ind. Robot. 2020, 47, 723–735.

- Kaliappan, S.; Lokesh, J.; Mahaneesh, P.; Siva, M. Mechanical Design and Analysis of AGV for Cost Reduction of Material Handling in Automobile Industries. Int. Res. J. Automot. Technol. 2018, 1, 1–7.

- Doroftei, I.; Grosu, V.; Spinu, V. Omnidirectional Mobile Robot – Design and Implementation. In Bioinspiration and Robotics; Habib, M.K., Ed.; IntechOpen: Rijeka, Croatia, 2007; Chapter 29.

- Diegel, O.; Badve, A.; Bright, G.; Potgieter, J.; Tlale, S. Improved Mecanum Wheel Design for Omni-directional Robots. In Proceedings of the Australasian Conference on Robotics and Automation, Wellington, New Zealand, 3–5 December 2012.

- Saha, S.K.; Angeles, J.; Darcovich, J. The design of kinematically isotropic rolling robots with omnidirectional wheels. Mech. Mach. Theory 1995, 30, 1127–1137.

- Grisetti, G.; Stachniss, C.; Burgard, W. Improved Techniques for Grid Mapping With Rao-Blackwellized Particle Filters. IEEE Trans. Robot. 2007, 23, 34–46.

- Kohlbrecher, S.; von Stryk, O.; Meyer, J.; Klingauf, U. A flexible and scalable SLAM system with full 3D motion estimation. In Proceedings of the 2011 IEEE International Symposium on Safety, Security, and Rescue Robotics, Kyoto, Japan, 1–5 November 2011; pp. 155–160.

- Newman, P.; Ho, K. SLAM-loop closing with visually salient features. In Proceedings of the 2005 IEEE International Conference on Robotics and Automation, Barcelona, Spain, 18–22 April 2005; pp. 635–642.

- Mur-Artal, R.; Tardós, J.D. ORB-SLAM2: An Open-Source SLAM System for Monocular, Stereo, and RGB-D Cameras. IEEE Trans. Robot. 2017, 33, 1255–1262.

- Galvez-López, D.; Tardos, J.D. Bags of Binary Words for Fast Place Recognition in Image Sequences. IEEE Trans. Robot. 2012, 28, 1188–1197.

- Labbé, M.; Michaud, F. RTAB-Map as an open-source lidar and visual simultaneous localization and mapping library for large-scale and long-term online operation. J. Field Robot. 2019, 36, 416–446.

- Fox, D.; Burgard, W.; Thrun, S. The dynamic window approach to collision avoidance. IEEE Robot. Autom. Mag. 1997, 4, 23–33.

- Quinlan, S.; Khatib, O. Elastic bands: Connecting path planning and control. In Proceedings of the IEEE International Conference on Robotics and Automation, Atlanta, GA, USA, 2–6 May 1993; Volume 2, pp. 802–807.

- Rösmann, C.; Hoffmann, F.; Bertram, T. Timed-Elastic-Bands for Time-Optimal Point-to-Point Nonlinear Model Predictive Control. In Proceedings of the 2015 European Control Conference (ECC), Linz, Austria, 15–17 July 2015; pp. 3352–3357.

- Blanes, C.; Mellado, M.; Beltran, P. Novel Additive Manufacturing Pneumatic Actuators and Mechanisms for Food Handling Grippers. Actuators 2014, 3, 205–225.

- Mangan, E.V.; Kingsley, D.A.; Quinn, R.D.; Sutton, G.P.; Mansour, J.M.; Chiel, H.J. A biologically inspired gripping device. Ind. Robot. Int. J. 2005, 32, 49–54.

- Muscato, G.; Prestifilippo, M.; Abbate, N.; Rizzuto, I. A prototype of an orange picking robot: Past history, the new robot and experimental results. Ind. Robot. Int. J. 2005, 32, 128–138.

- Ali, M.H.; Zhanabayev, A.; Khamzhin, S.; Mussin, K. Biologically Inspired Gripper Based on the Fin Ray Effect. In Proceedings of the 2019 5th International Conference on Control, Automation and Robotics (ICCAR), Beijing, China, 19–22 April 2019; pp. 865–869.

- Inaba, M. Remote-brained robotics: Interfacing ai with real world behaviors. In Proceedings of the 6th International Symposium of Robotics Research, Puerto Varas, Chile, 11–14 December 2017; pp. 335–344.

- Kamei, K.; Nishio, S.; Hagita, N.; Sato, M. Cloud networked robotics. IEEE Netw. 2012, 26, 28–34.

- Sanfeliu, A.; Hagita, N.; Saffiotti, A. Network robot systems. Robot. Auton. Syst. 2008, 56, 793–797.

- Honkote, V.; Ghosh, D.; Narayanan, K.; Gupta, A.; Srinivasan, A. Design and Integration of a Distributed, Autonomous and Collaborative Multi-Robot System for Exploration in Unknown Environments. In Proceedings of the 2020 IEEE/SICE International Symposium on System Integration (SII), Honolulu, HI, USA, 12–15 January 2020; pp. 1232–1237.

- Bresson, G.; Alsayed, Z.; Yu, L.; Glaser, S. Simultaneous localization and mapping: A survey of current trends in autonomous driving. IEEE Trans. Intell. Veh. 2017, 2, 194–220.

- Saha, O.; Dasgupta, P. A Comprehensive Survey of Recent Trends in Cloud Robotics Architectures and Applications. Robotics 2018, 7, 47.

- Cardarelli, E.; Digani, V.; Sabattini, L.; Secchi, C.; Fantuzzi, C. Cooperative cloud robotics architecture for the coordination of multi-AGV systems in industrial warehouses. Mechatronics 2017, 45, 1–13.

- Waibel, M.; Beetz, M.; Civera, J.; d’Andrea, R.; Elfring, J.; Galvez-Lopez, D.; Haussermann, K.; Janssen, R.; Montiel, J.; Perzylo, A.; et al. A world wide web for robots. IEEE Robot. Autom. Mag. 2011, 18, 69–82.

- Arumugam, R.; Enti, V.R.; Liu, B.; Wu, X.; Baskaran, K.; Kong, F.F.; Kumar, A.S.; Kang, D.M.; Kit, G.W. DAvinCi: A cloud computing framework for service robots. In Proceedings of the 2010 IEEE International Conference on Robotics and Automation, Anchorage, AK, USA, 3–8 May 2010; pp. 3084–3089.

- Riazuelo, L.; Civera, J.; Montiel, J.M.M. C2TAM: A Cloud framework for cooperative tracking and mapping. Robot. Auton. Syst. 2014, 62, 401–413.

- Carfagni, M.; Furferi, R.; Governi, L.; Santarelli, C.; Servi, M.; Uccheddu, F.; Volpe, Y. Metrological and Critical Characterization of the Intel D415 Stereo Depth Camera. Sensors 2019, 19, 489.

- ROS Documentation Website. 2019. Available online: http://wiki.ros.org/Topics (accessed on 12 October 2021).

- Röhrig, C.; Heß, D.; Künemund, F. Motion controller design for a mecanum wheeled mobile manipulator. In Proceedings of the 2017 IEEE Conference on Control Technology and Applications (CCTA), Kohala Coast, HI, USA, 27–30 August 2017; pp. 444–449.

- Almaged, M. Forward and Inverse Kinematic Analysis and Validation of the ABB IRB 140 Industrial Robot. Int. J. Electron. Mech. Mechatron. Eng. 2017, 7, 1383–1401.

- Holubek, R.; Delgado Sobrino, D.R.; Košťál, P.; Ružarovský, R. Offline Programming of an ABB Robot Using Imported CAD Models in the RobotStudio Software Environment. Appl. Mech. Mater. 2014, 693, 62–67.

- Zhang, L.; Merrifield, R.; Deguet, A.; Yang, G.Z. Powering the world’s robots-10 years of ROS. Sci. Robot. 2017, 2, eaar1868.

- Chan, M.L.; Hvass, P. ROS-Industrial: Expanding Industrial Application Capabilities. J. Robot. Soc. Jpn. 2017, 35, 307–310.

- Venator, E.; Zoss, J.; Edwards, S. ABB_DRIVER—ROS Wiki. 2021. Available online: http://wiki.ros.org/abb_driver (accessed on 12 October 2021).

- Edwards, S. ABB_Experimental—ROS Wiki. Available online: http://wiki.ros.org/abb_experimental (accessed on 12 October 2021).

- Chitta, S.; Sucan, I.; Cousins, S. MoveIt! [ROS Topics]. IEEE Robot. Autom. Mag. 2012, 19, 18–19.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Mišković, D.; Milić, L.; Čilag, A.; Berisavljević, T.; Gottscheber, A.; Raković, M. Implementation of Robots Integration in Scaled Laboratory Environment for Factory Automation. Appl. Sci. 2022, 12, 1228. https://doi.org/10.3390/app12031228
