1. Introduction

China’s Ministry of Education has proposed “eliminating ‘useless courses’ and creating ‘useful courses’”, using the “two properties and one degree” standard (high-order, innovative, and challenging) as a guide to effectively improve teaching quality. Within this standard, the “innovation” of the Golden Course requires modernized content in civics courses, advanced forms of teaching, and personalized learning outcomes [1]. In a speech at China’s Higher Education Director Conference, the Ministry of Education further proposed to promote a “quality revolution” through a “learning revolution”, and to use “the degree of achievement of course objectives, the degree of adaptation to social needs, the degree of support of technical conditions, the degree of effective quality assurance operation and student learning satisfaction” as the criteria for testing the effectiveness of courses [2]. In the new “Internet + teaching” information environment and a student-centered educational context, effective teaching design of civics and political science courses is necessary to overcome the misconception of pure “technology worship” and to ensure that students act as active learners and independent constructors of knowledge [3]. In recent years, MOOC applications have enabled the large-scale sharing of high-quality resources, promoting cross-campus and cross-regional online learning, flipped classrooms, blended online and offline learning, and other models of sharing and application [4]. New technologies, the Internet, artificial intelligence, and information technology tools are constantly changing and reshaping modern education, fundamentally promoting the development of new forms and modes of teaching civics courses and driving a change in learning styles [5]. Information technology tools are mental models and devices that support, guide, and expand learners’ thinking processes.

The real role of technology is to reduce the learners’ cognitive burden of constructing knowledge, to expand their ability to construct knowledge, and to develop their higher-order thinking skills through the use of technological tools [6]. To improve the teaching quality of civics courses, teachers need to use intelligent information technology tools to support a new form of teaching in which teachers and students generate knowledge through interaction, leading to the creation and transfer of knowledge as knowledge-creation learning becomes increasingly prominent [7]. To this end, based on online and offline hybrid civics courses, the author conducted a comprehensive reflection on the course in line with the requirements of the “Golden Course”, exploring how to truly return course construction to the value of “student-centeredness”. In the context of “Internet + Education”, we explored how to make full use of information technology tools to enrich the substance of the “Golden Course”, create a new hybrid teaching model for students’ knowledge generation and creation, and move from the “Learning Revolution” to the “Quality Revolution”.

Teaching materials provide the basic guidelines for education and teaching, and the selection of their content plays a key role in the development of students’ values. However, the teaching materials of university civics courses suffer from a structural problem of simple and repetitive content [8,9]. Therefore, to evaluate the content of teaching materials more effectively, experts of the project team formulated corresponding moral indicators according to the requirements of China’s current socialist core value system, comprising four first-level moral indicators: political identity (A), national consciousness (B), cultural confidence (C), and civic personality (D). To evaluate teaching materials in a more detailed and rigorous way, each first-level indicator is subdivided into four second-level indicators: political identity comprises party leadership (A1), scientific theory (A2), political system (A3), and development path (A4); national consciousness comprises national interest (B1), concept of national conditions (B2), national unity (B3), and international perspective (B4); cultural confidence comprises national language (C1), history and culture (C2), revolutionary tradition (C3), and spirit of the times (C4); and civic personality comprises healthy body and mind (D1), law-abiding and equality (D2), honesty and responsibility (D3), and self-improvement and cooperation (D4). This paper investigates the automatic classification of these 16 second-level moral indicators.
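The two-level indicator scheme above can be written down as a small lookup table that maps classifier outputs back to readable labels. The Python sketch below is illustrative only: the names `MORAL_INDICATORS`, `SECONDARY_CODES`, `primary_of`, and `describe` are hypothetical, and the A1 to D4 ordering of class indices is an assumption rather than the encoding used by the authors.

```python
# Two-level moral indicator scheme: four first-level indicators (A-D),
# each subdivided into four second-level indicators (16 classes in total).
MORAL_INDICATORS = {
    "A": ("political identity", {
        "A1": "party leadership", "A2": "scientific theory",
        "A3": "political system", "A4": "development path"}),
    "B": ("national consciousness", {
        "B1": "national interest", "B2": "concept of national conditions",
        "B3": "national unity", "B4": "international perspective"}),
    "C": ("cultural confidence", {
        "C1": "national language", "C2": "history and culture",
        "C3": "revolutionary tradition", "C4": "spirit of the times"}),
    "D": ("civic personality", {
        "D1": "healthy body and mind", "D2": "law-abiding and equality",
        "D3": "honesty and responsibility", "D4": "self-improvement and cooperation"}),
}

# Hypothetical class-index encoding: second-level codes in A1..D4 order.
SECONDARY_CODES = [
    code
    for _primary, (_name, subs) in sorted(MORAL_INDICATORS.items())
    for code in subs
]
CODE_TO_INDEX = {code: i for i, code in enumerate(SECONDARY_CODES)}


def primary_of(secondary_code):
    """Map a second-level code such as 'C3' to its first-level code 'C'."""
    return secondary_code[0]


def describe(class_index):
    """Turn a predicted class index back into a readable label."""
    code = SECONDARY_CODES[class_index]
    primary_name, subs = MORAL_INDICATORS[primary_of(code)]
    return f"{primary_name} / {subs[code]} ({code})"


print(describe(10))  # cultural confidence / revolutionary tradition (C3)
```

Keeping the first-level code as the first character of each second-level code means first-level predictions can be recovered from second-level ones without a separate model.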

Guided by activity theory and constructivism, and based on an empirical study of teaching practice, teaching data analysis, and questionnaires from civics courses, this paper investigates and summarizes the value-enabling path of information technology tools in creating an online and offline hybrid “Golden class”, and explores a “three-dimensional” approach to deep learning in order to produce a constructive hybrid teaching mode for civics and political science courses.

As an important vehicle for moral education, the moral indicators of civics and political science course materials naturally become one of the most important criteria for revising these materials [10]. However, the textbook dataset is characterized by rich textual information, ambiguous features, and an unbalanced sample distribution. To address these problems, this paper combines a novel data augmentation method with word-vector-based classification. In addition, to cope with the unbalanced sample size, this paper proposes a network model based on the attention mechanism [11,12]. The experimental results show that the data augmentation method used in this paper effectively improves the performance of the model, yielding a larger gain in F1-measure. The model incorporating the attention mechanism generalizes better than the one without it and also shows a significant advantage over the reference models in other settings.

4. Experimental Results and Analysis

4.1. Experiment Preparation

The dataset used in this paper was derived from the text of college civics textbooks and contains 33,360 samples in 16 categories, with a vocabulary of 23,083 words. After data augmentation, the balanced dataset contains 110,665 samples in 16 categories, with a vocabulary of 28,966 words. The balanced dataset was randomly split 8:2 into training and test sets. Examples of the sample data are shown in Table 1.
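A random 8:2 split of the kind described above can be sketched as follows. The function name `random_split` and the fixed seed are assumptions added for reproducibility; the paper does not state whether the split was stratified by category. With 110,665 samples, this split yields 88,532 training and 22,133 test samples.

```python
import random


def random_split(samples, train_ratio=0.8, seed=42):
    """Shuffle a copy of `samples` and split it into (train, test) lists."""
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)  # seeded so the split is reproducible
    cut = int(round(len(shuffled) * train_ratio))
    return shuffled[:cut], shuffled[cut:]


train, test = random_split(range(110_665))
print(len(train), len(test))  # 88532 22133
```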

4.2. Experimental Setup

The experiments were run in Python 3.6, and both the proposed model and the reference models were built with the Keras deep learning framework.

4.3. Word Vector Pre-Training

In this work, we used Google’s open-source Skip-Gram with Negative Sampling model (word2vec) to generate pre-trained word vectors. The training window size of the Skip-Gram model (the maximum distance between the current word and the predicted word) was set to 15, the learning rate was set to 0.05, the number of negative samples was set to 5, min_count was set to 1 (so that no words were discarded), and the number of iterations was set to 10. The parameters of the pre-trained word vectors are listed in Table 2, and the word similarities of the pre-trained word vectors are shown in Table 3.
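The Skip-Gram settings above can be illustrated with a minimal, dependency-free sketch of the Skip-Gram with Negative Sampling objective: context pairs are generated within a window of 15 positions, negatives are drawn from the unigram distribution raised to the 0.75 power (the convention of the original word2vec), and the loss for one pair is computed. This is not the training code used in the paper; all names are hypothetical, a fixed window is used instead of word2vec’s randomly shrunk window, and the optimizer loop is omitted. If gensim 4.x were used instead, the same settings would map to `Word2Vec(sentences, sg=1, window=15, alpha=0.05, negative=5, min_count=1, epochs=10, vector_size=150)`, where the 150 dimensions are an assumption based on the best setting reported in Section 4.6.

```python
import math
import random
from collections import Counter

# Settings reported in Section 4.3 (dictionary keys are illustrative names).
SGNS_CONFIG = {"window": 15, "learning_rate": 0.05, "negative": 5,
               "min_count": 1, "iterations": 10}


def skipgram_pairs(tokens, window):
    """All (center, context) pairs at most `window` positions apart (fixed window)."""
    pairs = []
    for i, center in enumerate(tokens):
        for j in range(max(0, i - window), min(len(tokens), i + window + 1)):
            if j != i:
                pairs.append((center, tokens[j]))
    return pairs


def make_negative_sampler(corpus, exponent=0.75, seed=0):
    """Return a function drawing k negatives from the unigram^0.75 distribution.

    No frequency filtering is applied, matching min_count = 1.
    """
    counts = Counter(tok for sentence in corpus for tok in sentence)
    vocab = list(counts)
    weights = [counts[w] ** exponent for w in vocab]
    rng = random.Random(seed)
    return lambda k: rng.choices(vocab, weights=weights, k=k)


def log_sigmoid(x):
    """Numerically stable log(1 / (1 + exp(-x)))."""
    if x >= 0:
        return -math.log1p(math.exp(-x))
    return x - math.log1p(math.exp(x))


def sgns_loss(v_center, u_context, u_negatives):
    """-log s(u_o . v_c) - sum_k log s(-u_k . v_c) for one (center, context) pair."""
    def dot(a, b):
        return sum(x * y for x, y in zip(a, b))
    loss = -log_sigmoid(dot(u_context, v_center))
    for u_k in u_negatives:
        loss -= log_sigmoid(-dot(u_k, v_center))
    return loss
```

With all vectors at zero, each of the 1 + 5 terms contributes log 2, which is a quick sanity check on the loss.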

4.4. Experimental Design

Experiment 1 verified the effectiveness of the data augmentation method proposed in this paper.

Experiment 2 verified whether the hyperparameter choices in this paper were reasonable.

Experiment 3 verified the effectiveness of the proposed model by comparing its classification performance with that of the reference models Bi-LSTM [7], Bi-GRU [9], GRNN [21], IoMET, and BERT-Base [22] (provided by Google). The hyperparameter settings of each model are shown in Table 4 and Table 5.

4.5. Experimental Evaluation Criteria

Precision (P) is the proportion of retrieved items that are relevant. Recall (R) is the proportion of relevant items that are retrieved. The F1-measure (F1) combines P and R and is defined as their harmonic mean:

$$P = \frac{TP}{TP + FP}, \qquad R = \frac{TP}{TP + FN}, \qquad F1 = \frac{2PR}{P + R}$$

where TP (true positive) means that a relevant item is correctly identified as relevant, FP (false positive) means that an irrelevant item is incorrectly identified as relevant, and FN (false negative) means that a relevant item is incorrectly identified as irrelevant.
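These metrics can be computed per category with the standard definitions P = TP/(TP + FP), R = TP/(TP + FN), and F1 = 2PR/(P + R). The sketch below is a plain-Python illustration with hypothetical function names; because the paper does not say how per-category scores are aggregated into a single F1-measure, the macro average shown here (the unweighted mean over categories) is an assumption.

```python
def per_class_prf(y_true, y_pred, labels):
    """Return {label: (precision, recall, f1)}; a metric with a zero denominator is 0."""
    scores = {}
    for c in labels:
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == c and p == c)
        fp = sum(1 for t, p in zip(y_true, y_pred) if t != c and p == c)
        fn = sum(1 for t, p in zip(y_true, y_pred) if t == c and p != c)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        scores[c] = (precision, recall, f1)
    return scores


def macro_f1(y_true, y_pred, labels):
    """Unweighted mean of the per-class F1 scores (assumed aggregation)."""
    scores = per_class_prf(y_true, y_pred, labels)
    return sum(f1 for _, _, f1 in scores.values()) / len(labels)


y_true = [0, 0, 1, 1, 2]
y_pred = [0, 1, 1, 1, 0]
print(per_class_prf(y_true, y_pred, [0, 1, 2]))
print(macro_f1(y_true, y_pred, [0, 1, 2]))
```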

4.6. Analysis of Results

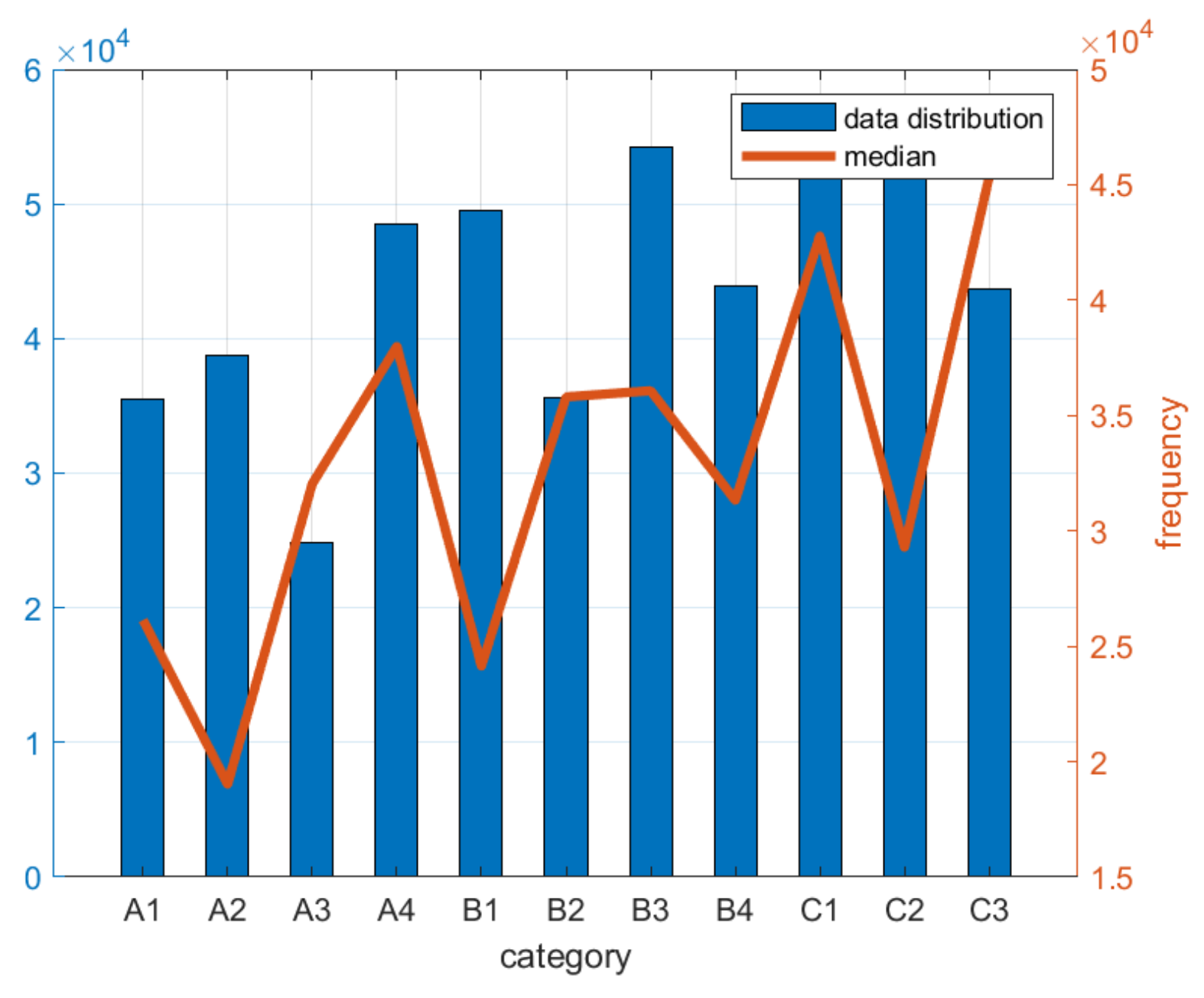

Experiment 1. To verify the effectiveness of the data augmentation method used in this paper, both the original textbook text and the augmented text were fed into a TextCNN text classifier and evaluated with the F1-measure; the results are shown in Figure 6.

Experiment 2. Because different hyperparameter settings affect the model differently, the hyperparameters need to be optimized.

Different word vector dimensions produce different word vector matrices, which express different text feature information. To find the optimal word vector dimension for the Shanghai textbook text dataset, several groups of control experiments were run, with the F1-measure as the performance evaluation metric. The performance of the model at different dimensions is shown in Figure 7.

Overfitting often occurs during model training. Because an overfitted model’s loss differs greatly between the training set and the test set, such a model cannot be used in practical applications. Crossover et al. [23] proposed randomly ignoring some hidden-layer nodes at each training step to reduce the co-adaptation of these nodes and thereby reduce overfitting (dropout). Therefore, in this paper, several groups of control experiments were carried out with dropout values in the interval (0, 1) to observe the classification performance of the model. The performance of the model under different dropout values is shown in Figure 8.
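The dropout idea described above can be sketched as the inverted dropout commonly used in practice: during training each activation is zeroed with probability `rate` and the survivors are scaled by 1/(1 - rate), so nothing needs to change at inference time. This is a toy illustration with a hypothetical function name, not the Keras layer used in the paper; Keras’s `Dropout(rate)` layer applies the same training-time scaling internally.

```python
import random


def inverted_dropout(activations, rate, rng, training=True):
    """Inverted dropout on a list of activations.

    Training: zero each value with probability `rate` and scale survivors by
    1/(1 - rate), so the expected activation is unchanged. Inference: identity.
    """
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    if not training:
        return list(activations)
    keep = 1.0 - rate
    return [a / keep if rng.random() >= rate else 0.0 for a in activations]


rng = random.Random(0)
out = inverted_dropout([1.0] * 10_000, rate=0.3, rng=rng)  # dropout = 0.3, the best value in Figure 8
print(sum(out) / len(out))  # approximately 1.0
```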

Experiment 3. To verify the effectiveness of the proposed model on the task of classifying the moral indicators of textbooks, several control experiments were set up; the results are shown in Table 6.

For Q1, Figure 6 shows that the F1-measure of every category of the textbook text after data augmentation is higher than that of the original text, which verifies the effectiveness of the data augmentation method used in this paper and indicates that it can effectively mitigate the problem of unbalanced text datasets.

For Q2, Figure 7 shows that the model achieves its best classification performance with word vector dimensions between 100 and 150; performance may drop at higher dimensions because the quality of the word vectors pre-trained on this corpus decreases when the dimension is set too high. Figure 8 shows that performance changes little for dropout values in (0, 0.5) and that the best classification performance is achieved at dropout = 0.3.

For Q3, Table 7 shows that, in the task of classifying the moral indicators of college textbooks, the proposed method achieves an F1-measure of 77.51% with 150-dimensional word vectors and dropout = 0.3, second only to BERT. The reference models obtain F1-measures of 61.28% (IoMET), 75.62% (Bi-LSTM), 74.82% (Bi-GRU), 75.19% (GRNN), and 78.35% (BERT). The F1-measure of BERT is less than 1 percentage point higher than that of the proposed model, but BERT converges much more slowly because it has far more parameters to train. The original IoMET model performs markedly worse because it does not make good use of the connections and positional information between words, so when the input sentence is too long, some important semantic information may be lost. The proposed text classification model uses an attention mechanism to generate a context vector for each word, combines it with the pre-trained word vector, and feeds the result into a convolutional neural network, which effectively improves the generalization ability of the model and achieves better performance in the task of classifying university textbook indicators.
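The architecture described above (a context vector per word from an attention mechanism, concatenated with the pre-trained word vector and fed into a convolutional network) can be sketched as a NumPy forward pass. The paper does not specify the attention form, so scaled dot-product self-attention is an assumption, as are the filter widths (2, 3, 4), the attention size of 64, and all names; weights are random and untrained, so the sketch only shows data flow and tensor shapes, not the authors’ Keras model. The input sentence must be at least as long as the widest filter.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax along `axis`."""
    e = np.exp(x - x.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)


def attention_context(emb, w_q, w_k, w_v):
    """Scaled dot-product self-attention: one context vector per word, shape (n, d_att)."""
    q, k, v = emb @ w_q, emb @ w_k, emb @ w_v
    weights = softmax(q @ k.T / np.sqrt(k.shape[-1]), axis=-1)  # (n, n), rows sum to 1
    return weights @ v


def conv_max_pool(features, filters, bias):
    """TextCNN-style branch: valid 1-D convolution over positions, ReLU, global max-pool.

    features: (n, d); filters: (num_filters, width, d); bias: (num_filters,)
    """
    width = filters.shape[1]
    windows = np.stack([features[t:t + width] for t in range(features.shape[0] - width + 1)])
    conv = np.einsum("swd,fwd->sf", windows, filters) + bias  # (positions, num_filters)
    return np.maximum(conv, 0.0).max(axis=0)                  # (num_filters,)


def init_params(d=150, d_att=64, widths=(2, 3, 4), num_filters=32, num_classes=16, seed=0):
    """Random (untrained) weights; every size here is an illustrative assumption."""
    rng = np.random.default_rng(seed)
    fused = d + d_att
    return {
        "w_q": rng.normal(0, 0.1, (d, d_att)),
        "w_k": rng.normal(0, 0.1, (d, d_att)),
        "w_v": rng.normal(0, 0.1, (d, d_att)),
        "convs": [(rng.normal(0, 0.1, (num_filters, w, fused)), np.zeros(num_filters))
                  for w in widths],
        "w_out": rng.normal(0, 0.1, (num_filters * len(widths), num_classes)),
        "b_out": np.zeros(num_classes),
    }


def classify(emb, params):
    """Forward pass: [word vector ; context vector] -> CNN branches -> softmax over 16 classes."""
    context = attention_context(emb, params["w_q"], params["w_k"], params["w_v"])
    fused = np.concatenate([emb, context], axis=-1)
    pooled = np.concatenate([conv_max_pool(fused, f, b) for f, b in params["convs"]])
    return softmax(pooled @ params["w_out"] + params["b_out"])


sentence = np.random.default_rng(1).normal(size=(20, 150))  # 20 words, 150-d word vectors
probs = classify(sentence, init_params())
print(probs.shape, int(probs.argmax()))
```

Concatenating the context vector with the original word vector, rather than replacing it, keeps the pre-trained lexical information available to the convolution filters.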

4.7. Model Performance

In this paper, an adaptive multimodal and multiscale feature fusion classification network is proposed. By considering multimodal and multiscale information simultaneously, it can accurately classify tumors without manual delineation of the lesion area. As shown in Figure 9, its AUC reaches 0.965, which is higher than that of existing mainstream deep learning classification models. When the lesion area is used for prediction, its AUC reaches 0.997, better than the latest tumor-grading models.
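AUC values such as those above summarize the ROC curve; for a binary task, AUC equals the probability that a randomly chosen positive case receives a higher score than a randomly chosen negative case, with ties counted as one half. The sketch below computes that quantity directly by comparing all positive and negative pairs. The section does not state how AUC was computed (for example, one-vs-rest averaging over tumor grades), so this binary version and the function name are illustrative assumptions.

```python
def roc_auc(labels, scores):
    """ROC AUC as P(score of random positive > score of random negative), ties = 1/2."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            wins += 1.0 if p > n else 0.5 if p == n else 0.0
    return wins / (len(pos) * len(neg))


print(roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8]))  # 0.75
```

The pairwise loop is quadratic in the number of cases; for large test sets a rank-based formula gives the same value more efficiently.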

As shown in Figure 10, multimodal data are complementary and offer a richer feature search space than unimodal data, and the multimodal feature extraction module designed in this paper can effectively extract semantic features useful for classification. The adaptive multimodal multiscale feature fusion module approximates the fusion rules with learnable convolutions, which increases the adaptability of the fusion rules to a certain extent. However, some open issues remain in practice. For example, although our method can classify tumors by the differences in their multimodal features, it is difficult to further improve the classification accuracy because the modalities train at inconsistent speeds. In addition, among the four modalities, the tumor area was not effectively identified from the T1 and FLAIR images, and including them in the multimodal model would reduce its prediction accuracy. This suggests that, when using the multimodal adaptive fusion strategy, stronger constraints should be imposed on each modality so that the feature extraction modules of all modalities train at essentially the same speed, thereby reducing the influence of the multimodal model on individual modalities. The results of the model optimization analysis also show that the choice of submodules must take into account the specific problem the network is meant to solve. Besides the SE module, introducing a newer image attention mechanism into the learning module of the adaptive fusion rules is one option for future optimization.

Beyond the fusion module, the network designed in this paper is only 11 layers deep and could be deepened: deeper networks extract more semantic features and have greater representational capacity. However, the number of network parameters grows with depth, so it is also worth exploring, based on the design ideas of frontier networks, how to improve the grading performance of the model while deepening the network and reducing its parameters.
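The fusion design discussed above, with learnable convolutions approximating the fusion rules and an SE module for channel attention, can be sketched in NumPy as follows. This is a hypothetical simplification: per-modality feature maps are concatenated along the channel axis, reweighted by a squeeze-and-excitation block, and fused with a 1×1 convolution (a learnable weighted sum of channels). The placement of the SE module, the reduction ratio, and all names are assumptions, and the weights are random rather than learned.

```python
import numpy as np


def squeeze_excitation(fmap, w1, w2):
    """SE block on a (C, H, W) map: squeeze by global average pooling,
    excite with ReLU then sigmoid, and rescale each channel by its gate in (0, 1)."""
    squeezed = fmap.mean(axis=(1, 2))                 # (C,)
    hidden = np.maximum(w1 @ squeezed, 0.0)           # (C // r,)
    gates = 1.0 / (1.0 + np.exp(-(w2 @ hidden)))      # (C,)
    return fmap * gates[:, None, None]


def adaptive_fusion(modality_maps, w1, w2, fusion_kernel):
    """Concatenate per-modality maps on the channel axis, reweight channels with SE,
    then fuse with a learnable 1x1 convolution of shape (C_out, C_total)."""
    stacked = np.concatenate(modality_maps, axis=0)    # (C_total, H, W)
    reweighted = squeeze_excitation(stacked, w1, w2)
    return np.einsum("oc,chw->ohw", fusion_kernel, reweighted)


rng = np.random.default_rng(0)
maps = [rng.normal(size=(8, 16, 16)) for _ in range(4)]  # four modalities, 8 channels each
c_total, r = 32, 4                                      # reduction ratio r is an assumption
fused = adaptive_fusion(maps,
                        w1=rng.normal(0, 0.1, (c_total // r, c_total)),
                        w2=rng.normal(0, 0.1, (c_total, c_total // r)),
                        fusion_kernel=rng.normal(0, 0.1, (16, c_total)))
print(fused.shape)  # (16, 16, 16)
```

Because the SE gates are computed per channel, a modality whose channels carry little useful signal (as reported for T1 and FLAIR) can in principle be down-weighted before fusion.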

4.8. Effect of Different Combination Methods of Sample Pairs on Performance

This paper discusses the advantages and disadvantages of two sample pair combination methods, the difference image and the synthetic image, and combines their characteristics to propose aligned synthetic samples as the sample pair combination method: the two samples in a pair are first aligned to find their common region and then combined into a synthetic image that serves as the network input. In this section, these three combination methods are compared experimentally, each being trained and tested on three publicly available datasets. All networks are the deep tree network proposed in this paper, the data are divided into training, validation, and test sets using the closed-set test approach, and no online data augmentation is applied.
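The aligned synthetic sample idea above (align the two samples of a pair, keep their common region, then combine them) can be sketched as follows. The sketch makes several assumptions not stated in the paper: alignment is an exhaustive search over integer translations that minimizes the mean squared difference on the overlap, the synthetic image stacks the two aligned crops as two channels, and the difference image is the absolute pixel difference. Resizing the cropped region to the network’s input size is omitted, and all names are hypothetical.

```python
import numpy as np


def crop_common(reference, moving, shift):
    """Overlapping regions of two equal-size images, assuming moving[y, x] ~ reference[y + dy, x + dx]."""
    dy, dx = shift
    h, w = reference.shape
    ref = reference[max(0, dy):h + min(0, dy), max(0, dx):w + min(0, dx)]
    mov = moving[max(0, -dy):h + min(0, -dy), max(0, -dx):w + min(0, -dx)]
    return ref, mov


def estimate_shift(reference, moving, max_shift=5):
    """Exhaustive search for the integer shift with the lowest mean squared difference."""
    best, best_err = (0, 0), np.inf
    for dy in range(-max_shift, max_shift + 1):
        for dx in range(-max_shift, max_shift + 1):
            ref, mov = crop_common(reference, moving, (dy, dx))
            err = np.mean((ref - mov) ** 2)
            if err < best_err:
                best, best_err = (dy, dx), err
    return best


def make_pair_input(img_a, img_b, mode="align+composite", max_shift=5):
    """Combine a sample pair into network input: 'composite' stacks the two images as
    channels, 'difference' takes |a - b|; an 'align+' prefix crops to the common region first."""
    if mode.startswith("align+"):
        img_a, img_b = crop_common(img_a, img_b, estimate_shift(img_a, img_b, max_shift))
    if mode.endswith("composite"):
        return np.stack([img_a, img_b], axis=0)   # (2, H', W')
    if mode.endswith("difference"):
        return np.abs(img_a - img_b)[None]        # (1, H', W')
    raise ValueError(f"unknown mode: {mode}")


rng = np.random.default_rng(0)
a = rng.random((32, 32))
b = np.zeros_like(a)
b[:30, 3:] = a[2:, :29]                 # simulate a vein-pattern shift of (dy, dx) = (2, -3)
print(estimate_shift(a, b))             # (2, -3)
print(make_pair_input(a, b).shape)      # (2, 30, 29)
```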

Table 8 shows the test results of the different sample pair combinations on the three public datasets, and Figure 5 shows the corresponding equal error rate curves. In the legend, “composite” corresponds to the synthetic (composite) image, “difference” to the difference image, “align + difference” to the aligned difference image, and “align + composite” to the aligned synthetic image in the table.
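The equal error rate (EER) plotted in these curves is the operating point where the false accept rate (FAR) equals the false reject rate (FRR). The sketch below approximates it by sweeping every observed score as a threshold and averaging FAR and FRR where they are closest. It assumes that higher scores mean “same finger”; the function name and the threshold-sweep approximation are illustrative, not the paper’s evaluation code.

```python
def equal_error_rate(genuine_scores, impostor_scores):
    """Approximate EER: use each observed score as a threshold, compute
    FAR (impostors accepted) and FRR (genuine pairs rejected), and return
    their mean at the threshold where the two rates are closest."""
    best_gap, best_eer = float("inf"), None
    for t in sorted(set(genuine_scores) | set(impostor_scores)):
        far = sum(s >= t for s in impostor_scores) / len(impostor_scores)
        frr = sum(s < t for s in genuine_scores) / len(genuine_scores)
        if abs(far - frr) < best_gap:
            best_gap, best_eer = abs(far - frr), (far + frr) / 2
    return best_eer


print(equal_error_rate([0.9, 0.8, 0.7, 0.3], [0.1, 0.2, 0.4, 0.75]))  # 0.25
```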

As can be seen from Table 9, under the same network structure and datasets, the aligned synthetic samples proposed in this paper outperform both the difference-image and synthetic-image combination methods on all three datasets, with a substantial improvement. Comparing the fourth and fifth rows of the table with the second and third rows, respectively, shows that adding alignment improves the performance of both the original difference image and the original synthetic image, which verifies the effectiveness of the alignment method against vein pattern shift. Meanwhile, a comparison of the values in the third and fifth rows shows that the proposed aligned synthetic image yields the largest improvement on SDUMLA-HMT, followed by MMCBNU_6000, and the smallest on FV-USM. Aligning the original data largely compensates for the vein offset caused by finger rotation between intra-class samples, but does little to address brightness differences caused by different illumination. SDUMLA-HMT has the most severe vein pattern shift but very small brightness differences between samples, so it benefits most from alignment. In FV-USM, only a small number of samples show vein pattern offset, whereas the brightness differences between the samples of all fingers are large. Moreover, although the test is closed-set, the data division used in this paper first randomly forms sample pairs and shuffles them and then divides them proportionally into training, validation, and test sets, which increases the difficulty of the test to some extent. Therefore, because alignment alone does little for illumination differences, the performance improvement on FV-USM is relatively small.

5. Conclusions

In this paper, we take the study of the moral indicators of university teaching materials as the experimental background and propose a fusion model that predicts moral indicators with high accuracy, which can provide an effective reference for the study of moral indicators and replace part of the manual work. To address the unbalanced sample size, this paper combines the ideas of SMOTE and EDA: it uses a self-built stop word list and the synonym word forest (Tongyici Cilin) to perform synonym queries for oversampling the minority categories, while randomly shuffling the sentence order and the intra-sentence word order, to build a balanced dataset. The experimental results show that the data augmentation method used in this paper can effectively improve the performance of the model, yielding a larger improvement in F1-measure. The model with the attention mechanism generalizes better than IoMET, which lacks the attention mechanism, and also has a significant advantage over the reference models in other settings.
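The balancing step described above can be sketched as EDA-style oversampling of minority categories: each synthetic sample is a copy of a minority-class sentence with one non-stop-word replaced by a synonym and, at random, its word order shuffled. In this sketch the synonym dictionary and stop word list are toy stand-ins for the self-built stop word list and Tongyici Cilin, only word-order shuffling is shown (sentence-order shuffling would work the same way on lists of sentences), every class is topped up to the size of the largest class, and all names are hypothetical. SMOTE’s feature-space interpolation is not reproduced; only its idea of generating new minority samples is.

```python
import random
from collections import Counter, defaultdict


def synonym_replace(tokens, synonyms, stopwords, n, rng):
    """Replace up to n tokens that are not stop words and have synonyms (EDA-style)."""
    out = list(tokens)
    candidates = [i for i, t in enumerate(out) if t not in stopwords and synonyms.get(t)]
    rng.shuffle(candidates)
    for i in candidates[:n]:
        out[i] = rng.choice(synonyms[out[i]])
    return out


def shuffle_words(tokens, rng):
    """Randomly permute the word order within a sentence."""
    out = list(tokens)
    rng.shuffle(out)
    return out


def balance_by_augmentation(samples, synonyms, stopwords, seed=0):
    """samples: list of (tokens, label). Top up every class to the largest class size
    with augmented copies of randomly chosen samples from that class."""
    rng = random.Random(seed)
    by_label = defaultdict(list)
    for tokens, label in samples:
        by_label[label].append(tokens)
    target = max(len(group) for group in by_label.values())
    balanced = list(samples)
    for label, group in by_label.items():
        for _ in range(target - len(group)):
            new = synonym_replace(rng.choice(group), synonyms, stopwords, n=1, rng=rng)
            if rng.random() < 0.5:
                new = shuffle_words(new, rng)
            balanced.append((new, label))
    return balanced


# Toy stand-ins for the stop word list and synonym thesaurus (hypothetical data).
synonyms = {"坚持": ["坚守"], "发展": ["进步"]}
stopwords = {"的", "和"}
data = [(["坚持", "党", "的", "领导"], "A1")] * 5 + [(["科学", "发展", "理论"], "A2")] * 2
balanced = balance_by_augmentation(data, synonyms, stopwords)
print(Counter(label for _, label in balanced))  # Counter({'A1': 5, 'A2': 5})
```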

The next research direction is to improve the text pre-processing method and optimize the model structure so that the performance of the model can be further improved.