Applying Machine Learning Techniques to the Audit of Antimicrobial Prophylaxis

Abstract

:1. Introduction

2. Materials and Methods

2.1. Data Preprocessing

2.2. Sampling Methods

2.3. Machine Learning Techniques

2.4. Performance of Machine Learning Techniques

2.4.1. Confusion Matrix

2.4.2. Weighted Average of Performance Metrics

2.4.3. Comparison of the Execution Time for Machine Learning Algorithms

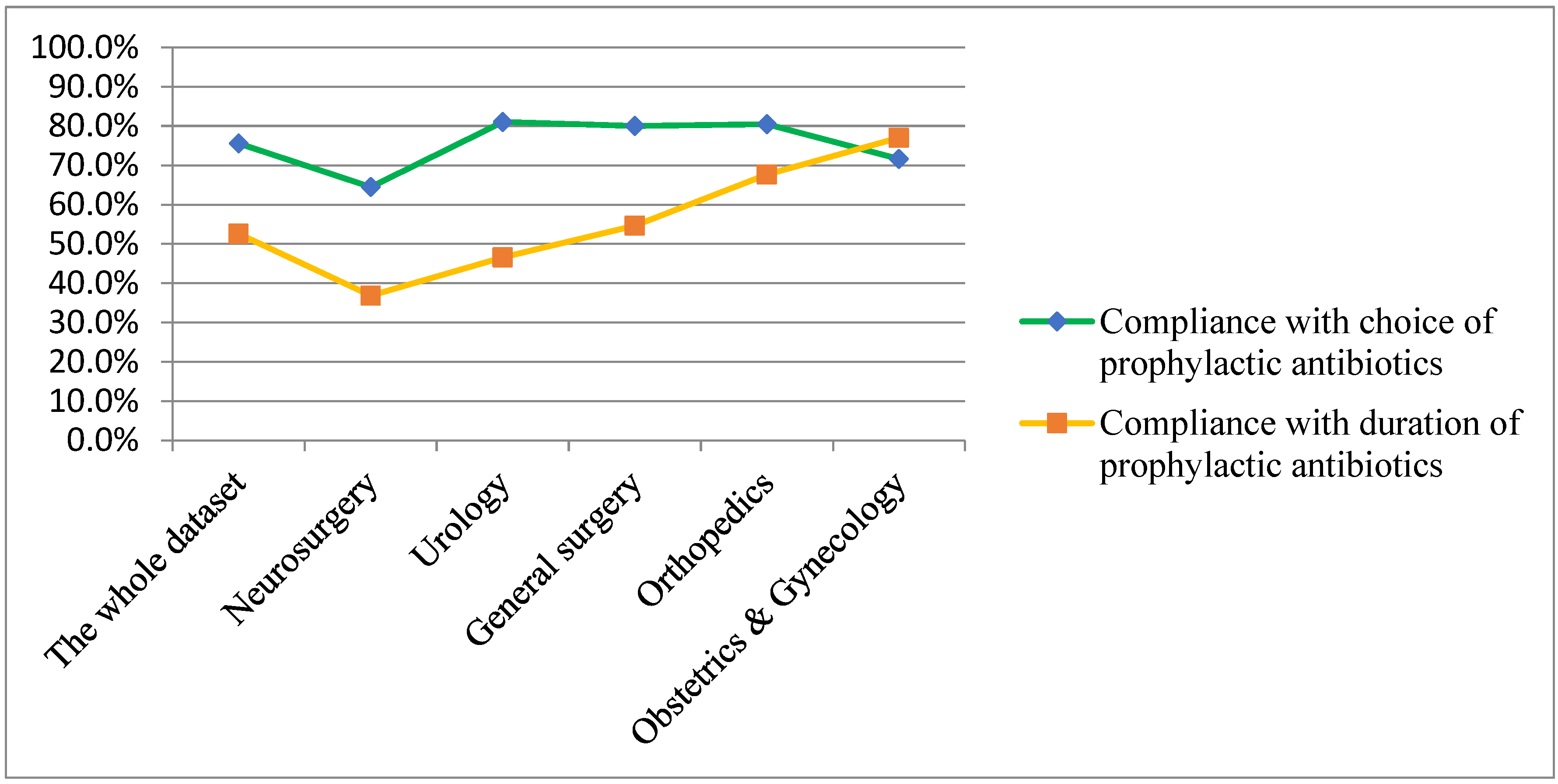

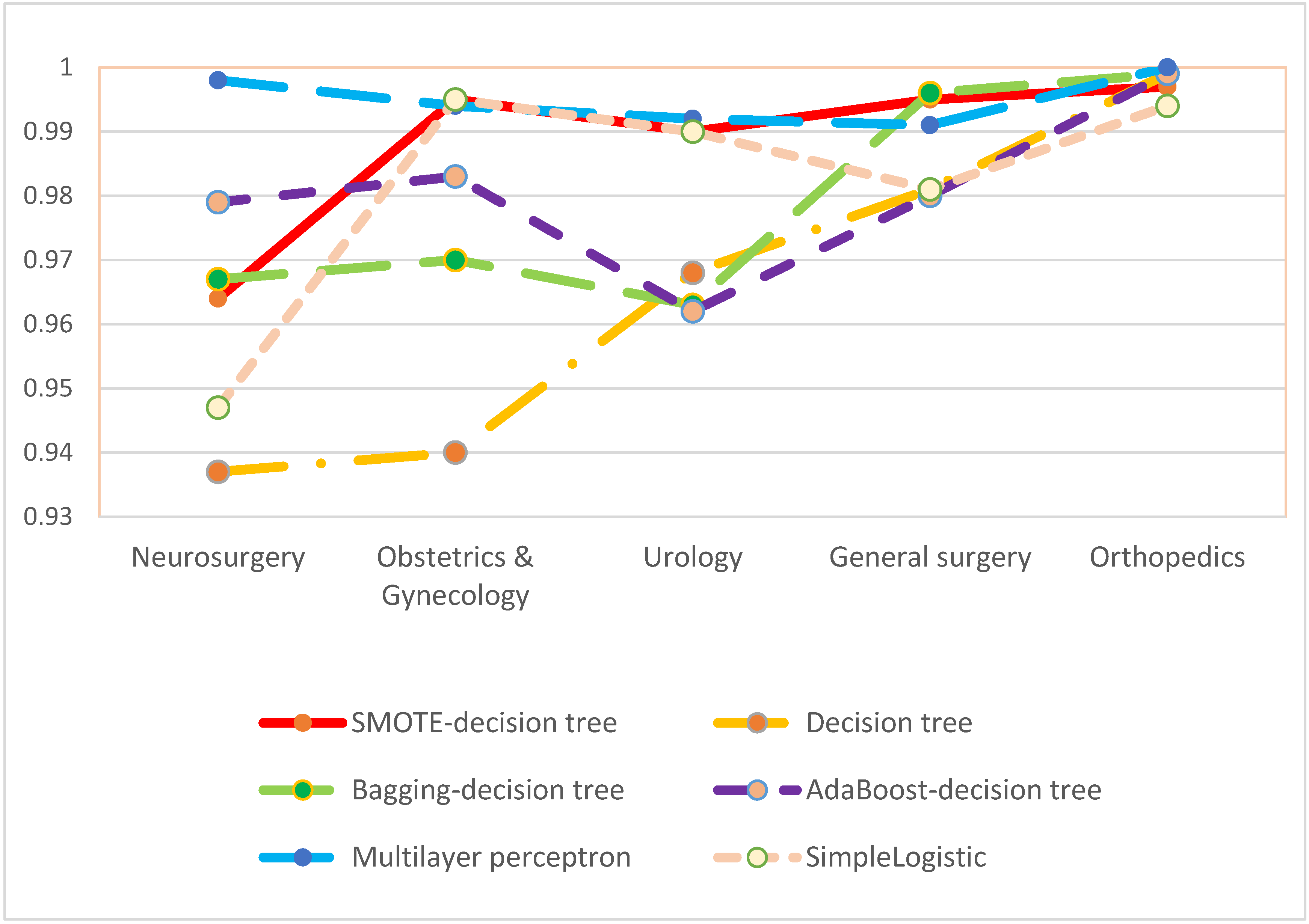

3. Results

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Ban, K.A.; Minei, J.P.; Laronga, C.; Harbrecht, B.G.; Jensen, E.H.; Fry, D.E.; Itani, K.M.; Dellinger, E.P.; Ko, C.Y.; Duane, T.M. American College of Surgeons and Surgical Infection Society: Surgical Site Infection Guidelines, 2016 Update. J. Am. Coll. Surg. 2017, 224, 59–74. [Google Scholar] [CrossRef] [PubMed]

- World Health Organization. Global Guidelines for the Prevention of Surgical Site Infection, 2nd ed.; World Health Organization: Geneva, Switzerland, 2018; Available online: https://www.who.int/publications/i/item/global-guidelines-for-the-prevention-of-surgical-site-infection-2nd-ed (accessed on 6 December 2021).

- Berrios-Torres, S.I.; Umscheid, C.A.; Bratzler, D.W.; Leas, B.; Stone, E.C.; Kelz, R.R.; Reinke, C.E.; Morgan, S.; Solomkin, J.S.; Mazuski, J.E.; et al. Centers for Disease Control and Prevention Guideline for the Prevention of Surgical Site Infection, 2017. JAMA Surg. 2017, 152, 784–791. [Google Scholar] [CrossRef] [PubMed]

- McGee, M.F.; Kreutzer, L.; Quinn, C.M.; Yang, A.; Shan, Y.; Halverson, A.L.; Love, R.; Johnson, J.K.; Prachand, V.; Bilimoria, K.Y. Leveraging a Comprehensive Program to Implement a Colorectal Surgical Site Infection Reduction Bundle in a Statewide Quality Improvement Collaborative. Ann. Surg. 2019, 270, 701–711. [Google Scholar] [CrossRef] [PubMed]

- Kefale, B.; Tegegne, G.T.; Degu, A.; Molla, M.; Kefale, Y. Surgical Site Infections and Prophylaxis Antibiotic Use in the Surgical Ward of Public Hospital in Western Ethiopia: A Hospital-Based Retrospective Cross-Sectional Study. Infect Drug Resist. 2020, 13, 3627–3635. [Google Scholar] [CrossRef]

- Purba, A.K.R.; Setiawan, D.; Bathoorn, E.; Postma, M.J.; Dik, J.H.; Friedrich, A.W. Prevention of Surgical Site Infections: A Systematic Review of Cost Analyses in the Use of Prophylactic Antibiotics. Front. Pharm. 2018, 9, 776. [Google Scholar] [CrossRef]

- Bratzler, D.W.; Dellinger, E.P.; Olsen, K.M.; Perl, T.M.; Auwaerter, P.G.; Bolon, M.K.; Fish, D.N.; Napolitano, L.M.; Sawyer, R.G.; Slain, D.; et al. Clinical practice guidelines for antimicrobial prophylaxis in surgery. Am. J. Health Syst. Pharm. 2013, 70, 195–283. [Google Scholar] [CrossRef] [Green Version]

- Ierano, C.; Thursky, K.; Marshall, C.; Koning, S.; James, R.; Johnson, S.; Imam, N.; Worth, L.J.; Peel, T. Appropriateness of Surgical Antimicrobial Prophylaxis Practices in Australia. JAMA Netw. Open 2019, 2, e1915003. [Google Scholar] [CrossRef] [Green Version]

- Tiri, B.; Bruzzone, P.; Priante, G.; Sensi, E.; Costantini, M.; Vernelli, C.; Martella, L.A.; Francucci, M.; Andreani, P.; Mariottini, A.; et al. Impact of Antimicrobial Stewardship Interventions on Appropriateness of Surgical Antibiotic Prophylaxis: How to Improve. Antibiotics 2020, 9, 168. [Google Scholar] [CrossRef] [Green Version]

- Centers for Disease Control and Prevention. Core Elements of Hospital Antibiotic Stewardship Programs; US Department of Health and Human Services, CDC: Atlanta, GA, USA, 2019. Available online: https://www.cdc.gov/antibiotic-use/healthcare/pdfs/hospital-core-elements-H.pdf (accessed on 6 December 2021).

- World Health Organizattion. Antimicrobial Stewardship Programmes in Health-Care Facilities in Low- and Middle-Income Countries: A Practical Toolkit; World Health Organization: Geneva, Switzerland, 2019; Available online: https://www.who.int/publications/i/item/9789241515481 (accessed on 6 December 2021).

- Han, J.; Kamber, M.; Pei, J. Data mining: Concepts and Techniques, 3rd ed.; Morgan Kaufmann: Burlington, MA, USA, 2012. [Google Scholar]

- Peiffer-Smadja, N.; Rawson, T.M.; Ahmad, R.; Buchard, A.; Georgiou, P.; Lescure, F.X.; Birgand, G.; Holmes, A.H. Machine learning for clinical decision support in infectious diseases: A narrative review of current applications. Clin. Microbiol. Infect. 2020, 26, 584–595. [Google Scholar] [CrossRef]

- Bote-Curiel, L.; Muñoz-Romero, S.; Gerrero-Curieses, A.; Rojo-Álvarez, J.L. Deep Learning and Big Data in Healthcare: A Double Review for Critical Beginners. Appl. Sci. 2019, 9, 2331. [Google Scholar] [CrossRef] [Green Version]

- Feretzakis, G.; Loupelis, E.; Sakagianni, A.; Kalles, D.; Martsoukou, M.; Lada, M.; Skarmoutsou, N.; Christopoulos, C.; Valakis, K.; Velentza, A.; et al. Using Machine Learning Techniques to Aid Empirical Antibiotic Therapy Decisions in the Intensive Care Unit of a General Hospital in Greece. Antibiotics 2020, 9, 50. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Martínez-Agüero, S.; Mora-Jiménez, I.; Lérida-García, J.; Álvarez-Rodríguez, J.; Soguero-Ruiz, C. Machine Learning Techniques to Identify Antimicrobial Resistance in the Intensive Care Unit. Entropy 2019, 21, 603. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- European Centre for Disease Prevention and Control. Point Prevalence Survey of Healthcareassociated Infections and Antimicrobial Use in European Acute Care Hospitals—Protocol Version 4.3; ECDC: Stockholm, Sweden, 2012; Available online: https://www.ecdc.europa.eu/sites/default/files/media/en/publications/Publications/0512-TED-PPS-HAI-antimicrobial-use-protocol.pdf (accessed on 6 December 2021).

- Anderson, D.J.; Sexton, D.J. Antimicrobial Prophylaxis for Prevention of Surgical Site Infection in Adults. Uptodate. 2021. Available online: https://www.uptodate.com/contents/antimicrobial-prophylaxis-for-prevention-of-surgical-site-infection-in-adults (accessed on 6 December 2021).

- Witten, I.H.; Frank, E.; Hall, M.A.; Pal, C.J. Data Mining: Practical Machine Learning Tools and Techniques, 4th ed.; Morgan Kaufmann: Burlington, MA, USA, 2017. [Google Scholar]

- Chawla, N.V.; Bowyer, K.W.; Hall, L.O.; Kegelmeyer, W.P. SMOTE: Synthetic Minority Over-sampling Technique. J. Artif. Intell. Res. 2002, 16, 321–357. [Google Scholar] [CrossRef]

- Kotthoff, L.; Thornton, C.; Hoos, H.H.; Hutter, F.; Leyton-Brown, K. Auto-WEKA 2.0: Automatic model selection and hyperparameter optimization in WEKA. J. Mach. Learn. Res. 2017, 18, 1–5. [Google Scholar]

- Landwehr, N.; Hall, M.; Frank, E. Logistic Model Trees. Mach. Learn. 2005, 59, 161–205. [Google Scholar] [CrossRef] [Green Version]

- Quinlan, J.R. C4.5. Programs for Machine Learning; Morgan Kaufmann: San Francisco, CA, USA, 1993. [Google Scholar]

- Rokach, L. Ensemble Learning: Pattern Classification Using Ensemble Methods, 2nd ed; World Scientific Publishing Company: Singapore, 2019. [Google Scholar]

- Sánchez-Hernández, F.; Ballesteros-Herráez, J.C.; Kraiem, M.S.; Sánchez-Barba, M.; Moreno-García, M.N. Predictive Modeling of ICU Healthcare-Associated Infections from Imbalanced Data. Using Ensembles and a Clustering-Based Undersampling Approach. Appl. Sci. 2019, 9, 5287. [Google Scholar] [CrossRef] [Green Version]

- Mandrekar, J.N. Receiver Operating Characteristic Curve in Diagnostic Test Assessment. J. Thorac. Oncol. 2010, 5, 1315–1316. [Google Scholar] [CrossRef] [Green Version]

- Grandini, M.; Bagli, E.; Visani, G. Metrics for Multi-Class Classification: An Overview. arXiv 2020, arXiv:abs/2008.05756. Available online: https://arxiv.org/pdf/2008.05756.pdf (accessed on 6 December 2021).

- Wandishin, M.S.; Mullen, S.J. Multiclass ROC Analysis. Weather Forecast. 2009, 24, 530–547. [Google Scholar] [CrossRef]

- Magill, S.S.; O’Leary, E.; Ray, S.M.; Kainer, M.A.; Evans, C.; Bamberg, W.M.; Johnston, H.; Janelle, S.J.; Oyewumi, T.; Lynfield, R.; et al. Antimicrobial Use in US Hospitals: Comparison of Results from Emerging Infections Program Prevalence Surveys, 2015 and 2011. Clin. Infect. Dis. 2021, 72, 1784–1792. [Google Scholar] [CrossRef]

- Plachouras, D.; Kärki, T.; Hansen, S.; Hopkins, S.; Lyytikäinen, O.; Moro, M.L.; Reilly, J.; Zarb, P.; Zingg, W.; Kinross, P.; et al. Antimicrobial use in European acute care hospitals: Results from the second point prevalence survey (PPS) of healthcare-associated infections and antimicrobial use, 2016 to 2017. Eurosurveillance 2018, 23, 1800393. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- World Health Organizattion. Surgical Safety Checklist. Available online: https://www.who.int/teams/integrated-health-services/patient-safety/research/safe-surgery/tool-and-resources (accessed on 6 December 2021).

- Versporten, A.; Zarb, P.; Caniaux, I.; Gros, M.F.; Drapier, N.; Miller, M.; Jarlier, V.; Nathwani, D.; Goossens, H. Antimicrobial consumption and resistance in adult hospital inpatients in 53 countries: Results of an internet-based global point prevalence survey. Lancet Glob. Health 2018, 6, e619–e629. [Google Scholar] [CrossRef] [Green Version]

- Agrawal, A.; Viktor, H.L.; Paquet, E. SCUT: Multi-class imbalanced data classification using SMOTE and cluster-based undersampling. In Proceedings of the 2015 7th International Joint Conference on Knowledge Discovery, Knowledge Engineering and Knowledge Management (IC3K), Lisbon, Portugal, 12–14 November 2015; pp. 226–234. [Google Scholar]

- Kraiem, M.S.; Sánchez-Hernández, F.; Moreno-García, M.N. Selecting the Suitable Resampling Strategy for Imbalanced Data Classification Regarding Dataset Properties. An Approach Based on Association Models. Appl. Sci. 2021, 11, 8546. [Google Scholar] [CrossRef]

- Ying, X. An Overview of Overfitting and its Solutions. J. Phys. Conf. Ser. 2019, 1168, 022022. [Google Scholar] [CrossRef]

- Davagdorj, K.; Lee, J.S.; Pham, V.H.; Ryu, K.H. A Comparative Analysis of Machine Learning Methods for Class Imbalance in a Smoking Cessation Intervention. Appl. Sci. 2020, 10, 3307. [Google Scholar] [CrossRef]

- Yıldırım, P. Pattern classification with imbalanced and multiclass data for the prediction of albendazole adverse event outcomes. Procedia Comput. Sci. 2016, 83, 1013–1018. [Google Scholar] [CrossRef] [Green Version]

- Angst, D.C.; Tepekule, B.; Sun, L.; Bogos, B.; Bonhoeffer, S. Comparing treatment strategies to reduce antibiotic resistance in an in vitro epidemiological setting. Proc. Natl. Acad. Sci. USA 2021, 118, e2023467118. [Google Scholar] [CrossRef]

- Menz, B.D.; Charani, E.; Gordon, D.L.; Leather, A.J.M.; Moonesinghe, S.R.; Phillips, C.J. Surgical Antibiotic Prophylaxis in an Era of Antibiotic Resistance: Common Resistant Bacteria and Wider Considerations for Practice. Infect. Drug Resist. 2021, 14, 5235–5252. [Google Scholar] [CrossRef]

- Paterson, I.K.; Hoyle, A.; Ochoa, G.; Baker-Austin, C.; Taylor, N.G.H. Optimising Antibiotic Usage to Treat Bacterial Infections. Sci. Rep. 2016, 6, 37853. [Google Scholar] [CrossRef]

| Attribute | Type | Remarks |

|---|---|---|

| Hospital type | Nominal | Primary: 71 instances. Secondary: 258 instances. Tertiary: 271 instances |

| Age | Numeric | Mean: 56.2 years |

| Gender | Binary | Female: 297 instances, male: 304 instances |

| Patient specialty | Nominal | 29 distinct values |

| Diagnosis | Nominal | 20 distinct values |

| Central vascular catheter in place | Binary | Yes: 75 instances No: 526 instances |

| Peripheral vascular catheter in place | Binary | Yes: 477 instances No: 124 instances |

| Urinary catheter in place | Binary | Yes: 213 instances No: 388 instances |

| Under endotracheal intubation | Binary | Yes: 33 instances No: 568 instances |

| Under tracheostomy intubation | Binary | Yes: 9 instances No: 592 instances |

| Ventilator used | Binary | Yes: 34 instances No: 567 instances |

| Patient has active healthcare-associated infection | Binary | Yes: 6 instances No: 595 instances |

| Blood stream infection | Binary | Yes: 0 instances No: 601 instances |

| Urinary tract infection | Binary | Yes: 0 instances No: 601 instances |

| Pneumonia | Binary | Yes: 2 instances No: 599 instances |

| Surgical site infection | Binary | Yes: 3 instances No: 598 instances |

| Antimicrobial agents used | Nominal | 15 distinct values |

| Indication of antimicrobial agents | Nominal | 3 distinct values |

| Diagnosis sites for antimicrobial use | Nominal | 16 distinct values |

| Class label attribute | Nominal | 5 distinct values |

| Wound Classification | Choice of Prophylactic Antimicrobial Agents | Duration of Prophylactic Antimicrobial Agents |

|---|---|---|

| Class I (Clean wound) |

| A single dose or one day |

| Class II (Clean- contaminated wound) |

| A single dose or one day |

| Types of Compliance with Recommendations for Antimicrobial Prophylaxis Determined by Infectious Disease Specialists | Compliance with Choice of Prophylactic Antimicrobial Agents | Compliance with Duration of Prophylactic Antimicrobial Agents |

|---|---|---|

| A | Yes | Yes |

| B | Yes | No |

| C | No | Yes |

| D | No | No |

| E | Antimicrobial agents used for treatment of other infections rather than surgical prophylaxis. | |

| Class | No. of Instances | Choice of Antibiotics | Duration of Prophylactic Antimicrobial Use a (No. of Instances) |

|---|---|---|---|

| A | Total 255 | ||

| 245 | Cefazolin | SP1 (178), SP2 (67) | |

| 5 | Cefoxitin | SP1 | |

| 4 | Cefoxitin | SP2 | |

| 1 | Cefuroxime + metronidazole | SP2 | |

| B | Total 155 | ||

| 126 | Cefazolin | SP3 | |

| 8 | Cefazolin + other antibiotic (for different surgical procedures or risks) | SP3 | |

| 10 | Cefoxitin | SP3 | |

| 8 | Clindamycin | SP3 | |

| 3 | Ciprofloxacin | SP3 | |

| C | Total 30 | ||

| 25 | Cefazolin + gentamicin | SP1 (13), SP2 (12) | |

| 5 | Other antibiotics | SP1 (2), SP2 (3) | |

| D | Total 103 | ||

| 69 | Cefazolin + gentamicin | SP3 | |

| 5 | Cefoxitin + gentamicin | SP3 | |

| 2 | Cefoxitin | SP3 | |

| 21 | Oral cephalexin | SP3 | |

| 6 | Others | ||

| E | Total 57 | ||

| 17 | Ampicillin–sulbactam | SP2 (4), SP3 (13) | |

| 10 | Third-generation cephalosporins | SP2 (4), SP3 (6) | |

| 30 | Others |

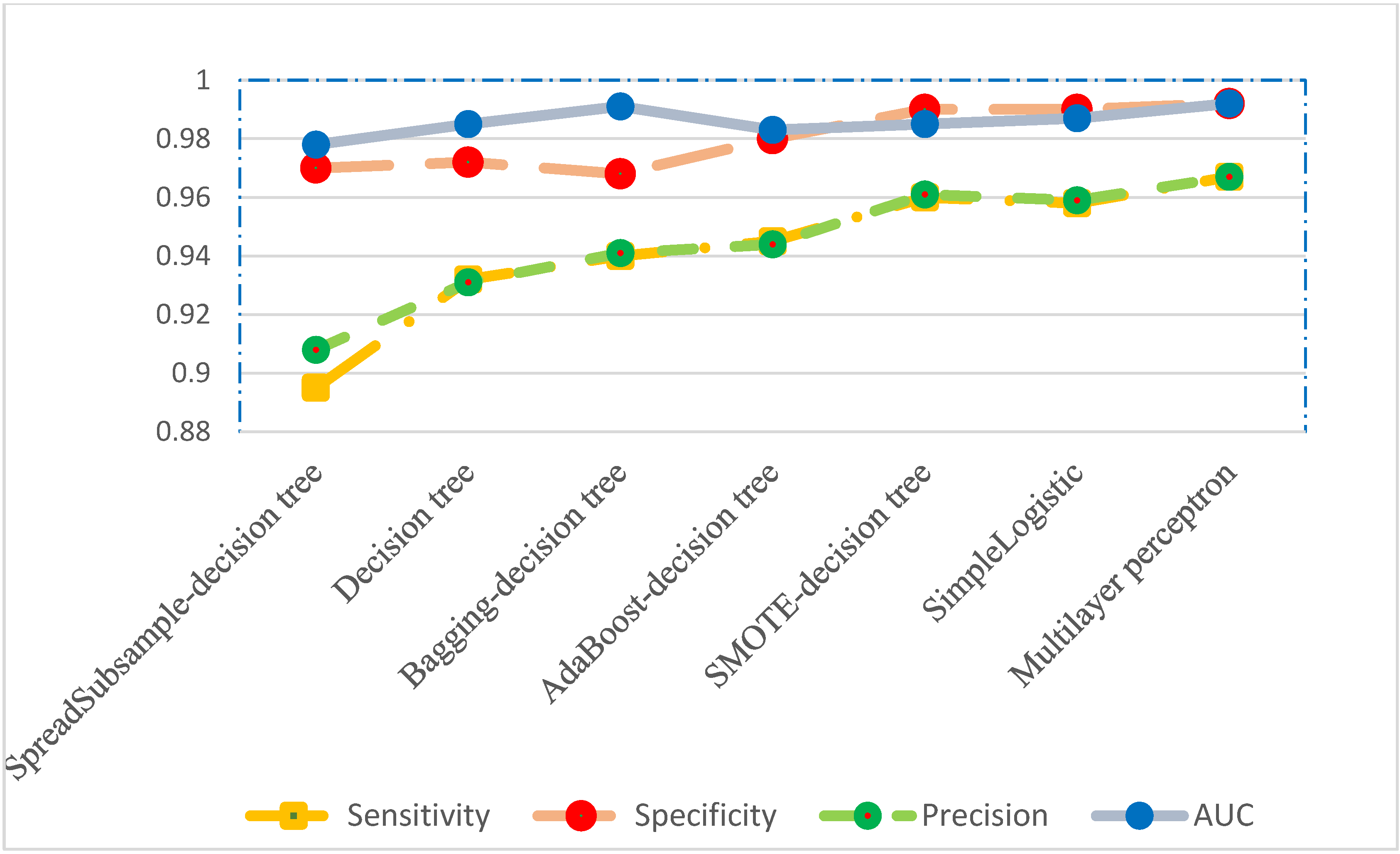

| Spread Subsampl- Decision Tree | Decision Tree | Bagging-Decision Tree | AdaBoost- Decision Tree | SMOTE- Decision Tree | Simple- Logistic | Multilayer Perceptron | |

|---|---|---|---|---|---|---|---|

| Sensitivity | 0.895 | 0.932 | 0.940 | 0.945 | 0.960 | 0.958 | 0.967 |

| Specificity | 0.970 | 0.972 | 0.968 | 0.980 | 0.990 | 0.990 | 0.992 |

| Precision | 0.908 | 0.931 | 0.941 | 0.944 | 0.961 | 0.959 | 0.967 |

| AUC a | 0.978 | 0.985 | 0.991 | 0.983 | 0.985 | 0.987 | 0.992 |

| Predicted Class | Actual Class | Number of Observations (%) | ||||

|---|---|---|---|---|---|---|

| A | B | C | D | E | ||

| 255 | 0 | 1 | 0 | 0 | A | 256 (42.6) |

| 0 | 144 | 0 | 6 | 5 | B | 155 (25.8) |

| 5 | 0 | 25 | 0 | 0 | C | 30 (5.0) |

| 1 | 4 | 0 | 98 | 0 | D | 103 (17.1) |

| 8 | 6 | 0 | 5 | 38 | E | 57 (9.5) |

| Total: 601 (100) | ||||||

| Classifier | Hyperparameters | Sampling Method | Execution Time (s) |

|---|---|---|---|

| Decision tree | Reduced error pruning = false Confidence factor = 0.2 distributionSpread: 2.0 Percentage: frequency of minor classes adjusted to nearly the same with that of major class Classifier Choose: J48 Classifier Choose: J48 | No sampling SpreadSubsample SMOTE Bagging AdaBoost | 7.8 27.9 71.8 16.0 16.1 |

| SimpleLogistic | Default | No sampling | 9.8 |

| Multilayer perceptron | Learning rate = 0.3 Training Time = 500 | No sampling | 353.1 |

| Auto-WEKA | Default Time = 15 min | No sampling | 8586 |

| Manual review | Estimated 24,040 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Shi, Z.-Y.; Hon, J.-S.; Cheng, C.-Y.; Chiang, H.-T.; Huang, H.-M. Applying Machine Learning Techniques to the Audit of Antimicrobial Prophylaxis. Appl. Sci. 2022, 12, 2586. https://doi.org/10.3390/app12052586

Shi Z-Y, Hon J-S, Cheng C-Y, Chiang H-T, Huang H-M. Applying Machine Learning Techniques to the Audit of Antimicrobial Prophylaxis. Applied Sciences. 2022; 12(5):2586. https://doi.org/10.3390/app12052586

Chicago/Turabian StyleShi, Zhi-Yuan, Jau-Shin Hon, Chen-Yang Cheng, Hsiu-Tzy Chiang, and Hui-Mei Huang. 2022. "Applying Machine Learning Techniques to the Audit of Antimicrobial Prophylaxis" Applied Sciences 12, no. 5: 2586. https://doi.org/10.3390/app12052586