Studies to Overcome Brain–Computer Interface Challenges

Abstract

1. Introduction

2. Studies to Overcome BCI Limitations

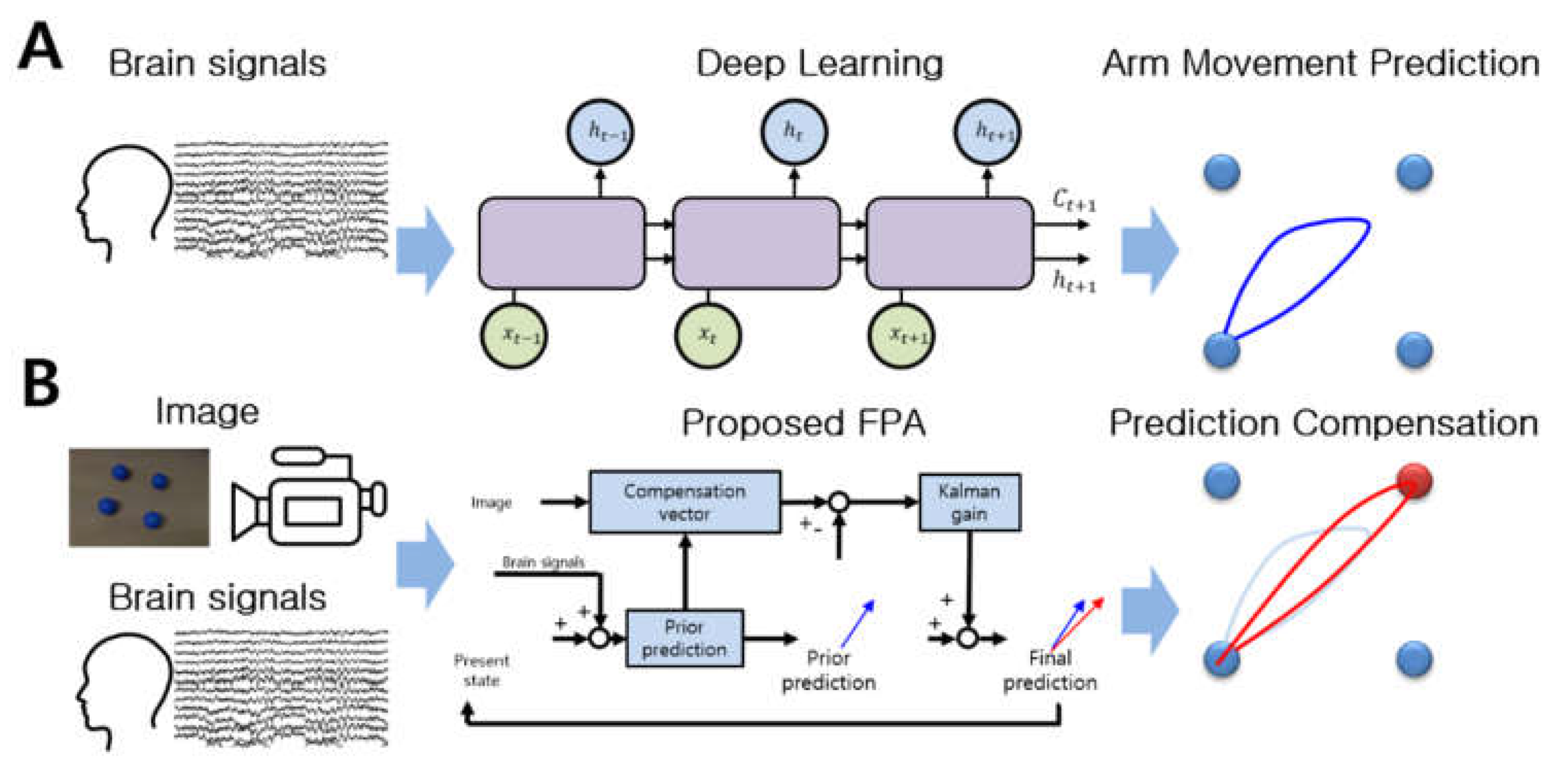

2.1. Arm Movement Prediction

2.2. Correction Using Image Processing

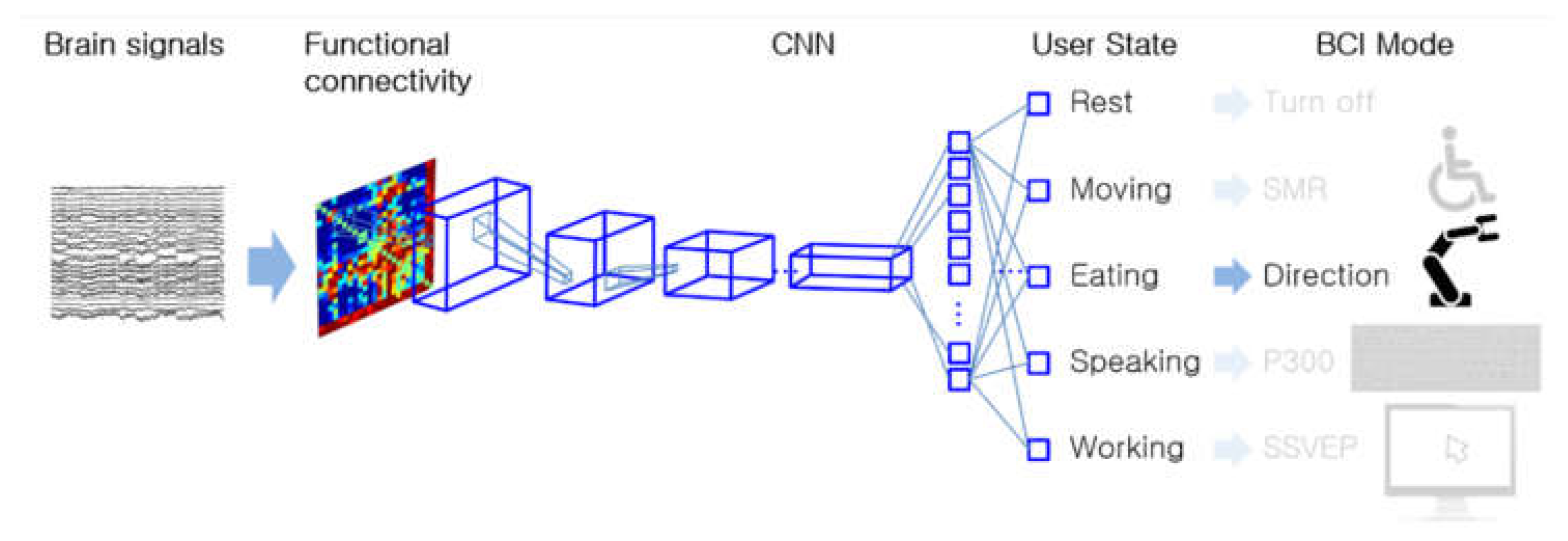

2.3. Prediction of User State

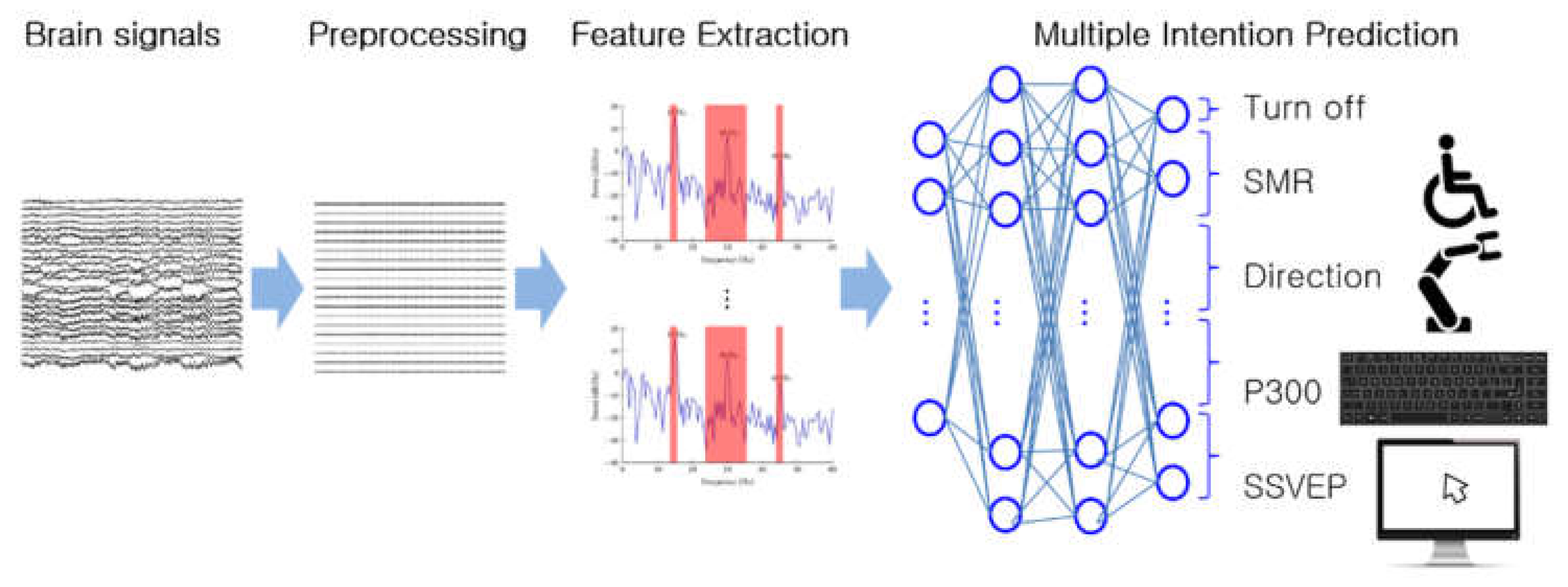

2.4. Multi-Functional BCI

3. Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Choi, W.-S.; Yeom, H.-G. Studies to Overcome Brain–Computer Interface Challenges. Appl. Sci. 2022, 12, 2598. https://doi.org/10.3390/app12052598
