sEMG Signals Characterization and Identification of Hand Movements by Machine Learning Considering Sex Differences

Abstract

1. Introduction

2. Materials and Methods

2.1. Subjects

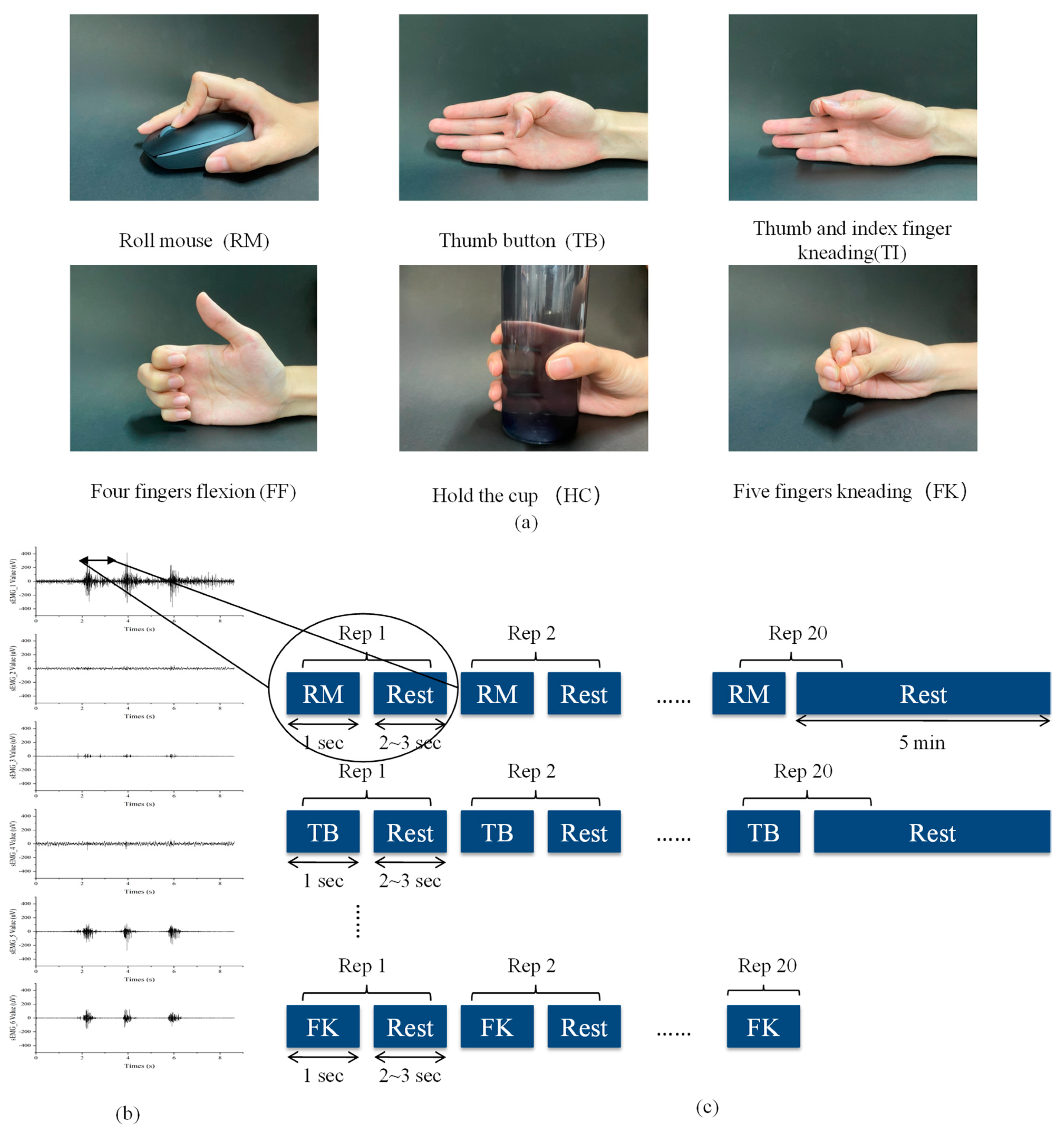

2.2. sEMG Signal Acquisition and Preprocessing

2.3. Statistical Analysis

2.4. Machine Learning for Gesture Discrimination

3. Results

3.1. Statistical Analysis of iEMG

3.2. sEMG Signal-Based Movement Classification

4. Discussion

4.1. Pattern Recognition and Sex Differences

4.2. Comparison of Classifiers

4.3. Algorithm Improvements

4.4. Cross Validation

4.5. Limitations and Future Work

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Acknowledgments

Conflicts of Interest

Appendix A

| (I) MT | (J) MT | Mean Difference (I–J) | Std. Error | Sig. |
|---|---|---|---|---|
| MT-1 | MT-2 | −345.451 * | 25.0213 | 0.000 |
| | MT-3 | −836.644 * | 24.4142 | 0.000 |
| | MT-4 | −739.289 * | 23.8941 | 0.000 |
| | MT-5 | −1758.426 * | 23.8241 | 0.000 |
| | MT-6 | −1788.877 * | 24.5963 | 0.000 |
| MT-2 | MT-1 | 345.451 * | 25.0213 | 0.000 |
| | MT-3 | −491.193 * | 25.4514 | 0.000 |
| | MT-4 | −393.838 * | 24.9529 | 0.000 |
| | MT-5 | −1412.975 * | 24.8859 | 0.000 |
| | MT-6 | −1443.426 * | 25.6261 | 0.000 |
| MT-3 | MT-1 | 836.644 * | 24.4142 | 0.000 |
| | MT-2 | 491.193 * | 25.4514 | 0.000 |
| | MT-4 | 97.355 * | 24.3441 | 0.001 |
| | MT-5 | −921.782 * | 24.2755 | 0.000 |
| | MT-6 | −952.233 * | 25.0337 | 0.000 |
| MT-4 | MT-1 | 739.289 * | 23.8941 | 0.000 |
| | MT-2 | 393.838 * | 24.9529 | 0.000 |
| | MT-3 | −97.355 * | 24.3441 | 0.001 |
| | MT-5 | −1019.137 * | 23.7523 | 0.000 |
| | MT-6 | −1049.588 * | 24.5267 | 0.000 |
| MT-5 | MT-1 | 1758.426 * | 23.8241 | 0.000 |
| | MT-2 | 1412.975 * | 24.8859 | 0.000 |
| | MT-3 | 921.782 * | 24.2755 | 0.000 |
| | MT-4 | 1019.137 * | 23.7523 | 0.000 |
| | MT-6 | −30.451 | 24.4586 | 0.815 |
| MT-6 | MT-1 | 1788.877 * | 24.5963 | 0.000 |
| | MT-2 | 1443.426 * | 25.6261 | 0.000 |
| | MT-3 | 952.233 * | 25.0337 | 0.000 |
| | MT-4 | 1049.588 * | 24.5267 | 0.000 |
| | MT-5 | 30.451 | 24.4586 | 0.815 |

| (I) MUSCLE | (J) MUSCLE | Mean Difference (I–J) | Std. Error | Sig. |
|---|---|---|---|---|
| MUSCLE-1 | MUSCLE-2 | 2557.925 * | 24.5518 | 0.000 |
| | MUSCLE-3 | 2649.010 * | 24.5518 | 0.000 |
| | MUSCLE-4 | 2483.068 * | 24.5518 | 0.000 |
| | MUSCLE-5 | 2101.673 * | 24.5518 | 0.000 |
| | MUSCLE-6 | 1748.471 * | 24.5518 | 0.000 |
| MUSCLE-2 | MUSCLE-1 | −2557.925 * | 24.5518 | 0.000 |
| | MUSCLE-3 | 91.085 * | 24.5518 | 0.003 |
| | MUSCLE-4 | −74.857 * | 24.5518 | 0.028 |
| | MUSCLE-5 | −456.252 * | 24.5518 | 0.000 |
| | MUSCLE-6 | −809.455 * | 24.5518 | 0.000 |
| MUSCLE-3 | MUSCLE-1 | −2649.010 * | 24.5518 | 0.000 |
| | MUSCLE-2 | −91.085 * | 24.5518 | 0.003 |
| | MUSCLE-4 | −165.942 * | 24.5518 | 0.000 |
| | MUSCLE-5 | −547.337 * | 24.5518 | 0.000 |
| | MUSCLE-6 | −900.540 * | 24.5518 | 0.000 |
| MUSCLE-4 | MUSCLE-1 | −2483.068 * | 24.5518 | 0.000 |
| | MUSCLE-2 | 74.857 * | 24.5518 | 0.028 |
| | MUSCLE-3 | 165.942 * | 24.5518 | 0.000 |
| | MUSCLE-5 | −381.395 * | 24.5518 | 0.000 |
| | MUSCLE-6 | −734.598 * | 24.5518 | 0.000 |
| MUSCLE-5 | MUSCLE-1 | −2101.673 * | 24.5518 | 0.000 |
| | MUSCLE-2 | 456.252 * | 24.5518 | 0.000 |
| | MUSCLE-3 | 547.337 * | 24.5518 | 0.000 |
| | MUSCLE-4 | 381.395 * | 24.5518 | 0.000 |
| | MUSCLE-6 | −353.202 * | 24.5518 | 0.000 |
| MUSCLE-6 | MUSCLE-1 | −1748.471 * | 24.5518 | 0.000 |
| | MUSCLE-2 | 809.455 * | 24.5518 | 0.000 |
| | MUSCLE-3 | 900.540 * | 24.5518 | 0.000 |
| | MUSCLE-4 | 734.598 * | 24.5518 | 0.000 |
| | MUSCLE-5 | 353.202 * | 24.5518 | 0.000 |


| Domain | Feature | Formulation |
|---|---|---|
| Time | Integral Electromyographic (iEMG) | |
| Time | Mean Absolute Value (MAV) | |
| Time | Input Contribution Rate (ICRi) | |
| Time | Variance (VAR) | |
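The formulation column of the feature table did not survive, so the sketch below uses the definitions that are commonly given for these time-domain features; they are assumptions and may differ in detail from the authors' formulas (for example in normalization, or in whether VAR is taken about the window mean). ICRi is left out because its definition cannot be recovered from the text. The function names and the sample window are illustrative only.

```python
from typing import Sequence


def iemg(window: Sequence[float]) -> float:
    """Integrated EMG: sum of the absolute sample amplitudes in the window."""
    return sum(abs(x) for x in window)


def mav(window: Sequence[float]) -> float:
    """Mean absolute value: iEMG divided by the number of samples."""
    return iemg(window) / len(window)


def var(window: Sequence[float]) -> float:
    """Sample variance about the window mean, with an N - 1 denominator (assumed)."""
    n = len(window)
    mean = sum(window) / n
    return sum((x - mean) ** 2 for x in window) / (n - 1)


# Illustrative four-sample window of one sEMG channel (made-up values).
window = [0.5, -1.0, 2.0, -1.5]
print(iemg(window), mav(window), var(window))
```

In practice each function would be applied to every analysis window of every channel, giving one feature value per channel and window.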

| Method | Dataset Name | Dataset Description |
|---|---|---|
| Differentiating-the-sex dataset | Female | iEMG values for 10 females |
| | Male | iEMG values for 10 males |
| | Half_F&M | iEMG values for 5 males and 5 females |
| Adding a sex labeling | Sum | iEMG values for 10 males and 10 females without sex label |
| | Sum_Label | iEMG values for 10 males and 10 females with sex label |
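The difference between the Sum and Sum_Label datasets can be illustrated with a small sketch. It assumes that the sex label enters the classifier as one extra numeric feature appended to the iEMG vector; the 0/1 coding, the sample layout, and the function names are hypothetical and are not taken from the source.

```python
from typing import List, Sequence, Tuple

# Hypothetical numeric coding of sex; the source does not specify one.
SEX_CODE = {"female": 0.0, "male": 1.0}

# One sample: (iEMG value per channel, subject sex, movement-type label).
Sample = Tuple[Sequence[float], str, int]


def build_sum(samples: Sequence[Sample]) -> Tuple[List[List[float]], List[int]]:
    """Pool both sexes and use the iEMG values alone as features."""
    features = [list(values) for values, _sex, _movement in samples]
    labels = [movement for _values, _sex, movement in samples]
    return features, labels


def build_sum_label(samples: Sequence[Sample]) -> Tuple[List[List[float]], List[int]]:
    """Pool both sexes and append the sex code as one extra feature."""
    features = [list(values) + [SEX_CODE[sex]] for values, sex, _movement in samples]
    labels = [movement for _values, _sex, movement in samples]
    return features, labels


# Two made-up samples with two channels each.
samples = [([120.0, 35.5], "female", 1), ([210.0, 48.0], "male", 2)]
X_sum, y_sum = build_sum(samples)
X_label, y_label = build_sum_label(samples)
print(X_sum[0], X_label[0])
```

Under this assumption the two datasets contain the same samples and movement labels; only the width of the feature vector changes.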

| Classifier | Dataset | Neighbors | Kernel | Coefficient | Neurons Layer 1 | Neurons Layer 2 | Neurons Layer 3 | Activation Function | Learning Rate (α) |
|---|---|---|---|---|---|---|---|---|---|
| KNN | Female | 2 | - | - | - | - | - | - | - |
| KNN | Male | 4 | - | - | - | - | - | - | - |
| KNN | Half_F&M | 3 | - | - | - | - | - | - | - |
| SVM | Female | - | Gaussian | 9520 | - | - | - | - | - |
| SVM | Male | - | Gaussian | 600 | - | - | - | - | - |
| SVM | Half_F&M | - | Gaussian | 9520 | - | - | - | - | - |
| ANN | Female | - | - | - | 128 | 32 | - | ReLU | 10⁻⁴ |
| ANN | Male | - | - | - | 128 | 64 | 32 | ReLU | 10⁻⁴ |
| ANN | Half_F&M | - | - | - | 128 | 64 | 32 | ReLU | 10⁻⁴ |

| Classifier | Dataset | Neighbors | Kernel | Coefficient | Neurons Layer 1 | Neurons Layer 2 | Neurons Layer 3 | Activation Function | Learning Rate (α) |
|---|---|---|---|---|---|---|---|---|---|
| KNN | Sum | 4 | - | - | - | - | - | - | - |
| KNN | Sum_Label | 3 | - | - | - | - | - | - | - |
| SVM | Sum | - | Gaussian | 17,000 | - | - | - | - | - |
| SVM | Sum_Label | - | Gaussian | 3000 | - | - | - | - | - |
| ANN | Sum | - | - | - | 128 | 64 | 32 | ReLU | 10⁻⁴ |
| ANN | Sum_Label | - | - | - | 128 | 64 | 32 | ReLU | 10⁻⁴ |

| Classifier | Dataset | Neighbors | Kernel | Coefficient | Neurons Layer 1 | Neurons Layer 2 | Neurons Layer 3 | Activation Function | Learning Rate (α) |
|---|---|---|---|---|---|---|---|---|---|
| KNN | Female | 2 | - | - | - | - | - | - | - |
| KNN | Male | 2 | - | - | - | - | - | - | - |
| KNN | Half_F&M | 5 | - | - | - | - | - | - | - |
| SVM | Female | - | Gaussian | 610 | - | - | - | - | - |
| SVM | Male | - | Gaussian | 3850 | - | - | - | - | - |
| SVM | Half_F&M | - | Gaussian | 17,000 | - | - | - | - | - |
| ANN | Female | - | - | - | 128 | 64 | 32 | ReLU | 10⁻⁴ |
| ANN | Male | - | - | - | 128 | 64 | 32 | ReLU | 10⁻⁴ |
| ANN | Half_F&M | - | - | - | 128 | 64 | 32 | ReLU | 10⁻⁴ |

| Classifier | Dataset | Neighbors | Kernel | Coefficient | Neurons Layer 1 | Neurons Layer 2 | Neurons Layer 3 | Activation Function | Learning Rate (α) |
|---|---|---|---|---|---|---|---|---|---|
| KNN | Sum | 6 | - | - | - | - | - | - | - |
| KNN | Sum_Label | 4 | - | - | - | - | - | - | - |
| SVM | Sum | - | Gaussian | 1000 | - | - | - | - | - |
| SVM | Sum_Label | - | Gaussian | 590 | - | - | - | - | - |
| ANN | Sum | - | - | - | 128 | 64 | 32 | ReLU | 10⁻⁴ |
| ANN | Sum_Label | - | - | - | 128 | 64 | 32 | ReLU | 10⁻⁴ |
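To make the "Neighbors" column of the hyperparameter tables concrete, the following is a minimal k-nearest-neighbors sketch in plain Python. It assumes Euclidean distance and an unweighted majority vote, neither of which is confirmed by the source, and the training points are made up. It is not the authors' implementation.

```python
import math
from collections import Counter
from typing import List, Sequence


def knn_predict(
    train_X: Sequence[Sequence[float]],
    train_y: Sequence[int],
    query: Sequence[float],
    k: int,
) -> int:
    """Return the majority movement label among the k nearest training samples."""
    # Rank training samples by Euclidean distance to the query (assumed metric).
    order = sorted(range(len(train_X)), key=lambda i: math.dist(train_X[i], query))
    # Count the labels of the k closest samples and take the most frequent one.
    votes = Counter(train_y[i] for i in order[:k])
    return votes.most_common(1)[0][0]


# Made-up two-feature training set with two movement classes.
train_X: List[List[float]] = [[0.0, 0.0], [0.0, 1.0], [5.0, 5.0], [6.0, 5.0]]
train_y: List[int] = [1, 1, 2, 2]

print(knn_predict(train_X, train_y, [0.2, 0.1], k=3))
print(knn_predict(train_X, train_y, [5.5, 5.0], k=3))
```

The value of `k` corresponds to the "Neighbors" entry for a given dataset. With an even `k`, such as the value 2 listed for the Female dataset, tied votes are possible, so a real implementation needs an explicit tie-breaking rule.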

| Source | Type III Sum of Squares | df | Mean Square | F | Sig. |
|---|---|---|---|---|---|
| Corrected Model | 35,886,260,844.381 ᵃ | 71 | 505,440,293.583 | 589.041 | 0.000 |
| Intercept | 28,887,146,915.346 | 1 | 28,887,146,915.346 | 33,665.138 | 0.000 |
| MT | 7,338,848,044.619 | 5 | 1,467,769,608.924 | 1710.542 | 0.000 |
| SEX | 354,125,990.425 | 1 | 354,125,990.425 | 412.699 | 0.000 |
| MUSCLE | 14,477,477,144.689 | 5 | 2,895,495,428.938 | 3374.416 | 0.000 |
| MT ∗ SEX | 56,599,280.705 | 5 | 11,319,856.141 | 13.192 | 0.000 |
| MT ∗ MUSCLE | 12,753,904,623.649 | 25 | 510,156,184.946 | 594.537 | 0.000 |
| SEX ∗ MUSCLE | 216,823,324.470 | 5 | 43,364,664.894 | 50.537 | 0.000 |
| MT ∗ SEX ∗ MUSCLE | 332,584,288.419 | 25 | 13,303,371.537 | 15.504 | 0.000 |
| Error | 14,595,822,050.544 | 17,010 | 858,073.019 | | |
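The Mean Square and F columns of the ANOVA table follow from the standard relations MS = SS / df and F = MS_effect / MS_error. The short check below recomputes them for the three main effects from the sums of squares and degrees of freedom listed in the table; the variable and function names are illustrative.

```python
# Error term taken from the last row of the table.
ss_error, df_error = 14_595_822_050.544, 17_010
ms_error = ss_error / df_error

# (Type III sum of squares, degrees of freedom) for the three main effects.
effects = {
    "MT": (7_338_848_044.619, 5),
    "SEX": (354_125_990.425, 1),
    "MUSCLE": (14_477_477_144.689, 5),
}


def f_ratio(ss: float, df: int) -> float:
    """F statistic: mean square of the effect divided by the error mean square."""
    return (ss / df) / ms_error


for name, (ss, df) in effects.items():
    print(f"{name}: MS = {ss / df:.3f}, F = {f_ratio(ss, df):.3f}")
```

The recomputed values agree with the tabulated F statistics (1710.542, 412.699, and 3374.416) to the reported precision.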

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhang, R.; Zhang, X.; He, D.; Wang, R.; Guo, Y. sEMG Signals Characterization and Identification of Hand Movements by Machine Learning Considering Sex Differences. Appl. Sci. 2022, 12, 2962. https://doi.org/10.3390/app12062962
