5.1. Sleep Dataset

We used the Sleep-EDFx dataset in Physionet [

32] to evaluate the performance of the proposed method. The dataset included PSG recordings at a sampling rate of 100 Hz and each record consists of EEG (from Fpz-Cz and Pz-Oz electrode locations), EOG (horizontal and vertical), chin electromyography (EMG), and event markers. We only used the Fpz-Cz EEG and the horizontal EOG channels in our research. The hypnograms (sleep stages for each 30 s epoch) were manually labeled by somnologists, followed by the Rechtschaffen and Kales rule [

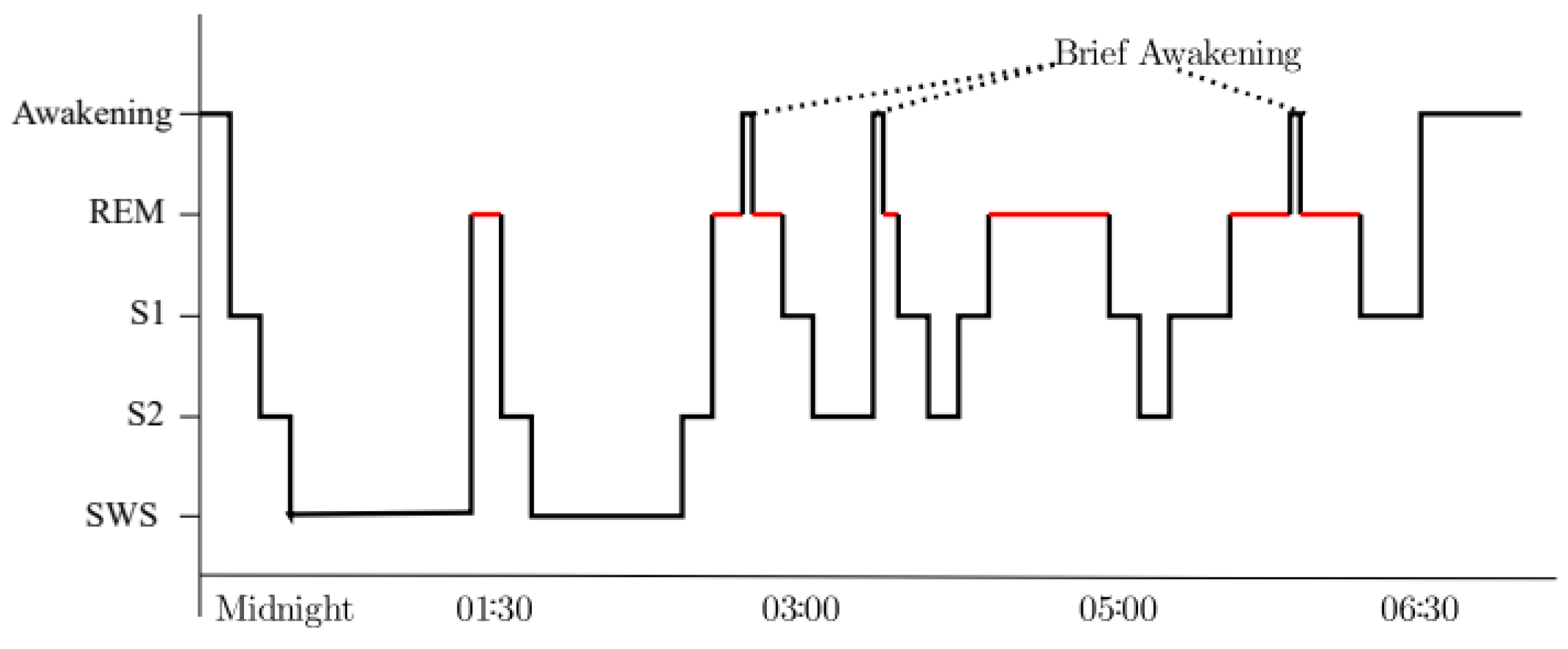

33]. Each stage was mapped to each sleep stage. The sleep stages included Wake, R(REM), S1, S2, S3, S4, MOVEMENT (movement time), and UNKNOWN (not scored). According to the American Academy of Sleep Medicine (AASM) standard, the S3 and S4 stages were merged into a single stage: SWS (Slow-Wave Sleep) [

5]. Wake, MOVEMENT, and UNKNOWN were excluded.

The distribution of sleep stages in the Sleep-EDF database is imbalanced: W and S2 epochs are far more numerous than those of the other stages. Deep learning classifiers trained on such data tend to favor the majority classes. To reduce this imbalance, we randomly sampled a subset of the data so that the class sizes were closer to balanced. In total, 16,000 epochs were sampled: 2400 (15%) for S1, 7200 (45%) for S2, 3520 (22%) for SWS, and 2880 (18%) for R. This experimental dataset was split randomly into training, validation, and testing sets in proportions of 0.6, 0.2, and 0.2, giving 9600, 3200, and 3200 epochs, respectively. The three sets do not overlap, so no epoch is shared among the training, validation, and testing sets.
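A minimal NumPy sketch of the sampling and splitting described above follows, assuming the epoch labels are available as an array of stage names. The per-class counts are the ones reported in the text; the function names, the random seed, and the synthetic label array in the usage lines are hypothetical. Like the text, the sketch splits at the epoch level, so it guarantees disjoint sets of epochs but does not by itself keep subjects apart.

```python
import numpy as np

# Per-class sample counts reported in the text (16,000 epochs in total).
TARGET_COUNTS = {"S1": 2400, "S2": 7200, "SWS": 3520, "R": 2880}


def sample_epochs(labels, target_counts, seed=0):
    """Randomly draw a fixed number of epoch indices per class, without replacement."""
    rng = np.random.default_rng(seed)
    labels = np.asarray(labels)
    picked = []
    for stage, count in target_counts.items():
        candidates = np.flatnonzero(labels == stage)
        picked.append(rng.choice(candidates, size=count, replace=False))
    return np.concatenate(picked)


def split_indices(indices, fractions=(0.6, 0.2, 0.2), seed=0):
    """Shuffle indices and cut them into disjoint train/validation/test subsets."""
    rng = np.random.default_rng(seed)
    shuffled = rng.permutation(indices)
    n_train = int(round(fractions[0] * len(shuffled)))
    n_val = int(round(fractions[1] * len(shuffled)))
    return (shuffled[:n_train],
            shuffled[n_train:n_train + n_val],
            shuffled[n_train + n_val:])


# Hypothetical label array, only large enough to sample from (not Sleep-EDFx statistics).
labels = np.repeat(["S1", "S2", "SWS", "R"], [3000, 20000, 4000, 5000])
train, val, test = split_indices(sample_epochs(labels, TARGET_COUNTS))
print(len(train), len(val), len(test))  # → 9600 3200 3200
```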

5.2. Performance Measures

Various metrics can be used to measure the performance of classifiers. In the binary case, two important metrics are precision and recall. Precision is the number of true positives divided by the sum of true positives and false positives. Recall is the number of true positives divided by the sum of true positives and false negatives. Accuracy, the percentage of overall agreement between the predictions and the correct answers, is also frequently used.
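Written in terms of the counts of true positives (TP), false positives (FP), false negatives (FN), and true negatives (TN), these standard binary definitions, together with the F1 score (the harmonic mean of precision and recall), are:

$$
\mathrm{Precision} = \frac{TP}{TP + FP}, \qquad
\mathrm{Recall} = \frac{TP}{TP + FN}, \qquad
\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}
$$

$$
F_1 = \frac{2 \cdot \mathrm{Precision} \cdot \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}
$$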

In multi-class problems, accuracy can give a misleading picture of performance when the classes are imbalanced. For example, if S2 accounts for 45% of the testing set, a classifier that labels every epoch as S2 achieves an accuracy of 45% without learning anything about the other stages. In the multi-class case, precision and recall can be computed for each class, and the F1 score combines these two measures as their harmonic mean per class. Because it is computed per class, the F1 score is less affected by class imbalance than accuracy. To measure the overall performance, we used the accuracy and the weighted F1 score, which is the average of the per-class F1 scores weighted by the number of samples in each class. The closer the accuracy, precision, recall, F1 score, and weighted F1 score are to 1, the better the classifier performs.

Using the EarlyStopping callback of TensorFlow [31], training was stopped when the validation accuracy had not improved for six consecutive epochs. We then measured the performance on the testing data using the Scikit-learn package [34].
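A minimal, dependency-free sketch of the per-class and weighted F1 computation follows. It is meant to mirror what scikit-learn's `f1_score(y_true, y_pred, average="weighted")` reports, assuming zero-division cases are scored as 0; the function names and the toy labels are hypothetical. The usage lines reproduce the all-S2 baseline from the text: with 45% S2 in a hypothetical test set, accuracy is 0.45, but the weighted F1 drops to about 0.28.

```python
from collections import Counter

CLASSES = ["S1", "S2", "SWS", "R"]


def per_class_scores(y_true, y_pred, classes):
    """Return {class: (precision, recall, f1)}, each class treated one-vs-rest."""
    scores = {}
    for c in classes:
        pairs = list(zip(y_true, y_pred))
        tp = sum(t == c and p == c for t, p in pairs)
        fp = sum(t != c and p == c for t, p in pairs)
        fn = sum(t == c and p != c for t, p in pairs)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        scores[c] = (precision, recall, f1)
    return scores


def weighted_f1(y_true, y_pred, classes):
    """Average the per-class F1 scores, weighting each class by its support."""
    support = Counter(y_true)
    scores = per_class_scores(y_true, y_pred, classes)
    total = sum(support[c] for c in classes)
    return sum(support[c] * scores[c][2] for c in classes) / total


def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


# Hypothetical test set with the class proportions used in this work.
y_true = ["S1"] * 15 + ["S2"] * 45 + ["SWS"] * 22 + ["R"] * 18
y_pred = ["S2"] * 100  # trivial classifier: always predict S2
print(round(accuracy(y_true, y_pred), 2),
      round(weighted_f1(y_true, y_pred, CLASSES), 2))  # → 0.45 0.28
```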

All experiments were conducted on a server with an Intel i9-9920X CPU @ 3.50 GHz, 128 GB of RAM, four NVIDIA GeForce RTX 2080 GPUs, and CUDA 10.1. One training epoch took approximately 20 s.
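The stopping rule described earlier in this section is a patience-based check. In Keras it would typically be configured as `tf.keras.callbacks.EarlyStopping(monitor="val_accuracy", mode="max", patience=6)`; the exact arguments used in this work are not stated, including whether the best weights were restored. The framework-free sketch below replays that rule over a per-epoch validation-accuracy history to show when training would stop; the function name and the history values are hypothetical.

```python
def early_stopping_epoch(val_accuracy, patience=6):
    """Return (stop_epoch, best_epoch), both 1-based.

    Training stops once `patience` consecutive epochs pass without the
    validation accuracy exceeding the best value seen so far.
    """
    best = float("-inf")
    best_epoch = 0
    wait = 0  # epochs since the last improvement
    for epoch, value in enumerate(val_accuracy, start=1):
        if value > best:
            best, best_epoch, wait = value, epoch, 0
        else:
            wait += 1
            if wait >= patience:
                return epoch, best_epoch
    # The history ended before the patience ran out.
    return len(val_accuracy), best_epoch


# Hypothetical validation-accuracy history (not data from this study).
history = [0.70, 0.75, 0.80, 0.79, 0.80, 0.78, 0.79, 0.80, 0.77, 0.80, 0.81]
print(early_stopping_epoch(history))  # → (9, 3)
```

Note that an epoch matching the best value (0.80 at epoch 5) does not reset the counter, because improvement here means strictly exceeding the best value.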

5.3. Experiments

We performed a set of experiments with different signal channels and signal domains. Our experiments showed that the EEG and EOG signals alone were sufficient, so we used only these two signals. The EEG signal must be included because it is essential for sleep scoring under the AASM guidelines [5].

The experiments consisted of the following:

Exp1: Classification using EEG and EOG images in the time domain

Exp2: Classification using EEG and EOG images in the frequency domain

Exp3: Classification using EEG and EOG images in the time domain and frequency domain

In Exp1 and Exp2, the two images (EEG and EOG) were concatenated vertically, and the result was resized to 432 × 288 and used as the input to the CNN. In Exp3, the concatenated images from Exp1 and Exp2 were further concatenated horizontally, and the result was also resized to 432 × 288.
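The input construction described above can be sketched with NumPy as follows. This is a minimal illustration under stated assumptions: 432 × 288 is taken to be width × height in pixels, the time- and frequency-domain images share the same height so they can be placed side by side, and a simple nearest-neighbour resize stands in for whatever resizing routine was actually used. All function names are hypothetical.

```python
import numpy as np

# Assumed interpretation of "432 by 288": width x height in pixels.
TARGET_W, TARGET_H = 432, 288


def resize_nearest(img, width, height):
    """Nearest-neighbour resize of an (H, W, C) array (stand-in for a library resize)."""
    h, w = img.shape[:2]
    rows = np.arange(height) * h // height
    cols = np.arange(width) * w // width
    return img[rows[:, None], cols[None, :]]


def build_input(eeg_img, eog_img):
    """Exp1/Exp2: stack the EEG image above the EOG image, then resize."""
    stacked = np.concatenate([eeg_img, eog_img], axis=0)
    return resize_nearest(stacked, TARGET_W, TARGET_H)


def build_exp3_input(time_pair, freq_pair):
    """Exp3: put the stacked time- and frequency-domain images side by side, then resize."""
    time_img = np.concatenate(time_pair, axis=0)
    freq_img = np.concatenate(freq_pair, axis=0)
    side_by_side = np.concatenate([time_img, freq_img], axis=1)
    return resize_nearest(side_by_side, TARGET_W, TARGET_H)


# Hypothetical blank RGB images standing in for rendered EEG/EOG plots.
eeg = np.zeros((100, 200, 3), dtype=np.uint8)
eog = np.ones((100, 200, 3), dtype=np.uint8)
print(build_input(eeg, eog).shape)                     # → (288, 432, 3)
print(build_exp3_input((eeg, eog), (eeg, eog)).shape)  # → (288, 432, 3)
```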

Exp1 evaluated the accuracy of sleep-stage classification using time-domain EEG and EOG images as input to the CNN. During training, the accuracy on the training and validation sets was tracked for each epoch.

Figure 3 shows the training and validation accuracy as a function of the number of epochs. After 10 epochs of training, the CNN reached 96% accuracy on the training set and 82% on the validation set.

Table 2 shows the performance evaluation results of Exp1 for the testing data after training. The F1 score values were 59% for S1, 90% for S2, 100% for SWS, and 74% for R. As for the overall performance of Exp1, the weighted F1 score was 84% and the accuracy was 85%.

Figure 4 shows the confusion matrix for Exp1 on the testing data. The row labels are the true classes of the testing data, and the column labels are the classes predicted by the CNN. The results indicate that the CNN was weak in detecting S1: only 47.9% of the true S1 epochs were classified correctly, whereas 36.7% were misclassified as S2 and 15.3% as R.
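Percentages such as those quoted above are row-normalized confusion-matrix entries (rows are the true classes). The sketch below computes them and, for each true class, the class it is most often mistaken for. The count matrix in the usage lines is hypothetical and is not taken from Figure 4; the function names are likewise illustrative.

```python
import numpy as np

STAGES = ["S1", "S2", "SWS", "R"]


def row_normalize(cm):
    """Convert a confusion matrix of counts (rows = true class) into row percentages."""
    cm = np.asarray(cm, dtype=float)
    return 100.0 * cm / cm.sum(axis=1, keepdims=True)


def main_confusion(cm, stages=STAGES):
    """For each true class, return (most frequent wrong prediction, its percentage)."""
    pct = row_normalize(cm)
    np.fill_diagonal(pct, -1.0)  # ignore correct predictions
    return {stages[i]: (stages[j], float(pct[i, j]))
            for i, j in enumerate(pct.argmax(axis=1))}


# Hypothetical counts (rows = true S1, S2, SWS, R; columns = predicted), not from Figure 4.
cm = [[48, 37, 0, 15],
      [5, 90, 3, 2],
      [0, 2, 98, 0],
      [10, 5, 0, 85]]
print(main_confusion(cm)["S1"])  # → ('S2', 37.0)
```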

Exp2 evaluated the classification accuracy using frequency-domain EEG and EOG images.

Figure 5 shows the training and validation accuracy as a function of the number of epochs. After 14 epochs of training, the CNN reached 90% accuracy on the training set and 89% on the validation set.

Table 3 shows the performance evaluation results of Exp2. The F1 score values were 60% for S1, 90% for S2, 97% for SWS, and 84% for R. For the overall performance of Exp2, the weighted F1 score and the accuracy were both 86%. The performance of Exp2 is therefore similar to that of Exp1.

Figure 6 shows the confusion matrix for Exp2. The classification accuracy for S1 remained low: 48.3% of the true S1 epochs were classified correctly, whereas 34.7% were misclassified as S2 and 17.0% as R.

Exp3 evaluated the classification accuracy using EEG and EOG images in both the time and frequency domains. The CNN input in Exp3 was formed by concatenating the images used in Exp1 and Exp2 and resizing the result.

Figure 7 shows the training and validation accuracy as a function of the number of epochs. After 14 epochs, the CNN reached 98% accuracy on the training set and 94% on the validation set.

Table 4 shows the performance evaluation results of Exp3. The F1 score values were 84% for S1, 100% for S2, 100% for SWS, and 84% for R. For the overall performance of Exp3, the weighted F1 score was 94% and the accuracy was also 94%.

Figure 8 shows the confusion matrix for Exp3. The CNN classified most epochs correctly; the remaining errors are mainly confusions between S1 and R in both directions. S1 and R are difficult for the CNN to distinguish because they have similar characteristics; for example, both typically show low-amplitude, mixed-frequency EEG activity.

Table 5 compares the results of the three experiments, listing the average and range of the accuracy and the F1 score for each experiment. The highest accuracy was obtained when EEG and EOG images in both the time and frequency domains were used. Although adding more channels can generally improve results, our experiments confirmed that using only the EEG and EOG channels achieves results similar to those obtained with more channels.

We showed that it is possible to classify sleep stages using the EEG and EOG channels and a CNN applied to biosignal images, without any separate feature extraction process. After simple preprocessing, the CNN was trained without requiring expert knowledge for feature design. This is an advantage because the CNN learns the features directly from the EEG and EOG images, which are the same kind of visual representation that somnologists are familiar with when classifying sleep stages.

An analysis of the classification errors shows that they mostly occurred between stages that are contiguous in the sleep cycle. For example, S1 was most often confused with R, and vice versa. S1 is also known as the stage with the lowest inter-somnologist agreement, and it can be misclassified as R, which can contain patterns similar to those of S1.