A Comparative Study of Deep Learning Models for Dental Segmentation in Panoramic Radiograph

Abstract

:1. Introduction

2. Related Works

3. Materials and Methods

3.1. Dataset

3.2. Data Augmentation

3.3. Segmentation Models

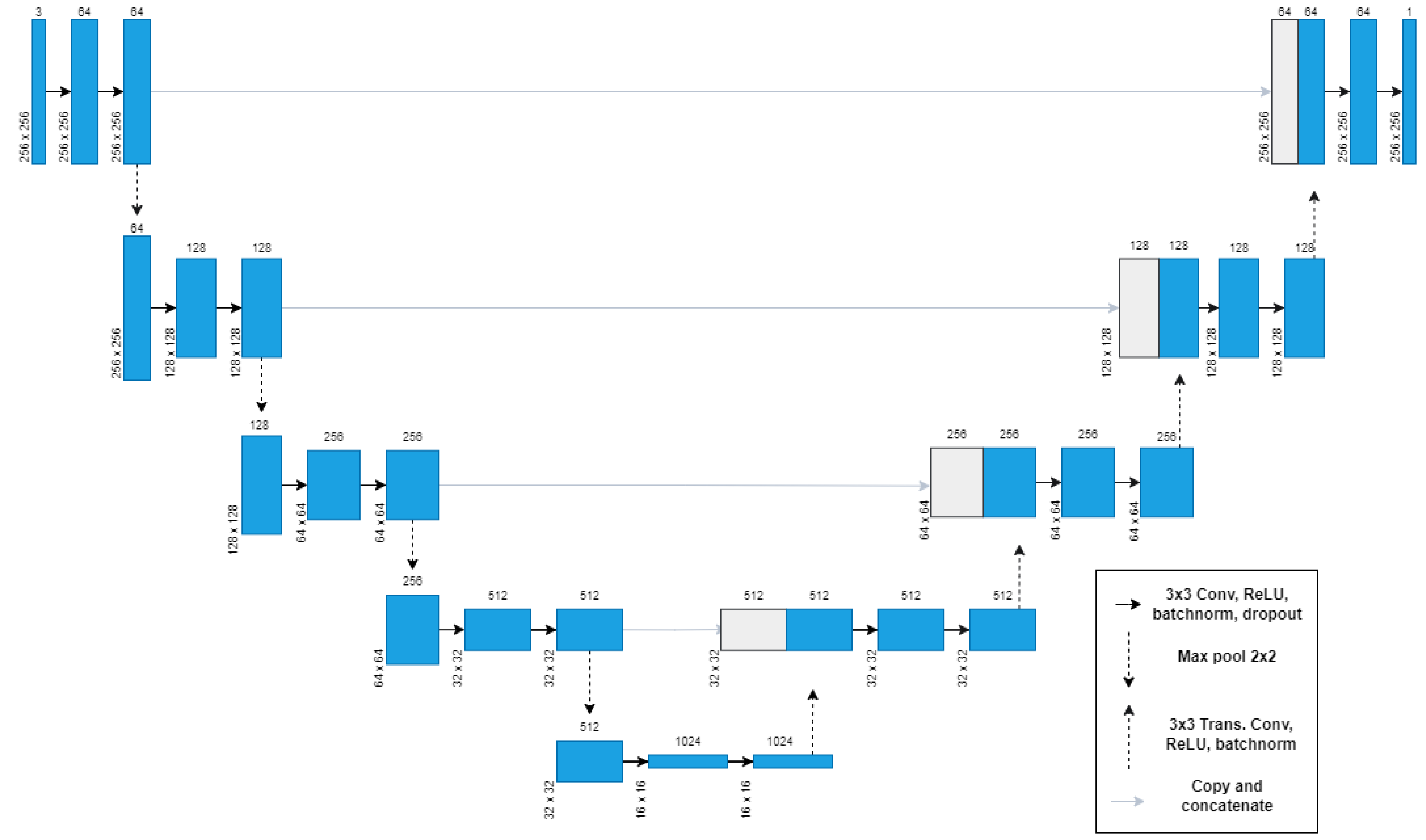

3.3.1. U-Net

3.3.2. DCU-Net

3.3.3. DoubleU-NET

3.3.4. Nano-NET

3.4. Loss Function

3.5. Evaluation Criterion

- TP: are the amount of pixels correctly predicted from the tooth area;

- TN: are the amount of pixels correctly predicted from the background area;

- FP: are the amount of incorrectly predicted pixels of the tooth area;

- FN: are the amount of pixels incorrectly predicted from the background area.

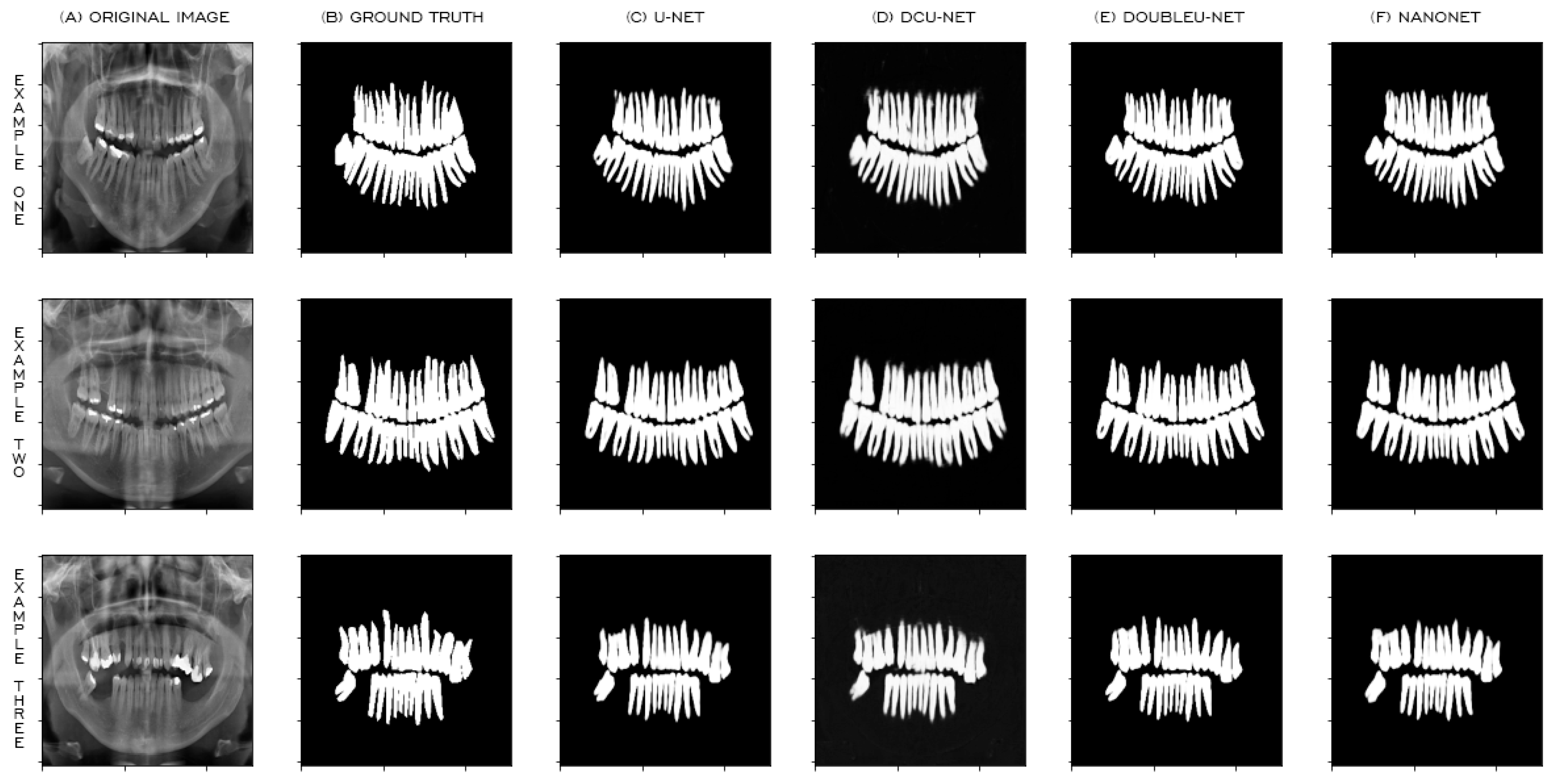

4. Experimental Results and Discussion

4.1. Comparison Setting

4.2. Results Using Data Augmentation

4.3. Results without Using Data Augmentation

4.4. Comparison with the State of the Art

5. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Wirtz, A.; Mirashi, S.G.; Wesarg, S. Automatic teeth segmentation in panoramic X-ray images using a coupled shape model in combination with a neural network. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Granada, Spain, 16–20 September 2018; pp. 712–719. [Google Scholar]

- Kim, J.; Lee, H.S.; Song, I.S.; Jung, K.H. DeNTNet: Deep Neural Transfer Network for the detection of periodontal bone loss using panoramic dental radiographs. Sci. Rep. 2019, 9, 17615. [Google Scholar] [CrossRef]

- Leite, A.F.; Van Gerven, A.; Willems, H.; Beznik, T.; Lahoud, P.; Gaêta-Araujo, H.; Vranckx, M.; Jacobs, R. Artificial intelligence-driven novel tool for tooth detection and segmentation on panoramic radiographs. Clin. Oral Investig. 2021, 25, 2257–2267. [Google Scholar] [CrossRef]

- Silva, G.; Oliveira, L.; Pithon, M. Automatic segmenting teeth in X-ray images: Trends, a novel data set, benchmarking and future perspectives. Expert Syst. Appl. 2018, 107, 15–31. [Google Scholar] [CrossRef] [Green Version]

- Barboza, E.B.; Marana, A.N.; Oliveira, D.T. Semiautomatic dental recognition using a graph-based segmentation algorithm and teeth shapes features. In Proceedings of the 2012 5th IAPR International Conference on Biometrics (ICB), New Delhi, India, 29 March–1 April 2012; pp. 348–353. [Google Scholar]

- Akkus, Z.; Galimzianova, A.; Hoogi, A.; Rubin, D.L.; Erickson, B.J. Deep learning for brain MRI segmentation: State of the art and future directions. J. Digit. Imaging 2017, 30, 449–459. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Song, Q.; Zhao, L.; Luo, X.; Dou, X. Using deep learning for classification of lung nodules on computed tomography images. J. Healthc. Eng. 2017, 2017, 8314740. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Wang, H.; Zhou, Z.; Li, Y.; Chen, Z.; Lu, P.; Wang, W.; Liu, W.; Yu, L. Comparison of machine learning methods for classifying mediastinal lymph node metastasis of non-small cell lung cancer from 18 F-FDG PET/CT images. EJNMMI Res. 2017, 7, 11. [Google Scholar] [CrossRef]

- Becker, A.S.; Marcon, M.; Ghafoor, S.; Wurnig, M.C.; Frauenfelder, T.; Boss, A. Deep learning in mammography: Diagnostic accuracy of a multipurpose image analysis software in the detection of breast cancer. Investig. Radiol. 2017, 52, 434–440. [Google Scholar] [CrossRef] [PubMed]

- Kamnitsas, K.; Ledig, C.; Newcombe, V.F.; Simpson, J.P.; Kane, A.D.; Menon, D.K.; Rueckert, D.; Glocker, B. Efficient multi-scale 3D CNN with fully connected CRF for accurate brain lesion segmentation. Med. Image Anal. 2017, 36, 61–78. [Google Scholar] [CrossRef] [PubMed]

- Kubota, T.; Jerebko, A.K.; Dewan, M.; Salganicoff, M.; Krishnan, A. Segmentation of pulmonary nodules of various densities with morphological approaches and convexity models. Med. Image Anal. 2011, 15, 133–154. [Google Scholar] [CrossRef] [PubMed]

- Lee, J.H.; Kim, D.H.; Jeong, S.N.; Choi, S.H. Detection and diagnosis of dental caries using a deep learning-based convolutional neural network algorithm. J. Dent. 2018, 77, 106–111. [Google Scholar] [CrossRef] [PubMed]

- Ekert, T.; Krois, J.; Meinhold, L.; Elhennawy, K.; Emara, R.; Golla, T.; Schwendicke, F. Deep learning for the radiographic detection of apical lesions. J. Endod. 2019, 45, 917–922. [Google Scholar] [CrossRef] [PubMed]

- Jaskari, J.; Sahlsten, J.; Järnstedt, J.; Mehtonen, H.; Karhu, K.; Sundqvist, O.; Hietanen, A.; Varjonen, V.; Mattila, V.; Kaski, K. Deep learning method for mandibular canal segmentation in dental cone beam computed tomography volumes. Sci. Rep. 2020, 10, 1–8. [Google Scholar] [CrossRef] [Green Version]

- Zhao, Y.; Li, P.; Gao, C.; Liu, Y.; Chen, Q.; Yang, F.; Meng, D. TSASNet: Tooth segmentation on dental panoramic X-ray images by Two-Stage Attention Segmentation Network. Knowl.-Based Syst. 2020, 206, 106338. [Google Scholar] [CrossRef]

- Lee, J.H.; Han, S.S.; Kim, Y.H.; Lee, C.; Kim, I. Application of a fully deep convolutional neural network to the automation of tooth segmentation on panoramic radiographs. Oral Surgery Oral Med. Oral Pathol. Oral Radiol. 2020, 129, 635–642. [Google Scholar] [CrossRef]

- De Angelis, F.; Pranno, N.; Franchina, A.; Di Carlo, S.; Brauner, E.; Ferri, A.; Pellegrino, G.; Grecchi, E.; Goker, F.; Stefanelli, L.V. Artificial Intelligence: A New Diagnostic Software in Dentistry: A Preliminary Performance Diagnostic Study. Int. J. Environ. Res. Public Health 2022, 19, 1728. [Google Scholar] [CrossRef]

- Koch, T.L.; Perslev, M.; Igel, C.; Brandt, S.S. Accurate segmentation of dental panoramic radiographs with U-Nets. In Proceedings of the 2019 IEEE 16th International Symposium on Biomedical Imaging (ISBI 2019), Venice, Italy, 8–11 April 2019; pp. 15–19. [Google Scholar]

- Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015; pp. 234–241. [Google Scholar]

- Lou, A.; Guan, S.; Loew, M.H. DC-UNet: Rethinking the U-Net architecture with dual channel efficient CNN for medical image segmentation. In Medical Imaging 2021: Image Processing; International Society for Optics and Photonics: Bellingham, WA, USA, 2021; Volume 11596, p. 115962T. [Google Scholar]

- Jha, D.; Riegler, M.A.; Johansen, D.; Halvorsen, P.; Johansen, H.D. Doubleu-net: A deep convolutional neural network for medical image segmentation. In Proceedings of the 2020 IEEE 33rd International Symposium on Computer-Based Medical Systems (CBMS), Rochester, MN, USA, 28–30 July 2020; pp. 558–564. [Google Scholar]

- Jha, D.; Tomar, N.K.; Ali, S.; Riegler, M.A.; Johansen, H.D.; Johansen, D.; de Lange, T.; Halvorsen, P. NanoNet: Real-Time Polyp Segmentation in Video Capsule Endoscopy and Colonoscopy. arXiv 2021, arXiv:2104.11138. [Google Scholar]

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. Mobilenetv2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Hangzhou, China, 26–28 August 2018; pp. 4510–4520. [Google Scholar]

- Yu, J.; Jiang, Y.; Wang, Z.; Cao, Z.; Huang, T. Unitbox: An advanced object detection network. In Proceedings of the 24th ACM International Conference on Multimedia, Amsterdam, The Netherlands, 15–19 October 2016; pp. 516–520. [Google Scholar]

- Milletari, F.; Navab, N.; Ahmadi, S.A. V-net: Fully convolutional neural networks for volumetric medical image segmentation. In Proceedings of the 2016 Fourth International Conference on 3D Vision (3DV), Stanford, CA, USA, 25–28 October 2016; pp. 565–571. [Google Scholar]

| Category | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |

|---|---|---|---|---|---|---|---|---|---|---|

| 32 teeth | Yes | Yes | Yes | Yes | +32 | - | No | No | No | No |

| Filling | Yes | Yes | No | No | - | - | Yes | Yes | No | No |

| Braces | Yes | No | Yes | No | - | - | Yes | No | Yes | No |

| Dental implant | No | No | No | No | No | Yes | No | No | No | No |

| Model | Batch_Size with Data Augmentation | Batch_Size without Data Augmentation | Epochs |

|---|---|---|---|

| U-Net | 32 | 4 | 30 |

| DCU-Net | 10 | 4 | 30 |

| DoubleU-Net | 16 | 4 | 30 |

| Nano-Net | 32 | 4 | 50 |

| Model | Dice (%) | Accuracy (%) | Precision (%) | Recall (%) | Parameter |

|---|---|---|---|---|---|

| U-Net | 91.033 | 95.966 | 95.342 | 87.122 | 31,031,745 |

| DCU-Net | 91.616 | 96.208 | 96.107 | 87.547 | 10,069,928 |

| DoubleU-NET | 92.886 | 96.591 | 93.095 | 92.705 | 29,264,930 |

| Nano-Net | 89.855 | 95.576 | 96.326 | 84.222 | 235,425 |

| Model | Dice (%) | Accuracy (%) | Precision (%) | Recall (%) | Parameter |

|---|---|---|---|---|---|

| U-Net | 91.681 | 96.191 | 94.898 | 88.700 | 31,031,745 |

| DCU-Net | 91.451 | 96.123 | 95.390 | 87.846 | 10,069,928 |

| DoubleU-NET | 92.695 | 96.552 | 94.184 | 91.283 | 29,264,930 |

| Nano-Net | 91.739 | 96.173 | 93.783 | 89.815 | 235,425 |

| Model | Dice (%) | Accuracy (%) | Precision (%) | Recall (%) | Parameter |

|---|---|---|---|---|---|

| TSASNET [15] | 92.72 | 96.94 | 94.97 | 93.77 | 78,270,000 |

| U-NET (Ensemble) [18] | 93.58 | 95.17 | 93.69 | 93.91 | - |

| DoubleU-NET w/data augmentation | 92.886 | 96.591 | 93.095 | 92.705 | 29,264,930 |

| Nano-Net w/data augmentation | 89.855 | 95.576 | 96.326 | 84.222 | 235,425 |

| DoubleU-NET wo/data augmentation | 92.695 | 96.552 | 94.184 | 91.283 | 29,264,930 |

| Nano-Net wo/data augmentation | 91.739 | 96.173 | 93.783 | 89.815 | 235,425 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

da Silva Rocha, É.; Endo, P.T. A Comparative Study of Deep Learning Models for Dental Segmentation in Panoramic Radiograph. Appl. Sci. 2022, 12, 3103. https://doi.org/10.3390/app12063103

da Silva Rocha É, Endo PT. A Comparative Study of Deep Learning Models for Dental Segmentation in Panoramic Radiograph. Applied Sciences. 2022; 12(6):3103. https://doi.org/10.3390/app12063103

Chicago/Turabian Styleda Silva Rocha, Élisson, and Patricia Takako Endo. 2022. "A Comparative Study of Deep Learning Models for Dental Segmentation in Panoramic Radiograph" Applied Sciences 12, no. 6: 3103. https://doi.org/10.3390/app12063103

APA Styleda Silva Rocha, É., & Endo, P. T. (2022). A Comparative Study of Deep Learning Models for Dental Segmentation in Panoramic Radiograph. Applied Sciences, 12(6), 3103. https://doi.org/10.3390/app12063103