1. Introduction

Honoring the legacy of our ancestors, inherited in the form of physical artifacts, is a duty both to their memory and to our successors. Cultural Heritage encompasses this legacy in terms of artifacts, such as sculptures, jewelry, tools, mosaics, paintings, and manuscripts, as well as museums, monumental buildings, and archaeological remains. While visiting traditional museums has long been a favorite cultural activity, we have been witnessing a shift towards incorporating technological advancements in museum settings [1]. The use of digital technologies in cultural heritage-related issues has significantly enhanced the overall experience [2]. Digitization in the field of cultural heritage works towards ensuring the immortality of cultural monuments and collections, which are highly vulnerable in times of war or natural disasters [3].

The COVID-19 pandemic brought important changes to all aspects of life. People faced situations that were previously inconceivable. Special measures, such as social distancing, made us rethink and redesign processes that previously involved close encounters between people. The cultural life of most people had to take a step back, and many cultural events moved fully online [4,5]. Social interactions were avoided at all costs for extended periods of time, and people are only slowly getting accustomed to being around others again. In this context, we tackle the issue of digitally enhanced museums, endowed with Virtual Reality (VR) applications and various media that allow rich user interaction with the exhibited artifacts.

Virtual Reality is a mature field of research, with many applications in education and in Cultural Heritage [6]. As a technology, it has recently been in the worldwide spotlight, with Mark Zuckerberg’s launch of the Meta platform (Mark Zuckerberg, “Facebook’s VR future: New sensors on Quest Pro, fitness and a metaverse for work”, https://www.cnet.com/tech/gaming/features/mark-zuckerberg-on-facebook-vr-future-new-sensors-on-quest-pro-fitness-and-a-metaverse-for-work/ (accessed on 24 February 2022)). Special hardware, such as the Oculus Quest 2 VR headset (“Zuckerberg wants Facebook to become online ‘metaverse’”, https://www.bbc.com/news/technology-57942909 (accessed on 24 February 2022)), has been designed to help users achieve full immersion in the virtual environment. A major disadvantage of this kind of device becomes apparent when considering its use in a public setting, such as a museum: given current concerns surrounding the pandemic, the use of Head Mounted Displays (HMD) would require special disinfection policies, and users may be reluctant to use them at all, despite the care taken to ensure proper disinfection [7]. We therefore focused our approach on touchless interaction with the virtual scene, by means of the Leap Motion device (Leap Motion Controller, https://www.ultraleap.com/product/leap-motion-controller/ (accessed on 22 March 2022)) and common hand movements.

Museum visitors’ interaction with modern digital technologies, such as gesture-based Virtual or Augmented Reality (AR), is often regarded with skepticism [3,8,9,10,11,12]. This kind of experience is still unfamiliar to most regular users. Given the differences in age and technological background among museum visitors, their willingness to use such technological solutions, and thus their acceptance of them, is questionable.

Our work lies in the field of enhancing cultural heritage-related activities in museums by means of VR software solutions, while adjusting the experience to current limitations on social interaction. We wish to offer users the possibility to explore (or experience) the history contained in the artifacts displayed in the museum beyond their present physical appearance, offering an unforgettable experience mediated by modern interactive technologies. We aim to deliver a sense of touching artifacts that are usually encased in glass boxes, or that may be too fragile or too large to be exhibited in a museum setting. Our solution uses natural hand gesture-based interaction to reduce physical contact between people to a minimum, thus following pandemic recommendations of social distancing and limited contact.

In this study, we present our experience with developing a VR system that uses natural interaction with the artifacts in a museum by hand gestures. We explore several research questions (RQ), in order to assess the degree of usability and usefulness of the presented approach:

Research Question 1 (RQ1): How is natural interaction by means of hand gestures perceived by the users?

Research Question 2 (RQ2): Do visitors consider that the gesture-based VR system contributes to the attractiveness of the museum?

Research Question 3 (RQ3): Are visitors satisfied with using the VR system implemented in the museum?

Research Question 4 (RQ4): Does the interaction with the system spark user interest towards VR/AR applications?

To investigate the opinions of users with respect to the research questions, we conducted a user study with 137 participants among the museum visitors, during a period of 6 months.

The paper is organized as follows. First, we provide an overview of recent work in the field of VR applications and cultural heritage. The next section is devoted to a detailed description of the design and functionality of the VR systems implemented in the two museums. We place special emphasis on explaining the navigation metaphor. We then assess the usability and usefulness of the developed systems, as they result from our study based on a quantitative research methodology. We evaluate the perceived ease of use and usefulness of the developed VR systems, and challenge the skepticism that surrounds the acceptance of new technologies among certain age groups.

2. Related Work

The digitization of cultural content and virtual exhibitions are briefly reviewed in [12], where the authors compared approaches for web and mobile solutions, with remarks concerning financial aspects. A comparison of various VR/AR systems concluded that users favor systems which provide greater interaction and immersion into the virtual recreated environment. A suite of low-cost or free platforms that can be used for modeling and reconstruction of public works is presented in [13], while gaming-specific tools are used to help reconstruct 3D replicas of some historical buildings in [14].

It has already been recognized that cultural heritage exhibitions can benefit from recent advances in low-cost optical tracking sensors, such as Leap Motion or Microsoft Kinect (Kinect for Windows, https://developer.microsoft.com/en-us/windows/kinect/ (accessed on 22 March 2022)), which enable the development of interactive digital installations based on natural gestures. The main advantage of interactive installations that integrate optical tracking sensors is that users are no longer required to use traditional input devices (mice, keyboards, controllers, and touchscreens), which require physical contact and, during the current COVID-19 pandemic, rigorous cleaning after each user. For HMD systems that require a specific headset paired with controllers, the constraints associated with their use within museum exhibitions are even more restrictive, as cleaning head-mounted display equipment is an even more delicate process.

With regard to assessing the impact of cultural heritage actions, the authors of [15] proposed a conceptual framework for the assessment of heritage impact, studying its implications and consequences in order to support sustainable heritage capitalization. Innovations in museum services by means of wireless technology may serve as a means to achieve cultural sustainability, while the use of digital technologies also enhances the perceived quality of the museum [16]. Cultural sustainability goals, such as ensuring various methods to preserve and protect cultural heritage, were embraced by researchers involved in museum-related studies, who investigated sustainability in a museum setting [17].

During the last decades, museums have started to implement digital systems intended to disseminate their cultural content to a wider audience; to this end, they implemented various web-based applications that integrate 3D models. The models are either added to a web database or, in some cases, embedded within a virtual environment. The main drawback of these solutions is the lack of natural gesture interaction: even if the applications can be designed to be compatible with natural gesture sensors, most potential visitors do not have access to 3D depth camera sensors, such as Leap Motion [18] or Microsoft Kinect [19].

One of the main advantages of having virtual replicas of cultural heritage assets is the possibility to integrate 3D reconstruction techniques to digitally restore various movable or immovable cultural heritage assets, regardless of their size and material. Movable assets, such as paintings, coins, jewelry, ceramics, maps, clothing, and historical artifacts, can all be reconstructed in 3D using 3D scanning, reverse engineering, and computer-aided design techniques. The same principle can be applied to large immovable assets, such as buildings and monuments; the only difference is the greater amount of work involved, given the scale of the asset and the level of detail of the final output model.

One example of a large-scale digitization process of immovable cultural heritage is focused on the Kłodzko Fortress [20]; the authors used point clouds acquired by 3D terrestrial laser scanning to define the virtual reality environment that presents the fortress. Since museums have started to digitize their inventory to enable web-based dissemination of their cultural assets, the next step involved the development of innovative and interactive museum exhibitions that would encourage some of the online visitors to travel and see the museum’s cultural heritage assets in person. This increased the demand for modern digital museum exhibitions that offer innovative interaction using natural gestures or various immersive VR/AR systems, thereby creating an enhanced cultural exhibit experience.

Vosinakis et al. developed a cultural heritage application that allows users to take the role of a sculptor and create Cycladic sculptures [21]. Other researchers combined Natural Interaction (NI) with AR to define interactive digital installations intended for cultural heritage. The proposed system integrates a low-cost HMD used for visualization and a Leap Motion sensor that tracks the user’s hands and allows the user to manipulate 3D models of various archaeological findings [22].

While Leap Motion is focused on hand tracking, sensors such as Kinect can be deployed within museum exhibitions to track both hand and body gestures. Manghisi et al. developed a Natural User Interface that uses the Kinect V2 sensor to enable various mid-air gestures for navigating virtual tours in cultural heritage expositions, as well as for selecting 3D elements and zooming in and out within the scene [23].

A methodology for 3D scanning of Intangible Cultural Heritage that integrates Kinect sensors was developed by Skublewska-Paszkowska et al. to precisely analyze the fingers of real dancers, with the aim of preserving intangible Cultural Heritage, using the Lazgi Dance as a case study [24]. This methodology is based on the same natural gesture sensors that enable real-time interaction with cultural heritage assets, but in this case, they are used to record real people dancing and to capture the precise movement of both their bodies and, most importantly, their hands.

Digital storytelling by means of VR and AR technologies is the focus of [25]. Both a Kinect sensor and a Leap Motion sensor were used to detect gestures performed by users. The Kinect sensor required users to maintain a static position to enable gesture detection, and it was found to be “too demanding for the users”, while the Leap Motion allowed freer movements while still detecting gestures precisely. The educational potential of museums is enhanced by the use of VR, which allows gestural interaction with CH assets [26], using a Leap Motion sensor coupled with an Oculus Rift. The ease of use of the Leap Motion made it the technology of choice in this solution, a choice reinforced by the users’ comments. Natural interaction in immersive VR applications deployed in a museum setting was tackled in [27], in a comparison of the Leap Motion and Kinect sensors. The authors emphasized the appropriateness of using Leap Motion in an uncontrolled environment (such as a museum), as opposed to using the Kinect in a highly supervised and controlled environment.

A complex technological system, encompassing a VR headset and a 3D tracking system, was developed for a cultural heritage setting in the Ara Pacis museum in Rome [28]. The VR and AR aspects of the exhibition were reported to be among the main points of interest for users with minimal prior experience with VR, together with the exhibition content and the general organization of the museum. A hybrid VR-AR application for CH (cultural heritage) was investigated in [29], where the authors also expressed their confidence in the ubiquity of VR and AR in the near future. Nevertheless, the VR experience can only complement an actual visit to a CH site; it cannot substitute for the experience of the “real visit” [30].

Interaction with the VR environment by means of a mobile device was explored in many applications [31,32]. These studies take advantage of users’ familiarity with touchscreen mobile devices and use them as an interaction tool in various VR application contexts: an “Immersive Virtual Museum” [31], or an application for decorating interior spaces with virtual objects [32]. Although the use of AR in mobile technology gives the user independence in viewing artifacts, the desktop solution results may have been influenced by the participants’ greater experience with computer interfaces [33].

The introduction of devices for capturing gestures and performing actions in AR in general, and in museum exhibitions in particular, raises concerns about how hand gestures are interpreted to trigger the intended action, given the limitations of a multi-user environment [27]. There are also studies on the use of mobile devices for visitors’ interaction with exhibition elements, whether immersive or non-immersive [31], which report limitations regarding the museum interaction available to visitors or the devices chosen for it. Other means of interaction in a VR application were the focus of [34], which excluded hand gestures altogether in favor of hands-free interaction. That study reviewed VR applications which allow immersion of the user mainly by means of voice and eye gaze. Visual, audio, and olfactory stimuli were added in the context of an AR application for CH in [35], in an attempt to test the extent to which the multisensory approach impacts the user experience.

In Romania, researchers have recently taken on the mission of digitizing artifacts and historical sites. Various approaches have been used in some of the country’s most historically valuable areas. Neamtu et al. [36] contributed a 3D reconstruction of the Sarmizegetusa Regia site, the cradle of the Dacian kingdom and a UNESCO World Heritage site. Bran Castle, along with six fortified churches, was the subject of a modeling and reconstruction study presented in [37]. An attempt to obtain a 3D model of natural monuments, such as the Sphinx in the Bucegi Mountains, was presented in [38].

The Dobrogea region, situated in the south-east of Romania, on the shore of the Black Sea, has been a cradle for humanity since the Paleolithic. It hosted several Greek colonies starting with the 7th and 6th centuries BC, such as Histria, Tomis (on whose remains the city of Constanta lies today), and Callatis (where the town of Mangalia stands today). Vestiges abound in Dobrogea, which is home today to several large museums. The TOMIS project (TOMIS project, http://tomis.cerva.ro/ (accessed on 26 March 2022)) focused on the virtual reconstruction of the ancient Greek colony of Tomis, by means of 3D replicas of architectural artifacts and a real-time, interactive, and self-organizing virtual society that actively immerses visitors in everyday colony life [39].

The work detailed in this paper follows the path of taking advantage of digital technologies in museums, aiming to reap the benefits of intertwining the inherent value of museum heritage with the advantages brought by the digitization process.

3. Materials and Methods

Our study delves into the details of the design, implementation, and actual setup of a VR system that uses gesture-based NI with virtual artifacts in a museum setting. In the following, we document each phase of our work. The users of the system may interact with virtual replicas of museum artifacts, as well as navigate through 3D reconstructions of historical buildings and their surroundings. The solution is deployed in the Callatis Museum in Mangalia, Romania, and was evaluated in a user-based study over a period of 6 months involving 137 participants among the roughly 250 visitors guided by a total of 5 staff members working in shifts.

3.1. The Development Insights

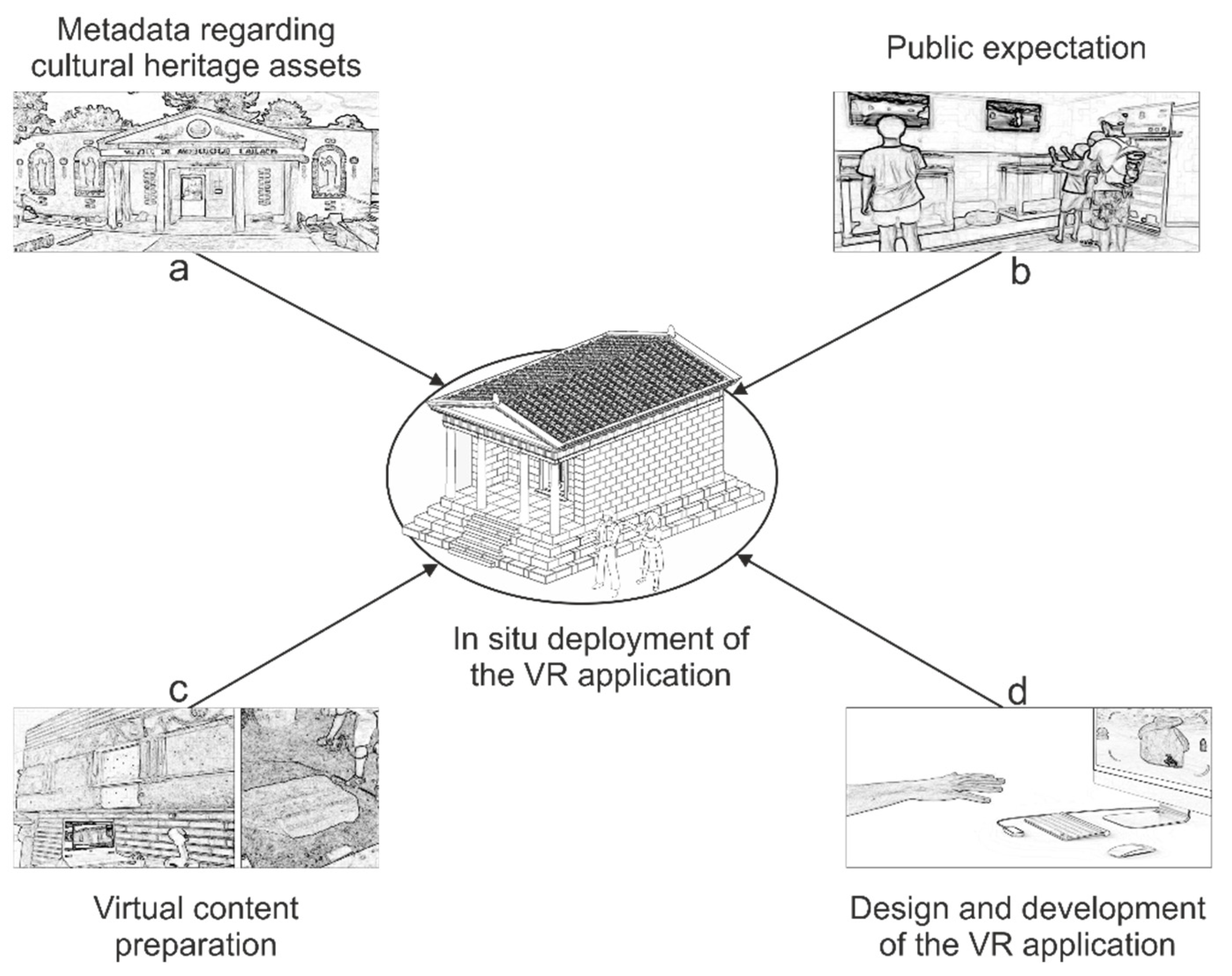

The steps undertaken during the development of the VR gesture-based system are shown in Figure 1. The system has its roots in the public’s expectations for such a system, collected in fruitful discussions with museum staff (Figure 1a) and regular museum visitors (Figure 1b). Following the requirements analysis, we started to prepare the virtual content. During this phase, we scanned the movable cultural assets, refined and textured the 3D models, and obtained 3D replicas of architectural artifacts of the ancient temple (Figure 1c).

The next development phase was concerned with the design and development of the VR application. We took a software engineering approach, first designing the application, then implementing the visualization module, the animation module, and the interaction module (Figure 1d). The modules were then integrated, and the system went through validation tests.

In the final phase of our project, the system was deployed in situ at the Callatis Museum of History and Archeology in Mangalia, Romania, where we first installed the hardware and then made the system operational, calibrating and testing it in real museum conditions. The museum staff was trained to use it properly, so that they could in turn explain the functionality of the system to museum visitors. For the testing phase, we wrote user manuals that document the proper usage of the system, and we provided videos depicting several scenarios for the interaction of users with the 3D replicas by means of hand gestures, as well as for navigation inside the reconstructed temple. The user manuals are readily available to both museum staff and visitors, and the videos run in a loop on displays on the walls of the exhibition.

3.1.1. Virtual Content Preparation

The 3D digitization process made use of a handheld structured-light scanner (Creaform Go!Scan 50) (Creaform, https://www.creaform3d.com/ (accessed on 28 March 2022)) to acquire both the geometry and the texture of the artifacts and architectural elements from the Callatis Museum. The 3D scanning was done directly where the cultural heritage assets are positioned within the museum exhibition, which is one of the advantages of a handheld structured-light scanner.

Figure 2 presents the 3D scanning of both artifacts and architectural elements. In total, 22 artifacts and 6 architectural elements were digitized. For the large architectural elements that are positioned with their back to the wall, only the front side was 3D scanned, and the back was filled in to obtain closed bodies as 3D virtual models.

As for the constructive elements of the temple, all of them were modeled using 3ds Max 2019 (Autodesk 3DS Max, https://www.autodesk.com/campaigns/3ds-max (accessed on 22 March 2022)), on the basis of the archaeological data provided by our partner, the Callatis Museum (Table 1). The scanned replicas were also filtered using 3ds Max in order to be converted to the FBX 2019 binary format (FBX format, https://www.autodesk.com/products/fbx/overview (accessed on 22 March 2022)), including media information (textures, shadows, etc.). In the exhibition, we put together a hypothetical setup validated by the museum staff.

3.1.2. Interactive VR-Based Visualization Solution

All the 3D replicas of the artifacts (except for some of the basic architectural elements, such as the architrave, the Doric frieze, and the ceiling geison fragment) were further augmented with semantic and historical content before being used in the interactive visualization solution. To this end, existing supplementary information on the place of discovery, the description, and the dimensions was stored in JSON format (excerpt below) so that it can be easily used by other applications (ECMA-404, the JSON data interchange, https://www.json.org/json-en.html (accessed on 22 March 2022)).

![Applsci 12 04452 i003]()

The software application itself was implemented using the Godot game engine, version 3.4.2 (Godot Engine, https://godotengine.org/ (accessed on 22 March 2022)), and the Godot Leap Motion driver 1.1 from the Godot Asset Library (Godot Leapmotion Asset, https://godotengine.org/asset-library/asset/215 (accessed on 31 March 2022)), together with the LeapMotion driver version 4.0.0+52173 (Leap Motion Controller, https://www.ultraleap.com/product/leap-motion-controller/ (accessed on 22 March 2022)), on Windows 10 (Windows 10, https://www.microsoft.com/ro-ro/software-download/windows10 (accessed on 22 March 2022)). To optimize deployment in the partner museum, we chose to pack the 3D models separately (one pack for each artifact, and one pack for the temple) and to keep the textual descriptions of all virtual artifacts outside their corresponding packs, open to further updates (as text files that are easy for the museum personnel to read and edit).
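As an illustration of keeping descriptive text outside the model packs, the following minimal Python sketch parses one artifact description stored as JSON. It is not the code of the deployed application: the field names (`name`, `place_of_discovery`, `description`, `dimensions`) and the sample record are hypothetical, chosen only to mirror the kinds of information mentioned above.

```python
import json
from dataclasses import dataclass


@dataclass
class ArtifactInfo:
    """Descriptive metadata kept outside the 3D model pack (field names are hypothetical)."""
    name: str
    place_of_discovery: str
    description: str
    dimensions: str


def parse_artifact_info(json_text: str) -> ArtifactInfo:
    """Parse one artifact description; missing fields fall back to an empty string."""
    raw = json.loads(json_text)
    return ArtifactInfo(
        name=raw.get("name", ""),
        place_of_discovery=raw.get("place_of_discovery", ""),
        description=raw.get("description", ""),
        dimensions=raw.get("dimensions", ""),
    )


# Hypothetical record, not taken from the museum data.
SAMPLE = """
{
  "name": "Example artifact",
  "place_of_discovery": "Example site",
  "description": "Placeholder text editable by museum staff.",
  "dimensions": "10 x 5 x 5 cm"
}
"""

info = parse_artifact_info(SAMPLE)
print(info.name, "|", info.dimensions)
```

Falling back to empty strings means that a partially filled description file still loads, which suits files that are edited by hand.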

The VR software system is referred to, in the following, as the In Situ Visualization System (ISVS). It is composed of two components, ISVS-A and ISVS-B, which are similar in implementation but differ in their purpose within the interactive exhibition. Both components are presented in detail in Section 3.2.

3.1.3. Application Deployment in Real Environment

In order to install the systems and put them into operation in situ, at the museum, the team had to adapt the systems from the laboratory conditions to the real working conditions. Thus, it was necessary to analyze the following aspects:

Effective arrangement of the equipment in the exhibition, with an impact on the technical elements of connection: network, audio, and video signals;

Design and location of the Leap Motion clamping and support systems;

Configuring the computer systems and adapting their layout so that the communication signals between the Leap Motion and the application are not disturbed;

Designing the necessary elements for the interaction of the visitor with the In Situ Visualization Systems ISVS-A and ISVS-B within the exhibition.

After establishing all of the above, the VR system was installed in the exhibition in stages. A pilot version of the ISVS-A equipment was installed first, with all elements in their complete configuration. For this, we also designed and built a support for the Leap Motion device, adapted to the showcases of the exhibition, so that it would not clash with the museum environment. After installing the ISVS-A component, we installed and configured the ISVS-B system, for which it was necessary to design and build a support device for the ergonomic placement of the Leap Motion.

The custom supports for the Leap Motion sensor in the two proposed systems, ISVS-A and ISVS-B, were manufactured using Fused Deposition Modeling (FDM) on a 3D printer. Our main purpose was to enclose the Leap Motion sensor and position the custom support at an optimal height and orientation with respect to the existing glass display cases and pillar supports of the museum exhibition. The 3D-printed parts, paired with the aluminum profile, facilitate good cable management. The main components of each custom support system are illustrated in Figure 3, along with the positioning and orientation of the Leap Motion sensor.

These configurations were validated during the in situ implementation and testing phase through direct interaction with museum visitors. On this occasion, we received immediate feedback on the usability of the system, mostly positive, along with suggestions for improvements. This confirmed that the proposed solution is suitable for the museum-going public and is an important step forward in presenting the museum components in a 3D format.

The system was installed in full configuration after completing these initial steps and validating them in situ.

The museum staff has limited experience in operating computing technology; hence, the daily operation of the equipment, both hardware and software, was a challenge. We addressed this issue by automating the power-on and power-off procedures of the computers, as follows:

The computer’s start time is set in the PC’s BIOS or UEFI firmware;

Start-up commands launch the applications automatically;

At the end of working hours, the computers shut down at a closing time configured through the task scheduler, which sets up regular shutdowns.

Through these mechanisms, we compensate for the museum staff’s limited experience with operating the computer systems and the installed applications.
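The scheduled shutdown step could be set up, for instance, with the Windows `schtasks` command-line tool. The Python sketch below only builds such a command and prints it; the task name and the closing time are hypothetical, and the text above does not state which exact scheduler settings were used in the museum.

```python
from typing import List


def build_shutdown_task(task_name: str, closing_time: str) -> List[str]:
    """Return a Windows `schtasks` command that shuts the PC down daily at closing_time (HH:MM)."""
    hours, minutes = closing_time.split(":")
    if not (hours.isdigit() and minutes.isdigit() and int(hours) < 24 and int(minutes) < 60):
        raise ValueError(f"closing_time must be HH:MM, got {closing_time!r}")
    return [
        "schtasks", "/Create",
        "/TN", task_name,                               # task name (hypothetical)
        "/SC", "DAILY",                                 # run every day
        "/ST", f"{int(hours):02d}:{int(minutes):02d}",  # start time, 24-hour clock
        "/TR", "shutdown /s /f /t 60",                  # forced shutdown after a 60 s warning
        "/F",                                           # overwrite an existing task of the same name
    ]


# Assumed closing time; the actual museum schedule is not given here.
cmd = build_shutdown_task("MuseumShutdown", "18:00")
print(" ".join(cmd))
```

On the exhibition PC, the resulting command would be run once from an administrator prompt, or passed to `subprocess.run(cmd, check=True)`.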

3.2. Technical Aspects

Our solution for interactive visualization dedicated to cultural heritage setups is suitable for two museum exhibit configurations. The first is adapted to visualize a large collection of virtual artifacts, organized in sets of 5 artifacts per visualization session (denoted by ISVS-A). The second is dedicated to the visualization of an entire edifice, e.g., a temple (denoted by ISVS-B). While the software architectures of both modules are identical, the two systems require slightly different hardware setups due to the complexity of the 3D virtual environments, as shown in Table 2 and Table 3.

Both the ISVS-A (Figure 4) and ISVS-B (Figure 5) interactive visualization systems consist of a public viewing screen (Figure 4a and Figure 5a), a Leap Motion interaction sensor (Figure 4b and Figure 5b), which detects the position, orientation, and posture of the palm of the user’s right hand, and a processing unit in the form of a laptop (Figure 4c) or a desktop PC (Figure 5c), placed in the immediate vicinity of the sensor and the viewing screen.

A software component, called ISVS-A and ISVS-B, respectively, takes the information detected by the Leap Motion interaction sensor (Figure 4b and Figure 5b), analyzes it on the computing unit (Figure 4c and Figure 5c), and displays a coherent visual response to the user’s gestures on the viewing screen (Figure 4a and Figure 5a).

3.3. Follow My Hand—Touchless Interaction Metaphor

We faced multiple challenges in choosing the interaction metaphor. First, COVID-19 restrictions imposed a distance-based solution, that is, touchless interaction and a physical distance of at least 1.5 m between persons within a small group of tourists and their guide. A second important constraint arises from the use of a single hand, the right one, whose gestures must carry different semantics, such as “turn left”, “turn right”, “look up”, “look down”, “rotate to the right”, “rotate to the left”, “move forward”, and “move backwards”, which apply to different virtual elements inside the 3D virtual environments, e.g., virtual artifacts or cameras.

In the following, we give some insights into how user gestures performed in the real world are translated into avatar navigation inside the 3D virtual environment, visualization of virtual artifacts, and interaction with the selected one.

3.3.1. Interaction Metaphor

Let us start with the simplest hand gesture in the ISVS-A system, which is used to visualize a set of virtual artifacts: presenting the right hand in the sensor area and waving it horizontally (Figure 6a). The entire scene then rotates in the direction indicated by the user’s hand (Figure 7a(a1,a2)). If the user moves the open hand to the left, the entire scene rotates to the left; if the user moves the open hand to the right, the entire scene rotates to the right. If the user withdraws the open hand from the sensor area, the application continues to present the artifacts in rotation until another user intervenes or it stops according to the museum schedule. The next day, at opening time, the application automatically starts presenting another set of artifacts, arbitrarily selected from the museum collection (see a short demonstration of the ISVS-A system: https://youtu.be/x0CqLyYd8TQ (accessed on 20 April 2022)).
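The daily choice of a new artifact set can be sketched in a few lines of Python. This is only an illustration under assumptions: the identifiers are placeholders, and seeding the random generator with the calendar date (so that the set stays the same throughout one day) is our choice for the sketch, not a documented detail of ISVS-A.

```python
import random
from datetime import date
from typing import List, Sequence


def daily_selection(artifact_ids: Sequence[str], day: date, set_size: int = 5) -> List[str]:
    """Pick `set_size` distinct artifacts; seeding with the date keeps the set stable for that day."""
    rng = random.Random(day.toordinal())
    return rng.sample(list(artifact_ids), set_size)


# 22 placeholder identifiers, matching the number of digitized artifacts.
collection = [f"artifact_{i:02d}" for i in range(1, 23)]
today_set = daily_selection(collection, date(2022, 4, 20))
print(today_set)
```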

However, once the user closes the hand (Figure 6b), the application changes its state from “artifacts presentation” to “artifact interaction” mode (Figure 8). The rotation of the entire scene stops, and the central (so-called selected) artifact starts rotating according to the direction in which the closed hand is waved or rotated (Figure 7b(b1,b2)). If the user moves the closed hand to the left, the selected artifact smoothly rotates to the left; if the user moves the closed hand to the right, the selected artifact rotates to the right. For as long as the user keeps the hand closed, an explanatory text scrolls across the upper part of the display, from right to left. This behavior is described as a finite state machine in Figure 8.
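The two-state behavior described above can be approximated by the following Python sketch. It is a simplified reading of the text rather than a transcription of Figure 8: the sign convention for the horizontal hand displacement `dx` and the return to presentation mode as soon as the hand opens or leaves the sensor area are assumptions.

```python
from enum import Enum


class Mode(Enum):
    PRESENTATION = "artifacts presentation"
    INTERACTION = "artifact interaction"


class Hand(Enum):
    ABSENT = 0
    OPEN = 1
    CLOSED = 2


class IsvsASketch:
    """Minimal two-state machine driven by hand posture and horizontal motion."""

    def __init__(self):
        self.mode = Mode.PRESENTATION

    def update(self, hand: Hand, dx: float):
        """Return (rotating target, direction, show_text) for one sensor reading.

        dx < 0 means the hand moved left, dx > 0 right (assumed convention).
        """
        # A closed hand selects the central artifact; anything else shows the whole scene.
        self.mode = Mode.INTERACTION if hand is Hand.CLOSED else Mode.PRESENTATION
        target = "selected artifact" if self.mode is Mode.INTERACTION else "scene"

        if hand is Hand.ABSENT:
            direction = "auto"      # unattended presentation keeps rotating
        elif dx < 0:
            direction = "left"
        elif dx > 0:
            direction = "right"
        else:
            direction = "none"

        # The explanatory text scrolls only while the hand stays closed.
        show_text = self.mode is Mode.INTERACTION
        return target, direction, show_text


fsm = IsvsASketch()
print(fsm.update(Hand.OPEN, -1.0))
print(fsm.update(Hand.CLOSED, 2.0))
```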

3.3.2. Navigation Metaphor

For ISVS-B, we implemented a navigation metaphor that offers two completely different visualization modes: one that corresponds to a fully autonomous “360° virtual tour” of the edifice (i.e., the default mode of ISVS-B), and another that corresponds to a “first person” perspective, fully controlled by the museum visitor (i.e., a controlled mode that requires the presence of the user’s hand in the sensor area) (see a short demonstration of the ISVS-B system: https://youtu.be/hT2ChW7w4x8 (accessed on 20 April 2022)).

In the default mode, the ISVS-B system presents a 360° virtual tour of a hypothetical temple located in the city of Mangalia, Romania (the former Greek colony of Callatis), situated on the Black Sea coast (formerly Pontus Euxinus, the original Latin name). The default camera is continuously focused on the temple and follows a closed path that circles the temple several times at different altitudes. Depending on the camera’s altitude, different building details of the temple are revealed to the user. In this way, the user obtains not only a general view of the edifice, but also a detailed interior view that shows each constructive element in its place and order (Figure 9).

Once the user decides to take control of the camera, all that he/she has to do is place the open right hand in the sensor area for at least 5 s. The system then infers that a user is interested in interacting with it and changes the mode and the camera to “first person” (Figure 10). The user starts controlling the first-person camera only after the perspective has changed to “first person” and the user has closed the right hand. If the user withdraws the open right hand from the sensor area for more than 5 s, the system regains control of the camera and returns to the “360° virtual tour” mode.
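The 5 s dwell rule that switches between the two modes can be sketched as a small timer-based controller. This is a minimal sketch under stated assumptions: the class `ModeSwitcher`, its `update` signature, and the idea of feeding it timestamped samples are hypothetical; only the two mode names and the 5 s thresholds come from the text.

```python
from enum import Enum

DWELL_SECONDS = 5.0  # dwell time stated in the text


class Mode(Enum):
    VIRTUAL_TOUR = "360° virtual tour"
    FIRST_PERSON = "first person"


class ModeSwitcher:
    """Hypothetical controller for switching between the two ISVS-B modes."""

    def __init__(self) -> None:
        self.mode = Mode.VIRTUAL_TOUR
        self._streak_start = None  # time at which the switching condition began to hold

    def update(self, t: float, hand_present: bool, hand_closed: bool = False) -> bool:
        """Feed one sensor sample taken at time t (in seconds).

        Returns True when the user is actually steering the first-person camera.
        """
        if self.mode is Mode.VIRTUAL_TOUR:
            condition = hand_present and not hand_closed  # open hand held in the sensor area
        else:
            condition = not hand_present                  # hand withdrawn from the sensor area

        if not condition:
            self._streak_start = None
        elif self._streak_start is None:
            self._streak_start = t

        if self._streak_start is not None:
            held = t - self._streak_start
            if self.mode is Mode.VIRTUAL_TOUR and held >= DWELL_SECONDS:
                self.mode, self._streak_start = Mode.FIRST_PERSON, None
            elif self.mode is Mode.FIRST_PERSON and held > DWELL_SECONDS:
                self.mode, self._streak_start = Mode.VIRTUAL_TOUR, None

        # The camera is user-controlled only in first person with a closed hand.
        return self.mode is Mode.FIRST_PERSON and hand_present and hand_closed


switcher = ModeSwitcher()
for t in (0.0, 2.5, 5.0):
    switcher.update(t, hand_present=True)
print(switcher.mode.value)
```

Resetting the timer whenever the condition is interrupted means that a brief loss of tracking restarts the 5 s count; a real deployment might tolerate short dropouts, which the text does not specify.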

Once the user takes control of the first-person camera, he/she may move forward or backward and turn the camera left, right, up, or down. All these actions are schematically illustrated in Figure 11.

The state machine describing the internal states of ISVS-B according to the location and gesture of the user’s closed right hand is shown in Figure 12. While the system is in the “first person” state (Figure 12), the user may navigate freely: moving the closed right hand along the Oz axis moves the camera forward or backward, turning the hand left or right makes the camera look left or right, and orienting the hand upwards or downwards makes the camera look up or down. When one of these right-hand movements stops, the first-person camera reacts accordingly by passing into the state that matches the current posture and position of the right hand.
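One way to picture the mapping from hand posture to camera state is the sketch below. It is an illustration only: the `HandPose` fields, the sign conventions, the `DEAD_ZONE` threshold, and the rule of selecting the dominant component are assumptions introduced for the example and are not taken from the system.

```python
from dataclasses import dataclass

DEAD_ZONE = 0.1  # hypothetical threshold below which hand motion is ignored


@dataclass
class HandPose:
    """Assumed normalized readings for the closed right hand."""
    z: float      # displacement along the Oz axis; positive = forward (assumed)
    yaw: float    # left/right orientation; positive = right (assumed)
    pitch: float  # up/down orientation; positive = up (assumed)


def camera_state(pose: HandPose, hand_closed: bool) -> str:
    """Return the first-person camera state that matches the hand pose."""
    if not hand_closed:
        return "idle"
    # Pick the dominant component so that exactly one state is active at a time.
    magnitudes = {"move": abs(pose.z), "turn": abs(pose.yaw), "look": abs(pose.pitch)}
    axis = max(magnitudes, key=magnitudes.get)
    if magnitudes[axis] < DEAD_ZONE:
        return "idle"  # the movement has stopped
    if axis == "move":
        return "move forward" if pose.z > 0 else "move backward"
    if axis == "turn":
        return "turn right" if pose.yaw > 0 else "turn left"
    return "look up" if pose.pitch > 0 else "look down"


print(camera_state(HandPose(z=0.5, yaw=0.0, pitch=0.0), hand_closed=True))
```

A dead zone of this kind is a common way to keep small tremors of the hand from moving the camera; whether the deployed system uses one is not stated in the text.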

Last but not least, the free navigation mode enters a special internal state whenever a construction element of the temple, such as a column, the head of a column, the architrave, the tympanum, or the temple frieze, appears in the camera’s field of view. Whether or not the architectural element is placed in the reconstructed edifice, the ISVS-B system highlights it with a bounding box and displays useful information about it to the user (Figure 13).
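The text does not say how the system decides that an element is in the camera’s field of view. A simple possibility, shown here purely as an assumption, is an angular test: an element is considered visible when the angle between the viewing direction and the direction to the element is at most half the field-of-view angle. The function names, the 60° default, and the example coordinates are hypothetical.

```python
import math


def in_field_of_view(camera_pos, camera_dir, target_pos, fov_degrees=60.0):
    """True if target_pos lies within a cone of fov_degrees around camera_dir."""
    to_target = [t - c for t, c in zip(target_pos, camera_pos)]
    dist = math.sqrt(sum(v * v for v in to_target))
    norm = math.sqrt(sum(v * v for v in camera_dir))
    if dist == 0 or norm == 0:
        return False  # degenerate case: no direction to compare
    cos_angle = sum(a * b for a, b in zip(to_target, camera_dir)) / (dist * norm)
    return cos_angle >= math.cos(math.radians(fov_degrees / 2))


def elements_to_highlight(camera_pos, camera_dir, elements, fov_degrees=60.0):
    """Return the names of the elements that should receive a bounding box."""
    return [
        name
        for name, pos in elements.items()
        if in_field_of_view(camera_pos, camera_dir, pos, fov_degrees)
    ]


# Hypothetical positions of two temple elements, camera at the origin looking along +z.
elements = {"column": (0.0, 0.0, 10.0), "architrave": (10.0, 0.0, 0.0)}
print(elements_to_highlight((0.0, 0.0, 0.0), (0.0, 0.0, 1.0), elements))
```

This test ignores occlusion and the extent of the element; a game engine would typically test the element’s bounding volume against the camera frustum instead.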

3.4. User Study Design

Once deployed in the Callatis Museum of Mangalia, the systems were made readily available to museum visitors. Our goal was to investigate the visitors’ impressions after interacting, by means of hand gestures, with digital cultural heritage assets, both artifacts and an architectural edifice.

Museum visitors were provided with descriptive user manuals to help them become familiar with the conceptual framework of the system. Members of the staff gave short demonstrations to showcase the basic functionality of the systems. The participants were also shown possible interactions with the system in a video that played in a loop on large screens inside the museum.

The intention was to have users perform tasks freely, without any help, and then assess the usability of the applications. We imposed neither a time limit for the interaction nor a rigid set of tasks to be performed. At this point, we were interested in assessing the perceived usefulness of the system and the willingness of casual/regular museum visitors to use gesture-based VR technology.

Users were presented with a questionnaire at the end of their visit. The survey was created by the software development team and refined after being analyzed by museum employees at the Callatis Museum in Mangalia.

The items in the questionnaire were organized in three sections. The first collected demographic information as variables on a nominal scale. The other two measured, respectively, the usability of the application and its degree of utility together with user satisfaction; their items were rated on a five-point Likert scale, ranging from Strongly disagree (1) to Strongly agree (5) with the statement in the item. During the study, the survey was provided to users on paper and was also made available to museum visitors online through the Google Forms platform (Google Forms platform, https://www.google.com/forms/about/ (accessed on 10 February 2022)), which provides a user-friendly interface and easy access to collected survey data. The survey is provided in the Supplementary Materials for this paper.

5. Discussion and Limitations

Museum artifacts and cultural objects are usually subject to restrictions with respect to their handling, partly due to preservation reasons, their uniqueness, and also to their frailty or inaccessibility. The systems presented in this paper use Virtual Reality replicas of museum artifacts and allow users to interact with the 3D reconstructed objects using natural hand gestures.

We were concerned about the potential skepticism with which users might approach the application. As the analysis above shows, the vast majority of users enjoyed using the application and found it easy to use. This result answers the first research question (RQ1) that we addressed in the study. With respect to RQ2, the responses to the questionnaire presented in the previous section lead us to conclude that deploying our systems enhances the attractiveness of the museum (Figure 19).

The median score for the suitability of the system as a tool for the development of historical knowledge is 5, and the mode is also 5, expressing high satisfaction with respect to the historical education of the audience. The median agreement score for the item “The application allowed me to create a mental image of the visualized objects” was also 5, with a mode of 5, signifying overwhelmingly strong agreement among the users. The answers are consistent: the same median and mode values of 5 support strong agreement with the item “The application is useful for promoting cultural heritage”. From these answers we conclude that users are satisfied with the experience of using the system, which answers RQ3. Overall, almost 90% of the visitors strongly agreed that they have a growing interest in using VR/AR systems in the future, thus answering RQ4.

To conclude, the research questions that we posed were answered positively by the museum visitors who agreed to fill in questionnaires during their visit to the museum.

The majority of the museum visitors who filled in questionnaires were more than 15 years old (90%); 80% of visitors were adults, and approximately 10% of users were over 50 years old. All the users in the “over 65 years” and “11–15 years” categories gave positive answers regarding their personal satisfaction with using the app (Figure 22 and Figure 23). The small sample of users in these age ranges is, however, a limitation of our study. In a future study, it would be interesting to assess the acceptance of our system in a larger sample of young users (<15 years old) and, likewise, in a larger sample of older users (>65 years old).

Regarding the physical effort required to use the system, the opinions of the users were divided. The mean score of the responses to the item “I consider that the interaction with the system does not require too much physical effort” was 3.05, with a standard deviation of 1.91 (44.5% of users strongly agreed, and 44.5% of users strongly disagreed with the statement). Hence, even if the intellectual effort of using the system was deemed low, the physical effort may raise a concern.
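The large standard deviation follows directly from this polarized distribution, as a short arithmetic check illustrates. The sketch below uses the two reported shares (44.5% at score 1 and 44.5% at score 5) and assumes, purely for illustration, that the remaining 11% of answers were neutral (score 3); the actual distribution of those remaining answers is not given in the text, and the function name is hypothetical.

```python
import math


def likert_mean_std(shares):
    """Mean and population standard deviation of a score distribution.

    shares maps each Likert score to the fraction of respondents who chose it.
    """
    mean = sum(score * p for score, p in shares.items())
    variance = sum(p * (score - mean) ** 2 for score, p in shares.items())
    return mean, math.sqrt(variance)


# Reported shares for scores 1 and 5; the 11% at score 3 is an assumption.
shares = {1: 0.445, 3: 0.11, 5: 0.445}
mean, std = likert_mean_std(shares)
print(f"mean = {mean:.2f}, std = {std:.2f}")  # prints "mean = 3.00, std = 1.89"
```

Under this assumption the result is close to the reported mean of 3.05 and standard deviation of 1.91; a mean near the midpoint of the scale combined with a deviation close to the theoretical maximum of 2 is the signature of two opposed groups rather than of a neutral consensus.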

The answers to the open items revealed that some users felt they should have devoted more time to the museum visit or that the location was too cold; one user was not pleased that the system was placed in too small a space, and another considered the exhibited artifact to have “low brightness”. On the positive side, we received numerous appreciative comments, such as “I liked showcases, souvenirs, I learned new things about museum pieces”, while another user listed as positive aspects “Presentation mode, friendly staff, objects presented clearly and in detail”.

6. Conclusions and Future Work

Our study lies along the path of using new technologies in cultural heritage preservation, conservation, and dissemination. Our contribution consists in the comprehensible presentation of a full-scale approach to a complex software system that enables museum visitors to interact naturally with museum artifacts using basic hand gestures and to immerse themselves in a virtual reality 3D world that evokes the atmosphere of an ancient Roman temple. The paper describes the virtualization of the archaeological artifacts and remains in Callatis, which was performed without any negative intervention upon them, ensuring their preservation in the museum while making them readily available for manipulation by the public, in a virtual sense. Our methodology may be easily extended to other museums, for any kind of exhibit that can be represented as a scanned 3D model.

Users interact with the artifacts naturally and with ease, and they enjoy the process.

We report our findings from a questionnaire-based study of the usability of the system, in which we were also concerned with the degree of utility of the system, as perceived by the users that interacted with it, as well as their satisfaction with the system. Our analysis revealed that users see the system as a useful tool for learning history and for popularization of archaeological vestiges.

A direction for our research in the near future is to extend our solution with two-handed interaction metaphors for a single user. One possible application will enable the visitor to experience a personal cultural immersion by modeling virtual artifacts that extend the collection presented to the public. Later on, this metaphor will be further extended to encompass several users who interact in a collaborative task in the virtual environment, e.g., building a sword.