Intelligent Task Offloading in Fog Computing Based Vehicular Networks

Abstract

1. Introduction

1. Screen out the offloaded tasks of vehicles that are about to leave the RSU coverage area. The screened-out tasks are forwarded to a cloud server for execution.

2. A utility function is designed for the remaining offloaded task requests according to task preferences and the remaining time of attachment with the RSU.

3. If the task requests exceed the execution capacity of the fog node, tasks are optimally selected according to their utility by applying the 0/1 knapsack algorithm. The selected tasks are executed at the fog node, and the rest are forwarded to the cloud for processing.

2. Related Works

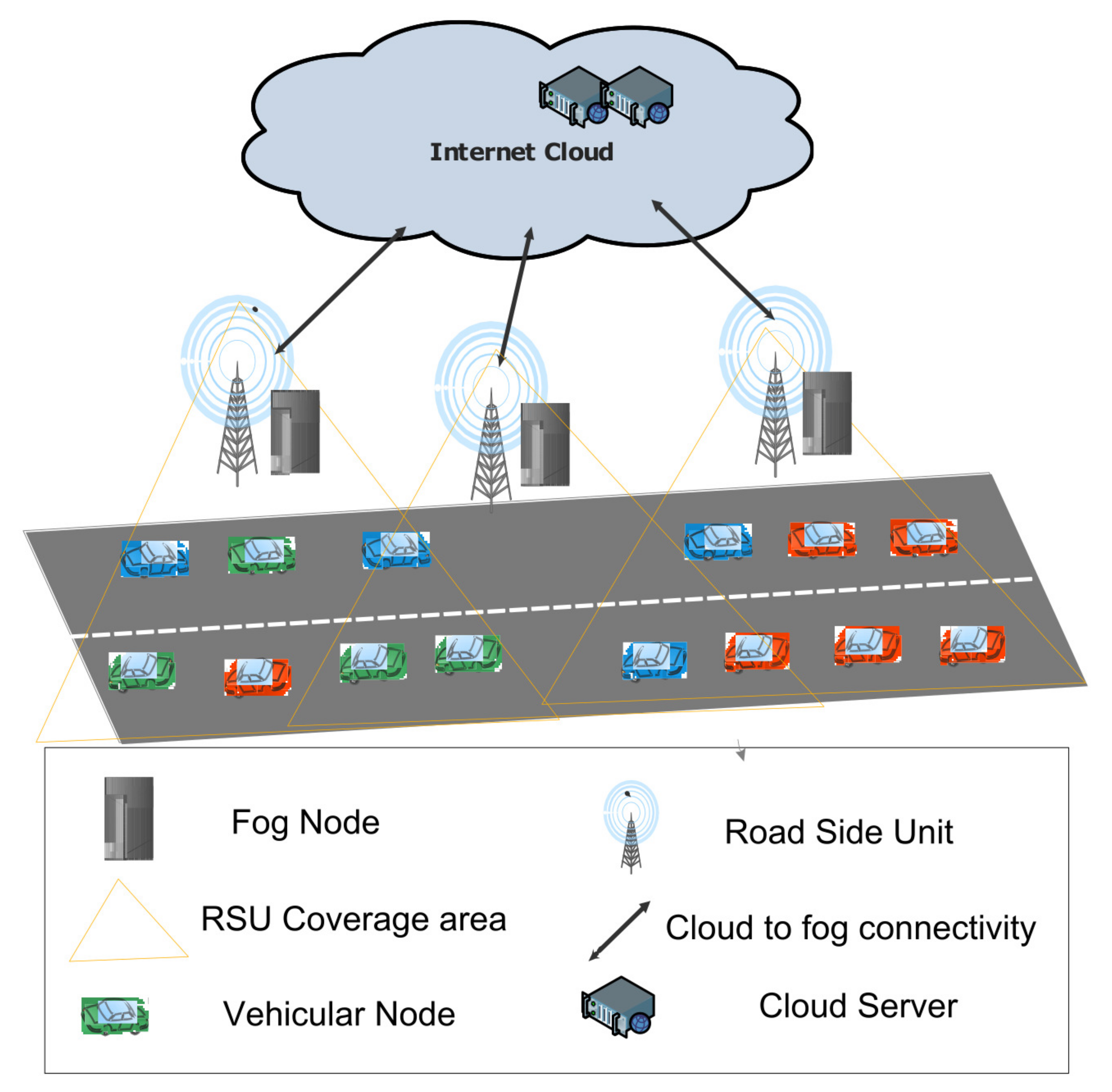

3. System Model

4. Proposed Task Execution Policy

- A task selection policy is introduced by excluding offloaded tasks of those vehicles that are about to leave the fog node coverage area.

- A utility function at the fog node determines the priority of all tasks offloaded by the vehicles within the range of the RSU.

- An optimal selection of the offloaded tasks to be executed by the fog node is determined by applying a 0/1 knapsack algorithm.

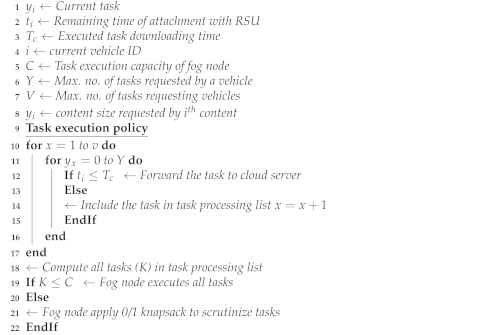

4.1. Task Selection Policy

1. If the vehicle’s remaining time of attachment with the RSU is less than the time needed to download its executed task, the task is forwarded to the cloud for execution, and the cloud is expected to deliver the executed task to the fog node at the vehicle’s next attached RSU.

2. If the total of all valid requested tasks is less than the fog node’s task execution capacity, the fog node processes all of them itself, and no task is forwarded to the cloud server.
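The two screening rules can be sketched as follows. This is a minimal illustration, not the authors’ Algorithm 1: the `Task` fields, the helper names, the per-task download time, and the constant-speed mobility model (remaining attachment time = remaining distance inside coverage divided by speed) are all assumptions introduced here.

```python
from dataclasses import dataclass


@dataclass
class Task:
    """Hypothetical task record; field names are illustrative, not from the paper."""
    task_id: str
    size_kb: float             # task size in kB
    distance_to_edge_m: float  # distance the vehicle still travels inside RSU coverage
    speed_mps: float           # vehicle speed in m/s
    download_time_s: float     # assumed time to return the executed task to the vehicle


def remaining_attachment_time(task: Task) -> float:
    # Assumption: constant speed along a straight road through the coverage area.
    return task.distance_to_edge_m / task.speed_mps


def screen_tasks(tasks, capacity_kb):
    """Apply rules 1 and 2; return (fog_candidates, to_cloud, needs_selection).

    needs_selection is True when the valid tasks exceed the fog capacity,
    i.e. when the knapsack selection step is required.
    """
    fog_candidates, to_cloud = [], []
    for t in tasks:
        # Rule 1: the vehicle leaves before the result can be downloaded -> cloud.
        if remaining_attachment_time(t) < t.download_time_s:
            to_cloud.append(t)
        else:
            fog_candidates.append(t)
    total_kb = sum(t.size_kb for t in fog_candidates)
    # Rule 2: if the valid tasks fit, the fog node processes all of them itself.
    needs_selection = total_kb > capacity_kb
    return fog_candidates, to_cloud, needs_selection
```

For example, a vehicle 5 m from the coverage edge at 40 m/s has about 0.125 s of attachment left, so with an assumed 0.5 s download time its task would be sent to the cloud under rule 1.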

Algorithm 1: Task processing criteria

4.2. Fog Node Utility Function

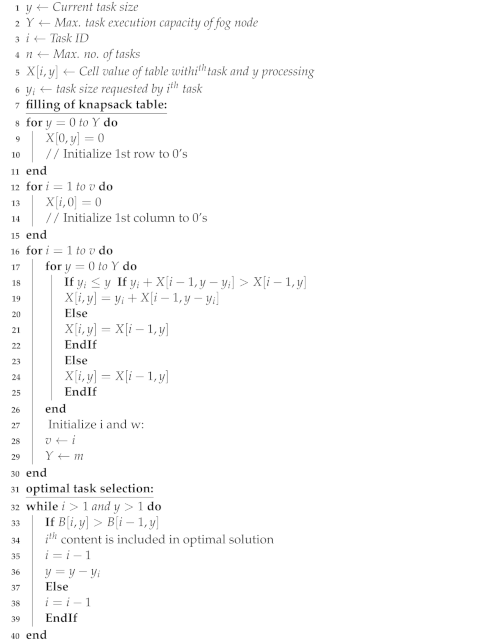

4.3. 0/1 Knapsack for Task Scheduling

- The total size of the tasks offloaded by V vehicles, each requesting t tasks, must not exceed the task capacity C of the fog node.

- The scrutinized tasks are selected so that their total value, i.e., their utility, is maximized.
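For reference, these two conditions correspond to a standard 0/1 knapsack program. The symbols $s_{v,i}$ (size of the $i$-th task of vehicle $v$), $u_{v,i}$ (its utility) and the binary decision variable $x_{v,i}$ are notation introduced here for illustration and are not necessarily the paper’s:

$$
\max_{x}\ \sum_{v=1}^{V}\sum_{i=1}^{t} u_{v,i}\,x_{v,i}
\quad \text{subject to} \quad
\sum_{v=1}^{V}\sum_{i=1}^{t} s_{v,i}\,x_{v,i} \le C,
\qquad x_{v,i}\in\{0,1\},
$$

where $x_{v,i}=1$ means the task is executed at the fog node and $x_{v,i}=0$ means it is forwarded to the cloud.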

Algorithm 2: Task selection criteria
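A dynamic-programming sketch of the selection step is shown below. It is the textbook 0/1 knapsack DP, assuming integer task sizes in kB and utilities that have already been computed; the function name and input format are hypothetical, and this is not a reproduction of the authors’ Algorithm 2.

```python
def knapsack_select(sizes, utilities, capacity):
    """Return (best_total_utility, selected_indices) for a 0/1 knapsack.

    sizes: non-negative integer task sizes (kB), used as table indices
    utilities: utility of each task (same length as sizes)
    capacity: integer fog-node capacity (kB)
    """
    n = len(sizes)
    # best[i][c] = max utility achievable with the first i tasks and capacity c
    best = [[0.0] * (capacity + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        s, u = sizes[i - 1], utilities[i - 1]
        for c in range(capacity + 1):
            best[i][c] = best[i - 1][c]  # option 1: leave task i-1 out
            if s <= c and best[i - 1][c - s] + u > best[i][c]:
                best[i][c] = best[i - 1][c - s] + u  # option 2: take task i-1
    # Backtrack: a value change between rows means task i-1 was taken.
    selected, c = [], capacity
    for i in range(n, 0, -1):
        if best[i][c] != best[i - 1][c]:
            selected.append(i - 1)
            c -= sizes[i - 1]
    selected.reverse()
    return best[n][capacity], selected
```

Tasks whose indices are not in `selected` would be forwarded to the cloud. The table costs O(n·C) time and memory, which stays small for a few hundred kB of capacity and tens of tasks.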

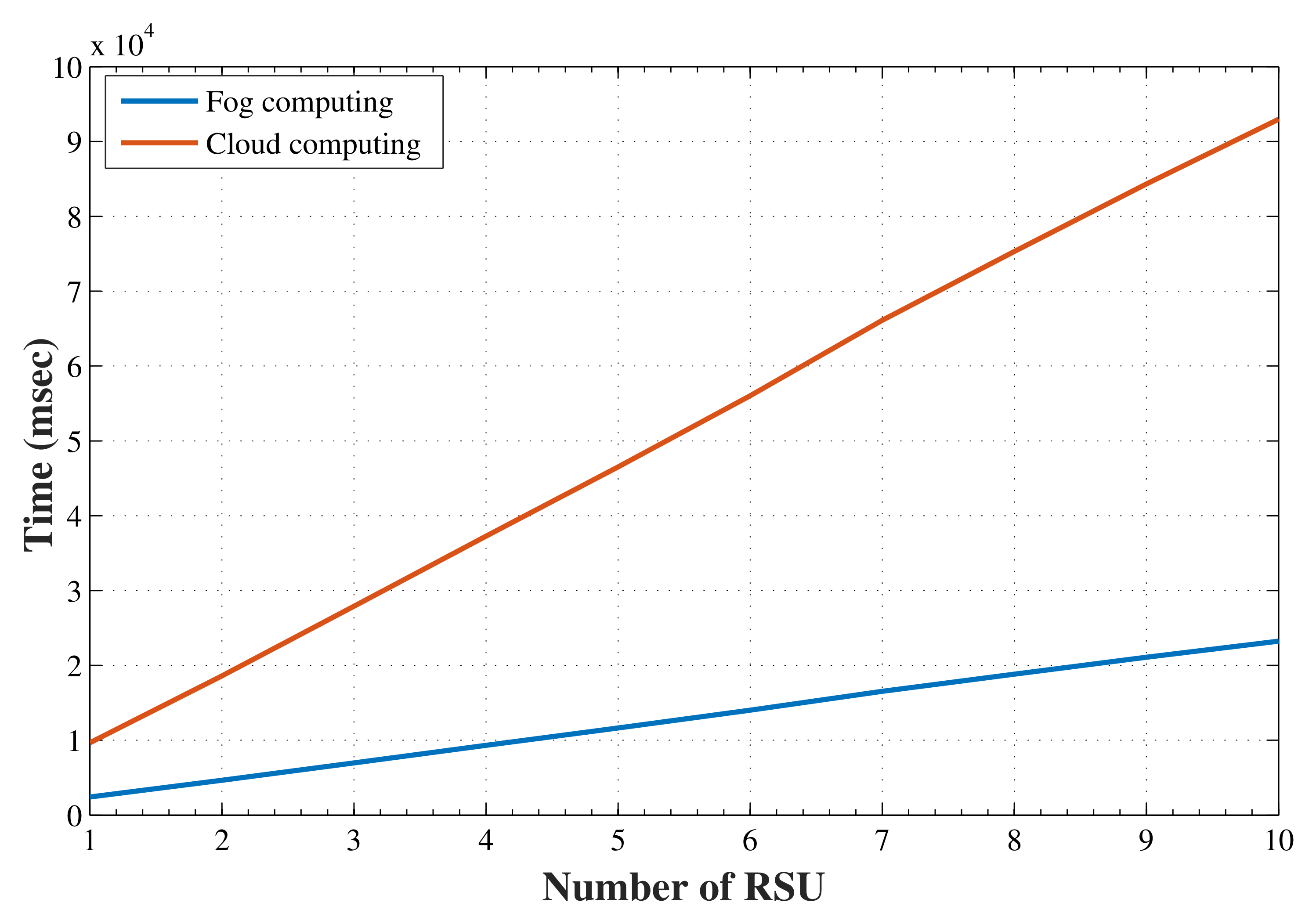

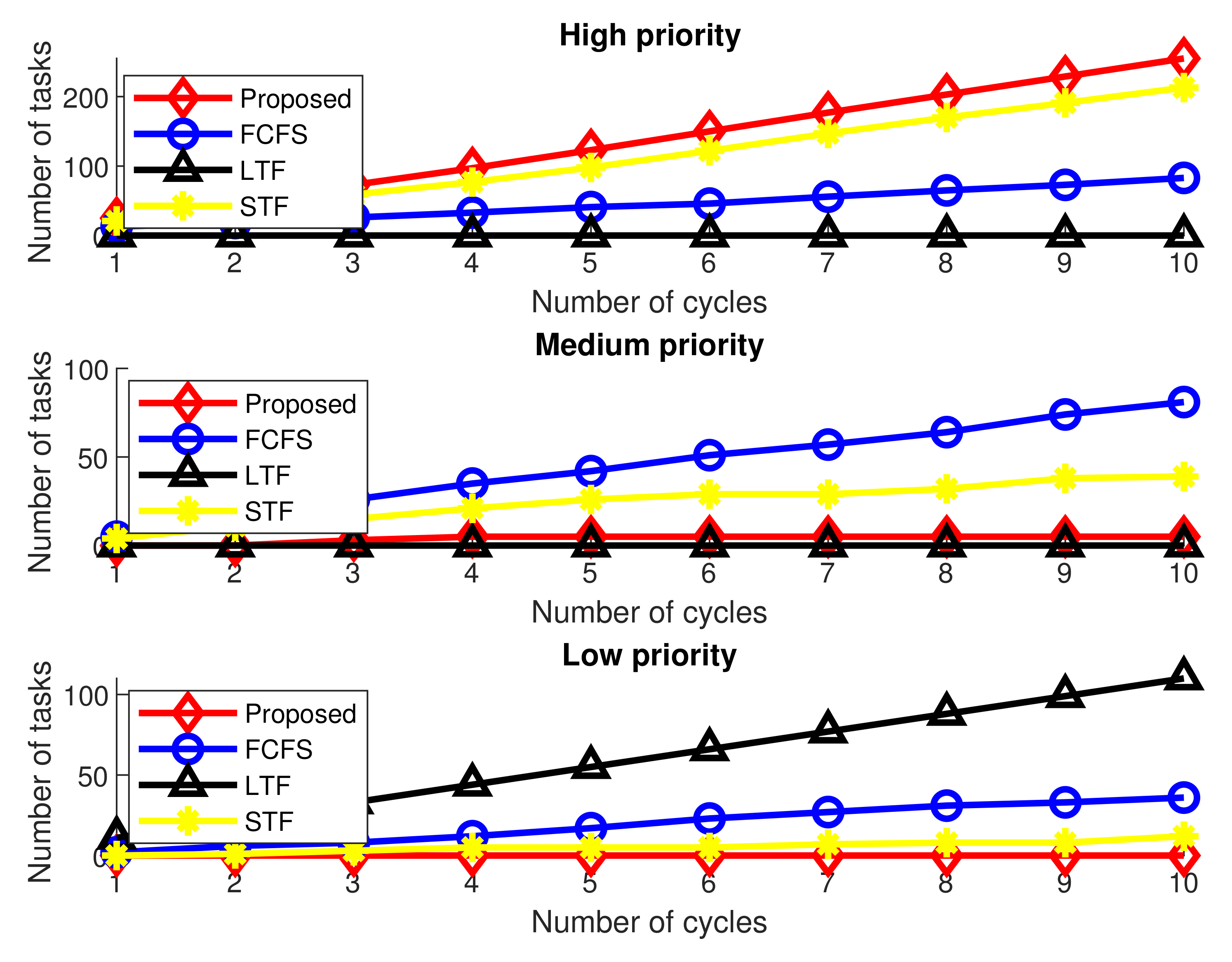

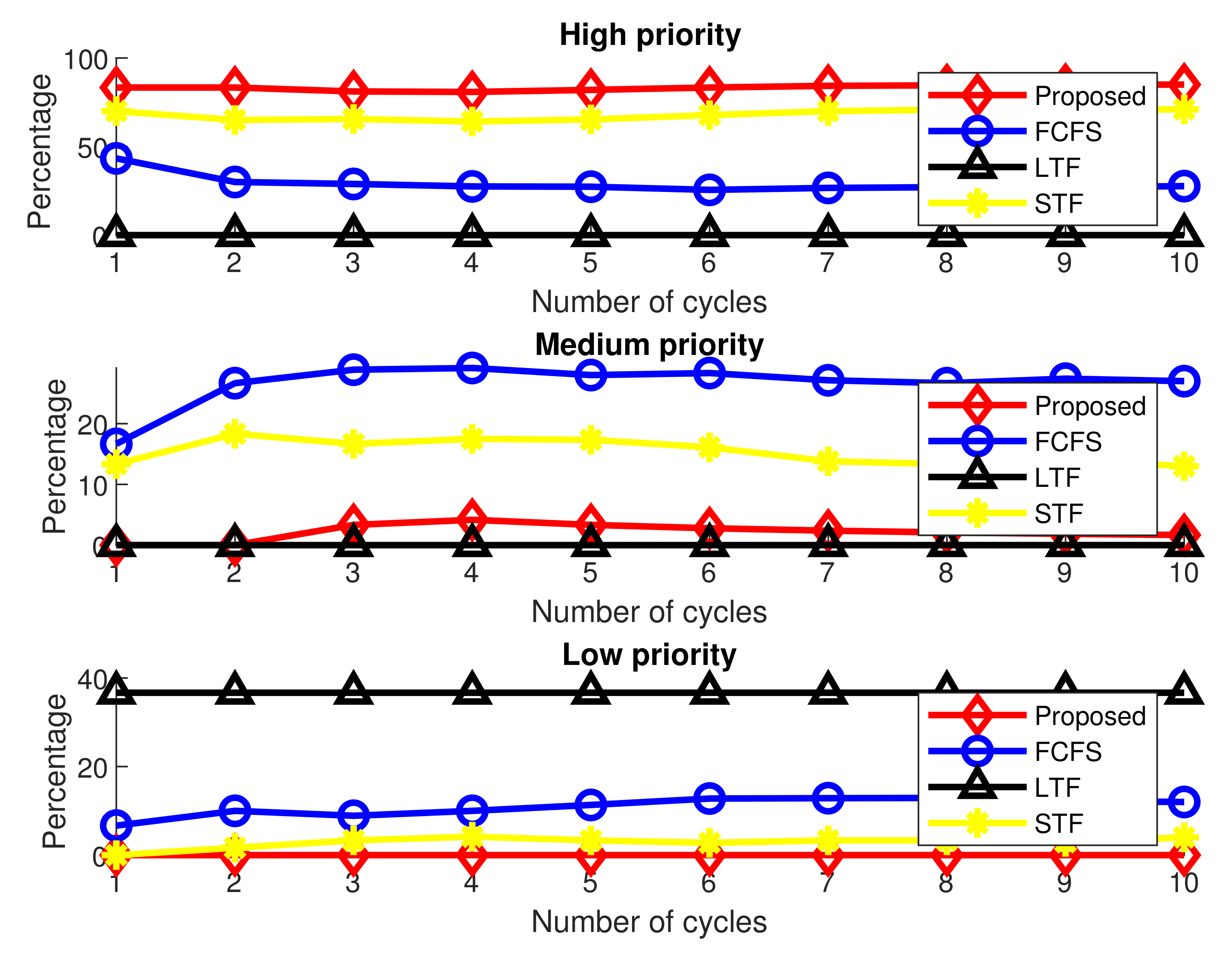

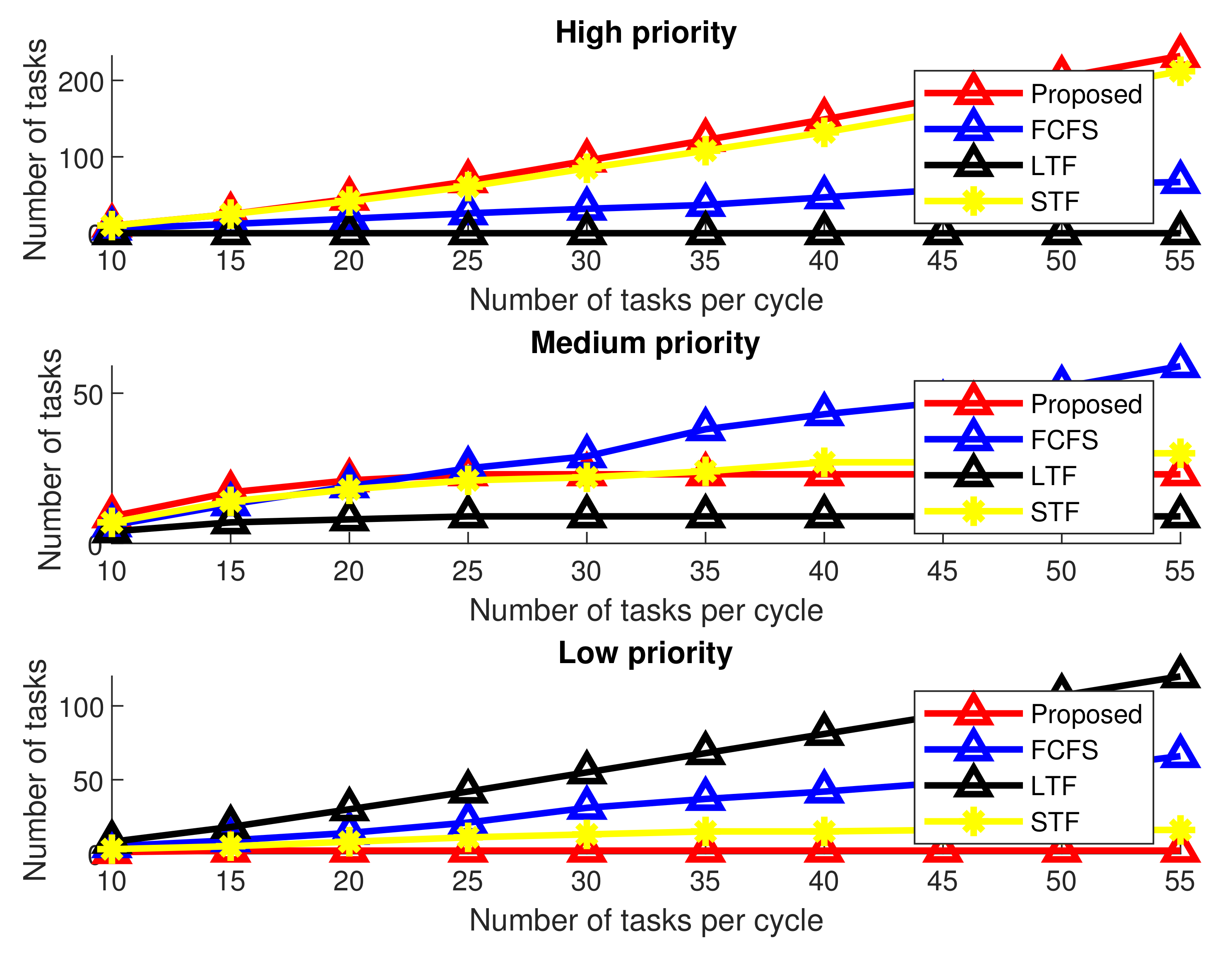

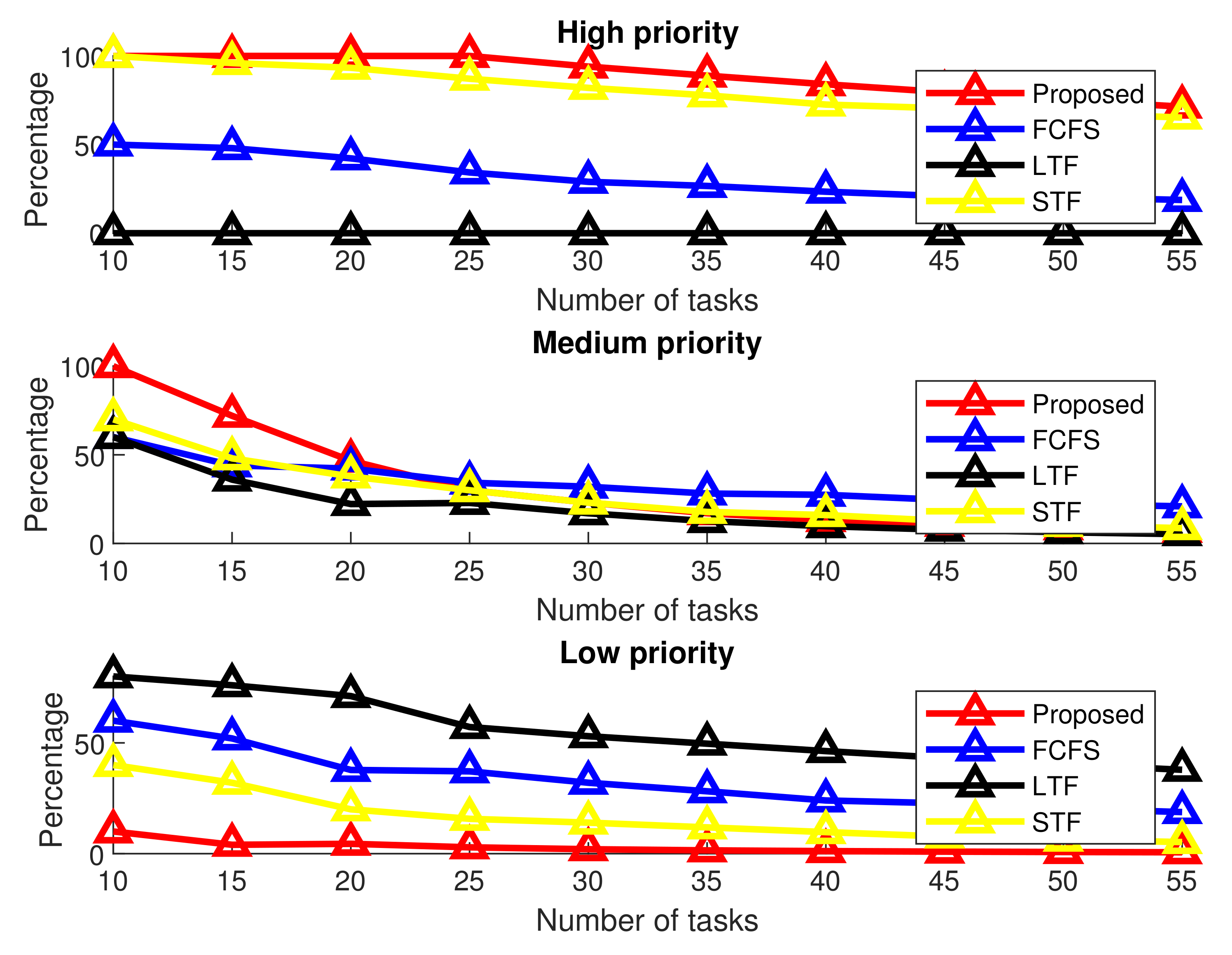

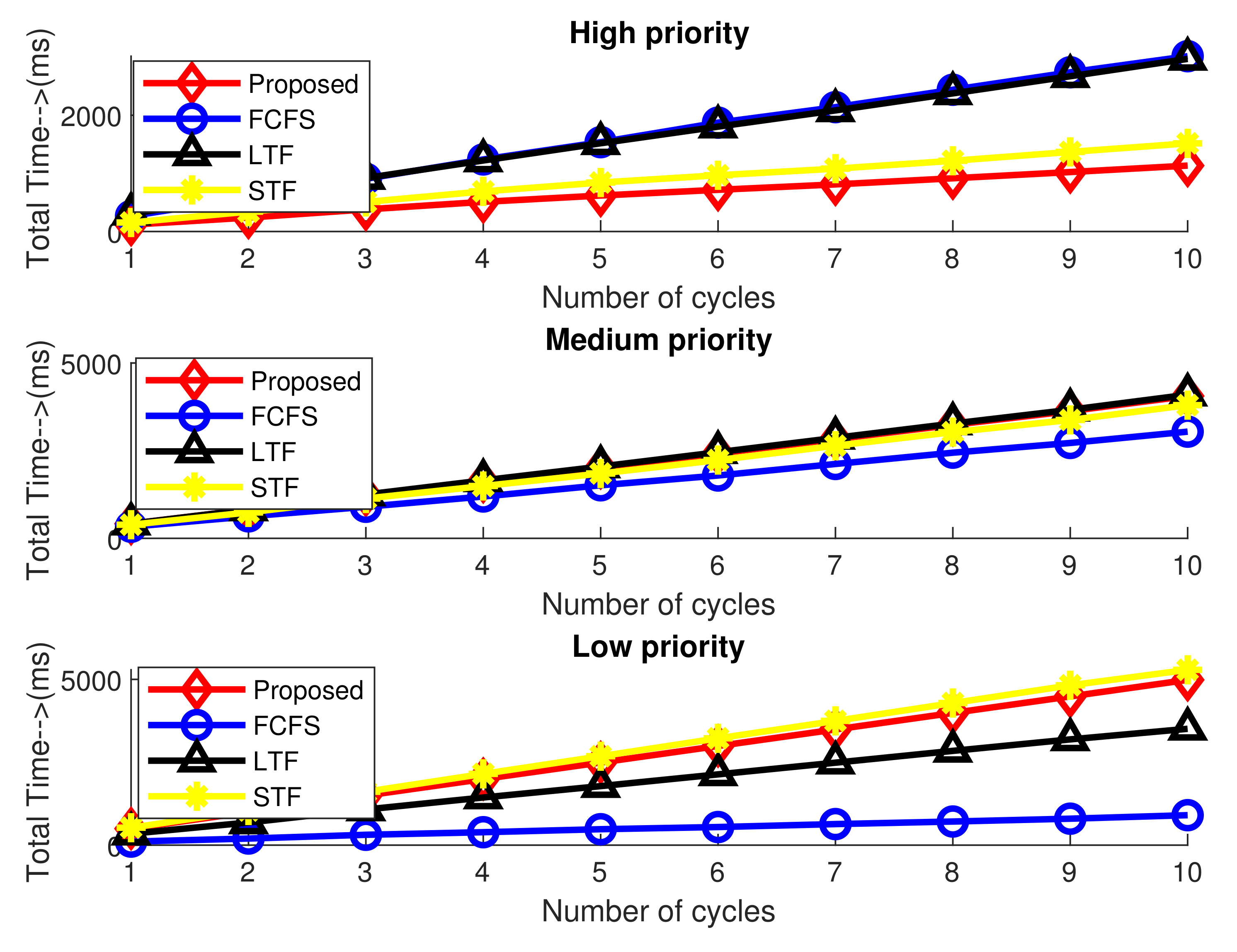

5. Performance Evaluation

1. Tasks of different vehicles are offloaded to a fog node for computation. In the first scheme, offloaded tasks are processed following the smallest task first (STF) algorithm: the fog node starts with the smallest task and keeps executing tasks until its task processing capacity is reached. The remaining tasks are forwarded to the cloud for computation and execution.

2. In the second scheme, the fog node executes tasks following the longest task first (LTF) algorithm. Contrary to STF, LTF makes the fog node start with the longest task and keep processing until its task processing capacity is reached. The remaining tasks are forwarded to the cloud for computation and execution.

3. In the third scheme, the fog node processes offloaded tasks up to its processing capacity using the first-come, first-served (FCFS) mechanism, and the rest of the tasks are forwarded to the cloud for processing and execution.
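The three baseline schemes can be sketched as one greedy admission routine that differs only in its ordering key. This is an illustrative sketch, not the authors’ simulator: the tuple format, the function name `greedy_baseline`, the use of task size in kB as task “length” for LTF, and the rule that admission stops at the first task that does not fit are all assumptions.

```python
def greedy_baseline(tasks, capacity_kb, policy):
    """Admit tasks to the fog node in the order implied by `policy`.

    tasks: list of (task_id, size_kb, arrival_time) tuples (hypothetical format)
    policy: "STF" (smallest first), "LTF" (longest first) or "FCFS" (arrival order)
    Returns (fog_ids, cloud_ids).
    Assumption: admission stops at the first task that would exceed capacity;
    that task and every later one in the ordering go to the cloud.
    """
    order_keys = {
        "STF": lambda t: t[1],    # ascending size
        "LTF": lambda t: -t[1],   # descending size
        "FCFS": lambda t: t[2],   # ascending arrival time
    }
    ordered = sorted(tasks, key=order_keys[policy])  # stable: ties keep input order
    fog, cloud, used_kb, full = [], [], 0.0, False
    for task_id, size_kb, _ in ordered:
        if not full and used_kb + size_kb <= capacity_kb:
            fog.append(task_id)
            used_kb += size_kb
        else:
            full = True  # capacity reached: everything after this goes to the cloud
            cloud.append(task_id)
    return fog, cloud
```

Under the alternative reading, where a task that does not fit is skipped and smaller later tasks are still admitted, LTF in particular could place more tasks at the fog node; the text does not state which variant was simulated.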

5.1. Simulation Parameters and Performance Metrics

5.2. Results and Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Parameter | Value |
|---|---|
| RSU coverage area | 2000 m |
| Number of task priority levels | 3 |
| Number of offloaded tasks per priority level | 30 |
| Vehicle speed (m/s) | 20∼40 |
| Data rate, vehicle to fog node | 8 Mbps |
| Data rate, vehicle to cloud | 2 Mbps |
| Emergency task size (kB) | 5∼20 |
| Traffic management task size (kB) | 12∼30 |
| Infotainment task size (kB) | 25∼60 |
| Fog node processing capacity (kB) | 500 |
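A back-of-the-envelope check with the simulation parameters gives a feel for the scale of the problem. The interpretations are assumptions, not statements from the paper: the 2000 m coverage is treated as the distance a vehicle travels inside coverage, 1 kB is taken as 8000 bits, and task sizes are assumed uniform over each range, so the mean size is the midpoint.

```python
# Values copied from the simulation-parameter table.
COVERAGE_M = 2000
RATE_FOG_MBPS = 8
RATE_CLOUD_MBPS = 2
TASKS_PER_PRIORITY = 30
SIZE_RANGES_KB = {"emergency": (5, 20), "traffic": (12, 30), "infotainment": (25, 60)}
FOG_CAPACITY_KB = 500


def dwell_time_s(speed_mps, distance_m=COVERAGE_M):
    # Assumption: the vehicle crosses the full coverage length at constant speed.
    return distance_m / speed_mps


def transfer_time_s(size_kb, rate_mbps):
    # Assumption: 1 kB = 8000 bits and 1 Mbps = 10**6 bit/s.
    return size_kb * 8000 / (rate_mbps * 10**6)


def expected_offered_load_kb():
    # Assumption: sizes are uniform over each range, so the mean is the midpoint.
    return sum(TASKS_PER_PRIORITY * (lo + hi) / 2 for lo, hi in SIZE_RANGES_KB.values())


def load_to_capacity_ratio():
    return expected_offered_load_kb() / FOG_CAPACITY_KB
```

Under these assumptions a vehicle stays in coverage for 50 s to 100 s, a 60 kB task takes 0.06 s to send to the fog node versus 0.24 s to the cloud, and the expected offered load of 2280 kB is roughly 4.6 times the 500 kB fog capacity, which is why a selection step is needed at all.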

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Alvi, A.N.; Javed, M.A.; Hasanat, M.H.A.; Khan, M.B.; Saudagar, A.K.J.; Alkhathami, M.; Farooq, U. Intelligent Task Offloading in Fog Computing Based Vehicular Networks. Appl. Sci. 2022, 12, 4521. https://doi.org/10.3390/app12094521
