Short Text Clustering Algorithms, Application and Challenges: A Survey

Abstract

1. Introduction

2. Applications of Short Text Clustering

- Information retrieval (IR): Clustering methods have been used in various applications in information retrieval, including clustering big datasets. In search engines, text clustering plays a critical role in improving document retrieval performance by grouping and indexing related documents [30].

- Internet of Things (IoT): With the rapid advancement of technology, several domains have focused on IoT. Data collection in the IoT involves using a global positioning system, radio frequency identification technology, sensors and various other IoT devices. Clustering techniques are used for distributed clustering, which is essential for wireless sensor networks [31,32].

- Biology: Clustering genes and samples in gene expression data reveals meaningful characteristics of the data. Genes can be grouped into clusters based on their expression patterns [33].

- Industry: Businesses collect large volumes of information about current and prospective customers. For further analysis, customers can be divided into small groups [34].

- Climate: Recognising global climate patterns necessitates detecting patterns in the oceans and atmosphere. Data clustering seeks to identify atmospheric pressure patterns that significantly impact the climate [35].

- Medicine: Cluster analysis is used to differentiate among disease subcategories. It can also detect temporal or spatial patterns in the distribution of a disease [36].

3. Components of Short Text Clustering

- (I) Pre-processing: This is the first step in STC. The data must be cleaned by removing unnecessary characters, words, symbols and digits before text representation methods can be applied. Pre-processing plays an essential role in building an efficient clustering system because raw short text data are unsuitable for direct use in clustering.

- (II) Representation: Documents and texts are collections of unstructured data. These unstructured data need to be transformed into a structured feature space so that mathematical modelling can be used during clustering. The standard text representation techniques can be divided into corpus-based representation methods and external-knowledge-based representation methods.

- (III) Dimensionality reduction: Texts or documents often become high-dimensional after being represented by traditional techniques. The resulting processing time and storage complexity can slow down data-clustering procedures. Dimensionality reduction is a standard method for dealing with this issue. Many researchers apply dimensionality reduction to cut the time and memory cost of their applications while avoiding a drop in performance. Reducing dimensionality may be more effective than designing an inexpensive representation.

- (IV) Similarity measure: This is the fundamental component of a clustering algorithm. It makes it possible to quantify how alike entities are, to group the most similar entities and elements, and to determine the shortest distance between related entities. Distance and similarity are inversely related, so the two terms are often used interchangeably. Similarity and distance measures are typically computed on the vector representation of the data items.

- (V) Clustering techniques: The crucial part of any text clustering system is selecting the best algorithm. The best model for a text clustering system cannot be chosen without a deep conceptual understanding of each approach. The goal of clustering algorithms is to generate internally coherent clusters that are clearly distinct from one another.

- (VI) Evaluation: This is the final step of STC. Understanding how the model works is necessary before applying or creating text clustering techniques. Several measures are available to evaluate STC.
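The inverse relationship between similarity and distance described in component (IV) can be illustrated with a minimal sketch. It assumes a plain bag-of-words count vector per text and uses cosine similarity, with distance defined as one minus similarity; the example texts and the function names are hypothetical and chosen only for illustration.

```python
import math
from collections import Counter


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine of the angle between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # an empty text is treated as similar to nothing
    return dot / (norm_a * norm_b)


def cosine_distance(a: Counter, b: Counter) -> float:
    """Distance as the complement of similarity: high similarity, low distance."""
    return 1.0 - cosine_similarity(a, b)


# Hypothetical short texts, represented as bag-of-words count vectors.
docs = ["cheap flights to paris", "cheap paris flights", "python list sort"]
vectors = [Counter(d.split()) for d in docs]

sim_close = cosine_similarity(vectors[0], vectors[1])  # texts share three terms
sim_far = cosine_similarity(vectors[0], vectors[2])    # texts share no terms
```

Texts that share most of their terms get a similarity near 1 and a distance near 0, while texts with no terms in common get similarity 0 and distance 1.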

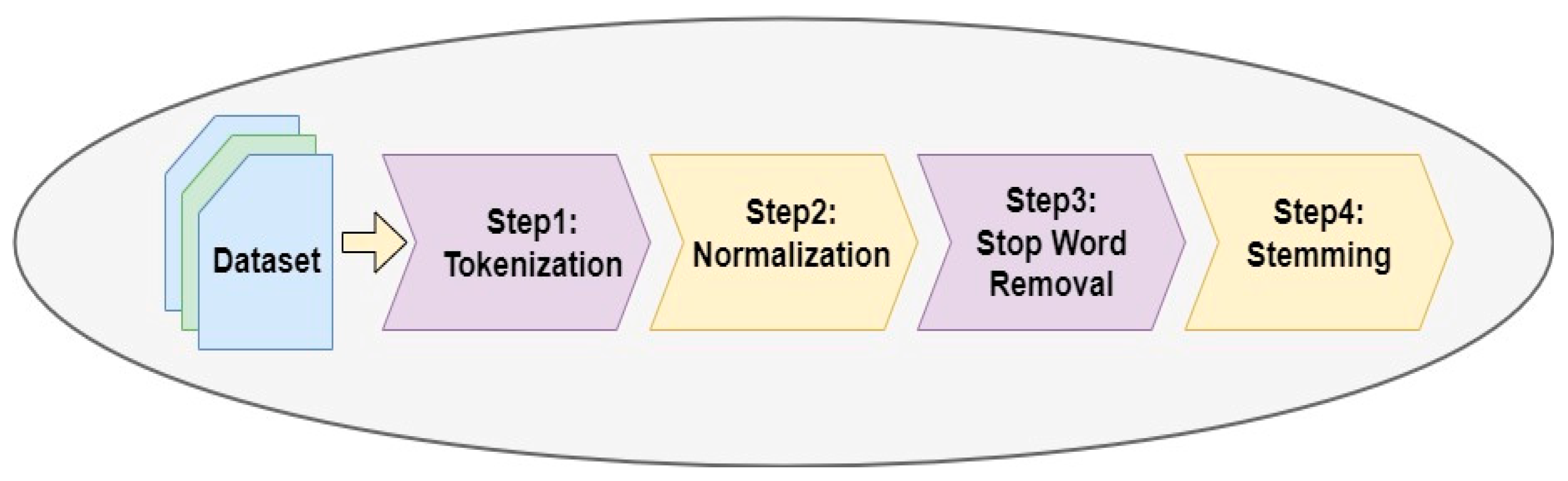

3.1. Document Pre-Processing in Short Text Clustering

3.1.1. Tokenization and Normalization

- Remove numbers (2, 1…).

- Remove punctuation marks (‘!’, ’, -, ”, :, ?, [], \, …).

- Remove special characters (~, @, #, $, %, &, =, +).

- Remove symbols.

- Remove non-English words, such as اسم.

- Remove words with fewer than three letters.
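The cleaning rules listed above can be sketched with Python's standard `re` module. This is a simplified illustration rather than a reference implementation: the function name `normalize` is hypothetical, and treating any token that contains non-ASCII letters as a non-English word is an assumption made only to keep the example short.

```python
import re


def normalize(text):
    """Apply the listed cleaning rules and return the surviving tokens."""
    # Replace digits, punctuation, special characters and symbols with spaces.
    # Unicode letters are kept so that non-English words stay whole tokens
    # and can be dropped in the filter below.
    cleaned = re.sub(r"[\W\d_]+", " ", text.lower())
    tokens = cleaned.split()
    # Assumption: ASCII-only tokens stand in for "English words".
    # Tokens with fewer than three letters are removed as well.
    return [t for t in tokens if t.isascii() and len(t) >= 3]


sample = "Win 2 FREE tickets!!! @home #now اسم is ok :)"
tokens = normalize(sample)
# tokens == ['win', 'free', 'tickets', 'home', 'now']
```

A side effect of removing digits first is that informal spellings such as '4you' lose their numeric part, so a production pipeline would probably expand such forms before this step.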

3.1.2. Stop-Word Removal

3.1.3. Stemming

3.2. Document Representation

3.2.1. Non-DL Measures

Bag of Words Model

Vector Space Model (VSM)

- I. Local term weight

- II. Global term weight
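A common way to combine a local and a global term weight is TF-IDF, where term frequency (TF) acts as the local weight and inverse document frequency (IDF) as the global weight. The sketch below assumes the unsmoothed variant, weight = tf × log(N / df); other weighting schemes exist, and the function name and example texts are hypothetical.

```python
import math
from collections import Counter


def tf_idf(docs):
    """Return one {term: weight} dictionary per document."""
    tokenized = [d.lower().split() for d in docs]
    n_docs = len(tokenized)
    # Global weight input: number of documents that contain each term.
    df = Counter(term for tokens in tokenized for term in set(tokens))
    weights = []
    for tokens in tokenized:
        tf = Counter(tokens)  # local weight: raw count inside this document
        weights.append({t: tf[t] * math.log(n_docs / df[t]) for t in tf})
    return weights


docs = ["cheap flights paris", "cheap hotels paris", "python list sort"]
w = tf_idf(docs)
# 'flights' occurs in one document only, so it outweighs 'cheap',
# which occurs in two of the three documents.
```

With this variant, a term that appears in every document receives weight zero, which is one reason smoothed IDF forms are often preferred in practice.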

Latent Dirichlet Allocation (LDA)

- (a) Select a multinomial distribution $\phi_t$ for topic $t$ ($t \in [1, \dots, T]$) from a Dirichlet distribution with parameter $\beta$.

- (b) Select a multinomial distribution $\theta_d$ for document $d$ ($d \in [1, \dots, M]$) from a Dirichlet distribution with parameter $\alpha$.

- (c) For a word $w_n$ ($n \in [1, \dots, N_d]$) in document $d$,

- Choose a topic $z_n$ from $\theta_d$.

- Choose a word $w_n$ from $\phi_{z_n}$.
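The generative process in steps (a) to (c) can be simulated with the standard library alone. The sketch below assumes the conventional LDA notation (φ for topic-word distributions, θ for document-topic distributions, symmetric Dirichlet parameters α and β) and a fixed document length; the vocabulary, parameter values and function names are hypothetical. It only samples synthetic documents and does not perform inference.

```python
import random


def sample_dirichlet(params, rng):
    """Draw from a Dirichlet distribution by normalising Gamma draws."""
    draws = [rng.gammavariate(p, 1.0) for p in params]
    total = sum(draws)
    return [x / total for x in draws]


def generate_corpus(vocab, n_topics, n_docs, doc_len, alpha=0.5, beta=0.5, seed=0):
    rng = random.Random(seed)
    # (a) one word distribution phi_t per topic, drawn from Dirichlet(beta)
    phi = [sample_dirichlet([beta] * len(vocab), rng) for _ in range(n_topics)]
    corpus = []
    for _ in range(n_docs):
        # (b) one topic distribution theta_d per document, drawn from Dirichlet(alpha)
        theta = sample_dirichlet([alpha] * n_topics, rng)
        words = []
        for _ in range(doc_len):
            # (c) choose a topic from theta_d, then a word from that topic's phi
            z = rng.choices(range(n_topics), weights=theta)[0]
            words.append(rng.choices(vocab, weights=phi[z])[0])
        corpus.append(words)
    return phi, corpus


vocab = ["flight", "hotel", "paris", "python", "list", "sort"]
phi, corpus = generate_corpus(vocab, n_topics=2, n_docs=3, doc_len=5)
```

Because each word draws its own topic, a single document can mix several topics, which is the main contrast with mixture models that assign one topic per document.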

- I. Gibbs sampling

Dirichlet Multinomial Mixture (DMM)

- (a) Sample $\pi$ from a Dirichlet distribution with parameter $\alpha$.

- (b) For each topic $k$, sample $\phi_k$ from a Dirichlet($\beta$) distribution, where $k = 1, \dots, K$.

- (c) For each document $d$, where $d = 1, \dots, D$:

- A topic $z_d$ is selected from $\pi$, where $\pi$ represents the topic distribution in the corpus.

- The word count $N_d$ is selected, and each word in $d$ is independently selected from $\phi_{z_d}$, where $\phi$ represents the word-topic distribution in the corpus.
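Because the process above assigns a single topic to each document, the probability of a document is a mixture over topics. As a sketch, assuming the conventional notation ($\pi_k$ for the corpus-level weight of topic $k$, $\phi_{k,w}$ for the probability of word $w$ under topic $k$, $K$ topics) and ignoring the term for the document length, this can be written as:

```latex
p(d \mid \pi, \phi) = \sum_{k=1}^{K} \pi_k \prod_{w \in d} \phi_{k,w}
```

The product runs over every word occurrence in $d$, so all words of a short text are explained by the same topic, in contrast to LDA, where each word may come from a different topic.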

Latent Semantic Analysis (LSA)

Word Embedding

- I. Word2Vec

- II. Doc2Vec

- III. GloVe (Global Vectors for Word Representation)

Pseudo

External Knowledge

- I. WordNet

- II. Wikipedia

3.2.2. Deep Learning Measures

Convolutional Neural Networks

Recurrent Neural Networks

Long Short-Term Memory

Bidirectional Long Short-Term Memory

Bidirectional Encoder Representations from Transformers (BERT)

3.3. Dimensionality Reduction

3.3.1. Principal Component Analysis (PCA)

3.3.2. Linear Discriminant Analysis (LDA’)

3.3.3. T-Distributed Stochastic Neighbour Embedding (t-SNE)

3.3.4. Uniform Manifold Approximation and Projection (UMAP)

3.4. Similarity and Distance Measure

3.4.1. Cosine Similarity

3.4.2. Jaccard Coefficient

3.4.3. Euclidean Distance

3.5. Clustering Algorithms

3.5.1. Hierarchical Algorithms

3.5.2. Partitioned Algorithms

3.5.3. Density-Based Clustering Methods

- Core data object: a data object that has more than MinPts neighbours in its neighbourhood.

- Neighbourhood: the $\varepsilon$-neighbourhood of a data object $x$ is denoted by $N_\varepsilon(x) = \{y \in X \mid d(x, y) < \varepsilon\}$.

- Density reachability: two data objects, $x$ and $y$, are density-reachable if one can be reached from the other via a chain of core data objects.
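The three definitions above can be turned into a small sketch. It assumes Euclidean distance, counts a point as a member of its own neighbourhood, and follows the "more than MinPts" wording of the text; these conventions vary between implementations, and the function names and sample points are hypothetical. The code illustrates the definitions only and is not a full density-based clustering algorithm.

```python
import math


def neighbourhood(points, i, eps):
    """Indices of all points closer than eps to points[i] (including i itself)."""
    return [j for j, q in enumerate(points) if math.dist(points[i], q) < eps]


def core_objects(points, eps, min_pts):
    """Indices of points with more than min_pts neighbours."""
    return {i for i in range(len(points))
            if len(neighbourhood(points, i, eps)) > min_pts}


def density_reachable(points, src, dst, eps, min_pts):
    """True if dst can be reached from src through a chain of core objects."""
    cores = core_objects(points, eps, min_pts)
    if src not in cores:
        return False
    seen, stack = {src}, [src]
    while stack:
        i = stack.pop()
        for j in neighbourhood(points, i, eps):
            if j == dst:
                return True
            # Only core objects may extend the chain.
            if j in cores and j not in seen:
                seen.add(j)
                stack.append(j)
    return False


points = [(0, 0), (0, 1), (1, 0), (1, 1), (1.5, 1.5), (8, 8)]
cores = core_objects(points, eps=1.1, min_pts=2)
```

In this example the four corner points are core objects, the point (1.5, 1.5) is reachable from them without being a core object itself, and the isolated point (8, 8) is reachable from none of them.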

3.6. Performance Evaluation Measure

3.6.1. Homogeneity (H)

3.6.2. Completeness (C)

3.6.3. V-Measure (V)

3.6.4. Adjusted Rand Index Score (ARI)

3.6.5. Normalized Mutual Information (NMI)

3.6.6. Adjusted Mutual Information (AMI)

3.6.7. Purity (P’)

3.6.8. F-Measure

4. Challenges of Short Text Clustering

- Lack of information: A short text contains only a few words, which leads to a lack of information and poor document representation. A single short text does not provide sufficient information on word co-occurrence, and most texts are likely to be about only one topic [171].

- Sparsity: The length of a short text is limited, yet short texts can cover a wide range of topics, and each user has a unique word choice and writing style [172]. Because a given topic has a wide range of content, determining its features is difficult.

- Informal writing and misspelling: Short text is used in many applications, such as comments on microblogs, which contain noise, many misspellings and a particular language style [47]. Users of social media platforms such as Twitter tend to use informal, straightforward and simple words to share their opinions and ideas. For example, many people on Twitter write ‘4you’ rather than ‘for you’ when posting tweets. In addition, users may create new abbreviations and acronyms to simplify the language: ‘Good9t’ and ‘how r u’ are widespread on social networks. Furthermore, online questions and search queries do not follow the grammar seen in official documents.

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Details |
|---|---|
| STC | Short Text Clustering |
| Q&A | Questions and Answers |
| TF-IDF | Term Frequency-Inverse Document Frequency |
| BOW | Bag of Words |
| IR | Information Retrieval |
| IoT | Internet of Things |
| NLP | Natural Language Processing |
| TF | Term Frequency |
| VSM | Vector Space Model |
| LDA | Latent Dirichlet Allocation |
| D | Document |
| DMM | Dirichlet Multinomial Mixture |
| LSA | Latent Semantic Analysis |
| GloVe | Global Vectors for Word Representation |
| CNN | Convolutional Neural Networks |
| RNN | Recurrent Neural Networks |
| LSTM | Long Short-Term Memory |
| Bi-LSTM | Bidirectional Long Short-Term Memory |
| BERT | Bidirectional Encoder Representations from Transformers |
| PCA | Principal Component Analysis |
| LDA’ | Linear Discriminant Analysis |
| t-SNE | T-Distributed Stochastic Neighbour Embedding |
| UMAP | Uniform Manifold Approximation and Projection |
| K | Number of clusters |
| H | Homogeneity |
| C | Completeness |
| V | V-Measure |
| ARI | Adjusted Rand Index Score |
| NMI | Normalized Mutual Information |
| P’ | Purity |
| R | Recall |
| P | Precision |
| N | Number of documents |
| F | F-Measure |

References

- Yang, S.; Huang, G.; Ofoghi, B.; Yearwood, J. Short text similarity measurement using context-aware weighted biterms. Concurr. Comput. Pract. Exp. 2020, 34, e5765.

- Zhang, W.; Dong, C.; Yin, J.; Wang, J. Attentive representation learning with adversarial training for short text clustering. IEEE Trans. Knowl. Data Eng. 2021, 34, 5196–5210.

- Yu, Z.; Wang, H.; Lin, X.; Wang, M. Understanding short texts through semantic enrichment and hashing. IEEE Trans. Knowl. Data Eng. 2015, 28, 566–579.

- Lopez-Gazpio, I.; Maritxalar, M.; Gonzalez-Agirre, A.; Rigau, G.; Uria, L.; Agirre, E. Interpretable semantic textual similarity: Finding and explaining differences between sentences. Knowl. Based Syst. 2017, 119, 186–199.

- Ramachandran, D.; Parvathi, R. Analysis of twitter specific preprocessing technique for tweets. Procedia Comput. Sci. 2019, 165, 245–251.

- Vo, D.-V.; Karnjana, J.; Huynh, V.-N. An integrated framework of learning and evidential reasoning for user profiling using short texts. Inf. Fusion 2021, 70, 27–42.

- Feng, W.; Zhang, C.; Zhang, W.; Han, J.; Wang, J.; Aggarwal, C.; Huang, J. STREAMCUBE: Hierarchical spatio-temporal hashtag clustering for event exploration over the Twitter stream. In Proceedings of the 2015 IEEE 31st International Conference on Data Engineering, Seoul, Korea, 13–17 April 2015; pp. 1561–1572.

- Ailem, M.; Role, F.; Nadif, M. Sparse poisson latent block model for document clustering. IEEE Trans. Knowl. Data Eng. 2017, 29, 1563–1576.

- Liang, S.; Yilmaz, E.; Kanoulas, E. Collaboratively tracking interests for user clustering in streams of short texts. IEEE Trans. Knowl. Data Eng. 2018, 31, 257–272.

- Carpineto, C.; Romano, G. Consensus clustering based on a new probabilistic rand index with application to subtopic retrieval. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 34, 2315–2326.

- Wang, T.; Brede, M.; Ianni, A.; Mentzakis, E. Detecting and characterizing eating-disorder communities on social media. In Proceedings of the Tenth ACM International Conference on Web Search and Data Mining, Cambridge, UK, 6–10 February 2017; pp. 91–100.

- Song, G.; Ye, Y.; Du, X.; Huang, X.; Bie, S. Short text classification: A survey. J. Multimed. 2014, 9, 635.

- Rodriguez, A.; Laio, A. Clustering by fast search and find of density peaks. Science 2014, 344, 1492–1496.

- Zhang, C.; Lei, D.; Yuan, Q.; Zhuang, H.; Kaplan, L.; Wang, S.; Han, J. GeoBurst+ Effective and Real-Time Local Event Detection in Geo-Tagged Tweet Streams. ACM Trans. Intell. Syst. Technol. (TIST) 2018, 9, 1–24.

- Yang, S.; Huang, G.; Xiang, Y.; Zhou, X.; Chi, C.-H. Modeling user preferences on spatiotemporal topics for point-of-interest recommendation. In Proceedings of the 2017 IEEE International Conference on Services Computing (SCC), Honolulu, HI, USA, 25–30 June 2017; pp. 204–211.

- Alsaffar, D.; Alfahhad, A.; Alqhtani, B.; Alamri, L.; Alansari, S.; Alqahtani, N.; Alboaneen, D.A. Machine and deep learning algorithms for Twitter spam detection. In Proceedings of the International Conference on Advanced Intelligent Systems and Informatics, Cairo, Egypt, 26–28 October 2019; Springer: Berlin/Heidelberg, Germany, 2019; pp. 483–491.

- Shanmugam, S.; Padmanaban, I. A multi-criteria decision-making approach for selection of brand ambassadors using machine learning algorithm. In Proceedings of the 2021 11th International Conference on Cloud Computing, Data Science & Engineering (Confluence), Uttar Pradesh, India, 28–29 January 2021; pp. 848–853.

- Hadifar, A.; Sterckx, L.; Demeester, T.; Develder, C. A self-training approach for short text clustering. In Proceedings of the 4th Workshop on Representation Learning for NLP (RepL4NLP-2019), Florence, Italy, 2 August 2019; pp. 194–199.

- Jin, J.; Zhao, H.; Ji, P. Topic attention encoder: A self-supervised approach for short text clustering. J. Inf. Sci. 2022, 48, 701–717.

- Jinarat, S.; Manaskasemsak, B.; Rungsawang, A. Short text clustering based on word semantic graph with word embedding model. In Proceedings of the 2018 Joint 10th International Conference on Soft Computing and Intelligent Systems (SCIS) and 19th International Symposium on Advanced Intelligent Systems (ISIS), Toyama, Japan, 5–8 December 2018; pp. 1427–1432.

- Liu, W.; Wang, C.; Chen, X. Inductive Document Representation Learning for Short Text Clustering; Springer: Berlin/Heidelberg, Germany, 2021.

- Qiang, J.; Qian, Z.; Li, Y.; Yuan, Y.; Wu, X. Short text topic modeling techniques, applications, and performance: A survey. IEEE Trans. Knowl. Data Eng. 2020, 34, 1427–1445.

- Wei, C.; Zhu, L.; Shi, J. Short Text Embedding Autoencoders with Attention-Based Neighborhood Preservation. IEEE Access 2020, 8, 223156–223171.

- Jain, A.K. Data clustering: 50 years beyond K-means. Pattern Recognit. Lett. 2010, 31, 651–666.

- Xu, J.; Xu, B.; Wang, P.; Zheng, S.; Tian, G.; Zhao, J. Self-taught convolutional neural networks for short text clustering. Neural Netw. 2017, 88, 22–31.

- Mistry, V.; Pandya, U.; Rathwa, A.; Kachroo, H.; Jivani, A. AEDBSCAN—Adaptive Epsilon Density-Based Spatial Clustering of Applications with Noise. In Progress in Advanced Computing and Intelligent Engineering; Springer: Berlin/Heidelberg, Germany, 2021; pp. 213–226.

- Salton, G.; Buckley, C. Term-weighting approaches in automatic text retrieval. Inf. Process. Manag. 1988, 24, 513–523.

- Xu, J.; Wang, P.; Tian, G.; Xu, B.; Zhao, J.; Wang, F.; Hao, H. Short text clustering via convolutional neural networks. In Proceedings of the 1st Workshop on Vector Space Modeling for Natural Language Processing, Denver, CO, USA, 5 June 2015; pp. 62–69.

- Liu, K.; Bellet, A.; Sha, F. Similarity learning for high-dimensional sparse data. In Artificial Intelligence and Statistics; PMLR: San Diego, CA, USA, 2015; pp. 653–662.

- Wahid, A.; Gao, X.; Andreae, P. Multi-objective multi-view clustering ensemble based on evolutionary approach. In Proceedings of the 2015 IEEE Congress on Evolutionary Computation (CEC), Sendai, Japan, 25–28 May 2015; pp. 1696–1703.

- Bindhu, V.; Ranganathan, G. Hyperspectral image processing in internet of things model using clustering algorithm. J. ISMAC 2021, 3, 163–175.

- AL-Jumaili, A.H.A.; Mashhadany, Y.I.A.; Sulaiman, R.; Alyasseri, Z.A.A. A Conceptual and Systematics for Intelligent Power Management System-Based Cloud Computing: Prospects, and Challenges. Appl. Sci. 2021, 11, 9820.

- Oyelade, J.; Isewon, I.; Oladipupo, F.; Aromolaran, O.; Uwoghiren, E.; Ameh, F.; Achas, M.; Adebiyi, E. Clustering algorithms: Their application to gene expression data. Bioinform. Biol. Insights 2016, 10, BBI-S38316.

- Güçdemir, H.; Selim, H. Integrating multi-criteria decision making and clustering for business customer segmentation. Ind. Manag. Data Syst. 2015, 115, 1022–1040.

- Biabiany, E.; Bernard, D.C.; Page, V.; Paugam-Moisy, H. Design of an expert distance metric for climate clustering: The case of rainfall in the Lesser Antilles. Comput. Geosci. 2020, 145, 104612.

- Bu, F.; Hu, C.; Zhang, Q.; Bai, C.; Yang, L.T.; Baker, T. A cloud-edge-aided incremental high-order possibilistic c-means algorithm for medical data clustering. IEEE Trans. Fuzzy Syst. 2020, 29, 148–155.

- Ding, Y.; Fu, X. Topical Concept Based Text Clustering Method. In Advanced Materials Research; Trans Tech Publications Ltd.: Lausanne, Switzerland, 2012; Volume 532, pp. 939–943.

- Li, R.; Wang, H. Clustering of Short Texts Based on Dynamic Adjustment for Contrastive Learning. IEEE Access 2022, 10, 76069–76078.

- Froud, H.; Benslimane, R.; Lachkar, A.; Ouatik, S.A. Stemming and similarity measures for Arabic Documents Clustering. In Proceedings of the 2010 5th International Symposium on I/V Communications and Mobile Network, IEEE Xplore, Rabat, Morocco, 3 December 2010; pp. 1–4.

- Agrawal, U.; Soria, D.; Wagner, C.; Garibaldi, J.; Ellis, I.O.; Bartlett, J.M.; Cameron, D.; Rakha, E.A.; Green, A.R. Combining clustering and classification ensembles: A novel pipeline to identify breast cancer profiles. Artif. Intell. Med. 2019, 97, 27–37.

- Allahyari, M.; Pouriyeh, S.; Assefi, M.; Safaei, S.; Trippe, E.D.; Gutierrez, J.B.; Kochut, K. A brief survey of text mining: Classification, clustering and extraction techniques. arXiv 2017, arXiv:1707.02919.

- Howland, P.; Park, H. Cluster-preserving dimension reduction methods for document classification. In Survey of Text Mining II; Springer: Berlin/Heidelberg, Germany, 2008; pp. 3–23.

- Al-Omari, O.M. Evaluating the effect of stemming in clustering of Arabic documents. Acad. Res. Int. 2011, 1, 284.

- Jia, C.; Carson, M.B.; Wang, X.; Yu, J. Concept decompositions for short text clustering by identifying word communities. Pattern Recognit. 2018, 76, 691–703.

- Mohotti, W.A.; Nayak, R. Corpus-based augmented media posts with density-based clustering for community detection. In Proceedings of the 2018 IEEE 30th International Conference on Tools with Artificial Intelligence (ICTAI), Volos, Greece, 5–7 November 2018; pp. 379–386.

- Lau, J.H.; Baldwin, T. An empirical evaluation of doc2vec with practical insights into document embedding generation. arXiv 2016, arXiv:1607.05368.

- Yang, S.; Huang, G.; Cai, B. Discovering topic representative terms for short text clustering. IEEE Access 2019, 7, 92037–92047.

- Jin, O.; Liu, N.N.; Zhao, K.; Yu, Y.; Yang, Q. Transferring topical knowledge from auxiliary long texts for short text clustering. In Proceedings of the 20th ACM International Conference on Information and Knowledge Management, Glasgow, Scotland, UK, 24–28 October 2011; pp. 775–784.

- Mehrotra, R.; Sanner, S.; Buntine, W.; Xie, L. Improving lda topic models for microblogs via tweet pooling and automatic labeling. In Proceedings of the 36th International ACM SIGIR Conference on Research and Development in Information Retrieval, Dublin, Ireland, 28 July–1 August 2013; pp. 889–892.

- Aggarwal, C.C.; Zhai, C. A survey of text clustering algorithms. In Mining Text Data; Springer: Berlin/Heidelberg, Germany, 2012; pp. 77–128.

- Palanivinayagam, A.; Nagarajan, S. An optimized iterative clustering framework for recognizing speech. Int. J. Speech Technol. 2020, 23, 767–777.

- Kanimozhi, K.; Venkatesan, M. A novel map-reduce based augmented clustering algorithm for big text datasets. In Data Engineering and Intelligent Computing; Springer: Berlin/Heidelberg, Germany, 2018; pp. 427–436.

- Obaid, H.S.; Dheyab, S.A.; Sabry, S.S. The impact of data pre-processing techniques and dimensionality reduction on the accuracy of machine learning. In Proceedings of the 2019 9th Annual Information Technology, Electromechanical Engineering and Microelectronics Conference (IEMECON), Jaipur, India, 13–15 March 2019; pp. 279–283.

- Croft, W.B.; Metzler, D.; Strohman, T. Search Engines: Information Retrieval in Practice; Addison-Wesley Reading: London UK, 2010; Volume 520.

- Cambazoglu, B.B. Review of “Search Engines: Information Retrieval in Practice” by Croft, Metzler and Strohman. Inf. Process. Manag. 2010, 46, 377–379.

- Kaur, J.; Buttar, P.K. A systematic review on stopword removal algorithms. Int. J. Future Revolut. Comput. Sci. Commun. Eng. 2018, 4, 207–210.

- Al-Shalabi, R.; Kanaan, G.; Jaam, J.M.; Hasnah, A.; Hilat, E. Stop-word removal algorithm for Arabic language. In Proceedings of the 2004 International Conference on Information and Communication Technologies: From Theory to Applications, Damascus, Syria, 19–23 April 2004; p. 545.

- Singh, J.; Gupta, V. A systematic review of text stemming techniques. Artif. Intell. Rev. 2017, 48, 157–217.

- Asha, P.; Albert Mayan, J.; Canessane, A. Efficient Mining of Positive and Negative Itemsets Using K-Means Clustering to Access the Risk of Cancer Patients. Int. Conf. Soft Comput. Syst. 2018, 73, 373–382.

- Spirovski, K.; Stevanoska, E.; Kulakov, A.; Popeska, Z.; Velinov, G. Comparison of different model’s performances in task of document classification. In Proceedings of the 8th International Conference on Web Intelligence, Mining and Semantics, Novi Sad, Serbia, 25–27 June 2018; pp. 1–12.

- Singh, J.; Gupta, V. Text stemming: Approaches, applications, and challenges. ACM Comput. Surv. (CSUR) 2016, 49, 1–46.

- Ahmed, M.H.; Tiun, S. K-means based algorithm for islamic document clustering. In Proceedings of the International Conference on Islamic Applications in Computer Science and Technologies (IMAN 2013), Selangor, Malaysia, 1–2 July 2013; pp. 2–9.

- Abdulameer, A.S.; Tiun, S.; Sani, N.S.; Ayob, M.; Taha, A.Y. Enhanced clustering models with wiki-based k-nearest neighbors-based representation for web search result clustering. J. King Saud Univ. Comput. Inf. Sci. 2020, 34, 840–850.

- Khreisat, L. Arabic Text Classification Using N-Gram Frequency Statistics A Comparative Study. DMIN 2006, 2006, 78–82.

- Zakaria, T.N.T.; Ab Aziz, M.J.; Mokhtar, M.R.; Darus, S. Semantic similarity measurement for Malay words using WordNet Bahasa and Wikipedia Bahasa Melayu: Issues and proposed solutions. Int. J. Softw. Eng. Comput. Syst. 2020, 6, 25–40.

- Yin, J.; Wang, J. A dirichlet multinomial mixture model-based approach for short text clustering. In Proceedings of the 20th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, New York, NY, USA, 24–27 August 2014; pp. 233–242.

- Sabah, A.; Tiun, S.; Sani, N.S.; Ayob, M.; Taha, A.Y. Enhancing web search result clustering model based on multiview multirepresentation consensus cluster ensemble (mmcc) approach. PLoS ONE 2021, 16, e0245264.

- Fodeh, S.; Punch, B.; Tan, P.-N. On ontology-driven document clustering using core semantic features. Knowl. Inf. Syst. 2011, 28, 395–421.

- Osman, M.A.; Noah, S.A.M.; Saad, S. Ontology-Based Knowledge Management Tools for Knowledge Sharing in Organization—A Review. IEEE Access 2022, 10, 43267–43283.

- Banerjee, S.; Ramanathan, K.; Gupta, A. Clustering short texts using wikipedia. In Proceedings of the 30th Annual International ACM SIGIR Conference on Research and Development in Information Retrieval, Amsterdam, The Netherlands, 23–27 July 2007; pp. 787–788.

- Zakaria, T.N.T.; Ab Aziz, M.J.; Mokhtar, M.R.; Darus, S. Text Clustering for Reducing Semantic Information in Malay Semantic Representation. Asia-Pac. J. Inf. Technol. Multimed. 2020, 9, 11–24.

- Mueller, J.; Thyagarajan, A. Siamese recurrent architectures for learning sentence similarity. In Proceedings of the AAAI Conference on Artificial Intelligence, Phoenix, AZ, USA, 12–17 February 2016; Volume 30.

- Blei, D.M.; Ng, A.Y.; Jordan, M.I. Latent dirichlet allocation. J. Mach. Learn. Res. 2003, 3, 993–1022.

- Zainodin, U.Z.; Omar, N.; Saif, A. Semantic measure based on features in lexical knowledge sources. Asia-Pac. J. Inf. Technol. Multimed. 2017, 6, 39–55.

- Berger, H.; Dittenbach, M.; Merkl, D. Analyzing the effect of document representation on machine learning approaches in multi-class e-mail filtering. In Proceedings of the 2006 IEEE/WIC/ACM International Conference on Web Intelligence (WI 2006 Main Conference Proceedings) (WI’06), Hong Kong, China, 18–22 December 2006; pp. 297–300.

- Joachims, T. Text categorization with support vector machines: Learning with many relevant features. In European Conference on Machine Learning; Springer: Berlin/Heidelberg, Germany, 1998; pp. 137–142.

- Le, Q.; Mikolov, T. Distributed representations of sentences and documents. In Proceedings of the International Conference on Machine Learning, Beijing, China, 22–24 June 2014; Volume 32, pp. 1188–1196.

- Wu, H.; Gu, X.; Gu, Y. Balancing between over-weighting and under-weighting in supervised term weighting. Inf. Process. Manag. 2017, 53, 547–557.

- Lan, M.; Tan, C.L.; Su, J.; Lu, Y. Supervised and traditional term weighting methods for automatic text categorization. IEEE Trans. Pattern Anal. Mach. Intell. 2008, 31, 721–735.

- Jelodar, H.; Wang, Y.; Yuan, C.; Feng, X.; Jiang, X.; Li, Y.; Zhao, L. Latent Dirichlet allocation (LDA) and topic modeling: Models, applications, a survey. Multimed. Tools Appl. 2019, 78, 15169–15211.

- Griffiths, T.L.; Steyvers, M. Finding scientific topics. Proc. Natl. Acad. Sci. USA 2004, 101, 5228–5235.

- Lu, H.-M.; Wei, C.-P.; Hsiao, F.-Y. Modeling healthcare data using multiple-channel latent Dirichlet allocation. J. Biomed. Inform. 2016, 60, 210–223.

- Miao, J.; Huang, J.X.; Zhao, J. TopPRF: A probabilistic framework for integrating topic space into pseudo relevance feedback. ACM Trans. Inf. Syst. (TOIS) 2016, 34, 1–36.

- Panichella, A.; Dit, B.; Oliveto, R.; Di Penta, M.; Poshyvanyk, D.; De Lucia, A. How to effectively use topic models for software engineering tasks? An approach based on genetic algorithms. In Proceedings of the 2013 35th International Conference on Software Engineering (ICSE), San Francisco, CA, USA, 18–26 May 2013; pp. 522–531.

- Gudakahriz, S.J.; Moghadam, A.M.E.; Mahmoudi, F. An experimental study on performance of text representation models for sentiment analysis. Inf. Syst. Telecommun. 2020, 29, 45–52.

- Mikolov, T.; Sutskever, I.; Chen, K.; Corrado, G.S.; Dean, J. Distributed representations of words and phrases and their compositionality. Adv. Neural Inf. Process. Syst. 2013, 26, 3111–3119.

- Tiun, S.; Saad, S.; Nor, N.F.M.; Jalaludin, A.; Rahman, A.N.C.A. Quantifying semantic shift visually on a Malay domain-specific corpus using temporal word embedding approach. Asia-Pac. J. Inf. Technol. Multimed. 2020, 9, 1–10.

- Pennington, J.; Socher, R.; Manning, C.D. Glove: Global vectors for word representation. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP), Doha, Qatar, 25–29 October 2014; pp. 1532–1543.

- Mohotti, W.A.; Nayak, R. Deep hierarchical non-negative matrix factorization for clustering short text. In International Conference on Neural Information Processing; Springer: Berlin/Heidelberg, Germany, 2020; pp. 270–282.

- Lu, H.-Y.; Yang, J.; Zhang, Y.; Li, Z. Polysemy Needs Attention: Short-Text Topic Discovery with Global and Multi-Sense Information. IEEE Access 2021, 9, 14918–14932.

- Deerwester, S.; Dumais, S.T.; Furnas, G.W.; Landauer, T.K.; Harshman, R. Indexing by latent semantic analysis. J. Am. Soc. Inf. Sci. 1990, 41, 391–407.

- Lee, Y.-Y.; Ke, H.; Huang, H.-H.; Chen, H.-H. Less is more: Filtering abnormal dimensions in glove. In Proceedings of the 25th ACM International Conference Companion on World Wide Web, Montréal, Québec, Canada, 11–15 April 2016; pp. 71–72.

- Hong, L.; Davison, B.D. Empirical study of topic modeling in twitter. In Proceedings of the First Workshop on Social Media Analytics, Washington, DC, USA, 25 July 2010; pp. 80–88.

- Gao, W.; Peng, M.; Wang, H.; Zhang, Y.; Xie, Q.; Tian, G. Incorporating word embeddings into topic modeling of short text. Knowl. Inf. Syst. 2019, 61, 1123–1145.

- Phan, X.-H.; Nguyen, L.-M.; Horiguchi, S. Learning to classify short and sparse text & web with hidden topics from large-scale data collections. In Proceedings of the 17th International Conference on World Wide Web, Beijing, China, 21–25 April 2008; pp. 91–100.

- Hu, X.; Sun, N.; Zhang, C.; Chua, T.-S. Exploiting internal and external semantics for the clustering of short texts using world knowledge. In Proceedings of the 18th ACM Conference on Information and Knowledge Management, Hong Kong, China, 2–6 November 2009; pp. 919–928.

- Kingma, D.P.; Welling, M. Auto-encoding variational bayes. arXiv 2013, arXiv:1312.6114.

- Aljalbout, E.; Golkov, V.; Siddiqui, Y.; Strobel, M.; Cremers, D. Clustering with deep learning: Taxonomy and new methods. arXiv 2018, arXiv:1801.07648.

- Dara, S.; Tumma, P. Feature extraction by using deep learning: A survey. In Proceedings of the 2018 Second International Conference on Electronics, Communication and Aerospace Technology (ICECA), Coimbatore, India, 29–31 March 2018; pp. 1795–1801. [Google Scholar]

- Bengio, Y.; Courville, A.; Vincent, P. Representation learning: A review and new perspectives. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 1798–1828. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Kowsari, K.; Jafari Meimandi, K.; Heidarysafa, M.; Mendu, S.; Barnes, L.; Brown, D. Text classification algorithms: A survey. Information 2019, 10, 150. [Google Scholar] [CrossRef] [Green Version]

- Deepak, G.; Rooban, S.; Santhanavijayan, A. A knowledge centric hybridized approach for crime classification incorporating deep bi-LSTM neural network. Multimed. Tools Appl. 2021, 80, 28061–28085. [Google Scholar] [CrossRef]

- Chandrasekaran, D.; Mago, V. Evolution of semantic similarity—A survey. ACM Comput. Surv. (CSUR) 2021, 54, 1–37.

- Zeng, D.; Liu, K.; Lai, S.; Zhou, G.; Zhao, J. Relation classification via convolutional deep neural network. In Proceedings of the COLING 2014, the 25th International Conference on Computational Linguistics: Technical Papers, Dublin, Ireland, 23–29 August 2014; pp. 2335–2344.

- Kalchbrenner, N.; Grefenstette, E.; Blunsom, P. A convolutional neural network for modelling sentences. arXiv 2014, arXiv:1404.2188.

- Collobert, R.; Weston, J.; Bottou, L.; Karlen, M.; Kavukcuoglu, K.; Kuksa, P. Natural language processing (almost) from scratch. J. Mach. Learn. Res. 2011, 12, 2493–2537.

- Abdullah, A.; Ting, W.E. Orientation and Scale Based Weights Initialization Scheme for Deep Convolutional Neural Networks. Asia-Pac. J. Inf. Technol. Multimed. 2020, 9, 103–112.

- Socher, R.; Perelygin, A.; Wu, J.; Chuang, J.; Manning, C.D.; Ng, A.Y.; Potts, C. Recursive deep models for semantic compositionality over a sentiment treebank. In Proceedings of the 2013 Conference on Empirical Methods in Natural Language Processing, Washington, DC, USA, 18–21 October 2013; pp. 1631–1642.

- Mikolov, T.; Kombrink, S.; Burget, L.; Černocký, J.; Khudanpur, S. Extensions of recurrent neural network language model. In Proceedings of the 2011 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Prague, Czech Republic, 22–27 May 2011; pp. 5528–5531.

- Lai, S.; Xu, L.; Liu, K.; Zhao, J. Recurrent convolutional neural networks for text classification. In Proceedings of the Twenty-Ninth AAAI Conference on Artificial Intelligence, Austin, TX, USA, 25–30 January 2015.

- Yu, Y.; Si, X.; Hu, C.; Zhang, J. A review of recurrent neural networks: LSTM cells and network architectures. Neural Comput. 2019, 31, 1235–1270.

- Chin, C.K.; Omar, N. Bitcoin price prediction based on sentiment of news article and market data with LSTM model. Asia-Pac. J. Inf. Technol. Multimed. 2020, 9, 1–16.

- Tien, N.H.; Le, N.M.; Tomohiro, Y.; Tatsuya, I. Sentence modeling via multiple word embeddings and multi-level comparison for semantic textual similarity. Inf. Process. Manag. 2019, 56, 102090.

- Tai, K.S.; Socher, R.; Manning, C.D. Improved semantic representations from tree-structured long short-term memory networks. arXiv 2015, arXiv:1503.00075.

- He, H.; Lin, J. Pairwise word interaction modeling with deep neural networks for semantic similarity measurement. In Proceedings of the 2016 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, San Diego, CA, USA, 12–17 June 2016; pp. 937–948.

- Devlin, J.; Chang, M.-W.; Lee, K.; Toutanova, K. BERT: Pre-training of deep bidirectional transformers for language understanding. arXiv 2018, arXiv:1810.04805.

- Radford, A.; Narasimhan, K.; Salimans, T.; Sutskever, I. Improving Language Understanding with Unsupervised Learning; Technical Report; OpenAI: San Francisco, CA, USA, 2018.

- Pugachev, L.; Burtsev, M. Short text clustering with transformers. arXiv 2021, arXiv:2102.00541.

- Howard, J.; Ruder, S. Universal language model fine-tuning for text classification. arXiv 2018, arXiv:1801.06146.

- Dolan, B.; Brockett, C. Automatically constructing a corpus of sentential paraphrases. In Proceedings of the Third International Workshop on Paraphrasing (IWP2005), Jeju Island, Korea, 14 October 2005.

- Williams, A.; Nangia, N.; Bowman, S.R. A broad-coverage challenge corpus for sentence understanding through inference. arXiv 2017, arXiv:1704.05426.

- Rajpurkar, P.; Zhang, J.; Lopyrev, K.; Liang, P. SQuAD: 100,000+ questions for machine comprehension of text. arXiv 2016, arXiv:1606.05250.

- Hu, Q.; Shen, J.; Wang, K.; Du, J.; Du, Y. A Web service clustering method based on topic enhanced Gibbs sampling algorithm for the Dirichlet Multinomial Mixture model and service collaboration graph. Inf. Sci. 2022, 586, 239–260.

- Yin, H.; Song, X.; Yang, S.; Huang, G.; Li, J. Representation Learning for Short Text Clustering; Springer International Publishing: Melbourne, VIC, Australia, 2021; pp. 321–335.

- Subakti, A.; Murfi, H.; Hariadi, N. The performance of BERT as data representation of text clustering. J. Big Data 2022, 9, 1–21.

- Allaoui, M.; Kherfi, M.L.; Cheriet, A. Considerably improving clustering algorithms using UMAP dimensionality reduction technique: A comparative study. In International Conference on Image and Signal Processing; Springer: Berlin/Heidelberg, Germany, 2020; pp. 317–325.

- Swesi, I.M.A.O.; Bakar, A.A. Feature clustering for PSO-based feature construction on high-dimensional data. J. Inf. Commun. Technol. 2019, 18, 439–472.

- Jolliffe, I.T.; Cadima, J. Principal component analysis: A review and recent developments. Philos. Trans. R. Soc. A Math. Phys. Eng. Sci. 2016, 374, 20150202.

- Kurita, T. Principal component analysis (PCA). In Computer Vision: A Reference Guide; Springer: Tokyo, Japan, 2019; pp. 1–4.

- Hyvärinen, A.; Oja, E. Independent component analysis: Algorithms and applications. Neural Netw. 2000, 13, 411–430.

- Comon, P. Independent component analysis, a new concept? Signal Process. 1994, 36, 287–314.

- Sugiyama, M. Dimensionality reduction of multimodal labeled data by local Fisher discriminant analysis. J. Mach. Learn. Res. 2007, 8, 1027–1061.

- Xanthopoulos, P.; Pardalos, P.M.; Trafalis, T.B. Linear discriminant analysis. In Robust Data Mining; Springer: Berlin/Heidelberg, Germany, 2013; pp. 27–33.

- Fukunaga, K. Introduction to statistical pattern classification. Pattern Recognit. 1990, 30, 1145–1149.

- Van der Maaten, L.; Hinton, G. Visualizing data using t-SNE. J. Mach. Learn. Res. 2008, 9, 2579–2605.

- Cieslak, M.C.; Castelfranco, A.M.; Roncalli, V.; Lenz, P.H.; Hartline, D.K. t-Distributed Stochastic Neighbor Embedding (t-SNE): A tool for eco-physiological transcriptomic analysis. Mar. Genom. 2020, 51, 100723.

- McInnes, L.; Healy, J.; Melville, J. UMAP: Uniform manifold approximation and projection for dimension reduction. arXiv 2018, arXiv:1802.03426.

- Little, C.; Mclean, D.; Crockett, K.; Edmonds, B. A semantic and syntactic similarity measure for political tweets. IEEE Access 2020, 8, 154095–154113.

- Alian, M.; Awajan, A. Factors affecting sentence similarity and paraphrasing identification. Int. J. Speech Technol. 2020, 23, 851–859.

- Alkoffash, M.S. Automatic Arabic Text Clustering using K-means and K-mediods. Int. J. Comput. Appl. 2012, 51, 5–8.

- Lin, Y.-S.; Jiang, J.-Y.; Lee, S.-J. A similarity measure for text classification and clustering. IEEE Trans. Knowl. Data Eng. 2013, 26, 1575–1590.

- Huang, A. Similarity measures for text document clustering. In Proceedings of the Sixth New Zealand Computer Science Research Student Conference (NZCSRSC2008), Christchurch, New Zealand, 14–18 April 2008; Volume 4, pp. 9–56.

- Froud, H.; Lachkar, A.; Ouatik, S.A. Arabic text summarization based on latent semantic analysis to enhance Arabic documents clustering. arXiv 2013, arXiv:1302.1612.

- Amer, A.A.; Abdalla, H.I. A set theory based similarity measure for text clustering and classification. J. Big Data 2020, 7, 1–43.

- Guangming, G.; Yanhui, J.; Wei, W.; Shuangwen, Z. A Clustering Algorithm Based on the Text Feature Matrix of Domain-Ontology. In Proceedings of the 2013 Third International Conference on Intelligent System Design and Engineering Applications, Hong Kong, China, 16–18 January 2013; pp. 13–16.

- Abualigah, L.M.Q. Feature Selection and Enhanced Krill Herd Algorithm for Text Document Clustering; Springer: Berlin/Heidelberg, Germany, 2019.

- Liu, F.; Xiong, L. Survey on text clustering algorithm-Research present situation of text clustering algorithm. In Proceedings of the 2011 IEEE 2nd International Conference on Software Engineering and Service Science, Beijing, China, 15–17 July 2011; pp. 196–199.

- Reddy, C.K.; Vinzamuri, B. A survey of partitional and hierarchical clustering algorithms. In Data Clustering; Chapman and Hall/CRC: New York, NY, USA, 2018; pp. 87–110.

- Bhattacharjee, P.; Mitra, P. A survey of density based clustering algorithms. Front. Comput. Sci. 2021, 15, 1–27.

- Roux, M. A comparative study of divisive and agglomerative hierarchical clustering algorithms. J. Classif. 2018, 35, 345–366.

- Friedman, J.H. The Elements of Statistical Learning: Data Mining, Inference, and Prediction; Springer Open: New York, NY, USA, 2017.

- Popat, S.K.; Emmanuel, M. Review and comparative study of clustering techniques. Int. J. Comput. Sci. Inf. Technol. 2014, 5, 805–812.

- Elavarasi, S.A.; Akilandeswari, J.; Sathiyabhama, B. A survey on partition clustering algorithms. Int. J. Enterp. Comput. Bus. Syst. 2011, 1, 1–13.

- Agarwal, S.; Yadav, S.; Singh, K. Notice of Violation of IEEE Publication Principles: K-means versus K-means++ Clustering Technique. In Proceedings of the 2012 Students Conference on Engineering and Systems, Allahabad, India, 16–18 March 2012.

- Xu, H.; Yao, S.; Li, Q.; Ye, Z. An improved k-means clustering algorithm. In Proceedings of the 2020 IEEE 5th International Symposium on Smart and Wireless Systems within the Conferences on Intelligent Data Acquisition and Advanced Computing Systems (IDAACS-SWS), Piscataway, NJ, USA, 17–18 September 2020; pp. 1–5.

- Vora, P.; Oza, B. A survey on k-mean clustering and particle swarm optimization. Int. J. Sci. Mod. Eng. 2013, 1, 24–26.

- Bock, H.-H. Clustering methods: A history of k-means algorithms. In Selected Contributions in Data Analysis and Classification; Springer: Berlin/Heidelberg, Germany, 2007; pp. 161–172.

- Chan, J.Y.; Leung, A.P. Efficient k-means++ with random projection. In Proceedings of the 2017 International Joint Conference on Neural Networks (IJCNN), Anchorage, AK, USA, 14–19 May 2017; pp. 94–100.

- Campello, R.J.; Kröger, P.; Sander, J.; Zimek, A. Density-based clustering. Wiley Interdiscip. Rev. Data Min. Knowl. Discov. 2020, 10, e1343.

- Karaa, W.B.A.; Ashour, A.S.; Sassi, D.B.; Roy, P.; Kausar, N.; Dey, N. Medline text mining: An enhancement genetic algorithm based approach for document clustering. In Applications of Intelligent Optimization in Biology and Medicine; Springer: Berlin/Heidelberg, Germany, 2016; pp. 267–287.

- Durairaj, M.; Vijitha, C. Educational data mining for prediction of student performance using clustering algorithms. Int. J. Comput. Sci. Inf. Technol. 2014, 5, 5987–5991.

- Powers, D.M. Evaluation: From precision, recall and F-measure to ROC, informedness, markedness and correlation. arXiv 2020, arXiv:2010.16061.

- Qiang, J.; Li, Y.; Yuan, Y.; Wu, X. Short text clustering based on Pitman-Yor process mixture model. Appl. Intell. 2018, 48, 1802–1812.

- Punitha, S.; Jayasree, R.; Punithavalli, M. Partition document clustering using ontology approach. In Proceedings of the 2013 International Conference on Computer Communication and Informatics, Coimbatore, Tamil Nadu, India, 4–6 January 2013; pp. 1–5.

- Rosenberg, A.; Hirschberg, J. V-measure: A conditional entropy-based external cluster evaluation measure. In Proceedings of the 2007 Joint Conference on Empirical Methods in Natural Language Processing and Computational Natural Language Learning (EMNLP-CoNLL), Prague, Czech Republic, 5 June 2007; pp. 410–420.

- Radu, R.-G.; Rădulescu, I.-M.; Truică, C.-O.; Apostol, E.-S.; Mocanu, M. Clustering documents using the document to vector model for dimensionality reduction. In Proceedings of the 2020 IEEE International Conference on Automation, Quality and Testing, Robotics (AQTR), Cluj-Napoca, Romania, 21–23 May 2020; pp. 1–6.

- Zhu, Z.; Gao, Y. Finding cross-border collaborative centres in biopharma patent networks: A clustering comparison approach based on adjusted mutual information. In International Conference on Complex Networks and Their Applications; Springer: Berlin/Heidelberg, Germany, 2021; pp. 62–72.

- Li, L.; Goh, T.-T.; Jin, D. How textual quality of online reviews affect classification performance: A case of deep learning sentiment analysis. Neural Comput. Appl. 2020, 32, 4387–4415.

- Feizollah, A.; Ainin, S.; Anuar, N.B.; Abdullah, N.A.B.; Hazim, M. Halal products on Twitter: Data extraction and sentiment analysis using stack of deep learning algorithms. IEEE Access 2019, 7, 83354–83362.

- Karami, A.; Lundy, M.; Webb, F.; Dwivedi, Y.K. Twitter and research: A systematic literature review through text mining. IEEE Access 2020, 8, 67698–67717.

- Yi, F.; Jiang, B.; Wu, J. Topic modeling for short texts via word embedding and document correlation. IEEE Access 2020, 8, 30692–30705.

- Hirchoua, B.; Ouhbi, B.; Frikh, B. Topic Modeling for Short Texts: A Novel Modeling Method. In AI and IoT for Sustainable Development in Emerging Countries; Springer: Berlin/Heidelberg, Germany, 2022; pp. 573–595.

- Mohotti, W.A.; Nayak, R. Discovering cluster evolution patterns with the Cluster Association-aware matrix factorization. Knowl. Inf. Syst. 2021, 63, 1397–1428.

| Term Weights | Formulation | Description |
|---|---|---|
| Local weights | | Raw term frequency |
| | | Appearance of the term |
| | | indicates the highest term frequency |
| | | Logarithm of term frequency |
| | | represents the average number of terms found in all texts |
| Global weights | | Inverse document frequency |
| | | Probabilistic idf |
| | | BM25 idf |
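
The weighting schemes named in the table above can be illustrated with a short sketch. The formulas in the code are common textbook forms (for example, `log2(1 + tf)` for the logarithmic term frequency and the clamped `max(0, log2((N - n) / n))` for probabilistic idf); they are assumptions made for illustration and may differ from the exact formulations the table originally listed. The function names `local_weights` and `global_weights` are hypothetical.

```python
import math
from collections import Counter


def local_weights(tokens):
    """Per-term local weights for one tokenised short text (assumed textbook forms)."""
    tf = Counter(tokens)
    max_tf = max(tf.values())  # highest term frequency in this text
    return {
        term: {
            "raw_tf": count,                              # raw term frequency
            "binary": 1,                                  # appearance of the term
            "augmented_tf": 0.5 + 0.5 * count / max_tf,   # normalised by the highest tf
            "log_tf": math.log2(1 + count),               # logarithm of term frequency
        }
        for term, count in tf.items()
    }


def global_weights(docs):
    """Per-term global weights over a collection of tokenised texts."""
    n_docs = len(docs)
    # Document frequency: number of texts that contain the term at least once.
    df = Counter(term for doc in docs for term in set(doc))
    weights = {}
    for term, n in df.items():
        if n < n_docs:
            prob_idf = max(0.0, math.log2((n_docs - n) / n))
        else:
            prob_idf = 0.0  # term occurs in every text; the log would be undefined
        weights[term] = {
            "idf": math.log2(n_docs / n),
            "prob_idf": prob_idf,
            "bm25_idf": math.log2((n_docs - n + 0.5) / (n + 0.5)),
        }
    return weights


docs = [
    ["short", "text", "clustering"],
    ["short", "text"],
    ["topic", "model", "text", "text"],
]
local = local_weights(docs[2])
global_ = global_weights(docs)
# A simple combined weight: log tf multiplied by idf for the term "topic".
tfidf_topic = local["topic"]["log_tf"] * global_["topic"]["idf"]
```

A term that occurs in every text, such as "text" here, receives an idf of zero, which is why global weights help separate short texts that share common vocabulary.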

| Methods | Advantage | Disadvantage |
|---|---|---|
| BOW | It is simple and widely used. | It ignores syntactic and semantic relationships between words and leads to sparsity. |
| VSM | It is simple and effective. | It has trouble handling synonymy and polysemy. |
| LDA | Its simplicity and effectiveness have led to wide use. | It disregards the sequence of the words in a sentence and the multiple meanings of words. |
| DMM | It can obtain the representative words of each cluster. | It assumes that there is only one topic covered in each document. |
| LSA | It can handle synonymy and polysemy and uses semantic relationships among concepts to find relevant documents. | It disregards the sequence of the words in a sentence. |
| Word2Vec | It can process semantic information quickly. | It ignores the order of words in a sentence. |
| Doc2Vec | It analyses word order and trains on texts of different lengths. | It ignores polysemy and synonyms of words. |
| GloVe | It preserves the regular linear patterns between words and is faster in training. | It cannot retain the memory relationship between words. |

| Method | Technique | Year | Dataset | ACC | NMI | Ref. |
|---|---|---|---|---|---|---|
| RecNN | K-means | 2017 | StackOverflow | 0.4079 | 0.4058 | [25] |
| RecNN | K-means | 2017 | Biomedical | 0.3705 | 0.3385 | [25] |
| Bi-LSTM | K-means | 2017 | StackOverflow | 0.4493 | 0.4093 | [25] |
| Bi-LSTM | K-means | 2017 | Biomedical | 0.356 | 0.3403 | [25] |
| STC2 | K-means | 2017 | StackOverflow | 0.5114 | 0.4908 | [25] |
| STC2 | K-means | 2017 | Biomedical | 0.43 | 0.3818 | [25] |
| SG-DHNMF | / | 2020 | Tweets | 0.86 | | [89] |
| SG-DHNMF | / | 2020 | StackOverflow | 0.65 | | [89] |
| CNN | / | 2020 | Tweets | 0.79 | | [89] |
| CNN | / | 2020 | StackOverflow | 0.5 | | [89] |
| TE-GSDMM | K-means++ | 2022 | Web Service | 0.514 | | [123] |
| BERT | K-means | 2021 | Tweets | 0.8126 | 0.867 | [124] |
| BERT | K-means | 2021 | StackOverflow | 0.6253 | 0.5962 | [124] |
| STN-GAE | K-means | 2021 | Tweets | 0.4049 | 0.3546 | [124] |
| STN-GAE | K-means | 2021 | StackOverflow | 0.4049 | 0.4492 | [124] |
| SCA-AE | K-means | 2021 | Tweets | 0.8485 | 0.8919 | [124] |
| SCA-AE | K-means | 2021 | StackOverflow | 0.7655 | 0.6599 | [124] |
| TAE | K-means | 2022 | StackOverflow | 62.8 | | [19] |
| BERT+ Mean | K-means | 2022 | AG News | 0.6467 | 0.4151 | [125] |
| BERT+ Mean | DEC | 2022 | AG News | 0.8038 | 0.538 | [125] |
| BERT+ Mean | IDEC | 2022 | AG News | 0.8019 | 0.5383 | [125] |
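
The ACC and NMI columns in the table above are external clustering measures that compare predicted clusters with gold labels. The following is a minimal sketch of how such scores are commonly computed, not the evaluation code of any cited work. It assumes ACC is the fraction of texts correctly assigned under the best one-to-one mapping between clusters and classes (found here by brute force, which is only practical for a handful of clusters), and that NMI is normalised by the arithmetic mean of the two entropies; some papers normalise by the geometric mean instead. The function names are hypothetical.

```python
import math
from collections import Counter
from itertools import permutations


def clustering_accuracy(true_labels, cluster_ids):
    """ACC under the best one-to-one mapping of clusters to classes (brute force)."""
    classes = sorted(set(true_labels))
    clusters = sorted(set(cluster_ids))
    overlap = Counter(zip(cluster_ids, true_labels))
    # Pad with dummy classes so every cluster can be mapped to something.
    padding = [object() for _ in range(max(0, len(clusters) - len(classes)))]
    best = 0
    for mapping in permutations(classes + padding, len(clusters)):
        matched = sum(overlap[(c, k)] for c, k in zip(clusters, mapping))
        best = max(best, matched)
    return best / len(true_labels)


def entropy(labels):
    """Shannon entropy (natural log) of a label assignment."""
    n = len(labels)
    return -sum((c / n) * math.log(c / n) for c in Counter(labels).values())


def normalized_mutual_information(true_labels, cluster_ids):
    """NMI = I(Y; C) / ((H(Y) + H(C)) / 2); the normalisation is an assumption."""
    n = len(true_labels)
    joint = Counter(zip(true_labels, cluster_ids))
    class_sizes = Counter(true_labels)
    cluster_sizes = Counter(cluster_ids)
    mutual_info = sum(
        (n_ij / n) * math.log(n * n_ij / (class_sizes[y] * cluster_sizes[c]))
        for (y, c), n_ij in joint.items()
    )
    denom = (entropy(true_labels) + entropy(cluster_ids)) / 2
    # Both assignments place everything in one group: treat as perfect agreement.
    return mutual_info / denom if denom > 0 else 1.0


gold = [0, 0, 1, 1, 2, 2]
pred = [0, 0, 0, 1, 2, 2]  # one text from class 1 placed in cluster 0
acc = clustering_accuracy(gold, pred)
nmi = normalized_mutual_information(gold, pred)
```

Both measures are invariant to how cluster IDs are numbered, which is why a mapping step (ACC) or an information-theoretic comparison (NMI) is needed instead of a direct label match. For larger numbers of clusters, the brute-force search would normally be replaced by the Hungarian algorithm.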

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ahmed, M.H.; Tiun, S.; Omar, N.; Sani, N.S. Short Text Clustering Algorithms, Application and Challenges: A Survey. Appl. Sci. 2023, 13, 342. https://doi.org/10.3390/app13010342
