SAR Image Aircraft Target Recognition Based on Improved YOLOv5

Abstract

1. Introduction

- (1)

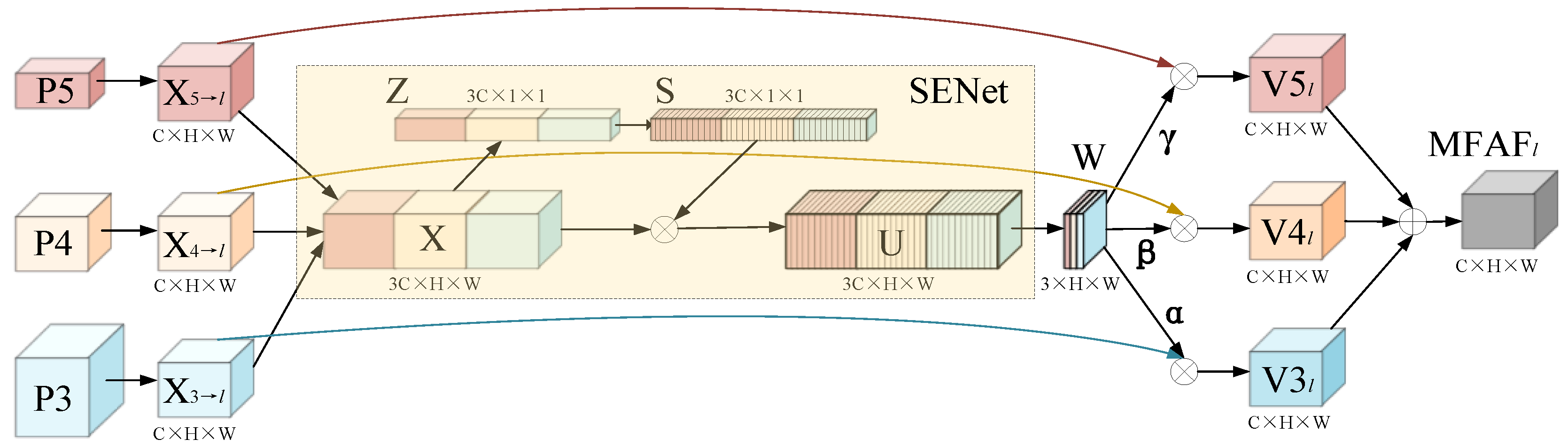

- A multiscale feature adaptive fusion (MFAF) module is proposed to fuse feature layers with three different scales and to adaptively adjust the contribution of the shallow and deep feature layers. This is more conducive to extracting the feature information of multiscale targets.

- (2)

- (3)

- (4)

- We use a coordinate attention (CA) [23] module as the backbone section of YOLOv5, which enhances the ability to extract features and improves the accuracy of aircraft recognition in SAR images.
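The idea of adaptively weighting shallow and deep feature layers can be illustrated with a small sketch. This is not the MFAF module itself, whose internals are not given in this section; it assumes the three feature maps have already been resized to a common shape and uses one learnable scalar logit per level, normalized with a softmax. The names `softmax` and `adaptive_fuse` are hypothetical.

```python
import math

def softmax(logits):
    """Turn arbitrary real-valued logits into positive weights that sum to 1."""
    m = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def adaptive_fuse(feature_maps, logits):
    """Weighted sum of same-sized 2-D feature maps (lists of rows).

    Assumption: one scalar weight per scale; a real module might instead
    learn a separate weight for every spatial position.
    """
    weights = softmax(logits)
    rows, cols = len(feature_maps[0]), len(feature_maps[0][0])
    return [
        [sum(w * fm[r][c] for w, fm in zip(weights, feature_maps)) for c in range(cols)]
        for r in range(rows)
    ]

# Three toy "scales", already resized to 1 x 2.
shallow, middle, deep = [[1.0, 1.0]], [[2.0, 2.0]], [[3.0, 3.0]]
fused_equal = adaptive_fuse([shallow, middle, deep], [0.0, 0.0, 0.0])
fused_deep = adaptive_fuse([shallow, middle, deep], [0.0, 0.0, 5.0])
```

With equal logits the result is the plain average of the three maps; raising one logit shifts the fused output toward that level, which is the sense in which the contribution of each layer is adjusted.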

2. Related Work

- Input: The images in the dataset are fed to the input section for training and validation. The input stage adopts mosaic data augmentation [24], which randomly scales, crops, and arranges four images and then splices them into a single training image. This enriches the dataset and improves GPU utilization. The input stage also uses adaptive image scaling to resize the original image to a standard size before it is sent into the network, which reduces the amount of computation and speeds up detection. Finally, the input stage uses adaptive anchor box calculation, which applies a K-means clustering algorithm to each training set to obtain the best initial anchor box values and accelerate convergence.

- Backbone: This extracts feature maps with different receptive fields. The backbone is mainly composed of CBS, C3, and SPPF modules. CBS is a composite convolution module composed of Conv2d, batch normalization (BN), and the SiLU activation, and it serves as the basic feature extraction component. C3 is composed of several bottleneck residual modules, which improve the learning ability of the model while reducing its computational cost and memory footprint without sacrificing recognition accuracy. SPPF passes the input serially through three max-pooling layers with 5 × 5 kernels and concatenates the input with the output of each max-pooling layer. It is used to extract features and enlarge the receptive field of the network. SPPF has the same function as SPP but is faster.

- Neck: This fuses the feature maps with different receptive fields. The neck combines the structures of the feature pyramid network (FPN) [25] and the path aggregation network (PAN) [26]. The FPN conveys semantic information from top to bottom, while the PAN conveys localization information from bottom to top. Combining the two improves the feature fusion capability of the network by aggregating features with different receptive fields from different backbone layers, which improves the recognition accuracy for multiscale targets and the detection of dense targets.

- Head: YOLOv5 has three detection heads, which predict the passenger aircraft targets at different scales in SAR images. The head section mainly involves the bounding box loss function and non-maximum suppression (NMS) [27]. YOLOv5 uses CIoU as the bounding box loss, which accounts for three geometric factors: the overlapping area, the distance between the box centers, and the aspect ratio. This helps the algorithm converge quickly. In the prediction stage, weighted NMS is applied to select the optimal target box from the many candidate boxes, which improves the recognition performance of the model.
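The two head components described above can be sketched in plain Python. The CIoU loss below follows the standard published form, 1 − IoU + ρ²/c² + αv, covering overlap, center distance, and aspect ratio. For suppression, the sketch uses plain greedy NMS rather than the weighted variant mentioned in the text, as a simpler stand-in. Boxes are assumed to be `(x1, y1, x2, y2)` tuples, and the function names are hypothetical.

```python
import math

def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def ciou_loss(pred, gt, eps=1e-9):
    """CIoU loss = 1 - IoU + center-distance term + aspect-ratio term."""
    overlap = iou(pred, gt)
    # Squared distance between the two box centers.
    rho2 = (((pred[0] + pred[2]) - (gt[0] + gt[2])) ** 2
            + ((pred[1] + pred[3]) - (gt[1] + gt[3])) ** 2) / 4.0
    # Squared diagonal of the smallest box enclosing both boxes.
    cw = max(pred[2], gt[2]) - min(pred[0], gt[0])
    ch = max(pred[3], gt[3]) - min(pred[1], gt[1])
    c2 = cw ** 2 + ch ** 2 + eps
    # Aspect-ratio consistency term v and its trade-off weight alpha.
    w, h = pred[2] - pred[0], pred[3] - pred[1]
    wg, hg = gt[2] - gt[0], gt[3] - gt[1]
    v = (4.0 / math.pi ** 2) * (math.atan(wg / (hg + eps)) - math.atan(w / (h + eps))) ** 2
    alpha = v / (1.0 - overlap + v + eps)
    return 1.0 - overlap + rho2 / c2 + alpha * v

def greedy_nms(boxes, scores, iou_thr=0.5):
    """Keep the highest-scoring boxes, dropping any that overlap a kept box too much."""
    order = sorted(range(len(boxes)), key=lambda k: scores[k], reverse=True)
    keep = []
    for k in order:
        if all(iou(boxes[k], boxes[j]) <= iou_thr for j in keep):
            keep.append(k)
    return keep

boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
kept = greedy_nms(boxes, scores=[0.9, 0.8, 0.7])
```

Unlike plain IoU loss, the center-distance term still provides a gradient signal when the predicted and ground-truth boxes do not overlap at all, which is one reason this family of losses tends to converge faster.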

3. Materials and Methods

3.1. Design of the Multiscale Feature Adaptive Fusion Module

3.2. Loss Function Update

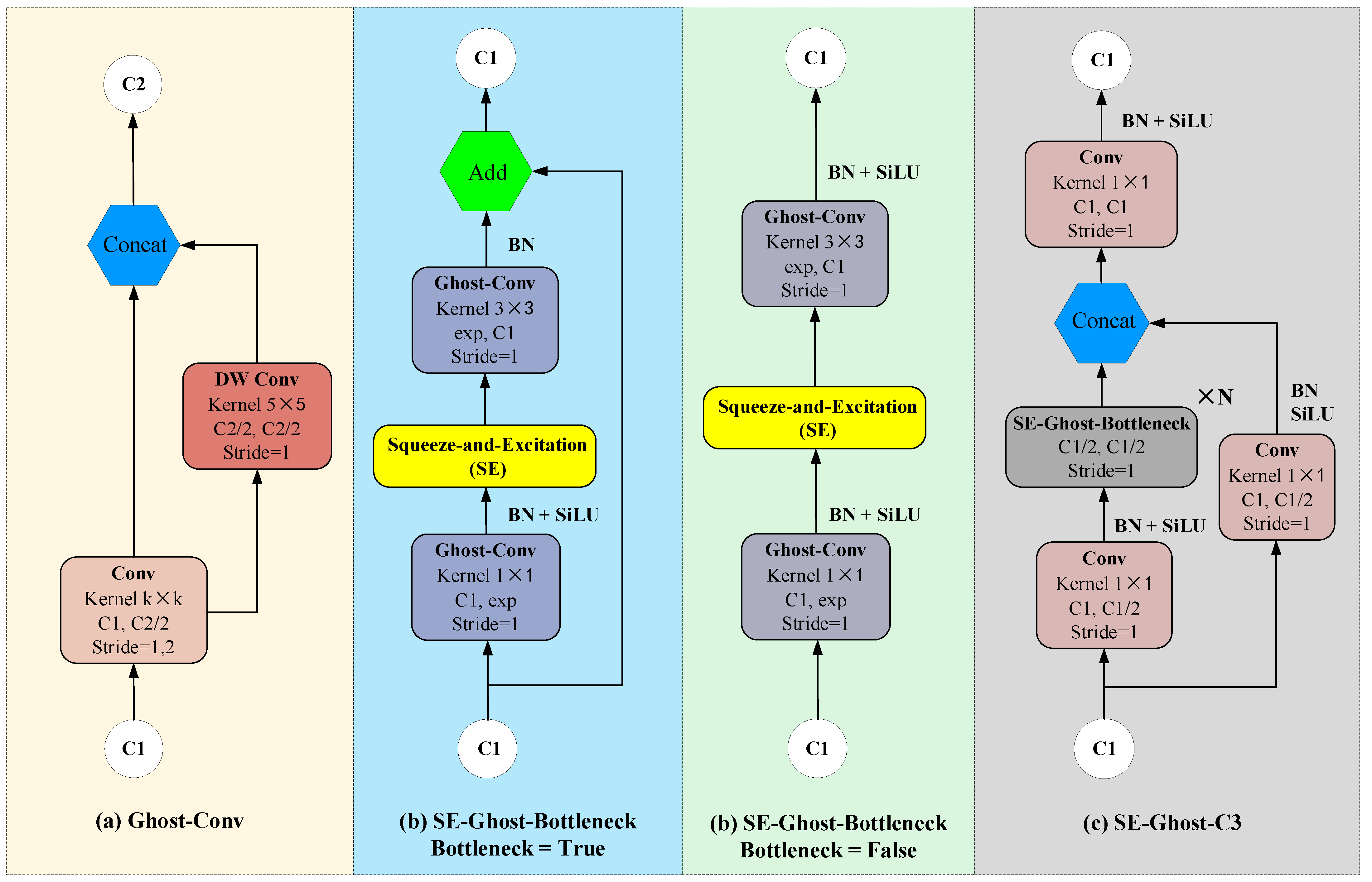

3.3. Design of the Ghost-Conv and SE-Ghost-C3 Lightweight Modules

3.4. Integration of the Coordinate Attention Module

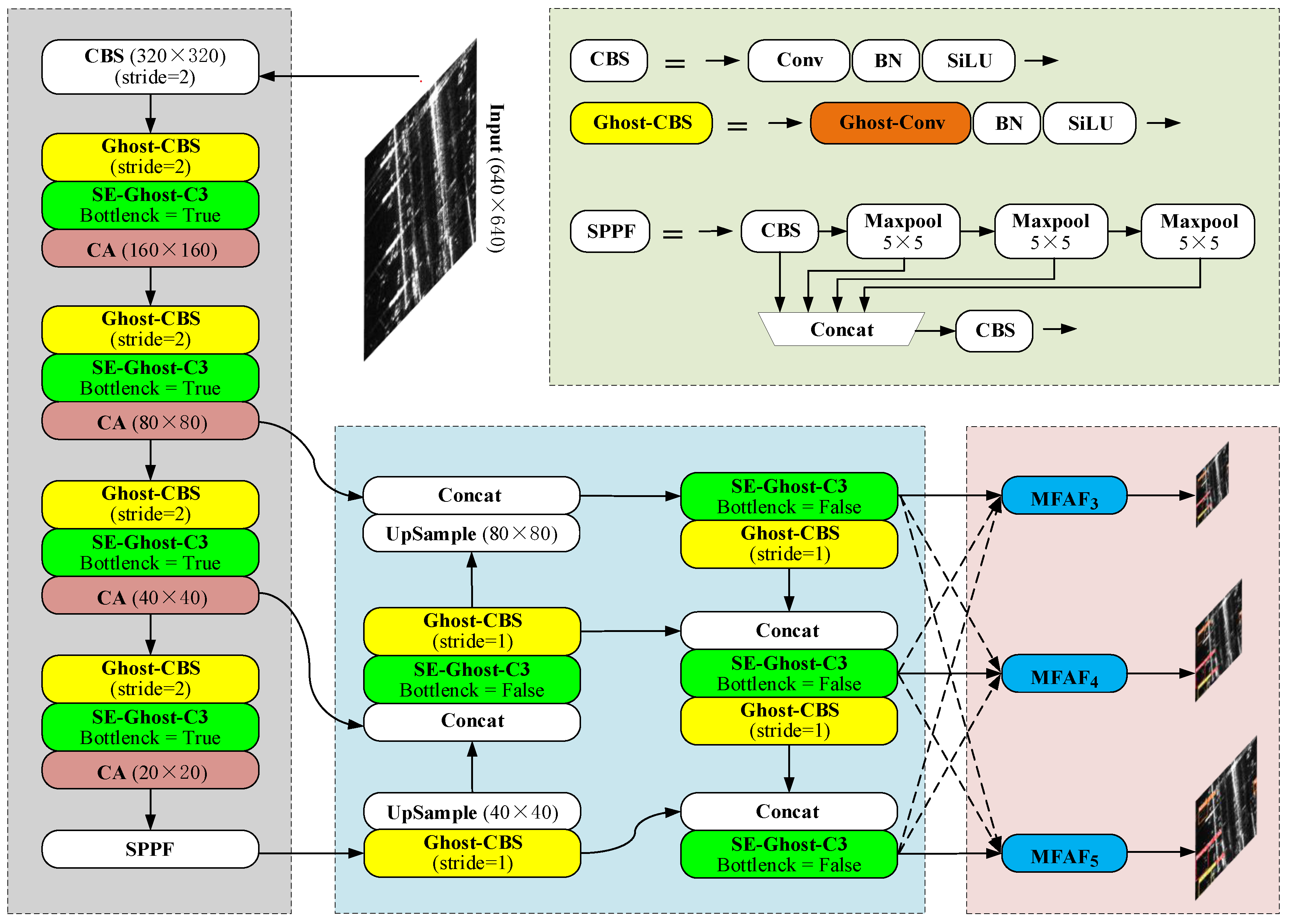

3.5. Improved YOLOv5 Structure

4. Experiments and Analysis

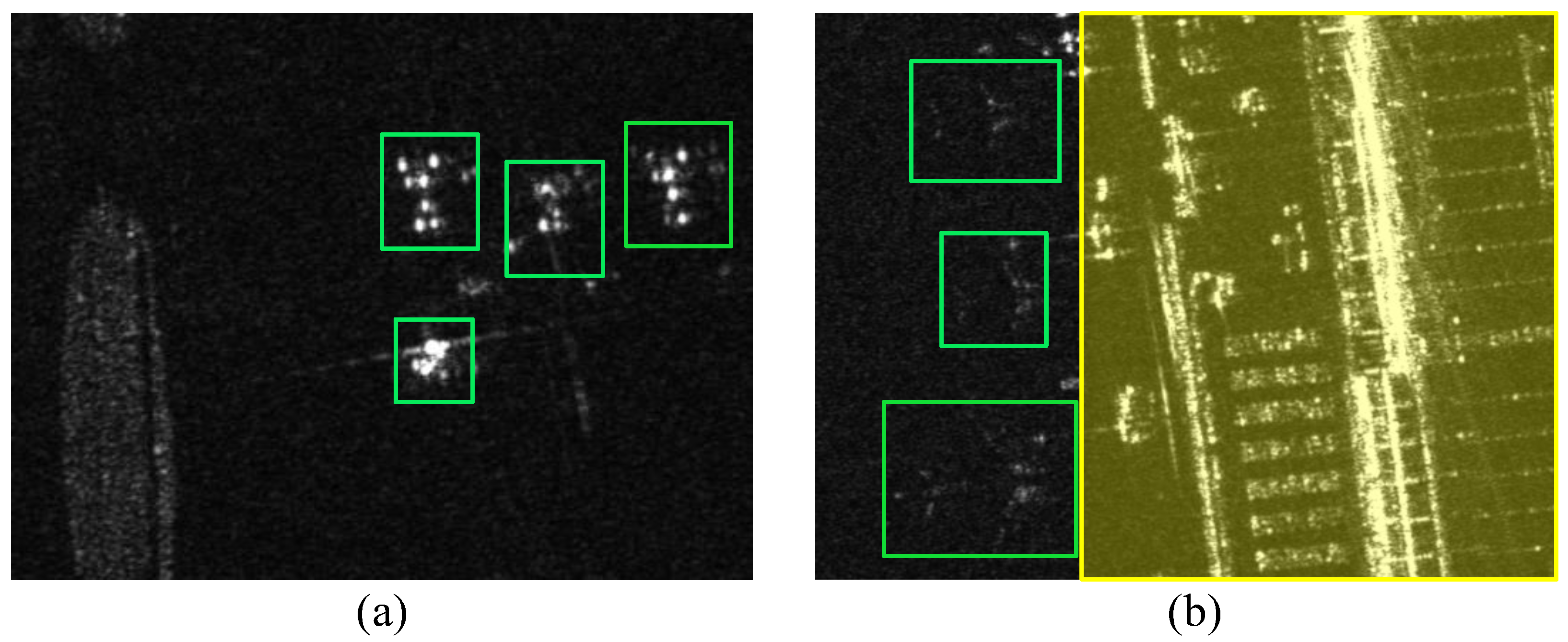

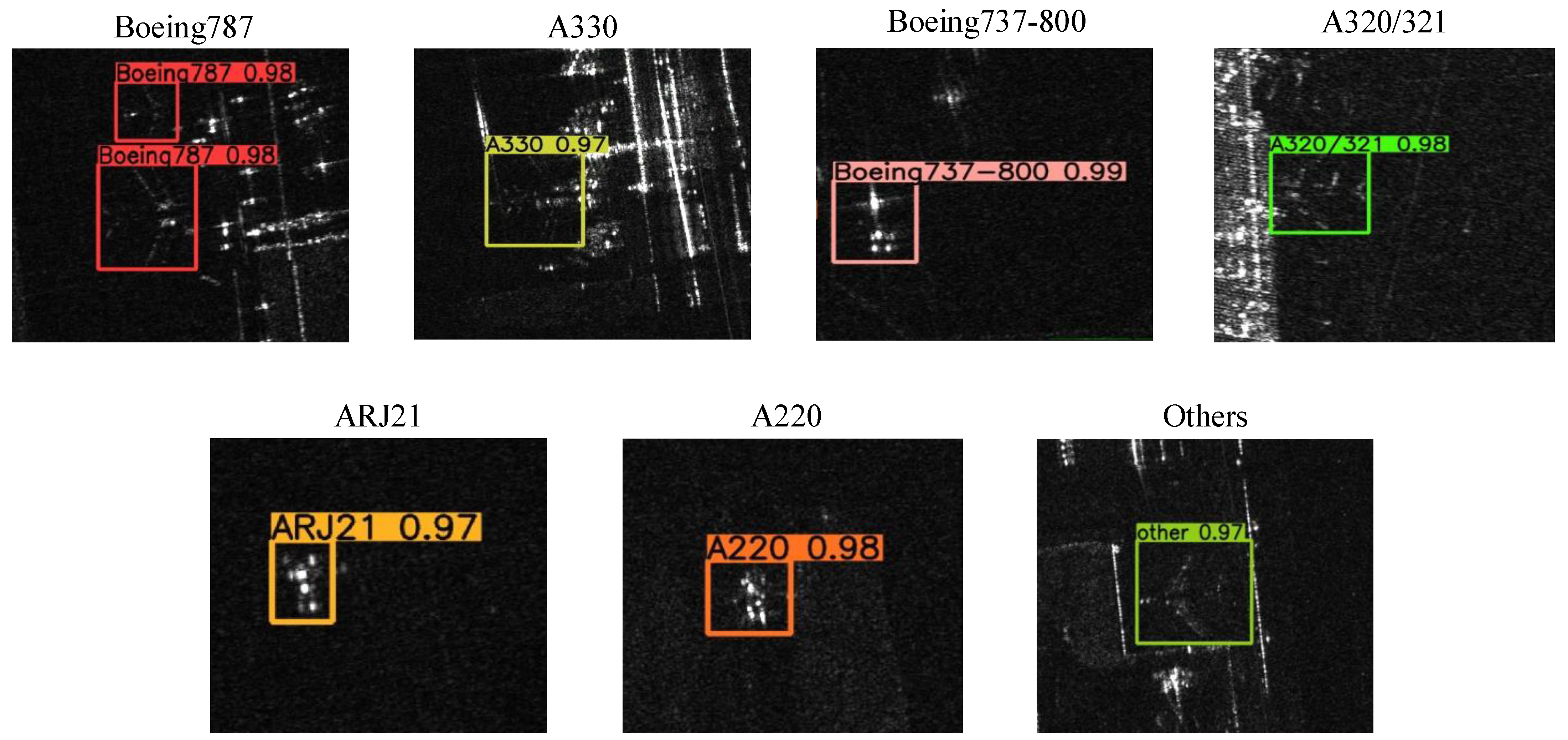

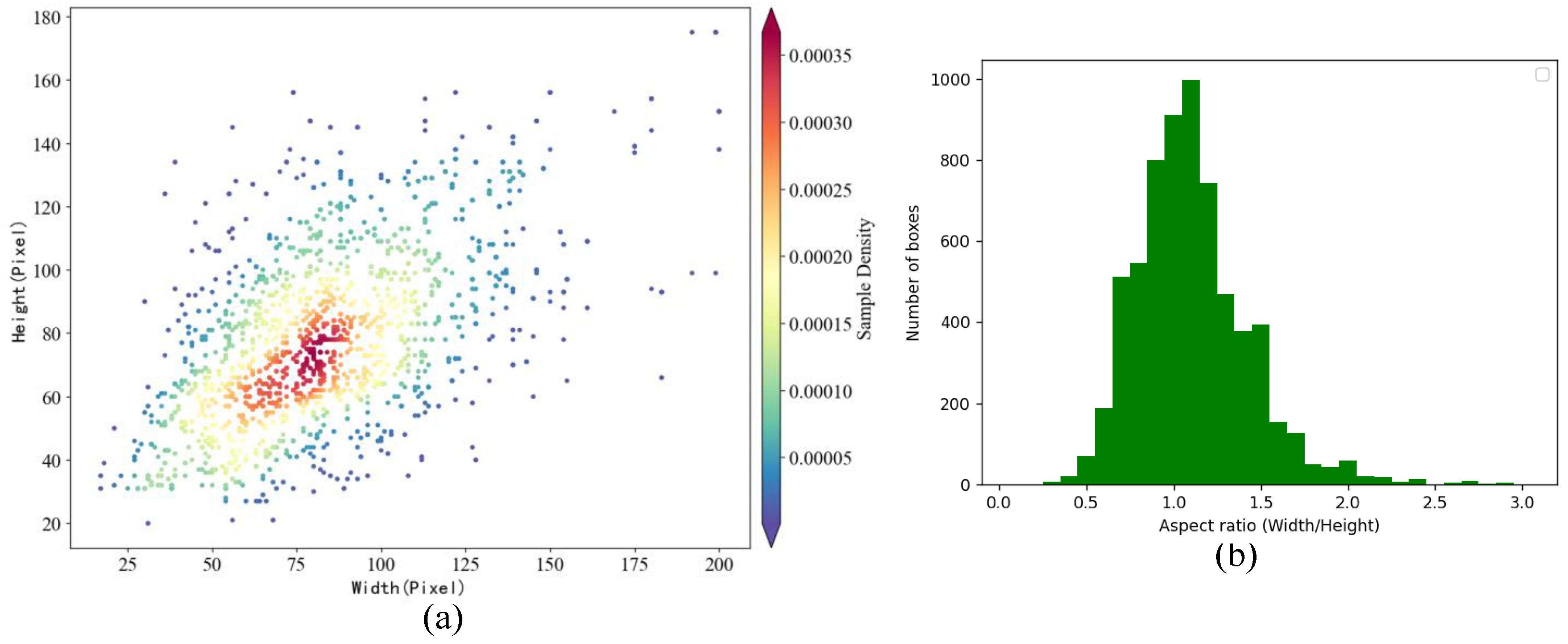

4.1. Description of the Dataset

4.2. Configuration of the Experimental Environment

4.3. Evaluation Indicators

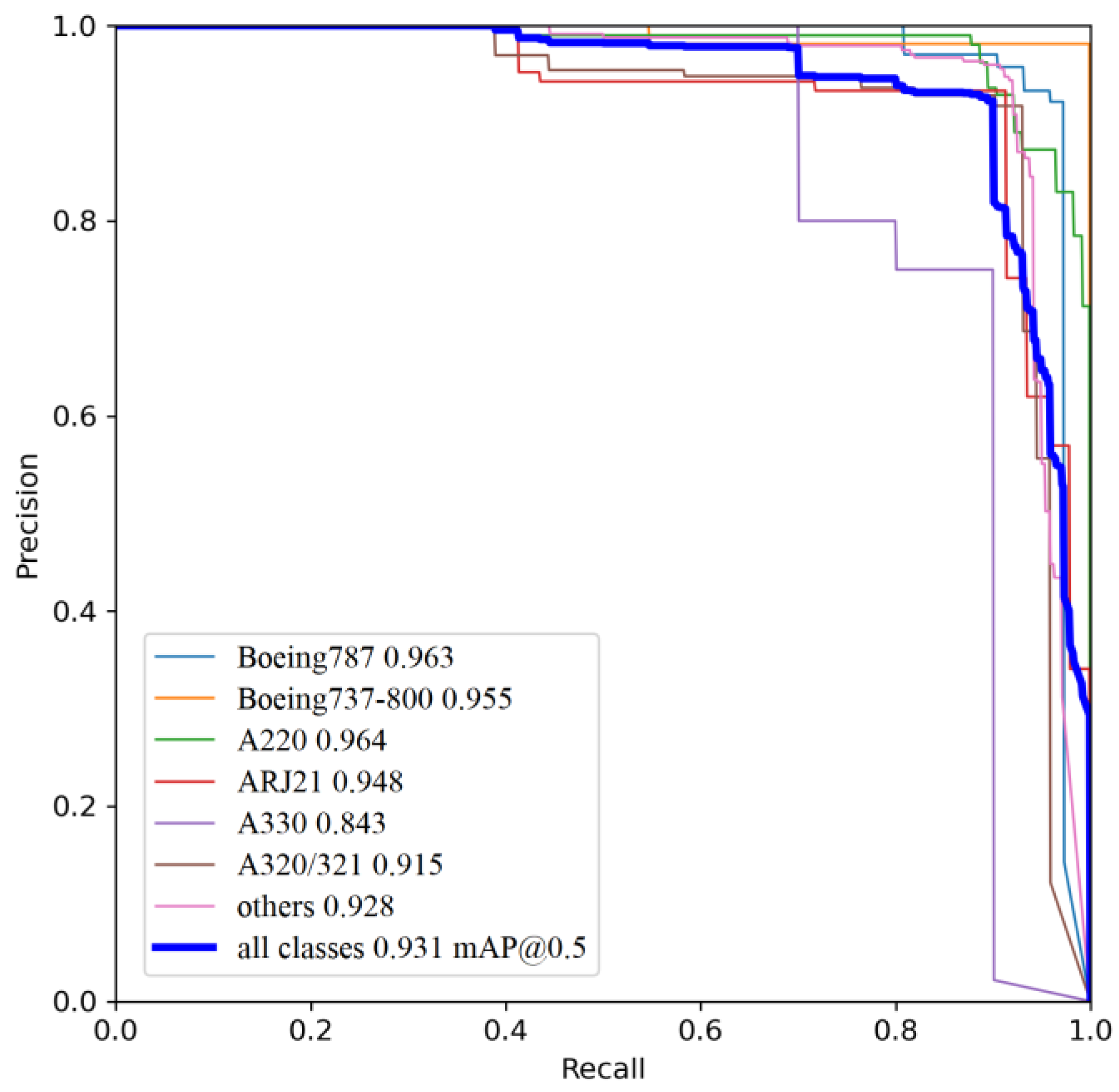

4.4. Analysis of the Experimental Results

4.5. Ablation Experiments

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Fu, K.; Dou, F.; Li, H.; Diao, W.; Sun, X.; Xu, G. Aircraft Recognition in SAR Images Based on Scattering Structure Feature and Template Matching. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 4206–4217.

2. He, C.; Tu, M.; Liu, X.; Xiong, D.; Liao, M. Mixture Statistical Distribution Based Multiple Component Model for Target Detection in High Resolution SAR Imagery. ISPRS Int. J. Geo-Inf. 2017, 6, 336.

3. Dou, F.; Diao, W.; Sun, X.; Zhang, Y.; Fu, K. Aircraft reconstruction in high-resolution SAR images using deep shape prior. ISPRS Int. J. Geo-Inf. 2017, 6, 330.

4. Feng, B.; Tang, W.; Feng, D. Target Recognition of SAR images via Hierarchical Fusion of Complementary Features. Opt. Int. J. Light Electron Opt. 2020, 217, 164695.

5. Zhang, Y.; Hao, Y. A Survey of SAR Image Target Detection Based on Convolutional Neural Networks. Remote Sens. 2022, 14, 6240.

6. Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

7. Girshick, R. Fast r-cnn. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 8–10 June 2015; pp. 1440–1448.

8. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

9. He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2961–2969.

10. Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C. SSD: Single shot multibox detector. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; pp. 21–37.

11. Lin, T.; Goyal, P.; Girshick, R.; He, K.; Dollar, P. Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2980–2988.

12. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

13. Zhang, L.; Li, C.; Zhao, L.; Xiong, B.; Kuang, G. A cascaded three-look network for aircraft detection in SAR images. Remote Sens. Lett. 2020, 11, 57–65.

14. Guo, Y.; Chen, S.; Zhan, R.; Wang, W.; Zhang, J. SAR Ship Detection Based on YOLOv5 Using CBAM and BiFPN. In Proceedings of the 2022 IEEE International Geoscience and Remote Sensing Symposium IGARSS, Kuala Lumpur, Malaysia, 17–22 July 2022; pp. 2147–2150.

15. Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018.

16. Tan, M.; Pang, R.; Le, Q.V. Efficientdet: Scalable and efficient object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 10781–10790.

17. Xiao, X.; Jia, H.; Xiao, P.; Wang, H. Aircraft Detection in SAR Images Based on Peak Feature Fusion and Adaptive Deformable Network. Remote Sens. 2022, 14, 6077.

18. Zheng, Z.; Wang, P.; Liu, W.; Li, J.; Ye, R.; Ren, D. Distance-IoU loss: Faster and better learning for bounding box regression. In Proceedings of the AAAI Conference on Artificial Intelligence 2020, New York, NY, USA, 7–12 February 2020; pp. 12993–13000.

19. Gevorgyan, Z. SIoU Loss: More Powerful Learning for Bounding Box Regression. arXiv 2022, arXiv:2205.12740.

20. Tian, Z.; Huang, J.; Yang, Y.; Nie, W. KCFS-YOLOv5: A High-Precision Detection Method for Object Detection in Aerial Remote Sensing Images. Appl. Sci. 2023, 13, 649.

21. Han, K.; Wang, Y.; Tian, Q.; Guo, J.; Xu, C.; Xu, C. Ghostnet: More features from cheap operations. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 14–19 June 2020; pp. 1580–1589.

22. Hu, Y.; Liu, G.; Chen, Z.; Guo, J. Object Detection Algorithm for Wheeled Mobile Robot Based on an Improved YOLOv4. Appl. Sci. 2022, 12, 4769.

23. Hou, Q.; Zhou, D.; Feng, J. Coordinate Attention for Efficient Mobile Network Design. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 13708–13717.

24. Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934.

25. Lin, Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature Pyramid Networks for Object Detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2117–2125.

26. Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path Aggregation Network for Instance Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8759–8768.

27. Bodla, N.; Singh, B.; Chellappa, R.; Davis, L.S. Soft-NMS -- Improving object detection with one line of code. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 5561–5569.

28. Liu, S.; Huang, D.; Wang, Y. Learning Spatial Fusion for Single-Shot Object Detection. arXiv 2019, arXiv:1911.09516.

29. Jie, H.; Li, S.; Samuel, A.; Gang, S.; Enhua, W. Squeeze-and-Excitation Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 2011–2023.

30. Wang, G.; Wang, K.; Lin, L. Adaptively connected neural networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 1781–1790.

31. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

32. Ihsanto, E.; Ramli, K.; Sudiana, D.; Gunawan, T.S. Fast and Accurate Algorithm for ECG Authentication Using Residual Depthwise Separable Convolutional Neural Networks. Appl. Sci. 2020, 10, 3304.

33. Howard, A.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications. arXiv 2017, arXiv:1704.04861.

34. Wang, C.Y.; Liao, H.Y.M.; Wu, Y.H.; Chen, P.Y.; Hsieh, J.W.; Yeh, I.H. CSPNet: A new backbone that can enhance learning capability of CNN. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 390–391.

35. Park, J.; Woo, S.; Lee, J.; Kweon, I.S. BAM: Bottleneck Attention Module. arXiv 2018, arXiv:1807.06514.

36. 2021 Gaofen Challenge on Automated High-Resolution Earth Observation Image Interpretation. Available online: http://gaofen-challenge.com (accessed on 1 October 2021).

| Parameter | Configuration |
|---|---|
| CPU | Intel(R) Core(TM) i7-7820X CPU @ 3.60 GHz |
| GPU | NVIDIA TITAN Xp |
| Accelerator | CUDA 10.2 |
| Framework | PyTorch 1.9 |
| Language | Python 3.8 |
| Epochs | 400 |
| Batch size | 32 |
| Learning rate | 0.01 |
| Optimizer | SGD |
| Mosaic | 1.0 |
| Flipud, Fliplr | 0, 0.5 |
| Scale | 0.5 |
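For readers reproducing the setup, the training settings in the configuration table can be collected in a plain Python dictionary. This is only a sketch: the key names `lr0`, `mosaic`, `flipud`, `fliplr`, and `scale` follow the naming used in the public YOLOv5 hyperparameter files, and mapping the table rows onto them (including reading "Learning rate" as the initial learning rate) is an assumption. `train_cfg` is a hypothetical name, not the authors' configuration file.

```python
# Hypothetical container mirroring the configuration table above.
train_cfg = {
    "epochs": 400,
    "batch_size": 32,
    "optimizer": "SGD",
    "hyp": {
        "lr0": 0.01,    # initial learning rate (assumed meaning of "Learning rate")
        "mosaic": 1.0,  # probability of applying mosaic augmentation
        "flipud": 0.0,  # probability of a vertical flip
        "fliplr": 0.5,  # probability of a horizontal flip
        "scale": 0.5,   # random scaling gain
    },
}
```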

| Method | Boeing 787 (AP50) | Boeing 737 (AP50) | A220 (AP50) | ARJ21 (AP50) | A330 (AP50) | A320/321 (AP50) | Others (AP50) | All (mAP50) |
|---|---|---|---|---|---|---|---|---|
| Faster R-CNN | 0.911 | 0.852 | 0.849 | 0.876 | 0.801 | 0.858 | 0.867 | 0.859 |
| RetinaNet | 0.850 | 0.815 | 0.809 | 0.843 | 0.758 | 0.796 | 0.811 | 0.812 |
| SSD | 0.832 | 0.805 | 0.789 | 0.867 | 0.764 | 0.783 | 0.797 | 0.805 |
| YOLOv3 | 0.898 | 0.911 | 0.873 | 0.916 | 0.744 | 0.852 | 0.871 | 0.866 |
| YOLOv5s | 0.890 | 0.906 | 0.874 | 0.914 | 0.816 | 0.854 | 0.855 | 0.873 |
| Improved YOLOv5s | 0.963 | 0.955 | 0.964 | 0.948 | 0.843 | 0.915 | 0.928 | 0.931 |
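The relationship between the per-class AP50 columns and the mAP50 column can be checked with a few lines of Python. The sketch assumes that mAP50 is the unweighted mean of the seven per-class AP50 values, which is the usual definition and is consistent with the reported figures; the variable names are illustrative.

```python
# Per-class AP50 values copied from the comparison table (seven classes).
ap50 = {
    "YOLOv5s": [0.890, 0.906, 0.874, 0.914, 0.816, 0.854, 0.855],
    "Improved YOLOv5s": [0.963, 0.955, 0.964, 0.948, 0.843, 0.915, 0.928],
}

def mean_ap(per_class_ap):
    """Unweighted mean of the per-class average precision values."""
    return sum(per_class_ap) / len(per_class_ap)

# Round to three decimals, matching the precision used in the table.
map50 = {name: round(mean_ap(values), 3) for name, values in ap50.items()}
gain = round(map50["Improved YOLOv5s"] - map50["YOLOv5s"], 3)
```

Under this assumption the recomputed means match the reported mAP50 entries for both models.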

| No. | MFAF | SIoU | SE-Ghost | CA | mAP50 | mAP75 | mAP50–95 | Params | GFLOPs | FPS |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | × | × | × | × | 0.873 | 0.735 | 0.632 | 7.0M | 16.0 | 116 |
| 2 | √ | × | × | × | 0.912 | 0.813 | 0.733 | 11.5M | 16.0 | 111 |
| 3 | × | √ | × | × | 0.881 | 0.747 | 0.651 | 7.0M | 16.0 | 127 |
| 4 | √ | √ | × | × | 0.926 | 0.843 | 0.759 | 11.5M | 16.0 | 118 |
| 5 | × | × | √ | × | 0.870 | 0.730 | 0.628 | 4.1M | 12.1 | 118 |
| 6 | √ | √ | √ | × | 0.922 | 0.840 | 0.753 | 8.4M | 12.1 | 119 |
| 7 | × | × | × | √ | 0.885 | 0.756 | 0.652 | 7.5M | 17.6 | 116 |
| 8 | √ | √ | √ | √ | 0.931 | 0.856 | 0.772 | 8.8M | 14.2 | 118 |
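To put the ablation figures in proportion, the change from the baseline (row 1) to the full model (row 8) can be expressed as relative percentages. The snippet below is a small arithmetic sketch using only values from the table; the dictionary keys and the helper `relative_change` are hypothetical names.

```python
# Row 1 (baseline YOLOv5s) and row 8 (all four modules) from the ablation table.
baseline = {"mAP50": 0.873, "params_M": 7.0, "GFLOPs": 16.0, "FPS": 116}
full = {"mAP50": 0.931, "params_M": 8.8, "GFLOPs": 14.2, "FPS": 118}

def relative_change(new, old):
    """Percentage change of `new` relative to `old`."""
    return 100.0 * (new - old) / old

changes = {key: relative_change(full[key], baseline[key]) for key in baseline}

for key, pct in changes.items():
    print(f"{key}: {pct:+.1f}%")
```

Read this way, the full model gains roughly 6.6% in mAP50 relative to the baseline and cuts GFLOPs by about 11%, at the cost of about 26% more parameters.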


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, X.; Hong, W.; Liu, Y.; Hu, D.; Xin, P. SAR Image Aircraft Target Recognition Based on Improved YOLOv5. Appl. Sci. 2023, 13, 6160. https://doi.org/10.3390/app13106160
