An Adaptive Multitask Network for Detecting the Region of Water Leakage in Tunnels

Abstract

1. Introduction

- (1) The background of leakage images is complex and depends heavily on the conditions of the actual project.

- (2) Water seepage and wet stains have similar structures and are affected by illumination and shooting angle, so the edge region is difficult to detect.

- (1)

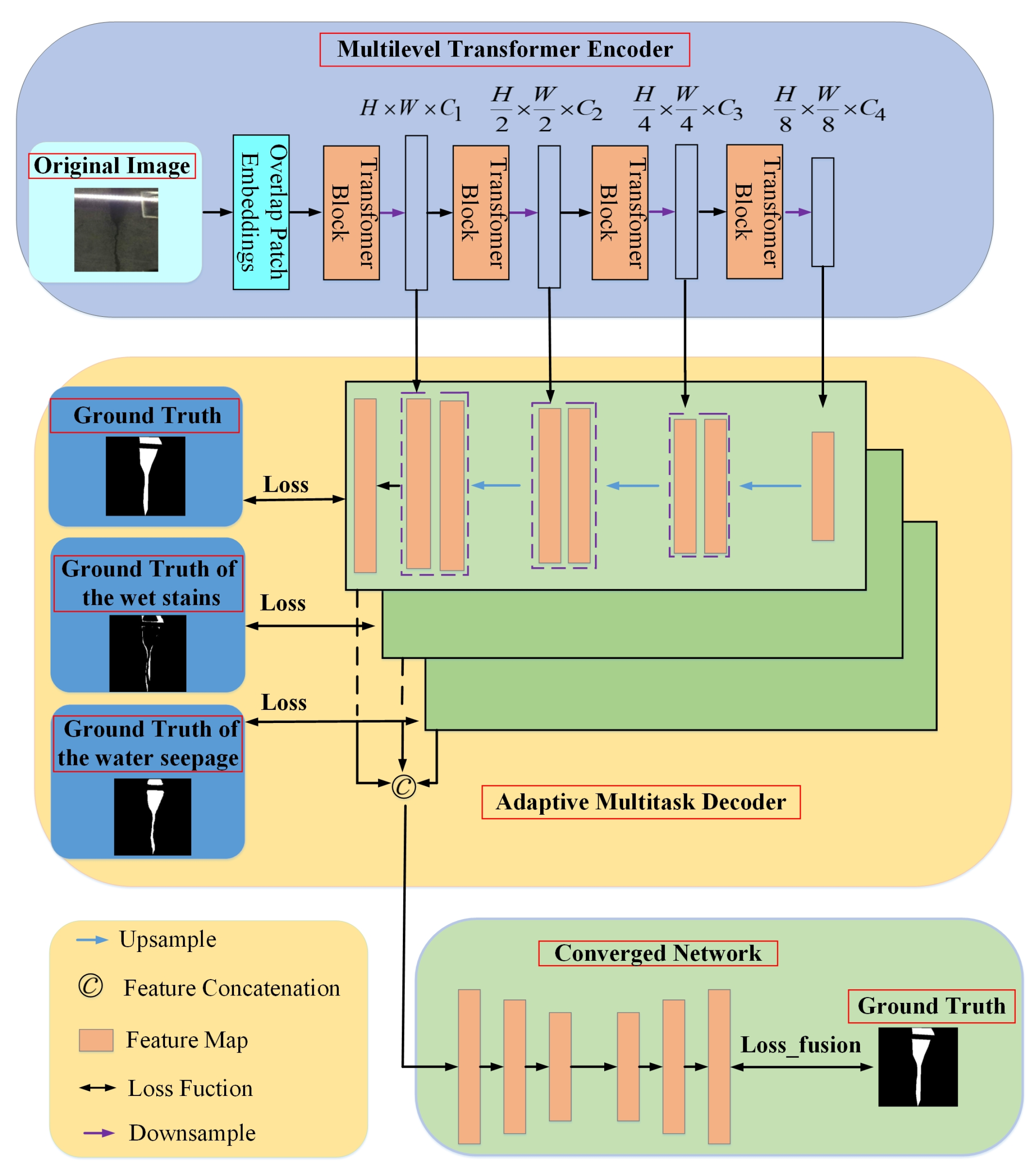

- Due to the complex background of the water leakage image, the information loss problem easily occurs during detection. To solve this problem, the encoder designed in this paper adopted a multilevel transformer and used depth separable convolution to mark the location information. Also, the multilevel transformer reduced the computational effort by lowering the length of sequences in the self-attentive mechanism and used a hierarchical transformer to extract multiple levels of features.

- (2)

- To solve the problem of unclear edge segmentation, we designed an adaptive multitask network branch that can automatically generate water seepage and wet stain labels without manual labeling. Then, the labels are input into the network training, and the fusion network fuses the rough segmentation map from the adaptive multitask decoder to get the final segmentation image.
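The two cost savings named in contribution (1) can be illustrated with simple counting. The sketch below is not the authors' implementation: it assumes that the sequence shortening applies only to keys and values by an integer factor `reduction` (a hypothetical parameter, in the style of hierarchical vision transformers), and it compares the weight count of a standard convolution with that of a depthwise separable one. Function names and example sizes are illustrative only.

```python
def attention_score_entries(height, width, reduction=1):
    """Number of entries in one head's attention score matrix.

    Queries keep the full sequence length N = height * width, while keys
    and values are shortened to N // reduction (assumed reduction scheme).
    """
    n = height * width
    reduced = max(1, n // reduction)
    return n * reduced


def conv_weight_count(kernel, c_in, c_out, separable=False):
    """Weight count (bias excluded) of a standard or depthwise separable conv."""
    if separable:
        # One k x k depthwise filter per input channel, then 1 x 1 pointwise mixing.
        return kernel * kernel * c_in + c_in * c_out
    return kernel * kernel * c_in * c_out


if __name__ == "__main__":
    full = attention_score_entries(64, 64)
    short = attention_score_entries(64, 64, reduction=4)
    print("attention entries:", full, "->", short)
    print("3x3 conv weights:", conv_weight_count(3, 64, 64),
          "->", conv_weight_count(3, 64, 64, separable=True))
```

Under these assumptions the score matrix shrinks in proportion to `reduction`, while the depthwise separable layer replaces one large weight tensor with a small per-channel filter plus a pointwise projection.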

2. Methods

2.1. Preliminaries

2.2. The Multilevel Transformer Encoder

2.2.1. Multiscale Feature

2.2.2. Overlapping Patch Merging

2.2.3. Self-Attention Mechanism

2.2.4. Positional Encoding

2.2.5. Hyperparameter Configuration in the Multilevel Transformer

2.3. The Adaptive Multitask Decoder

2.4. Converged Network

2.5. Loss Function

3. Datasets

3.1. Original Datasets

- (1) Segment joints are prevalent. The tunnel is assembled from segments, so leakage is more likely at the joints.

- (2) The leakage area does not have a single, simple appearance: the intra-class variation is large, and some leakage areas are disconnected.

- (3) Part of the target is occluded. The lining surface is fitted with lights, supports, equipment, pipelines, etc.

- (4) Background noise. Noise such as cement mortar and scratches is inevitably left on the segments during construction.

- (5) Illumination conditions. Because the lights are mounted at varying positions, parts of the lining surface are far from the light source, and the images are strongly affected by the lighting.

- (6) The edge of the leakage water is faint, and the boundary between seepage and wall is not clear.

3.2. Enhanced Datasets

4. Experiment Configuration

Evaluation Metrics

5. Results

5.1. Quality Result

5.2. Stability Results

5.3. Overfitting Analysis

5.4. Comparison with SOTA Methods

5.5. Ablation Study

6. Conclusions

- (1) The encoder is a multilevel transformer designed to address the limitations of ViT.

- (2) An adaptive multitask decoder is proposed to accurately segment water seepage and wet stains in tunnel water leakage images.

- (3) A converged network is designed to fuse the coarse segmentation maps produced by the adaptive multitask decoder.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Stage | Stride | Layer | Parameter |
|---|---|---|---|
| 1 | 1 | Patch Embed | 2 64 |
|  |  | Transformer Block | 2 |
| 2 | 2 | Patch Embed | 2 128 |
|  |  | Transformer Block | 2 |
| 3 | 2 | Patch Embed | 2 256 |
|  |  | Transformer Block | 2 |
| 4 | 2 | Patch Embed | 2 512 |
|  |  | Transformer Block | 2 |
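To make the stage configuration above concrete, the following sketch computes the feature-map size each stage would produce for a given input. It rests on two assumptions that the table does not confirm: that the second number in each Patch Embed row (64, 128, 256, 512) is the channel width, and that each patch embedding downsamples by exactly its stride. `STAGES` and `stage_shapes` are hypothetical names used only for illustration.

```python
# (stage, stride, channels); the channel values are an assumed reading
# of the second number in the "Parameter" column of the table.
STAGES = [
    (1, 1, 64),
    (2, 2, 128),
    (3, 2, 256),
    (4, 2, 512),
]


def stage_shapes(height, width, stages=STAGES):
    """Return (stage, out_height, out_width, channels) for every stage.

    Assumes each patch embedding divides the spatial size by its stride,
    so the strides accumulate multiplicatively from stage to stage.
    """
    shapes = []
    total_stride = 1
    for stage, stride, channels in stages:
        total_stride *= stride
        shapes.append((stage, height // total_stride, width // total_stride, channels))
    return shapes


if __name__ == "__main__":
    for stage, h, w, c in stage_shapes(512, 512):
        print(f"stage {stage}: {h} x {w} x {c}")
```

With the listed strides, the spatial resolution halves at stages 2 to 4 while the assumed channel width doubles, which is the usual pattern in a hierarchical encoder.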

| Category | Description | Train | Validation | Test |
|---|---|---|---|---|
| 1 | stitching + screw bolt | 41 | 15 | 6 |
| 2 | stitching + screw bolt + shielding | 35 | 12 | 7 |
| 3 | stitching + screw bolt + shadow | 60 | 14 | 5 |
| 4 | stitching + screw bolt + pipe + light | 31 | 7 | 8 |
| 5 | stitching + screw bolt + pipeline + light + shielding | 47 | 8 | 4 |
| 6 | region not connected | 45 | 9 | 7 |

| Confusion Matrix: predicted value (rows) / actual value (columns) | Water Leakage | Background |
|---|---|---|
| Water Leakage | TP | FP |
| Background | FN | TN |
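The metrics reported in the result tables (PA, F1, MIOU, FWIOU, Dice) can all be derived from the four confusion-matrix counts. The sketch below uses the standard textbook definitions for a two-class problem (water leakage vs. background); the exact formulas and averaging used in the paper are not given in this section, so treat this as an assumption. The function name `segmentation_metrics` is illustrative, and the code assumes all denominators are nonzero.

```python
def segmentation_metrics(tp, fp, fn, tn):
    """Standard binary segmentation metrics from confusion-matrix counts."""
    total = tp + fp + fn + tn
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)

    # Per-class intersection over union.
    iou_leak = tp / (tp + fp + fn)
    iou_bg = tn / (tn + fn + fp)

    # Class frequencies measured on the actual (ground-truth) pixels.
    freq_leak = (tp + fn) / total
    freq_bg = (tn + fp) / total

    return {
        "PA": (tp + tn) / total,
        "F1": 2 * precision * recall / (precision + recall),
        "MIOU": (iou_leak + iou_bg) / 2,
        "FWIOU": freq_leak * iou_leak + freq_bg * iou_bg,
        "Dice": 2 * tp / (2 * tp + fp + fn),
    }


if __name__ == "__main__":
    # Hypothetical pixel counts, not taken from the paper.
    for name, value in segmentation_metrics(tp=80, fp=10, fn=10, tn=900).items():
        print(f"{name}: {value:.4f}")
```

Note that for a single binary map these definitions make Dice and the leakage-class F1 identical. The tables report different values for the two, which suggests the paper averages them differently (for example per image or per class); that detail cannot be recovered from this section.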

| Environments | Dice | MIOU | PA | F1 |
|---|---|---|---|---|
| Additive noise | 0.948 | 0.903 | 0.981 | 0.946 |
| Chaotic backgrounds | 0.956 | 0.907 | 0.985 | 0.951 |
| Geometric modifications | 0.946 | 0.898 | 0.972 | 0.946 |
| Uneven illumination | 0.949 | 0.896 | 0.965 | 0.939 |
| Object occlusion | 0.958 | 0.909 | 0.961 | 0.938 |

| Method | F1 | PA | MIOU | FWIOU | Dice |
|---|---|---|---|---|---|
| Ours | 0.947 | 0.983 | 0.904 | 0.971 | 0.951 |
| TransUNet | 0.916 | 0.961 | 0.858 | 0.936 | 0.915 |
| UNet++ | 0.801 | 0.791 | 0.662 | 0.833 | 0.794 |
| UNet 3+ | 0.848 | 0.834 | 0.725 | 0.856 | 0.844 |
| DeepLabv3+ | 0.785 | 0.784 | 0.821 | 0.832 | 0.756 |

| R | MIOU | Dice | PA | F1 |
|---|---|---|---|---|
| 0.5 | 0.822 | 0.852 | 0.937 | 0.931 |
| 0.75 | 0.904 | 0.951 | 0.983 | 0.947 |
| 0.9 | 0.833 | 0.862 | 0.943 | 0.926 |

| Method | F1 | PA | MIOU | FWIOU | Dice |
|---|---|---|---|---|---|
| Adaptive Multitask Network | 0.958 | 0.988 | 0.906 | 0.962 | 0.948 |
| Single-task Network | 0.912 | 0.951 | 0.852 | 0.923 | 0.921 |

| Method | F1 | PA | MIOU | FWIOU | Dice |
|---|---|---|---|---|---|
| Adaptive Multitask Network | 0.937 | 0.964 | 0.884 | 0.956 | 0.928 |
| Single-task Network | 0.906 | 0.932 | 0.836 | 0.896 | 0.906 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhao, L.; Wang, J.; Liu, S.; Yang, X. An Adaptive Multitask Network for Detecting the Region of Water Leakage in Tunnels. Appl. Sci. 2023, 13, 6231. https://doi.org/10.3390/app13106231
