5.2. Binocular Camera Target Detection and Tracking

The neural network adopted in this experiment was Yolo v5s, whose model configuration is identical to those of the other Yolo v5 series networks except for the depth_multiple and width_multiple parameters. Yolo v5s has the shallowest depth setting, with no module repetition, which enables faster processing in large-target recognition. The Yolo v5 loss function comprises classification, localization, and confidence losses, and the total loss is the weighted sum of the three. The confidence and classification losses are computed with binary cross-entropy, and the localization loss is computed with the CIoU loss.
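As an illustration of how the three terms combine, the following Python sketch computes a CIoU-based localization term and binary cross-entropy terms for a single prediction and sums them with weights. It is a minimal sketch, not the Yolo v5 implementation: the function names and the weights `w_box`, `w_obj`, and `w_cls` are placeholders chosen for the example, and boxes are assumed to be given as `(x1, y1, x2, y2)`.

```python
import math

def ciou(box_a, box_b, eps=1e-9):
    """Complete IoU between two boxes given as (x1, y1, x2, y2)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    # Intersection and union areas
    iw = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    ih = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = iw * ih
    wa, ha = ax2 - ax1, ay2 - ay1
    wb, hb = bx2 - bx1, by2 - by1
    iou = inter / (wa * ha + wb * hb - inter + eps)
    # Squared center distance divided by squared diagonal of the enclosing box
    cw = max(ax2, bx2) - min(ax1, bx1)
    ch = max(ay2, by2) - min(ay1, by1)
    c2 = cw ** 2 + ch ** 2 + eps
    rho2 = ((ax1 + ax2 - bx1 - bx2) ** 2 + (ay1 + ay2 - by1 - by2) ** 2) / 4.0
    # Aspect-ratio consistency term
    v = (4 / math.pi ** 2) * (math.atan(wb / (hb + eps)) - math.atan(wa / (ha + eps))) ** 2
    alpha = v / (1 - iou + v + eps)
    return iou - rho2 / c2 - alpha * v

def bce(p, y, eps=1e-7):
    """Binary cross-entropy for one probability p and one label y in {0, 1}."""
    p = min(max(p, eps), 1 - eps)
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))

def total_loss(box_pred, box_true, obj_p, obj_y, cls_p, cls_y,
               w_box=0.05, w_obj=1.0, w_cls=0.5):
    """Weighted sum of localization, confidence, and classification losses.

    The weights are illustrative placeholders, not values from the paper.
    """
    loc = 1.0 - ciou(box_pred, box_true)
    return w_box * loc + w_obj * bce(obj_p, obj_y) + w_cls * bce(cls_p, cls_y)
```

For identical boxes the CIoU is 1, so the localization term vanishes; for disjoint boxes the center-distance penalty makes the CIoU negative, which still gives a useful gradient when the plain IoU is zero.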

- (1) Yolo v5s training

The dataset used in this experiment consists of the images labeled “person” in the public PASCAL VOC dataset. To enhance the recognition ability of the model in densely populated environments, we added 9000 dense pedestrian pictures that we collected ourselves. The dataset size and the number of pedestrians are shown in Table 3. For the experiment, the dataset was divided into training, validation, and test sets in a ratio of 7:2:1.
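A 7:2:1 partition of this kind can be sketched as follows. The function name `split_dataset`, the fixed random seed, and the use of integer sample identifiers are assumptions made for the example; the paper does not state how the split was implemented.

```python
import random

def split_dataset(items, ratios=(7, 2, 1), seed=0):
    """Shuffle items and split them into train/validation/test subsets."""
    items = list(items)
    random.Random(seed).shuffle(items)  # reproducible shuffle
    total = sum(ratios)
    n_train = len(items) * ratios[0] // total
    n_val = len(items) * ratios[1] // total
    train = items[:n_train]
    val = items[n_train:n_train + n_val]
    test = items[n_train + n_val:]  # remainder goes to the test set
    return train, val, test

# Hypothetical usage with 1000 image identifiers
train, val, test = split_dataset(range(1000))
```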

We selected the Faster-RCNN and Yolo v3, v4, and v5s neural networks for comparison. The detection accuracy and running speed of each network are shown in Table 4.

During training, the Yolo v5s network (release v5.0) was initialized with the Yolov5s pretrained weights; the training image size was 640 × 640, the batch size was 32, max-det was 1000, and the number of epochs was 100. The mAP, precision, recall, and loss of the training results are shown in Figure 9, Figure 10, Figure 11 and Figure 12.

After the final iteration, the loss was about 0.04786, the mAP converged to 0.70475, and the precision and recall converged to 0.79443 and 0.61314, respectively. The dense crowd test is shown in Figure 13. The detection performance of the model met the experimental requirements.

- (2) DeepSORT multitarget tracking algorithm

Existing mainstream target-tracking algorithms are based on a tracking-by-detection strategy; that is, they function in accordance with the detection results. This strategy is employed in DeepSORT to track a crowd in a multiperson environment, whereby the number marked on each box is a unique ID to identify a person.

DeepSORT processes each frame as follows:

Obtain bounding boxes → generate detections → Kalman filter prediction → match the predicted tracks with the detections in the current frame using the Hungarian algorithm (cascade matching and IoU matching) → Kalman filter update.
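The IoU matching stage of this pipeline can be illustrated with a small sketch. It covers only the assignment of predicted track boxes to detections by IoU cost; the Kalman filter and the appearance-based cascade matching are omitted. To keep the example dependency-free, a brute-force search over permutations stands in for the Hungarian algorithm (it finds the same minimum-cost assignment but is only practical for a handful of boxes). The function names and the `iou_threshold` value are assumptions.

```python
from itertools import permutations

def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def match(tracks, detections, iou_threshold=0.3):
    """Return (matches, unmatched_tracks, unmatched_detections)."""
    n = max(len(tracks), len(detections))
    if n == 0:
        return [], [], []
    # Square cost matrix (1 - IoU); padding entries keep the maximum cost 1.0
    cost = [[1.0] * n for _ in range(n)]
    for i, t in enumerate(tracks):
        for j, d in enumerate(detections):
            cost[i][j] = 1.0 - iou(t, d)
    # Brute-force optimal assignment (stand-in for the Hungarian algorithm)
    best = min(permutations(range(n)),
               key=lambda p: sum(cost[i][p[i]] for i in range(n)))
    matches = [(i, best[i]) for i in range(len(tracks))
               if best[i] < len(detections)
               and cost[i][best[i]] <= 1.0 - iou_threshold]
    matched_t = {i for i, _ in matches}
    matched_d = {j for _, j in matches}
    unmatched_t = [i for i in range(len(tracks)) if i not in matched_t]
    unmatched_d = [j for j in range(len(detections)) if j not in matched_d]
    return matches, unmatched_t, unmatched_d

# Hypothetical boxes: two tracks, three detections
tracks = [(0, 0, 10, 10), (20, 20, 30, 30)]
detections = [(21, 21, 31, 31), (1, 1, 11, 11), (100, 100, 110, 110)]
result = match(tracks, detections)
```

In this example the unmatched third detection would start a new track, which is how a new person entering the scene receives a new ID.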

- (3) Training of the tracking network

The Market-1501 dataset was used for training; it was collected on the Tsinghua University campus and publicly released in 2015. It consists of 1501 pedestrians captured by six cameras (five high-definition cameras and one low-resolution camera), which resulted in a total of 32,668 pedestrian bounding boxes. Each pedestrian was captured by at least two cameras, and multiple images of the same person may have been taken with different cameras. The training set contained 751 individuals with 12,936 images, an average of 17.2 training images per person, whereas the test set contained 750 individuals with 19,732 images, an average of 26.3 test images per person.

After partitioning the dataset, a feature extraction model was trained for 100 epochs. The tracking algorithm was evaluated using three metrics: multiple object tracking accuracy (MOTA), identification F1 (IDF1), and frame rate. MOTA measures the performance of the tracker in object detection and trajectory maintenance, whereas IDF1 focuses more on identity preservation. IDF1 assesses the tracker’s ability to keep tracking the same object in a video over a long period; it is the F1 score of the detections that carry the correct identity, relative to the ground-truth and computed detections in the video. The frame rate reflects the processing speed. The evaluation results of the DeepSORT algorithm are shown in Table 5. The evaluation results demonstrated that the tracking network could fulfill the tracking tasks in the experiments.
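For reference, the two accuracy metrics can be written directly from their standard definitions. The sketch below assumes the error counts have already been accumulated over a whole sequence; the numbers in the usage lines are invented for illustration and are not results from this experiment.

```python
def mota(false_negatives, false_positives, id_switches, num_gt):
    """Multiple object tracking accuracy: 1 - (FN + FP + IDSW) / GT."""
    return 1.0 - (false_negatives + false_positives + id_switches) / num_gt

def idf1(idtp, idfp, idfn):
    """Identification F1: 2*IDTP / (2*IDTP + IDFP + IDFN)."""
    return 2.0 * idtp / (2.0 * idtp + idfp + idfn)

# Hypothetical counts
example_mota = mota(false_negatives=10, false_positives=5, id_switches=5, num_gt=100)
example_idf1 = idf1(idtp=80, idfp=20, idfn=20)
```

Because identity switches enter MOTA only as one additive error term, a tracker can score well on MOTA while swapping IDs often; IDF1 penalizes such swaps more directly.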

- (4) Detection and tracking processes

- (a) Target detection using Yolo v5s: target detection was performed on each image using the Yolo v5s neural network to identify the location and class of each target.

- (b) Preprocessing the detection results: the detection results were converted to the format required by the DeepSORT algorithm; that is, they were converted to the center coordinates and bounding box size of each target.

- (c) Running the DeepSORT algorithm: each target was tracked by the DeepSORT algorithm, which requires an initialized target list; thus, the ID of each target must be manually specified in the first frame.

- (d) Target-state update: for each new frame, the detection results were matched with the tracking results of the previous frame to update the state of each target, whereby the state estimation and prediction were generally based on the Kalman filter.

- (e) Output: the tracking results were drawn on the image.
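The preprocessing in step (b) amounts to a coordinate conversion. The sketch below assumes each detection row has the layout `(x1, y1, x2, y2, confidence, class_id)` and that class 0 is “person”; both the layout and the threshold are assumptions for the example, not details taken from the paper.

```python
def xyxy_to_cxcywh(box):
    """Convert corner format (x1, y1, x2, y2) to (cx, cy, w, h)."""
    x1, y1, x2, y2 = box
    return ((x1 + x2) / 2.0, (y1 + y2) / 2.0, x2 - x1, y2 - y1)

def prepare_detections(rows, conf_threshold=0.5, person_class=0):
    """Keep confident person detections and convert them for the tracker.

    rows: iterable of (x1, y1, x2, y2, confidence, class_id), an assumed layout.
    Returns a list of ((cx, cy, w, h), confidence) pairs.
    """
    prepared = []
    for x1, y1, x2, y2, conf, cls in rows:
        if cls == person_class and conf >= conf_threshold:
            prepared.append((xyxy_to_cxcywh((x1, y1, x2, y2)), conf))
    return prepared

# Hypothetical detector output for one frame
rows = [
    (100, 50, 200, 350, 0.91, 0),  # confident person
    (300, 60, 380, 340, 0.32, 0),  # low-confidence person, dropped
    (10, 10, 60, 40, 0.88, 2),     # other class, dropped
]
prepared = prepare_detections(rows)
```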

5.3. Binocular Camera Ranging

- (1) Binocular parameter extraction

We captured 45 checkerboard images using a stereo camera setup, with both the left and right camera images containing distinct and complete checkerboard patterns, as shown in Figure 14.

The images captured by the stereo camera setup were segmented and imported into MATLAB’s stereo camera calibrator. In the stereo camera calibrator, the square size of the checkerboard was set to 40 mm. The calibrator automatically discarded any left and right image pairs with excessive errors and then performed the stereo calibration. The calibration outputs the camera intrinsic matrices, distortion coefficients, rotation matrix, translation matrix, and other parameters; see Table 6, Table 7, Table 8 and Table 9.

Focal length = 1396.84 (unit: pixels).

Baseline distance (baseline) = 121.9064 (unit: mm).

- (2) Binocular camera rectification

The purpose of rectifying a stereo camera [20] is to apply mathematical projection transformations to the left and right views captured from the same scene so that the two image planes become coplanar and parallel to the baseline, a process that is commonly referred to as coplanar alignment. After rectification, the epipolar lines in the two images are completely horizontal, so the pixel positions of the same point in space lie in the same row in both images. Once coplanar alignment is achieved, the distance can be calculated using the triangulation principle in stereo vision. The schematic diagram of the binocular camera rectification principle is shown in Figure 15.

- (3) Depth information acquisition

The feature points in the left and right images are matched and compared in terms of attributes to determine the corresponding matched pairs. For each matched point pair, the disparity (parallax) is obtained by calculating the difference between the corresponding pixel positions in the left and right images. The disparity, which is inversely proportional to the object distance, is the difference in the image position of the same object between the two viewpoints. Based on the disparity and the calibration parameters, an object’s distance can be calculated using triangulation:

$$Z = \frac{f \, b}{d - (c_{xl} - c_{xr})}$$

where $Z$ is the distance of the object, $f$ represents the focal length (in pixels), $b$ denotes the baseline length, $d$ signifies the disparity, and $c_{xl}$ and $c_{xr}$ are the column coordinates of the principal points of the two cameras.
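The triangulation step can be sketched numerically with the calibration values quoted above. The sketch assumes the usual rectified-stereo relation, distance = focal length × baseline / (disparity − principal-point offset), and assumes that the quoted focal length of 1396.84 is expressed in pixels, as the symbol definitions require. The function name and the default principal-point columns (equal, so their offset is zero) are illustrative.

```python
FOCAL_PX = 1396.84      # focal length, assumed to be in pixels
BASELINE_MM = 121.9064  # baseline length in millimeters

def depth_mm(disparity_px, cx_left=0.0, cx_right=0.0,
             focal_px=FOCAL_PX, baseline_mm=BASELINE_MM):
    """Distance in millimeters from disparity for a rectified stereo pair."""
    effective = disparity_px - (cx_left - cx_right)
    if effective <= 0:
        raise ValueError("effective disparity must be positive")
    return focal_px * baseline_mm / effective

def disparity_px(depth_in_mm, focal_px=FOCAL_PX, baseline_mm=BASELINE_MM):
    """Inverse relation (equal principal points): disparity for a given distance."""
    return focal_px * baseline_mm / depth_in_mm

# A 100-pixel disparity corresponds to roughly 1.7 m with these parameters
z = depth_mm(100.0)
```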

As per the above equation, the depth accuracy of a pixel depends on the accuracy of the estimated disparity (d) at that pixel. Assuming a constant error in the disparity estimation, the depth accuracy deteriorates as the measured distance increases, which causes stereo cameras to be less suitable for measuring distant objects. To obtain reliable depth estimates for distant targets, the baseline distance of the cameras needs to be increased, but larger baseline distances result in smaller overlapping regions and greater content differences between left and right views, which, in turn, increases the difficulty of stereo matching. Cameras with larger focal lengths can be chosen, but larger focal lengths result in a smaller field of view, which makes it challenging to estimate the distance of objects close to the cameras. Therefore, for our experiment, human bodies were detected at distances ranging from 0.3 to 3 m.
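The growth of the depth error with distance follows from differentiating the triangulation relation: for a fixed disparity error, the depth error scales with the square of the distance divided by the product of focal length and baseline. The sketch below evaluates this first-order approximation at the two ends of the 0.3 to 3 m working range, assuming a disparity error of one pixel and a focal length of 1396.84 pixels; both are assumptions for illustration.

```python
def depth_error_mm(z_mm, disparity_error_px=1.0,
                   focal_px=1396.84, baseline_mm=121.9064):
    """First-order depth error: dZ ~ Z^2 / (f * b) * dd."""
    return z_mm ** 2 / (focal_px * baseline_mm) * disparity_error_px

near_error = depth_error_mm(300.0)   # at 0.3 m
far_error = depth_error_mm(3000.0)   # at 3 m
```

Under these assumptions the error is roughly 0.5 mm at 0.3 m and roughly 53 mm at 3 m, a hundredfold increase for a tenfold increase in distance.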

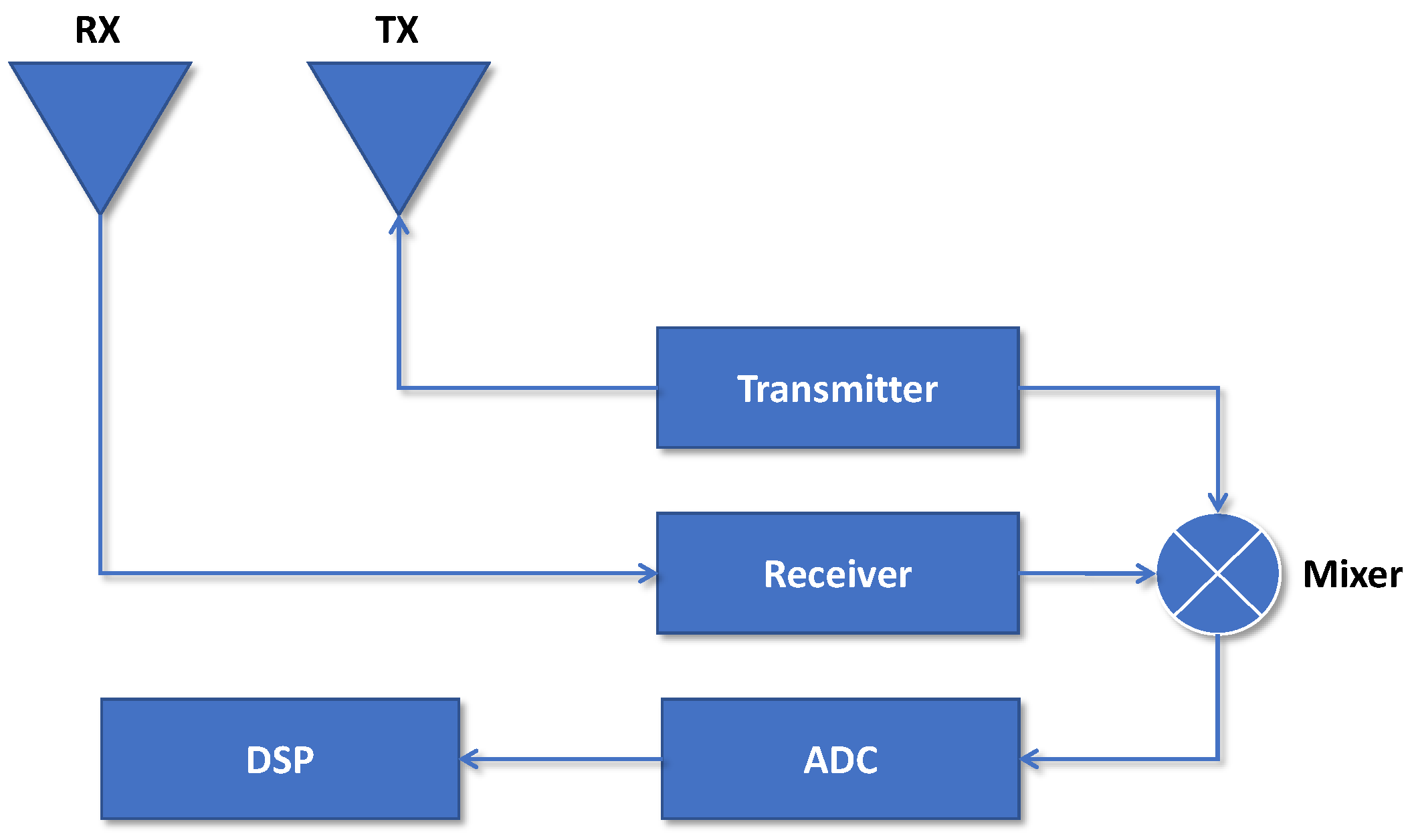

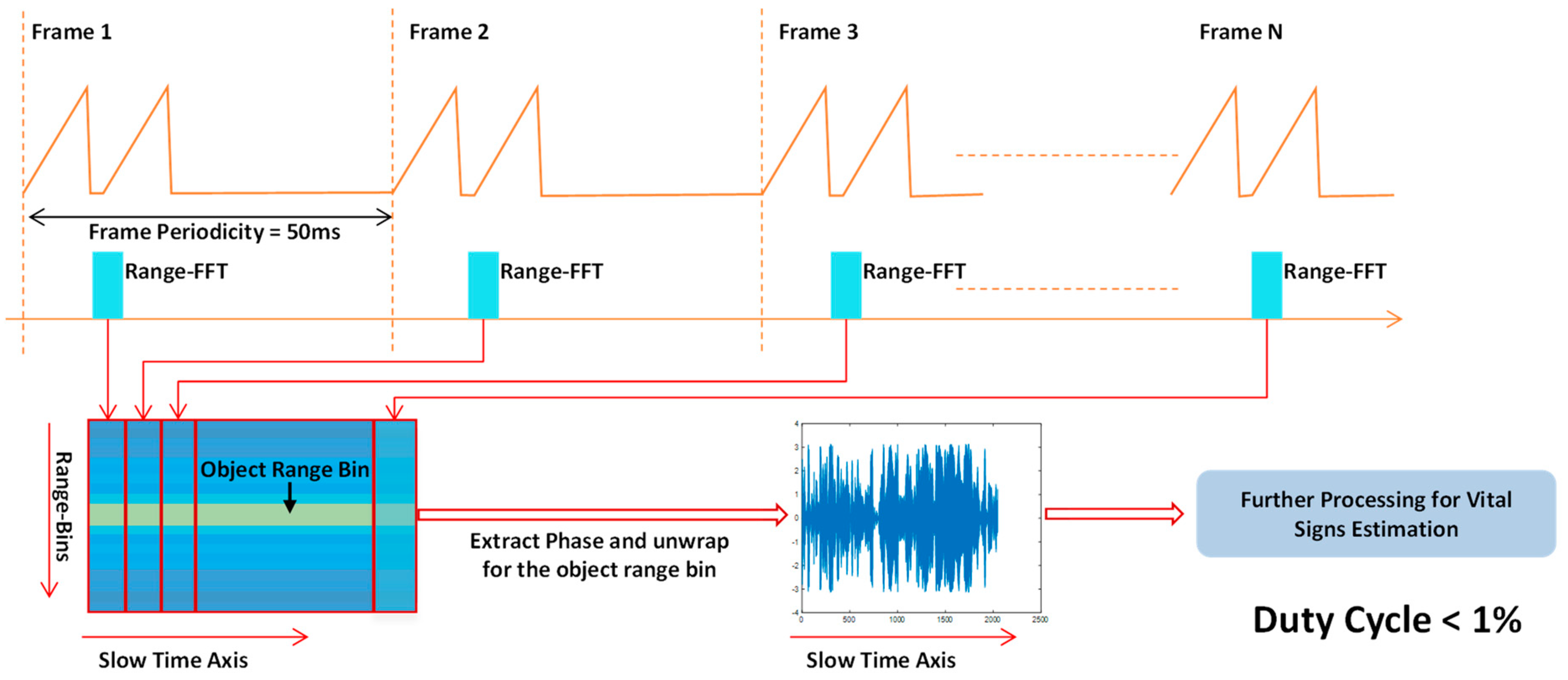

5.4. Millimeter Wave Radar Vital Sign Processing

The radar evaluation board utilized in this experiment was the IWR1642BOOST produced by TI. The start frequency of the transmit signal was set to 77 GHz, the frequency slope was 70.006 MHz/µs, the number of samples per chirp was 200, the sampling rate was 4000 ksps, the frame periodicity was 50 ms, and each frame contained 1024 chirps. The antenna configuration was one transmitter and four receivers. In the experiment, the IWR1642 [21] and DCA1000 [22] were used to collect vital-sign data in streaming data mode.

- (1) Determine the position of the human body.

Targets 1 and 2 were detected by the binocular camera at distances of 1.67 and 2.74 m, respectively, as shown in Figure 16.

- (2) Radar signal processing.

A range-dimension FFT was applied to the raw data received by the radar, and multiple targets appeared in the resulting range spectrum, as shown in Figure 17.

Because the distance resolution of the radar was 0.049 m, the range spectrum was searched within ±0.05 m of the camera-measured distances of 1.67 and 2.74 m. In each search window, the point of maximum energy was taken as the target to be measured, and the distances were finally determined to be 1.67 and 2.70 m. The spectral peak was taken as the target to be measured and the range gate was locked, as shown in Figure 18.
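The camera-guided range gate search can be sketched as a windowed peak search over the range spectrum. The sketch assumes the range-FFT magnitudes and the distance of each range bin are already available as lists; the function name, the synthetic spectrum, and the uniform 0.049 m bin spacing used in the example are assumptions.

```python
def lock_range_gate(bin_ranges_m, magnitudes, camera_range_m, window_m=0.05):
    """Index of the strongest range bin within +/- window_m of the camera distance."""
    candidates = [i for i, r in enumerate(bin_ranges_m)
                  if abs(r - camera_range_m) <= window_m]
    if not candidates:
        raise ValueError("no range bin falls inside the search window")
    return max(candidates, key=lambda i: magnitudes[i])

# Synthetic range spectrum with 0.049 m bins
bin_ranges = [i * 0.049 for i in range(80)]
spectrum = [1.0] * 80
spectrum[10] = 100.0  # strong clutter far from the target, must be ignored
spectrum[55] = 9.0    # strongest bin inside the window around 2.74 m
spectrum[56] = 5.0

gate = lock_range_gate(bin_ranges, spectrum, camera_range_m=2.74)
gate_range_m = bin_ranges[gate]
```

Restricting the search to the window around the camera distance keeps strong reflectors elsewhere in the scene from being mistaken for the person being measured.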

The phase information of targets 1 and 2 was extracted, phase unwrapping was performed, and the phase differences were then computed to obtain the relationship between the phase and the number of sampling points, as shown in Figure 19.
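Phase unwrapping and differencing can be sketched in a few lines. The sketch assumes one wrapped phase sample per frame taken from the locked range bin, and it uses the standard FMCW relation between phase change and displacement (phase = 4π × displacement / wavelength) with a wavelength derived from the 77 GHz start frequency; the function names are illustrative.

```python
import math

def unwrap(phases):
    """Remove 2*pi jumps from a sequence of wrapped phases (radians)."""
    if not phases:
        return []
    out = [phases[0]]
    for p in phases[1:]:
        delta = p - out[-1]
        # Map the step into [-pi, pi) so that jumps of 2*pi are removed
        delta = (delta + math.pi) % (2 * math.pi) - math.pi
        out.append(out[-1] + delta)
    return out

def phase_difference(unwrapped):
    """Difference between consecutive unwrapped phase samples."""
    return [b - a for a, b in zip(unwrapped, unwrapped[1:])]

def displacement_m(phase_rad, wavelength_m=3e8 / 77e9):
    """Radial displacement corresponding to a phase change."""
    return wavelength_m * phase_rad / (4 * math.pi)

# Synthetic check: a steadily growing phase that wraps several times
true_phase = [0.5 * i for i in range(21)]
wrapped = [(p + math.pi) % (2 * math.pi) - math.pi for p in true_phase]
recovered = unwrap(wrapped)
steps = phase_difference(recovered)
```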

The results of medical research regarding the breathing and heartbeat displacement and frequency parameters of ordinary adults are shown in Table 10.

Breathing-rate (BR, 0.1~0.5 Hz) and heart-rate (HR, 0.8~2.0 Hz) band-pass filters were established. The respiratory signal was separated first, as shown in Figure 20, and its spectrum was then estimated, as shown in Figure 21.

From the spectrum, the respiratory rates of targets 1 and 2 were found to be 22 and 26 breaths per minute, respectively.

Then, the HR filter was used to separate the heartbeat signal, and a spectrum estimation was performed, as shown in Figure 22 and Figure 23.

The heartbeat frequencies of targets 1 and 2 were 81 and 94 beats per minute, respectively.
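The rate estimation can be illustrated with a simplified spectral peak search. Instead of a time-domain band-pass filter followed by spectrum estimation, the sketch restricts a discrete Fourier transform to the BR or HR band and picks the strongest frequency, which serves the same purpose for a clean signal. It assumes one phase sample per 50 ms frame (a 20 Hz sampling rate) and uses a synthetic test signal; neither is taken from the measured data.

```python
import cmath
import math

def dominant_rate_per_min(signal, fs_hz, f_lo_hz, f_hi_hz):
    """Strongest spectral component inside [f_lo_hz, f_hi_hz], in cycles per minute."""
    n = len(signal)
    mean = sum(signal) / n
    best_f, best_mag = None, -1.0
    for k in range(1, n // 2 + 1):
        f = k * fs_hz / n
        if not (f_lo_hz <= f <= f_hi_hz):
            continue
        # DFT coefficient of the mean-removed signal at bin k
        coeff = sum((x - mean) * cmath.exp(-2j * math.pi * k * i / n)
                    for i, x in enumerate(signal))
        if abs(coeff) > best_mag:
            best_f, best_mag = f, abs(coeff)
    if best_f is None:
        raise ValueError("no frequency bin falls inside the band")
    return best_f * 60.0

# Synthetic 30 s phase signal: 0.3 Hz breathing plus a weaker 1.4 Hz heartbeat
FS_HZ = 20.0
t = [i / FS_HZ for i in range(600)]
phase = [math.sin(2 * math.pi * 0.3 * ti) + 0.2 * math.sin(2 * math.pi * 1.4 * ti)
         for ti in t]
breaths_per_min = dominant_rate_per_min(phase, FS_HZ, 0.1, 0.5)
beats_per_min = dominant_rate_per_min(phase, FS_HZ, 0.8, 2.0)
```

With a 30 s window the frequency resolution is 1/30 Hz, that is, 2 cycles per minute, which bounds how finely the rates can be resolved without interpolation or a longer window.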

The positions of targets 1 and 2 were then changed, the corresponding vital signals continued to be detected, and vital-signal models were established for targets 1 and 2, as shown in Figure 24 and Figure 25.

The life signals of the two targets could still be separated after the position changed. The farther the distance was, the smaller the detected phase change was, and the smaller the calculated amplitude was.

- (3) Performance comparison of respiratory rate and heart rate measurements.

To verify the accuracy and effectiveness of the method, the radar sensor and the binocular camera were used to collect 10 groups of 30-s heartbeat and breathing data in a two-person, general indoor environment, and the proposed method was used to calculate the breathing and heartbeat frequencies. Additionally, we compared the data with the breathing frequency collected by a breathing sensor and the heartbeat frequency collected by a smart bracelet. The results are shown in Table 11.

We used the Pearson correlation coefficient (PCC) to compare the data collected with our method and with the contact equipment [23]. The PCC value is reported here as a percentage from 0 to 100%, where 0 indicates that the two are completely uncorrelated and 100% indicates that the two are completely correlated. The results are shown in Table 10.
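The PCC between two series of rate measurements can be computed as in the sketch below. The sample values are invented for illustration and are not the measurements of this experiment. Note that the coefficient itself ranges from −1 to 1; multiplying a non-negative value by 100 gives the percentage form used in the text.

```python
import math

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    std_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    std_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (std_x * std_y)

# Hypothetical heart rates (beats per minute): radar estimate vs. wearable reference
radar = [81, 84, 79, 90, 86]
reference = [80, 85, 78, 92, 85]
pcc_percent = 100.0 * pearson(radar, reference)
```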

The vital signal data calculated by using the proposed method were very close to the data detected by the wearable sensors, and the correlation was high. The matching rate (PCC value) of the breathing rate obtained with the sensor and by using the proposed method reached 84.9%, and the matching rate (PCC value) of the heartbeat frequency obtained by using the proposed method and the smart bracelet reached 93.6%. The results showed that the noncontact life signal detection method using an FMCW millimeter wave radar and binocular camera can be used to complete noncontact detection tasks in an indoor environment and to match multiple targets with life signals within the detection range.