A Novel Approach for Improving Guitarists’ Performance Using Motion Capture and Note Frequency Recognition

Abstract

1. Introduction

2. Related Work

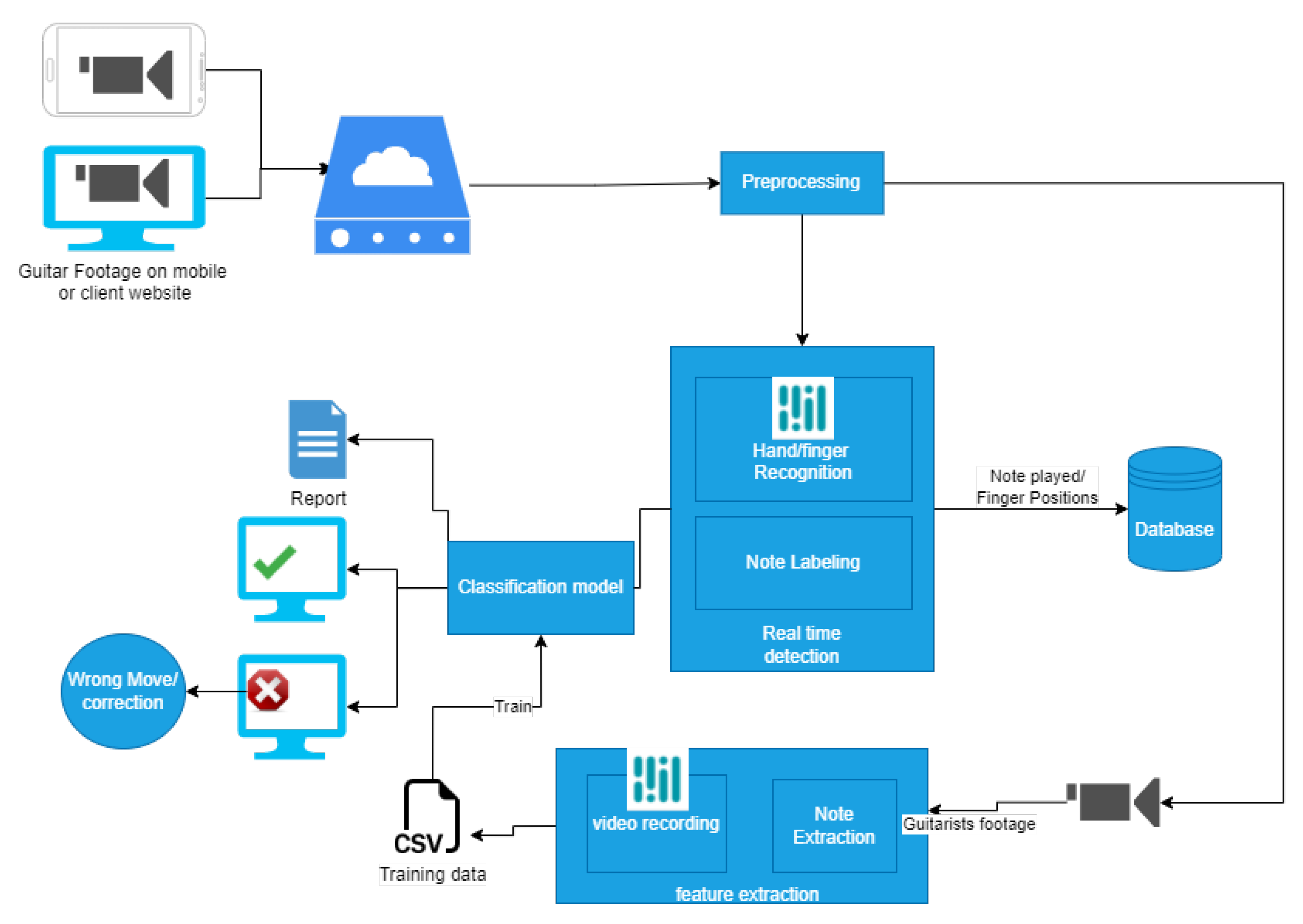

3. Proposed Approach

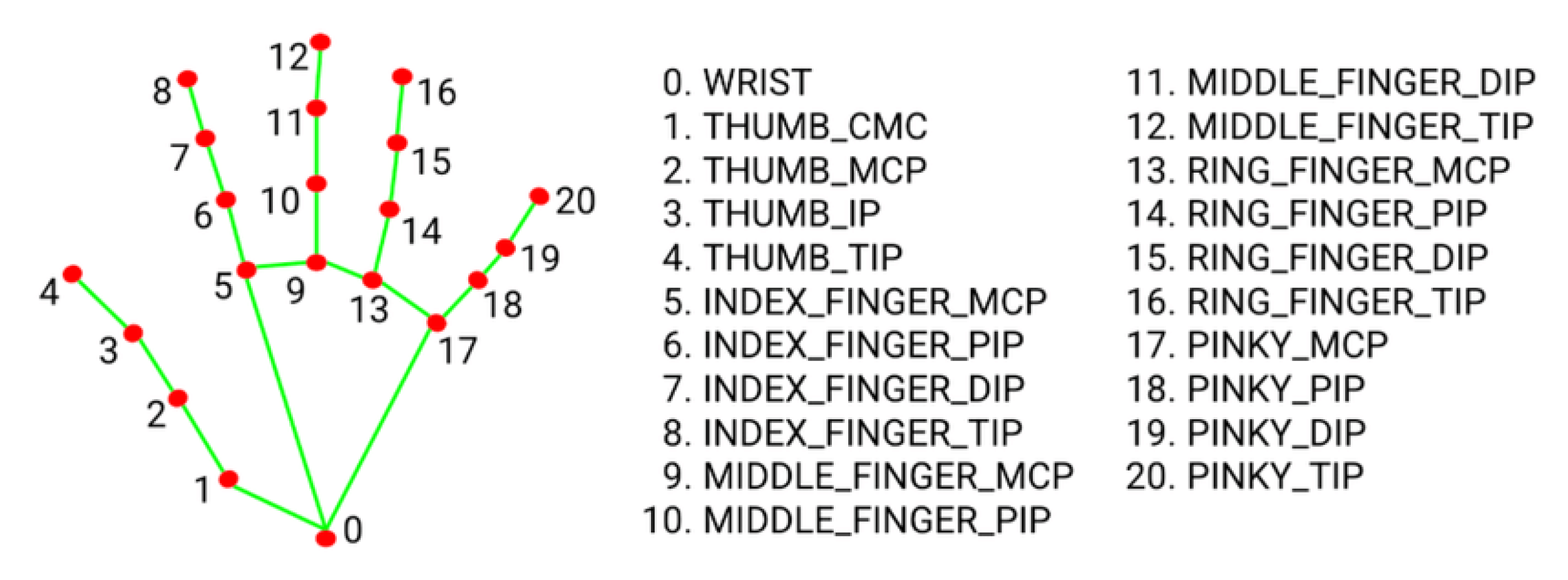

3.1. Real-Time Detection

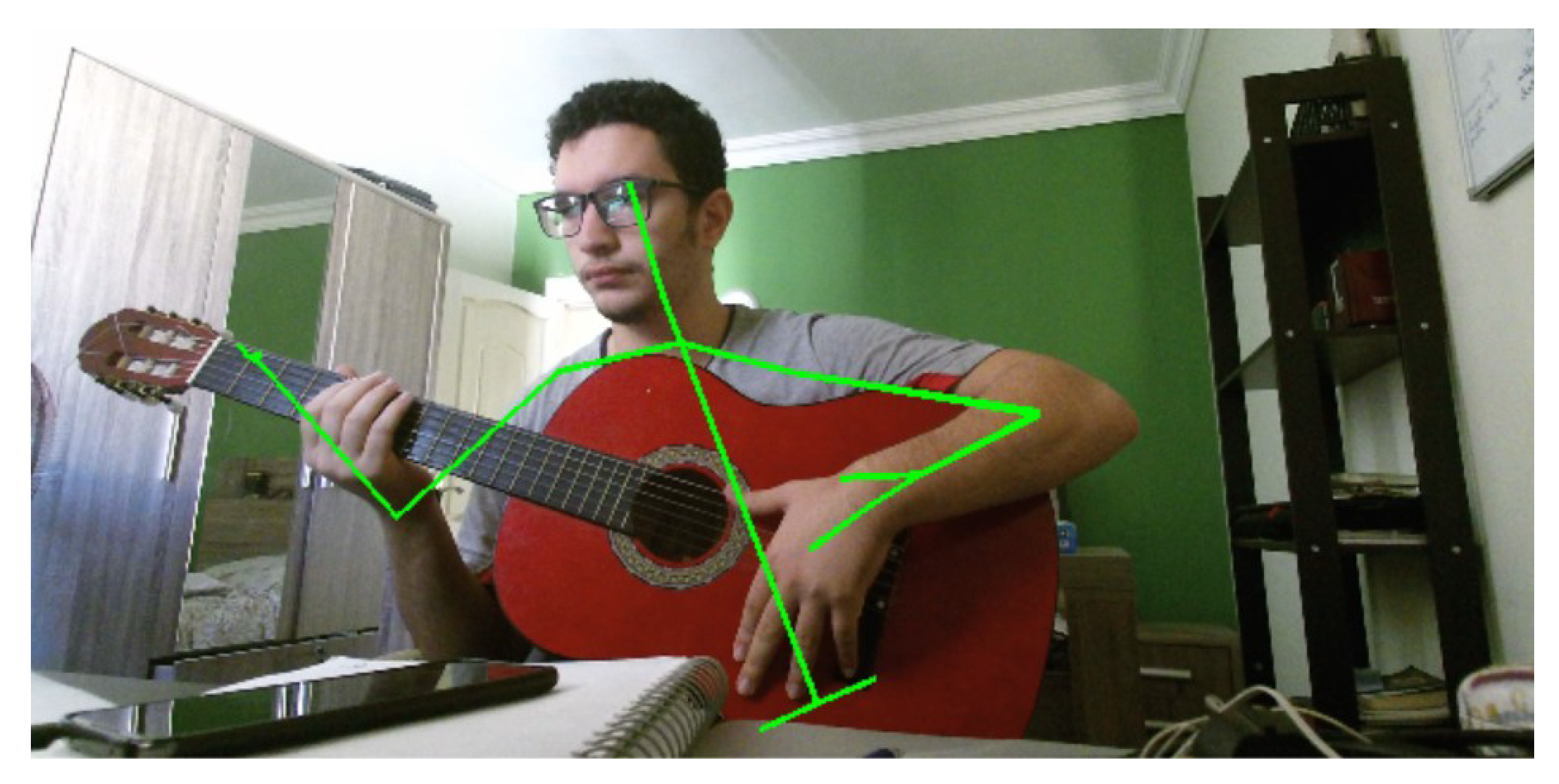

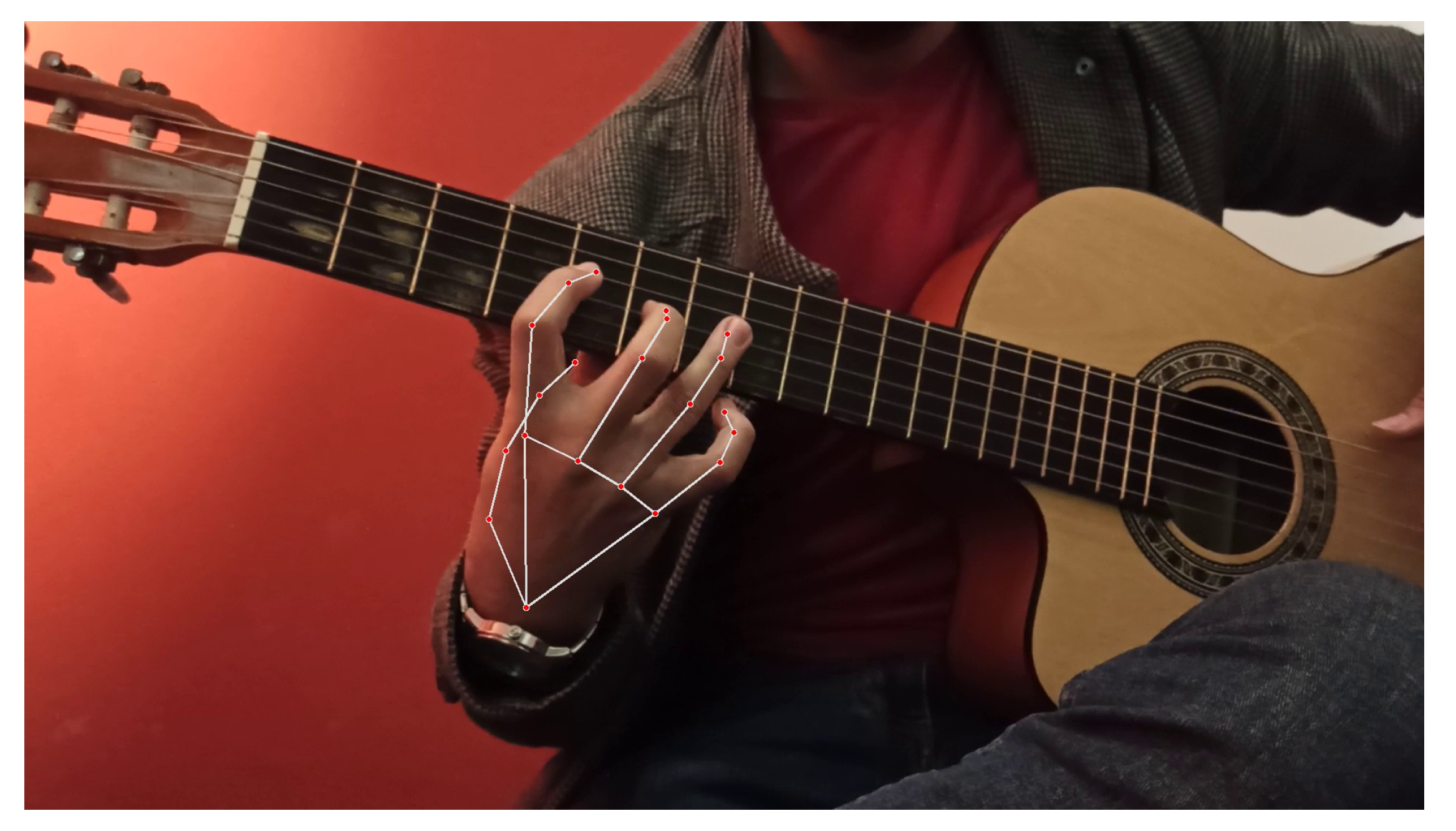

3.1.1. Finger Position Detection

3.1.2. Note Labeling

- X(k) is the transformed signal, which is a complex number (frequency domain) at index k;

- x(n) is the discrete signal at sample index n;

- N is the total number of samples in the input signal;

- $e^{-i2\pi kn/N}$ is the complex exponential, which by Euler's formula expands into the cosine and sine components of the signal at frequency index k, where i denotes the imaginary unit.

- R is the number of magnitude spectra;

- x(f) is the magnitude spectrum of a signal.
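The symbols above describe the discrete Fourier transform (DFT) and the harmonic product spectrum (HPS). The following is a minimal sketch, not the authors' implementation. It assumes the standard forms $X(k) = \sum_{n=0}^{N-1} x(n)\, e^{-i2\pi kn/N}$ and $\mathrm{HPS}(f) = \prod_{r=1}^{R} |X(rf)|$, computes the spectrum with NumPy's FFT, and applies a Hann window. The function names, the default R = 3, and the synthetic test tone are illustrative assumptions.

```python
import numpy as np

def magnitude_spectrum(signal, sample_rate):
    """Return (bin frequencies in Hz, |X(k)|) for a real-valued signal."""
    windowed = signal * np.hanning(len(signal))  # Hann window limits leakage
    magnitudes = np.abs(np.fft.rfft(windowed))
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / sample_rate)
    return freqs, magnitudes

def dft_peak_frequency(signal, sample_rate):
    """Frequency of the strongest DFT bin; this may be a harmonic."""
    freqs, mags = magnitude_spectrum(signal, sample_rate)
    return freqs[np.argmax(mags)]

def hps_fundamental(signal, sample_rate, num_spectra=3):
    """Estimate the fundamental with an HPS over R = num_spectra spectra."""
    freqs, mags = magnitude_spectrum(signal, sample_rate)
    limit = len(mags) // num_spectra       # bins where every harmonic exists
    product = mags[:limit].copy()
    for r in range(2, num_spectra + 1):
        product *= mags[::r][:limit]       # spectrum downsampled by r
    product[0] = 0.0                       # ignore the DC bin
    return freqs[np.argmax(product)]

# Synthetic tone (assumption): 110 Hz fundamental, stronger 2nd harmonic.
rate = 8000
t = np.arange(rate) / rate
tone = (0.3 * np.sin(2 * np.pi * 110 * t)
        + 1.0 * np.sin(2 * np.pi * 220 * t)
        + 0.5 * np.sin(2 * np.pi * 330 * t))

print(dft_peak_frequency(tone, rate))
print(hps_fundamental(tone, rate))
```

For this tone the DFT peak lands on the 220 Hz harmonic, while the HPS estimate returns the 110 Hz fundamental. This is the same octave effect visible in the C3 row of the DFT/HPS comparison table, where the DFT reports 262 Hz and HPS reports 131 Hz.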

3.2. Data Extraction

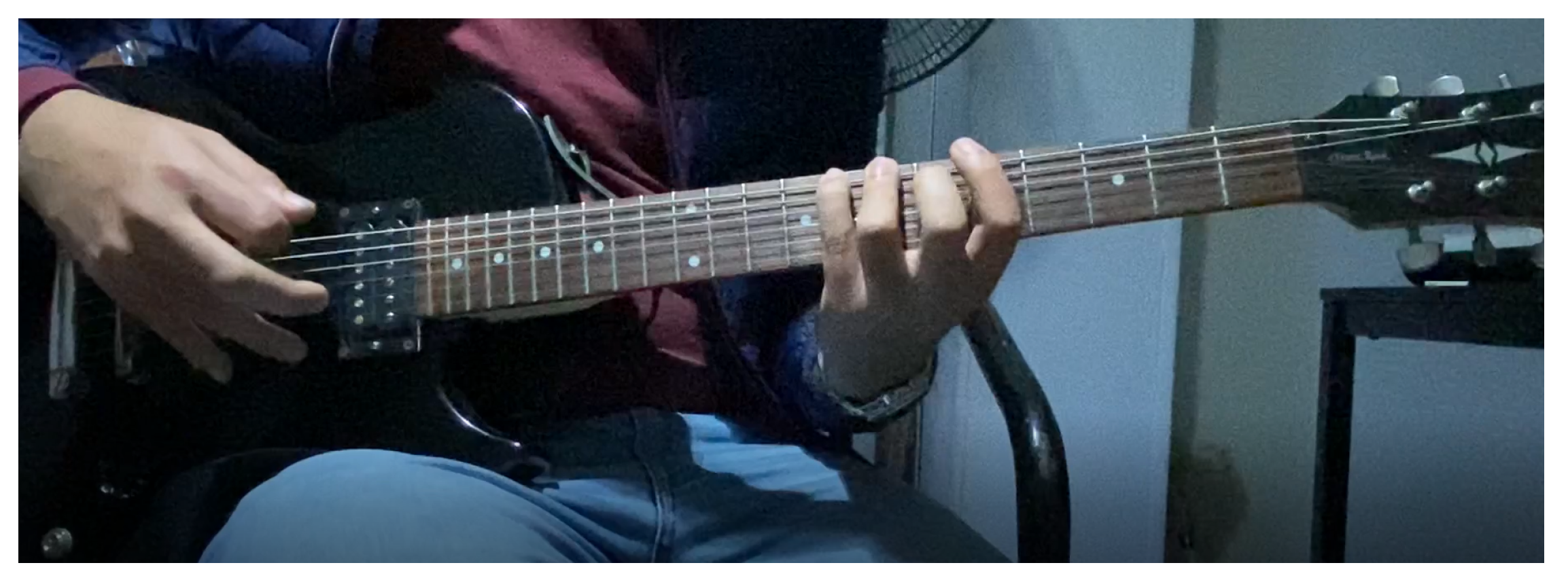

3.2.1. Video Recording

3.2.2. Note Extraction

3.3. Classification Model

4. Experiments and Results

4.1. Experimental Setting and Extracted Data

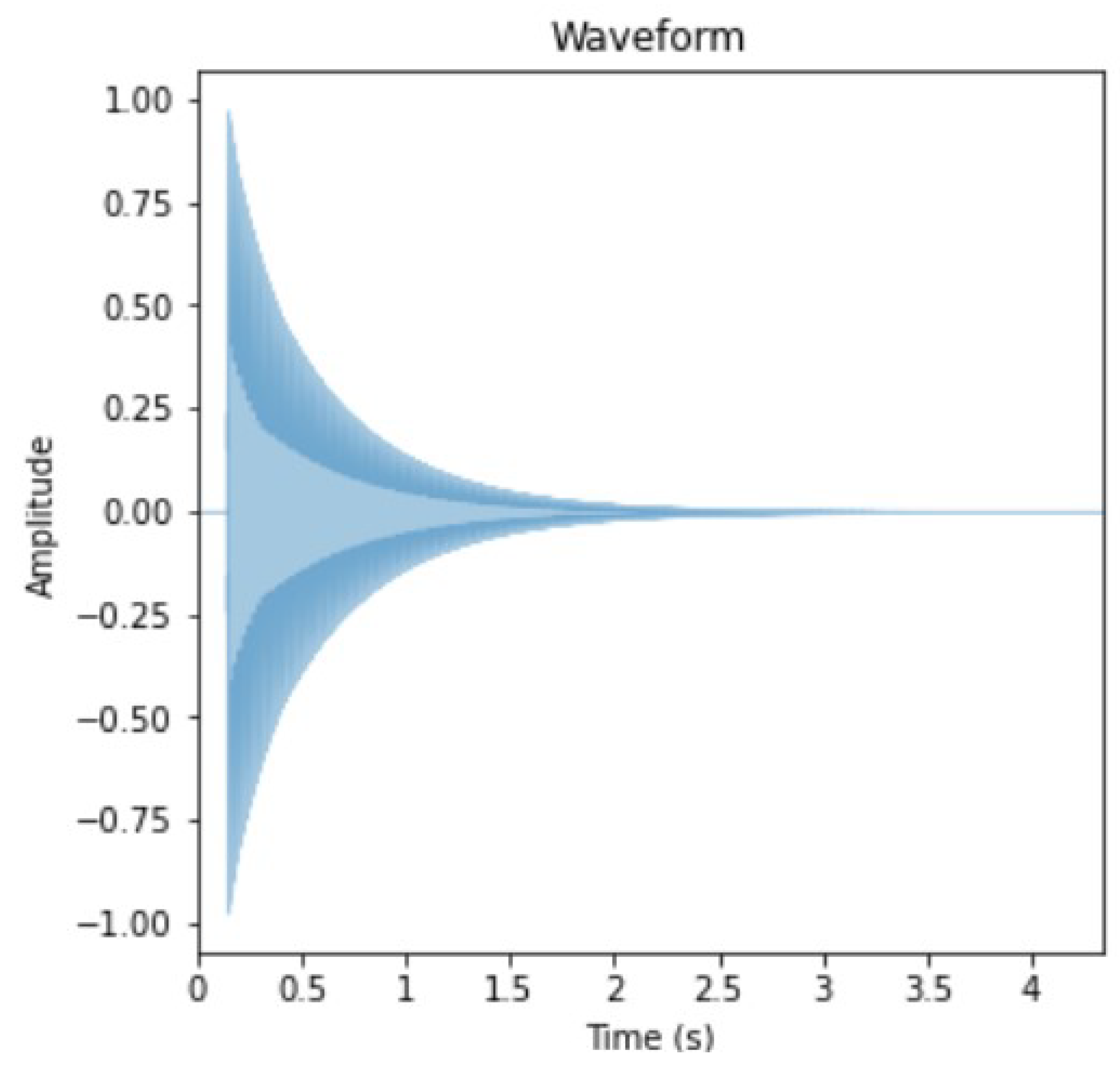

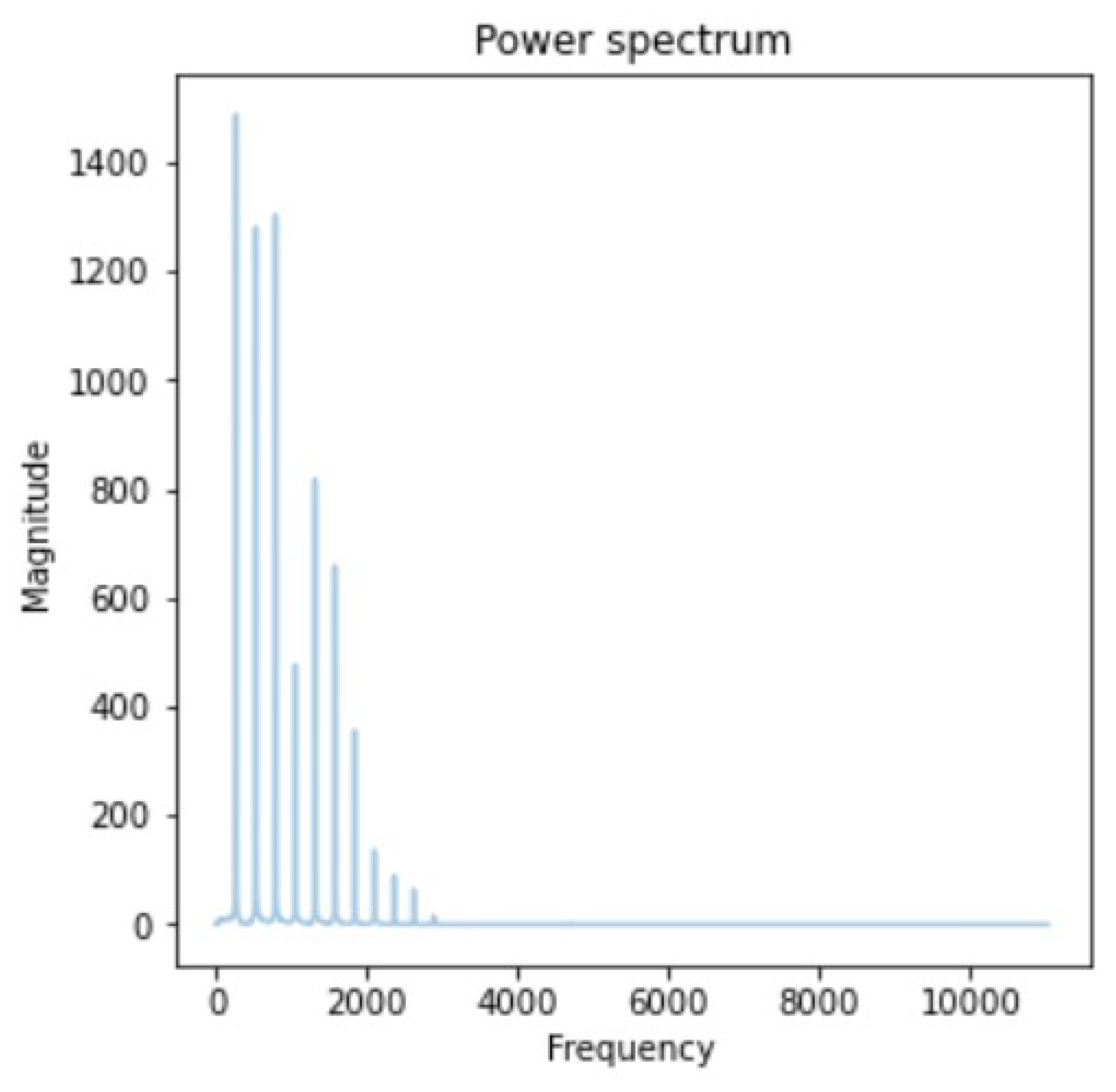

4.2. Note Extraction Using Signal Processing

Signal Processing Results

4.3. Motion Capture Experiment

4.4. Hand Position Classification Results

- Accuracy is the proportion of correct classifications out of the total number of instances in the dataset;

- Precision is the proportion of predicted positives that are truly positive;

- Recall is the proportion of actual positives that are correctly classified;

- TP denotes true positives;

- TN denotes true negatives;

- FP denotes false positives;

- FN denotes false negatives.
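The three metrics defined above can be computed directly from the four confusion counts. The sketch below assumes the standard binary-classification definitions: accuracy = (TP + TN) / (TP + TN + FP + FN), precision = TP / (TP + FP), and recall = TP / (TP + FN). The function name and the example counts are hypothetical and are not taken from the experiments.

```python
def classification_metrics(tp, tn, fp, fn):
    """Compute accuracy, precision, and recall from confusion counts."""
    total = tp + tn + fp + fn
    predicted_positive = tp + fp
    actual_positive = tp + fn
    return {
        # Share of all instances that were classified correctly.
        "accuracy": (tp + tn) / total if total else 0.0,
        # Share of positive predictions that were correct.
        "precision": tp / predicted_positive if predicted_positive else 0.0,
        # Share of actual positives that were found.
        "recall": tp / actual_positive if actual_positive else 0.0,
    }

# Hypothetical counts, for illustration only.
metrics = classification_metrics(tp=45, tn=40, fp=5, fn=10)
for name, value in metrics.items():
    print(f"{name}: {value:.2f}")
```

Returning 0.0 when a denominator is zero is a design choice in this sketch; some libraries raise a warning or let the caller choose the fallback value instead.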

4.5. Finger Classification Results

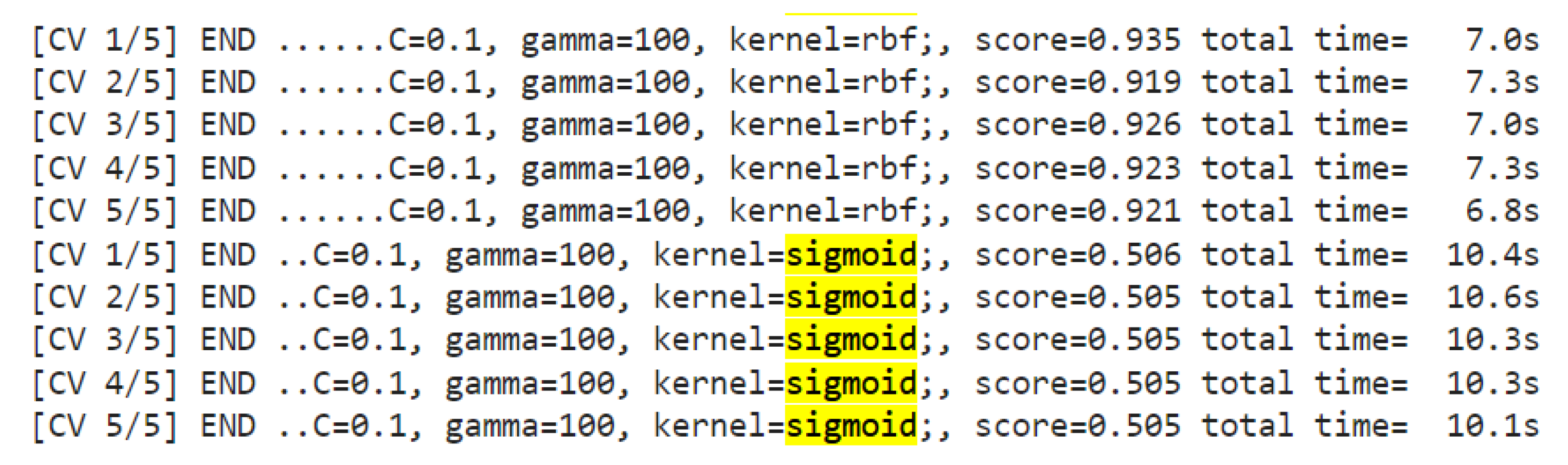

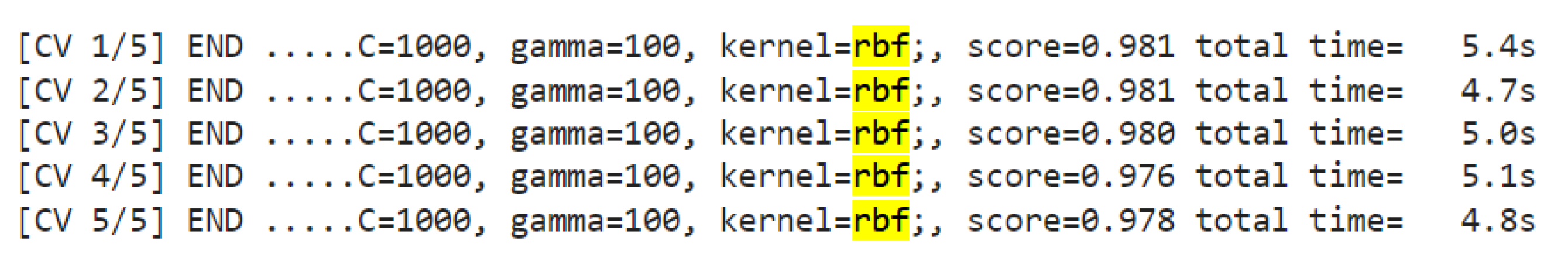

SVM Tuning

5. Comparative Analysis

6. Conclusions

7. Limitations

8. Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Property | Value |
|---|---|
| Size | 2.5 GB |
| Number of rows or frames | 25,544 rows |
| Number of features | 68 |
| Duration | From 7 to 30 s |
| Number of videos | 67 |
| Number of classes | 2 |
| Number of incorrect classes | 33 |
| Number of correct classes | 32 |
| Guitar type | Cort CR50 electric guitar |
| Camera for recording | iPhone 6 |

| Property | Value |
|---|---|
| Size | 1.5 GB |
| Number of rows or frames | 12,607 rows |
| Number of features | 68 |
| Duration | From 2 to 30 s |
| Number of videos | 40 |
| Number of classes | 2 |
| Number of incorrect classes | 20 |
| Number of correct classes | 20 |
| Guitar type | Spanish classical guitar |
| Camera for recording | Xiaomi Note 8 Pro |

| Note | DFT Detected Frequency (Hz) | DFT Closest Frequency (Hz) | HPS Detected Frequency (Hz) | HPS Closest Frequency (Hz) | Standard Frequency (Hz) |
|---|---|---|---|---|---|
| C3 | 262 | 261 | 131 | 130 | 130 |
| C4 | 262 | 261 | 262 | 261 | 261 |
| C5 | 525 | 523 | 525 | 523 | 523 |
| C♯3 | 282 | 277 | 140 | 138 | 138 |
| C♯4 | 278 | 277 | 278 | 277 | 277 |
| C♯5 | 557 | 554 | 556 | 554 | 554 |
| D3 | 147 | 146 | 147 | 146 | 146 |
| D4 | 294 | 293 | 294 | 293 | 293 |
| D5 | 590 | 587 | 590 | 587 | 587 |
| D♯3 | 156 | 155 | 156 | 155 | 155 |
| D♯4 | 312 | 311 | 311 | 311 | 311 |
| D♯5 | 625 | 622 | 626 | 622 | 622 |
| E2 | 165 | 164 | 82 | 82 | 82 |
| E3 | 330 | 329 | 165 | 164 | 164 |
| E4 | 330 | 329 | 330 | 329 | 329 |
| E5 | 1323 | 1318 | 661 | 659 | 659 |
| F2 | 176 | 174 | 88 | 87 | 87 |
| F3 | 175 | 174 | 175 | 174 | 174 |
| F4 | 350 | 349 | 349 | 349 | 349 |
| F5 | 2106 | 2093 | 350 | 349 | 698 |
| F♯2 | 186 | 185 | 93 | 92 | 92 |
| F♯3 | 185 | 185 | 185 | 185 | 185 |
| F♯4 | 471 | 369 | 371 | 369 | 369 |
| F♯5 | 743 | 739 | 743 | 739 | 739 |
| G2 | 197 | 196 | 98 | 98 | 98 |
| G3 | 394 | 392 | 196 | 196 | 196 |
| G4 | 393 | 392 | 394 | 392 | 392 |
| G5 | 572 | 587 | 788 | 784 | 784 |
| G♯2 | 208 | 207 | 104 | 104 | 104 |
| G♯3 | 393 | 392 | 207 | 208 | 208 |
| G♯4 | 417 | 415 | 415 | 415 | 415 |
| G♯5 | 835 | 830 | 834 | 830 | 830 |
| A2 | 111 | 110 | 111 | 110 | 110 |
| A3 | 220 | 220 | 220 | 220 | 220 |
| A4 | 441 | 440 | 443 | 440 | 440 |
| A5 | 1766 | 1760 | 885 | 880 | 880 |
| A♯2 | 117 | 116 | 117 | 116 | 116 |
| A♯3 | 235 | 255 | 234 | 233 | 233 |
| A♯4 | 469 | 466 | 470 | 466 | 466 |
| A♯5 | 885 | 880 | 886 | 880 | 932 |
| B2 | 125 | 123 | 124 | 123 | 123 |
| B3 | 247 | 246 | 248 | 246 | 246 |
| B4 | 496 | 493 | 497 | 493 | 493 |
| B5 | 995 | 987 | 987 | 987 | 987 |

| Metric | SVM | kNN | Naive Bayes | Random Forest | XG Boosting |
|---|---|---|---|---|---|
| Accuracy | 0.63 | 0.76 | 0.68 | 0.99 | 0.93 |
| Precision | 0.67 | 0.84 | 0.66 | 0.99 | 0.97 |
| Recall | 0.63 | 0.86 | 0.66 | 0.99 | 0.97 |

| Metric | Finger | SVM | kNN | Naive Bayes | Random Forest | XG Boosting |
|---|---|---|---|---|---|---|
| Accuracy | Index | 71% | 97% | 70% | 98% | 90% |
| Accuracy | Middle | 81% | 98% | 68% | 98% | 90% |
| Accuracy | Ring | 82% | 98% | 66% | 97% | 92% |
| Accuracy | Pinky | 81% | 98% | 70% | 97% | 89% |
| Precision | Index | 65% | 97% | 63% | 96% | 87% |
| Precision | Middle | 83% | 98% | 64% | 98% | 94% |
| Precision | Ring | 72% | 98% | 70% | 98% | 91% |
| Precision | Pinky | 83% | 97% | 79% | 97% | 88% |
| Recall | Index | 21% | 97% | 74% | 95% | 86% |
| Recall | Middle | 28% | 98% | 81% | 97% | 91% |
| Recall | Ring | 19% | 98% | 79% | 97% | 92% |
| Recall | Pinky | 70% | 97% | 86% | 96% | 88% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Elashmawi, W.H.; Emad, J.; Serag, A.; Khaled, K.; Yehia, A.; Mohamed, K.; Sobeah, H.; Ali, A. A Novel Approach for Improving Guitarists’ Performance Using Motion Capture and Note Frequency Recognition. Appl. Sci. 2023, 13, 6302. https://doi.org/10.3390/app13106302
