SM-TCNNET: A High-Performance Method for Detecting Human Activity Using WiFi Signals

Abstract

1. Introduction

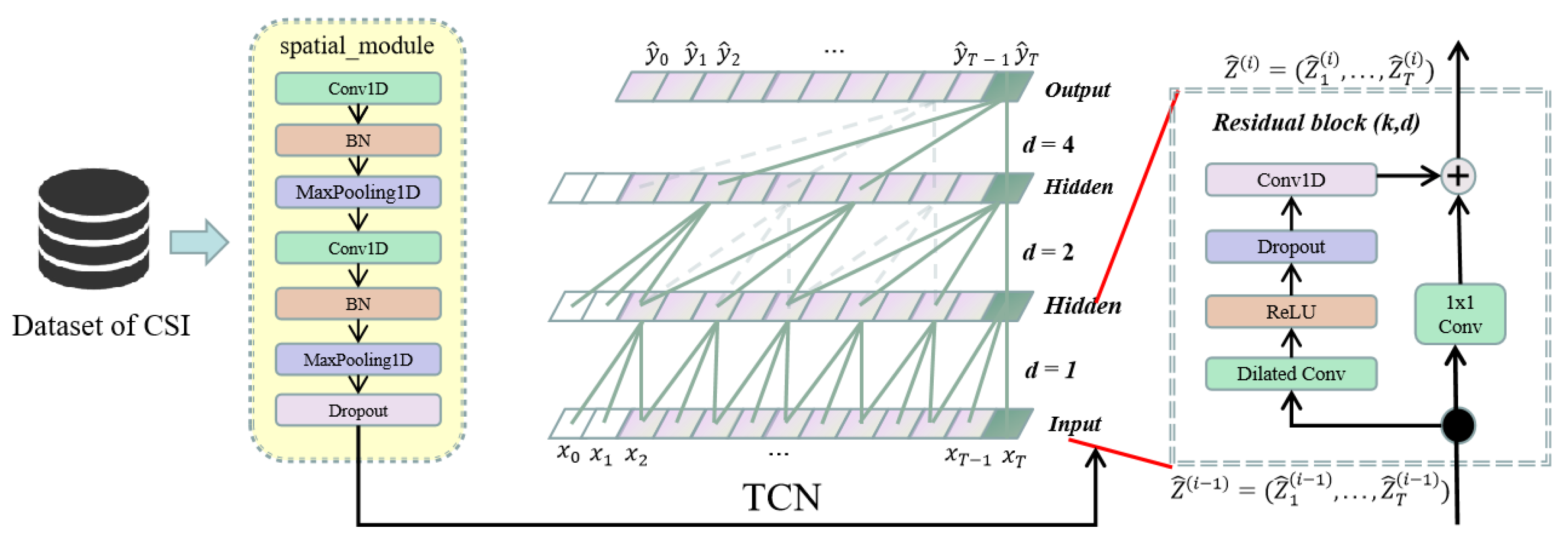

- A deep-learning-based model, SM-TCNNET, is proposed. Its SM module mainly extracts spatial features from the channel state information (CSI) signals, and its temporal convolutional network (TCN) captures their temporal information. Together, they improve the expressiveness of the model and its ability to identify human activities accurately.

- The model requires only light pre-processing of the CSI data, which makes it easy to apply to other activity recognition datasets.

- The accuracy of SM-TCNNET is verified at various distances between the transmitter (TX) and the receiver (RX) and, for a fixed TX-RX distance, at various distances between the human body and the line connecting TX and RX. These experiments assess the reliability and validity of the CSI data and investigate how the signal characteristics change with distance.

- The performance of SM-TCNNET is evaluated on both a self-collected dataset and a public dataset (StanWiFi) under line-of-sight (LOS) and non-line-of-sight (NLOS) scenarios. On the self-collected dataset, human activity recognition accuracy reaches 99.93% in the LOS case and 97.56% in the NLOS case; on the public dataset, the model detects human activity with 99.8% accuracy.
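
The contributions above assign temporal modelling to a TCN. For readers unfamiliar with that building block, the NumPy sketch below shows the dilated causal convolution that generic TCNs stack, together with the usual receptive-field formula. It is not the SM-TCNNET implementation: the function names, kernel values and dilation schedule are illustrative assumptions, and a practical TCN layer also has multiple channels, nonlinearities and residual connections.

```python
import numpy as np


def causal_dilated_conv1d(x: np.ndarray, w: np.ndarray, dilation: int) -> np.ndarray:
    """Single-channel dilated causal convolution (illustrative sketch).

    y[t] = sum_i w[i] * x[t - i * dilation], with x treated as zero for t < 0,
    so every output depends only on the current and past inputs.
    """
    k = len(w)
    pad = (k - 1) * dilation
    xp = np.concatenate([np.zeros(pad), x])  # left padding only keeps the conv causal
    y = np.zeros(len(x))
    for t in range(len(x)):
        for i in range(k):
            # xp[t + pad] corresponds to x[t]; step back i * dilation samples
            y[t] += w[i] * xp[t + pad - i * dilation]
    return y


def receptive_field(kernel_size: int, dilations: list[int]) -> int:
    """Receptive field of stacked causal convs, one conv per dilation: 1 + sum((k - 1) * d)."""
    return 1 + sum((kernel_size - 1) * d for d in dilations)


# Usage: an impulse passed through three layers (k=2, d=1, 2, 4) spreads over
# exactly receptive_field(2, [1, 2, 4]) == 8 time steps.
impulse = np.zeros(10)
impulse[0] = 1.0
h = impulse
for d in (1, 2, 4):
    h = causal_dilated_conv1d(h, np.array([1.0, 1.0]), d)
print(h)  # [1. 1. 1. 1. 1. 1. 1. 1. 0. 0.]
```

Doubling the dilation at each layer lets the receptive field grow exponentially with depth while each kernel stays small, which is the usual motivation for applying TCNs to long time series such as CSI streams.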

2. Dataset Description

3. System Design

3.1. Data Preprocessing

3.2. SM-TCNNET Model

3.2.1. Model Introduction

3.2.2. Training and Testing

4. Validation and Evaluation

4.1. Experimental Results and Analysis Based on Self-Collected Data Sets

4.1.1. Activity Recognition Accuracy at Different TX-RX Distances: Results and Analysis

4.1.2. Recognition Results and Analysis for Different Human Positions When the Distance between TX and RX Is Fixed
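
The setting of this subsection, a fixed TX-RX distance with the person placed at different positions, can be described with plane geometry. As a minimal sketch, assuming 2-D floor coordinates in metres and that the distance is measured perpendicular to the straight line through the two antennas (the paper's exact measurement convention is not given here), the person-to-link distance can be computed as follows. The `distance_to_link` name and the example layout are hypothetical.

```python
import math

Point = tuple[float, float]  # (x, y) floor coordinates; metres assumed


def distance_to_link(person: Point, tx: Point, rx: Point) -> float:
    """Perpendicular distance from a person to the straight line through TX and RX."""
    (px, py), (ax, ay), (bx, by) = person, tx, rx
    dx, dy = bx - ax, by - ay
    link_length = math.hypot(dx, dy)
    if link_length == 0:
        raise ValueError("TX and RX must be at different positions")
    # |cross(RX - TX, person - TX)| is twice the triangle area; dividing by the
    # base length |RX - TX| gives the triangle's height, i.e. the distance.
    return abs(dx * (py - ay) - dy * (px - ax)) / link_length


# Hypothetical layout: TX and RX 4 m apart, person 1.5 m off the link.
print(distance_to_link((2.0, 1.5), (0.0, 0.0), (4.0, 0.0)))  # 1.5
```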

4.1.3. Results and Analysis of the LOS and NLOS Cases When the Distance between TX and RX Is Fixed

4.2. Experimental Results Based on StanWiFi Dataset

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Aloulou, H.; Abdulrazak, B.; de Marassé-Enouf, A.; Mokhtari, M. Participative Urban Health and Healthy Aging in the Age of AI: 19th International Conference, ICOST 2022, Paris, France, 27–30 June 2022, Proceedings; Springer: Berlin/Heidelberg, Germany, 2022.

2. Islam Md, M.; Nooruddin, S.; Karray, F. Multimodal Human Activity Recognition for Smart Healthcare Applications. In Proceedings of the 2022 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Prague, Czech Republic, 9–12 October 2022; pp. 196–203.

3. Liagkou, V.; Sakka, S.; Stylios, C. Security and Privacy Vulnerabilities in Human Activity Recognition systems. In Proceedings of the 2022 7th South-East Europe Design Automation, Computer Engineering, Computer Networks and Social Media Conference (SEEDA-CECNSM), Ioannina, Greece, 23–25 September 2022; pp. 1–6.

4. Qu, H.; Rahmani, H.; Xu, L.; Williams, B.; Liu, J. Recent Advances of Continual Learning in Computer Vision: An Overview. arXiv 2021, arXiv:2109.11369v2.

5. Uddin, M.H.; Ara, J.M.; Rahman, M.H.; Yang, S.H. A Study of Real-Time Physical Activity Recognition from Motion Sensors via Smartphone Using Deep Neural Network. In Proceedings of the 2021 5th International Conference on Electrical Information and Communication Technology (EICT), Khulna, Bangladesh, 17–19 December 2021; pp. 17–19.

6. Li, X.; He, Y.; Jing, X. A survey of deep learning-based human activity recognition in radar. Remote Sens. 2019, 11, 1068.

7. Qi, W.; Wang, N.; Su, H. DCNN based human activity recognition framework with depth vision guiding. Neurocomputing 2022, 486, 261–271.

8. Janardhanan, J.; Umamaheswari, S. Vision based Human Activity Recognition using Deep Neural Network Framework. Int. J. Adv. Comput. Sci. Appl. 2022, 13, 3117–3128.

9. Aggarwal, K.; Arora, A. An Approach to Control the PC with Hand Gesture Recognition using Computer Vision Technique. In Proceedings of the 2022 9th International Conference on Computing for Sustainable Global Development (INDIACom), New Delhi, India, 23–25 March 2022; pp. 760–764.

10. Mujahid, A.; Aslam, M.; Khan, M.U.G. Multi-Class Confidence Detection Using Deep Learning Approach. Appl. Sci. 2023, 13, 5567.

11. Adama, D.A.; Lotfi, A.; Ranson, R. A Survey of Vision-Based Transfer Learning in Human Activity Recognition. Electronics 2021, 10, 2412.

12. Lee, H. Developing a wearable human activity recognition (WHAR) system for an outdoor jacket. Int. J. Cloth. Sci. Technol. 2023, 35, 177–196.

13. Gao, C.; Chen, Y.; Jiang, X. Bi-STAN: Bilinear spatial-temporal attention network for wearable human activity recognition. Int. J. Mach. Learn. Cybern. 2023, 14, 2545–2561.

14. Sun, B.; Liu, M.; Zheng, R. Attention-based LSTM Network for Wearable Human Activity Recognition. In Proceedings of the 2019 Chinese Control Conference (CCC), Guangzhou, China, 27–30 July 2019; pp. 8677–8682.

15. Rosati, S.; Balestra, G.; Knaflitz, M. Comparison of Different Sets of Features for Human Activity Recognition by Wearable Sensors. Sensors 2018, 18, 4189.

16. Janarthanan, S. Optimized unsupervised deep learning assisted reconstructed coder in the on-nodule wearable sensor for human activity recognition. Measurement 2020, 164, 108050.

17. Yao, Y.; Liu, W.; Zhang, G. Radar-Based Human Activity Recognition Using Hyperdimensional Computing. IEEE Trans. Microw. Theory Tech. 2022, 70, 1605–1619.

18. Cao, Z.; Li, Z.; Guo, X. Towards Cross-Environment Human Activity Recognition Based on Radar Without Source Data. IEEE Trans. Veh. Technol. 2021, 70, 11843–11854.

19. Chen, H.; Ding, C.; Zhang, L. Human Activity Recognition using Temporal 3DCNN based on FMCW Radar. In Proceedings of the 2022 IEEE MTT-S International Microwave Biomedical Conference (IMBioC), Suzhou, China, 16–18 May 2022; pp. 245–247.

20. Radhityo, D.; Suratman, F.; Istiqomah. Human Motion Change Detection Based on FMCW Radar. In Proceedings of the 2022 IEEE Asia Pacific Conference on Wireless and Mobile (APWiMob), Bandung, Indonesia, 9–10 December 2022; pp. 1–6.

21. Janardhana, R.; Chinni, K.M. Performing Object and Activity Recognition Based on Data from a Camera and a Radar Sensor. US Patent US11361554B2, 14 June 2022.

22. Shafiqul, I.M.; Jannat, M.K.; Kim, J.W.; Lee, S.W.; Yang, S.H. HHI-AttentionNet: An Enhanced Human-Human Interaction Recognition Method Based on a Lightweight Deep Learning Model with Attention Network from CSI. Sensors 2022, 22, 6018.

23. Kabir, M.H.; Rahman, M.H.; Shin, W. CSI-IANet: An Inception Attention Network for Human-Human Interaction Recognition Based on CSI Signal. IEEE Access 2021, 9, 166624–166638.

24. Su, J.; Liao, Z.; Sheng, Z.; Liu, A.X.; Singh, D.; Lee, H.N. Human activity recognition using self-powered sensors based on multilayer bi-directional long short-term memory networks. IEEE Sens. J. 2022, 1–9.

25. Li, H.; He, X.; Chen, X.; Fang, Y.; Fang, Q. Wi-motion: A robust human activity recognition using WiFi signals. IEEE Access 2019, 7, 153287–153299.

26. Hoang, M.T.; Yuen, B.; Dong, X.; Lu, T.; Westendorp, R.; Reddy, K. Recurrent neural networks for accurate RSSI indoor localization. IEEE Internet Things J. 2019, 6, 10639–10651.

27. Ren, Y.; Wang, Z.; Wang, Y.; Tan, S.; Chen, Y.; Yang, J. 3D Human Pose Estimation Using WiFi Signals. In Proceedings of the 19th ACM Conference on Embedded Networked Sensor Systems, Coimbra, Portugal, 15–17 November 2021; pp. 363–364.

28. Guo, Z.; Xiao, F.; Sheng, B.; Fei, H.; Yu, S. WiReader: Adaptive air handwriting recognition based on commercial WiFi signal. IEEE Internet Things J. 2020, 7, 10483–10494.

29. Hao, Z.; Kang, Y.; Dang, X. Wi-Exercise: An Indoor Human Movement Detection Method Based on Bidirectional LSTM Attention. Mob. Inf. Syst. 2022, 2022, 1–14.

30. Cheng, K.; Xu, J.; Zhang, L. Human behavior detection and recognition method based on Wi-Fi signals. In Proceedings of the 2022 IEEE 10th Joint International Information Technology and Artificial Intelligence Conference (ITAIC), Chongqing, China, 17–19 June 2022; pp. 1065–1070.

31. Alsaify, B.A.; Almazari, M.M.; Alazrai, R.; Alouneh, S.; Daoud, M.I. A CSI-Based Multi-Environment Human Activity Recognition Framework. Appl. Sci. 2022, 12, 930.

32. Fard Moshiri, P.; Shahbazian, R.; Nabati, M.; Ghorashi, S.A. A CSI-Based Human Activity Recognition Using Deep Learning. Sensors 2021, 21, 7225.

33. Showmik, I.A.; Sanam, T.F.; Imtiaz, H. Human Activity Recognition from Wi-Fi CSI Data Using Principal Component-Based Wavelet CNN. Digit. Signal Process. 2023, 138, 104056.

34. Yousefi, S.; Narui, H.; Dayal, S. A Survey on Behavior Recognition Using WiFi Channel State Information. IEEE Commun. Mag. 2017, 55, 98–104.

35. Chen, Z.; Zhang, L.; Jiang, C. WiFi CSI Based Passive Human Activity Recognition Using Attention Based BLSTM. IEEE Trans. Mob. Comput. 2019, 18, 2714–2724.

36. Yadav, S.K.; Sai, S.; Gundewar, A. CSITime: Privacy-preserving human activity recognition using WiFi channel state information. Neural Netw. 2022, 146, 11–21.

37. Salehinejad, H.; Valaee, S. LiteHAR: Lightweight Human Activity Recognition from WiFi Signals with Random Convolution Kernels. In Proceedings of the 2022 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Rhodes Island, Greece, 22–27 May 2022; pp. 4068–4072.

| Metrics (%) | Fold 1 | Fold 2 | Fold 3 | Fold 4 | Average |
|---|---|---|---|---|---|
| Accuracy | 100.00 | 99.82 | 99.89 | 100.00 | 99.93 |
| Precision | 100.00 | 99.84 | 99.85 | 100.00 | 99.93 |
| Recall | 100.00 | 99.82 | 99.81 | 100.00 | 99.91 |
| F1-score | 100.00 | 99.82 | 99.83 | 100.00 | 99.91 |
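
The fold tables report accuracy, precision, recall and F1-score for a multi-class activity problem. This section does not say how per-class scores are averaged; assuming macro averaging (an unweighted mean over activity classes), all four metrics follow from a confusion matrix as in the sketch below. The `macro_metrics` name and the 3-class matrix in the usage lines are hypothetical and are not data from the paper.

```python
import numpy as np


def macro_metrics(cm: np.ndarray) -> dict[str, float]:
    """Accuracy and macro-averaged precision/recall/F1 from a confusion matrix.

    cm[i, j] counts samples whose true class is i and whose predicted class is j.
    """
    cm = np.asarray(cm, dtype=float)
    tp = np.diag(cm)
    pred_totals = cm.sum(axis=0)  # column sums: samples predicted as each class
    true_totals = cm.sum(axis=1)  # row sums: samples that truly belong to each class
    # Guard against empty classes: a zero denominator yields a score of 0.
    precision = np.divide(tp, pred_totals, out=np.zeros_like(tp), where=pred_totals > 0)
    recall = np.divide(tp, true_totals, out=np.zeros_like(tp), where=true_totals > 0)
    denom = precision + recall
    f1 = np.divide(2 * precision * recall, denom, out=np.zeros_like(tp), where=denom > 0)
    return {
        "accuracy": tp.sum() / cm.sum(),
        "precision": precision.mean(),  # macro: unweighted mean over classes
        "recall": recall.mean(),
        "f1": f1.mean(),
    }


# Hypothetical 3-class confusion matrix (rows: true class, columns: predicted).
cm = np.array([[8, 2, 0],
               [1, 9, 0],
               [0, 0, 10]])
m = macro_metrics(cm)
print({k: round(float(v), 4) for k, v in m.items()})
# {'accuracy': 0.9, 'precision': 0.9024, 'recall': 0.9, 'f1': 0.8997}
```

With weighted averaging (per-class scores weighted by class support) the numbers would differ whenever the classes are imbalanced, so the averaging scheme matters when comparing against other studies.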

| Metrics (%) | Fold 1 | Fold 2 | Fold 3 | Fold 4 | Average |
|---|---|---|---|---|---|
| Accuracy | 97.57 | 97.76 | 98.28 | 96.63 | 97.56 |
| Precision | 97.44 | 97.65 | 98.19 | 96.45 | 97.43 |
| Recall | 97.45 | 97.55 | 98.21 | 96.45 | 97.42 |
| F1-score | 97.43 | 97.60 | 98.20 | 96.45 | 97.42 |
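
In the table above, each Average entry matches the arithmetic mean of the four fold values rounded to two decimals. The check below reproduces it using the table's own numbers; it uses `Decimal` with round-half-up so that a mean such as 97.415 is not distorted by binary floating-point representation. The `fold_mean` helper is a hypothetical name introduced for this sketch.

```python
from decimal import Decimal, ROUND_HALF_UP

# Per-fold values (%) copied from the table above: folds 1-4, then the reported average.
rows = {
    "Accuracy": (["97.57", "97.76", "98.28", "96.63"], "97.56"),
    "Precision": (["97.44", "97.65", "98.19", "96.45"], "97.43"),
    "Recall": (["97.45", "97.55", "98.21", "96.45"], "97.42"),
    "F1-score": (["97.43", "97.60", "98.20", "96.45"], "97.42"),
}


def fold_mean(values: list[str]) -> Decimal:
    """Arithmetic mean of fold scores, rounded half-up to two decimals."""
    total = sum(Decimal(v) for v in values)
    return (total / len(values)).quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


for metric, (folds, reported) in rows.items():
    # Each computed mean should equal the reported Average column.
    print(metric, fold_mean(folds), "reported:", reported)
```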

| Study | Method (Year) | Accuracy (%) | Precision (%) | Recall (%) | F1-Score (%) |
|---|---|---|---|---|---|
| Yousefi et al. [34] | LSTM (2017) | 90.05 | N/A | N/A | N/A |
| Chen et al. [35] | ABLSTM (2018) | 97.30 | N/A | N/A | N/A |
| Yadav et al. [36] | CSITime (2022) | 98.00 | 99.16 | 98.87 | 99.01 |
| Salehinejad et al. [37] | LiteHAR (2022) | 93.00 | N/A | N/A | N/A |
| Proposed | SM-TCNNET | 99.80 | 99.81 | 99.80 | 99.80 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, T.; Gao, S.; Zhu, Y.; Gao, Z.; Zhao, Z.; Che, Y.; Xia, T. SM-TCNNET: A High-Performance Method for Detecting Human Activity Using WiFi Signals. Appl. Sci. 2023, 13, 6443. https://doi.org/10.3390/app13116443

Li T, Gao S, Zhu Y, Gao Z, Zhao Z, Che Y, Xia T. SM-TCNNET: A High-Performance Method for Detecting Human Activity Using WiFi Signals. Applied Sciences. 2023; 13(11):6443. https://doi.org/10.3390/app13116443

Chicago/Turabian Style: Li, Tianci, Sicong Gao, Yanju Zhu, Zhiwei Gao, Zihan Zhao, Yinghua Che, and Tian Xia. 2023. "SM-TCNNET: A High-Performance Method for Detecting Human Activity Using WiFi Signals" Applied Sciences 13, no. 11: 6443. https://doi.org/10.3390/app13116443

APA Style: Li, T., Gao, S., Zhu, Y., Gao, Z., Zhao, Z., Che, Y., & Xia, T. (2023). SM-TCNNET: A High-Performance Method for Detecting Human Activity Using WiFi Signals. Applied Sciences, 13(11), 6443. https://doi.org/10.3390/app13116443