3D Reconstruction of Celadon from a 2D Image: Application to Path Tracing and VR

Abstract

1. Introduction

- We propose a general guideline for obtaining a 3D celadon model from a single 2D image without requiring any additional input.

- Our method treats the celadon in the input image as a surface of revolution (SOR) and extracts a profile polyline and an axis of revolution from it.

- By fitting a B-spline curve to the profile polyline, we can generate 3D models at any desired resolution.

- We automatically generate a texture image of the celadon by separating the celadon region from the background of the input image and applying linear interpolation.

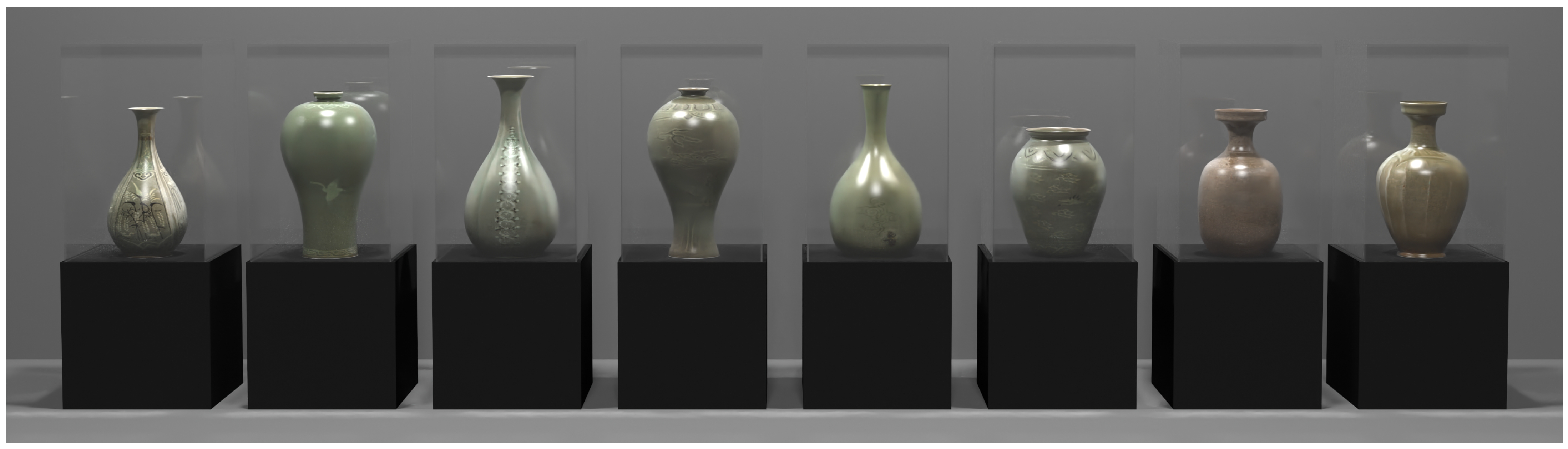

- We render various scenes containing our 3D celadon models with a path tracer [22] and assess how suitable the models are for path-traced rendering.

- We also build a VR celadon museum with the models in Unreal Engine 5, demonstrating that valuable cultural artifacts can easily be turned into VR content and viewed by anyone interested.
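Generating models "at any desired resolution" from the fitted B-spline profile comes down to evaluating the curve at as many parameter values as the target mesh needs. The sketch below illustrates only that evaluation step, not the fitting itself. It assumes a clamped cubic B-spline with uniformly spaced interior knots (the degree and knot layout are assumptions, not stated in this section), and the helpers `clamped_uniform_knots`, `find_span`, `de_boor` and `sample_curve` are hypothetical names. Evaluation uses de Boor's algorithm.

```python
def clamped_uniform_knots(n_ctrl, degree=3):
    """Clamped knot vector on [0, 1]: degree+1 repeated end knots, uniform interior knots."""
    n_inner = n_ctrl - degree - 1
    if n_inner < 0:
        raise ValueError("need at least degree + 1 control points")
    inner = [(i + 1) / (n_inner + 1) for i in range(n_inner)]
    return [0.0] * (degree + 1) + inner + [1.0] * (degree + 1)


def find_span(t, knots, n_ctrl, degree):
    """Index s with knots[s] <= t < knots[s + 1]; t == 1 maps to the last span."""
    if t >= knots[n_ctrl]:
        return n_ctrl - 1
    s = degree
    while knots[s + 1] <= t:
        s += 1
    return s


def de_boor(t, ctrl, knots, degree=3):
    """Evaluate the B-spline defined by ctrl and knots at parameter t (de Boor's algorithm)."""
    s = find_span(t, knots, len(ctrl), degree)
    # Only the degree + 1 control points ctrl[s - degree .. s] influence this span.
    d = [list(ctrl[j + s - degree]) for j in range(degree + 1)]
    for r in range(1, degree + 1):
        for j in range(degree, r - 1, -1):
            i = j + s - degree
            alpha = (t - knots[i]) / (knots[i + degree - r + 1] - knots[i])
            d[j] = [(1.0 - alpha) * a + alpha * b for a, b in zip(d[j - 1], d[j])]
    return tuple(d[degree])


def sample_curve(ctrl, n_samples, degree=3):
    """Return n_samples (>= 2) curve points, evenly spaced in the parameter domain [0, 1]."""
    knots = clamped_uniform_knots(len(ctrl), degree)
    return [de_boor(k / (n_samples - 1), ctrl, knots, degree) for k in range(n_samples)]
```

Calling `sample_curve(ctrl, 100)` and `sample_curve(ctrl, 1000)` on the same control points yields a coarse and a fine polyline of the same curve, which is what lets a single fit produce meshes at several resolutions. Because the knot vector is clamped, the first and last samples coincide with the first and last control points.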

2. Related Works

2.1. 2D Image Processing

2.2. 3D Rendering of Surfaces of Revolution (SORs)

3. 3D Reconstruction from a 2D Celadon Image

3.1. Extract a Profile Curve

| Algorithm 1: Extract a profile polyline. |
|---|
| Input: I, an input celadon image |
| Output: a profile polyline of the celadon |
| # Contour detection |
| convertToBinaryThreshold |
| findContour() |
| # Corner detection |
| approximateContour() |
| gaussianFilter() |
| findCorners(, ) |
| # Derive an axis of revolution |
| , , PCA() |
| getAxisDirection(, , C) |
| makeAxisOfRevolution() |
| # Select a profile polyline |
| getProfilePolyline() |
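Algorithm 1 leaves the details of the axis derivation implicit: its arguments are not given, and getAxisDirection also consults the detected corners C. As a minimal NumPy sketch of the PCA idea only, the hypothetical helpers `estimate_axis` and `profile_polyline` below assume that the vessel is taller than it is wide, so that the first principal component of the contour points is the axis of revolution. They then keep the contour half on one side of that axis as a (radius, height) profile polyline.

```python
import numpy as np


def estimate_axis(contour):
    """Return (centroid, unit direction) of the first principal axis of 2D contour points.

    Assumption: the vessel is taller than it is wide, so the direction of largest
    variance is taken as the axis of revolution.
    """
    pts = np.asarray(contour, dtype=float)
    centroid = pts.mean(axis=0)
    cov = np.cov((pts - centroid).T)             # 2x2 covariance matrix (rows are x and y)
    eigvals, eigvecs = np.linalg.eigh(cov)       # eigenvalues in ascending order
    direction = eigvecs[:, np.argmax(eigvals)]   # eigenvectors are the columns
    if direction[1] > 0:                         # image y grows downward; make the axis point up
        direction = -direction
    return centroid, direction


def profile_polyline(contour, centroid, direction):
    """Keep contour points on one side of the axis as (radius, height) rows sorted by height."""
    pts = np.asarray(contour, dtype=float) - centroid
    normal = np.array([-direction[1], direction[0]])  # unit vector perpendicular to the axis
    height = pts @ direction                         # coordinate along the axis
    offset = pts @ normal                            # signed distance from the axis
    side = offset > 0
    profile = np.column_stack([offset[side], height[side]])
    return profile[np.argsort(profile[:, 1])]
```

For a squat bowl, the first principal component is horizontal and this sketch picks the wrong axis, so some additional cue is needed to choose between the two principal directions.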

3.2. Texture Generation

| Algorithm 2: Generate a texture. |
|---|
| Input: I, an input celadon image |
| Output: a texture image of the celadon |
| extractROI(I) |
| makeBlankImage(ROI.dimension) |
| makeScanlinesOf(ROI) |
| foreach scanline in scanlines do |
| scanline.map() |
| if scanline.width < ROI.width then |
| scanline.interpolate() |
| end if |
| end foreach |
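Algorithm 2 does not spell out what scanline.map() and scanline.interpolate() do. One plausible reading, consistent with the linear interpolation mentioned in the introduction, is that the celadon pixels of each row are resampled to the full ROI width. The NumPy sketch below implements that reading. The function name `stretch_scanlines` and its inputs (an (H, W, C) image crop plus a boolean celadon mask) are assumptions, not the authors' interface.

```python
import numpy as np


def stretch_scanlines(roi, mask):
    """Resample each row's celadon span to the full ROI width by linear interpolation.

    roi  : (H, W, C) array, the cropped region of interest
    mask : (H, W) bool array, True where the pixel belongs to the celadon
    """
    h, w, c = roi.shape
    texture = np.zeros((h, w, c), dtype=float)   # blank image with the ROI's dimensions
    target = np.linspace(0.0, 1.0, w)            # normalized output positions
    for y in range(h):
        cols = np.flatnonzero(mask[y])
        if cols.size == 0:
            continue                             # no celadon on this scanline: leave it blank
        span = roi[y, cols[0]:cols[-1] + 1].astype(float)  # pixels between the outermost celadon pixels
        if len(span) == 1:
            texture[y] = span[0]                 # a single pixel cannot be interpolated; replicate it
            continue
        source = np.linspace(0.0, 1.0, len(span))
        for ch in range(c):
            texture[y, :, ch] = np.interp(target, source, span[:, ch])
    return texture
```

Rows whose celadon span already covers the full ROI width come out unchanged, which mirrors the `scanline.width < ROI.width` test in Algorithm 2.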

3.3. Curve Fitting with a B-Spline Curve

3.4. Construct a Triangular Mesh

4. Experimental Results

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Carolyn, K. Koh Choo. A scientific study of traditional Korean celadons and their modern developments. Archaeometry 1995, 37, 53–81. [Google Scholar]

- Namwon, J. Introduction and Development of Koryŏ Celadon. In A Companion to Korean Art; Wiley: Hoboken, NJ, USA, 2020; pp. 133–158. [Google Scholar]

- Yan, L.; Liu, M.; Sun, H.; Li, L.; Feng, X. A comparative study of typical early celadon shards from Eastern Zhou and Eastern Han dynasty (China). J. Archaeol. Sci. Rep. 2020, 33, 102530. [Google Scholar] [CrossRef]

- Banterle, F.; Itkin, B.; Dellepiane, M.; Wolf, L.; Callieri, M.; Dershowitz, N.; Scopigno, R. Vasesketch: Automatic 3d representation of pottery from paper catalog drawings. In Proceedings of the 2017 14th IAPR International Conference on Document Analysis and Recognition (ICDAR), Kyoto, Japan, 9–15 November 2017; Volume 1, pp. 683–690. [Google Scholar]

- Dashti, S.; Prakash, E.; Navarro-Newball, A.A.; Hussain, F.; Carroll, F. PotteryVR: Virtual reality pottery. Vis. Comput. 2022, 38, 4035–4055. [Google Scholar] [CrossRef] [PubMed]

- Kampel, M.; Sablatnig, R. Profile-based pottery reconstruction. In Proceedings of the 2003 Conference on Computer Vision and Pattern Recognition Workshop, Madison, WI, USA, 16–22 June 2003; Volume 1, p. 4. [Google Scholar]

- Karasik, A. A complete, automatic procedure for pottery documentation and analysis. In Proceedings of the 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition-Workshops, San Francisco, CA, USA, 13–18 June 2010; pp. 29–34. [Google Scholar]

- Karasik, A.; Smilansky, U. 3D scanning technology as a standard archaeological tool for pottery analysis: Practice and theory. J. Archaeol. Sci. 2008, 35, 1148–1168. [Google Scholar] [CrossRef]

- Kabassi, K. Evaluating websites of museums: State of the art. J. Cult. Herit. 2017, 24, 184–196. [Google Scholar] [CrossRef]

- Shehade, M.; Stylianou-Lambert, T. Virtual Reality in Museums: Exploring the Experiences of Museum Professionals. Appl. Sci. 2020, 10, 4031. [Google Scholar] [CrossRef]

- Banfi, F.; Pontisso, M.; Paolillo, F.R.; Roascio, S.; Spallino, C.; Stanga, C. Interactive and Immersive Digital Representation for Virtual Museum: VR and AR for Semantic Enrichment of Museo Nazionale Romano, Antiquarium di Lucrezia Romana and Antiquarium di Villa Dei Quintili. ISPRS Int. J. Geo-Inf. 2023, 12, 28. [Google Scholar] [CrossRef]

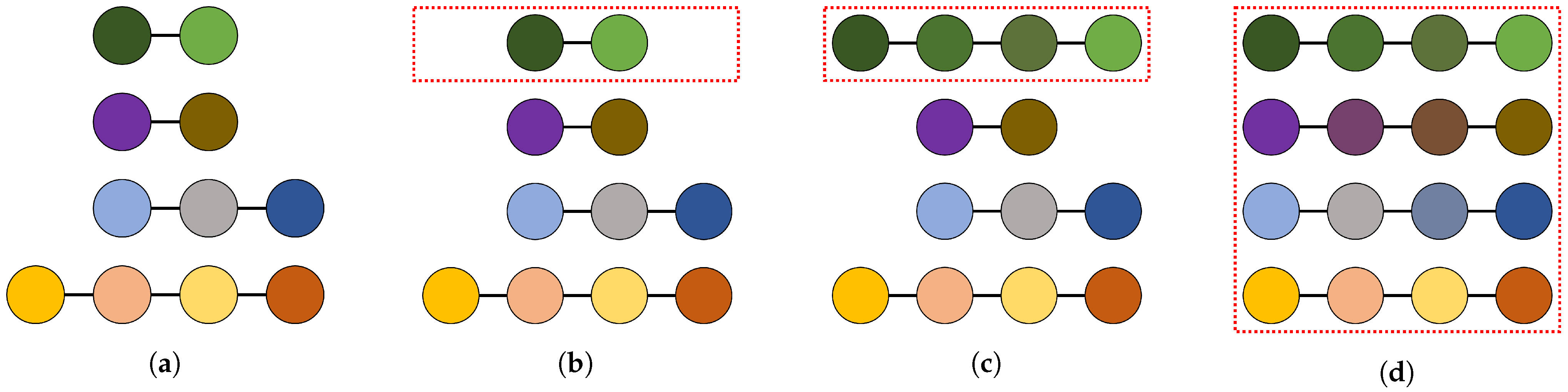

- Heeyoung, P.; Cheongtag, K.; Youngjin, P. The Variables of Surface of Revolution and its effects on Human Visual Preference. J. Korea Comput. Graph. Soc. 2022, 28, 31–40. [Google Scholar] [CrossRef]

- Dierckx, P. Algorithms for Smoothing Data with Periodic and Parametric Splines. Comput. Graph. Image Process. 1982, 20, 171–184. [Google Scholar] [CrossRef]

- Filip, D.; Magedson, R.; Markot, R. Surface algorithms using bounds on derivatives. Comput. Aided Geom. Des. 1986, 3, 295–311. [Google Scholar] [CrossRef]

- Cohen, E.; Riesenfeld, R.F.; Elber, G. Geometric Modeling with Splines: An Introduction; CRC Press: Boca Raton, FL, USA, 2001. [Google Scholar]

- Farin, G. Curves and Surfaces for Computer-Aided Geometric Design: A Practical Guide; Elsevier: Amsterdam, The Netherlands, 2014. [Google Scholar]

- Douglas, D.H.; Peucker, T.K. Algorithms for the Reduction of the Number of Points Required to Represent a Digitized Line or Its Caricature. Cartographica 1973, 10, 112–122. [Google Scholar] [CrossRef]

- Lang, T. Rules for the robot draughtsmen. Geogr. Mag. 1969, 42, 50–51. [Google Scholar]

- Opheim, H. Smoothing A Digitzed Curve by Data Reduction Methods. In Eurographics Conference Proceedings; Encarnacao, J.L., Ed.; The Eurographics Association: Prague, Czechia, 1981. [Google Scholar] [CrossRef]

- Reumann, K.; Witkam, A.P.M. Optimizing curve segmentation in computer graphics. In Proceedings of the International Computing Symposium 1973, Davos, Switzerland, 4–7 September 1973; pp. 467–472. [Google Scholar]

- Visvalingam, M.; Whyatt, J.D. Line generalisation by repeated elimination of points. Cartogr. J. 1993, 30, 46–51. [Google Scholar] [CrossRef]

- Jakob, W.; Speierer, S.; Roussel, N.; Nimier-David, M.; Vicini, D.; Zeltner, T.; Nicolet, B.; Crespo, M.; Leroy, V.; Zhang, Z. Mitsuba 3 Renderer. 2022. Available online: https://mitsuba-renderer.org (accessed on 10 January 2023).

- Suzuki, S.; Abe, K. Topological Structural Analysis of Digitized Binary Images by Border Following. Comput. Vis. Graph. Image Process. 1985, 30, 32–46. [Google Scholar] [CrossRef]

- Moore, D.J.H. An Approach to the Analysis and Extraction of Pattern Features Using Integral Geometry. IEEE Trans. Syst. Man Cybern. 1972, 2, 97–102. [Google Scholar] [CrossRef]

- Moravec, H.P. Obstacle Avoidance and Navigation in the Real World by a Seeing Robot Rover; Stanford University: Stanford, CA, USA, 1980. [Google Scholar]

- Harris, C.; Stephens, M. A Combined Corner and Edge Detector. In Proceedings of the Alvey Vision Conference, Manchester, UK, 31 August–2 September 1988; pp. 23.1–23.6. [Google Scholar] [CrossRef]

- Shi, J.; Tomasi, C. Good Features to Track. In Proceedings of the 1994 Proceedings of IEEE Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 21–23 June 1994. [Google Scholar]

- Hall, N.S.; Laflin, S. A computer aided design technique for pottery profiles. In Computer Applications in Archaeology; Computer Center, University of Birmingham: Birmingham, UK, 1984; pp. 178–188. [Google Scholar]

- Badiu, I.; Buna, Z.; Comes, R. Automatic generation of ancient pottery profiles using CAD software. J. Anc. Hist. Archaeol. 2015, 2. [Google Scholar] [CrossRef]

- Wong, K.Y.K.; Mendonça, P.R.S.; Cipolla, R. Reconstruction of surfaces of revolution from single uncalibrated views. Image Vis. Comput. 2004, 22, 829–836. [Google Scholar] [CrossRef]

- Colombo, C.; Del Bimbo, A.; Pernici, F. Metric 3D reconstruction and texture acquisition of surfaces of revolution from a single uncalibrated view. IEEE Trans. Pattern Anal. Mach. Intell. 2005, 27, 99–114. [Google Scholar] [CrossRef] [PubMed]

- Kajiya, J.T. New techniques for ray tracing procedurally defined objects. ACM Siggraph Comput. Graph. 1983, 17, 91–102. [Google Scholar] [CrossRef]

- Baciu, G.; Jia, J.; Lam, G. Ray tracing surfaces of revolution: An old problem with a new perspective. In Proceedings of the Computer Graphics International 2001, Hong Kong, China, 3–6 July 2001; pp. 215–222. [Google Scholar]

- Goryeo Celadon Museum. Available online: https://www.celadon.go.kr/ (accessed on 20 December 2022).

- Rendering Resources. Available online: https://benedikt-bitterli.me/resources/ (accessed on 10 January 2023).

- Unreal Engine 5. Available online: https://www.unrealengine.com/ (accessed on 21 February 2023).

- Parker, S.G.; Bigler, J.; Dietrich, A.; Friedrich, H.; Hoberock, J.; Luebke, D.; McAllister, D.; McGuire, M.; Morley, K.; Robison, A.; et al. Optix: A general purpose ray tracing engine. ACM Trans. Graph. (tog) 2010, 29, 1–13. [Google Scholar] [CrossRef]

| Image Processing: Time (ms) | Image Processing: # Contour Points | B-spline: # Control Points | B-spline: # Domains | 3D Model: Time (ms) | 3D Model: # Vertices | 3D Model: # Faces | Approx. Error (pixels) |
|---|---|---|---|---|---|---|---|
| 64.06 | 688 | 15 | 261 | 982.62 | 94,320 | 187,920 | 2.27 |
| 70.12 | 830 | 17 | 298 | 1076.89 | 107,640 | 214,560 | 2.17 |
| 78.04 | 835 | 20 | 327 | 1176.80 | 118,080 | 235,440 | 1.38 |
| 68.07 | 813 | 18 | 369 | 1375.21 | 133,200 | 265,680 | 2.33 |
| 70.03 | 783 | 14 | 382 | 1469.63 | 137,880 | 275,040 | 1.85 |
| 68.03 | 699 | 18 | 396 | 1462.05 | 142,920 | 285,120 | 1.45 |
| 69.00 | 701 | 24 | 467 | 1779.77 | 168,480 | 336,240 | 1.26 |
| 71.01 | 755 | 27 | 554 | 2082.57 | 199,800 | 398,880 | 1.46 |
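The mesh sizes in the table above follow a simple pattern. In every row, # Vertices equals 360 × (# Domains + 1) and # Faces equals 2 × 360 × # Domains; for the first row, 360 × 262 = 94,320 and 720 × 261 = 187,920. This is consistent with sweeping # Domains + 1 profile samples around the axis in 360 angular steps and splitting each quad into two triangles. The 360-step sampling is inferred from these numbers rather than stated in the text, and the helper `revolve_profile` is a hypothetical sketch of such a construction, not the authors' code.

```python
import math


def revolve_profile(profile, n_segments=360):
    """Sweep (radius, height) profile samples around the vertical axis into a triangle mesh.

    Produces len(profile) * n_segments vertices and 2 * (len(profile) - 1) * n_segments
    triangles: each quad between neighbouring rings is split into two triangles, and the
    last angular segment wraps around to the first to close the surface.
    """
    vertices = []
    for r, y in profile:
        for k in range(n_segments):
            theta = 2.0 * math.pi * k / n_segments
            vertices.append((r * math.cos(theta), y, r * math.sin(theta)))

    faces = []
    for i in range(len(profile) - 1):
        for k in range(n_segments):
            k_next = (k + 1) % n_segments      # wrap around to close the surface
            a = i * n_segments + k             # ring i, segment k
            b = i * n_segments + k_next        # ring i, next segment
            c = (i + 1) * n_segments + k       # ring i + 1, segment k
            d = (i + 1) * n_segments + k_next  # ring i + 1, next segment
            faces.append((a, c, b))            # both triangles share the same winding
            faces.append((b, c, d))
    return vertices, faces
```

With 262 profile samples (261 domains), this sketch reproduces the 94,320 vertices and 187,920 faces listed in the first row.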

| Celadon | HD Rendering Time (s) | HD Rendering Time (s) | FHD Rendering Time (s) | FHD Rendering Time (s) | 4K Rendering Time (s) | 4K Rendering Time (s) |
|---|---|---|---|---|---|---|
| , | 2.08 | 15.97 | 3.46 | 35.99 | 10.08 | 72.04 |
| , | 2.60 | 39.04 | 5.84 | 86.60 | 22.98 | 174.00 |
| ,..., | 3.87 | 41.73 | 7.35 | 92.75 | 25.49 | 187.74 |
| ,..., | 6.73 | 102.62 | 16.18 | 224.90 | 57.41 | 451.41 |
| ,..., | 8.90 | 102.29 | 15.66 | 233.02 | 64.37 | 469.07 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kim, S.; Park, Y. 3D Reconstruction of Celadon from a 2D Image: Application to Path Tracing and VR. Appl. Sci. 2023, 13, 6848. https://doi.org/10.3390/app13116848
