A Robust and Automated Vision-Based Human Fall Detection System Using 3D Multi-Stream CNNs with an Image Fusion Technique

Abstract

1. Introduction

2. Related Works

2.1. Wearable Sensors

2.2. Vision-Based Sensors

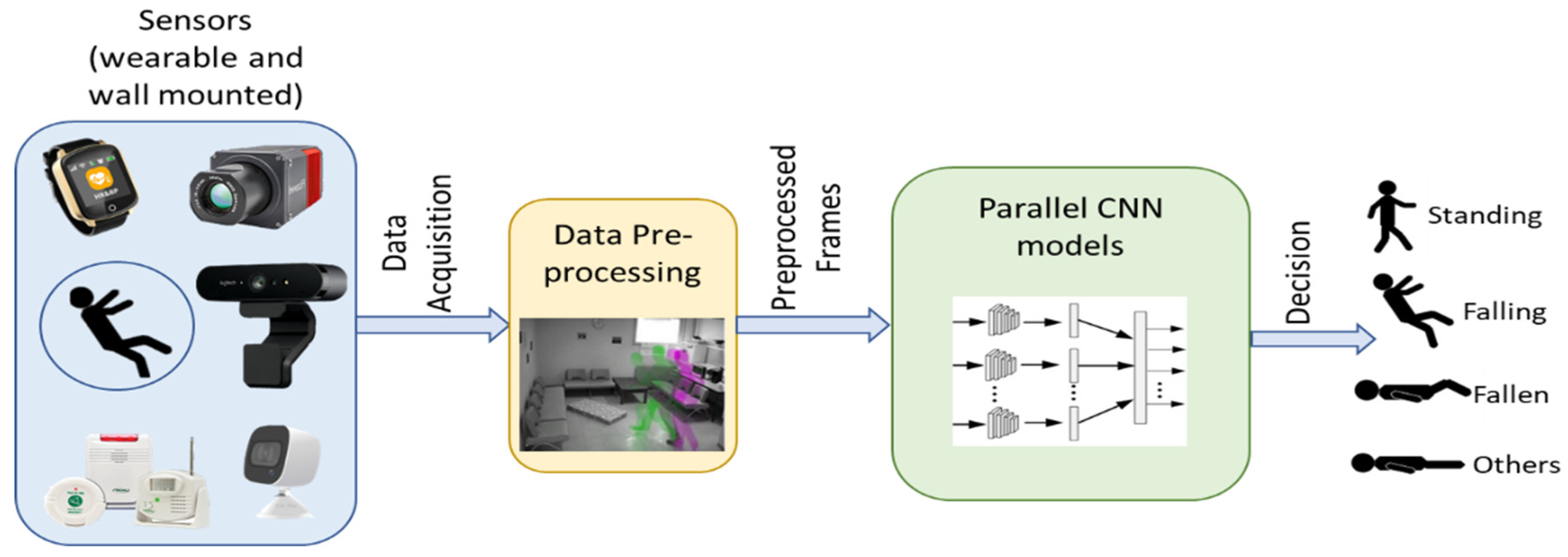

3. Our Proposed Method

3.1. Human Segmentation

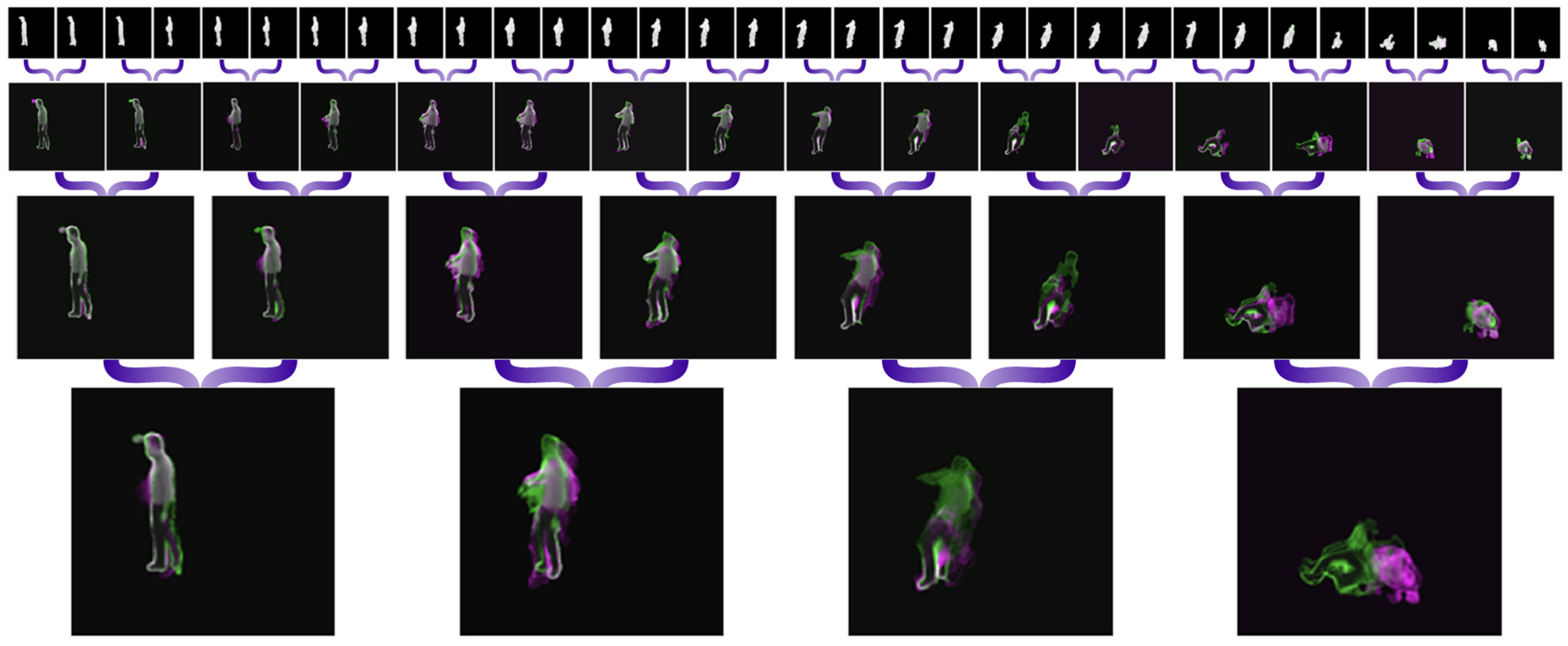

3.2. Pre-Processing with Image Fusion

3.3. Action Classification and Fall Alert

4. Experiments

4.1. Database Description

4.2. Dataset Preparation and Augmentation

4.3. Experimental Results

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. World Health Organization. Falls. 2021. Available online: https://www.who.int/news-room/fact-sheets/detail/falls (accessed on 10 October 2022).
2. Alam, E.; Sufian, A.; Dutta, P.; Leo, M. Vision-based human fall detection systems using deep learning: A review. Comput. Biol. Med. 2022, 146, 105626.
3. Yu, M.; Rhuma, A.; Naqvi, S.M.; Wang, L.; Chambers, J. A posture recognition-based fall detection system for monitoring an elderly person in a smart home environment. IEEE Trans. Inf. Technol. Biomed. 2012, 16, 1274–1286.
4. World Health Organization. WHO Global Report on Falls Prevention in Older Age; World Health Organization Ageing and Life Course Unit: Geneva, Switzerland, 2008.
5. San-Segundo, R.; Echeverry-Correa, J.D.; Salamea, C.; Pardo, J.M. Human activity monitoring based on hidden Markov models using a smartphone. IEEE Instrum. Meas. Mag. 2016, 19, 27–31.
6. Baek, J.; Yun, B.-J. Posture monitoring system for context awareness in mobile computing. IEEE Trans. Instrum. Meas. 2010, 59, 1589–1599.
7. Tao, Y.; Hu, H. A novel sensing and data fusion system for 3-D arm motion tracking in telerehabilitation. IEEE Trans. Instrum. Meas. 2008, 57, 1029–1040.
8. Mubashir, M.; Shao, L.; Seed, L. A survey on fall detection: Principles and approaches. Neurocomputing 2013, 100, 144–152.
9. Shieh, W.-Y.; Huang, J.-C. Falling-incident detection and throughput enhancement in a multi-camera video-surveillance system. Med. Eng. Phys. 2012, 34, 954–963.
10. Miaou, S.-G.; Sung, P.-H.; Huang, C.-Y. A Customized Human Fall Detection System Using Omni-Camera Images and Personal Information. In Proceedings of the 1st Transdisciplinary Conference on Distributed Diagnosis and Home Healthcare, Arlington, VA, USA, 2–4 April 2006.
11. Jansen, B.; Deklerck, R. Context aware inactivity recognition for visual fall detection. In Proceedings of the Pervasive Health Conference and Workshops, Innsbruck, Austria, 29 November–1 December 2006.
12. Voulodimos, A.; Doulamis, N.; Doulamis, A.; Protopapadakis, E. Deep Learning for Computer Vision: A Brief Review. Comput. Intell. Neurosci. 2018, 2018, 13.
13. Islam, M.M.; Nooruddin, S.; Karray, F.; Muhammad, G. Human activity recognition using tools of convolutional neural networks: A state of the art review, data sets, challenges, and future prospects. Comput. Biol. Med. 2022, 149, 106060.
14. Muhammad, G.; Alshehri, F.; Karray, F.; El Saddik, A.; Alsulaiman, M.; Falk, T.H. A comprehensive survey on multimodal medical signals fusion for smart healthcare systems. Inf. Fusion 2021, 76, 355–375.
15. Islam, M.M.; Nooruddin, S.; Karray, F.; Muhammad, G. Multi-level feature fusion for multimodal human activity recognition in Internet of Healthcare Things. Inf. Fusion 2023, 94, 17–31.
16. Altaheri, H.; Muhammad, G.; Alsulaiman, M.; Amin, S.U.; Altuwaijri, G.A.; Abdul, W.; Bencherif, M.A.; Faisal, M. Deep learning techniques for classification of electroencephalogram (EEG) motor imagery (MI) signals: A review. Neural Comput. Appl. 2021, 35, 14681–14722.
17. Pathak, A.R.; Pandey, M.; Rautaray, S. Application of Deep Learning for Object Detection. Procedia Comput. Sci. 2018, 132, 1706–1717.
18. Szeliski, R. Computer Vision: Algorithms and Applications. In Texts in Computer Science; Springer: Berlin/Heidelberg, Germany, 2011.
19. Guo, Z.; Huang, Y.; Hu, X.; Wei, H.; Zhao, B. A Survey on Deep Learning Based Approaches for Scene Understanding in Autonomous Driving. Electronics 2021, 10, 471.
20. Li, F.-F.; Johnson, J.; Yeung, S. Detection and Segmentation. Lecture. 2011. Available online: http://cs231n.stanford.edu/slides/2018/cs231n_2018_lecture11.pdf (accessed on 10 March 2023).
21. Liu, C.; Chen, L.-C.; Schroff, F.; Adam, H.; Hua, W.; Yuille, A.L.; Fei-Fei, L. Auto-deeplab: Hierarchical neural architecture search for semantic image segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 82–92.
22. Kirillov, A.; He, K.; Girshick, R.; Rother, C.; Dollár, P. Panoptic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 9404–9413.
23. Blasch, E.; Zheng, Y.; Liu, Z. Multispectral Image Fusion and Colorization; SPIE Press: Bellingham, WA, USA, 2018.
24. Masud, M.; Gaba, G.S.; Choudhary, K.; Hossain, M.S.; Alhamid, M.F.; Muhammad, G. Lightweight and Anonymity-Preserving User Authentication Scheme for IoT-Based Healthcare. IEEE Internet Things J. 2021, 9, 2649–2656.
25. Muhammad, G.; Hossain, M.S. COVID-19 and Non-COVID-19 Classification using Multi-layers Fusion from Lung Ultrasound Images. Inf. Fusion 2021, 72, 80–88.
26. Haghighat, M.B.A.; Aghagolzadeh, A.; Seyedarabi, H. Multi-focus image fusion for visual sensor networks in DCT domain. Comput. Electr. Eng. 2011, 37, 789–797.
27. Haghighat, M.B.A.; Aghagolzadeh, A.; Seyedarabi, H. A non-reference image fusion metric based on mutual information of image features. Comput. Electr. Eng. 2011, 37, 744–756.
28. Trapasiya, S.; Parmar, R. A Comprehensive Survey of Various Approaches on Human Fall Detection for Elderly People. Wirel. Pers. Commun. 2022, 126, 1679–1703.
29. Biroš, O.; Karchnak, J.; Šimšík, D.; Hošovský, A. Implementation of wearable sensors for fall detection into smart household. In Proceedings of the IEEE 12th International Symposium on Applied Machine Intelligence and Informatics (SAMI), Herl’any, Slovakia, 23–25 January 2014.
30. Nafea, O.; Abdul, W.; Muhammad, G.; Alsulaiman, M. Sensor-Based Human Activity Recognition with Spatio-Temporal Deep Learning. Sensors 2021, 21, 2141.
31. Quadros, T.D.; Lazzaretti, A.E.; Schneider, F.K. A Movement Decomposition and Machine Learning-Based Fall Detection System Using Wrist Wearable Device. IEEE Sens. J. 2018, 18, 5082–5089.
32. Özdemir, A.T.; Barshan, B. Detecting Falls with Wearable Sensors Using Machine Learning Techniques. Sensors 2014, 14, 10691–10708.
33. Pernini, L.; Belli, A.; Palma, L.; Pierleoni, P.; Pellegrini, M.; Valenti, S. A High Reliability Wearable Device for Elderly Fall Detection. IEEE Sens. J. 2015, 15, 4544–4553.
34. Yazar, A.; Erden, F.; Cetin, A.E. Multi-sensor ambient assisted living system for fall detection. In Proceedings of the IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP), Florence, Italy, 4–9 May 2014.
35. Santos, G.L.; Endo, P.T.; Monteiro, K.; Rocha, E.; Silva, I.; Lynn, T. Accelerometer-Based Human Fall Detection Using Convolutional Neural Networks. Sensors 2019, 19, 1644.
36. Islam, M.M.; Nooruddin, S.; Karray, F.; Muhammad, G. Internet of Things: Device Capabilities, Architectures, Protocols, and Smart Applications in Healthcare Domain. IEEE Internet Things J. 2023, 10, 3611–3641.
37. Alshehri, F.; Muhammad, G. A Comprehensive Survey of the Internet of Things (IoT) and AI-Based Smart Healthcare. IEEE Access 2021, 9, 3660–3678.
38. Chelli, A.; Pätzold, M. A Machine Learning Approach for Fall Detection and Daily Living Activity Recognition. IEEE Access 2019, 7, 38670–38687.
39. Muhammad, G.; Rahman, S.K.M.M.; Alelaiwi, A.; Alamri, A. Smart Health Solution Integrating IoT and Cloud: A Case Study of Voice Pathology Monitoring. IEEE Commun. Mag. 2017, 55, 69–73.
40. Muhammad, G.; Alhussein, M. Security, trust, and privacy for the Internet of vehicles: A deep learning approach. IEEE Consum. Electron. Mag. 2022, 6, 49–55.
41. Leone, A.; Diraco, G.; Siciliano, P. Detecting falls with 3D range camera in ambient assisted living applications: A preliminary study. Med. Eng. Phys. 2011, 33, 770–781.
42. Jokanovic, B.; Amin, M.; Ahmad, F. Radar fall motion detection using deep learning. In Proceedings of the IEEE Radar Conference (RadarConf16), Philadelphia, PA, USA, 2–6 May 2016.
43. Amin, M.G.; Zhang, Y.D.; Ahmad, F.; Ho, K.D. Radar Signal Processing for Elderly Fall Detection: The future for in-home monitoring. IEEE Signal Process. Mag. 2016, 33, 71–80.
44. Yang, L.; Ren, Y.; Hu, H.; Tian, B. New Fast Fall Detection Method Based on Spatio-Temporal Context Tracking of Head by Using Depth Images. Sensors 2015, 15, 23004–23019.
45. Ma, X.; Wang, H.; Xue, B.; Zhou, M.; Ji, B.; Li, Y. Depth-Based Human Fall Detection via Shape Features and Improved Extreme Learning Machine. IEEE J. Biomed. Health Inform. 2014, 18, 1915–1922.
46. Angal, Y.; Jagtap, A. Fall detection system for older adults. In Proceedings of the IEEE International Conference on Advances in Electronics, Communication and Computer Technology (ICAECCT), Pune, India, 2–3 December 2016.
47. Stone, E.E.; Skubic, M. Fall Detection in Homes of Older Adults Using the Microsoft Kinect. IEEE J. Biomed. Health Inform. 2015, 19, 290–301.
48. Yang, L.; Ren, Y.; Zhang, W. 3D depth image analysis for indoor fall detection of elderly people. Digit. Commun. Netw. 2016, 2, 24–34.
49. Adhikari, K.; Bouchachia, A.; Nait-Charif, H. Activity recognition for indoor fall detection using convolutional neural network. In Proceedings of the Fifteenth IAPR International Conference on Machine Vision Applications (MVA), Nagoya, Japan, 8–12 May 2017.
50. Fan, K.; Wang, P.; Zhuang, S. Human fall detection using slow feature analysis. Multimed. Tools Appl. 2019, 78, 9101–9128.
51. Xu, H.; Leixian, S.; Zhang, Q.; Cao, G. Fall Behavior Recognition Based on Deep Learning and Image Processing. Int. J. Mob. Comput. Multimed. Commun. 2018, 9, 1–15.
52. Bian, Z.-P.; Hou, J.; Chau, L.-P.; Magnenat-Thalmann, N. Fall Detection Based on Body Part Tracking Using a Depth Camera. IEEE J. Biomed. Health Inform. 2015, 19, 430–439.
53. Wang, S.; Chen, L.; Zhou, Z.; Sun, X.; Dong, J. Human Fall Detection in Surveillance Video Based on PCANet. Multimed. Tools Appl. 2016, 75, 11603–11613.
54. Benezeth, Y.; Emile, B.; Laurent, H.; Rosenberger, C. Vision-Based System for Human Detection and Tracking in Indoor Environment. Int. J. Soc. Robot. 2009, 2, 41–52.
55. Liu, H.; Zuo, C. An Improved Algorithm of Automatic Fall Detection. AASRI Procedia 2012, 1, 353–358.
56. Lu, K.-L.; Chu, E.T.-H. An Image-Based Fall Detection System for the Elderly. Appl. Sci. 2018, 8, 1995.
57. Debard, G.; Karsmakers, P.; Deschodt, M.; Vlaeyen, E.; Bergh, J.; Dejaeger, E.; Milisen, K.; Goedemé, T.; Tuytelaars, T.; Vanrumste, B. Camera Based Fall Detection Using Multiple Features Validated with Real Life Video. In Proceedings of the Workshop 7th International Conference on Intelligent Environments, Nottingham, UK, 25–28 July 2011.
58. Shawe-Taylor, J.; Sun, S. Kernel Methods and Support Vector Machines. Acad. Press Libr. Signal Process. 2014, 1, 857–881.
59. Shawe-Taylor, J.; Cristianini, N. An Introduction to Support Vector Machines and Other Kernel-Based Learning Methods 22; Cambridge University Press: London, UK, 2001.
60. Muaz, M.; Ali, S.; Fatima, A.; Idrees, F.; Nazar, N. Human Fall Detection. In Proceedings of the 16th International Multi Topic Conference, INMIC, Lahore, Pakistan, 19–20 December 2013.
61. Leite, G.; Silva, G.; Pedrini, H. Three-Stream Convolutional Neural Network for Human Fall Detection. In Deep Learning Applications 2; Springer: Singapore, 2020; pp. 49–80.
62. Zou, S.; Min, W.; Liu, L.; Wang, Q.; Zhou, X. Movement Tube Detection Network Integrating 3D CNN and Object Detection Framework to Detect Fall. Electronics 2021, 10, 898.
63. Charfi, I.; Mitéran, J.; Dubois, J.; Atri, M.; Tourki, R. Optimised spatio-temporal descriptors for real-time fall detection: Comparison of SVM and Adaboost based classification. J. Electron. Imaging 2013, 22, 17.
64. Lu, N.; Wu, Y.; Feng, L.; Song, J. Deep Learning for Fall Detection: Three-Dimensional CNN Combined with LSTM on Video Kinematic Data. IEEE J. Biomed. Health Inform. 2019, 23, 314–323.
65. Min, W.; Cui, H.; Rao, H.; Li, Z.; Yao, L. Detection of Human Falls on Furniture Using Scene Analysis Based on Deep Learning and Activity Characteristics. IEEE Access 2018, 6, 9324–9335.
66. Kong, Y.; Huang, J.; Huang, S.; Wei, Z.; Wang, S. Learning Spatiotemporal Representations for Human Fall Detection in Surveillance Video. J. Vis. Commun. Image Represent. 2019, 59, 215–230.
67. Taramasco, C.; Rodenas, T.; Martinez, F.; Fuentes, P.; Munoz, R.; Olivares, R.; De Albuquerque, V.H.; Demongeot, J. A Novel Monitoring System for Fall Detection in Older People. IEEE Access 2018, 6, 43563–43574.
68. Nogas, J.; Khan, S.; Mihailidis, A. DeepFall: Non-Invasive Fall Detection with Deep Spatio-Temporal Convolutional Autoencoders. J. Healthc. Inform. Res. 2020, 4, 50–70.
69. Gu, C.; Sun, C.; Ross, D.A.; Vondrick, C.; Pantofaru, C.; Li, Y.; Vijayanarasimhan, S.; Toderici, G.; Ricco, S.; Sukthankar, R.; et al. Ava: A video dataset of spatio-temporally localized atomic visual actions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018.
70. Peng, X.; Schmid, C. Multi-region Two-Stream R-CNN for Action Detection. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016.
71. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.
72. Carreira, J.; Zisserman, A. Quo Vadis, Action Recognition? A New Model and the Kinetics Dataset. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.
73. Fan, Y.; Levine, M.D.; Wen, G.; Qiu, S. A deep neural network for real-time detection of falling humans in naturally occurring scenes. Neurocomputing 2017, 260, 43–58.
74. Núñez-Marcos, A.; Azkune, G.; Arganda-Carreras, I. Vision-Based Fall Detection with Convolutional Neural Networks. Wirel. Commun. Mob. Comput. 2017, 2017, 1–16.
75. Hsieh, Y.Z.; Jeng, Y.-L. Development of Home Intelligent Fall Detection IoT System Based on Feedback Optical Flow Convolutional Neural Network. IEEE Access 2018, 6, 6048–6057.
76. Carneiro, S.A.; da Silva, G.P.; Leite, G.V.; Moreno, R.; Guimarães, S.J.F.; Pedrini, H. Multi-Stream Deep Convolutional Network Using High-Level Features Applied to Fall Detection in Video Sequences. In Proceedings of the International Conference on Systems, Signals and Image Processing (IWSSIP), Osijek, Croatia, 5–7 June 2019.
77. Leite, G.; Silva, G.; Pedrini, H. Fall Detection in Video Sequences Based on a Three-Stream Convolutional Neural Network. In Proceedings of the 18th IEEE International Conference on Machine Learning and Applications (ICMLA), Boca Raton, FL, USA, 16–19 December 2019.
78. Menacho, C.; Ordoñez, J. Fall detection based on CNN models implemented on a mobile robot. In Proceedings of the 17th International Conference on Ubiquitous Robots (UR), Kyoto, Japan, 22–26 June 2020.
79. Chhetri, S.; Alsadoon, A.; Al-Dala, T.; Prasad, P.W.C.; Rashid, T.A.; Maag, A. Deep learning for vision-based fall detection system: Enhanced optical dynamic flow. Comput. Intell. 2020, 37, 578–595.
80. Vishnu, C.; Datla, R.; Roy, D.; Babu, S.; Mohan, C.K. Human Fall Detection in Surveillance Videos Using Fall Motion Vector Modeling. IEEE Sens. J. 2021, 21, 17162–17170.
81. Berlin, S.J.; John, M. Vision based human fall detection with Siamese convolutional neural networks. J. Ambient Intell. Humaniz. Comput. 2022, 13, 5751–5762.
82. Alanazi, T.; Muhammad, G. Human Fall Detection Using 3D Multi-Stream Convolutional Neural Networks with Fusion. Diagnostics 2022, 12, 20.
83. Gruosso, M.; Capece, N.; Erra, U. Human segmentation in surveillance video with deep learning. Multimed. Tools Appl. 2021, 80, 1175–1199.
84. Soille, P. Morphological Image Analysis: Principles and Applications; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2004.
85. Gonzalez, R.C.; Woods, R.E. Digital Image Processing; Pearson Education Limited: London, UK, 2018.
86. Musallam, Y.K.; Al Fassam, N.I.; Muhammad, G.; Amin, S.U.; Alsulaiman, M.; Abdul, W.; Altaheri, H.; Bencherif, M.A.; Algabri, M. Electroencephalography-based motor imagery classification using temporal convolutional network fusion. Biomed. Signal Process. Control 2021, 69, 102826.
87. Chamle, M.; Gunale, K.G.; Warhade, K.K. Automated unusual event detection in video surveillance. In Proceedings of the International Conference on Inventive Computation Technologies (ICICT), Coimbatore, India, 26–27 August 2016.
88. Alaoui, A.Y.; El Hassouny, A.; Thami, R.O.H.; Tairi, H. Human Fall Detection Using Von Mises Distribution and Motion Vectors of Interest Points. Assoc. Comput. Mach. 2017, 82, 5.
89. Poonsri, A.; Chiracharit, W. Improvement of fall detection using consecutive-frame voting. In Proceedings of the International Workshop on Advanced Image Technology (IWAIT), Chiang Mai, Thailand, 7–9 January 2018.
90. Alaoui, A.Y.; Tabii, Y.; Thami, R.O.H.; Daoudi, M.; Berretti, S.; Pala, P. Fall Detection of Elderly People Using the Manifold of Positive Semidefinite Matrices. J. Imaging 2021, 7, 109.

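| Measurement | Equation |
|---|---|
| Accuracy | $\frac{TP + TN}{TP + TN + FP + FN}$ |
| Sensitivity | $\frac{TP}{TP + FN}$ |
| Specificity | $\frac{TN}{TN + FP}$ |
| Precision | $\frac{TP}{TP + FP}$ |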
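The four measures in the table above are conventionally derived from the counts of true positives (TP), true negatives (TN), false positives (FP), and false negatives (FN), with a fall treated as the positive class. A minimal Python sketch under that assumption follows; the function names and the example counts are hypothetical and are not taken from the experiments.

```python
def safe_ratio(numerator, denominator):
    """Return numerator / denominator, or None when the denominator is zero."""
    return numerator / denominator if denominator else None


def classification_metrics(tp, tn, fp, fn):
    """Compute the four measures from confusion-matrix counts.

    Assumes binary classification with "fall" as the positive class.
    Values are returned as fractions in [0, 1], not percentages.
    """
    return {
        "accuracy": safe_ratio(tp + tn, tp + tn + fp + fn),
        "sensitivity": safe_ratio(tp, tp + fn),  # recall on fall clips
        "specificity": safe_ratio(tn, tn + fp),  # recall on non-fall clips
        "precision": safe_ratio(tp, tp + fp),
    }


# Hypothetical counts, chosen only to illustrate the arithmetic.
metrics = classification_metrics(tp=90, tn=80, fp=20, fn=10)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```

Sensitivity and specificity are reported separately because a missed fall (FN) and a false alarm (FP) have different costs in a monitoring system, and accuracy alone can hide an imbalance between the two.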

| Scenario | Data Type | Labeling Type | Labeling As | No. of Data |
|---|---|---|---|---|
| Human Segmentation | Image | Pixel as class | Person | 355 |
| | | | Background | |
| Fall Action | Video | Video as class | Fall | 120 |
| | | | Not a Fall | 57 |

| Model | Global Accuracy | Mean Sensitivity | Mean IoU | Weighted IoU |
|---|---|---|---|---|
| Human Segmentation | 98.46% | 92.78% | 83.98% | 97.24% |
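The segmentation measures in the table above are usually computed from a pixel-level confusion matrix. The Python sketch below uses the common definitions (global accuracy over all pixels, per-class sensitivity and IoU averaged over classes, and IoU weighted by each class's pixel count). The source does not state which definitions were used, so treat these as an assumption; the function name and the two-class matrix are hypothetical.

```python
def segmentation_metrics(conf):
    """Compute segmentation measures from a square confusion matrix.

    conf[i][j] is the number of pixels of true class i predicted as class j.
    Assumes every class has at least one ground-truth pixel.
    """
    n = len(conf)
    total = sum(sum(row) for row in conf)
    true_count = [sum(conf[i]) for i in range(n)]
    pred_count = [sum(conf[r][c] for r in range(n)) for c in range(n)]
    correct = [conf[i][i] for i in range(n)]

    sensitivity = [correct[i] / true_count[i] for i in range(n)]
    # IoU = intersection / union = TP / (true + predicted - TP)
    iou = [correct[i] / (true_count[i] + pred_count[i] - correct[i])
           for i in range(n)]

    return {
        "global_accuracy": sum(correct) / total,
        "mean_sensitivity": sum(sensitivity) / n,
        "mean_iou": sum(iou) / n,
        "weighted_iou": sum(iou[i] * true_count[i] for i in range(n)) / total,
    }


# Hypothetical 2-class matrix: row/column 0 = person, 1 = background.
example = [[80, 20],
           [10, 890]]
seg = segmentation_metrics(example)
for name, value in seg.items():
    print(f"{name}: {value:.4f}")
```

When background pixels dominate, global accuracy and weighted IoU stay high even if the person class is segmented poorly, which is why mean IoU is typically the lowest of the four values.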

| Model | Accuracy | Sensitivity | Specificity | Precision |
|---|---|---|---|---|
| Proposed Method | 99.44% | 99.12% | 99.12% | 99.59% |

| Model | Year | Accuracy | Sensitivity | Specificity | Precision |
|---|---|---|---|---|---|
| Chamle et al. [87] | 2016 | 79.30% | 84.30% | 73.07% | 79.40% |
| Núñez et al. [74] | 2017 | 97.00% | 99.00% | 97.00% | - |
| Alaoui et al. [88] | 2017 | 90.90% | 94.56% | 81.08% | 90.84% |
| Poonsri et al. [89] | 2018 | 86.21% | 93.18% | 64.29% | 91.11% |
| Carneiro et al. [76] | 2019 | 98.43% | 99.90% | 98.32% | - |
| Leite et al. [61] | 2020 | 99.00% | 99.00% | - | - |
| Zou et al. [62] | 2021 | 97.23% | 100.00% | 97.04% | - |
| Vishnu et al. [80] | 2021 | 78.50% | 98.90% | - | 99.10% |
| Youssfi et al. [90] | 2021 | 93.67% | 100.00% | 87.00% | 83.62% |
| Our Previous Work [82] | 2022 | 99.03% | 99.00% | 99.68% | 99.00% |
| Our Proposed Method | 2023 | 99.44% | 99.12% | 99.12% | 99.59% |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Alanazi, T.; Babutain, K.; Muhammad, G. A Robust and Automated Vision-Based Human Fall Detection System Using 3D Multi-Stream CNNs with an Image Fusion Technique. Appl. Sci. 2023, 13, 6916. https://doi.org/10.3390/app13126916