Research on Digital Meter Reading Method of Inspection Robot Based on Deep Learning

Abstract

1. Introduction

2. Image Blur Detection and Restoration

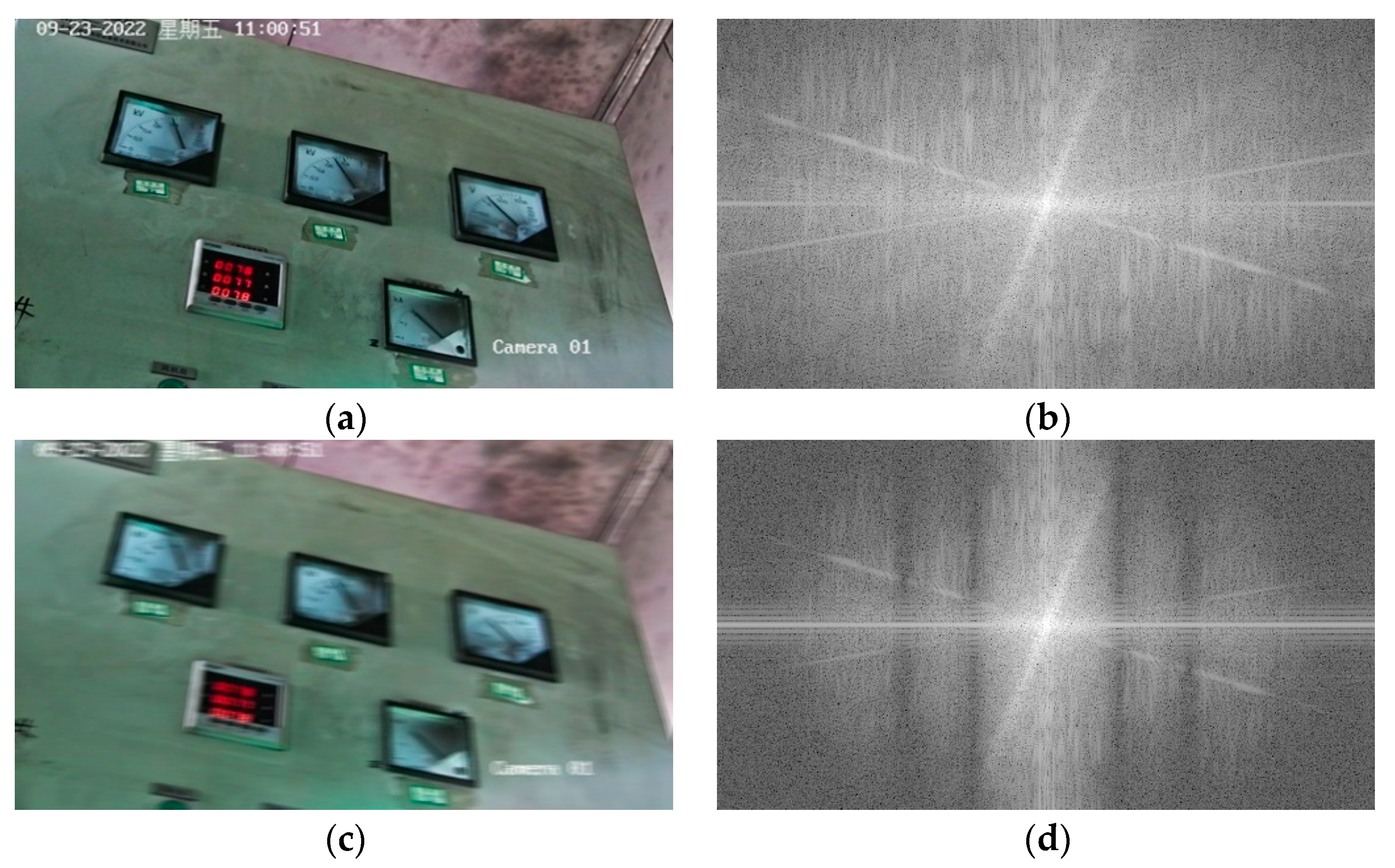

2.1. Image Blur Detection Based on FFT

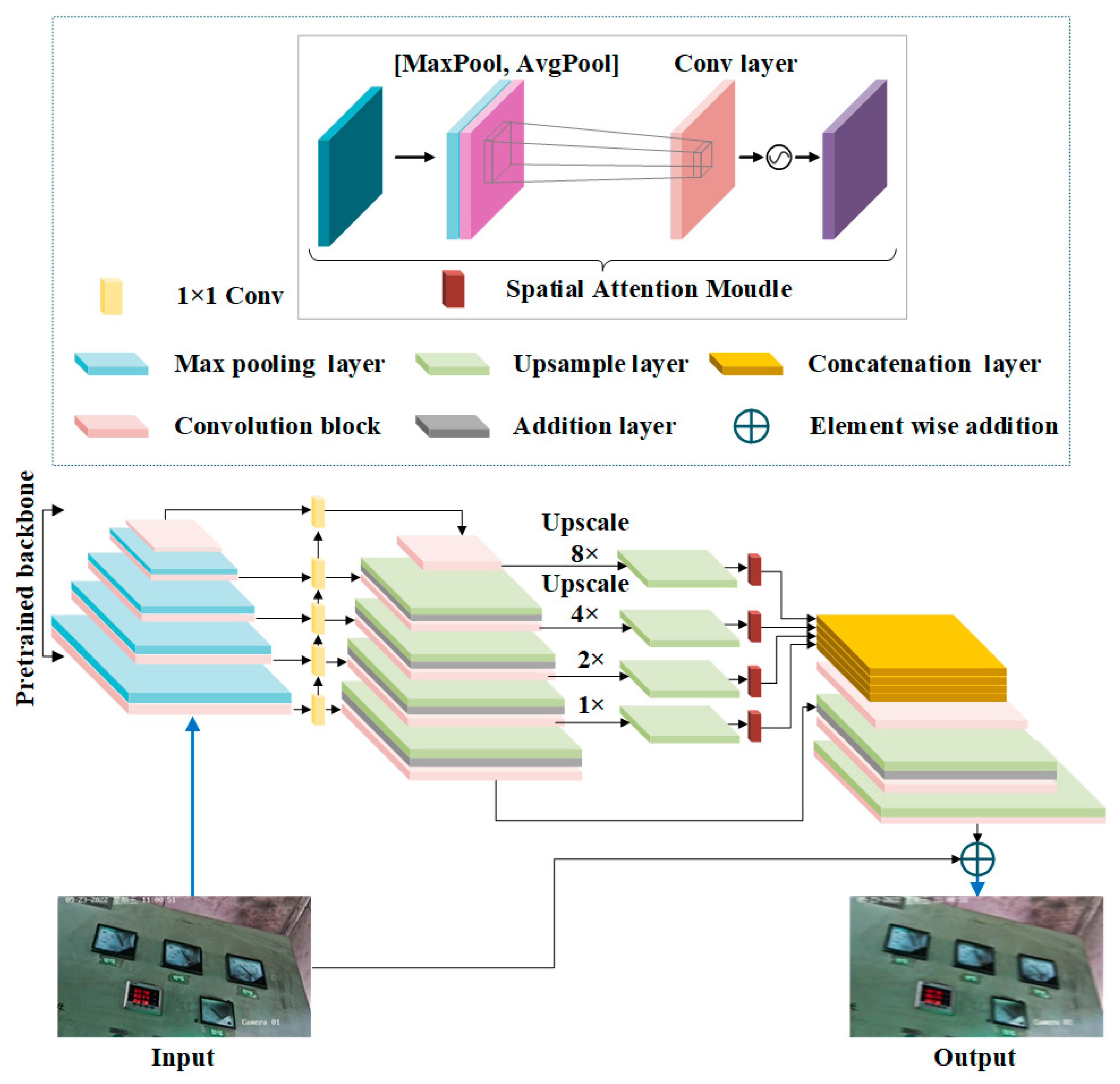
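Frequency-domain blur detection usually rests on one observation: a sharp image keeps substantial energy at high spatial frequencies, and motion or defocus blur attenuates it. The following is a minimal sketch of that general idea, not necessarily the authors' exact criterion. It zeroes a square of low frequencies around the centre of the shifted spectrum, transforms back, and takes the mean log-magnitude of what is left. The function names and the `radius` and `threshold` values are hypothetical and would need tuning on the robot's own images.

```python
import numpy as np


def fft_blur_score(gray: np.ndarray, radius: int = 30) -> float:
    """Mean log-magnitude of the high-frequency content (higher means sharper)."""
    h, w = gray.shape
    cy, cx = h // 2, w // 2
    # 2-D FFT with the zero-frequency component shifted to the centre.
    spectrum = np.fft.fftshift(np.fft.fft2(gray.astype(np.float64)))
    # Zero a (2*radius) x (2*radius) block of low frequencies around the centre.
    spectrum[cy - radius:cy + radius, cx - radius:cx + radius] = 0
    # Inverse transform: only edges and fine detail survive.
    detail = np.fft.ifft2(np.fft.ifftshift(spectrum))
    # Small epsilon avoids log(0) on perfectly flat regions.
    magnitude = 20.0 * np.log(np.abs(detail) + 1e-8)
    return float(np.mean(magnitude))


def is_blurry(gray: np.ndarray, radius: int = 30, threshold: float = 10.0) -> bool:
    """Hypothetical decision rule: flag the frame as blurred below the threshold."""
    return fft_blur_score(gray, radius) < threshold


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    sharp = rng.uniform(0, 255, size=(128, 128))
    # Simulate horizontal motion blur by averaging 15 shifted copies.
    blurred = np.mean([np.roll(sharp, s, axis=1) for s in range(15)], axis=0)
    print(fft_blur_score(sharp), fft_blur_score(blurred))
```

Because the score is a mean of log-magnitudes, a blurred frame scores lower than its sharp counterpart, so a single threshold can gate which frames are passed to restoration.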

2.2. Deep-Learning-Based Image Motion Blur Restoration

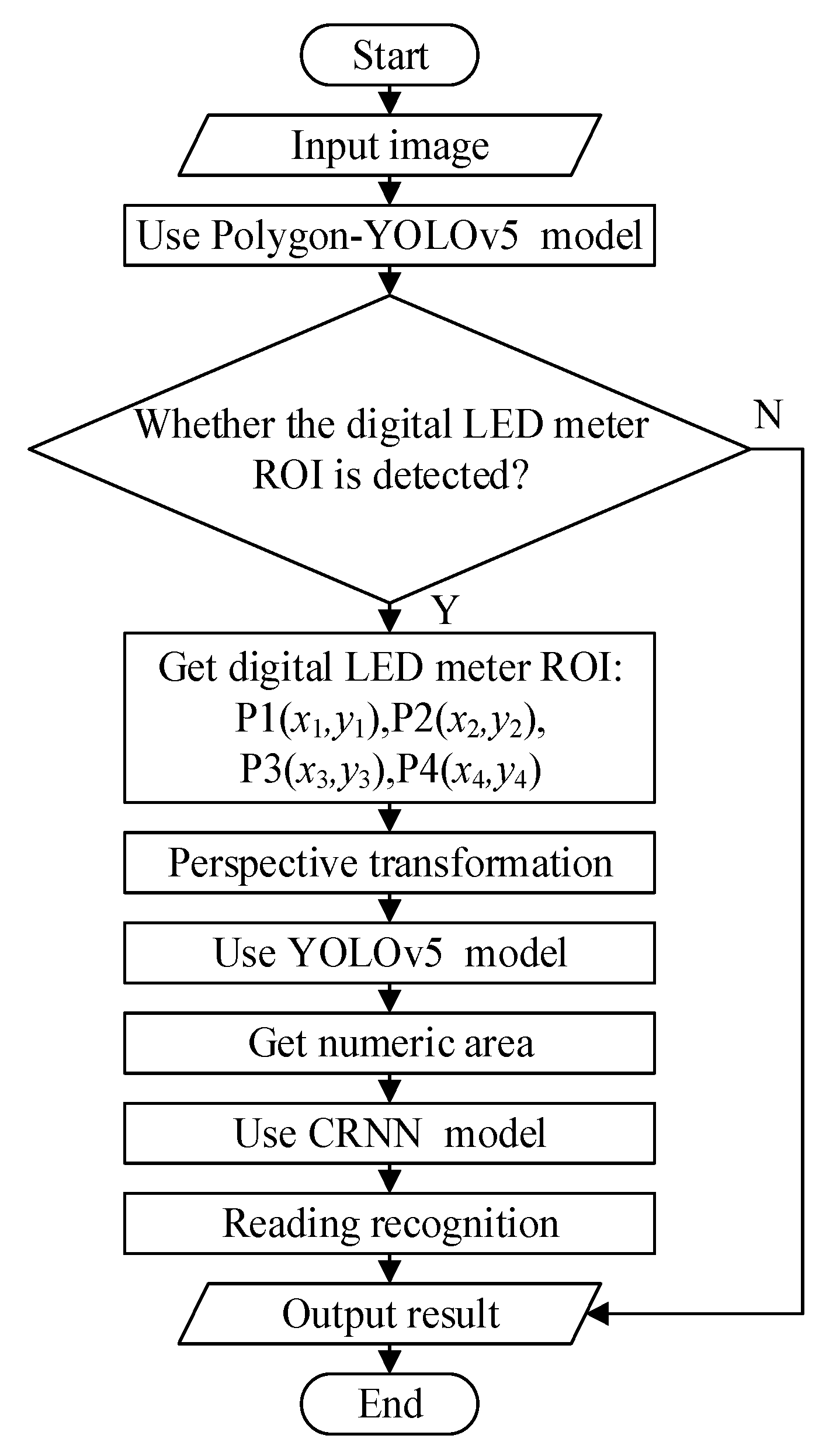
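Learning-based deblurring networks such as DeblurGAN are trained on pairs of sharp and blurred images. The blur is commonly described by the degradation model `I_B = k * I_S + N`: a blur kernel `k` convolved with the sharp image `I_S`, plus noise `N`. The sketch below synthesises such a pair with a straight-line motion kernel. This is a simplifying assumption for illustration, since DeblurGAN itself generates kernels from random trajectories. The function names and parameters are hypothetical.

```python
import numpy as np


def linear_motion_kernel(length: int, angle_deg: float) -> np.ndarray:
    """Normalised straight-line blur kernel of the given length and direction."""
    size = length if length % 2 == 1 else length + 1  # odd size keeps a centre pixel
    kernel = np.zeros((size, size))
    c = size // 2
    theta = np.deg2rad(angle_deg)
    half = (length - 1) / 2
    # Densely sample points on a segment through the centre and mark their pixels.
    for t in np.linspace(-half, half, 4 * length):
        x = int(round(c + t * np.cos(theta)))
        y = int(round(c - t * np.sin(theta)))  # image rows grow downwards
        kernel[y, x] = 1.0
    return kernel / kernel.sum()


def apply_blur(sharp: np.ndarray, kernel: np.ndarray, noise_std: float = 0.0, rng=None) -> np.ndarray:
    """I_B = k * I_S + N, using circular convolution via the FFT."""
    h, w = sharp.shape
    kh, kw = kernel.shape
    padded = np.zeros((h, w))
    padded[:kh, :kw] = kernel
    # Move the kernel centre to (0, 0) so the blurred image is not shifted.
    padded = np.roll(padded, (-(kh // 2), -(kw // 2)), axis=(0, 1))
    blurred = np.real(np.fft.ifft2(np.fft.fft2(sharp) * np.fft.fft2(padded)))
    if noise_std > 0:
        rng = np.random.default_rng() if rng is None else rng
        blurred = blurred + rng.normal(0.0, noise_std, size=blurred.shape)
    return blurred
```

Because the kernel sums to one, the blur redistributes intensity without changing overall brightness. The restoration network then has to learn the inverse mapping from `I_B` back to `I_S`.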

3. Digital Meter Detection and Identification

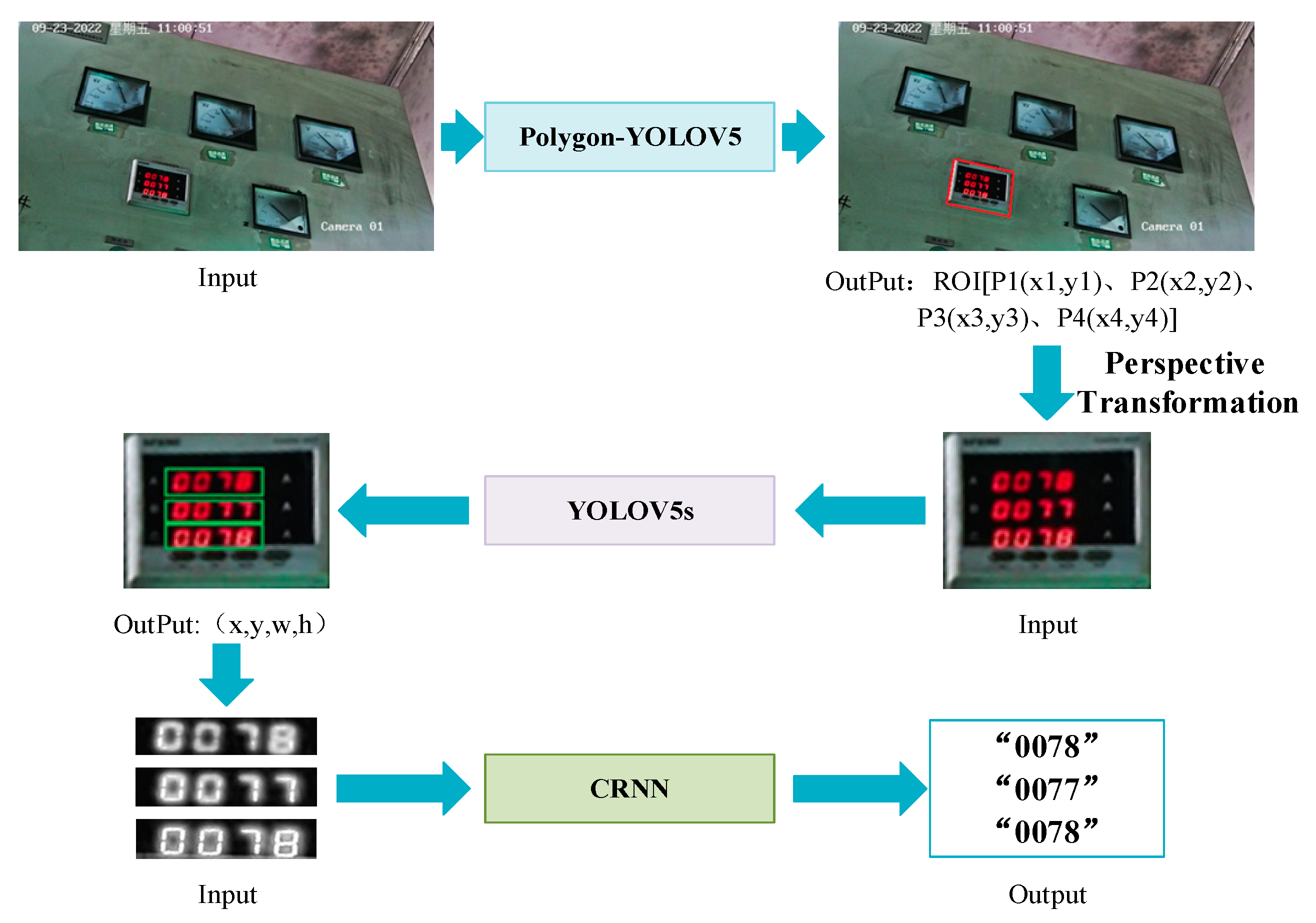

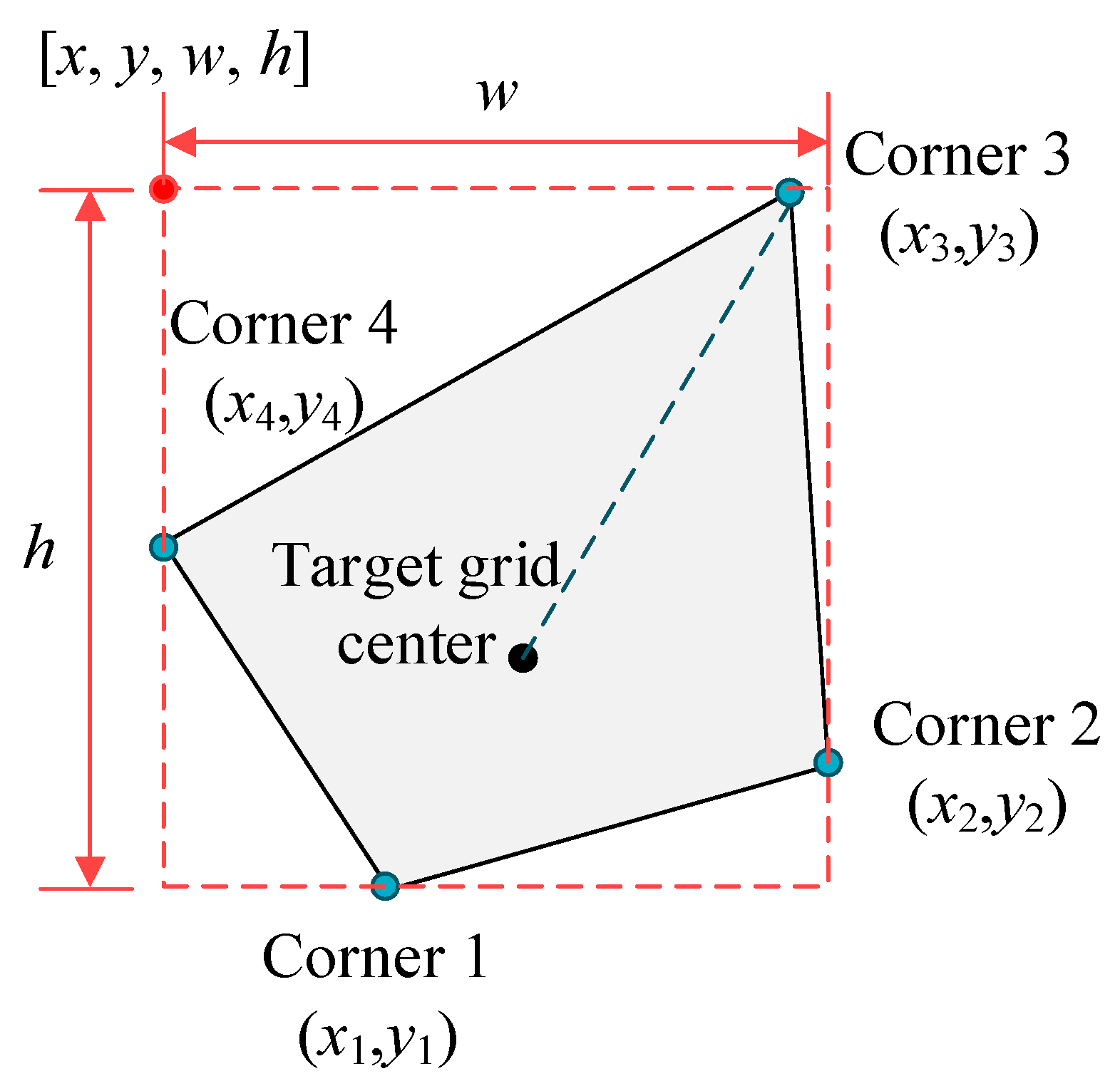

3.1. The Model of Polygon-YOLOv5
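Polygon-YOLOv5 (Lee, 2022, listed in the references) predicts a quadrilateral with four free vertices rather than an axis-aligned box. This lets a tilted meter face be outlined tightly. Before such a quadrilateral is rectified, its vertices are typically put into a fixed order. The minimal sketch below assumes the detector returns four `(x, y)` vertices in arbitrary order and applies the common sum/difference heuristic. It is not taken from the paper, and it becomes unreliable for quadrilaterals rotated close to 45°. The helper names are hypothetical.

```python
import numpy as np


def order_quad(points) -> np.ndarray:
    """Return the four vertices as top-left, top-right, bottom-right, bottom-left."""
    pts = np.asarray(points, dtype=np.float64).reshape(4, 2)
    s = pts.sum(axis=1)        # x + y: smallest at top-left, largest at bottom-right
    d = pts[:, 1] - pts[:, 0]  # y - x: smallest at top-right, largest at bottom-left
    return np.array([pts[np.argmin(s)], pts[np.argmin(d)],
                     pts[np.argmax(s)], pts[np.argmax(d)]])


def quad_area(quad) -> float:
    """Shoelace area of an ordered quadrilateral; near zero means a degenerate detection."""
    x, y = np.asarray(quad, dtype=np.float64).T
    return float(0.5 * abs(np.dot(x, np.roll(y, -1)) - np.dot(y, np.roll(x, -1))))
```

For example, `order_quad([(100, 20), (10, 10), (12, 60), (105, 70)])` returns the vertices in the order (10, 10), (100, 20), (105, 70), (12, 60).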

3.2. Perspective Transformation

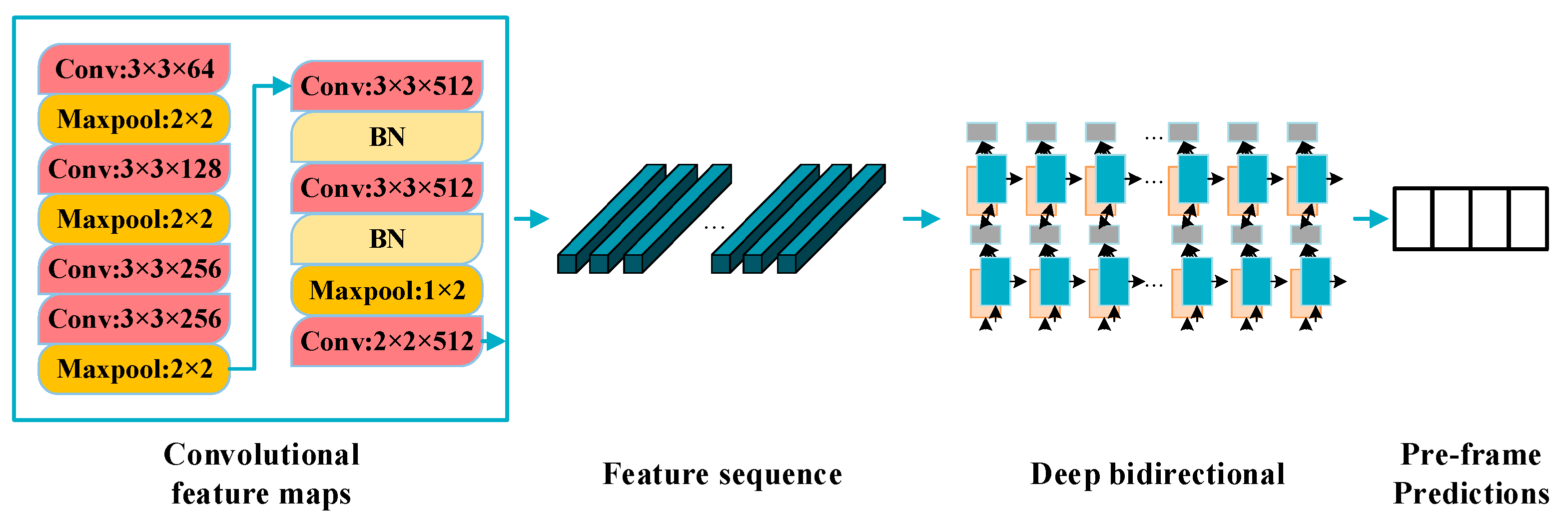

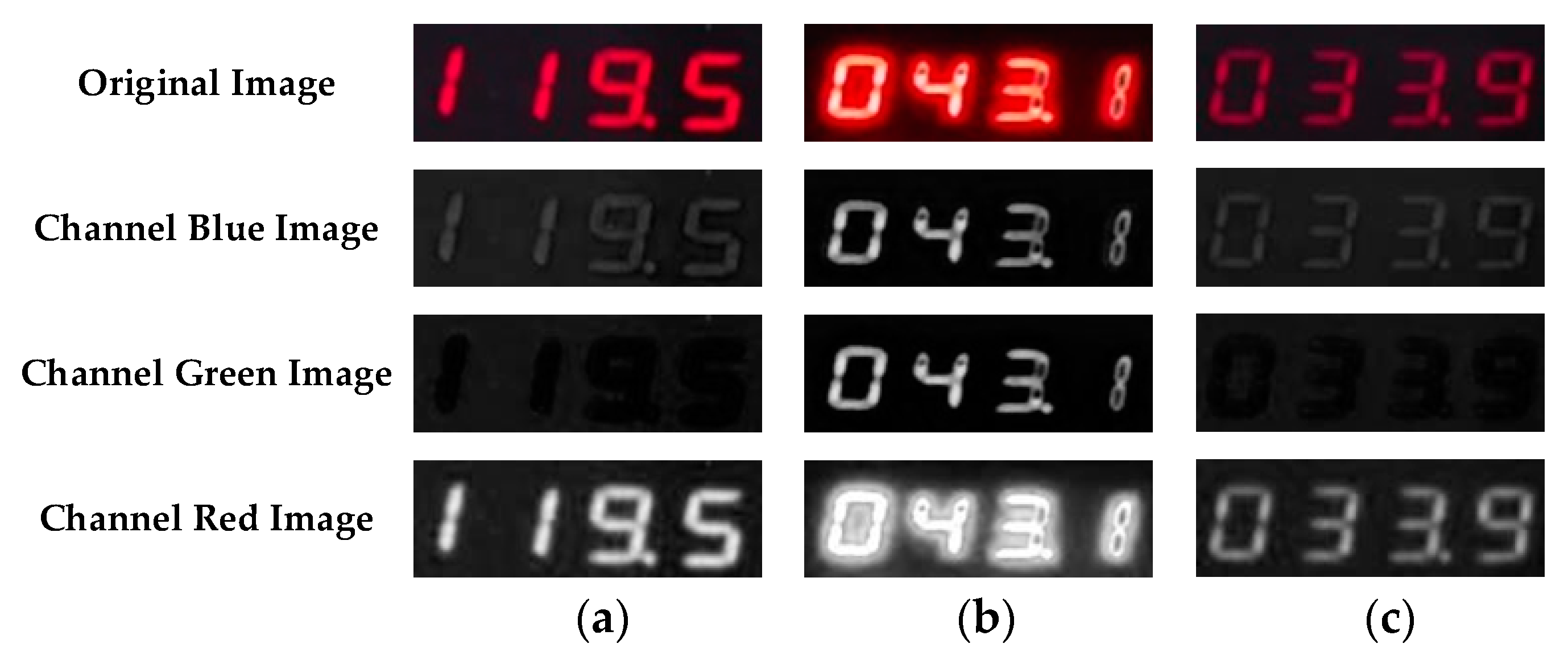
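Once the four vertices of the meter face are known, a perspective (homography) transform maps them onto an upright rectangle so that the digits are no longer skewed. In practice OpenCV's `cv2.getPerspectiveTransform` and `cv2.warpPerspective` perform this step. The NumPy sketch below spells out the same computation. It assumes vertices ordered top-left, top-right, bottom-right, bottom-left and uses nearest-neighbour sampling for brevity. The function names and output size are illustrative, not the authors' code.

```python
import numpy as np


def homography_from_quad(src, dst) -> np.ndarray:
    """3x3 matrix H (with H[2, 2] = 1) mapping four src points onto four dst points."""
    A, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        # u = (h0 x + h1 y + h2) / (h6 x + h7 y + 1), and likewise for v.
        A.append([x, y, 1, 0, 0, 0, -x * u, -y * u])
        A.append([0, 0, 0, x, y, 1, -x * v, -y * v])
        b.extend([u, v])
    h = np.linalg.solve(np.asarray(A, dtype=np.float64), np.asarray(b, dtype=np.float64))
    return np.append(h, 1.0).reshape(3, 3)


def apply_homography(H: np.ndarray, points) -> np.ndarray:
    """Map (N, 2) points through H, including the perspective division."""
    pts = np.asarray(points, dtype=np.float64)
    homog = np.column_stack([pts, np.ones(len(pts))]) @ H.T
    return homog[:, :2] / homog[:, 2:3]


def warp_quad_to_rect(image: np.ndarray, quad, width: int, height: int) -> np.ndarray:
    """Rectify the region inside `quad` (TL, TR, BR, BL) into a width x height image."""
    dst = [(0, 0), (width - 1, 0), (width - 1, height - 1), (0, height - 1)]
    # Inverse mapping: for every output pixel, find where it comes from in the input.
    H_inv = homography_from_quad(dst, quad)
    ys, xs = np.mgrid[0:height, 0:width]
    src = apply_homography(H_inv, np.column_stack([xs.ravel(), ys.ravel()]))
    sx = np.clip(np.rint(src[:, 0]).astype(int), 0, image.shape[1] - 1)
    sy = np.clip(np.rint(src[:, 1]).astype(int), 0, image.shape[0] - 1)
    return image[sy, sx].reshape(height, width, *image.shape[2:])
```

Inverse mapping is used so that every output pixel receives exactly one value. A forward mapping of input pixels would leave holes in the rectified image.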

3.3. Digit LED Recognition of Meter by CRNN
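CRNN (Shi et al.) stacks a convolutional feature extractor, a recurrent sequence model and a CTC (connectionist temporal classification) transcription layer. At inference it therefore emits one class distribution per horizontal time step. The simplest way to turn that output into a meter reading is greedy (best-path) CTC decoding: take the most likely class at each step, merge consecutive repeats, and drop the blank symbol. The sketch below assumes a hypothetical character set of the ten digits plus a decimal point and a minus sign, with class 0 reserved for the blank. The label set actually used for the meters may differ.

```python
import numpy as np

# Hypothetical label set for LED meters; class 0 is the CTC blank,
# so class i (i >= 1) corresponds to ALPHABET[i - 1].
ALPHABET = "0123456789.-"
BLANK = 0


def ctc_greedy_decode(probs: np.ndarray, alphabet: str = ALPHABET) -> str:
    """Best-path CTC decoding of a (time_steps, num_classes) probability matrix."""
    best_path = np.argmax(probs, axis=1)  # most likely class at every time step
    chars = []
    prev = BLANK
    for idx in best_path:
        # Emit a character only if it is not blank and not a repeat of the previous step.
        if idx != BLANK and idx != prev:
            chars.append(alphabet[idx - 1])
        prev = idx
    return "".join(chars)
```

For example, frames whose best classes spell `2, 2, blank, 2, ., ., 5, blank` decode to `"22.5"`. The blank between the repeated 2s is what allows a genuinely doubled digit to survive the merging step.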

4. Experiments and Discussion

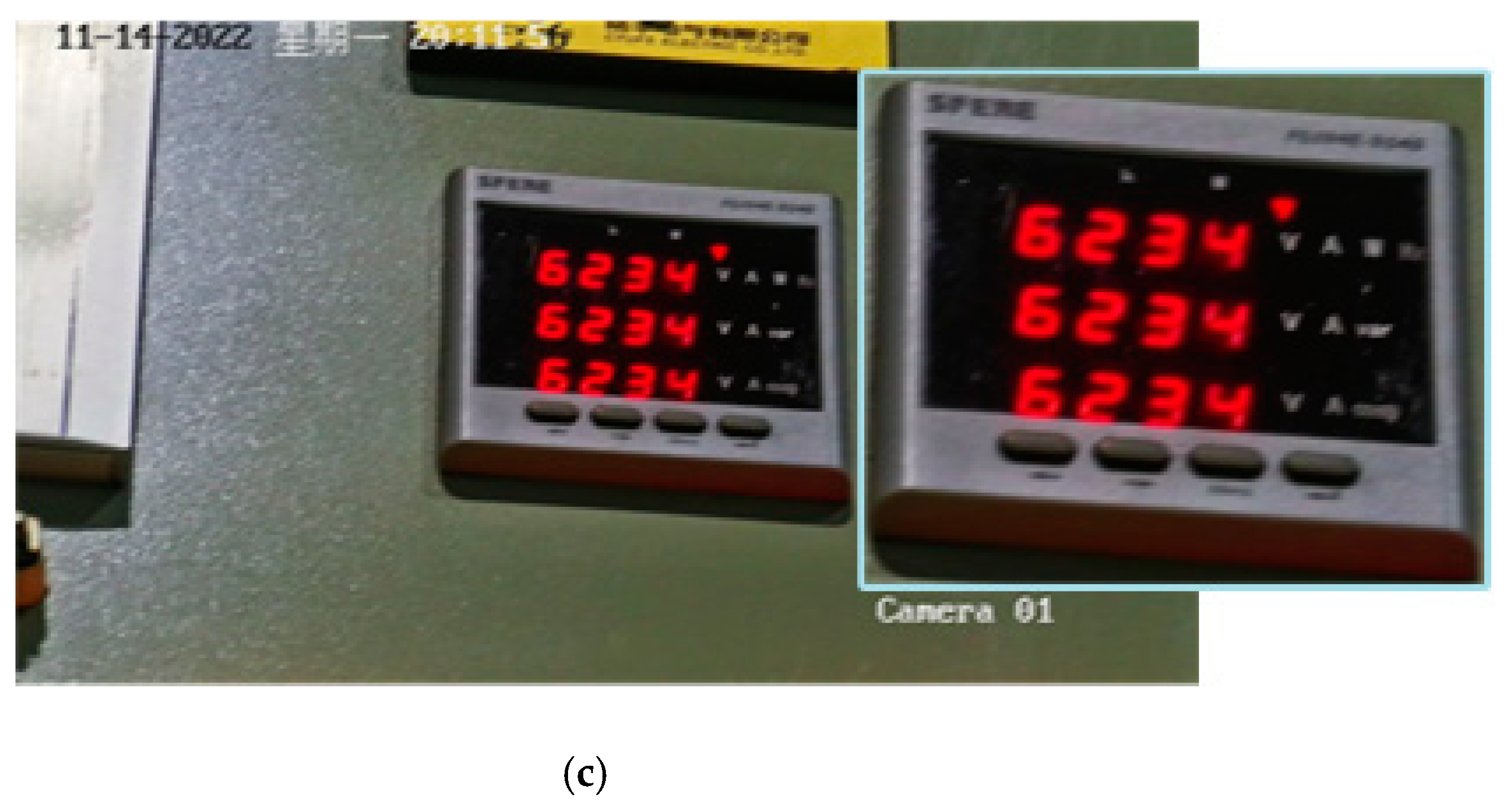

4.1. Motion Blur Restoration Experiment

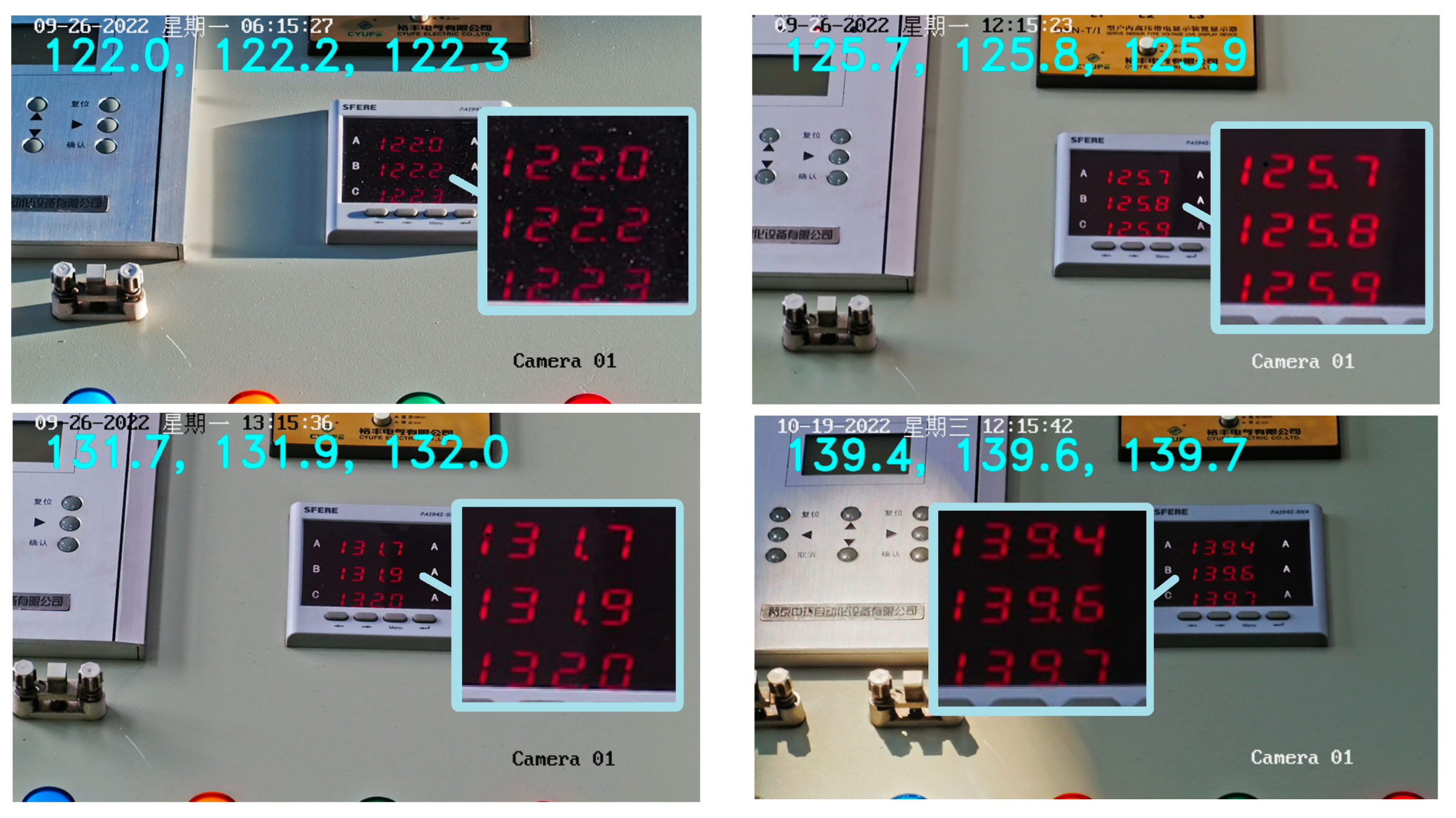
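The restoration results compare DeblurGAN with the improved DeblurGAN in terms of PSNR and SSIM. PSNR follows directly from the mean squared error between the sharp reference and the restored image, PSNR = 10·log10(MAX² / MSE), with MAX = 255 for 8-bit images. A minimal NumPy version is sketched below. SSIM is computed over local windows, so a library implementation such as scikit-image's `skimage.metrics.structural_similarity` is the safer choice there.

```python
import numpy as np


def psnr(reference, restored, max_value: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB between a reference and a restored image."""
    ref = np.asarray(reference, dtype=np.float64)
    out = np.asarray(restored, dtype=np.float64)
    mse = np.mean((ref - out) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(max_value ** 2 / mse))
```

Because PSNR is logarithmic, a gain of about 1 dB corresponds to roughly a 21% reduction in mean squared error.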

4.2. Reading Experiment of LED Digit Meter

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Raissi, M.; Perdikaris, P.; Karniadakis, G.E. Physics-informed neural networks: A deep learning framework for solving forward and inverse problems involving nonlinear partial differential equations. J. Comput. Phys. 2019, 378, 686–707.

- Misyris, G.S.; Venzke, A.; Chatzivasileiadis, S. Physics-informed neural networks for power systems. In Proceedings of the 2020 IEEE Power & Energy Society General Meeting (PESGM), Montreal, QC, Canada, 2–6 August 2020; pp. 1–5.

- Ma, X.Z.; Ma, Y.; Cunha, P.; Liu, Q.; Kudtarkar, K.; Xu, D.; Wang, J.; Chen, Y.; Wong, Z.J.; Liu, M.; et al. Strategical Deep Learning for Photonic Bound States in the Continuum. Laser Photonics Rev. 2022, 16, 2100658.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 779–788.

- Yao, J.; Qi, J.M.; Zhang, J.; Shao, H.M.; Yang, J.; Li, X. A real-time detection algorithm for Kiwifruit defects based on YOLOv5. Electronics 2021, 10, 1711.

- Li, Z.; Lu, K.; Zhang, Y.; Li, Z.; Liu, J.B. Research on Energy Efficiency Management of Forklift Based on Improved YOLOv5 Algorithm. J. Math. 2021, 2021, 5808221.

- Xu, B.; Cui, X.; Ji, W.; Yuan, H.; Wang, J.C. Apple Grading Method Design and Implementation for Automatic Grader Based on Improved YOLOv5. Agriculture 2023, 13, 124.

- Ji, W.; Peng, J.Q.; Xu, B.; Zhang, T. Real-time detection of underwater river crab based on multi-scale pyramid fusion image enhancement and MobileCenterNet model. Comput. Electron. Agric. 2023, 204, 107522.

- Xiang, L.; Wen, H.; Zhao, M. Pill Box Text Identification Using DBNet-CRNN. Int. J. Environ. Res. Public Health 2023, 20, 3881.

- Shi, B.G.; Bai, X.; Yao, C. An end-to-end trainable neural network for image-based sequence recognition and its application to scene text recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 2298–2304.

- Tian, E.; Zhang, H.; Hanafiah, M.M. A pointer location algorithm for computer vision based automatic reading recognition of pointer gauges. Open Phys. 2019, 17, 86–92.

- Zhang, H.F.; Chen, Q.Q.; Lei, L.T. A YOLOv3-Based Industrial Instrument Classification and Reading Recognition Method. Mob. Inf. Syst. 2022, 2022, 7817309.

- Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

- Duan, K.; Keerthi, S.S.; Poo, A.N. Evaluation of simple performance measures for tuning SVM hyperparameters. Neurocomputing 2003, 51, 41–59.

- Hou, L.Q.; Sun, X.P.; Wang, S. A coarse-fine reading recognition method for pointer meters based on CNN and computer vision. Eng. Res. Express 2022, 4, 035046.

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2961–2969.

- Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 13–16 December 2015; pp. 1440–1448.

- Deng, G.H.; Huang, T.B.; Lin, B.H.; Liu, H.K.; Yang, R.; Jing, W.L. Automatic Meter Reading from UAV Inspection Photos in the Substation by Combining YOLOv5s and DeeplabV3+. Sensors 2022, 22, 7090.

- Li, L.L.; Wang, Z.F.; Zhang, T.T. GBH-YOLOv5: Ghost convolution with BottleneckCSP and tiny target prediction head incorporating YOLOv5 for PV panel defect detection. Electronics 2023, 12, 561.

- Liu, J.D.; Zhang, Z.; Shan, C.F.; Tan, T.N. Kinematic skeleton graph augmented network for human parsing. Neurocomputing 2020, 413, 457–470.

- Martinelli, F.; Mercaldo, F.; Santone, A. Water Meter Reading for Smart Grid Monitoring. Sensors 2023, 23, 75.

- Bi, C.; Hao, X.; Li, J.F.; Fang, J.G. Experimental study on focusing evaluation functions of images of film cooling hole. J. Astronaut. Metrol. Meas. 2019, 39, 77.

- Fan, Y.Y.; Shen, X.H.; Sang, Y.J. No reference image sharpness assessment based on contrast sensitivity. Opt. Precis. Eng. 2011, 19, 2485–2493.

- Yang, H.L.; Huang, P.H.; Lai, S.H. A novel gradient attenuation Richardson–Lucy algorithm for image motion deblurring. Signal Process. 2014, 103, 399–414.

- Hiller, A.D.; Chin, R.T. Iterative Wiener filters for image restoration. In Proceedings of the International Conference on Acoustics, Speech, and Signal Processing, Albuquerque, NM, USA, 3–6 April 1990; pp. 1901–1904.

- Jain, C.; Kumar, A.; Chugh, A.; Charaya, N. Efficient image deblurring application using combination of blind deconvolution method and blur parameters estimation method. ECS Trans. 2022, 107, 3695.

- Lee, X. Polygon-YOLOv5. 2022. Available online: https://github.com/XinzeLee/PolygonObjectDetection (accessed on 23 November 2022).

- Kupyn, O.; Martyniuk, T.; Wu, J.; Wang, Z. DeblurGAN-v2: Deblurring (orders-of-magnitude) faster and better. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 8878–8887.

- Kupyn, O.; Budzan, V.; Mykhailych, M.; Mishkin, D.; Matas, J. DeblurGAN: Blind motion deblurring using conditional adversarial networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 8183–8192.

- Ling, J.; Xue, H.; Song, L.; Xie, R.; Gu, X. Region-aware adaptive instance normalization for image harmonization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 19–25 June 2021; pp. 9361–9370.

- Ramachandran, P.; Zoph, B.; Le, Q.V. Searching for activation functions. arXiv 2017, arXiv:1710.05941.

- Benjdira, B.; Khursheed, T.; Koubaa, A.; Ammar, A.; Ouni, K. Car detection using unmanned aerial vehicles: Comparison between Faster R-CNN and YOLOv3. In Proceedings of the 2019 1st International Conference on Unmanned Vehicle Systems-Oman (UVS), Muscat, Oman, 5–7 February 2019; pp. 1–6.

- Tian, Y.; Yang, G.; Wang, Z.; Wang, H.; Li, E.; Liang, Z. Apple detection during different growth stages in orchards using the improved YOLO-V3 model. Comput. Electron. Agric. 2019, 157, 417–426.

| Metric | DeblurGAN | Improved DeblurGAN |
|---|---|---|
| PSNR (dB) | 25.534 | 26.562 |
| SSIM | 0.770 | 0.861 |

| Method | Missing Rate of Meter Region | Error Rate of Meter Region |
|---|---|---|
| SVM | 4.5% | 2.5% |
| YOLOv5s | 3% | 1.5% |
| Our method | 1% | 0% |

| Method | Accuracy of Reading |
|---|---|
| SVM | 79% |
| YOLOv5s | 85% |
| YOLOv5s + minimum enclosing rectangle | 89% |
| Our method | 98% |

| Condition | Accuracy of Reading |
|---|---|
| Without preprocessing | 86.4% |
| With preprocessing | 98.8% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Citation

Lin, W.; Zhao, Z.; Tao, J.; Lian, C.; Zhang, C. Research on Digital Meter Reading Method of Inspection Robot Based on Deep Learning. Appl. Sci. 2023, 13, 7146. https://doi.org/10.3390/app13127146
