Voice Disorder Multi-Class Classification for the Distinction of Parkinson’s Disease and Adductor Spasmodic Dysphonia

Abstract

1. Introduction

2. Materials and Methods

2.1. Dataset

2.2. Features

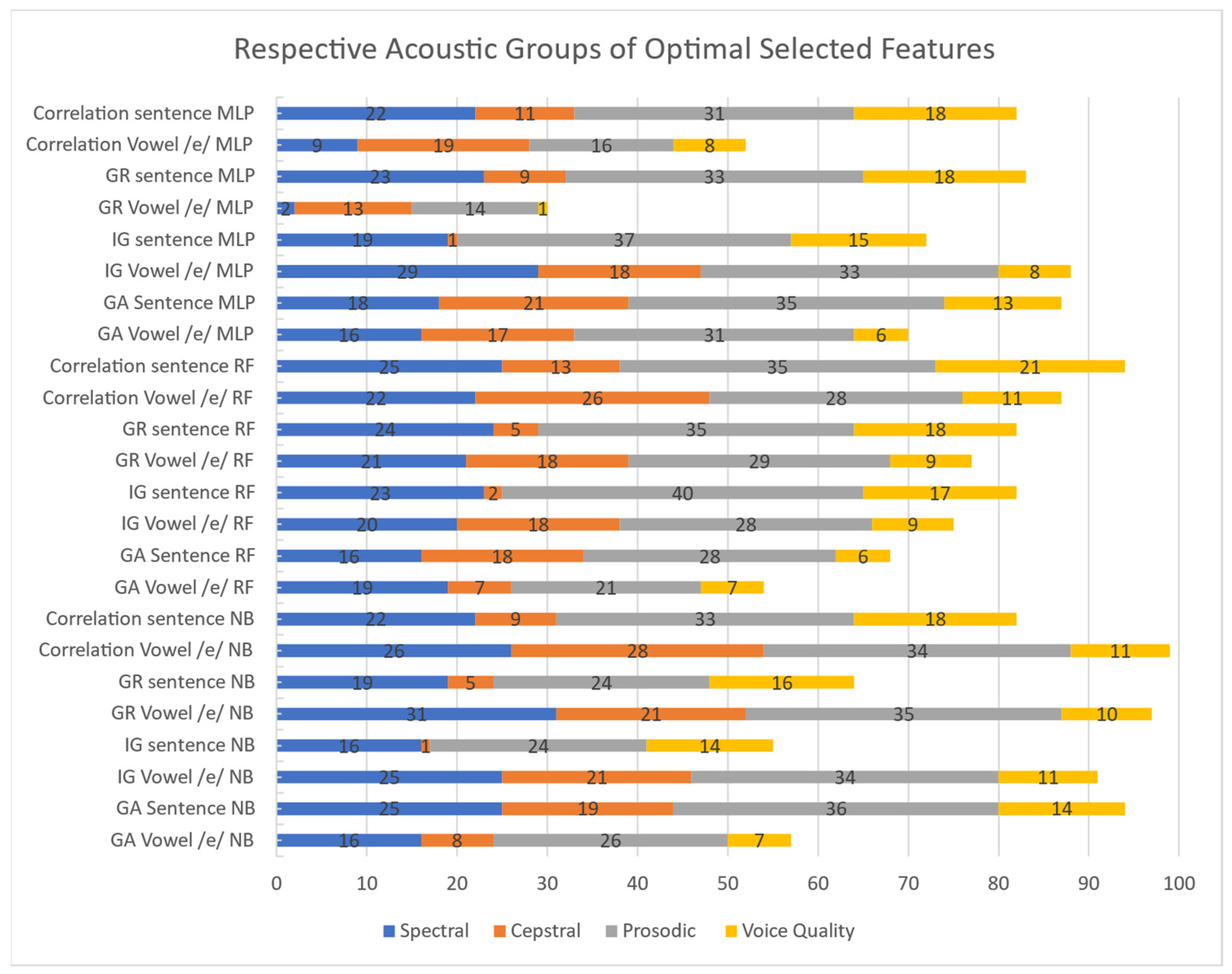

- Correlation: the features were ranked according to the value of their correlation with the class.

- Information Gain (IG): measures the reduction in class entropy, in Shannon’s sense, obtained when the dataset is split according to some criterion/threshold on a given feature. It is computed as the prior entropy of the class minus its entropy conditioned on the split [27,28].

- Gain Ratio (GR): a normalized version of IG, introduced to counter the bias of IG toward features with many distinct values, computed as:

  $$GR(X) = \frac{IG(X)}{-\sum_{t=1}^{n} \frac{N(t)}{N} \log_2 \frac{N(t)}{N}}$$

  where IG(X) is the IG of feature X, which takes n different values, N(t) is the number of occurrences of value t, and N is the total number of instances. The denominator is called the “split information”. Note that, for continuous features, IG and GR are computed by sorting all the measured values and creating a corresponding number of splits, with each value acting as a below/above threshold [29].

- Genetic Algorithm (GA): a genetic algorithm was used as a wrapper-based search, applied to one feature at a time, to identify the best-performing features. A 10-fold cross-validation was employed to reduce data selection bias [30].
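To make the IG and GR criteria above concrete, the following plain-Python sketch computes both for a nominal feature and scans below/above thresholds for a continuous one. It is an illustration under stated assumptions (base-2 logarithms, binary splits of the form `x <= t`), not the toolkit implementation used in the study; the function names are hypothetical.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy (in bits) of a sequence of discrete symbols."""
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())


def info_gain(values, labels):
    """Prior class entropy minus class entropy conditioned on the feature values."""
    n = len(labels)
    conditional = 0.0
    for v in set(values):
        subset = [y for x, y in zip(values, labels) if x == v]
        conditional += len(subset) / n * entropy(subset)
    return entropy(labels) - conditional


def gain_ratio(values, labels):
    """IG divided by the split information, i.e. the entropy of the feature's own value counts."""
    split_info = entropy(values)
    return info_gain(values, labels) / split_info if split_info > 0 else 0.0


def best_threshold(x, labels):
    """Try each observed value t as a below/above threshold (x <= t vs x > t); return (t, IG)."""
    best_t, best_ig = None, -1.0
    for t in sorted(set(x))[:-1]:  # the largest value would leave the 'above' side empty
        side = ["below" if xi <= t else "above" for xi in x]
        ig = info_gain(side, labels)
        if ig > best_ig:
            best_t, best_ig = t, ig
    return best_t, best_ig
```

For instance, a feature with four distinct values that perfectly separates two balanced classes has IG = 1 bit but split information 2 bits, so its GR is 0.5; a two-valued feature with the same separation keeps GR = 1. This is the penalty on many-valued features that GR is meant to introduce.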

2.3. Classifiers

- Naïve Bayes (NB).

- Random Forest (RF), with 100 iterations (trees), each trained on a bag drawn by sampling with replacement and as large as the original training set (bag size 100%).

- Multi-layer Perceptron (MLP), i.e., a fully connected artificial neural network with one hidden layer whose number of neurons equals (number of features + number of classes)/2, trained with a learning rate of 0.3 and a momentum of 0.2.
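The RF bagging and MLP sizing rules listed above can be sketched as follows. This is an illustration, not the toolkit code used in the study: the helper names are hypothetical, and rounding the hidden-layer width down to an integer is an assumption that the text does not state.

```python
import random


def bootstrap_bag(n_instances, bag_size_percent=100, rng=None):
    """Draw training-set indices with replacement; a 100% bag has as many draws as instances."""
    rng = rng or random.Random()
    size = n_instances * bag_size_percent // 100
    return [rng.randrange(n_instances) for _ in range(size)]


def out_of_bag(n_instances, bag):
    """Indices never drawn into the bag (on average about 36.8% of them for a 100% bag)."""
    drawn = set(bag)
    return [i for i in range(n_instances) if i not in drawn]


def mlp_hidden_units(n_features, n_classes):
    """Width of the single hidden layer: (features + classes) / 2, rounded down (assumed)."""
    return (n_features + n_classes) // 2


# Usage with hypothetical sizes: 100 training instances, 13 features, 3 classes.
bag = bootstrap_bag(100, rng=random.Random(42))
oob = out_of_bag(100, bag)
hidden = mlp_hidden_units(13, 3)  # (13 + 3) // 2 = 8
```

Because each tree sees a different bootstrap bag, the roughly one third of instances left out of a given bag can serve as an out-of-bag estimate for that tree.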

2.4. Statistics

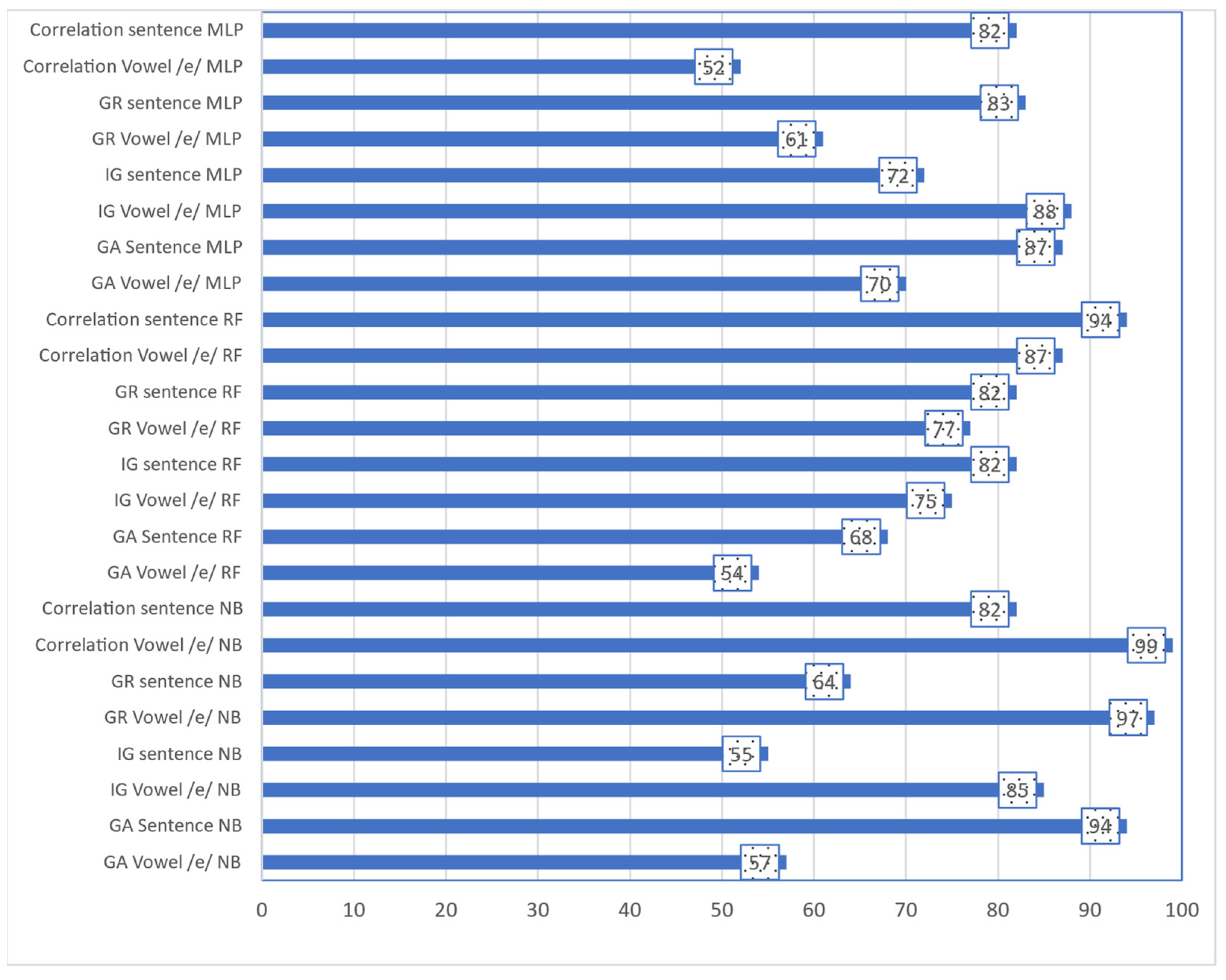

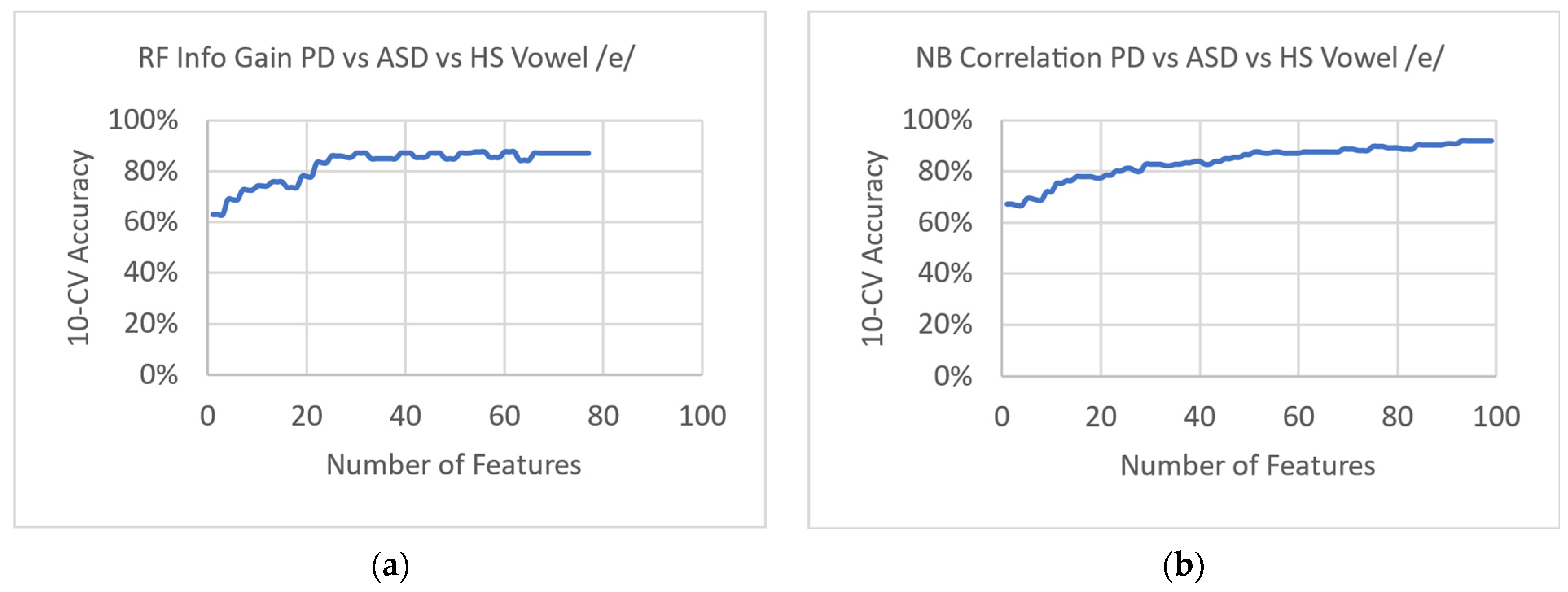

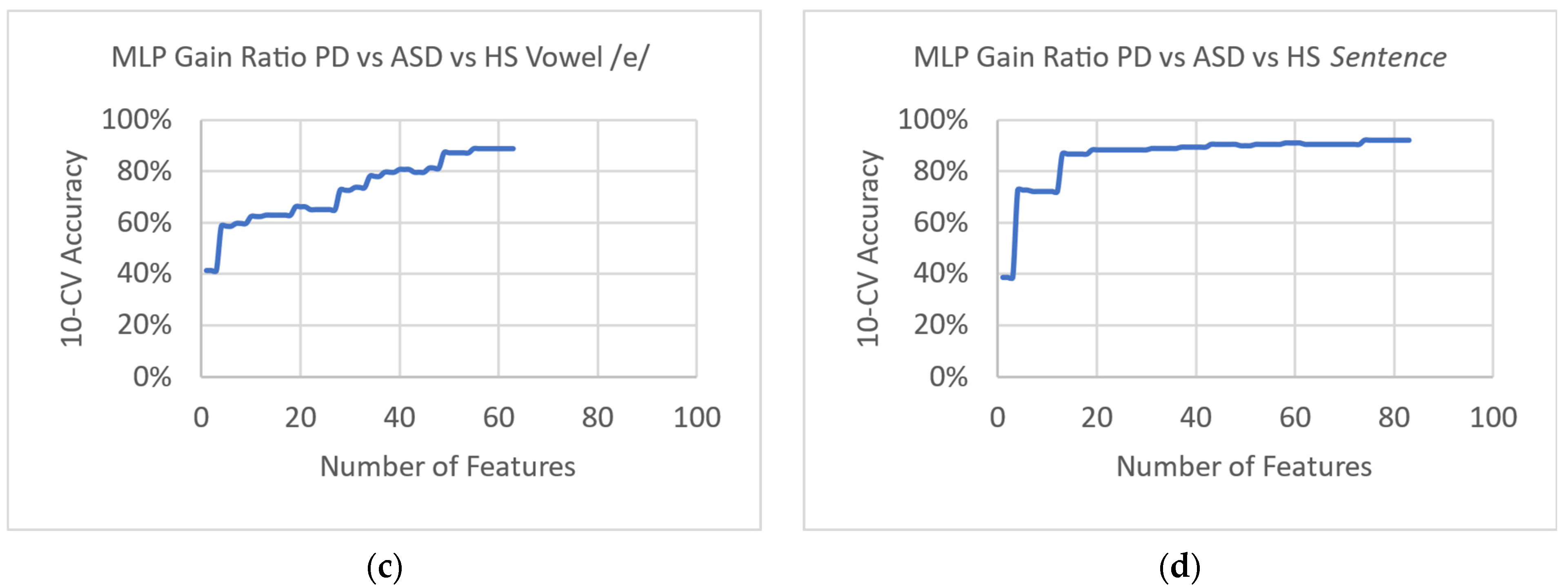

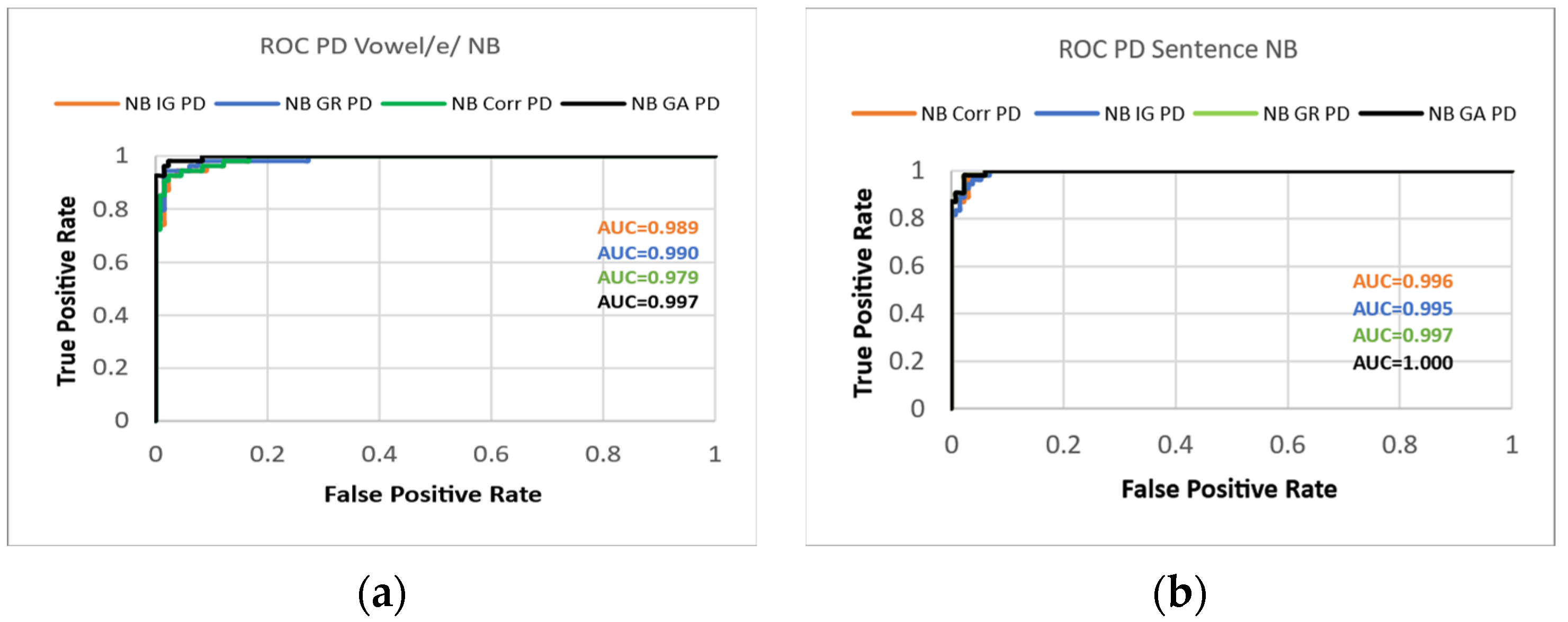

3. Results

4. Discussion

4.1. Literature Review

| Study | Database | Pathology | Vocal Tasks | Feature Domains | Feature Selection | Classifier | Accuracy |
|---|---|---|---|---|---|---|---|
| Mekyska et al. [39] | MEEI, PdA, PARCZ | PD, ASD, conversion dysphonia, erythema, nodules, polyps, oedemas, carcinomas | /a/ | Phonation, tongue movement, speech quality, spectrum, wavelet, EMD, non-linear dynamics | Mann–Whitney U test | SVM, RF | 100% |
| Barche et al. [40] | SVD | Dysphonia (various), laryngeal nerve palsy, Laryngitis and Leukoplakia | /a/, /i/, /u/ | eGeMAPS, MFCC, PLP, Glottal, Intonation, MFFC | N/A | SVM | 85.20% |
| Verde et al. [41] | SVD | 71 Pathologies | /a/ | F0, Jitter, Shimmer, HNR, MFCC | Corr., IG | SVM, DT, BC, LMT, IBLA | 85.77% |
| Alves et al. [42] | “Hospital das Clinicas” | Reinke’s Edema, vocal nodules, neurologic diseases | /a/ | MFCC | Statistics | SVM, KNN | 100% |
| Al-Dhief et al. [43] | SVD | 71 Pathologies | /a/ | MFCC | N/A | OSELM | 85% |
| Gupta [45] | FEMH | Vocal nodules, polyps, cysts, glottis neoplasm, unilateral vocal paralysis | /a/ | MFCC | N/A | LSTM | 56% UAR |
| Pham et al. [44] | FEMH | Vocal nodules, polyps, cysts, glottis neoplasm, unilateral vocal paralysis | /a/ | MFCC | N/A | SVM, RF, KNN, GB, EL | 68.48% |
| Forero et al. [47] | Speech therapist | Nodules and vocal paralysis | /a/ | F0, jitter, shimmer | N/A | ANN, SVM, HMM | 97.20% |
| Hemmerling et al. [49] | SVD | 71 Pathologies | /a/, /i/, /u/ | Various (28) + PCA | N/A | RF, Clustering | 100% |
| Fang et al. [46] | FEMH | Vocal nodules, polyps, cysts, glottis neoplasm, unilateral vocal paralysis | /a/ | MFCC | N/A | ANN, SVM, GMM | 99.32% |
| Ours | Custom (Tor Vergata) | PD, ASD | /e/ + sentence | ComParE 2016 (6373 features) | Corr., IG, GR, GA | NB, RF, MLP | 99.46% |

4.2. Comments

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Asci, F.; Costantini, G.; Di Leo, P.; Zampogna, A.; Ruoppolo, G.; Berardelli, A.; Saggio, G.; Suppa, A. Machine-Learning Analysis of Voice Samples Recorded through Smartphones: The Combined Effect of Ageing and Gender. Sensors 2020, 20, 5022.

- Saggio, G.; Costantini, G. Worldwide Healthy Adult Voice Baseline Parameters: A Comprehensive Review. J. Voice 2022, 36, 637–649.

- König, A.; Satt, A.; Sorin, A.; Hoory, R.; Toledo-Ronen, O.; Derreumaux, A.; Manera, V.; Verhey, F.; Aalten, P.; Robert, P.H.; et al. Automatic speech analysis for the assessment of patients with predementia and Alzheimer’s disease. Alzheimers Dement. Diagn. Assess. Dis. Monit. 2015, 1, 112–124.

- Almeida, J.S.; Filho, P.P.R.; Carneiro, T.; Wei, W.; Damaševičius, R.; Maskeliūnas, R.; de Albuquerque, V.H.C. Detecting Parkinson’s disease with sustained phonation and speech signals using machine learning techniques. Pattern Recognit. Lett. 2019, 125, 55–62.

- Costantini, G.; Di Leo, P.; Asci, F.; Zarezadeh, Z.; Marsili, L.; Errico, V.; Suppa, A.; Saggio, G. Machine Learning based Voice Analysis in Spasmodic Dysphonia: An Investigation of Most Relevant Features from Specific Vocal Tasks. In Proceedings of the 14th International Joint Conference on Biomedical Engineering Systems and Technologies (BIOSTEC 2021), Online, 11–13 February 2021; pp. 103–113.

- Parkinson’s Foundation. 10 Early Signs of Parkinson’s Disease. Available online: https://www.parkinson.org/understanding-parkinsons/10-early-warning-signs (accessed on 18 July 2020).

- Goetz, C.G.; Fahn, S.; Martinez-Martin, P.; Poewe, W.; Sampaio, C.; Stebbins, G.T.; Stern, M.B.; Tilley, B.C.; Dodel, R.; Dubois, B.; et al. The MDS-sponsored Revision of the Unified Parkinson’s Disease Rating Scale. Milwaukee: International Parkinson and Movement Disorder Society. 2019. Available online: https://www.movementdisorders.org/MDS/MDS-Rating-Scales/MDS-Unified-Parkinsons-Disease-Rating-Scale-MDS-UPDRS.htm (accessed on 20 July 2020).

- Hoffman, M.R.; Jiang, J.J.; Rieves, A.L.; McElveen, K.A.B.; Ford, C.N. Differentiating between adductor and abductor spasmodic dysphonia using airflow interruption. Laryngoscope 2009, 119, 1851–1855.

- Merati, A.L.; Abaza, M.; Altman, K.W.; Sulica, L.; Belamowicz, S.; Heman-Ackah, Y.D. Common Movement Disorders Affecting the Larynx: A Report from the Neurolaryngology Committee of the AAO-HNS. Otolaryngol. Neck Surg. 2005, 133, 654–665.

- Lopes, B.P.; das Graças, R.R.; Bassi, I.B.; Neto, A.L.d.R.; de Oliveira, J.B.; Cardoso, F.E.C.; Gama, A.C.C. Quality of life in voice: A study in Parkinson’s disease and in adductor spasmodic dysphonia. Rev. CEFAC 2012, 15, 427–435.

- Jiang, F.; Jiang, Y.; Zhi, H.; Dong, Y.; Li, H.; Ma, S.; Wang, Y.; Dong, Q.; Shen, H.; Wang, Y. Artificial intelligence in healthcare: Past, present and future. Stroke Vasc. Neurol. 2017, 2, 230–243.

- Asci, F.; Costantini, G.; Saggio, G.; Suppa, A. Fostering Voice Objective Analysis in Patients with Movement Disorders. Mov. Disord. 2021, 36, 1041.

- Suppa, A.; Asci, F.; Saggio, G.; Marsili, L.; Casali, D.; Zarezadeh, Z.; Ruoppolo, G.; Berardelli, A.; Costantini, G. Voice analysis in adductor spasmodic dysphonia: Objective diagnosis and response to botulinum toxin. Park. Relat. Disord. 2020, 73, 23–30.

- Robotti, C.; Costantini, G.; Saggio, G.; Cesarini, V.; Calastri, A.; Maiorano, E.; Piloni, D.; Perrone, T.; Sabatini, U.; Ferretti, V.V.; et al. Machine Learning-based Voice Assessment for the Detection of Positive and Recovered COVID-19 Patients. J. Voice 2021, in press.

- Costantini, G.; Cesarini, V.; Robotti, C.; Benazzo, M.; Pietrantonio, F.; Di Girolamo, S.; Pisani, A.; Canzi, P.; Mauramati, S.; Bertino, G.; et al. Deep learning and machine learning-based voice analysis for the detection of COVID-19: A proposal and comparison of architectures. Knowl.-Based Syst. 2022, 253, 109539.

- Costantini, G.; Cesarini, V.; Di Leo, P.; Amato, F.; Suppa, A.; Asci, F.; Pisani, A.; Calculli, A.; Saggio, G. Artificial Intelligence-Based Voice Assessment of Patients with Parkinson’s Disease Off and On Treatment: Machine vs. Deep-Learning Comparison. Sensors 2023, 23, 2293.

- Costantini, G.; Parada-Cabaleiro, E.; Casali, D.; Cesarini, V. The Emotion Probe: On the Universality of Cross-Linguistic and Cross-Gender Speech Emotion Recognition via Machine Learning. Sensors 2022, 22, 2461.

- Costantini, G.; Cesarini, V.; Brenna, E. High-Level CNN and Machine Learning Methods for Speaker Recognition. Sensors 2023, 23, 3461.

- Carrón, J.; Campos-Roca, Y.; Madruga, M.; Pérez, C.J. A mobile-assisted voice condition analysis system for Parkinson’s disease: Assessment of usability conditions. Biomed. Eng. Online 2021, 20, 114.

- Amato, F.; Saggio, G.; Cesarini, V.; Olmo, G.; Costantini, G. Machine learning- and statistical-based voice analysis of Parkinson’s disease patients: A survey. Expert Syst. Appl. 2023, 219, 119651.

- Schuller, B.; Steidl, S.; Batliner, A.; Hirschberg, J.; Burgoon, J.K.; Baird, A.; Elkins, A.; Zhang, Y.; Coutinho, E.; Evanini, K. The INTERSPEECH 2016 computational paralinguistics challenge: 17th Annual Conference of the International Speech Communication Association, INTERSPEECH 2016. In Proceedings of the Annual Conference of the International Speech Communication Association, INTERSPEECH, San Francisco, CA, USA, 8–12 September 2016.

- Bogert, B.P. The quefrency alanysis of time series for echoes; Cepstrum, pseudo-autocovariance, cross-cepstrum and saphe cracking. Time Ser. Anal. 1963, 15, 209–243.

- Hermansky, H.; Morgan, N. RASTA processing of speech. IEEE Trans. Speech Audio Process. 1994, 2, 578–589.

- Yeldener, S. EP 1163662 A4 20040616—Method of Determining the Voicing Probability of Speech Signals. Available online: https://data.epo.org/gpi/EP1163662A4 (accessed on 24 May 2022).

- Eyben, F.; Schuller, B. openSMILE:): The Munich open-source large-scale multimedia feature extractor. SIGMultimedia Rec. 2015, 6, 4–13.

- Hall, M.A. Correlation-based Feature Selection for Machine Learning. Ph.D. Dissertation, The University of Waikato, Hamilton, New Zealand, 1999.

- Shannon, C.E. A Mathematical Theory of Communication. Bell Syst. Tech. J. 1948, 27, 379–423.

- Yücelbaş, C. A new approach: Information gain algorithm-based k-nearest neighbors hybrid diagnostic system for Parkinson’s disease. Phys. Eng. Sci. Med. 2021, 44, 511–524.

- Quinlan, J.R. Induction of decision trees. Mach. Learn. 1986, 1, 81–106.

- Sastry, K.; Goldberg, D.; Kendall, G. Genetic Algorithms. In Search Methodologies: Introductory Tutorials in Optimization and Decision Support Techniques; Burke, E.K., Kendall, G., Eds.; Springer: Boston, MA, USA, 2006; pp. 97–125.

- Taylor, C.R. Dynamic Programming and the Curses of Dimensionality. In Applications of Dynamic Programming to Agricultural Decision Problems; CRC Press: Boca Raton, FL, USA, 2019.

- Demšar, J. Statistical Comparisons of Classifiers over Multiple Data Sets. J. Mach. Learn. Res. 2006, 7, 1–30.

- Razali, N.M.; Wah, Y.B. Power comparisons of Shapiro-Wilk, Kolmogorov-Smirnov, Lilliefors and Anderson-Darling tests. J. Stat. Model. Anal. 2011, 2, 21–33.

- Benavoli, A.; Corani, G.; Mangili, F. Should we really use post-hoc tests based on mean-ranks? J. Mach. Learn. Res. 2016, 17, 152–161.

- Iman, R.L.; Davenport, J.M. Approximations of the critical region of the Friedman statistic. Commun. Stat. Theory Methods 1980, 9, 571–595.

- Ruxton, G.D.; Neuhäuser, M. When should we use one-tailed hypothesis testing? Methods Ecol. Evol. 2010, 1, 114–117.

- Weninger, F.; Eyben, F.; Schuller, B.W.; Mortillaro, M.; Scherer, K.R. On the Acoustics of Emotion in Audio: What Speech, Music, and Sound have in Common. Front. Psychol. 2013, 4, 292.

- Schuller, B.; Steidl, S.; Batliner, A.; Epps, J.; Eyben, F.; Ringeval, F.; Marchi, E.; Zhang, Y. The Interspeech 2014 Computational Paralinguistics Challenge: Cognitive & Physical Load. In Proceedings of the INTERSPEECH 2014, 5th Annual Conference of the International Speech Communication Association, Singapore, 14–18 September 2014; pp. 427–431.

- Mekyska, J.; Janousova, E.; Gomez-Vilda, P.; Smekal, Z.; Rektorova, I.; Eliasova, I.; Kostalova, M.; Mrackova, M.; Alonso-Hernandez, J.B.; Faundez-Zanuy, M.; et al. Robust and complex approach of pathological speech signal analysis. Neurocomputing 2015, 167, 94–111.

- Barche, P.; Gurugubelli, K.; Vuppala, A.K. Towards Automatic Assessment of Voice Disorders: A Clinical Approach. In Proceedings of the Interspeech 2020, Shanghai, China, 25–29 October 2020.

- Verde, L.; De Pietro, G.; Sannino, G. Voice Disorder Identification by Using Machine Learning Techniques. IEEE Access 2018, 6, 16246–16255.

- Alves, M.; Silva, G.; Bispo, B.C.; Dajer, M.E.; Rodrigues, P.M. Voice Disorders Detection Through Multiband Cepstral Features of Sustained Vowel. J. Voice 2021, 37, 322–331.

- Al-Dhief, F.T.; Latiff, N.M.A.; Malik, N.N.N.A.; Sabri, N.; Baki, M.M.; Albadr, M.A.A.; Abbas, A.F.; Hussein, Y.M.; Mohammed, M.A. Voice Pathology Detection Using Machine Learning Technique. In Proceedings of the 2020 IEEE 5th International Symposium on Telecommunication Technologies (ISTT), Shah Alam, Malaysia, 9–11 November 2020; pp. 99–104.

- Pham, M.; Lin, J.; Zhang, Y. Diagnosing Voice Disorder with Machine Learning. In Proceedings of the 2018 IEEE International Conference on Big Data (Big Data), Seattle, WA, USA, 10–13 December 2018; pp. 5263–5266.

- Gupta, V. Voice Disorder Detection Using Long Short Term Memory (LSTM) Model. arXiv 2018, arXiv:1812.01779.

- Fang, S.-H.; Tsao, Y.; Hsiao, M.-J.; Chen, J.-Y.; Lai, Y.-H.; Lin, F.-C.; Wang, C.-T. Detection of Pathological Voice Using Cepstrum Vectors: A Deep Learning Approach. J. Voice 2018, 33, 634–641.

- Forero, M.L.A.; Kohler, M.; Vellasco, M.M.; Cataldo, E. Analysis and Classification of Voice Pathologies Using Glottal Signal Parameters. J. Voice 2015, 30, 549–556.

- Aich, S.; Kim, H.-C.; Younga, K.; Hui, K.L.; Al-Absi, A.A.; Sain, M. A Supervised Machine Learning Approach using Different Feature Selection Techniques on Voice Datasets for Prediction of Parkinson’s Disease. In Proceedings of the 2019 21st International Conference on Advanced Communication Technology (ICACT), Pyeongchang, Republic of Korea, 17–20 February 2019.

- Hemmerling, D.; Orozco-Arroyave, J.R.; Skalski, A.; Gajda, J.; Nöth, E. Automatic Detection of Parkinson’s Disease Based on Modulated Vowels. In Proceedings of the Interspeech 2016, San Francisco, CA, USA, 8–12 September 2016.

- Jeancolas, L.; Benali, H.; Benkelfat, B.-E.; Mangone, G.; Corvol, J.-C.; Vidailhet, M.; Lehericy, S.; Petrovska-Delacretaz, D. Automatic detection of early stages of Parkinson’s disease through acoustic voice analysis with mel-frequency cepstral coefficients. In Proceedings of the 2017 International Conference on Advanced Technologies for Signal and Image Processing (ATSIP), Fez, Morocco, 22–24 May 2017.

- Fayad, R.; Hajj-Hassan, M.; Constantini, G.; Zarazadeh, Z.; Errico, V.; Saggio, G.; Suppa, A.; Asci, F. Vocal Test Analysis for the Assessment of Adductor-type Spasmodic Dysphonia. In Proceedings of the 2021 Sixth International Conference on Advances in Biomedical Engineering (ICABME), Werdanyeh, Lebanon, 7–9 October 2021; pp. 167–170.

- Schlotthauer, G.; Torres, M.E.; Jackson-Menaldi, M.C. A Pattern Recognition Approach to Spasmodic Dysphonia and Muscle Tension Dysphonia Automatic Classification. J. Voice 2010, 24, 346–353.

- Powell, M.E.; Cancio, M.R.; Young, D.; Nock, W.; Abdelmessih, B.; Zeller, A.; Morales, I.P.; Zhang, P.; Garrett, C.G.; Schmidt, D.; et al. Decoding phonation with artificial intelligence (DeP AI): Proof of concept. Laryngoscope Investig. Otolaryngol. 2019, 4, 328–334.

| Number of Classifiers | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|
| q (α = 0.05) | 1.96 | 2.343 | 2.569 | 2.728 | 2.85 | 2.949 | 3.031 | 3.102 | 3.164 |
| q (α = 0.10) | 1.645 | 2.052 | 2.291 | 2.459 | 2.589 | 2.693 | 2.78 | 2.855 | 2.92 |
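The q values in the table above are the two-tailed Nemenyi critical values tabulated by Demšar [32]. They are typically turned into a critical difference between average ranks, CD = q_α · sqrt(k(k + 1) / (6N)), where k is the number of compared methods and N the number of datasets or evaluation conditions; two methods differ significantly when their average ranks differ by more than CD. The sketch below applies that rule; the value of N used in the study is not stated here, so the one in the example is hypothetical.

```python
import math

# Two-tailed Nemenyi critical values q_alpha, keyed by the number of compared methods k.
Q_ALPHA = {
    0.05: {2: 1.960, 3: 2.343, 4: 2.569, 5: 2.728, 6: 2.850,
           7: 2.949, 8: 3.031, 9: 3.102, 10: 3.164},
    0.10: {2: 1.645, 3: 2.052, 4: 2.291, 5: 2.459, 6: 2.589,
           7: 2.693, 8: 2.780, 9: 2.855, 10: 2.920},
}


def nemenyi_cd(k, n, alpha=0.05):
    """Critical difference between average ranks for k methods compared over n conditions."""
    if alpha not in Q_ALPHA or k not in Q_ALPHA[alpha]:
        raise ValueError("alpha must be 0.05 or 0.10 and k between 2 and 10")
    return Q_ALPHA[alpha][k] * math.sqrt(k * (k + 1) / (6.0 * n))


def significantly_different(avg_rank_a, avg_rank_b, k, n, alpha=0.05):
    """True when two average ranks differ by more than the critical difference."""
    return abs(avg_rank_a - avg_rank_b) > nemenyi_cd(k, n, alpha)


# Hypothetical example: three classifiers ranked over eight conditions.
cd = nemenyi_cd(3, 8)  # 2.343 * sqrt(12 / 48) = 1.1715
```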

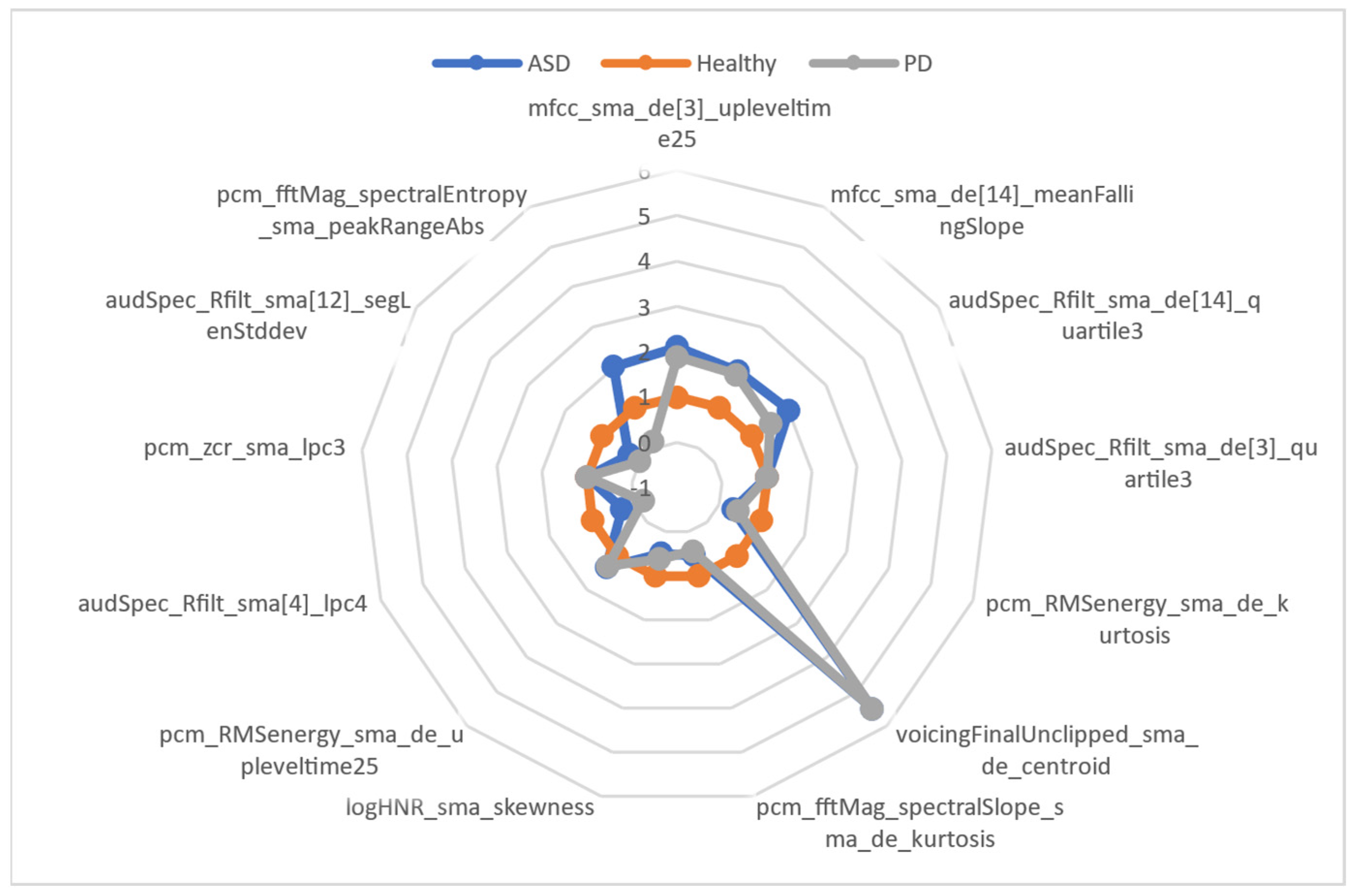

| Feature | Group of LLDs | LLD | Functional | Group |
|---|---|---|---|---|
| mfcc_sma_de[3]_upleveltime25 | Cepstral | MFCC | Up-Level Time 25% | Temporal |
| mfcc_sma_de[14]_meanFallingSlope | Cepstral | MFCC | Mean of Falling Slopes | Peaks |
| audSpec_Rfilt_sma_de[14]_quartile3 | Prosodic | RASTA-style filtered auditory spectrum | Quartile 3 | Percentiles |
| audSpec_Rfilt_sma_de[3]_quartile3 | Prosodic | RASTA-style filtered auditory spectrum | Quartile 3 | Percentiles |
| pcm_RMSenergy_sma_de_kurtosis | Prosodic | RMS Energy | Kurtosis | Moments |
| voicingFinalUnclipped_sma_de_centroid | Voice Quality | Voicing | Centroid | Temporal |
| pcm_fftMag_spectralSlope_sma_de_kurtosis | Spectral | Spectral Slope | Kurtosis | Moments |
| logHNR_sma_skewness | Voice Quality | HNR | Skewness | Moments |
| pcm_RMSenergy_sma_de_upleveltime25 | Prosodic | RMS Energy | Up-Level Time 25% | Temporal |
| audSpec_Rfilt_sma[4]_lpc4 | Prosodic | RASTA-style filtered auditory spectrum | Linear Prediction Coefficient 4 | Modulation |
| pcm_zcr_sma_lpc3 | Prosodic | ZCR | Linear Prediction Coefficient 3 | Modulation |
| audSpec_Rfilt_sma[12]_segLenStddev | Prosodic | RASTA-style filtered auditory spectrum | Standard Deviation of Segment Lengths | Moments |
| pcm_fftMag_spectralEntropy_sma_peakRangeAbs | Spectral | Spectral Entropy | Amplitude Range of Peaks | Peaks |
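The feature names in the table above follow the openSMILE ComParE naming pattern: the LLD name, the `_sma` suffix (moving-average smoothing), an optional `_de` suffix (first-order delta), an optional `[index]` for multi-dimensional LLDs such as MFCC bands, and finally the functional. The small parser below is an illustrative sketch, not part of openSMILE; the regular expression and the `parse_feature_name` helper are hypothetical and were only checked against the names listed in the table.

```python
import re

# Hypothetical pattern for ComParE-style names: <LLD>_sma[_de][[index]]_<functional>
FEATURE_RE = re.compile(
    r"^(?P<lld>.+?)_sma(?P<delta>_de)?(?:\[(?P<index>\d+)\])?_(?P<functional>.+)$"
)


def parse_feature_name(name):
    """Split a ComParE-style feature name into LLD, delta flag, LLD index and functional."""
    match = FEATURE_RE.match(name)
    if match is None:
        raise ValueError(f"unrecognized feature name: {name}")
    index = match.group("index")
    return {
        "lld": match.group("lld"),
        "delta": match.group("delta") is not None,
        "index": int(index) if index is not None else None,
        "functional": match.group("functional"),
    }


parsed = parse_feature_name("mfcc_sma_de[3]_upleveltime25")
```

With this reading, for example, `mfcc_sma_de[3]_upleveltime25` is the 25% up-level time of the delta of MFCC band 3.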

| Task | Classifier | Feature Selection | ACC | TP Rate | FP Rate | Precision | Recall | F-Measure | MCC | ROC Area | PRC Area |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Vowel /e/ | NB | Corr. | 91.94% | 0.919 | 0.042 | 0.92 | 0.919 | 0.919 | 0.88 | 0.986 | 0.976 |
| Vowel /e/ | NB | IG | 92.47% | 0.925 | 0.038 | 0.925 | 0.925 | 0.924 | 0.887 | 0.984 | 0.974 |
| Vowel /e/ | NB | GR | 93.55% | 0.935 | 0.035 | 0.936 | 0.935 | 0.935 | 0.902 | 0.985 | 0.975 |
| Vowel /e/ | NB | GA | 95.70% | 0.957 | 0.022 | 0.957 | 0.957 | 0.957 | 0.935 | 0.994 | 0.99 |
| Vowel /e/ | RF | Corr. | 87.10% | 0.871 | 0.067 | 0.872 | 0.871 | 0.87 | 0.806 | 0.97 | 0.953 |
| Vowel /e/ | RF | IG | 88.17% | 0.882 | 0.061 | 0.882 | 0.882 | 0.881 | 0.821 | 0.974 | 0.959 |
| Vowel /e/ | RF | GR | 87.10% | 0.871 | 0.068 | 0.871 | 0.871 | 0.87 | 0.806 | 0.97 | 0.95 |
| Vowel /e/ | RF | GA | 93.55% | 0.935 | 0.033 | 0.936 | 0.935 | 0.935 | 0.903 | 0.983 | 0.972 |
| Vowel /e/ | MLP | Corr. | 90.32% | 0.903 | 0.05 | 0.903 | 0.903 | 0.902 | 0.856 | 0.965 | 0.944 |
| Vowel /e/ | MLP | IG | 89.25% | 0.892 | 0.055 | 0.892 | 0.892 | 0.891 | 0.84 | 0.966 | 0.936 |
| Vowel /e/ | MLP | GR | 88.71% | 0.887 | 0.059 | 0.887 | 0.887 | 0.886 | 0.831 | 0.961 | 0.935 |
| Vowel /e/ | MLP | GA | 93.01% | 0.93 | 0.036 | 0.931 | 0.93 | 0.93 | 0.896 | 0.969 | 0.949 |
| Sentence | NB | Corr. | 93.01% | 0.93 | 0.037 | 0.93 | 0.93 | 0.93 | 0.894 | 0.992 | 0.986 |
| Sentence | NB | IG | 91.94% | 0.919 | 0.042 | 0.919 | 0.919 | 0.919 | 0.877 | 0.985 | 0.966 |
| Sentence | NB | GR | 93.01% | 0.93 | 0.037 | 0.93 | 0.93 | 0.93 | 0.893 | 0.989 | 0.98 |
| Sentence | NB | GA | 99.46% | 0.995 | 0.003 | 0.995 | 0.995 | 0.995 | 0.992 | 0.998 | 0.996 |
| Sentence | RF | Corr. | 87.63% | 0.876 | 0.069 | 0.88 | 0.876 | 0.876 | 0.813 | 0.981 | 0.966 |
| Sentence | RF | IG | 89.78% | 0.898 | 0.056 | 0.899 | 0.898 | 0.898 | 0.845 | 0.982 | 0.969 |
| Sentence | RF | GR | 90.86% | 0.909 | 0.048 | 0.909 | 0.909 | 0.909 | 0.861 | 0.982 | 0.968 |
| Sentence | RF | GA | 96.77% | 0.968 | 0.018 | 0.969 | 0.968 | 0.968 | 0.951 | 0.991 | 0.984 |
| Sentence | MLP | Corr. | 93.01% | 0.93 | 0.037 | 0.932 | 0.93 | 0.93 | 0.895 | 0.982 | 0.969 |
| Sentence | MLP | IG | 91.40% | 0.914 | 0.046 | 0.915 | 0.914 | 0.914 | 0.869 | 0.98 | 0.967 |
| Sentence | MLP | GR | 91.94% | 0.919 | 0.042 | 0.92 | 0.919 | 0.919 | 0.878 | 0.982 | 0.97 |
| Sentence | MLP | GA | 98.39% | 0.984 | 0.009 | 0.984 | 0.984 | 0.984 | 0.975 | 0.988 | 0.978 |
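The metric columns in the table above resemble a weighted-average evaluation summary in which each per-class (one-vs-rest) figure is weighted by the number of instances of that class; this weighting is an assumption, not something the table states. Under it, the weighted TP rate and recall are always equal to accuracy, which is consistent with the ACC, TP Rate and Recall columns coinciding in every row. The sketch below computes these quantities from a hypothetical confusion matrix.

```python
import math


def weighted_metrics(cm):
    """Support-weighted one-vs-rest metrics; cm[i][j] counts true class i predicted as class j."""
    k = len(cm)
    total = sum(sum(row) for row in cm)
    result = {"tp_rate": 0.0, "fp_rate": 0.0, "precision": 0.0, "f_measure": 0.0, "mcc": 0.0}
    for c in range(k):
        tp = cm[c][c]
        fn = sum(cm[c]) - tp
        fp = sum(cm[r][c] for r in range(k)) - tp
        tn = total - tp - fn - fp
        weight = (tp + fn) / total  # share of instances whose true class is c
        tp_rate = tp / (tp + fn) if tp + fn else 0.0
        fp_rate = fp / (fp + tn) if fp + tn else 0.0
        precision = tp / (tp + fp) if tp + fp else 0.0
        f_measure = (2 * precision * tp_rate / (precision + tp_rate)
                     if precision + tp_rate else 0.0)
        denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
        mcc = (tp * tn - fp * fn) / denom if denom else 0.0
        for key, value in (("tp_rate", tp_rate), ("fp_rate", fp_rate),
                           ("precision", precision), ("f_measure", f_measure),
                           ("mcc", mcc)):
            result[key] += weight * value
    result["accuracy"] = sum(cm[i][i] for i in range(k)) / total
    return result


# Hypothetical two-class confusion matrix: rows are true classes, columns predictions.
m = weighted_metrics([[8, 2], [1, 9]])
```

Here the weighted TP rate is 0.5 · 0.8 + 0.5 · 0.9 = 0.85, the same as the accuracy 17/20, illustrating why those columns match.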

| Comparison | p-Value | Test | Null Hypothesis | Result |
|---|---|---|---|---|
| NB, MLP, RF | <0.0001 | Iman and Davenport | The performances of all classifiers are equal | Performances are unequal |
| NB-RF | <0.05 | Nemenyi | NB and RF are equal in performance | NB > RF |
| NB-MLP | 0.01 | Wilcoxon | NB and MLP are equal in performance | NB > MLP |
| RF-MLP | 0.01–0.025 | Wilcoxon | RF and MLP are equal in performance | MLP > RF |
| Sentence, vowel /e/ | 0.01 | Iman and Davenport | Sentence and vowel /e/ have equal performances | Sentence performs better |
| Corr., IG, GR, GA | 0.04 | Iman and Davenport | All feature selection methods are equal in performance | Performances are unequal |
| GA-IG | <0.05 | Nemenyi | GA and IG are equal in performance | GA > IG |
| GA-GR | 0.05 | Wilcoxon | GA and GR are equal in performance | GA > GR |
| GA-Corr. | 0.05 | Wilcoxon | GA and Correlation are equal in performance | GA > Correlation |
| IG-GR | >0.20 | Wilcoxon | IG and GR are equal in performance | IG and GR are similar in performance |
| Corr.-GR | >0.20 | Wilcoxon | Correlation and GR are equal in performance | Correlation and GR are similar in performance |
| Corr.-IG | >0.20 | Wilcoxon | Correlation and IG are equal in performance | Correlation and IG are similar in performance |
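The Iman and Davenport test listed above is a correction of the Friedman test. A minimal pure-Python sketch, following the formulation given by Demšar [32]: methods are ranked within each condition (rank 1 = best, ties averaged), the Friedman statistic is χ²_F = 12N / (k(k + 1)) · [Σ_j R_j² − k(k + 1)² / 4] on the average ranks R_j, and F_F = (N − 1)χ²_F / (N(k − 1) − χ²_F) is compared against an F distribution with k − 1 and (k − 1)(N − 1) degrees of freedom. The scores in the example are hypothetical, and treating each task and feature-selection combination as one "condition" is an assumption about how the study applied the test.

```python
def rank_row(scores):
    """Rank methods within one condition: rank 1 = highest score, tied scores share the mean rank."""
    order = sorted(range(len(scores)), key=lambda i: -scores[i])
    ranks = [0.0] * len(scores)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and scores[order[j + 1]] == scores[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # average of 1-based positions i+1 .. j+1
        for m in range(i, j + 1):
            ranks[order[m]] = mean_rank
        i = j + 1
    return ranks


def iman_davenport(results):
    """results[d][j] is the score of method j in condition d (higher is better).

    Returns (average ranks, Friedman chi-square, Iman-Davenport F statistic).
    """
    n = len(results)
    k = len(results[0])
    rank_rows = [rank_row(row) for row in results]
    avg = [sum(r[j] for r in rank_rows) / n for j in range(k)]
    chi2 = 12.0 * n / (k * (k + 1)) * (sum(r * r for r in avg) - k * (k + 1) ** 2 / 4.0)
    denom = n * (k - 1) - chi2
    ff = float("inf") if denom <= 0 else (n - 1) * chi2 / denom  # inf when rankings agree perfectly
    return avg, chi2, ff


# Hypothetical accuracies of three methods (columns) over four conditions (rows).
scores = [[0.90, 0.80, 0.70],
          [0.95, 0.90, 0.85],
          [0.90, 0.70, 0.80],
          [0.80, 0.85, 0.75]]
avg_ranks, chi2_f, f_f = iman_davenport(scores)
```

In this example the average ranks are 1.25, 2.0 and 2.75, giving χ²_F = 4.5 and F_F = 13.5 / 3.5 ≈ 3.857. The average ranks can then be compared pairwise with the Nemenyi critical difference.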

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Cesarini, V.; Saggio, G.; Suppa, A.; Asci, F.; Pisani, A.; Calculli, A.; Fayad, R.; Hajj-Hassan, M.; Costantini, G. Voice Disorder Multi-Class Classification for the Distinction of Parkinson’s Disease and Adductor Spasmodic Dysphonia. Appl. Sci. 2023, 13, 8562. https://doi.org/10.3390/app13158562
