Tracking Sensor Location by Video Analysis in Double-Shell Tank Inspections

Abstract

Featured Application


1. Introduction

2. Materials and Methods

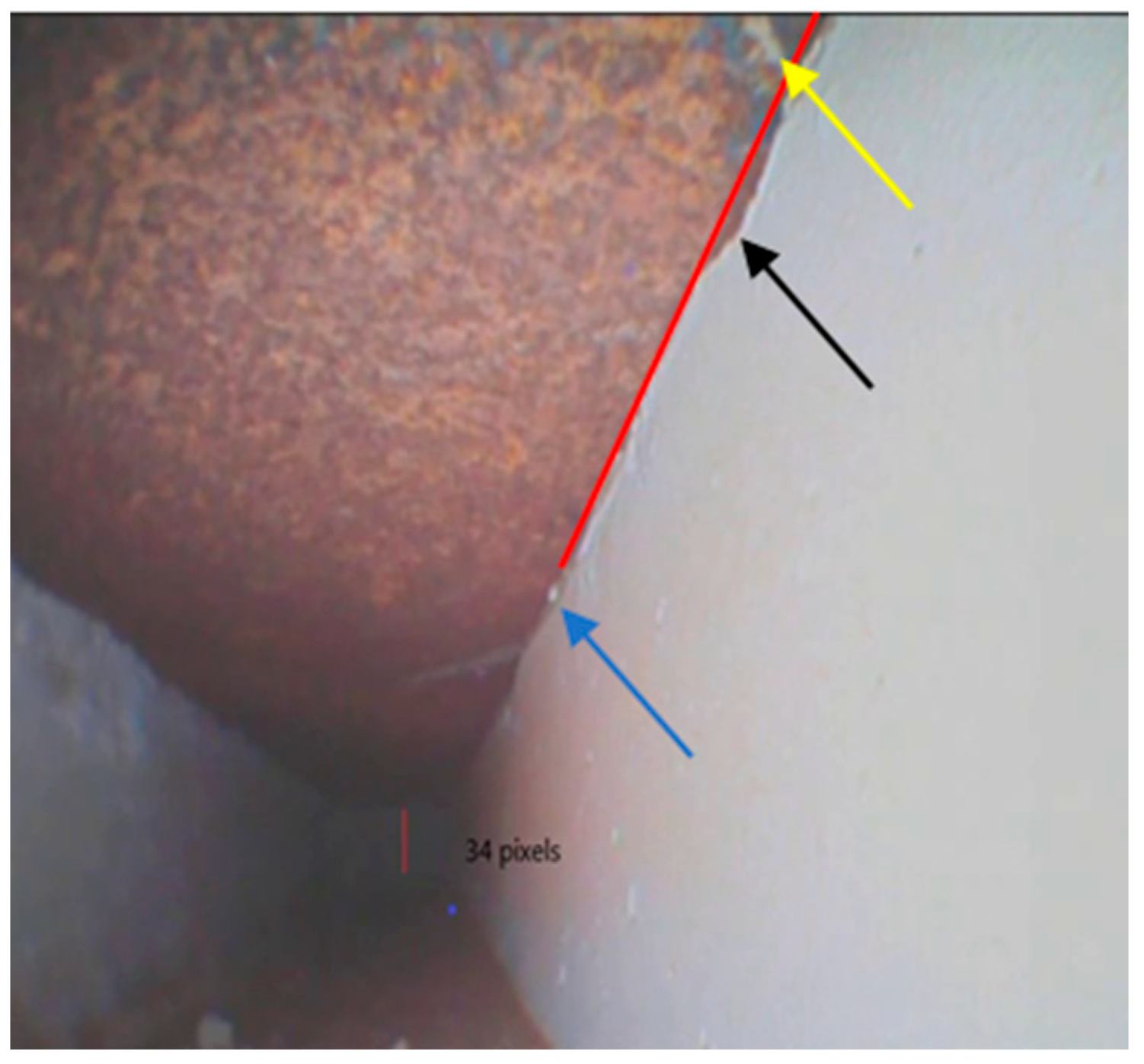

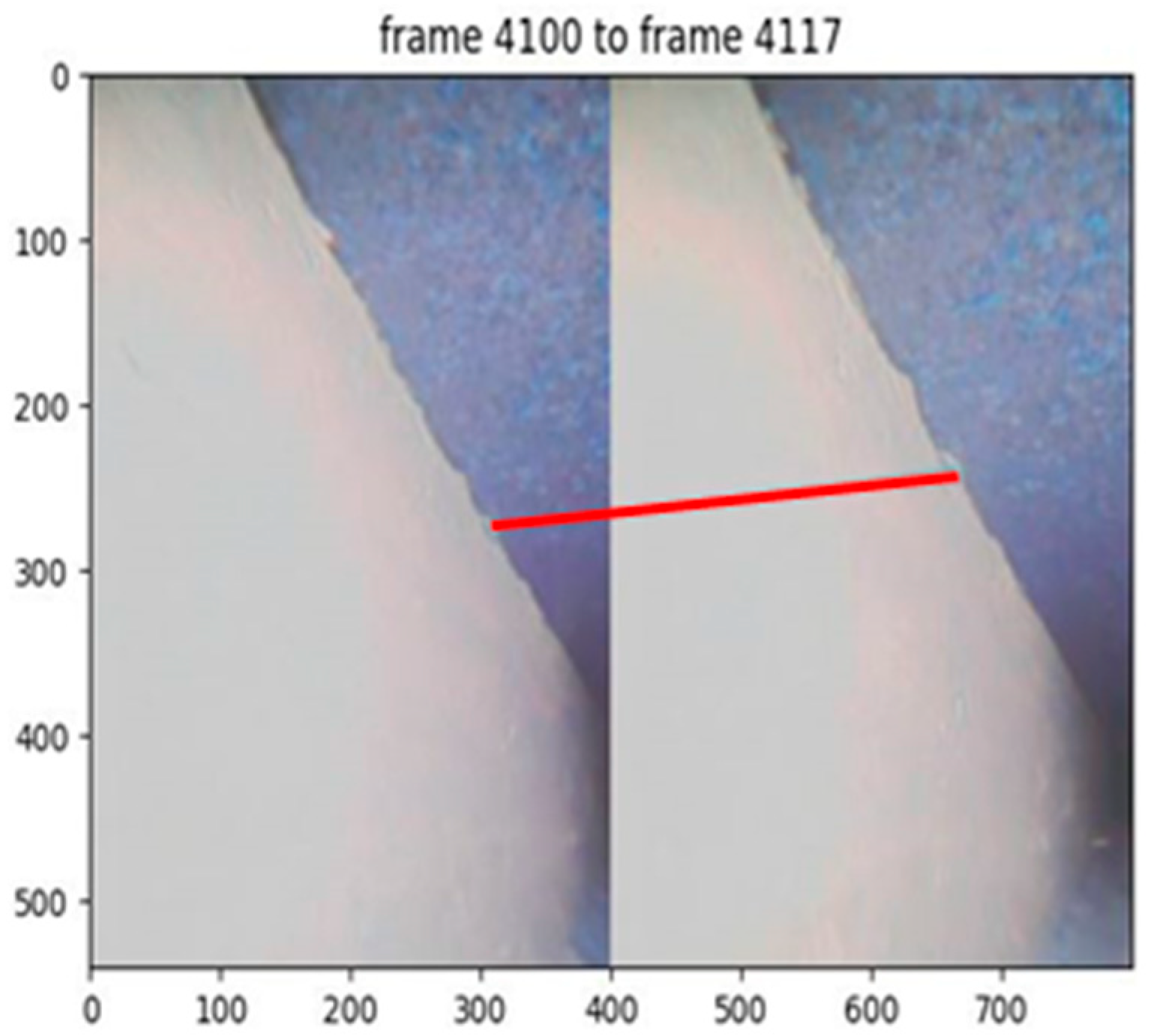

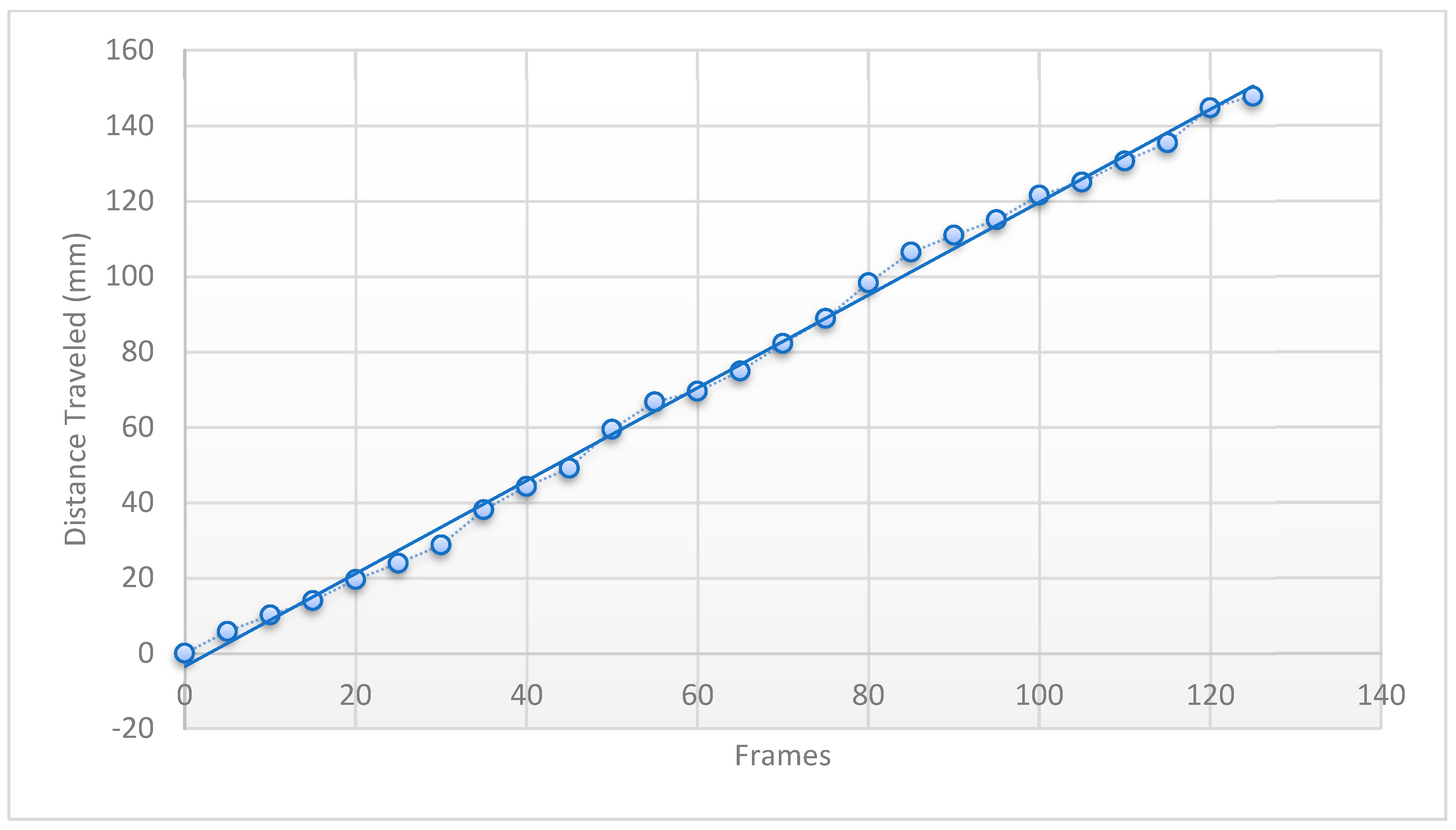

2.1. Prediction of Distance Moved from Analysis of Video Acquired during Movement

This work was previously reported [7] and is included here for a complete discussion of our method of sensor tracking by video analysis.

2.2. Tracking Sensor Location by Video Analysis

3. Results

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Girardot, C.; Venetz, T.; Boomer, K.; Johnson, J. Hanford Single-Shell Tank and Double-Shell Tank Integrity Programs. In Proceedings of the Waste Management Symposium, Phoenix, AZ, USA, 6–10 March 2016.

- Denslow, K.; Glass, S.; Larche, M.; Boomer, K.; Gunter, J. Hanford Under-tank Inspection with Ultrasonic Volumetric Non-destructive Examination Technology. In Proceedings of the Waste Management Symposium, Phoenix, AZ, USA, 18–22 March 2018.

- Zhoa, J.; Durham, N.; Abdel-Hati, K.; McKenzie, C.; Thomson, D.J. Acoustic guided wave techniques for detecting corrosion damage of electric grounding rods. Measurement 2019, 147, 106858.

- Borigo, C.; Love, F.; Owens, S.; Rose, J.L. Guided Wave Phased Array Technology for Rapid Inspection of Hanford Double Shell Tank Liners. In Proceedings of the ASNT Annual Conference, Nashville, TN, USA, 30 October–2 November 2017.

- Denslow, K.; Moran, T.; Larche, M.; Glass, S.; Boomer, K.; Kelly, S.; Wooley, T.; Gunter, J.; Rice, J.; Stewart, D.; et al. Progress on Advancing Robotic Ultrasonic Volumetric Inspection Technology for Hanford Under-tank Inspection. In Proceedings of the WM2019 Conference, Phoenix, AZ, USA, 3–7 March 2019.

- Hede, A.; Wooley, T.; Boomer, K.; Gunter, J.; Soon, G.; Nelson, E.; Denslow, K. Hanford Double-Shell Primary Tank Bottom Inspection Technology Development. In Proceedings of the WM2022 Conference, Phoenix, AZ, USA, 6–10 March 2022.

- Cree, C.; Cater, E.; Wang, H.; Mo, C.; Miller, J. Tracking Robot Location in Non-Destructive Evaluation of Double-Shell Tanks. Appl. Sci. 2020, 10, 7318.

- Dobell, C.; Hamilton, G. Marsupial Miniature Robotic Crawler Development and Deployment in Nuclear Waste Double Shell Tank Refractory Air Slots. In Proceedings of the ASNT Annual Conference, Nashville, TN, USA, 30 October–2 November 2017.

- Mastafa, M.; Stancu, A.; Delanoue, N.; Codres, E. Guaranteed SLAM—An interval approach. Robot. Auton. Syst. 2018, 100, 160–170.

- Abouzabir, A.; Elouardi, A.; Latif, R.; Bouaziz, S.; Tajer, A. Embedding SLAM algorithms: Has it come of age? Robot. Auton. Syst. 2018, 100, 14–26.

- Rosebrock, A. Triangle Similarity for Object/Marker to Camera Distance. Available online: https://www.pyimagesearch.com/2015/01/19/find-distance-camera-objectmarker-using-python-opencv/ (accessed on 19 January 2015).

- Gunter, J.R. Primary Tank Bottom Visual Inspection System Development and Initial Deployment at Tank AP-107. RPP-RPT-61208, November 2018.

- Canny, J. A Computational Approach to Edge Detection. Technical Report 6. IEEE Trans. Pattern Anal. Mach. Intell. 1986, PAMI–8, 679–698.

- Szeliski, R. Computer Vision: Algorithms and Applications; Springer: Berlin/Heidelberg, Germany, 2011.

- Lowe, D. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110. Available online: https://docs.opencv.org/master/da/df5/tutorial_py_sift_intro.html (accessed on 10 July 2023).

- Arandjelovic, R. Three things everyone should know to improve object retrieval. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), CVPR '12, Washington, DC, USA, 16–21 June 2012; pp. 2911–2918. Available online: https://docs.opencv.org/3.4/d5/d6f/tutorial_feature_flann_matcher.html (accessed on 10 July 2023).

- Bradski, G. The OpenCV Library. Dr. Dobb J. Softw. Tools 2000, 120, 122–125.

| Start Frame | Finish Frame | mm per Frame |
|---|---|---|
| 4700 | 4720 | 1.0174 |
| 4720 | 4740 | 1.1418 |
| 4740 | 4759 | 1.7639 |
| 4759 | 4779 | 1.2402 |
| 4779 | 4799 | 0.9720 |
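As a worked illustration of how the per-interval rates in the table above can be turned into a distance travelled, the Python sketch below multiplies each rate by the number of frames in its interval and sums the results. It assumes that each mm-per-frame value applies uniformly to every frame between its start and finish frames and that the intervals are contiguous. The function names `cumulative_distance_mm` and `position_at_frame` are hypothetical and are not taken from the authors' code.

```python
# Hypothetical illustration: (start frame, finish frame, mm per frame) rows from the table.
intervals = [
    (4700, 4720, 1.0174),
    (4720, 4740, 1.1418),
    (4740, 4759, 1.7639),
    (4759, 4779, 1.2402),
    (4779, 4799, 0.9720),
]


def cumulative_distance_mm(rows):
    """Sum rate * frame count over all intervals.

    Assumption: each rate applies uniformly to every frame in [start, finish).
    """
    total = 0.0
    for start, finish, mm_per_frame in rows:
        if finish <= start:
            raise ValueError(f"finish frame {finish} must follow start frame {start}")
        total += (finish - start) * mm_per_frame
    return total


def position_at_frame(rows, frame):
    """Distance travelled from the first start frame up to `frame`.

    Assumption: the intervals are sorted and contiguous; frames past the last
    finish frame are capped at the full distance.
    """
    travelled = 0.0
    for start, finish, mm_per_frame in rows:
        if frame <= start:
            break
        travelled += (min(frame, finish) - start) * mm_per_frame
    return travelled


print(f"{cumulative_distance_mm(intervals):.2f} mm")  # prints "120.94 mm"
```

Under these assumptions, frames 4700 to 4799 correspond to roughly 120.94 mm of travel, and `position_at_frame(intervals, 4730)` gives the partial distance (about 31.77 mm) partway through the second interval.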

| | Run 1 | Run 2 | Run 3 | Run 4 | Run 5 | Average | Std. Dev. | % Error | Target |
|---|---|---|---|---|---|---|---|---|---|
| FS | 156.24 | 157.39 | 158.74 | 153.33 | 152.64 | 155.67 | 1.25 | 2.14 | 152.40 |
| FS | 168.24 | 164.11 | 149.27 | 133.57 | 161.88 | 155.41 | 9.97 | 1.98 | 152.40 |
| FS | 138.26 | 148.56 | 132.07 | 146.21 | 158.72 | 144.76 | 8.33 | −5.01 | 152.40 |
| FS | 166.71 | 159.31 | 137.48 | 143.69 | 146.13 | 150.66 | 15.20 | −1.14 | 152.40 |
| BS | 141.70 | 152.52 | 154.82 | 143.27 | 129.57 | 144.38 | 7.01 | −5.26 | 152.40 |
| BS | 153.99 | 144.12 | 173.71 | 166.41 | 139.52 | 155.55 | 15.07 | 2.07 | 152.40 |
| BS | 142.88 | 153.81 | 177.22 | 181.05 | 163.22 | 163.64 | 17.54 | 7.37 | 152.40 |
| FT | 154.42 | 149.22 | 131.95 | 143.16 | 151.91 | 146.13 | 11.77 | −4.11 | 152.40 |
| FT | 194.58 | 156.18 | 174.24 | 167.56 | 182.45 | 175.00 | 19.21 | 14.83 | 152.40 |
| FT | 88.43 | 79.78 | 76.93 | 99.94 | 77.02 | 84.42 | 5.99 | 3.86 | 81.28 |
| BT | 126.13 | 148.89 | 92.18 | 100.77 | 130.03 | 119.60 | 28.54 | −21.52 | 152.40 |
| BT | 144.41 | 160.74 | 267.46 | 241.84 | 140.80 | 191.05 | 66.83 | 25.36 | 152.40 |
| FW | 164.80 | 152.61 | 149.62 | 167.20 | 144.89 | 155.82 | 8.04 | 2.25 | 152.40 |
| FW | 144.85 | 135.50 | 137.12 | 156.97 | 139.76 | 142.84 | 5.00 | −6.27 | 152.40 |
| FW | 163.80 | 154.26 | 156.55 | 156.66 | 176.80 | 161.61 | 4.98 | 6.05 | 152.40 |
| FW | 153.66 | 144.39 | 192.53 | 239.20 | 201.25 | 186.21 | 25.54 | 22.18 | 152.40 |
| Total | 2403.11 | 2361.39 | 2461.88 | 2540.83 | 2396.58 | 2432.76 | 50.49 | 2.77 | 2367.28 |
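The Average and % Error columns of the table above are consistent with taking the mean of the five runs and expressing its signed difference from the target as a percentage of the target. The Python sketch below assumes exactly that definition; it reproduces the tabulated values for the first FS row and the Total row. The helper name `percent_error` is hypothetical.

```python
from statistics import mean


def percent_error(runs, target):
    """Return (average, signed % error) for repeated runs against one target.

    Assumed definition: 100 * (mean(runs) - target) / target.
    Positive values mean the average overshot the target; negative values
    mean it fell short.
    """
    average = mean(runs)
    return average, 100.0 * (average - target) / target


# First FS row: five runs against a 152.40 target.
fs_runs = [156.24, 157.39, 158.74, 153.33, 152.64]
avg, err = percent_error(fs_runs, 152.40)
print(f"FS:    average = {avg:.2f}, % error = {err:.2f}")  # FS:    average = 155.67, % error = 2.14

# Total row: per-run sums against the summed target of 2367.28.
total_runs = [2403.11, 2361.39, 2461.88, 2540.83, 2396.58]
avg, err = percent_error(total_runs, 2367.28)
print(f"Total: average = {avg:.2f}, % error = {err:.2f}")  # Total: average = 2432.76, % error = 2.77
```

Keeping the sign, rather than taking an absolute value, preserves whether a given motion tended to overestimate or underestimate the distance moved.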

| Site | Position Error (mm) |
|---|---|
| 1 | 8.01 |
| 2 | 11.9 |
| 3 | 20.0 |
| 4 | 23.7 |
| 5 | 41.9 |
| 6 | 38.0 |
| 7 | 48.5 |
| 8 | 60.7 |
| 9 | 71.9 |
| 10 | 82.1 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Price, J.; Aaberg, E.; Mo, C.; Miller, J. Tracking Sensor Location by Video Analysis in Double-Shell Tank Inspections. Appl. Sci. 2023, 13, 8708. https://doi.org/10.3390/app13158708

Price J, Aaberg E, Mo C, Miller J. Tracking Sensor Location by Video Analysis in Double-Shell Tank Inspections. Applied Sciences. 2023; 13(15):8708. https://doi.org/10.3390/app13158708

Chicago/Turabian Style: Price, Jacob, Ethan Aaberg, Changki Mo, and John Miller. 2023. "Tracking Sensor Location by Video Analysis in Double-Shell Tank Inspections" Applied Sciences 13, no. 15: 8708. https://doi.org/10.3390/app13158708

APA Style: Price, J., Aaberg, E., Mo, C., & Miller, J. (2023). Tracking Sensor Location by Video Analysis in Double-Shell Tank Inspections. Applied Sciences, 13(15), 8708. https://doi.org/10.3390/app13158708