A Symbol Recognition System for Single-Line Diagrams Developed Using a Deep-Learning Approach

Abstract

Featured Application


1. Introduction

1.1. Contributions of This Study

- The utilization of a DCGAN and an LSGAN to generate single-line diagram (SLD) symbols of mixed quality;

- The combination of original and synthetic datasets to create an augmented dataset, thereby improving classification accuracy;

- The proposal of a YOLO-based solution to improve performance in single-line diagram symbol classification and recognition; as part of this effort, the YOLO V5 training set is expanded with newly generated synthetic data;

- A comprehensive comparison and analysis of classification accuracy between the original dataset and the augmented dataset.
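Expanding the YOLO V5 training set with synthetic symbols, as listed in the contributions above, also means supplying a bounding-box label for every symbol instance. The following is a minimal sketch, assuming the plain-text label format of the Ultralytics YOLOv5 repository (one line per object: class index, then box centre x, centre y, width and height, all normalized by the image width and height). The `Box` type and the helper names are hypothetical and are not taken from this study.

```python
from dataclasses import dataclass


@dataclass
class Box:
    """Hypothetical axis-aligned bounding box in pixel coordinates (top-left origin)."""
    class_id: int
    x_min: float
    y_min: float
    x_max: float
    y_max: float


def to_yolo_line(box: Box, img_w: int, img_h: int) -> str:
    """Convert a pixel box to 'class x_center y_center width height', normalized to [0, 1]."""
    if not (0 <= box.x_min < box.x_max <= img_w and 0 <= box.y_min < box.y_max <= img_h):
        raise ValueError(f"{box} does not fit inside a {img_w}x{img_h} image")
    x_c = (box.x_min + box.x_max) / 2 / img_w
    y_c = (box.y_min + box.y_max) / 2 / img_h
    w = (box.x_max - box.x_min) / img_w
    h = (box.y_max - box.y_min) / img_h
    return f"{box.class_id} {x_c:.6f} {y_c:.6f} {w:.6f} {h:.6f}"


def write_label_file(path: str, boxes: list[Box], img_w: int, img_h: int) -> None:
    """Write one label line per box, e.g. to a .txt file sharing the image's file stem."""
    with open(path, "w", encoding="utf-8") as f:
        for box in boxes:
            f.write(to_yolo_line(box, img_w, img_h) + "\n")
```

For example, a 64 × 64 symbol of class 0 placed with its top-left corner at (100, 200) on a 640 × 640 diagram page gives the line `0 0.206250 0.362500 0.100000 0.100000`.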

1.2. Structure of the Study

2. Related Works

2.1. Digitization of Engineering Documents

2.2. GAN Networks

3. Proposed Method

3.1. SLD Symbol Recognition Method

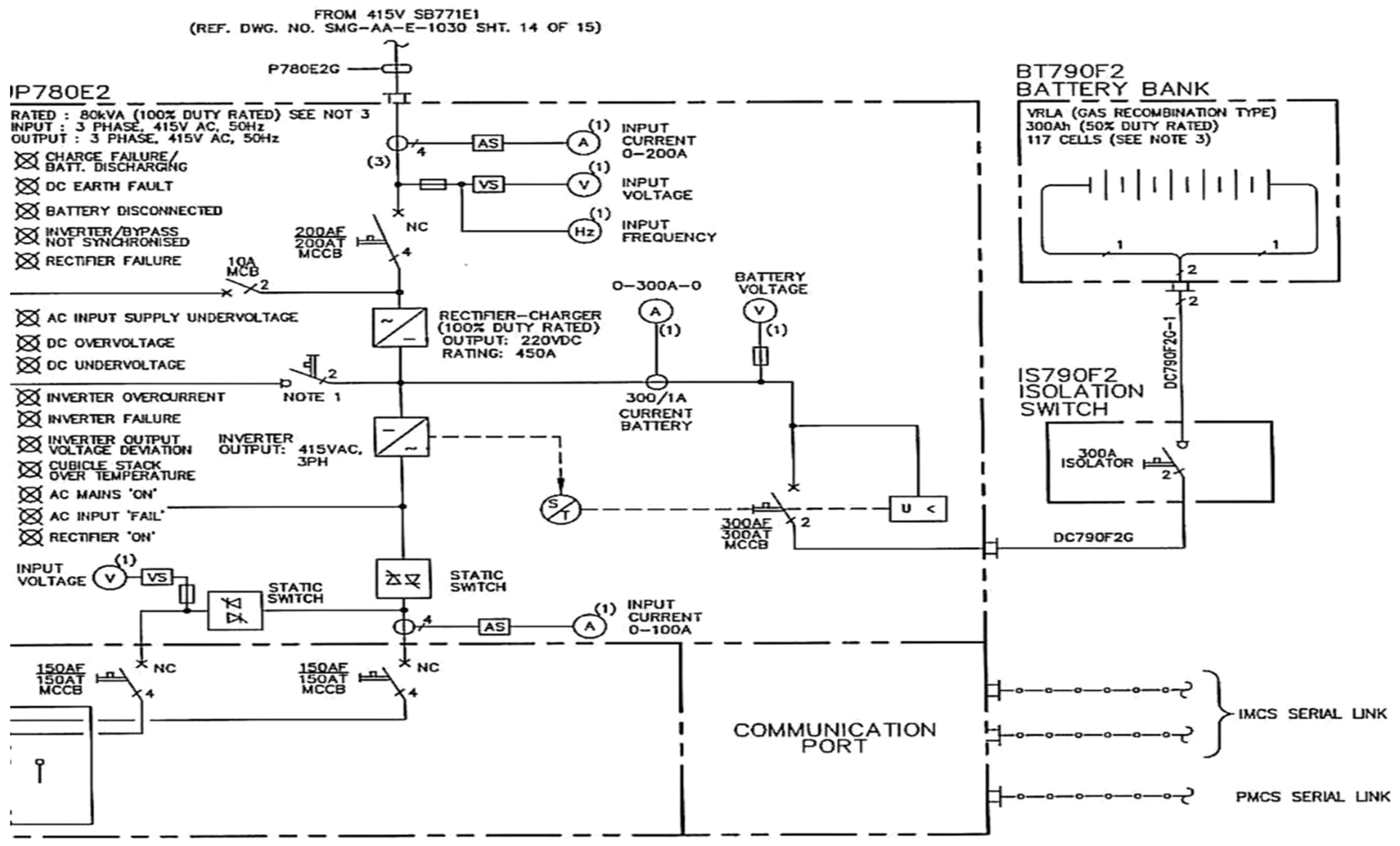

3.2. SLD Dataset

Analysis of SLD Data

3.3. Data Generation by GANs

3.3.1. Framework Structure of the DCGAN
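A DCGAN generator upsamples a latent vector to an image through a stack of transposed convolutions, each of which sets the spatial output size as out = (in - 1) × stride - 2 × padding + kernel (with dilation 1 and no output padding). The sketch below assumes the layer layout popularized by Radford et al. (2015), a 4 × 4 first layer with stride 1 followed by 4 × 4 layers with stride 2 and padding 1, and shows how the 64 × 64 and 32 × 32 generated image sizes reported for the data-generation groups could arise. The configuration and function names are assumptions for illustration, not necessarily the layers used in this study.

```python
def conv_transpose_out(size: int, kernel: int, stride: int, padding: int) -> int:
    """Spatial output size of a 2-D transposed convolution (dilation 1, no output padding)."""
    return (size - 1) * stride - 2 * padding + kernel


def dcgan_generator_sizes(target: int) -> list[int]:
    """Feature-map sizes of an assumed DCGAN-style generator mapping a 1x1 latent input to target x target.

    Layer 1: kernel 4, stride 1, padding 0 (1 -> 4).
    Later layers: kernel 4, stride 2, padding 1 (each doubles the size).
    """
    sizes = [conv_transpose_out(1, kernel=4, stride=1, padding=0)]
    while sizes[-1] < target:
        sizes.append(conv_transpose_out(sizes[-1], kernel=4, stride=2, padding=1))
    if sizes[-1] != target:
        raise ValueError(f"{target} is not reachable by doubling from 4")
    return sizes


print(dcgan_generator_sizes(64))  # [4, 8, 16, 32, 64]
print(dcgan_generator_sizes(32))  # [4, 8, 16, 32]
```

Under this layout, a 32 × 32 generator is simply the 64 × 64 one with its last upsampling layer removed.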

3.3.2. Network Structure of the LSGAN
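The LSGAN (Mao et al., 2017) differs from a standard GAN mainly in its objective: the sigmoid cross-entropy loss of the discriminator is replaced by a least-squares loss, which also penalizes samples that lie far from the decision boundary even when they fall on the correct side of it. With target codes a for generated samples, b for real samples and c for the value the generator wants the discriminator to assign to its samples, the discriminator minimizes ½E[(D(x) - b)²] + ½E[(D(G(z)) - a)²] and the generator minimizes ½E[(D(G(z)) - c)²]. The sketch below evaluates both losses on batches of raw (not sigmoid-squashed) discriminator outputs, assuming the common 0-1 coding a = 0, b = c = 1; the function names are illustrative, and the coding used in this study may differ.

```python
from statistics import fmean
from typing import Iterable


def lsgan_discriminator_loss(d_real: Iterable[float], d_fake: Iterable[float],
                             a: float = 0.0, b: float = 1.0) -> float:
    """0.5 * E[(D(x) - b)^2] + 0.5 * E[(D(G(z)) - a)^2] over raw discriminator outputs."""
    real_term = fmean((d - b) ** 2 for d in d_real)  # push real scores towards b
    fake_term = fmean((d - a) ** 2 for d in d_fake)  # push generated scores towards a
    return 0.5 * real_term + 0.5 * fake_term


def lsgan_generator_loss(d_fake: Iterable[float], c: float = 1.0) -> float:
    """0.5 * E[(D(G(z)) - c)^2]: the generator pushes scores of its samples towards c."""
    return 0.5 * fmean((d - c) ** 2 for d in d_fake)


# A discriminator that scores real symbols 0.9 and generated symbols 0.2.
print(lsgan_discriminator_loss([0.9, 0.9], [0.2, 0.2]))
print(lsgan_generator_loss([0.2, 0.2]))
```

In this example the discriminator loss is small (about 0.025) while the generator loss is large (about 0.32), which is the signal that drives the generator to produce more realistic symbols.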

3.4. Symbol Spotting Based on YOLO V5

The Structure of the YOLO V5 Network

4. Experiments and Results

4.1. Data Generation Results

4.1.1. Construction of the SLD Image Dataset

4.1.2. SLD Dataset Distribution

4.2. Evaluation of YOLO V5 Training

4.2.1. Computer Hardware Configuration

4.2.2. Evolution of Hyper-Parameters
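YOLO V5 can tune its training hyper-parameters by evolution: starting from a base set, it repeatedly mutates the hyper-parameters, trains, scores each result with a fitness function and retains the best-scoring candidates. In the Ultralytics YOLOv5 repository the default fitness is 0.1 × mAP@0.5 + 0.9 × mAP@0.5:0.95, with precision and recall weighted 0. The sketch below is a simplified (1+1)-style hill-climbing version of that idea, not the repository's code: the mutation rule, the example limits and the `evaluate` callback (which stands in for a full training run) are assumptions for illustration.

```python
import random
from typing import Callable, Dict, Tuple

Hyp = Dict[str, float]
Limits = Dict[str, Tuple[float, float]]


def yolo_fitness(precision: float, recall: float, map50: float, map50_95: float) -> float:
    """Weighted fitness using the YOLOv5 default weights [0, 0, 0.1, 0.9]."""
    return 0.0 * precision + 0.0 * recall + 0.1 * map50 + 0.9 * map50_95


def mutate(hyp: Hyp, limits: Limits, rng: random.Random,
           prob: float = 0.8, sigma: float = 0.2) -> Hyp:
    """With probability prob, scale each value by a Gaussian factor clipped to [0.3, 3.0]; then clip to limits."""
    child = {}
    for key, value in hyp.items():
        factor = 1.0
        if rng.random() < prob:
            factor = min(max(1.0 + rng.gauss(0.0, sigma), 0.3), 3.0)
        low, high = limits[key]
        child[key] = min(max(value * factor, low), high)
    return child


def evolve(base: Hyp, limits: Limits, evaluate: Callable[[Hyp], float],
           generations: int, seed: int = 0) -> Tuple[Hyp, float]:
    """Keep a mutated candidate only if its fitness beats the best found so far."""
    rng = random.Random(seed)
    best, best_fit = dict(base), evaluate(base)
    for _ in range(generations):
        candidate = mutate(best, limits, rng)
        fit = evaluate(candidate)
        if fit > best_fit:
            best, best_fit = candidate, fit
    return best, best_fit
```

Here `lr0` and `momentum` would be natural keys to try, since they are names used in the YOLOv5 hyper-parameter files; in a real run each call to `evaluate` would train a model and return `yolo_fitness` of its validation metrics.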

4.2.3. Results and Evaluations

5. Discussion

5.1. Comparison with Different Data Augmentation Techniques

5.2. Analysis of the Results

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Group | Original Images | Synthetic Images (DCGAN) | Synthetic Images (LSGAN) |
|---|---|---|---|
| 1 | ✓ | | |
| 2 | ✓ | ✓ | ✓ |

| Group | Total Images | Generated Image Size (px) | Total Images Generated |
|---|---|---|---|
| 1 | 200 | 64 × 64 | 1000 |
| 2 | 200 | 32 × 32 | 1000 |
| 3 | 300 | 64 × 64 | 1000 |
| 4 | 300 | 32 × 32 | 1000 |

| Symbol Name | Original Instances | Generated Instances |
|---|---|---|
| Voltmeter | 66 | 600 |
| CT | 102 | 569 |
| Circuit Breaker | 298 | 610 |
| Delta | 158 | 690 |
| Transformer | 89 | 577 |
| Inductance | 35 | 687 |
| Generator | 309 | 689 |
| OCB | 55 | 580 |
| Relay | 68 | 489 |
| Switch | 425 | 468 |
| Motor | 79 | 560 |
| Air Circuit Breaker | 155 | 798 |
| Iron-Core Inductor | 39 | 789 |
| Draw-Out Fuse | 20 | 780 |
| Ground | 25 | 670 |
| Diode | 30 | 633 |
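The augmented sample counts in the per-class results table equal the original instances plus the generated instances in the table above (for example, Voltmeter: 66 + 600 = 666). The short sketch below reproduces that merge from the two count columns and, as an illustrative measure that the study itself may not report, computes the ratio between the largest and smallest class: 21.25 (Switch, 425, versus Draw-Out Fuse, 20) for the original data and about 1.79 (Generator, 998, versus Relay, 557) after augmentation. The helper names are hypothetical.

```python
# Per-class instance counts copied from the dataset-distribution table.
ORIGINAL = {
    "Voltmeter": 66, "CT": 102, "Circuit Breaker": 298, "Delta": 158,
    "Transformer": 89, "Inductance": 35, "Generator": 309, "OCB": 55,
    "Relay": 68, "Switch": 425, "Motor": 79, "Air Circuit Breaker": 155,
    "Iron-Core Inductor": 39, "Draw-Out Fuse": 20, "Ground": 25, "Diode": 30,
}
GENERATED = {
    "Voltmeter": 600, "CT": 569, "Circuit Breaker": 610, "Delta": 690,
    "Transformer": 577, "Inductance": 687, "Generator": 689, "OCB": 580,
    "Relay": 489, "Switch": 468, "Motor": 560, "Air Circuit Breaker": 798,
    "Iron-Core Inductor": 789, "Draw-Out Fuse": 780, "Ground": 670, "Diode": 633,
}


def augment_counts(original: dict[str, int], generated: dict[str, int]) -> dict[str, int]:
    """Augmented count per class = original instances + generated instances."""
    if original.keys() != generated.keys():
        raise ValueError("original and generated datasets must cover the same classes")
    return {name: original[name] + generated[name] for name in original}


def imbalance_ratio(counts: dict[str, int]) -> float:
    """Largest class count divided by smallest class count (1.0 means perfectly balanced)."""
    return max(counts.values()) / min(counts.values())


augmented = augment_counts(ORIGINAL, GENERATED)
print(augmented["Voltmeter"])                # 666
print(imbalance_ratio(ORIGINAL))             # 21.25
print(round(imbalance_ratio(augmented), 2))  # 1.79
```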

| Symbol | Original Samples | Original Precision | Original Recall | Original F1 Score | Augmented Samples | Augmented Precision | Augmented Recall | Augmented F1 Score |
|---|---|---|---|---|---|---|---|---|
| Voltmeter | 66 | 0.78 | 0.82 | 0.77 | 666 | 1.00 | 1.00 | 1.00 |
| CT | 102 | 0.92 | 1.00 | 0.95 | 671 | 1.00 | 0.98 | 0.98 |
| Circuit Breaker | 298 | 0.78 | 0.82 | 0.77 | 908 | 1.00 | 1.00 | 1.00 |
| Delta | 158 | 0.33 | 1.00 | 0.49 | 848 | 1.00 | 1.00 | 1.00 |
| Transformer | 89 | 0.90 | 0.77 | 0.82 | 666 | 1.00 | 1.00 | 1.00 |
| Inductance | 35 | 0.68 | 1.00 | 0.80 | 722 | 0.92 | 1.00 | 0.95 |
| Generator | 309 | 1.00 | 0.58 | 0.73 | 998 | 1.00 | 1.00 | 1.00 |
| OCB | 55 | 1.00 | 0.91 | 0.95 | 635 | 1.00 | 1.00 | 1.00 |
| Relay | 68 | 0.38 | 0.01 | 0.019 | 557 | 1.00 | 1.00 | 1.00 |
| Switch | 425 | 1.00 | 0.68 | 0.80 | 893 | 1.00 | 0.98 | 0.98 |
| Motor | 79 | 1.00 | 0.98 | 0.98 | 639 | 1.00 | 0.98 | 0.98 |
| Air Circuit Breaker | 155 | 0.56 | 0.98 | 0.70 | 953 | 1.00 | 1.00 | 1.00 |
| Iron-Core Inductor | 39 | 0.56 | 0.98 | 0.70 | 828 | 1.00 | 1.00 | 1.00 |
| Draw-Out Fuse | 20 | 0.33 | 1.00 | 0.49 | 800 | 1.00 | 1.00 | 1.00 |
| Ground | 25 | 0.00 | 0.00 | 0.00 | 695 | 0.92 | 1.00 | 0.95 |
| Diode | 30 | 0.92 | 1.00 | 0.95 | 663 | 1.00 | 0.98 | 0.98 |
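The F1 score in the table above is conventionally the harmonic mean of precision P and recall R, F1 = 2PR / (P + R), where precision and recall are computed from true positives (TP), false positives (FP) and false negatives (FN) as TP / (TP + FP) and TP / (TP + FN). A minimal sketch follows; the zero-division convention (returning 0, consistent with the 0.00 row for Ground in the original dataset) is an assumption. Because the tabulated precision and recall are rounded to two decimals, recomputing F1 from them may not reproduce every F1 entry exactly.

```python
def precision_recall(tp: int, fp: int, fn: int) -> tuple[float, float]:
    """Precision = TP / (TP + FP) and recall = TP / (TP + FN); 0.0 when a denominator is zero."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall; 0.0 when both are zero."""
    if not (0.0 <= precision <= 1.0 and 0.0 <= recall <= 1.0):
        raise ValueError("precision and recall must lie in [0, 1]")
    if precision + recall == 0.0:
        return 0.0
    return 2.0 * precision * recall / (precision + recall)


# Generator row, original dataset: P = 1.00, R = 0.58.
print(round(f1_score(1.00, 0.58), 2))  # 0.73
```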

| Dataset | Accuracy | False Detections | Missed Detections |
|---|---|---|---|
| Original | 89% | 3 | 4 |
| Augmented | 95% | 2 | 0 |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Bhanbhro, H.; Kwang Hooi, Y.; Kusakunniran, W.; Amur, Z.H. A Symbol Recognition System for Single-Line Diagrams Developed Using a Deep-Learning Approach. Appl. Sci. 2023, 13, 8816. https://doi.org/10.3390/app13158816
