M2GF: Multi-Scale and Multi-Directional Gabor Filters for Image Edge Detection

Abstract

1. Introduction

- A set of Gabor filters is used to capture rich, detailed edge features at different scales and in different channels.

- A novel fusion strategy is proposed to obtain more accurate edge features that are robust to noise.

- A new method for calculating the hysteresis thresholds is designed to obtain edge detection results with high accuracy and robustness to noise.

2. Related Work

2.1. The Conversion of RGB Space to CIE L*a*b* Space
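A minimal sketch of this conversion is given below. It assumes 8-bit sRGB input, the commonly tabulated sRGB-to-XYZ matrix and a D65 reference white; the text does not specify these choices, and the function name `srgb_to_lab` is hypothetical. Library routines such as scikit-image's `skimage.color.rgb2lab` implement a comparable standard transform.

```python
import numpy as np

# Assumed: sRGB primaries and a D65 reference white (commonly tabulated values).
SRGB_TO_XYZ = np.array([
    [0.4124564, 0.3575761, 0.1804375],
    [0.2126729, 0.7151522, 0.0721750],
    [0.0193339, 0.1191920, 0.9503041],
])
D65_WHITE = np.array([0.95047, 1.00000, 1.08883])


def srgb_to_lab(rgb):
    """Convert an (..., 3) array of 8-bit sRGB values to CIE L*a*b*."""
    c = np.asarray(rgb, dtype=float) / 255.0
    # Undo the sRGB gamma curve to obtain linear RGB.
    linear = np.where(c <= 0.04045, c / 12.92, ((c + 0.055) / 1.055) ** 2.4)
    # Linear RGB -> CIE XYZ, normalised by the reference white.
    t = (linear @ SRGB_TO_XYZ.T) / D65_WHITE
    # CIE companding function f(t), with its linear segment near zero.
    delta = 6.0 / 29.0
    f = np.where(t > delta ** 3, np.cbrt(t), t / (3.0 * delta ** 2) + 4.0 / 29.0)
    L = 116.0 * f[..., 1] - 16.0
    a = 500.0 * (f[..., 0] - f[..., 1])
    b = 200.0 * (f[..., 1] - f[..., 2])
    return np.stack([L, a, b], axis=-1)
```

For an H × W × 3 image, `srgb_to_lab(image)` returns an H × W × 3 array whose last axis holds the L*, a* and b* channels, each of which can then be filtered separately.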

2.2. The Multi-Scale and Multi-Directional Gabor Filter
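A minimal sketch of such a filter bank applied to a single channel follows. It assumes odd-symmetric (sine-phase) Gabor kernels with a wavelength of 4σ, kernels normalised to unit L1 norm, the maximum response magnitude over orientations, and the mean over scales. All of these choices, and the helper names, are hypothetical rather than the paper's definitions, and the fusion of Equation (8) is not reproduced.

```python
import numpy as np


def odd_gabor_kernel(sigma, theta, gamma=1.0, wavelength=None):
    """Odd-symmetric (sine) Gabor kernel with unit L1 norm (hypothetical defaults)."""
    if wavelength is None:
        wavelength = 4.0 * sigma  # assumed ratio, not taken from the paper
    radius = int(np.ceil(3.0 * sigma))
    y, x = np.mgrid[-radius:radius + 1, -radius:radius + 1].astype(float)
    # Rotate coordinates so the carrier oscillates along direction theta.
    x_rot = x * np.cos(theta) + y * np.sin(theta)
    y_rot = -x * np.sin(theta) + y * np.cos(theta)
    envelope = np.exp(-(x_rot ** 2 + (gamma * y_rot) ** 2) / (2.0 * sigma ** 2))
    kernel = envelope * np.sin(2.0 * np.pi * x_rot / wavelength)
    return kernel / np.abs(kernel).sum()


def filter_same(image, kernel):
    """Zero-padded 2-D correlation whose output has the same size as the input."""
    kh, kw = kernel.shape
    h, w = image.shape
    padded = np.pad(image, ((kh // 2, kh // 2), (kw // 2, kw // 2)))
    out = np.zeros((h, w), dtype=float)
    for dy in range(kh):
        for dx in range(kw):
            out += kernel[dy, dx] * padded[dy:dy + h, dx:dx + w]
    return out


def edge_strength_map(channel, sigmas=(1.0, 2.0), n_orientations=8):
    """Per-channel ESM: max |response| over orientations, averaged over scales."""
    channel = np.asarray(channel, dtype=float)
    # The odd kernel at theta + pi is the negative of the one at theta,
    # so orientations in [0, pi) cover every direction once |.| is taken.
    thetas = np.arange(n_orientations) * np.pi / n_orientations
    per_scale = []
    for sigma in sigmas:
        responses = [np.abs(filter_same(channel, odd_gabor_kernel(sigma, t)))
                     for t in thetas]
        per_scale.append(np.max(responses, axis=0))
    return np.mean(per_scale, axis=0)
```

Because each odd kernel sums to zero, flat regions give a near-zero response while step edges give a strong one; applying `edge_strength_map` to each L*a*b* channel yields one ESM per channel.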

3. The Proposed Edge Detection

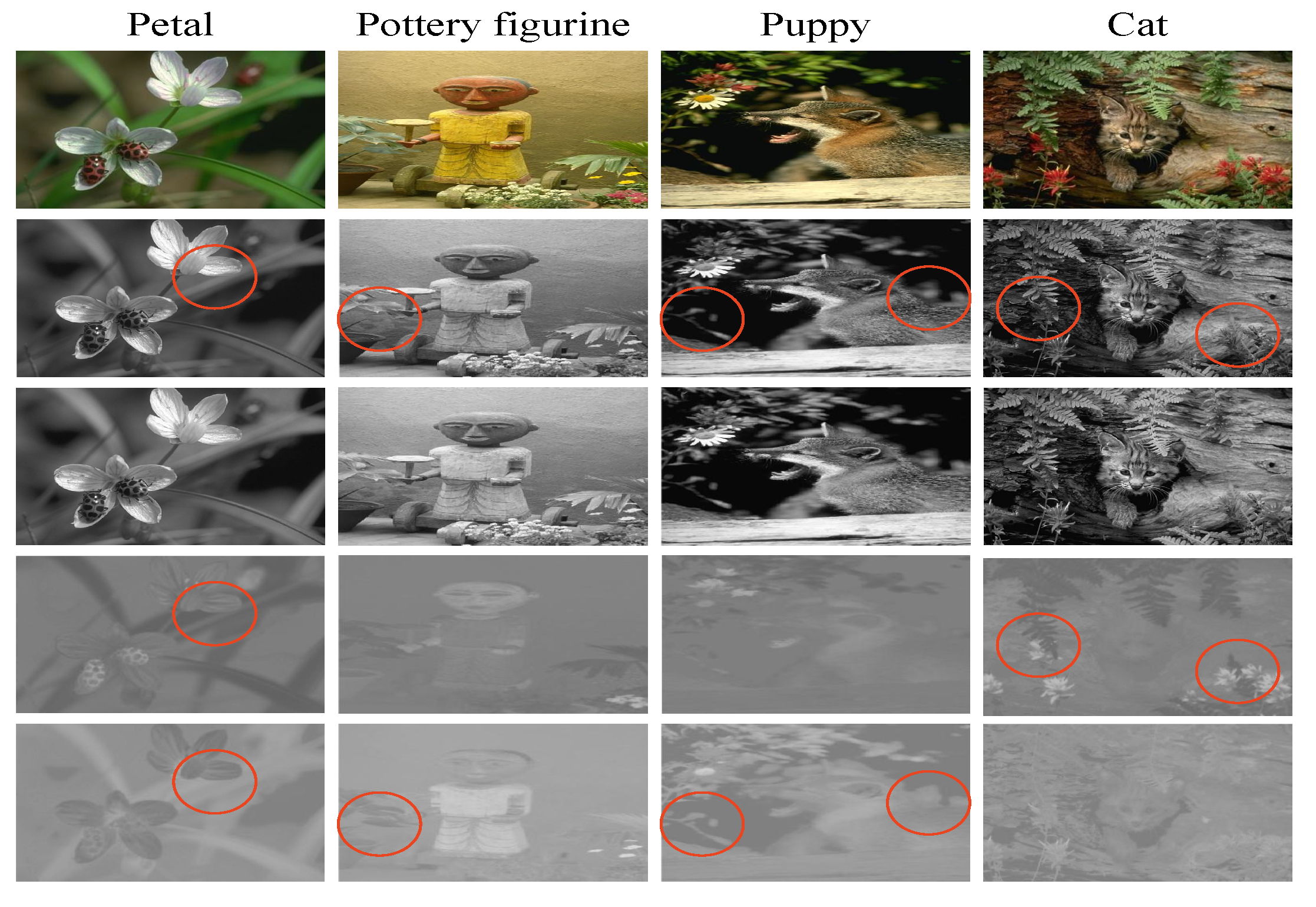

3.1. The ESMs of the Color Image and the Proposal of Fused Edge Features

3.2. Proposed Edge Detection

- (i) Convert the color image to the CIE L*a*b* space.

- (ii) Extract an edge strength map (ESM) from each channel with multi-scale, multi-directional Gabor filters, and compute the fused edge feature from these ESMs according to Equation (8).

- (iii) Calculate the global and local average changes of the image. The corresponding expressions involve the image dimensions and the fused feature of Equation (8); Q is a W × W matrix whose entries are all ones, and it is convolved with the feature map at a given stride. The human visual system is then simulated by applying contrast equalization to the improved fused ESM.

- (iv) Apply non-maximum suppression: for each pixel, the gradient modulus and orientation are used to determine whether the pixel is a local maximum.

- (v) Set the lower and upper thresholds from the histogram of the fused edge feature of the input image. Given the image dimensions and two coefficients, each threshold is the value of the pixel at the corresponding rank when the ESM values are sorted from smallest to largest, where the rank is rounded down by the floor operation "[·]".

- (vi) Make the hysteresis decision, which determines edge pixels in two stages. First, every pixel whose fused ESM value exceeds the upper threshold is recognized as an edge pixel. Second, a candidate pixel whose fused feature value lies between the lower and upper thresholds is regarded as an edge pixel if it is connected to strong edge pixels under the four- or eight-neighborhood criterion.
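Step (iv) resembles the non-maximum suppression of the Canny detector [36]. A minimal sketch follows, assuming the orientation map is given in radians as atan2 of the row-direction (pointing down) and column-direction derivatives and is quantised to four directions. How the paper obtains the orientation (for example, from the strongest Gabor direction or from the gradient of the fused ESM) is left open, and the function name is hypothetical.

```python
import numpy as np


def non_maximum_suppression(magnitude, orientation):
    """Keep pixels not smaller than both neighbours along the quantised orientation.

    orientation: radians, atan2(d/drow, d/dcol) with rows pointing down.
    Border pixels are set to zero.
    """
    mag = np.asarray(magnitude, dtype=float)
    angle = np.rad2deg(orientation) % 180.0  # a direction and its opposite are equivalent
    out = np.zeros_like(mag)
    h, w = mag.shape
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            a = angle[y, x]
            if a < 22.5 or a >= 157.5:   # horizontal
                n1, n2 = mag[y, x - 1], mag[y, x + 1]
            elif a < 67.5:               # 45 deg: down-right / up-left
                n1, n2 = mag[y + 1, x + 1], mag[y - 1, x - 1]
            elif a < 112.5:              # vertical
                n1, n2 = mag[y - 1, x], mag[y + 1, x]
            else:                        # 135 deg: down-left / up-right
                n1, n2 = mag[y + 1, x - 1], mag[y - 1, x + 1]
            if mag[y, x] >= n1 and mag[y, x] >= n2:
                out[y, x] = mag[y, x]
    return out
```

On a ridge running down a column with horizontal orientation, only the ridge column survives; ties are kept, so flat plateaus along the orientation are not thinned.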

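Steps (v) and (vi) can be sketched as follows, assuming that the two thresholds are the ESM values at ranks ⌊c · M · N⌋ of the ascending-sorted map, where M × N is the image size. The coefficient values `c_low = 0.7` and `c_high = 0.9` are hypothetical placeholders, and the sketch grows edges transitively through connected candidate pixels, as in Canny-style hysteresis; the paper's exact connectivity rule may differ.

```python
import numpy as np
from collections import deque


def rank_thresholds(esm, c_low=0.7, c_high=0.9):
    """Thresholds read off the ascending-sorted ESM at ranks floor(c * M * N).

    c_low and c_high are hypothetical placeholder coefficients.
    """
    values = np.sort(np.asarray(esm, dtype=float), axis=None)  # flattened, ascending
    n = values.size  # M * N pixels

    def value_at(c):
        k = min(max(int(np.floor(c * n)), 1), n)  # 1-based rank, clamped to [1, n]
        return float(values[k - 1])

    return value_at(c_low), value_at(c_high)


def hysteresis(esm, t_low, t_high, connectivity=8):
    """Two-stage hysteresis decision on a fused edge-strength map."""
    esm = np.asarray(esm, dtype=float)
    strong = esm > t_high    # stage 1: accepted outright
    candidate = esm >= t_low  # stage 2: accepted only if connected to an edge
    if connectivity == 8:
        offsets = [(dy, dx) for dy in (-1, 0, 1) for dx in (-1, 0, 1) if (dy, dx) != (0, 0)]
    else:
        offsets = [(-1, 0), (1, 0), (0, -1), (0, 1)]
    edges = strong.copy()
    h, w = esm.shape
    # Breadth-first growth from strong pixels through connected candidates.
    queue = deque(zip(*np.nonzero(strong)))
    while queue:
        y, x = queue.popleft()
        for dy, dx in offsets:
            ny, nx = y + dy, x + dx
            if 0 <= ny < h and 0 <= nx < w and candidate[ny, nx] and not edges[ny, nx]:
                edges[ny, nx] = True
                queue.append((ny, nx))
    return edges
```

A typical call is `t_low, t_high = rank_thresholds(esm)` followed by `hysteresis(esm, t_low, t_high, connectivity=8)`; switching `connectivity` to 4 drops diagonal links.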
4. Experiments

4.1. The Superiority of CIE L*a*b* Color Space

4.2. PR Curve Assessment
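A simplified sketch of how points on a precision–recall curve can be produced by sweeping a threshold over an ESM is shown below. For brevity it matches detected and ground-truth edge pixels exactly; the standard BSDS benchmark instead matches pixels within a small distance tolerance, so this sketch will not reproduce benchmark scores. The function names and the convention of precision 1 when nothing is detected are assumptions.

```python
import numpy as np


def pr_points(esm, ground_truth, thresholds):
    """(precision, recall) of the thresholded ESM at each threshold, exact pixel matching."""
    esm = np.asarray(esm, dtype=float)
    gt = np.asarray(ground_truth, dtype=bool)
    n_gt = int(gt.sum())
    points = []
    for t in thresholds:
        detected = esm >= t
        n_det = int(detected.sum())
        tp = int(np.logical_and(detected, gt).sum())
        precision = tp / n_det if n_det else 1.0  # nothing detected: no false positives
        recall = tp / n_gt if n_gt else 1.0
        points.append((precision, recall))
    return points


def best_f_measure(points):
    """Largest F-measure F = 2PR / (P + R) over the sampled thresholds."""
    return max(2 * p * r / (p + r) if p + r > 0 else 0.0 for p, r in points)
```

Aggregating the true-positive and detection counts over a whole dataset before taking the maximum gives a single dataset-level best F-measure.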

4.3. FOM Index Assessment
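Pratt's figure of merit [54] is commonly defined as FOM = (1 / max(N_I, N_D)) · Σ_{i=1..N_D} 1 / (1 + α d_i²), where N_I and N_D are the numbers of ideal and detected edge pixels and d_i is the distance from the i-th detected pixel to the nearest ideal edge pixel. The sketch below uses the customary scaling constant α = 1/9 (the value used in the experiments is not stated here) and a brute-force nearest-neighbour search; the function name and the empty-map conventions are hypothetical.

```python
import numpy as np


def pratt_fom(detected, ideal, alpha=1.0 / 9.0):
    """Pratt's figure of merit between a detected and an ideal binary edge map."""
    det = np.argwhere(np.asarray(detected, dtype=bool))
    ref = np.argwhere(np.asarray(ideal, dtype=bool))
    n_d, n_i = len(det), len(ref)
    if max(n_d, n_i) == 0:
        return 1.0  # assumed convention: two empty maps agree perfectly
    if n_d == 0 or n_i == 0:
        return 0.0
    # Squared distance from every detected pixel to its nearest ideal pixel.
    diff = det[:, None, :] - ref[None, :, :]
    d2 = (diff ** 2).sum(axis=-1).min(axis=1)
    return float(np.sum(1.0 / (1.0 + alpha * d2)) / max(n_d, n_i))
```

A perfect detection scores 1, and an edge displaced by one pixel scores 1 / (1 + 1/9) = 0.9; for large maps a distance transform would replace the pairwise search.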

4.4. Experiments on the BSDS and NYUD Datasets

5. Conclusions and Future Work

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Jing, J.; Liu, S.; Wang, G.; Zhang, W.; Sun, C. Recent advances on image edge detection: A comprehensive review. Neurocomputing 2022, 503, 259–271.

2. Torre, V.; Poggio, T.A. On Edge Detection. IEEE Trans. Pattern Anal. Mach. Intell. 1986, 8, 147–163.

3. Upla, K.P.; Joshi, M.V.; Gajjar, P.P. An Edge Preserving Multiresolution Fusion: Use of Contourlet Transform and MRF Prior. IEEE Trans. Geosci. Remote Sens. 2015, 53, 3210–3220.

4. Shui, P.; Zhang, W. Noise-robust edge detector combining isotropic and anisotropic Gaussian kernels. Pattern Recognit. 2012, 45, 806–820.

5. Zhang, W.; Zhao, Y.; Breckon, T.P.; Chen, L. Noise robust image edge detection based upon the automatic anisotropic Gaussian kernels. Pattern Recognit. 2017, 63, 193–205.

6. Li, Y.; Bi, Y.; Zhang, W.; Sun, C. Multi-Scale Anisotropic Gaussian Kernels for Image Edge Detection. IEEE Access 2020, 8, 1803–1812.

7. Jing, J.; Liu, S.; Liu, C.; Gao, T.; Zhang, W.; Sun, C. A novel decision mechanism for image edge detection. In Proceedings of the International Conference on Intelligent Computing, Melbourne, Australia, 25–27 June 2021; Springer: Cham, Switzerland, 2021; pp. 274–287.

8. Shi, J.; Malik, J. Normalized cuts and image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 888–905.

9. Comaniciu, D.; Meer, P. Mean shift: A robust approach toward feature space analysis. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 24, 603–619.

10. Ramirez Rivera, A.; Murshed, M.; Kim, J.; Chae, O. Background modeling through statistical edge-segment distributions. IEEE Trans. Circuits Syst. Video Technol. 2013, 23, 1375–1387.

11. Manjunath, B.; Ma, W. Texture features for browsing and retrieval of image data. IEEE Trans. Pattern Anal. Mach. Intell. 1996, 18, 837–842.

12. Cheng, M.; Mitra, N.J.; Huang, X.; Torr, P.H.S.; Hu, S. Global contrast based salient region detection. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 569–582.

13. Zhang, S.; Tian, Q.; Lu, K.; Huang, Q.; Gao, W. Edge-SIFT: Discriminative binary descriptor for scalable partial-duplicate mobile search. IEEE Trans. Image Process. 2013, 22, 2889–2902.

14. Shui, P.; Zhang, W. Corner Detection and Classification Using Anisotropic Directional Derivative Representations. IEEE Trans. Image Process. 2013, 22, 3204–3218.

15. Zhang, W.; Sun, C.; Breckon, T.; Alshammari, N. Discrete curvature representations for noise robust image corner detection. IEEE Trans. Image Process. 2019, 28, 4444–4459.

16. Zhang, W.; Wang, F.; Zhu, L.; Zhou, Z. Corner detection using Gabor filters. IET Image Process. 2014, 8, 639–646.

17. Zhang, W.; Shui, P. Contour-based corner detection via angle difference of principal directions of anisotropic Gaussian directional derivatives. Pattern Recognit. 2015, 48, 2785–2797.

18. Zhang, W.; Sun, C.; Gao, Y. Image intensity variation information for interest point detection. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 9883–9894.

19. Lupyan, G. Object knowledge changes visual appearance: Semantic effects on color afterimages. Acta Psychol. 2015, 161, 117–130.

20. Wang, F.; Shui, P. Noise-robust color edge detector using gradient matrix and anisotropic Gaussian directional derivative matrix. Pattern Recognit. 2016, 52, 346–357.

21. Masia, B.; Wetzstein, G.; Didyk, P.; Gutierrez, D. Special Section on Advanced Displays: A survey on computational displays: Pushing the boundaries of optics, computation, and perception. Comput. Graph. 2013, 37, 1012–1038.

22. Nevatia, R. A Color Edge Detector and Its Use in Scene Segmentation. IEEE Trans. Syst. Man Cybern. 1977, 7, 820–826.

23. Ruzon, M.; Tomasi, C. Edge, junction, and corner detection using color distributions. IEEE Trans. Pattern Anal. Mach. Intell. 2001, 23, 1281–1295.

24. Topal, C.; Akinlar, C. Edge Drawing: A combined real-time edge and segment detector. J. Vis. Commun. Image Represent. 2012, 23, 862–872.

25. Khotanzad, A.; Chen, J.Y. Unsupervised segmentation of textured images by edge detection in multidimensional feature. IEEE Trans. Pattern Anal. Mach. Intell. 1989, 11, 414–421.

26. Evans, A.; Liu, X. A morphological gradient approach to color edge detection. IEEE Trans. Image Process. 2006, 15, 1454–1463.

27. Robinson, G.S. Color Edge Detection. Opt. Eng. 1977, 16, 479–484.

28. Tsang, W.; Tsang, P. Suppression of False Edge Detection Due to Specular Reflection in Color Images. Pattern Recognit. Lett. 1997, 18, 165–171.

29. Scharcanski, J.; Venetsanopoulos, A. Edge detection of color images using directional operators. IEEE Trans. Circuits Syst. Video Technol. 1997, 7, 397–401.

30. Tai, S.; Yang, S. A fast method for image noise estimation using Laplacian operator and adaptive edge detection. In Proceedings of the 2008 3rd International Symposium on Communications, Control and Signal Processing, St Julians, Malta, 12–14 March 2008; pp. 1077–1081.

31. Deng, C.; Ma, W.; Yin, Y. An edge detection approach of image fusion based on improved Sobel operator. In Proceedings of the 2011 4th International Congress on Image and Signal Processing, Shanghai, China, 15–17 October 2011; Volume 3, pp. 1189–1193.

32. Jing, J.; Gao, T.; Zhang, W.; Gao, Y.; Sun, C. Image feature information extraction for interest point detection: A comprehensive review. arXiv 2021, arXiv:2106.07929.

33. Zhang, W.; Sun, C. Corner detection using multi-directional structure tensor with multiple scales. Int. J. Comput. Vis. 2020, 128, 438–459.

34. Zhang, W.; Sun, C. Corner detection using second-order generalized Gaussian directional derivative representations. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 1213–1224.

35. Akinlar, C.; Topal, C. ColorED: Color edge and segment detection by Edge Drawing (ED). J. Vis. Commun. Image Represent. 2017, 44, 82–94.

36. Canny, J. A Computational Approach to Edge Detection. IEEE Trans. Pattern Anal. Mach. Intell. 1986, 8, 679–698.

37. Kanade, T. Image Understanding Research at CMU. In Proceedings of the Image Understanding Workshop, Los Angeles, CA, USA, 23–25 February 1987; Volume 2, pp. 32–40.

38. Daugman, J.G. Uncertainty relation for resolution in space, spatial frequency, and orientation optimized by two-dimensional visual cortical filters. J. Opt. Soc. Am. A-Opt. Image Sci. Vis. 1985, 2, 1160–1169.

39. Hill, P.; Achim, A.; Al-Mualla, M.E.; Bull, D. Contrast Sensitivity of the Wavelet, Dual Tree Complex Wavelet, Curvelet, and Steerable Pyramid Transforms. IEEE Trans. Image Process. 2016, 25, 2739–2751.

40. Ni, Z.; Zeng, H.; Ma, L.; Hou, J.; Chen, J.; Ma, K. A Gabor Feature-Based Quality Assessment Model for the Screen Content Images. IEEE Trans. Image Process. 2018, 27, 4516–4528.

41. Liu, C.; Wechsler, H. Gabor feature based classification using the enhanced Fisher linear discriminant model for face recognition. IEEE Trans. Image Process. 2002, 11, 467–476.

42. Xie, S.; Tu, Z. Holistically-Nested Edge Detection. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 1395–1403.

43. Liu, Y.; Cheng, M.; Hu, X.; Bian, J.; Zhang, L.; Bai, X.; Tang, J. Richer Convolutional Features for Edge Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 41, 1939–1946.

44. Jing, J.; Liu, S.; Li, P.; Zhang, L. The fabric defect detection based on CIE L*a*b* color space using 2-D Gabor filter. J. Text. Inst. 2016, 107, 1305–1313.

45. Lei, T.; Zhang, Y.; Wang, Y.; Liu, S.; Guo, Z. A conditionally invariant mathematical morphological framework for color images. Inf. Sci. 2017, 387, 34–52.

46. Liang, J.; Zhou, J.; Bai, X.; Qian, Y. Salient object detection in hyperspectral imagery. In Proceedings of the International Conference on Image Processing, Melbourne, Australia, 15–18 September 2013; pp. 2393–2397.

47. Alvarez, L.; Lions, P.L.; Morel, J.M. Image selective smoothing and edge detection by nonlinear diffusion. II. SIAM J. Numer. Anal. 1992, 29, 845–866.

48. Arbeláez, P.; Maire, M.; Fowlkes, C.; Malik, J. Contour detection and hierarchical image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 33, 898–916.

49. Silberman, N.; Hoiem, D.; Kohli, P.; Fergus, R. Indoor segmentation and support inference from RGBD images. In Computer Vision—ECCV 2012: 12th European Conference on Computer Vision, Florence, Italy, 7–13 October 2012; Proceedings, Part V; Springer: Berlin/Heidelberg, Germany, 2012; pp. 746–760.

50. Coleman, S.A.; Scotney, B.W.; Suganthan, S. Edge detecting for range data using Laplacian operators. IEEE Trans. Image Process. 2010, 19, 2814–2824.

51. Dollár, P.; Zitnick, C.L. Fast edge detection using structured forests. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 37, 1558–1570.

52. Bae, Y.; Lee, W.H.; Choi, Y.; Jeon, Y.W.; Ra, J.B. Automatic road extraction from remote sensing images based on a normalized second derivative map. IEEE Geosci. Remote Sens. Lett. 2015, 12, 1858–1862.

53. Martin, D.; Fowlkes, C.; Malik, J. Learning to detect natural image boundaries using local brightness, color, and texture cues. IEEE Trans. Pattern Anal. Mach. Intell. 2004, 26, 530–549.

54. Pratt, W.K. Digital Image Processing; John Wiley and Sons, Incorporated: Hoboken, NJ, USA, 1978.

| Methods | ODS | OIS | AP | R50 |
|---|---|---|---|---|
| Color Canny [37] | 0.563 | 0.576 | 0.570 | 0.578 |
| Laplacian [30] | 0.598 | 0.615 | 0.583 | 0.725 |
| I-Sobel [31] | 0.581 | 0.587 | 0.586 | 0.693 |
| CMG [26] | 0.611 | 0.636 | 0.607 | 0.751 |
| ColorED [35] | 0.617 | 0.629 | 0.618 | 0.771 |
| AGDD [20] | 0.634 | 0.651 | 0.632 | 0.797 |
| Proposed | 0.672 | 0.695 | 0.652 | 0.828 |

| Methods | ODS | OIS | AP | R50 |
|---|---|---|---|---|
| CMG [26] | 0.651 | 0.661 | 0.637 | 0.773 |
| ColorED [35] | 0.673 | 0.667 | 0.653 | 0.794 |
| AGDD [20] | 0.677 | 0.716 | 0.629 | 0.804 |
| HED [42] | 0.741 | 0.757 | 0.749 | 0.900 |
| RCF [43] | 0.765 | 0.780 | 0.760 | 0.888 |
| Proposed | 0.685 | 0.708 | 0.689 | 0.843 |

| Methods | Plane | Elephant | Tree | Flower |
|---|---|---|---|---|
| Color Canny [37] | 0.6675 | 0.6726 | 0.7731 | 0.7886 |
| CMG [26] | 0.7223 | 0.7434 | 0.7745 | 0.7678 |
| Laplacian method [30] | 0.6127 | 0.6439 | 0.6328 | 0.6456 |
| I-Sobel method [31] | 0.6517 | 0.6219 | 0.6473 | 0.6756 |
| ColorED [35] | 0.7713 | 0.7616 | 0.7925 | 0.8053 |
| AGDD [20] | 0.7753 | 0.7727 | 0.8006 | 0.8124 |
| Proposed | 0.7837 | 0.7842 | 0.8075 | 0.8168 |

| Methods | Plane | Elephant | Tree | Flower |
|---|---|---|---|---|
| Color Canny [37] | 2.252 | 2.732 | 2.029 | 2.067 |
| CMG [26] | 2.297 | 2.721 | 2.112 | 2.189 |
| Laplacian method [30] | 2.437 | 2.588 | 2.187 | 2.186 |
| I-Sobel method [31] | 2.271 | 2.734 | 2.125 | 2.154 |
| ColorED [35] | 6.810 | 7.078 | 6.936 | 6.859 |
| AGDD [20] | 5.287 | 5.968 | 5.853 | 5.684 |
| Proposed | 6.219 | 6.446 | 6.329 | 6.283 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, Y.; Bi, Y.; Zhang, W.; Ren, J.; Chen, J. M2GF: Multi-Scale and Multi-Directional Gabor Filters for Image Edge Detection. Appl. Sci. 2023, 13, 9409. https://doi.org/10.3390/app13169409

Li Y, Bi Y, Zhang W, Ren J, Chen J. M2GF: Multi-Scale and Multi-Directional Gabor Filters for Image Edge Detection. Applied Sciences. 2023; 13(16):9409. https://doi.org/10.3390/app13169409

Chicago/Turabian Style: Li, Yunhong, Yuandong Bi, Weichuan Zhang, Jie Ren, and Jinni Chen. 2023. "M2GF: Multi-Scale and Multi-Directional Gabor Filters for Image Edge Detection" Applied Sciences 13, no. 16: 9409. https://doi.org/10.3390/app13169409

APA Style: Li, Y., Bi, Y., Zhang, W., Ren, J., & Chen, J. (2023). M2GF: Multi-Scale and Multi-Directional Gabor Filters for Image Edge Detection. Applied Sciences, 13(16), 9409. https://doi.org/10.3390/app13169409