A Novel Autonomous Landing Method for Flying–Walking Power Line Inspection Robots Based on Prior Structure Data

Abstract

1. Introduction

2. Related Works

2.1. Power Line Detection

2.2. Robot Landing Method

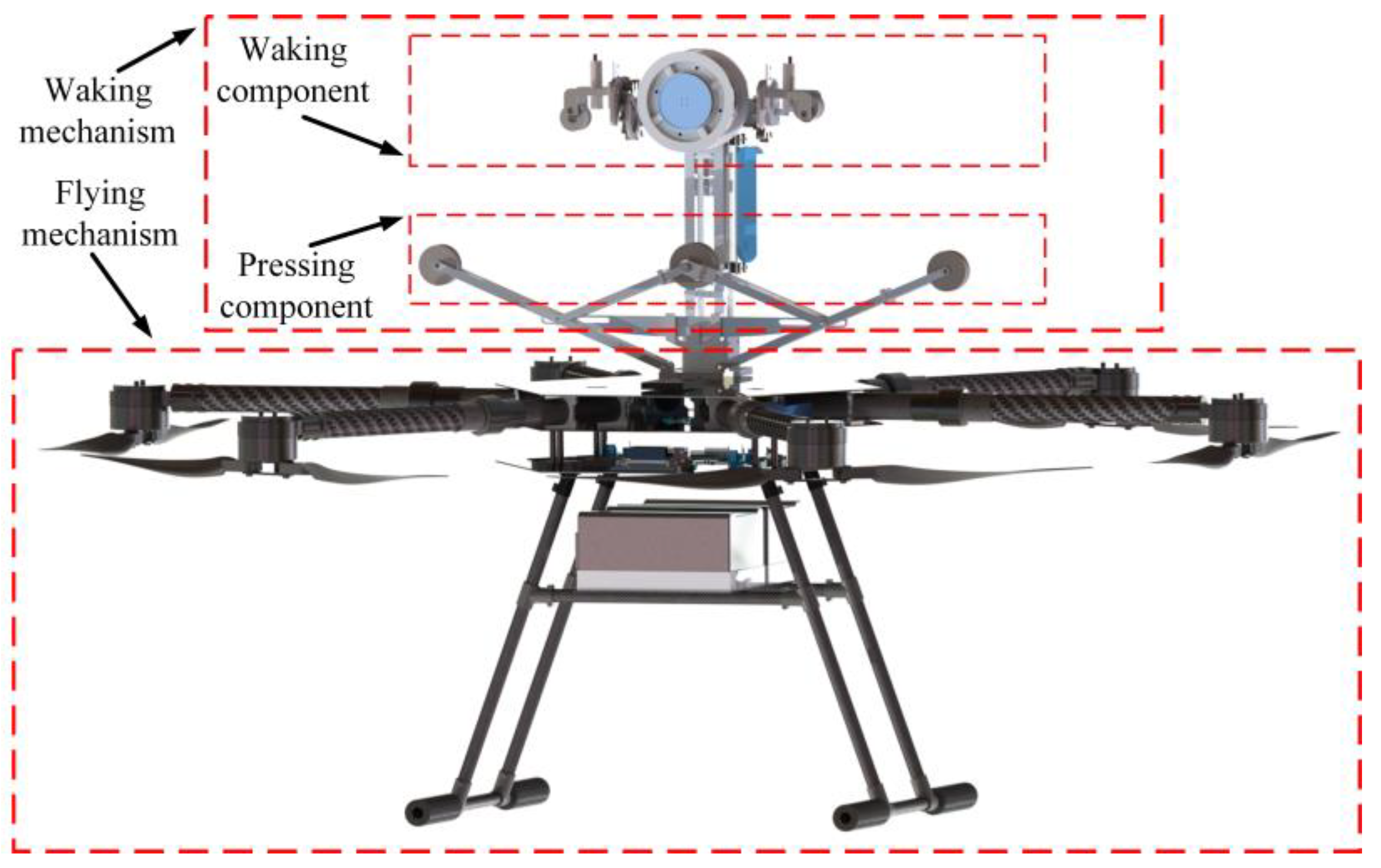

3. System Overview

4. Autonomous Landing Method

4.1. Power Line Detection and Location Estimation

4.1.1. OPTL Detection

4.1.2. Location Estimation

4.2. SLA Determination

4.2.1. Prior Structure Data

- (1) Before the FPLIR inspection, the OPTL information is stored in the database of the onboard computer.

- (2) The OPTL information is associated with safe slope data in the database.

- (3) When the FPLIR inspection starts, the slope of the power line is calculated using the algorithm provided in Section 4.2.2.
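The three steps above can be sketched as a small lookup. This is a minimal sketch, not the authors' implementation. The record layout (`SpanRecord`), the per-span `safe_slope_deg` threshold and the "safe if the absolute slope does not exceed the threshold" rule are all hypothetical. The chord slope between the two attachment points is only a coarse stand-in for the slope algorithm of Section 4.2.2, because it ignores conductor sag.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class SpanRecord:
    """Hypothetical database entry for one span of the OPTL (step 1)."""
    span_id: str
    span_m: float               # horizontal distance between the two towers
    height_difference_m: float  # elevation difference between the attachment points
    safe_slope_deg: float       # hypothetical safe-slope threshold for this span (step 2)


def chord_slope_deg(span_m: float, height_difference_m: float) -> float:
    """Inclination of the straight chord between the two attachment points.

    Ignores conductor sag, so it is only a coarse stand-in for the slope
    computed by the algorithm in Section 4.2.2.
    """
    return math.degrees(math.atan2(height_difference_m, span_m))


def is_safe_to_land(db: dict, span_id: str, measured_slope_deg: float) -> bool:
    """Step 3 (assumed rule): compare the slope obtained during inspection with the stored threshold."""
    record = db[span_id]
    return abs(measured_slope_deg) <= record.safe_slope_deg


if __name__ == "__main__":
    # Illustrative numbers only, not taken from the experiments.
    db = {"span-01": SpanRecord("span-01", span_m=300.0, height_difference_m=15.0, safe_slope_deg=10.0)}
    rec = db["span-01"]
    slope = chord_slope_deg(rec.span_m, rec.height_difference_m)
    print(f"chord slope = {slope:.2f} deg, safe to land: {is_safe_to_land(db, rec.span_id, slope)}")
```

Keeping the threshold in the stored record, rather than as a global constant, mirrors step (2), where each OPTL entry carries its own safe slope data.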

4.2.2. Procedure of SLA Determination

4.3. Trajectory Generation and Tracking

4.3.1. Dynamic Model of the FPLIR

4.3.2. Landing Trajectory Generation

4.3.3. Model Predictive Control

5. Experimental Validation

5.1. Experiments in the Simulated Environment

5.2. Experiments in the Real Environment

5.2.1. Experimental Platform

5.2.2. Landing Line Experiments

6. Discussion

6.1. Segmentation Network Choice

6.2. Performance Analysis of Binocular Depth Measurement

6.3. Limitations

- (1) Accurately estimating the position of the FPLIR relative to the power line is crucial for autonomous landing on the line. Because the power line has a small diameter, the binocular camera cannot measure its depth when the FPLIR is far away from it. Accurate relative position estimation therefore requires a small initial distance between the FPLIR and the power line, which shortens the time available for attitude adjustment while the FPLIR lands on the line and increases the risk of failure.

- (2) MPC relies on a well-described system model to optimize system performance and ensure constraint satisfaction, so an accurate dynamics model is critical to the success of the control system. Because the FPLIR is not a standard multi-rotor UAV, the dynamics model does not describe it exactly. If the FPLIR flies too fast, this model mismatch results in poor trajectory tracking.
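Limitation (1) follows from standard pinhole stereo geometry, sketched below under stated assumptions. With focal length f (in pixels), baseline B and disparity d, depth is Z = f·B/d, so a disparity error δd produces a depth error of roughly δZ ≈ Z²·δd/(f·B), while a conductor of diameter D appears only f·D/Z pixels wide. The focal length, baseline and conductor diameter below are hypothetical values, not the parameters of the FPLIR's camera.

```python
def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Pinhole stereo depth: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def depth_error(focal_px: float, baseline_m: float, depth_m: float, disparity_err_px: float = 1.0) -> float:
    """First-order depth error caused by a disparity error: dZ = Z^2 / (f * B) * dd."""
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_err_px


def conductor_width_px(focal_px: float, diameter_m: float, depth_m: float) -> float:
    """Apparent image width of a conductor of diameter D at range Z: w = f * D / Z."""
    return focal_px * diameter_m / depth_m


if __name__ == "__main__":
    f_px, baseline_m = 640.0, 0.12  # hypothetical camera parameters
    diameter_m = 0.015              # hypothetical conductor diameter
    for z in (1.0, 3.0, 6.0, 10.0):
        print(f"Z = {z:4.1f} m  dZ(1 px) = {depth_error(f_px, baseline_m, z):.3f} m  "
              f"width = {conductor_width_px(f_px, diameter_m, z):.2f} px")
```

With these made-up parameters, a one-pixel disparity error already exceeds 1 m of depth error at a range of 10 m, where the conductor is less than one pixel wide. The quadratic growth of δZ with Z, combined with the shrinking image width, illustrates why depth measurement of a thin line fails at long range.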

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Jalil, B.; Leone, G.R.; Martinelli, M.; Moroni, D.; Pascali, M.A.; Berton, A. Fault detection in power equipment via an unmanned aerial system using multi modal data. Sensors 2019, 19, 3014.

- Menendez, O.; Cheein, F.A.A.; Perez, M.; Kouro, S. Robotics in power systems: Enabling a more reliable and safe grid. IEEE Ind. Electron. Mag. 2017, 11, 22–34.

- Disyadej, T.; Promjan, J.; Muneesawang, P.; Poochinapan, K.; Grzybowski, S. Application in O&M Practices of Overhead Power Line Robotics. In Proceedings of the 2019 IEEE PES GTD Grand International Conference and Exposition Asia (GTD Asia), Bangkok, Thailand, 19–23 March 2019; pp. 347–351.

- Wu, G.; Cao, H.; Xu, X.; Xiao, H.; Li, S.; Xu, Q.; Liu, B.; Wang, Q.; Wang, Z.; Ma, Y. Design and application of inspection system in a self-governing mobile robot system for high voltage transmission line inspection. In Proceedings of the 2009 Asia-Pacific Power and Energy Engineering Conference, Wuhan, China, 27–31 March 2009; pp. 1–4.

- Wale, P.B. Maintenance of transmission line by using robot. In Proceedings of the 2016 International Conference on Automatic Control and Dynamic Optimization Techniques (ICACDOT), Pune, India, 9–10 September 2016; pp. 538–542.

- Xie, X.; Liu, Z.; Xu, C.; Zhang, Y. A multiple sensors platform method for power line inspection based on a large unmanned helicopter. Sensors 2017, 17, 1222.

- Debenest, P.; Guarnieri, M.; Takita, K.; Fukushima, E.F.; Hirose, S.; Tamura, K.; Kimura, A.; Kubokawa, H.; Iwama, N.; Shiga, F. Expliner-Robot for inspection of transmission lines. In Proceedings of the 2008 IEEE International Conference on Robotics and Automation, Pasadena, CA, USA, 19–23 May 2008; pp. 3978–3984.

- Elizondo, D.; Gentile, T.; Candia, H.; Bell, G. Overview of robotic applications for energized transmission line work—Technologies, field projects and future developments. In Proceedings of the 2010 1st International Conference on Applied Robotics for the Power Industry, Montreal, QC, Canada, 5–7 October 2010; pp. 1–7.

- Luque-Vega, L.F.; Castillo-Toledo, B.; Loukianov, A.; Gonzalez-Jimenez, L.E. Power line inspection via an unmanned aerial system based on the quadrotor helicopter. In Proceedings of the MELECON 2014–2014 17th IEEE Mediterranean Electrotechnical Conference, Beirut, Lebanon, 13–16 April 2014; pp. 393–397.

- Hui, X.; Bian, J.; Yu, Y.; Zhao, X.; Tan, M. A novel autonomous navigation approach for UAV power line inspection. In Proceedings of the 2017 IEEE International Conference on Robotics and Biomimetics (ROBIO), Macau, Macao, 5–8 December 2017; pp. 634–639.

- Ahmed, M.F.; Mohanta, J.; Sanyal, A.; Yadav, P.S. Path Planning of Unmanned Aerial Systems for Visual Inspection of Power Transmission Lines and Towers. IETE J. Res. 2023, 1–21.

- Hamelin, P.; Miralles, F.; Lambert, G.; Lavoie, S.; Pouliot, N.; Montfrond, M.; Montambault, S. Discrete-time control of LineDrone: An assisted tracking and landing UAV for live power line inspection and maintenance. In Proceedings of the 2019 International Conference on Unmanned Aircraft Systems (ICUAS), Atlanta, GA, USA, 11–14 June 2019; pp. 292–298.

- Alhassan, A.B.; Zhang, X.; Shen, H.; Xu, H. Power transmission line inspection robots: A review, trends and challenges for future research. Int. J. Electr. Power Energy Syst. 2020, 118, 105862.

- Mirallès, F.; Hamelin, P.; Lambert, G.; Lavoie, S.; Pouliot, N.; Montfrond, M.; Montambault, S. LineDrone Technology: Landing an unmanned aerial vehicle on a power line. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, QLD, Australia, 21–25 May 2018; pp. 6545–6552.

- Ramon-Soria, P.; Gomez-Tamm, A.E.; Garcia-Rubiales, F.J.; Arrue, B.C.; Ollero, A. Autonomous landing on pipes using soft gripper for inspection and maintenance in outdoor environments. In Proceedings of the 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Macau, China, 3–8 November 2019; pp. 5832–5839.

- Hang, K.; Lyu, X.; Song, H.; Stork, J.A.; Dollar, A.M.; Kragic, D.; Zhang, F. Perching and resting—A paradigm for UAV maneuvering with modularized landing gears. Sci. Robot. 2019, 4, eaau6637.

- Thomas, J.; Loianno, G.; Daniilidis, K.; Kumar, V. Visual servoing of quadrotors for perching by hanging from cylindrical objects. IEEE Robot. Autom. Lett. 2015, 1, 57–64.

- Paneque, J.L.; Martinez-de-Dios, J.R.; Ollero, A.; Hanover, D.; Sun, S.; Romero, A.; Scaramuzza, D. Perception-Aware Perching on Powerlines with Multirotors. IEEE Robot. Autom. Lett. 2022, 7, 3077–3084.

- Schofield, O.B.; Iversen, N.; Ebeid, E. Autonomous power line detection and tracking system using UAVs. Microprocess. Microsyst. 2022, 94, 104609.

- Yan, G.; Li, C.; Zhou, G.; Zhang, W.; Li, X. Automatic extraction of power lines from aerial images. IEEE Geosci. Remote Sens. Lett. 2007, 4, 387–391.

- Li, Z.; Liu, Y.; Hayward, R.; Zhang, J.; Cai, J. Knowledge-based power line detection for UAV surveillance and inspection systems. In Proceedings of the 2008 23rd International Conference Image and Vision Computing New Zealand, Christchurch, New Zealand, 26–28 November 2008; pp. 1–6.

- Yang, T.W.; Yin, H.; Ruan, Q.Q.; Da Han, J.; Qi, J.T.; Yong, Q.; Wang, Z.T.; Sun, Z.Q. Overhead power line detection from UAV video images. In Proceedings of the 2012 19th International Conference on Mechatronics and Machine Vision in Practice (M2VIP), Auckland, New Zealand, 28–30 November 2012; pp. 74–79.

- Cerón, A.; Prieto, F. Power line detection using a circle based search with UAV images. In Proceedings of the 2014 International Conference on Unmanned Aircraft Systems (ICUAS), Orlando, FL, USA, 27–30 May 2014; pp. 632–639.

- Song, B.; Li, X. Power line detection from optical images. Neurocomputing 2014, 129, 350–361.

- Xie, S.; Tu, Z. Holistically-nested edge detection. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1395–1403.

- Shen, W.; Wang, X.; Wang, Y.; Bai, X.; Zhang, Z. Deepcontour: A deep convolutional feature learned by positive-sharing loss for contour detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3982–3991.

- Bertasius, G.; Shi, J.; Torresani, L. Deepedge: A multi-scale bifurcated deep network for top-down contour detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 4380–4389.

- Zhang, H.; Yang, W.; Yu, H.; Zhang, H.; Xia, G.-S. Detecting power lines in UAV images with convolutional features and structured constraints. Remote Sens. 2019, 11, 1342.

- Madaan, R.; Maturana, D.; Scherer, S. Wire detection using synthetic data and dilated convolutional networks for unmanned aerial vehicles. In Proceedings of the 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 24–28 September 2017; pp. 3487–3494.

- Badrinarayanan, V.; Kendall, A.; Cipolla, R. Segnet: A deep convolutional encoder-decoder architecture for image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 2481–2495.

- Chen, L.-C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. Deeplab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected crfs. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 834–848.

- Yu, C.; Wang, J.; Peng, C.; Gao, C.; Yu, G.; Sang, N. Bisenet: Bilateral segmentation network for real-time semantic segmentation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 325–341.

- Yu, C.; Gao, C.; Wang, J.; Yu, G.; Shen, C.; Sang, N. Bisenet v2: Bilateral network with guided aggregation for real-time semantic segmentation. Int. J. Comput. Vis. 2021, 129, 3051–3068.

- Fan, M.; Lai, S.; Huang, J.; Wei, X.; Chai, Z.; Luo, J.; Wei, X. Rethinking BiSeNet for real-time semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 9716–9725.

- Erginer, B.; Altug, E. Modeling and PD control of a quadrotor VTOL vehicle. In Proceedings of the 2007 IEEE Intelligent Vehicles Symposium, Istanbul, Turkey, 13–15 June 2007; pp. 894–899.

- Mellinger, D.; Michael, N.; Kumar, V. Trajectory generation and control for precise aggressive maneuvers with quadrotors. Int. J. Robot. Res. 2012, 31, 664–674.

- Thomas, J.; Pope, M.; Loianno, G.; Hawkes, E.W.; Estrada, M.A.; Jiang, H.; Cutkosky, M.R.; Kumar, V. Aggressive flight with quadrotors for perching on inclined surfaces. J. Mech. Robot. 2016, 8, 051007.

- Mao, J.; Li, G.; Nogar, S.; Kroninger, C.; Loianno, G. Aggressive visual perching with quadrotors on inclined surfaces. In Proceedings of the 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Prague, Czech Republic, 27 September–1 October 2021; pp. 5242–5248.

- Popek, K.M.; Johannes, M.S.; Wolfe, K.C.; Hegeman, R.A.; Hatch, J.M.; Moore, J.L.; Katyal, K.D.; Yeh, B.Y.; Bamberger, R.J. Autonomous grasping robotic aerial system for perching (agrasp). In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 1–9.

- Ahmed, B.; Pota, H.R. Backstepping-based landing control of a RUAV using tether incorporating flapping correction dynamics. In Proceedings of the 2008 American Control Conference, Seattle, WA, USA, 11–13 June 2008; pp. 2728–2733.

- Wang, R.; Zhou, Z.; Shen, Y. Flying-wing UAV landing control and simulation based on mixed H2/H∞. In Proceedings of the 2007 International Conference on Mechatronics and Automation, Harbin, China, 5–8 August 2007; pp. 1523–1528.

- Escareño, J.; Salazar, S.; Romero, H.; Lozano, R. Trajectory control of a quadrotor subject to 2D wind disturbances. J. Intell. Robot. Syst. 2013, 70, 51–63.

- Kamel, M.; Stastny, T.; Alexis, K.; Siegwart, R. Model predictive control for trajectory tracking of unmanned aerial vehicles using robot operating system. In Robot Operating System (ROS); Springer: Berlin/Heidelberg, Germany, 2017; pp. 3–39.

- Small, E.; Sopasakis, P.; Fresk, E.; Patrinos, P.; Nikolakopoulos, G. Aerial navigation in obstructed environments with embedded nonlinear model predictive control. In Proceedings of the 2019 18th European Control Conference (ECC), Naples, Italy, 25–28 June 2019; pp. 3556–3563.

- Nguyen, H.; Kamel, M.; Alexis, K.; Siegwart, R. Model predictive control for micro aerial vehicles: A survey. In Proceedings of the 2021 European Control Conference (ECC), Delft, The Netherlands, 29 June–2 July 2021; pp. 1556–1563.

- Fischler, M.A.; Bolles, R.C. Random sample consensus: A paradigm for model fitting with applications to image analysis and automated cartography. Commun. ACM 1981, 24, 381–395.

- Such, M.; Jimenez-Octavio, J.R.; Carnicero, A.; Lopez-Garcia, O. An approach based on the catenary equation to deal with static analysis of three dimensional cable structures. Eng. Struct. 2009, 31, 2162–2170.

- Feng, Y.; Zhang, C.; Baek, S.; Rawashdeh, S.; Mohammadi, A. Autonomous landing of a UAV on a moving platform using model predictive control. Drones 2018, 2, 34.

| Method Category | Author/Method | Advantages | Limitations |
|---|---|---|---|
| Traditional method | Yan et al. [20], Li et al. [21], Yang et al. [22], Cerón et al. [23], Song et al. [24] | Simple model, fast and automatic, low data requirements | Low noise resistance, low extraction accuracy |
| Deep learning-based method | Holistically-Nested Edge Detection [25], DeepContour [26], DeepEdge [27], Zhang et al. [28], Madaan et al. [29] | Diverse use of information, high scene applicability, high extraction accuracy | Complex model, high data requirements, low extraction efficiency |

| Voltage Level (kV) | Ground Wire Type | Tower Type | Tower Height (m) | Height Difference (m) | Span (m) | Slope | Voltage Level (kV) | Ground Wire Type |
|---|---|---|---|---|---|---|---|---|
| 500 | LGJ-95/55 | ZB3-31.5 | 127.008 | 18.283 | 349 | 1.7/10.9 | 16.69 | |
| | | ZB6-26 | 145.291 | | | | | |

| FPLIR before landing () | ||||||||

| FPLIR after landing () |

| Function Type | SSE | R-Square | RMSE | MAPE |
|---|---|---|---|---|
| Quadratic polynomial | 31,519 | 0.9776 | 3.972 | 0.0175% |
| Cubic polynomial | 31,498 | 0.9776 | 3.971 | 0.0147% |
| Quartic polynomial | 31,494 | 0.9776 | 3.972 | 0.0142% |
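The fitting table compares second-, third- and fourth-order polynomials by SSE, R-square, RMSE and MAPE. The sketch below shows one common way to compute these four metrics with NumPy; it is not the authors' code, and the catenary-shaped test data are synthetic with hypothetical parameters. Definitions vary between tools: some (for example MATLAB's Curve Fitting Toolbox) divide by the residual degrees of freedom n - (degree + 1) when computing RMSE, which the hypothetical `dof_correction` flag reproduces.

```python
import numpy as np


def fit_metrics(x, y, degree, dof_correction=False):
    """Least-squares polynomial fit y ~ p(x) and four goodness-of-fit metrics.

    Returns (SSE, R^2, RMSE, MAPE in percent). With dof_correction=True the
    RMSE divides by the residual degrees of freedom n - (degree + 1).
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    coeffs = np.polyfit(x, y, degree)
    residuals = y - np.polyval(coeffs, x)
    sse = float(np.sum(residuals ** 2))
    sst = float(np.sum((y - y.mean()) ** 2))
    r2 = 1.0 - sse / sst
    dof = y.size - (degree + 1) if dof_correction else y.size
    rmse = float(np.sqrt(sse / dof))
    mape = 100.0 * float(np.mean(np.abs(residuals / y)))  # requires all y != 0
    return sse, r2, rmse, mape


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Synthetic sagging conductor modelled as a catenary (hypothetical parameters).
    a, x0, z0 = 800.0, 150.0, 100.0
    x = np.linspace(0.0, 349.0, 200)
    z = z0 + a * (np.cosh((x - x0) / a) - 1.0) + rng.normal(0.0, 0.05, x.size)
    for name, deg in (("Quadratic", 2), ("Cubic", 3), ("Quartic", 4)):
        sse, r2, rmse, mape = fit_metrics(x, z, deg)
        print(f"{name:9s} SSE={sse:.4f} R2={r2:.6f} RMSE={rmse:.4f} MAPE={mape:.5f}%")
```

Because the polynomial families are nested, SSE can only decrease (or stay equal) as the degree rises, which is consistent with the small SSE differences in the table; whether the extra terms are worth it is what R-square, RMSE and MAPE help judge.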

| Metric | X (m) | Y (m) | Z (m) |
|---|---|---|---|
| RMSE | | | |
| Error at landing | | | |
| Maximum error | | | |

| Metric | X (m) | Y (m) | Z (m) |
|---|---|---|---|
| RMSE | | | |
| Error at landing | | | |
| Maximum error | | | |

| Metric | X (m) | Y (m) | Z (m) |
|---|---|---|---|
| RMSE | | | |
| Error at landing | | | |
| Maximum error | | | |

| Metric | X (m/s) | Y (m/s) | Z (m/s) |
|---|---|---|---|
| RMSE | | | |
| Error at landing | | | |
| Maximum error | | | |
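The error tables report, per axis, an RMSE, an error at landing and a maximum error. The source does not define these quantities explicitly, so the sketch below assumes the usual meanings: RMSE over all time-aligned samples, absolute error at the final sample, and maximum absolute error over the run. The same function applies unchanged to position (m) and velocity (m/s) arrays; the trajectory used in the demo is synthetic.

```python
import numpy as np


def tracking_errors(reference, actual):
    """Per-axis tracking metrics for (N, 3) arrays of X, Y, Z samples.

    Assumed definitions: RMSE over all samples, absolute error at the last
    sample ("error at landing") and maximum absolute error over the run.
    Both arrays must be sampled on the same time base.
    """
    ref = np.asarray(reference, dtype=float)
    act = np.asarray(actual, dtype=float)
    if ref.shape != act.shape or ref.ndim != 2 or ref.shape[1] != 3:
        raise ValueError("expected two (N, 3) arrays with the same shape")
    err = act - ref
    return {
        "RMSE": np.sqrt(np.mean(err ** 2, axis=0)),
        "Error at landing": np.abs(err[-1]),
        "Maximum error": np.max(np.abs(err), axis=0),
    }


if __name__ == "__main__":
    # Synthetic straight-line descent with a small oscillating tracking error.
    t = np.linspace(0.0, 5.0, 251)
    reference = np.column_stack([0.2 * t, np.zeros_like(t), 2.0 - 0.4 * t])
    actual = reference + 0.02 * np.column_stack([np.sin(t), np.cos(t), np.sin(2.0 * t)])
    for metric, values in tracking_errors(reference, actual).items():
        print(f"{metric:16s} X={values[0]:.4f}  Y={values[1]:.4f}  Z={values[2]:.4f}")
```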

| Method | X (m) | Y (m) | Z (m) |
|---|---|---|---|
| Reference [15] | | | |
| Our method | | | |

| Method | X (m/s) | Y (m/s) | Z (m/s) |
|---|---|---|---|
| Reference [15] | | | |
| Our method | | | |

| Model | Resolution | Backbone | mIoU (%) | Speed (FPS) |
|---|---|---|---|---|
| STDC1-Seg70 | | STDC1 | 82.848 | 29.3 |
| STDC2-Seg70 | | STDC2 | 83.294 | 24.1 |
| STDC1-Seg90 | | STDC1 | 85.521 | 17.5 |
| STDC2-Seg90 | | STDC2 | 85.784 | 13.2 |
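The segmentation networks are compared by mean intersection-over-union (mIoU). As a minimal sketch of the standard definition, IoU per class is TP / (TP + FP + FN) taken from a pixel confusion matrix, and mIoU averages it over classes. The two-class labelling (0 = background, 1 = power line) and the toy masks are assumptions for illustration; labels must lie in [0, num_classes), so "ignore" labels would have to be masked out first.

```python
import numpy as np


def confusion_matrix(pred, target, num_classes):
    """Pixel confusion matrix: rows are ground-truth classes, columns are predictions."""
    pred = np.asarray(pred, dtype=np.int64).ravel()
    target = np.asarray(target, dtype=np.int64).ravel()
    counts = np.bincount(target * num_classes + pred, minlength=num_classes ** 2)
    return counts.reshape(num_classes, num_classes)


def mean_iou(pred, target, num_classes=2):
    """Mean intersection-over-union in percent, plus the per-class IoU values."""
    cm = confusion_matrix(pred, target, num_classes)
    tp = np.diag(cm).astype(float)
    fp = cm.sum(axis=0) - tp  # predicted as the class but labelled otherwise
    fn = cm.sum(axis=1) - tp  # labelled as the class but predicted otherwise
    denom = tp + fp + fn
    # Classes absent from both masks get NaN and are skipped by nanmean.
    iou = np.divide(tp, denom, out=np.full(num_classes, np.nan), where=denom > 0)
    return 100.0 * float(np.nanmean(iou)), iou


if __name__ == "__main__":
    # Toy 4x4 masks: class 0 = background, class 1 = power line (hypothetical labels).
    target = np.array([[0, 0, 1, 0],
                       [0, 0, 1, 0],
                       [0, 1, 0, 0],
                       [0, 1, 0, 0]])
    pred = np.array([[0, 0, 1, 0],
                     [0, 1, 1, 0],
                     [0, 1, 0, 0],
                     [0, 0, 0, 0]])
    miou, per_class = mean_iou(pred, target)
    print(f"per-class IoU = {per_class}, mIoU = {miou:.2f}%")
```

For thin structures such as power lines, the line class typically covers few pixels, so its IoU dominates the differences in mIoU between models even when background IoU is near 100%.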

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zeng, Y.; Qin, X.; Li, B.; Lei, J.; Zhang, J.; Wang, Y.; Feng, T. A Novel Autonomous Landing Method for Flying–Walking Power Line Inspection Robots Based on Prior Structure Data. Appl. Sci. 2023, 13, 9544. https://doi.org/10.3390/app13179544

Zeng Y, Qin X, Li B, Lei J, Zhang J, Wang Y, Feng T. A Novel Autonomous Landing Method for Flying–Walking Power Line Inspection Robots Based on Prior Structure Data. Applied Sciences. 2023; 13(17):9544. https://doi.org/10.3390/app13179544

Chicago/Turabian Style: Zeng, Yujie, Xinyan Qin, Bo Li, Jin Lei, Jie Zhang, Yanqi Wang, and Tianming Feng. 2023. "A Novel Autonomous Landing Method for Flying–Walking Power Line Inspection Robots Based on Prior Structure Data" Applied Sciences 13, no. 17: 9544. https://doi.org/10.3390/app13179544