A Method for Extracting a Laser Center Line Based on an Improved Grayscale Center of Gravity Method: Application on the 3D Reconstruction of Battery Film Defects

Abstract

1. Introduction

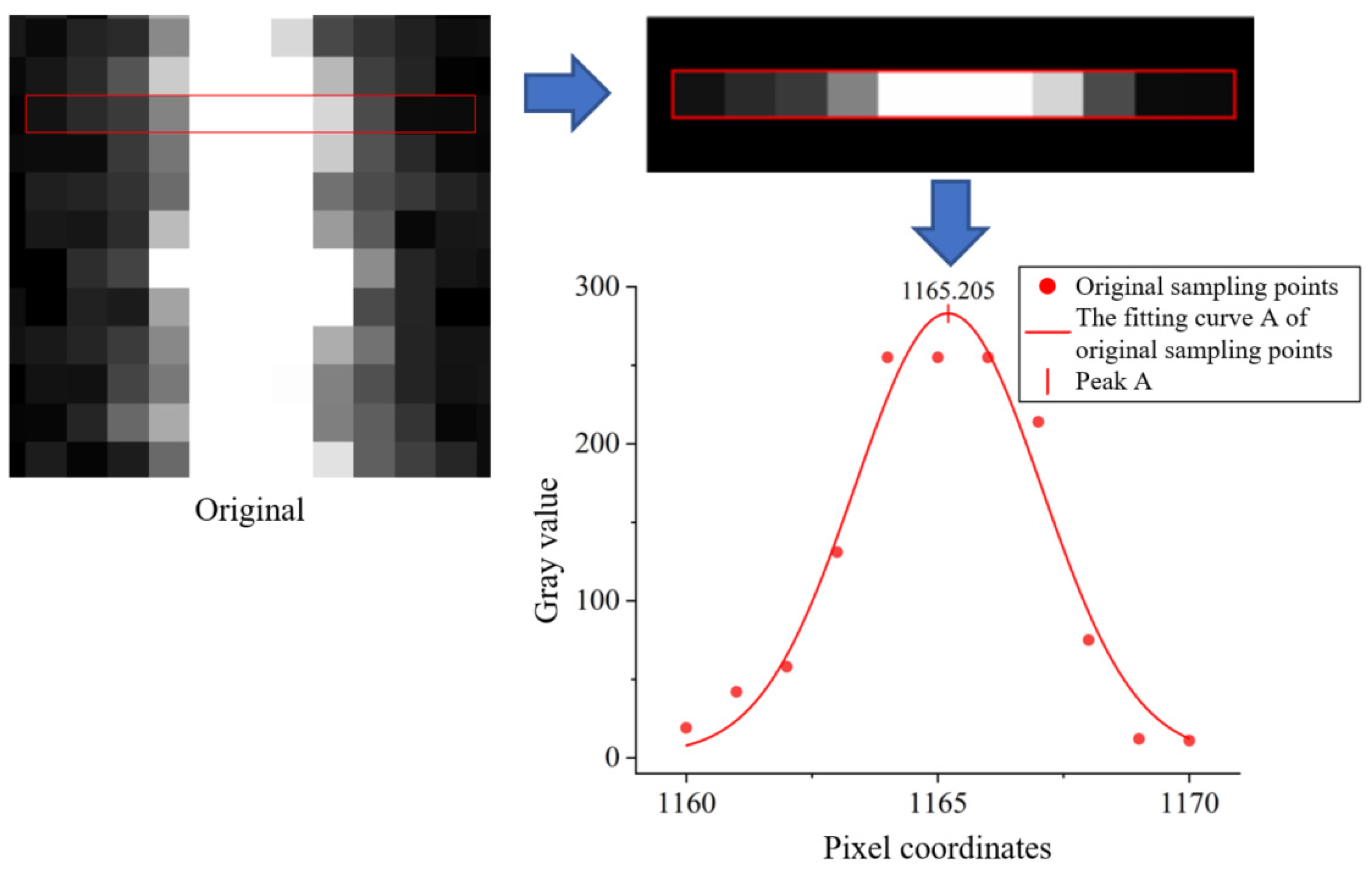

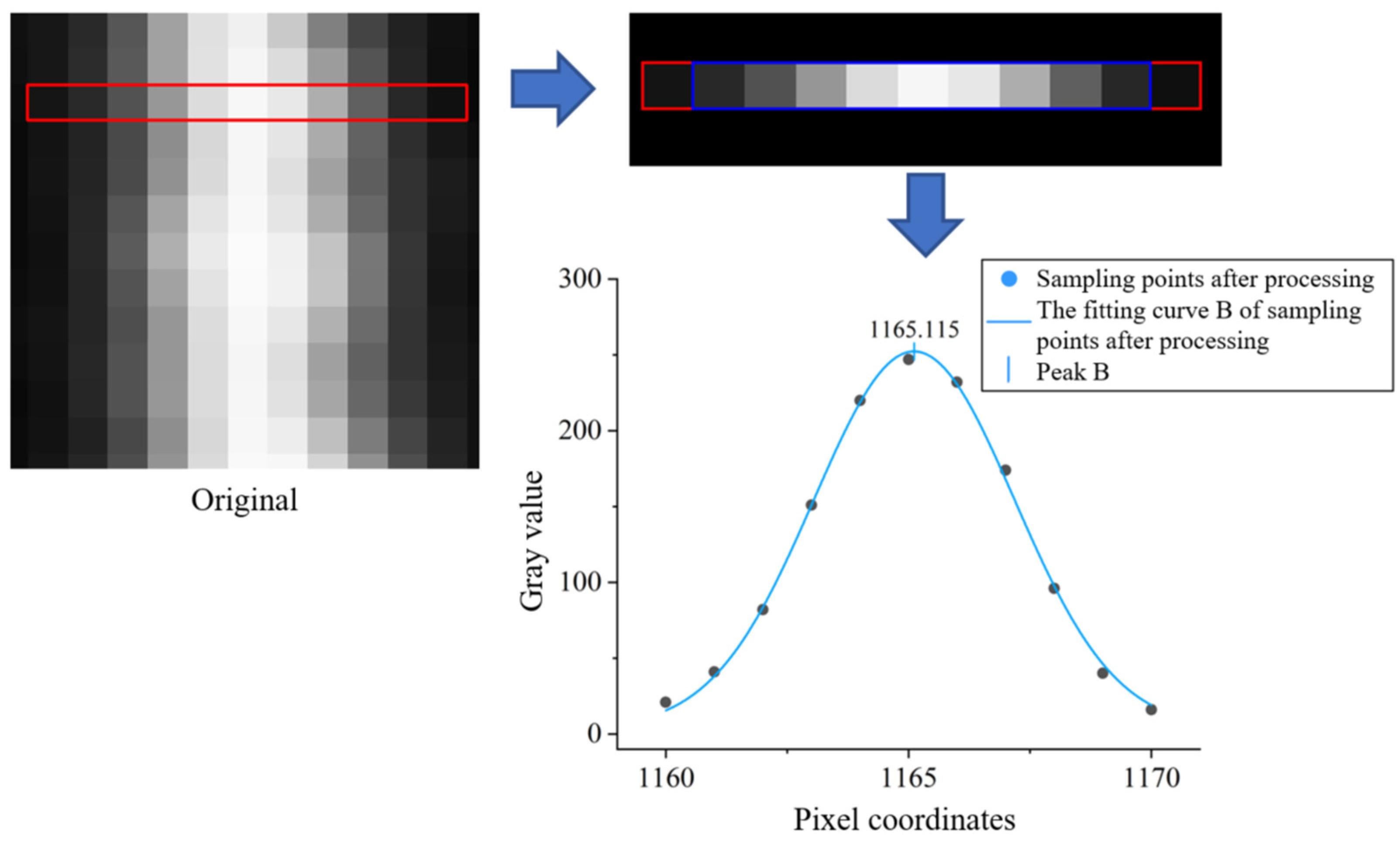

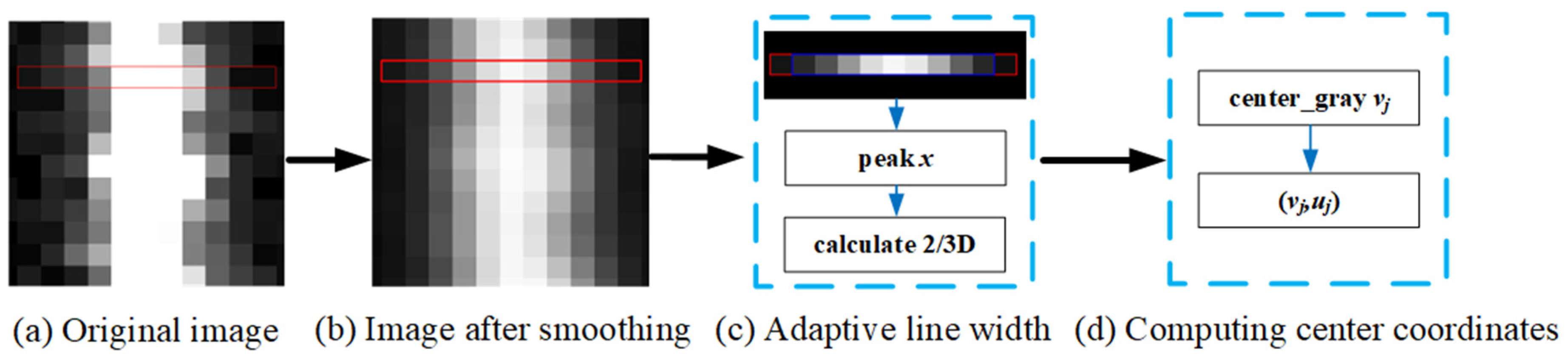

2. Proposed Method

2.1. Principles of Laser Triangulation

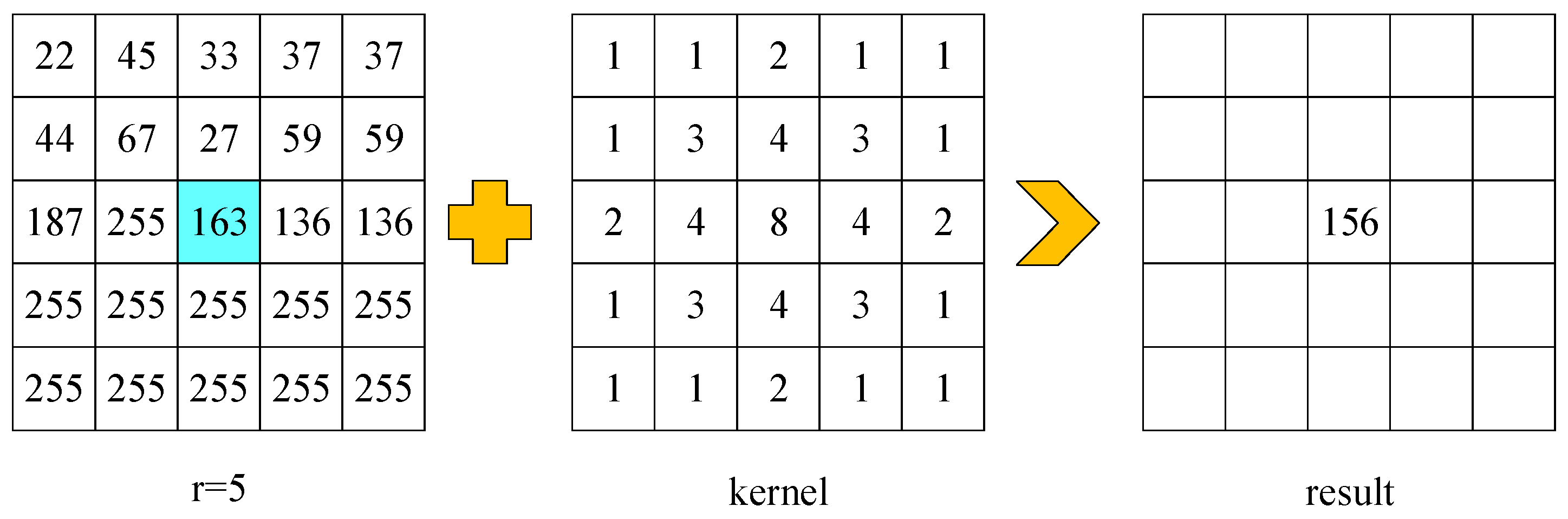

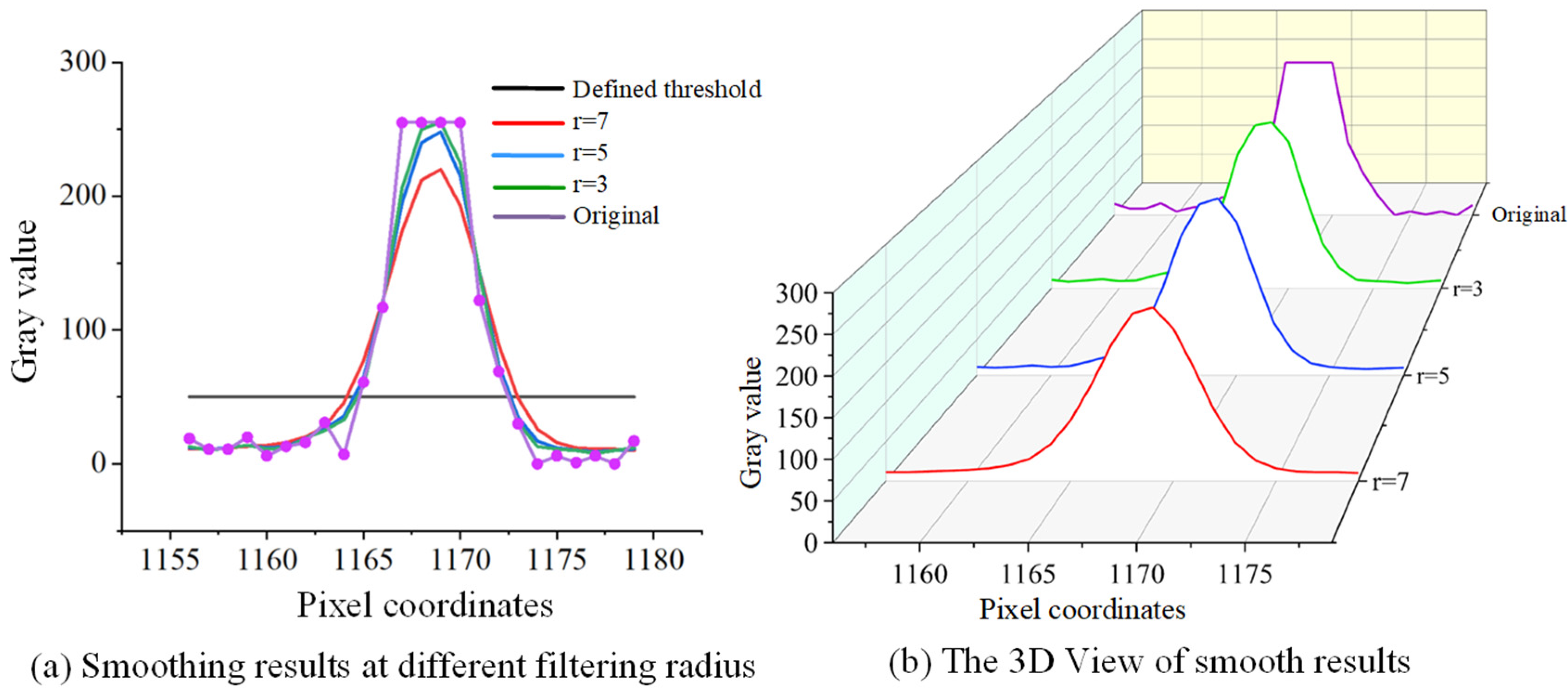

2.2. Image Preprocessing

3. The Improved Laser Line Extraction
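For reference, the conventional grayscale center of gravity method is the method the paper's title says is being improved, and it is the first baseline in the precision comparison tables. It takes the stripe center in each image column u as the intensity-weighted mean row position, $v_c(u) = \sum_v v\,I(v,u) \big/ \sum_v I(v,u)$, computed over pixels brighter than a background threshold. The NumPy sketch below implements only this conventional baseline, not the authors' improved method. The function name `gray_centroid_centerline`, the fixed threshold, and the assumption of a roughly horizontal stripe with one center per column are our own illustrative choices.

```python
import numpy as np


def gray_centroid_centerline(image, threshold=0.0):
    """Conventional grayscale center-of-gravity stripe extraction (baseline).

    Hypothetical helper, not the paper's improved method. For each column u,
    returns sum_v v * I(v, u) / sum_v I(v, u), where only pixels with
    intensity strictly above `threshold` contribute. Columns with no such
    pixel yield NaN. Assumes a roughly horizontal stripe (one center per column).
    """
    img = np.asarray(image, dtype=np.float64)
    # Zero out background pixels so they carry no weight in the centroid
    weights = np.where(img > threshold, img, 0.0)
    rows = np.arange(img.shape[0], dtype=np.float64)[:, None]  # row index v
    total = weights.sum(axis=0)
    centers = np.full(img.shape[1], np.nan)
    valid = total > 0
    # Intensity-weighted mean row index, computed only where there is signal
    centers[valid] = (rows * weights).sum(axis=0)[valid] / total[valid]
    return centers


# Usage: a synthetic 5x3 image with a two-pixel stripe in column 0,
# a single bright pixel in column 1, and an empty column 2
img = np.zeros((5, 3))
img[1, 0] = img[2, 0] = 100.0
img[3, 1] = 50.0
img[0, 0] = 5.0  # faint background pixel, excluded by threshold=10
print(gray_centroid_centerline(img, threshold=10.0))
```

The threshold matters because this baseline weights every contributing pixel linearly by its gray level, so any background light left in the window pulls the centroid away from the true stripe center.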

4. The Experiment and Discussion

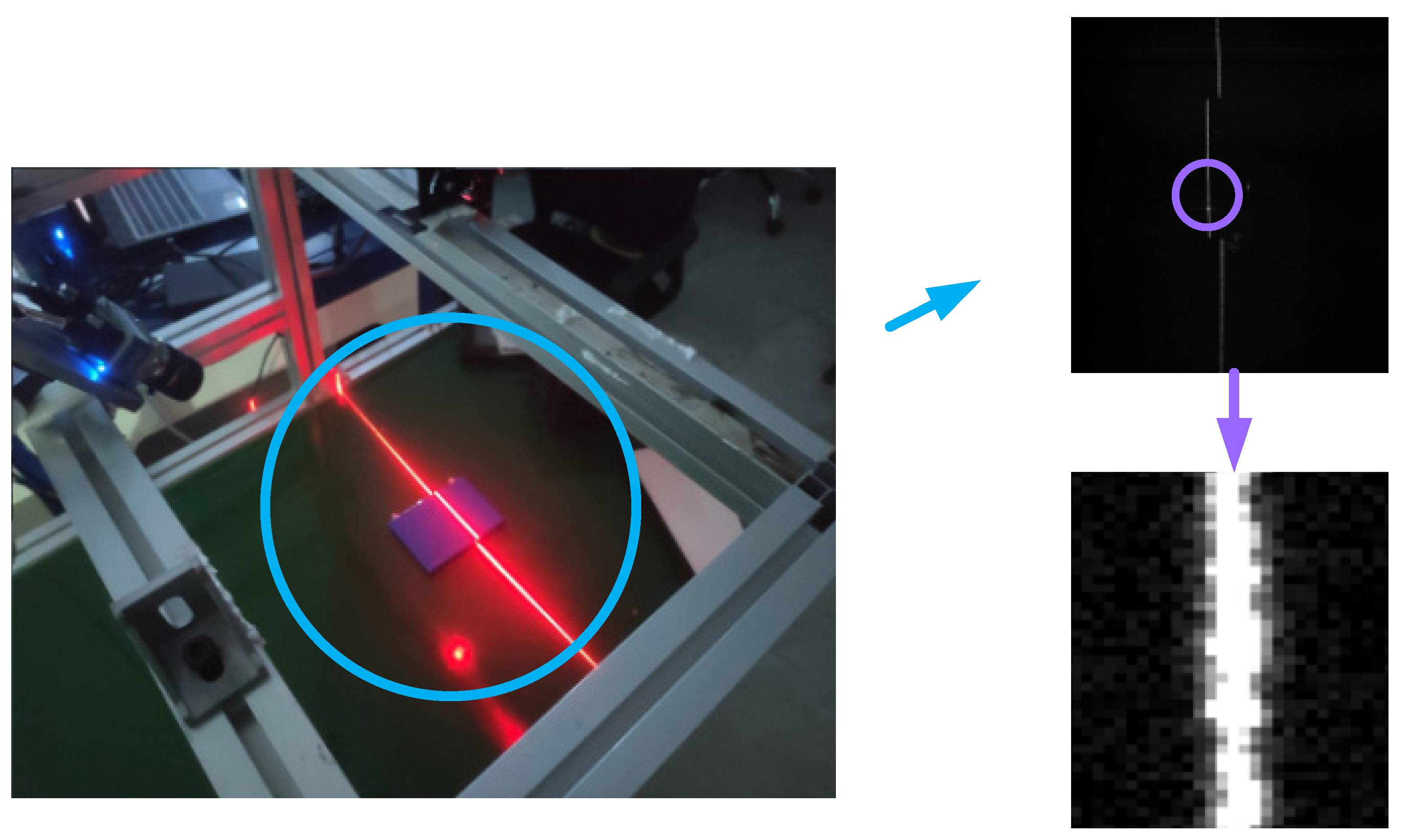

4.1. The Selection of the Camera, Line Laser, and Lithium Battery

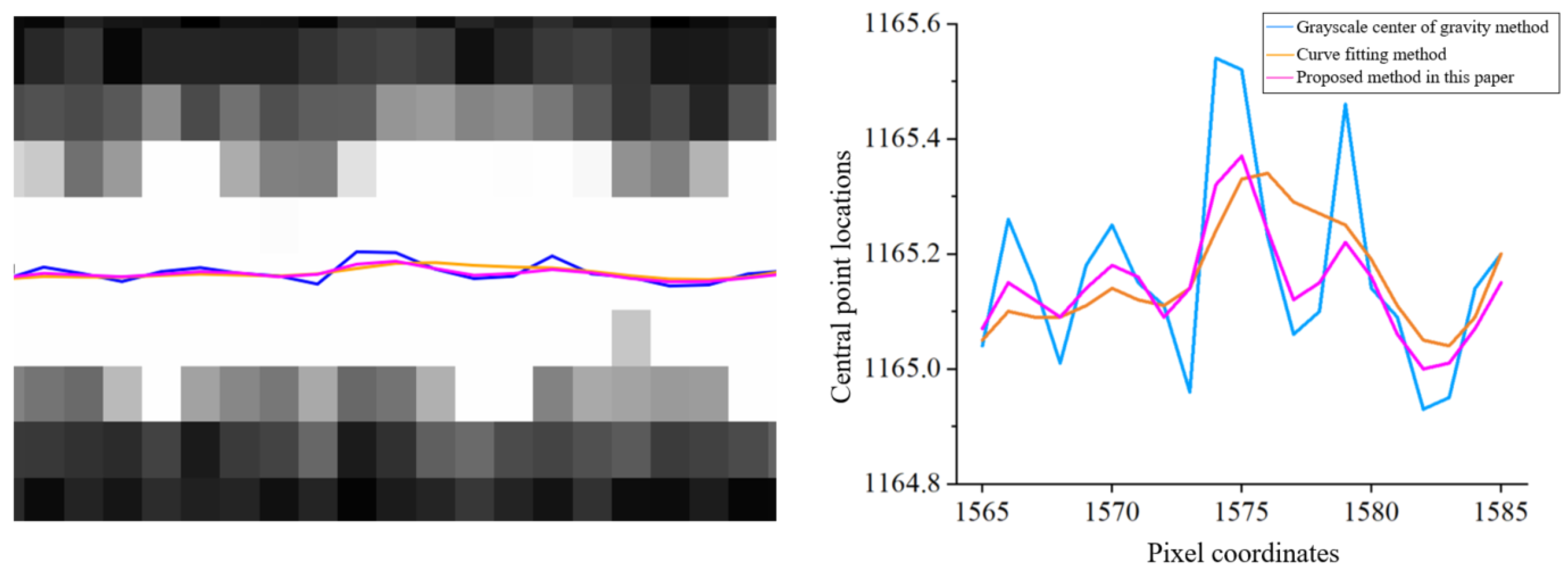

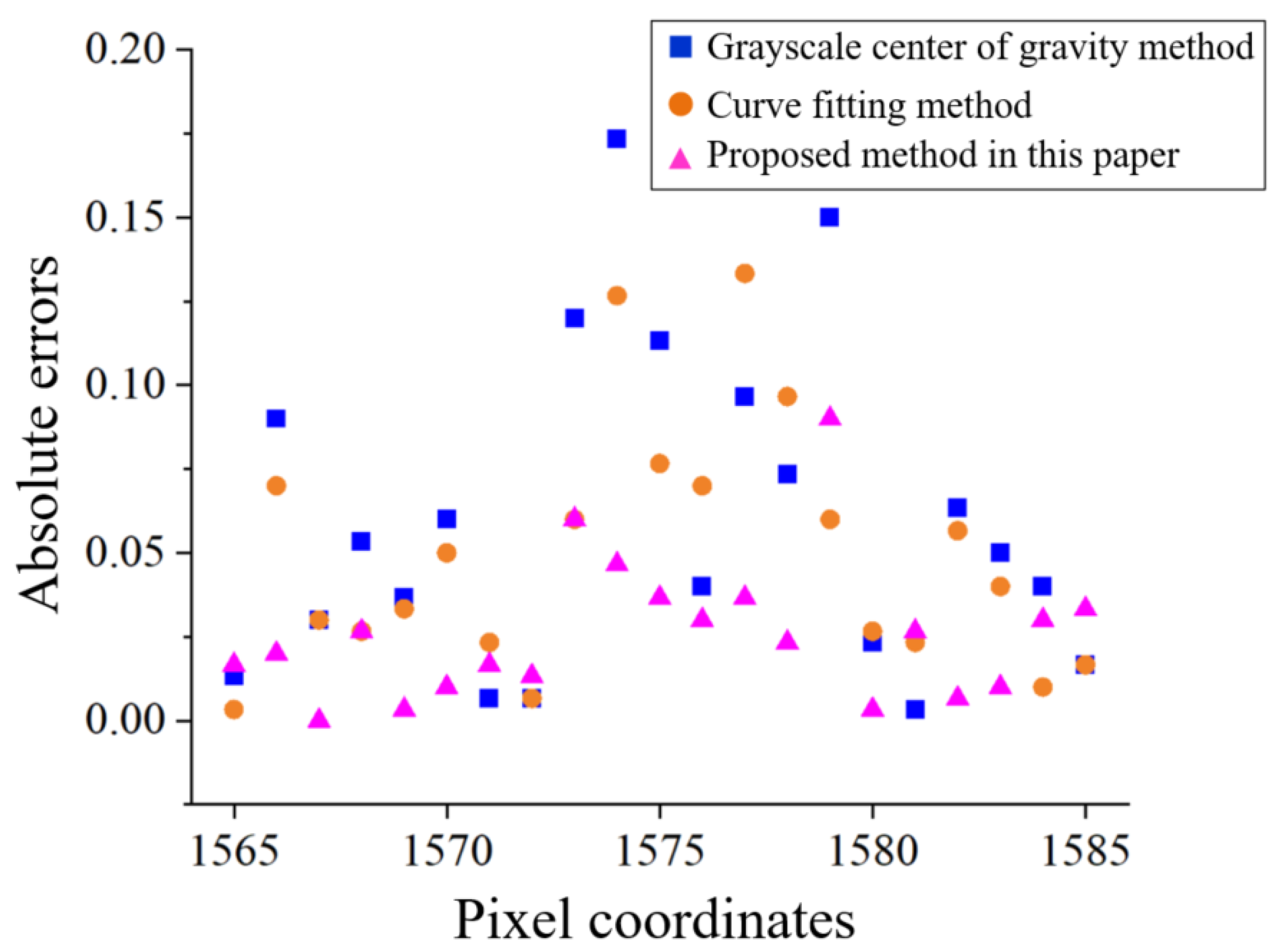

4.2. The Experimental Precision Analysis

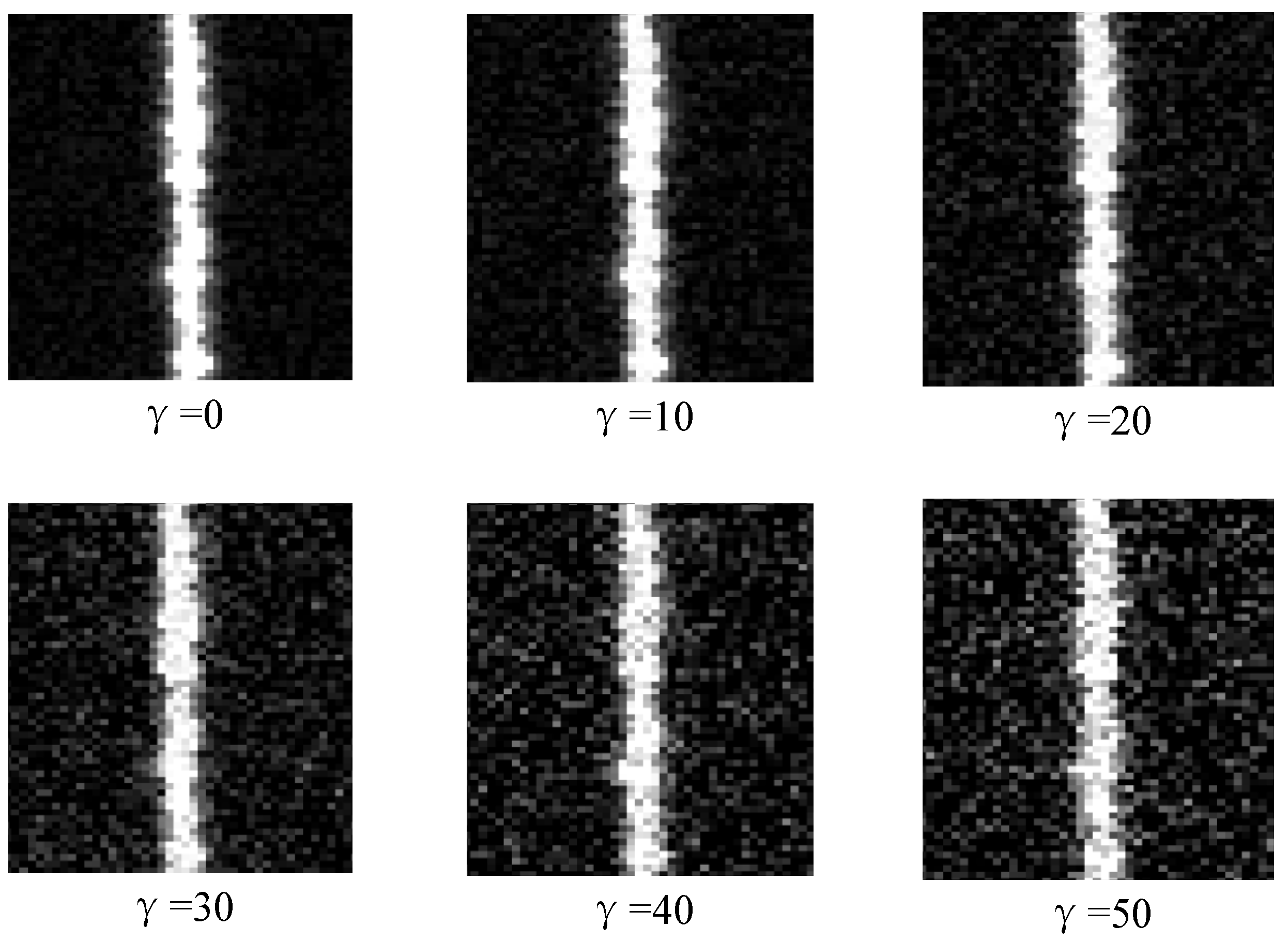

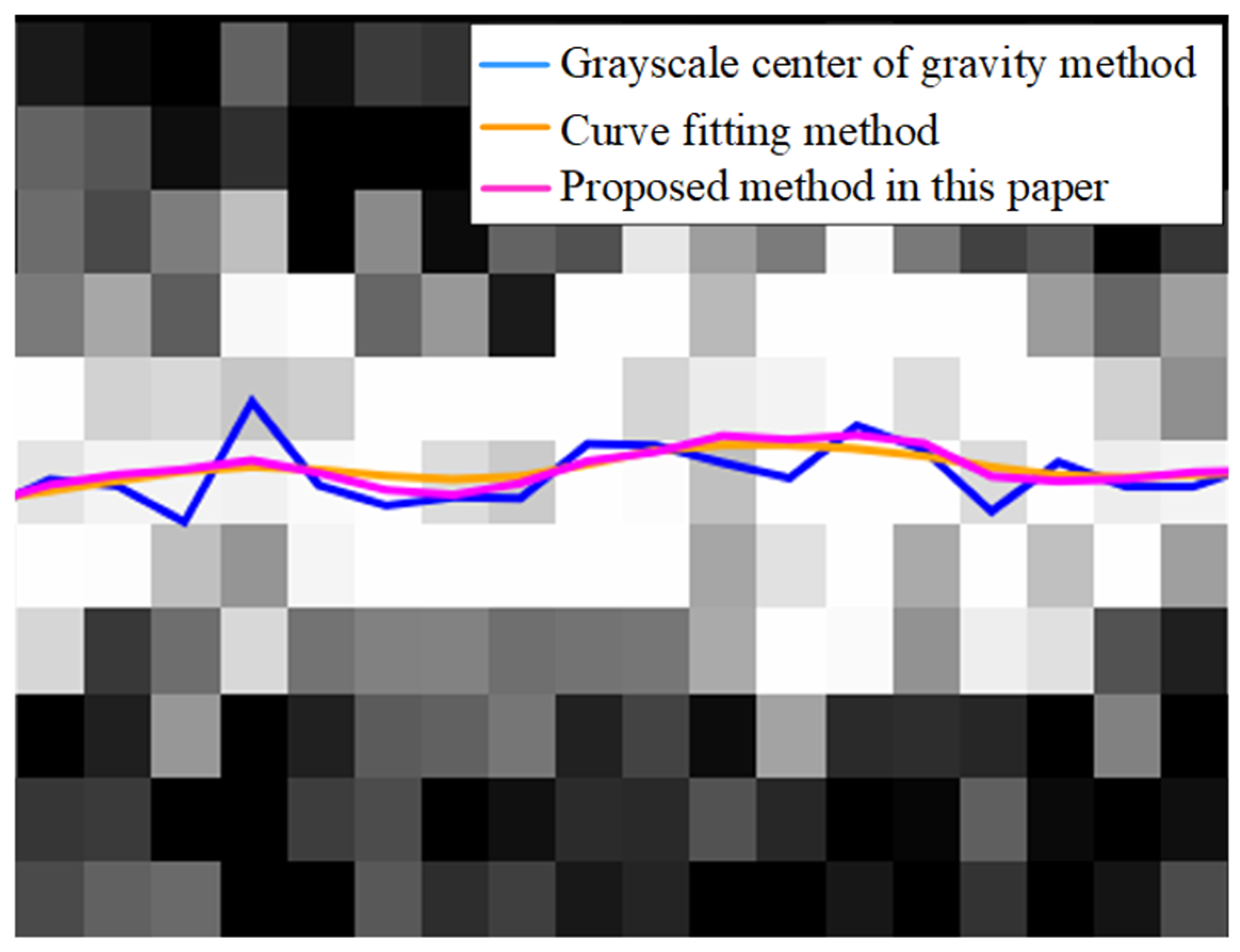

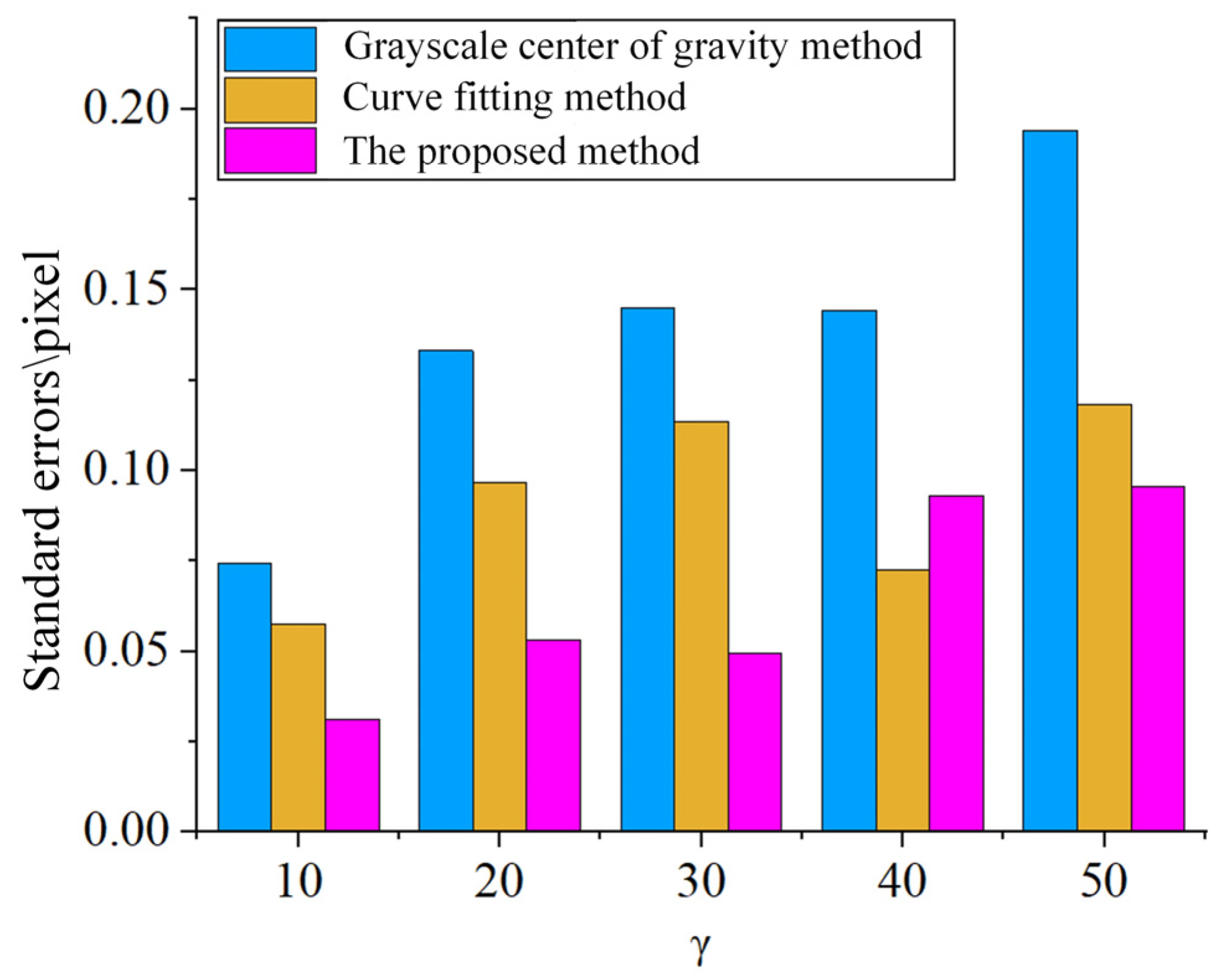

4.3. The Noise Resistance Analysis

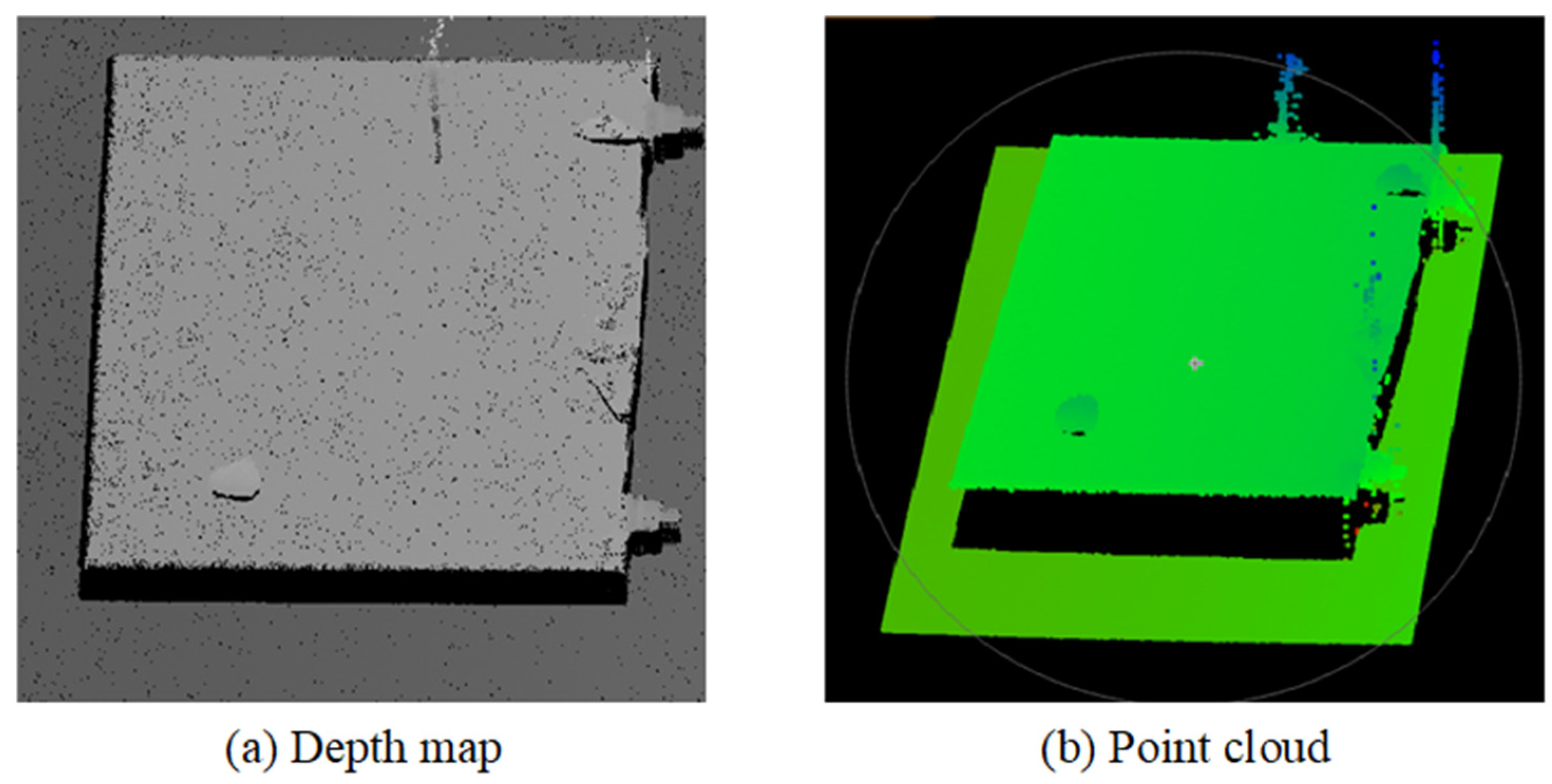

4.4. The 3D Reconstruction Result

5. Conclusions and Future Works

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Parameter | Value |
|---|---|
| Sensor model | On-Semi Python5000 P1, CMOS |
| Resolution | 2592 × 2048 |
| Monochrome/color | Monochrome |
| Interface | GigE Vision |
| Pixel size | 4.8 μm × 4.8 μm |
| Frame rate | 22 fps |
| Interface speed | 1 Gbps |
| Pixel depth | 8 bit |
| Lens mount | C-mount; CS-mount |
| Shutter type | Global shutter |
| Power supply | PoE; 10–36 VDC |
| Synchronization | Software/hardware trigger, PTP |
| Exposure control | Hardware trigger, API programming |
| Dimensions | 21 × 29 × 44 mm |
| Weight | 46 g |
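As a quick consistency check on the camera parameters above, the raw 8-bit image payload at full resolution and the listed frame rate fits within the 1 Gbps interface (protocol overhead ignored):

$$
2592 \times 2048 \times 8\ \text{bit} \times 22\ \text{s}^{-1} = 934{,}281{,}216\ \text{bit/s} \approx 0.93\ \text{Gbit/s} < 1\ \text{Gbit/s}
$$

This suggests, although the table does not state it, that the full-resolution frame rate sits close to the limit set by the GigE link.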

| Parameter | Value |
|---|---|
| Core power | 150 mW |
| Output wavelength | 60 nm |
| Dimensions | φ22 × 70 mm |
| Operating temperature | −10 °C to +55 °C |
| Operating voltage | DC 2.8–5.2 V |
| Working life | 13,000 h |
| Operating temperature | −10 °C to +50 °C |
| Storage temperature | −45 °C to +80 °C |

| Pixel coordinate | Grayscale center of gravity: measured x1/pixel | Grayscale center of gravity: absolute deviation σ1/pixel | Curve fitting: measured x2/pixel | Curve fitting: absolute deviation σ2/pixel | Proposed method: measured x3/pixel | Proposed method: absolute deviation σ3/pixel | Estimated true value x̂/pixel (mean of three methods) |
|---|---|---|---|---|---|---|---|
| 1565 | 1165.040 | 0.013 | 1165.050 | 0.003 | 1165.070 | 0.017 | 1165.053 |
| 1566 | 1165.260 | 0.090 | 1165.100 | 0.070 | 1165.150 | 0.020 | 1165.170 |
| 1567 | 1165.150 | 0.030 | 1165.090 | 0.030 | 1165.120 | 0.000 | 1165.120 |
| 1568 | 1165.010 | 0.053 | 1165.090 | 0.027 | 1165.090 | 0.027 | 1165.063 |
| 1569 | 1165.180 | 0.037 | 1165.110 | 0.033 | 1165.140 | 0.003 | 1165.143 |
| 1570 | 1165.250 | 0.060 | 1165.140 | 0.050 | 1165.180 | 0.010 | 1165.190 |
| 1571 | 1165.150 | 0.007 | 1165.120 | 0.023 | 1165.160 | 0.017 | 1165.143 |
| 1572 | 1165.110 | 0.007 | 1165.110 | 0.007 | 1165.090 | 0.013 | 1165.103 |
| 1573 | 1164.960 | 0.120 | 1165.140 | 0.060 | 1165.140 | 0.060 | 1165.080 |
| 1574 | 1165.540 | 0.173 | 1165.240 | 0.127 | 1165.320 | 0.047 | 1165.367 |
| 1575 | 1165.520 | 0.113 | 1165.330 | 0.077 | 1165.370 | 0.037 | 1165.407 |
| 1576 | 1165.230 | 0.040 | 1165.340 | 0.070 | 1165.240 | 0.030 | 1165.270 |
| 1577 | 1165.060 | 0.097 | 1165.290 | 0.133 | 1165.120 | 0.037 | 1165.157 |
| 1578 | 1165.100 | 0.073 | 1165.270 | 0.097 | 1165.150 | 0.023 | 1165.173 |
| 1579 | 1165.460 | 0.150 | 1165.250 | 0.060 | 1165.220 | 0.090 | 1165.310 |
| 1580 | 1165.140 | 0.023 | 1165.190 | 0.027 | 1165.160 | 0.003 | 1165.163 |
| 1581 | 1165.090 | 0.003 | 1165.110 | 0.023 | 1165.060 | 0.027 | 1165.087 |
| 1582 | 1164.930 | 0.063 | 1165.050 | 0.057 | 1165.000 | 0.007 | 1164.993 |
| 1583 | 1164.950 | 0.050 | 1165.040 | 0.040 | 1165.010 | 0.010 | 1165.000 |
| 1584 | 1165.140 | 0.040 | 1165.090 | 0.010 | 1165.070 | 0.030 | 1165.100 |
| 1585 | 1165.200 | 0.017 | 1165.200 | 0.017 | 1165.150 | 0.033 | 1165.183 |
| Mean absolute deviation (MAE)/pixel | | 0.060 | | 0.050 | | 0.026 | |
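The estimated true values and mean absolute deviations in the precision table follow from simple arithmetic. For each pixel coordinate, x̂ is the mean of the three methods' measured values, each σ is the absolute difference between a method's measured value and x̂, and the MAE row averages those σ values over the 21 pixel coordinates. The Python sketch below recomputes the MAE row from the measured values as a consistency check. It is our illustration, not code from the paper, and the function name `mean_absolute_deviations` is hypothetical.

```python
# Measured stripe-center positions (pixels) copied from the precision table,
# one list per method, for pixel coordinates 1565 to 1585 in order.
X1 = [1165.040, 1165.260, 1165.150, 1165.010, 1165.180, 1165.250, 1165.150,
      1165.110, 1164.960, 1165.540, 1165.520, 1165.230, 1165.060, 1165.100,
      1165.460, 1165.140, 1165.090, 1164.930, 1164.950, 1165.140, 1165.200]
X2 = [1165.050, 1165.100, 1165.090, 1165.090, 1165.110, 1165.140, 1165.120,
      1165.110, 1165.140, 1165.240, 1165.330, 1165.340, 1165.290, 1165.270,
      1165.250, 1165.190, 1165.110, 1165.050, 1165.040, 1165.090, 1165.200]
X3 = [1165.070, 1165.150, 1165.120, 1165.090, 1165.140, 1165.180, 1165.160,
      1165.090, 1165.140, 1165.320, 1165.370, 1165.240, 1165.120, 1165.150,
      1165.220, 1165.160, 1165.060, 1165.000, 1165.010, 1165.070, 1165.150]


def mean_absolute_deviations(*methods):
    """Return (estimates, maes).

    estimates[i] is the mean of all methods at row i (the estimated true
    value x-hat); maes[k] is method k's mean absolute deviation from it.
    """
    n_rows = len(methods[0])
    estimates = [sum(m[i] for m in methods) / len(methods) for i in range(n_rows)]
    maes = [sum(abs(m[i] - estimates[i]) for i in range(n_rows)) / n_rows
            for m in methods]
    return estimates, maes


estimates, maes = mean_absolute_deviations(X1, X2, X3)
print([round(m, 3) for m in maes])  # → [0.06, 0.05, 0.026]
```

Computing x̂ without intermediate rounding reproduces the reported MAE values of 0.060, 0.050, and 0.026 pixel to three decimal places.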

| Extraction method | Grayscale center of gravity method | Curve fitting method | Proposed method |
|---|---|---|---|
| Standard error σ/pixel | 0.0273 | 0.0175 | 0.0051 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yao, R.; Wang, B.; Hu, M.; Hua, D.; Wu, L.; Lu, H.; Liu, X. A Method for Extracting a Laser Center Line Based on an Improved Grayscale Center of Gravity Method: Application on the 3D Reconstruction of Battery Film Defects. Appl. Sci. 2023, 13, 9831. https://doi.org/10.3390/app13179831
