Resource Allocation Strategy for Satellite Edge Computing Based on Task Dependency

Abstract

1. Introduction

- This paper considers the interdependence of tasks in satellite edge computing, where tasks can be processed at satellite edge nodes, and establishes an application completion rate model.

- A task scheduling algorithm based on the in-degree of tasks (TBID) is proposed to determine the processing order of tasks. In each round, the subtasks with an in-degree of 0 in the directed acyclic graph (DAG) are collected into a subtask set; the subtasks in this set are processed and then deleted from the DAG. Each application is processed either on the satellite or locally, depending on its deadline.

- An improved sparrow search algorithm is proposed to solve the resource allocation problem for the subtasks scheduled in each iteration. Opposition-based learning is introduced to increase the diversity of the initial population, the random search mechanism of the whale optimization algorithm is introduced to improve the global search ability, and Cauchy mutation is used to help the population escape local optima.

2. Related Research

3. System Models and Problem Formulation

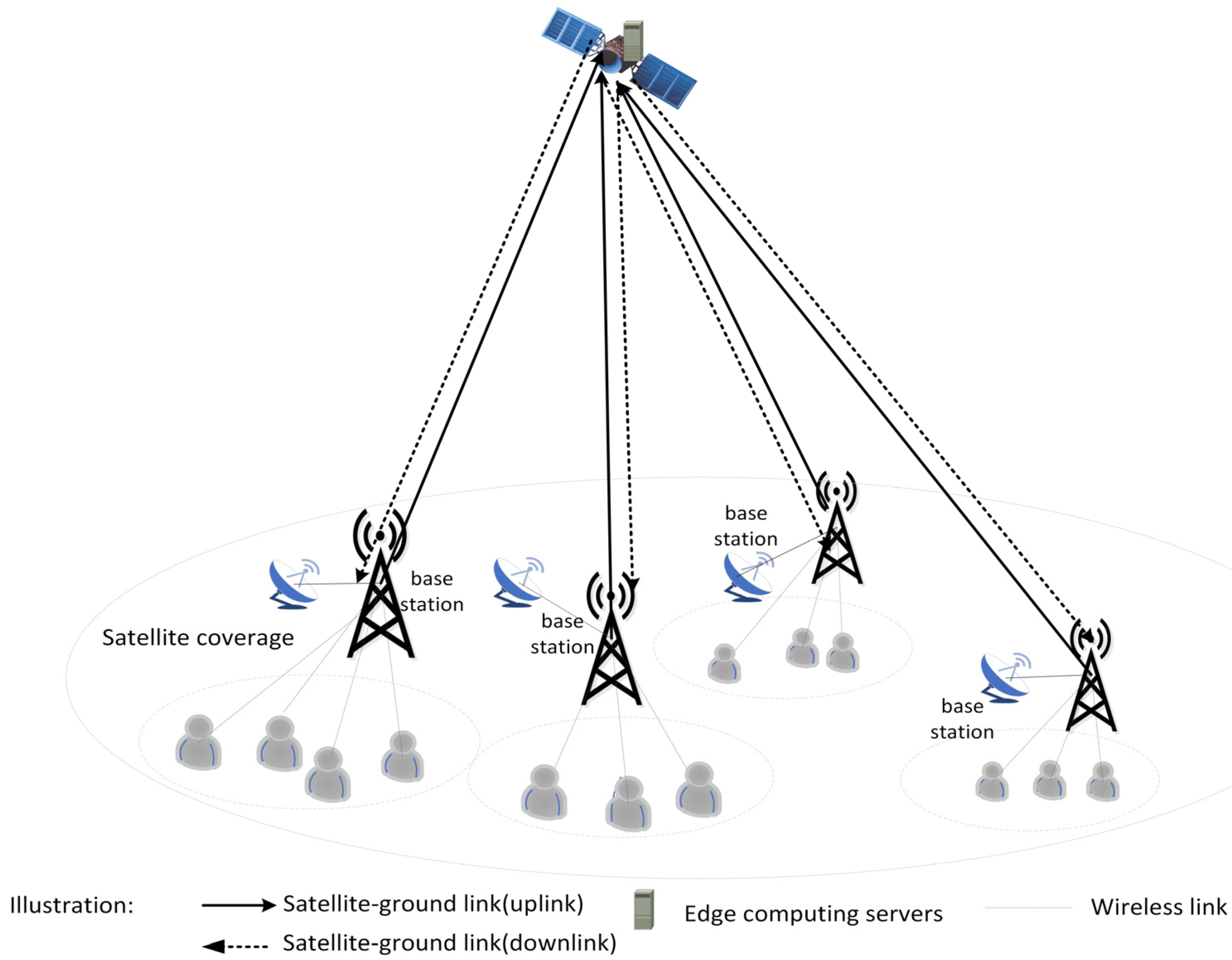

3.1. Network Model

3.2. Task Model

3.2.1. Application Model

- At the beginning of each time slot, multiple ground users initiate task requests.

- The scheduling algorithm determines how each task is processed (on the satellite or locally) and the set of subtasks to be processed.

- If a task needs to be offloaded to the satellite, the resource allocation algorithm determines the resources to allocate to it.

- The satellite edge node computes the result and returns it to the ground user.

3.2.2. Application Completion Rate Model

3.3. Task Scheduling Model

3.3.1. Scheduling Model Based on Subtask Degree

| Algorithm 1 TBID scheduling algorithm |
|---|
| Input: Applications of all users |
| Output: The completion rate of the applications |
| 1. for i = 1 to m do |
| 2. Compare the priorities of the applications and divide them into high- and low-priority sets; high-priority applications are executed at satellite edge nodes and low-priority applications are executed locally |
| 3. while the DAG of application i is not null do |
| 4. Use the BFS algorithm to traverse the application’s DAG |
| 5. end while |
| 6. Obtain the number of layers of each DAG |
| 7. end for |
| 8. for i = 1 to m do |
| 9. while the DAG of application i is not null do |
| 10. Traverse the application’s DAG and add the nodes with an in-degree of 0 to subtask set L |
| 11. According to Algorithm 2, obtain the resource allocation strategy F and B for subtask set L and the completion delay of the subtasks in the set |
| 12. Delete the scheduled subtask nodes and their associated edges |
| 13. end while |
| 14. Calculate the application completion delay according to Equation (6) |
| 15. end for |
| 16. Calculate the application completion rate according to Equation (7) |
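The in-degree-based loop in steps 8 to 13 of Algorithm 1 can be illustrated with a minimal Python sketch. It is not the authors' implementation: the function names `tbid_layers` and `completion_rate` are hypothetical, the DAG is assumed to be given as an edge list, and the completion rate is assumed to be the fraction of applications whose completion delay does not exceed the deadline, since Equations (6) and (7) are not reproduced here.

```python
from collections import defaultdict


def tbid_layers(num_tasks, edges):
    """Group DAG subtasks into scheduling rounds.

    Each round collects every subtask whose in-degree is 0, then "deletes"
    those nodes and their outgoing edges (steps 10 and 12 of Algorithm 1).
    An edge (u, v) means subtask v depends on subtask u.
    """
    indegree = [0] * num_tasks
    successors = defaultdict(list)
    for u, v in edges:
        successors[u].append(v)
        indegree[v] += 1

    current = [t for t in range(num_tasks) if indegree[t] == 0]
    layers = []
    scheduled = 0
    while current:
        layers.append(current)
        scheduled += len(current)
        next_layer = []
        for u in current:
            # Removing u lowers the in-degree of each successor.
            for v in successors[u]:
                indegree[v] -= 1
                if indegree[v] == 0:
                    next_layer.append(v)
        current = next_layer

    if scheduled != num_tasks:
        raise ValueError("dependency graph contains a cycle")
    return layers


def completion_rate(delays, deadlines):
    """Assumed definition: share of applications finishing within the deadline."""
    met = sum(1 for d, limit in zip(delays, deadlines) if d <= limit)
    return met / len(delays)


# Diamond-shaped DAG: 0 -> 1, 0 -> 2, 1 -> 3, 2 -> 3
print(tbid_layers(4, [(0, 1), (0, 2), (1, 3), (2, 3)]))  # [[0], [1, 2], [3]]
```

Each inner list corresponds to one subtask set L, which would be passed to the resource allocation step before the next round begins.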

| Algorithm 2 Satellite edge resource allocation method based on the improved sparrow search algorithm |
|---|
| Input: Subtask set, the proportion of producers, the proportion of investigators, the early warning value, the maximum number of iterations T, the population size |
| Output: Optimal resource allocation vectors F and B, subtask set completion delay |
| 1. Use the TBID scheduling algorithm to obtain the subtask set L |
| 2. Initialize the population using opposition-based learning according to Equation (12) |
| 3. for t = 1 to T do |
| 4. Calculate the fitness values according to Equations (8)–(11) and find the individual with the best fitness |
| 5. for i = 1 to the number of producers do |
| 6. Update the position of the producer according to Equation (13) |
| 7. end for |
| 8. for i = number of producers + 1 to the population size do |
| 9. Update the position of the joiner according to Equation (14) |
| 10. end for |
| 11. for i = 1 to the number of investigators do |
| 12. Update the position of the investigator according to Equation (15) |
| 13. end for |
| 14. Apply the Cauchy mutation of Equation (16) to perturb the population positions so that individual sparrows can jump out of local optima |
| 15. if the fitness of the new solution is better than that of the previous solution, then update the global optimal solution to the new solution |
| 16. t = t + 1 |
| 17. end for |
| 18. return the optimal resource allocation strategy F and B and the completion delay of the subtasks in the subtask set |
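The control flow of Algorithm 2 can be sketched in Python as follows. This is only a skeleton under stated assumptions: Equations (13)–(16) are not reproduced here, so the position updates are passed in as caller-supplied functions, and `shrink`, `toward`, and `noise` are hypothetical toy placeholders rather than the paper's update rules. The default ratios and iteration count mirror the simulation parameters (10% producers, 20% investigators, 200 iterations); fitness is minimized, matching its role as a completion delay.

```python
import random


def sparrow_search_skeleton(fitness, init_population, update_producer,
                            update_joiner, update_investigator, perturb,
                            producer_ratio=0.1, investigator_ratio=0.2,
                            max_iter=200, rng=None):
    """Loop structure only; every update rule is supplied by the caller."""
    rng = rng or random.Random(0)
    population = [list(x) for x in init_population]
    n = len(population)
    n_prod = max(1, int(producer_ratio * n))
    n_inv = max(1, int(investigator_ratio * n))
    best = list(min(population, key=fitness))
    best_fit = fitness(best)

    for t in range(max_iter):
        population.sort(key=fitness)              # lowest delay first
        for i in range(n_prod):                   # producers
            population[i] = update_producer(population[i], t, rng)
        for i in range(n_prod, n):                # joiners follow the leader
            population[i] = update_joiner(population[i], population[0], rng)
        for i in rng.sample(range(n), n_inv):     # investigators
            population[i] = update_investigator(population[i], best, rng)
        for i in range(n):                        # perturbation step
            candidate = perturb(population[i], rng)
            if fitness(candidate) < fitness(population[i]):
                population[i] = candidate
        leader = min(population, key=fitness)
        if fitness(leader) < best_fit:            # keep the global best
            best, best_fit = list(leader), fitness(leader)
    return best, best_fit


# Hypothetical toy update rules, used only to make the skeleton runnable.
def shrink(x, t, rng):
    return [xi * rng.uniform(0.5, 1.0) for xi in x]


def toward(x, target, rng):
    return [xi + rng.random() * (ti - xi) for xi, ti in zip(x, target)]


def noise(x, rng):
    return [xi + rng.gauss(0.0, 0.1) for xi in x]
```

A call such as `sparrow_search_skeleton(f, pop, shrink, toward, toward, noise)` returns the best individual found and its fitness; in the paper's setting the individual would encode the allocation vectors F and B.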

3.3.2. Resource Allocation Method Based on the Improved Sparrow Search Algorithm

- (1) Population initialization combined with the opposition-based learning strategy.

- (2) Producer position update combined with the random search strategy of the whale optimization algorithm.

- (3) Population perturbation combined with Cauchy mutation.
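Steps (1) and (3) can be illustrated with a short Python sketch. Because Equations (12) and (16) are not reproduced here, the code uses commonly cited forms as assumptions: the opposite of a point x in [lower, upper] is taken as lower + upper − x, and the Cauchy mutation is taken as x + x · Cauchy(0, 1), clipped to the bounds. The function names are hypothetical.

```python
import math
import random


def opposition_init(pop_size, lower, upper, fitness, rng):
    """Opposition-based initialization (assumed form).

    Draw pop_size random individuals, build their opposites, and keep the
    pop_size fittest of the merged 2 * pop_size candidates (minimization).
    """
    dim = len(lower)
    population = [[rng.uniform(lower[j], upper[j]) for j in range(dim)]
                  for _ in range(pop_size)]
    opposites = [[lower[j] + upper[j] - x[j] for j in range(dim)]
                 for x in population]
    merged = population + opposites
    merged.sort(key=fitness)
    return merged[:pop_size]


def cauchy_mutation(x, lower, upper, rng):
    """Cauchy perturbation (assumed form), clipped to [lower, upper]."""
    mutated = []
    for j, xj in enumerate(x):
        # Inverse-CDF sample from the standard Cauchy distribution.
        step = math.tan(math.pi * (rng.random() - 0.5))
        value = xj + xj * step
        mutated.append(min(max(value, lower[j]), upper[j]))
    return mutated


rng = random.Random(0)
lower, upper = [0.0, 0.0], [1.0, 2.0]
pop = opposition_init(10, lower, upper, fitness=sum, rng=rng)
trial = cauchy_mutation(pop[0], lower, upper, rng)
```

The heavy tails of the Cauchy distribution occasionally produce large jumps, which is the property relied on to move individuals away from a local optimum.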

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Taleb, T.; Samdanis, K.; Mada, B.; Flinck, H.; Dutta, S.; Sabella, D. On multi-access edge computing: A survey of the emerging 5G network edge cloud architecture and orchestration. IEEE Commun. Surv. Tutor. 2017, 19, 1657–1681.

2. Yao, H.; Wang, L.; Wang, X.; Lu, Z.; Liu, Y. The Space-Terrestrial Integrated Network (STIN): An Overview. IEEE Commun. Mag. 2018, 56, 178–185.

3. Tang, Q.; Xie, R.; Liu, X.; Zhang, Y.; He, C.; Li, C.; Huang, T. Integrating MEC’s satellite-ground collaborative network: Architecture, key technologies, and challenges. J. Commun. 2020, 41, 162–181.

4. Liu, Y.; Wang, S.; Zhao, Q.; Du, S.; Zhou, A.; Ma, X.; Yang, F. Dependency-aware task scheduling in vehicular edge computing. IEEE Internet Things J. 2020, 7, 4961–4971.

5. Ma, Y.; Liang, W.; Huang, M.; Xu, W.; Guo, S. Virtual Network Function Service Provisioning in MEC via Trading Off the Usages between Computing and Communication Resources. In Proceedings of the IEEE Conference on Computer Communications Workshops (INFOCOM WKSHPS), Paris, France, 29 April–2 May 2019; pp. 1–7.

6. Zhang, Z.; Zhang, W.; Tseng, F.-H. Satellite Mobile Edge Computing: Improving QoS of High-Speed Satellite-Terrestrial Networks Using Edge Computing Techniques. IEEE Netw. 2019, 33, 70–76.

7. Xie, R.; Tang, Q.; Wang, Q.; Liu, X.; Yu, F.R.; Huang, T. Satellite-terrestrial integrated edge computing networks: Architecture, challenges, and open issues. IEEE Netw. 2020, 34, 224–231.

8. Cui, G.; Long, Y.; Xu, L.; Wang, W. Joint offloading and resource allocation for satellite-assisted vehicle-to-vehicle communication. IEEE Syst. J. 2021, 15, 3958–3969.

9. Qiu, C.; Yao, H.; Yu, F.R.; Xu, F.; Zhao, C. Deep Q-Learning Aided Networking, Caching, and Computing Resources Allocation in Software-Defined Satellite-Terrestrial Networks. IEEE Trans. Veh. Technol. 2019, 68, 5871–5883.

10. Wang, F.; Jiang, D.; Qi, S.; Qiao, C.; Shi, L. A Dynamic Resource Scheduling Scheme in Edge Computing Satellite Networks. Mob. Netw. Appl. 2021, 26, 597–608.

11. Jia, M.; Zhang, L.; Wu, J.; Guo, Q.; Gu, X. Joint Computing and Communication Resource Allocation for Edge Computing towards Huge LEO Networks. China Commun. Mag. 2022, 19, 73–84.

12. Song, Z.; Hao, Y.; Liu, Y.; Sun, X. Energy-Efficient Multiaccess Edge Computing for Terrestrial-Satellite Internet of Things. IEEE Internet Things J. 2021, 8, 14202–14218.

13. Cheng, L.; Feng, G.; Sun, Y.; Liu, M.; Qin, S. Dynamic Computation Offloading in Satellite Edge Computing. In Proceedings of ICC 2022, the IEEE International Conference on Communications, Seoul, Korea, 16–20 May 2022; pp. 4721–4726.

14. Gao, X.; Liu, R.; Kaushik, A.; Zhang, H. Dynamic Resource Allocation for Virtual Network Function Placement in Satellite Edge Clouds. IEEE Trans. Netw. Sci. Eng. 2022, 9, 2252–2265.

15. Wei, K.; Tang, Q.; Guo, J.; Zeng, M.; Fei, Z.; Cui, Q. Resource Scheduling and Offloading Strategy Based on LEO Satellite Edge Computing. In Proceedings of the 2021 IEEE 94th Vehicular Technology Conference, Norman, OK, USA, 27–30 September 2021; pp. 1–6.

16. Zhang, S.; Cui, G.; Long, Y.; Wang, W. Joint computing and communication resource allocation for satellite communication networks with edge computing. China Commun. 2021, 18, 236–252.

17. Tang, Q.; Fei, Z.; Li, B.; Han, Z. Computation Offloading in LEO Satellite Networks with Hybrid Cloud and Edge Computing. IEEE Internet Things J. 2021, 8, 9164–9176.

18. Li, P.; Wang, Y.; Wang, Z. A Game-Based Joint Task Offloading and Computation Resource Allocation Strategy for Hybrid Edgy-Cloud and Cloudy-Edge Enabled LEO Satellite Networks. In Proceedings of the 2022 IEEE/CIC International Conference on Communications in China (ICCC), Sanshui, Foshan, China, 11–13 August 2022; pp. 868–873.

19. Zhang, H.; Xi, S.; Jiang, H.; Shen, Q.; Shang, B.; Wang, J. Resource Allocation and Offloading Strategy for UAV-Assisted LEO Satellite Edge Computing. Drones 2023, 7, 383.

20. Tong, M.; Wang, X.; Li, S.; Peng, L. Joint Offloading Decision and Resource Allocation in Mobile Edge Computing-Enabled Satellite-Terrestrial Network. Symmetry 2022, 14, 564.

21. Zhu, X.; Xiao, Y. Adaptive offloading and scheduling algorithm for big data-based mobile edge computing. Neurocomputing 2022, 485, 285–296.

22. Sun, M.; Bao, T.; Xie, D.; Lv, H.; Si, G. Towards Application-Driven Task Offloading in Edge Computing Based on Deep Reinforcement Learning. Micromachines 2021, 12, 1011.

23. Sadatdiynov, K.; Cui, L.; Huang, J. Offloading dependent tasks in MEC-enabled IoT systems: A preference-based hybrid optimization method. Peer-to-Peer Netw. Appl. 2023, 16, 657–674.

24. Sulaiman, M.; Halim, Z.; Lebbah, M.; Waqas, M.; Tu, S. An Evolutionary Computing-Based Efficient Hybrid Task Scheduling Approach for Heterogeneous Computing Environment. J. Grid Comput. 2021, 19, 11.

25. Wang, J.; Hu, J.; Min, G.; Zhan, W.; Zomaya, A.Y.; Georgalas, N. Dependent Task Offloading for Edge Computing based on Deep Reinforcement Learning. IEEE Trans. Comput. 2022, 71, 2449–2461.

26. Chen, J.; Yang, Y.; Wang, C.; Zhang, H.; Qiu, C.; Wang, X. Multitask Offloading Strategy Optimization Based on Directed Acyclic Graphs for Edge Computing. IEEE Internet Things J. 2022, 9, 9367–9378.

27. Huynh, L.N.T.; Pham, Q.-V.; Pham, X.-Q.; Nguyen, T.D.T.; Hossain, M.D.; Huh, E.-N. Efficient Computation Offloading in Multi-Tier Multi-Access Edge Computing Systems: A Particle Swarm Optimization Approach. Appl. Sci. 2020, 10, 203.

28. Zhao, X.; Yang, F.; Han, Y.; Cui, Y. An Opposition-Based Chaotic Salp Swarm Algorithm for Global Optimization. IEEE Access 2020, 8, 36485–36501.

29. Li, J.; Shang, Y.; Qin, M.; Yang, Q.; Cheng, N.; Gao, W.; Kwak, K.S. Multiobjective Oriented Task Scheduling in Heterogeneous Mobile Edge Computing Networks. IEEE Trans. Veh. Technol. 2022, 71, 8955–8966.

30. Ma, S.; Song, S.; Yang, S.; Zhao, J.; Yang, F.; Zhai, L. Dependent tasks offloading based on particle swarm optimization algorithm in multi-access edge computing. Appl. Soft Comput. 2021, 112, 107790.

31. Zhang, Y.; Chen, J.; Zhou, Y.; Yang, L.; He, B. Dependent task offloading with an energy-latency tradeoff in mobile edge computing. IET Commun. 2022, 16, 1993–2001.

32. Chai, F.; Zhang, Q.; Yao, H.; Xin, X.; Gao, R.; Guizani, M. Joint Multi-task Offloading and Resource Allocation for Mobile Edge Computing Systems in Satellite IoT. IEEE Trans. Veh. Technol. 2023, 72, 7783–7795.

33. Cui, G.; Li, X.; Xu, L.; Wang, W. Latency and Energy Optimization for MEC Enhanced SAT-IoT Networks. IEEE Access 2020, 8, 55915–55926.

| Literature | Independent Task | Dependent Task | Satellite Computing | Local Computing | Ground Computing | Resource Allocation | Task Delay |
|---|---|---|---|---|---|---|---|
| [8,11,12] | √ | √ | √ | √ | √ | | |
| [10,14,18,19] | √ | √ | √ | √ | √ | | |
| [13,15,16,17,20] | √ | √ | √ | √ | √ | √ | |
| [21,24,25] | √ | √ | √ | | | | |
| [22,23,26] | √ | √ | √ | √ | | | |

| The Improved Sparrow Search Algorithm | Mapping Relationships |
|---|---|
| Individual vectors | Resource allocation results |
| Individual weight | The result of resource allocation for each subtask |
| Fitness function | Subtask completion delay |
| Population | Different collections of resource allocation policies |
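The mapping in this table can be made concrete with a small Python sketch. Everything beyond the table itself is an assumption made for illustration: the flat encoding `[f_1..f_n, b_1..b_n]`, the normalization of weights into shares of the total resources, the simplified delay model (upload time plus compute time, with a hypothetical `bits_per_hz` spectral efficiency), and the use of the slowest subtask as the set's completion delay. The paper's Equations (8)–(11) are not reproduced here and may differ.

```python
def decode(individual, f_max, b_max):
    """Turn one individual (positive weights) into allocation vectors F and B.

    The first half of the vector holds computing weights and the second half
    bandwidth weights; each half is scaled so the shares sum to the totals.
    """
    n = len(individual) // 2
    f_weights, b_weights = individual[:n], individual[n:]
    f_total, b_total = sum(f_weights), sum(b_weights)
    F = [f_max * w / f_total for w in f_weights]
    B = [b_max * w / b_total for w in b_weights]
    return F, B


def subtask_set_delay(individual, sizes_bits, cycles, f_max, b_max,
                      bits_per_hz=1.0):
    """Fitness under the assumed model: delay of the slowest subtask."""
    F, B = decode(individual, f_max, b_max)
    delays = [size / (bits_per_hz * b) + c / f
              for size, c, f, b in zip(sizes_bits, cycles, F, B)]
    return max(delays)


# Two subtasks, toy numbers chosen only to make the arithmetic easy to follow.
F, B = decode([1, 1, 1, 3], f_max=10, b_max=8)
print(F, B)  # [5.0, 5.0] [2.0, 6.0]
print(subtask_set_delay([1, 1, 1, 3], [8, 12], [10, 5], f_max=10, b_max=8))  # 6.0
```

Under this encoding, a population is simply a list of such vectors, and the search minimizes `subtask_set_delay` over them.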

| Parameters | Value |
|---|---|
| Maximum available computing resources of the satellite MEC | |
| Local computing resources | |
| Available bandwidth resources of the satellite MEC | 10 MHz |
| Subtask size | [50 kb, 100 kb] |
| Number of subtasks per DAG | 10–15 |
| CPU computing power | |
| Tolerable application latency | 0.5–1.5 s |
| Total number of iterations | 200 |
| Sparrow population size | 100 |
| Proportion of producers | 10% |
| Proportion of investigators | 20% |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Liu, Z.; Jiang, Y.; Rong, J. Resource Allocation Strategy for Satellite Edge Computing Based on Task Dependency. Appl. Sci. 2023, 13, 10027. https://doi.org/10.3390/app131810027
