A Calibration Method for Time Dimension and Space Dimension of Streak Tube Imaging Lidar

Abstract

1. Introduction

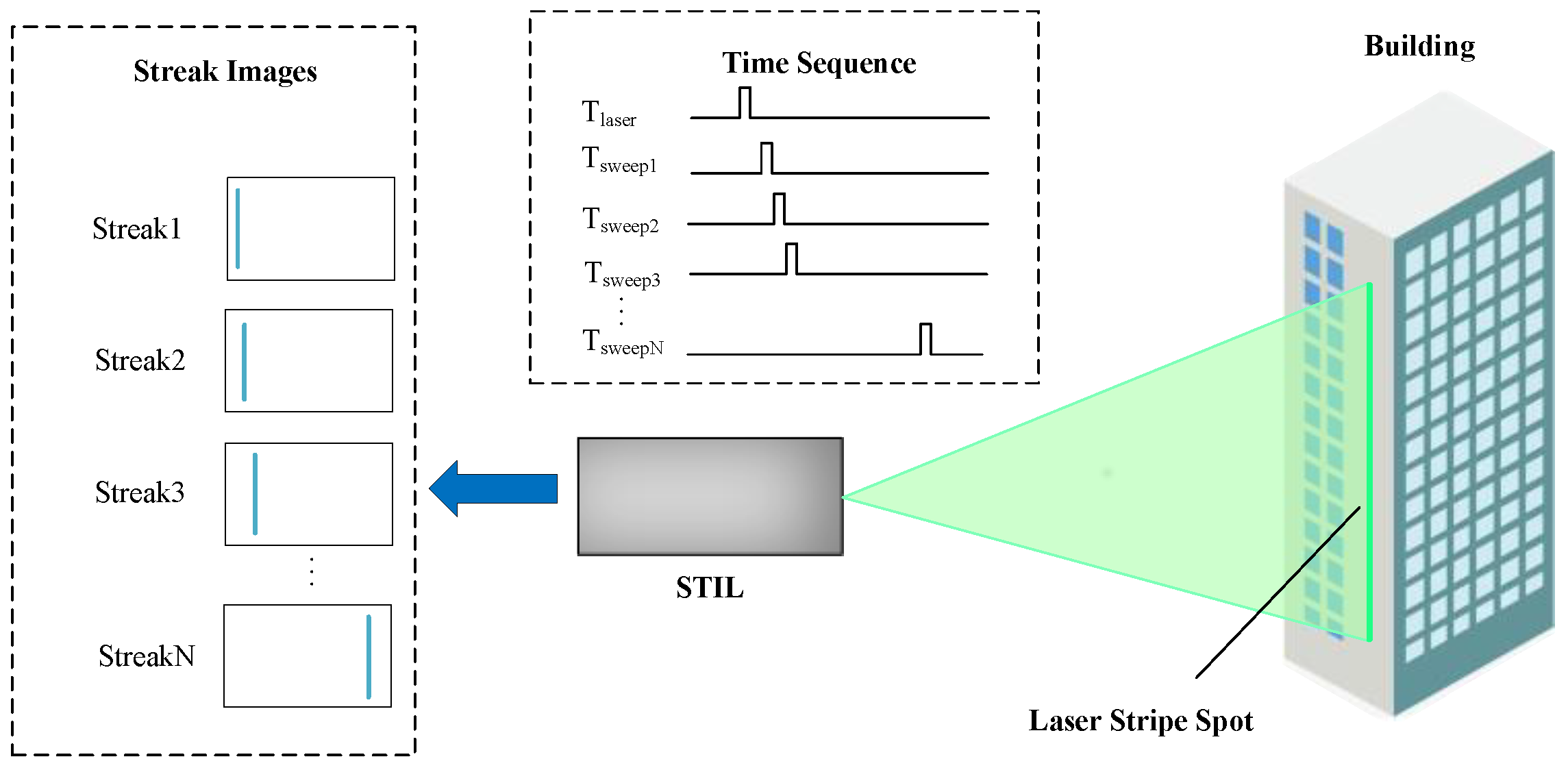

2. Coupling Mechanism

3. Calibration Method

3.1. Time Calibration

3.1.1. Time Calibration Experiment

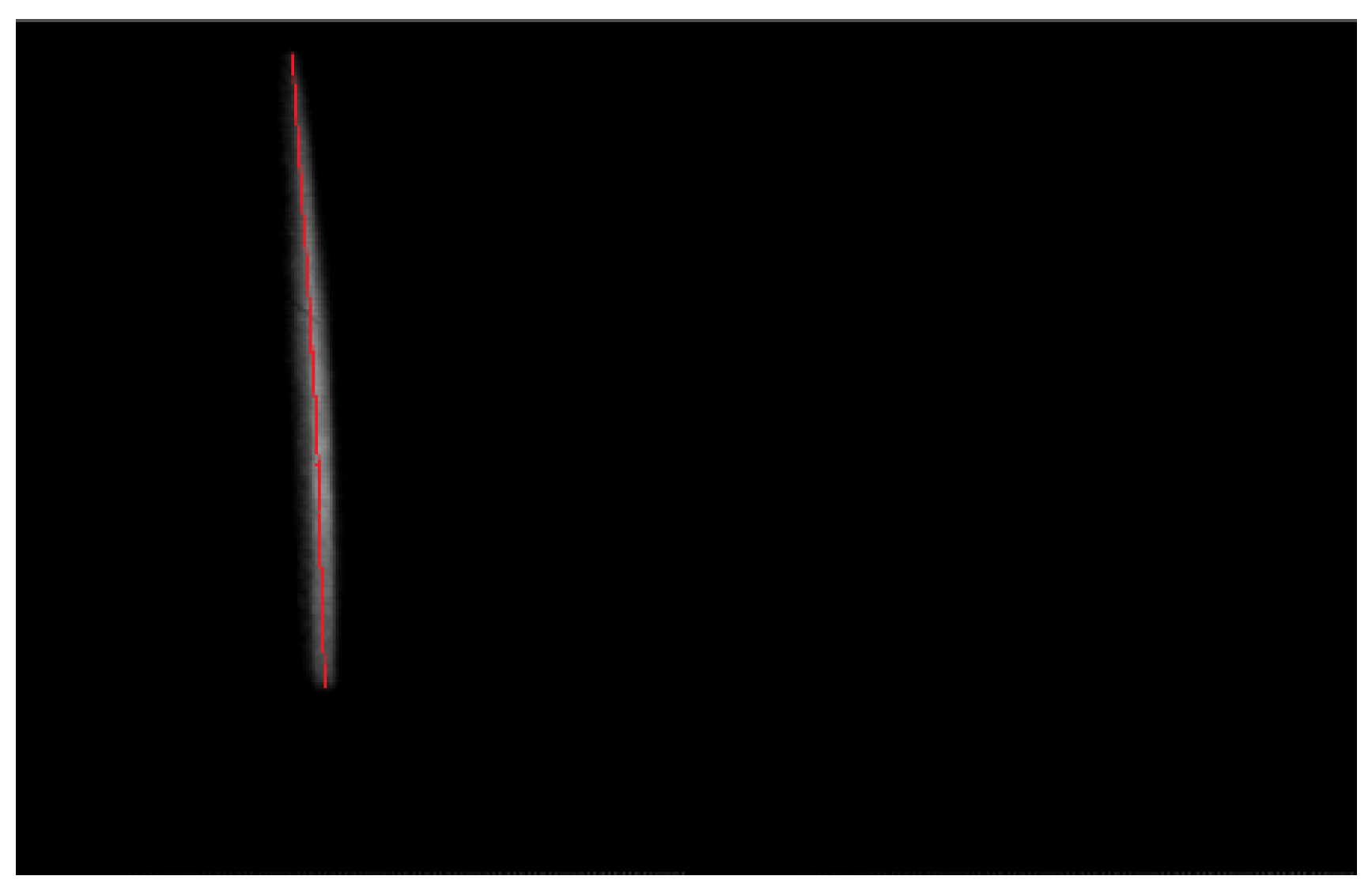

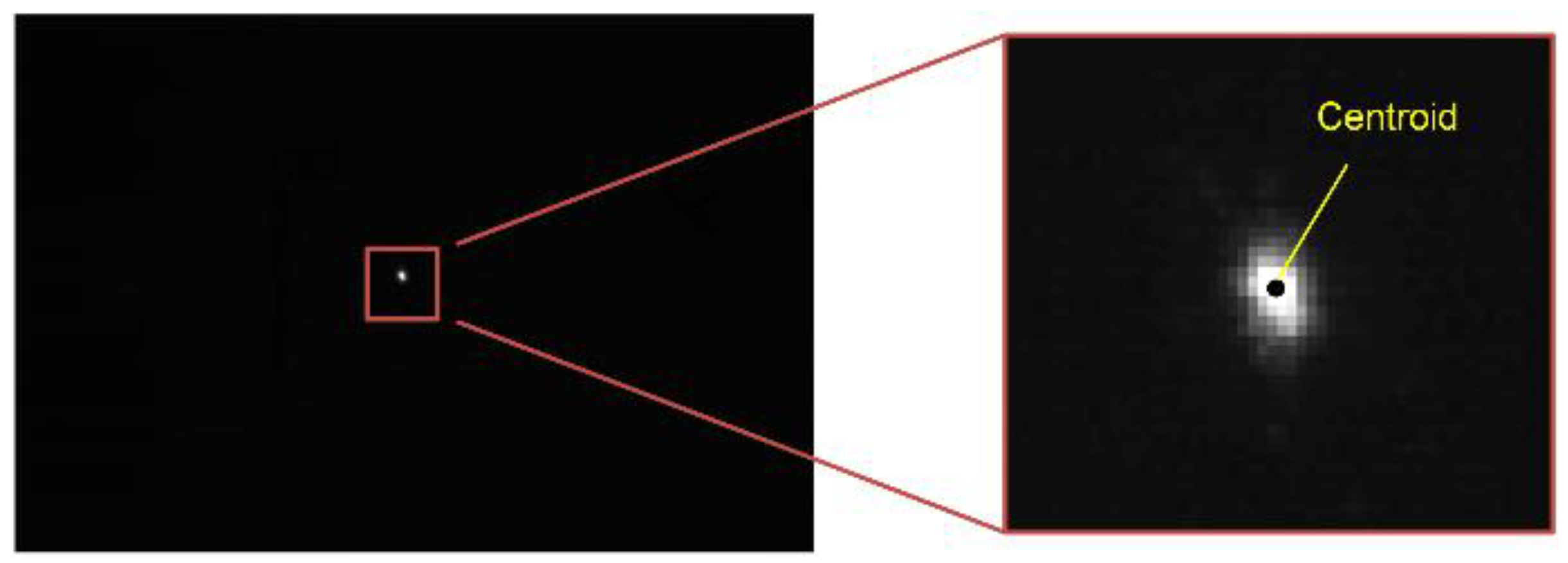

3.1.2. Data Processing for Time Calibration

3.2. Angle Calibration

3.2.1. Angle Calibration Experiment

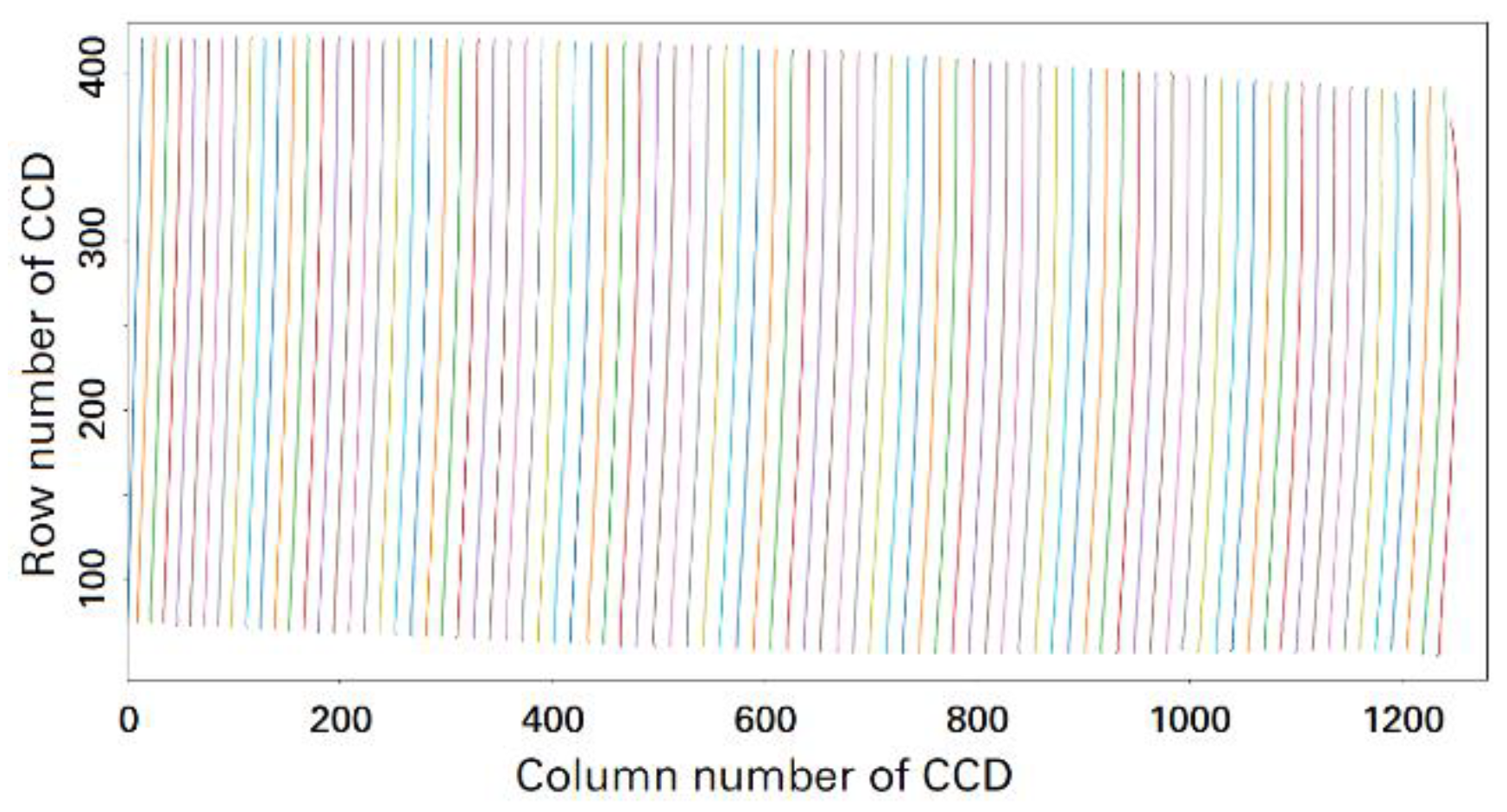

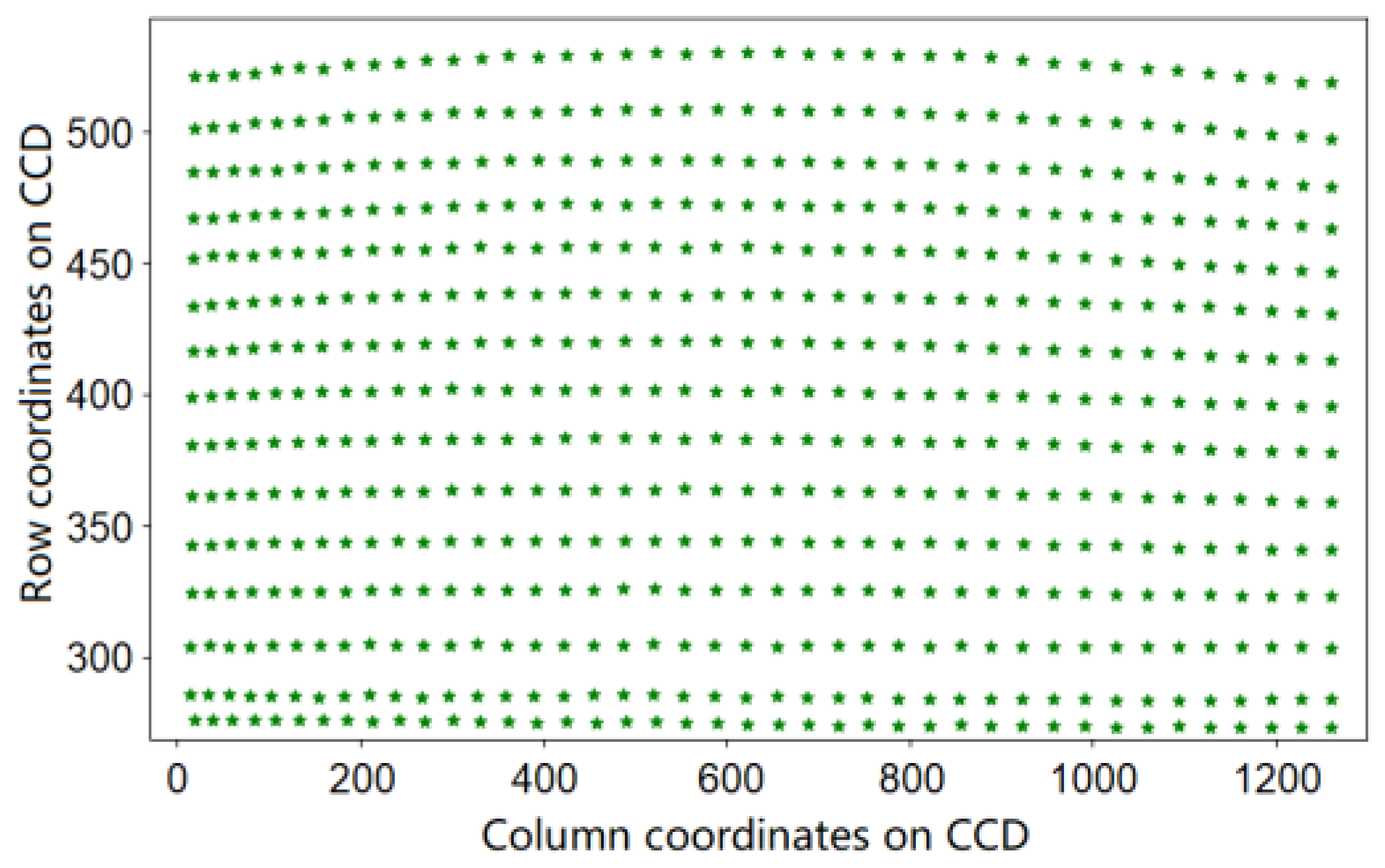

3.2.2. Data Processing for Angle Calibration

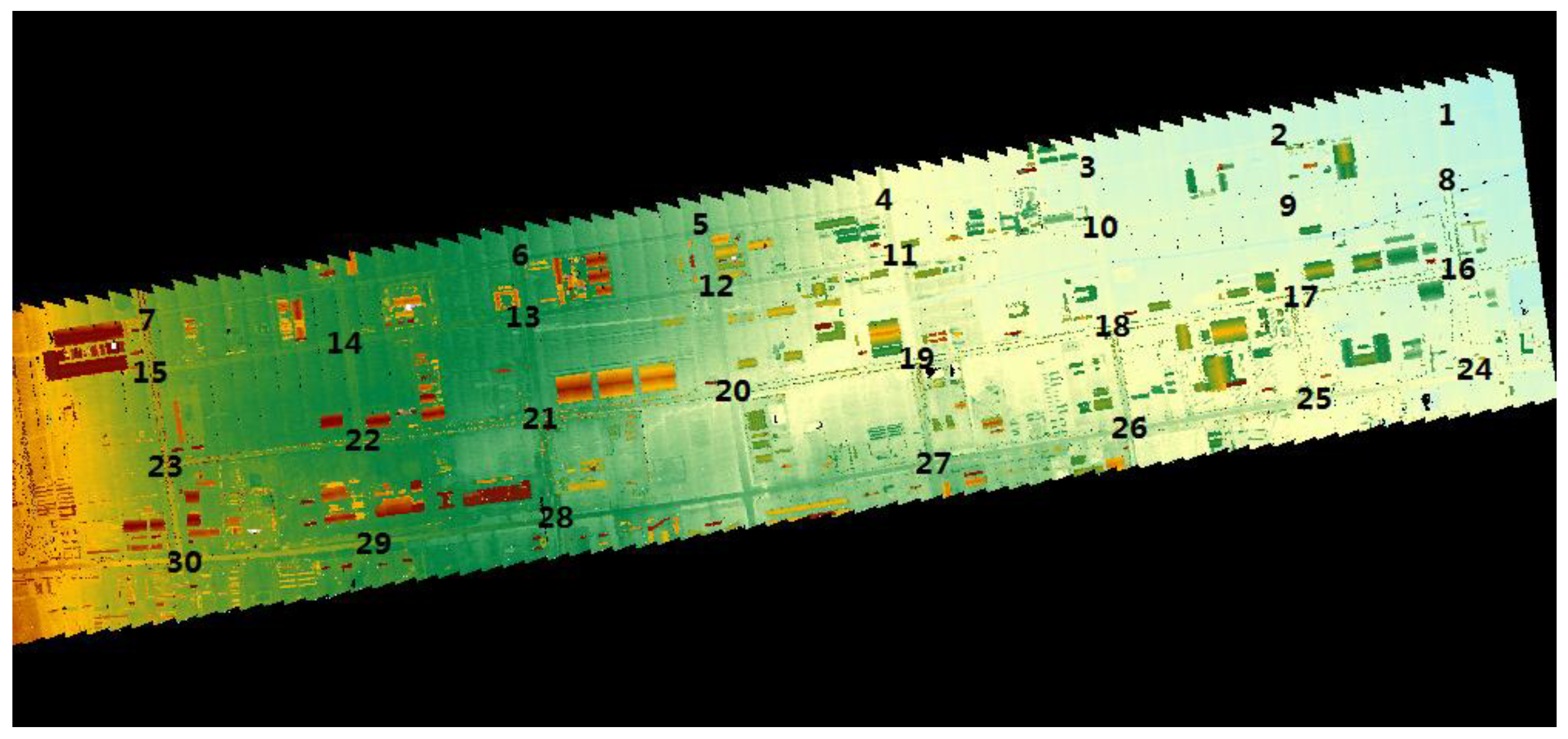

4. Calibration Results

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Chen, Z.; Shao, F.; Fan, Z.; Wang, X.; Dong, C.; Dong, Z.; Fan, R.; Chen, D. A Calibration Method for Time Dimension and Space Dimension of Streak Tube Imaging Lidar. Appl. Sci. 2023, 13, 10042. https://doi.org/10.3390/app131810042
