Automatic Robust Crackle Detection and Localization Approach Using AR-Based Spectral Estimation and Support Vector Machine

Abstract

1. Introduction

2. Materials and Methods

2.1. Dataset

2.2. Modeling of Simulated Crackle Sounds

2.3. Proposed Method

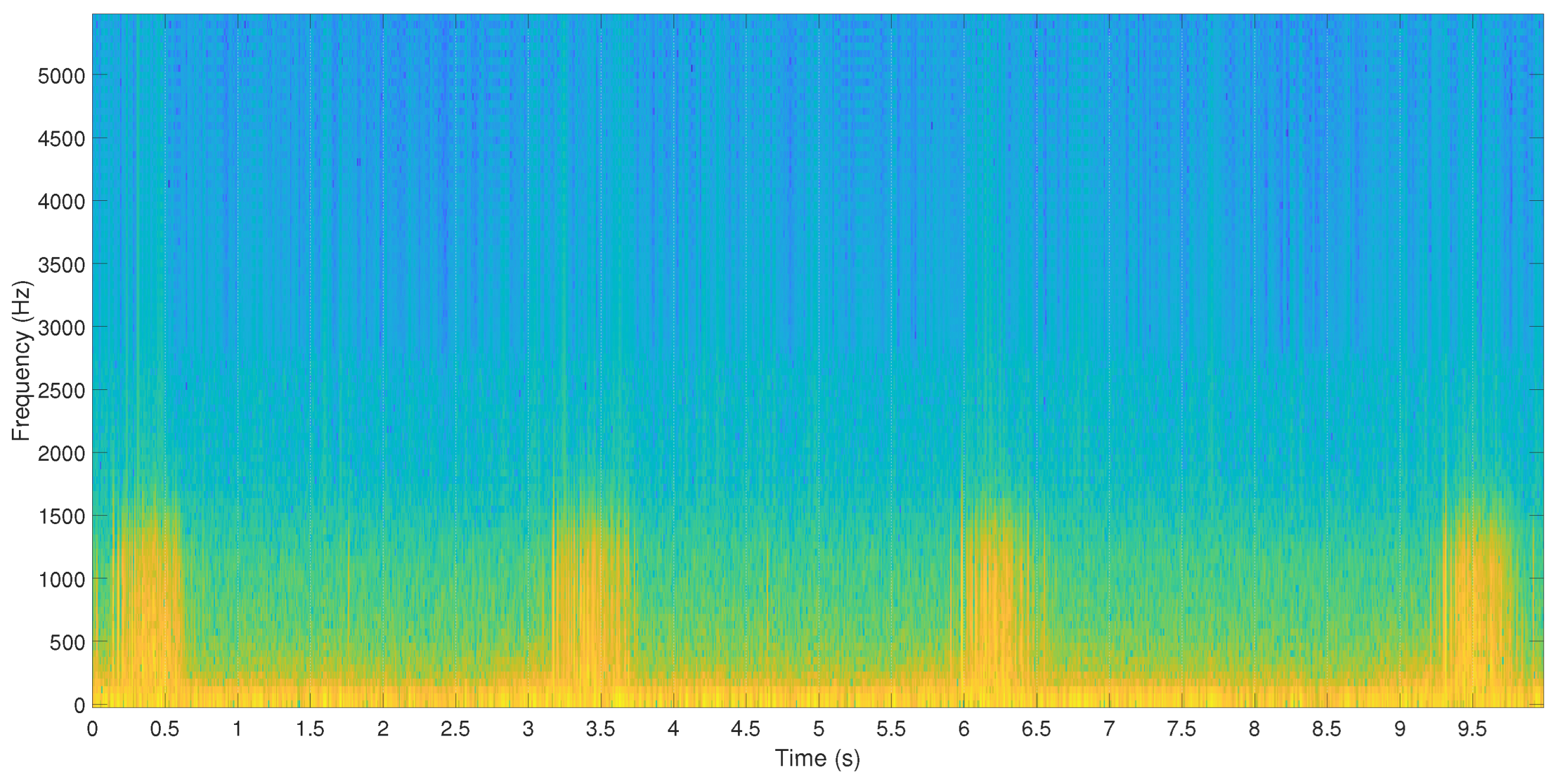

2.3.1. Preprocessing

2.3.2. Autoregressive (AR) Parameter Estimation
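
As a rough illustration of AR parameter estimation, the following minimal sketch assumes the Yule-Walker (autocorrelation) method solved with the Levinson-Durbin recursion, followed by evaluation of the resulting AR power spectrum. The estimator, the model order, and the framing are assumptions chosen for illustration, and the function names (`autocorrelation`, `levinson_durbin`, `ar_spectrum`) are hypothetical rather than taken from this work.

```python
import math


def autocorrelation(frame, max_lag):
    """Biased autocorrelation estimates r[0..max_lag] of a mean-removed frame."""
    n = len(frame)
    mean = sum(frame) / n
    centered = [v - mean for v in frame]
    return [
        sum(centered[i] * centered[i + lag] for i in range(n - lag)) / n
        for lag in range(max_lag + 1)
    ]


def levinson_durbin(r, order):
    """Solve the Yule-Walker equations for an AR model of the given order.

    Returns (a, err): a = [1, a1, ..., ap] are the coefficients of the
    prediction-error filter A(z) = 1 + a1 z^-1 + ... + ap z^-p, and err is
    the residual (driving-noise) power. Assumes r[0] > 0 (non-silent frame).
    """
    a = [1.0] + [0.0] * order
    err = r[0]
    for k in range(1, order + 1):
        acc = r[k] + sum(a[j] * r[k - j] for j in range(1, k))
        reflection = -acc / err
        prev = a[:]
        for j in range(1, k):
            a[j] = prev[j] + reflection * prev[k - j]
        a[k] = reflection
        err *= 1.0 - reflection * reflection
    return a, err


def ar_spectrum(a, err, n_bins):
    """AR power spectrum err / |A(e^{jw})|^2 at n_bins frequencies in [0, pi)."""
    psd = []
    for b in range(n_bins):
        w = math.pi * b / n_bins
        re = sum(c * math.cos(w * k) for k, c in enumerate(a))
        im = -sum(c * math.sin(w * k) for k, c in enumerate(a))
        psd.append(err / (re * re + im * im))
    return psd


# Autocorrelation of an ideal AR(1) process with pole 0.5 (illustrative input).
coeffs, residual = levinson_durbin([1.0, 0.5, 0.25], order=2)
# coeffs is approximately [1, -0.5, 0] and residual is approximately 0.75
```

In a frame-based detector, `autocorrelation` would be applied to each short analysis frame and the resulting spectrum (or the coefficients themselves) would serve as features; the frame length and hop size are left open here because they are not stated.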

2.3.3. SVM Classifier
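
To make the classification step concrete, here is a minimal sketch of a soft-margin SVM. It assumes a linear kernel trained by per-sample sub-gradient descent on the regularized hinge loss; the kernel, solver, and hyperparameters used in this work are not stated here, so `train_linear_svm`, `predict`, and the values of `reg`, `lr`, and `epochs` are hypothetical. Each row of `samples` stands for one feature vector (for example, features derived from an AR spectrum), with label +1 for "crackle" and -1 for "no crackle".

```python
def train_linear_svm(samples, labels, reg=0.01, lr=0.1, epochs=200):
    """Fit (w, b) by sub-gradient descent on the L2-regularized hinge loss.

    labels must contain only +1 and -1.
    """
    dim = len(samples[0])
    w = [0.0] * dim
    b = 0.0
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            score = sum(wj * xj for wj, xj in zip(w, x)) + b
            if y * score < 1.0:
                # Margin violated: the hinge term adds -y*x to the sub-gradient.
                w = [wj - lr * (reg * wj - y * xj) for wj, xj in zip(w, x)]
                b += lr * y
            else:
                # Margin satisfied: only the regularizer shrinks w.
                w = [wj - lr * reg * wj for wj in w]
    return w, b


def predict(w, b, x):
    """Return +1 or -1 depending on the side of the separating hyperplane."""
    score = sum(wj * xj for wj, xj in zip(w, x)) + b
    return 1 if score >= 0.0 else -1


# Toy, linearly separable feature vectors (illustrative values only).
toy_x = [[2.0, 2.0], [3.0, 3.0], [2.5, 3.0], [-2.0, -2.0], [-3.0, -3.0], [-2.5, -3.0]]
toy_y = [1, 1, 1, -1, -1, -1]
weights, bias = train_linear_svm(toy_x, toy_y)
```

A linear model is used only to keep the sketch short; a kernel SVM from an established library would be the usual choice when the classes are not linearly separable.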

3. Evaluation

3.1. Metrics
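
The result tables report accuracy, sensitivity, and precision. The sketch below computes them from confusion counts under the standard definitions, which is an assumption: the exact definitions used in this work (for example, event-based variants that omit true negatives) are not given here, and `detection_metrics` is a hypothetical helper.

```python
def detection_metrics(tp, fp, tn, fn):
    """Accuracy, sensitivity, and precision (as fractions) from confusion counts.

    tp/fp/tn/fn are true positives, false positives, true negatives, and
    false negatives. A ratio with an empty denominator is reported as 0.0.
    """
    total = tp + fp + tn + fn
    return {
        "accuracy": (tp + tn) / total if total else 0.0,
        "sensitivity": tp / (tp + fn) if (tp + fn) else 0.0,
        "precision": tp / (tp + fp) if (tp + fp) else 0.0,
    }


# Illustrative counts only; they do not come from the reported experiments.
example = detection_metrics(tp=90, fp=5, tn=100, fn=10)
```

Sensitivity penalizes missed crackles, while precision penalizes false alarms, which is why a method can score high on one and low on the other.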

3.2. Setup

3.3. State-of-the-Art Methods for Comparison

3.4. Results and Discussion

4. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- World Health Organization. Pneumonia. 2017. Available online: https://www.who.int/health-topics/pneumonia#tab=tab_1 (accessed on 23 September 2023).

- Pneumonia—Diagnosis and treatment—Mayo Clinic. 2021. Available online: https://www.mayoclinic.org/diseases-conditions/pneumonia/diagnosis-treatment/drc-20354210 (accessed on 23 September 2023).

- Ponte, D.F.; Moraes, R.; Hizume, D.C.; Alencar, A.M. Characterization of crackles from patients with fibrosis, heart failure and pneumonia. Med. Eng. Phys. 2013, 35, 448–456.

- İçer, S.; Gengeç, Ş. Classification and analysis of non-stationary characteristics of crackle and rhonchus lung adventitious sounds. Digit. Signal Process. 2014, 28, 18–27.

- Pancaldi, F.; Sebastiani, M.; Cassone, G.; Luppi, F.; Cerri, S.; Della Casa, G.; Manfredi, A. Analysis of pulmonary sounds for the diagnosis of interstitial lung diseases secondary to rheumatoid arthritis. Comput. Biol. Med. 2018, 96, 91–97.

- Reyes, B.A.; Olvera-Montes, N.; Charleston-Villalobos, S.; González-Camarena, R.; Mejía-Ávila, M.; Aljama-Corrales, T. A smartphone-based system for automated bedside detection of crackle sounds in diffuse interstitial pneumonia patients. Sensors 2018, 18, 3813.

- Sovijarvi, A.; Dalmasso, F.; Vanderschoot, J.; Malmberg, L.; Righini, G.; Stoneman, S. Definition of terms for applications of respiratory sounds. Eur. Respir. Rev. 2000, 10, 597–610.

- Salazar, A.J.; Alvarado, C.; Lozano, F.E. System of heart and lung sounds separation for store-and-forward telemedicine applications. In Revista Facultad de Ingeniería Universidad de Antioquia; 2012; pp. 175–181. Available online: https://revistas.udea.edu.co/index.php/ingenieria/issue/view/1223 (accessed on 21 September 2023).

- Sovijarvi, A. Characteristics of breath sounds and adventitious respiratory sounds. Eur. Respir. Rev. 2000, 10, 591–596.

- Pramono, R.X.A.; Bowyer, S.; Rodriguez-Villegas, E. Automatic adventitious respiratory sound analysis: A systematic review. PLoS ONE 2017, 12, e0177926.

- Hoevers, J.; Loudon, R.G. Measuring crackles. Chest 1990, 98, 1240–1243.

- Cohen, A. Signal processing methods for upper airway and pulmonary dysfunction diagnosis. IEEE Eng. Med. Biol. Mag. 1990, 9, 72–75.

- Speranza, C.G.; Moraes, R. Instantaneous frequency based index to characterize respiratory crackles. Comput. Biol. Med. 2018, 102, 21–29.

- Chan, T.K.; Chin, C.S. A Comprehensive Review of Polyphonic Sound Event Detection. IEEE Access 2020, 8, 103339–103373.

- Radad, M. Application of single-frequency time-space filtering technique for seismic ground roll and random noise attenuation. J. Earth Space Phys. 2018, 44, 41–51.

- Hadiloo, S.; Radad, M.; Mirzaei, S.; Foomezhi, M. Seismic facies analysis by ANFIS and fuzzy clustering methods to extract channel patterns. In Proceedings of the 79th EAGE Conference and Exhibition 2017, Paris, France, 12–15 June 2017; Volume 2017, pp. 1–5.

- Kaisla, T.; Sovijärvi, A.; Piirilä, P.; Rajala, H.; Haltsonen, S.; Rosqvist, T. Validated method for automatic detection of lung sound crackles. Med. Biol. Eng. Comput. 1991, 29, 517–521.

- Zhang, K.; Wang, X.; Han, F.; Zhao, H. The detection of crackles based on mathematical morphology in spectrogram analysis. Technol. Health Care 2015, 23, S489–S494.

- Hadjileontiadis, L.; Panas, S. Nonlinear separation of crackles and squawks from vesicular sounds using third-order statistics. In Proceedings of the 18th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Amsterdam, The Netherlands, 31 October–3 November 1996; Volume 5, pp. 2217–2219.

- Charleston-Villalobos, S.; Martinez-Hernandez, G.; Gonzalez-Camarena, R.; Chi-Lem, G.; Carrillo, J.G.; Aljama-Corrales, T. Assessment of multichannel lung sounds parameterization for two-class classification in interstitial lung disease patients. Comput. Biol. Med. 2011, 41, 473–482.

- Hadjileontiadis, L.J.; Panas, S.M. Separation of discontinuous adventitious sounds from vesicular sounds using a wavelet-based filter. IEEE Trans. Biomed. Eng. 1997, 44, 1269–1281.

- Lu, X.; Bahoura, M. An integrated automated system for crackles extraction and classification. Biomed. Signal Process. Control 2008, 3, 244–254.

- Serbes, G.; Sakar, C.O.; Kahya, Y.P.; Aydin, N. Pulmonary crackle detection using time–frequency and time–scale analysis. Digit. Signal Process. 2013, 23, 1012–1021.

- Stasiakiewicz, P.; Dobrowolski, A.P.; Targowski, T.; Gałązka-Świderek, N.; Sadura-Sieklucka, T.; Majka, K.; Skoczylas, A.; Lejkowski, W.; Olszewski, R. Automatic classification of normal and sick patients with crackles using wavelet packet decomposition and support vector machine. Biomed. Signal Process. Control 2021, 67, 102521.

- Hadjileontiadis, L.J. Wavelet-based enhancement of lung and bowel sounds using fractal dimension thresholding-Part I: Methodology. IEEE Trans. Biomed. Eng. 2005, 52, 1143–1148.

- Hadjileontiadis, L.J. Wavelet-based enhancement of lung and bowel sounds using fractal dimension thresholding-Part II: Application results. IEEE Trans. Biomed. Eng. 2005, 52, 1050–1064.

- Pinho, C.; Oliveira, A.; Jácome, C.; Rodrigues, J.; Marques, A. Automatic crackle detection algorithm based on fractal dimension and box filtering. Procedia Comput. Sci. 2015, 64, 705–712.

- Pal, R.; Barney, A. Iterative envelope mean fractal dimension filter for the separation of crackles from normal breath sounds. Biomed. Signal Process. Control 2021, 66, 102454.

- Liu, X.; Ser, W.; Zhang, J.; Goh, D.Y.T. Detection of adventitious lung sounds using entropy features and a 2-D threshold setting. In Proceedings of the 2015 10th International Conference on Information, Communications and Signal Processing (ICICS), Cairns, Australia, 2–4 December 2015; pp. 1–5.

- Rizal, A.; Hidayat, R.; Nugroho, H.A. Pulmonary crackle feature extraction using Tsallis entropy for automatic lung sound classification. In Proceedings of the 2016 1st International Conference on Biomedical Engineering (IBIOMED), Yogyakarta, Indonesia, 5–6 October 2016; pp. 1–4.

- Hadjileontiadis, L.J. Empirical mode decomposition and fractal dimension filter. IEEE Eng. Med. Biol. Mag. 2007, 26, 30.

- Mastorocostas, P.A.; Theocharis, J.B. A dynamic fuzzy neural filter for separation of discontinuous adventitious sounds from vesicular sounds. Comput. Biol. Med. 2007, 37, 60–69.

- Maruf, S.O.; Azhar, M.U.; Khawaja, S.G.; Akram, M.U. Crackle separation and classification from normal Respiratory sounds using Gaussian Mixture Model. In Proceedings of the 2015 IEEE 10th International Conference on Industrial and Information Systems (ICIIS), Peradeniya, Sri Lanka, 18–20 December 2015; pp. 267–271.

- Mendes, L.; Vogiatzis, I.M.; Perantoni, E.; Kaimakamis, E.; Chouvarda, I.; Maglaveras, N.; Henriques, J.; Carvalho, P.; Paiva, R.P. Detection of crackle events using a multi-feature approach. In Proceedings of the 2016 38th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Orlando, FL, USA, 16–20 August 2016; pp. 3679–3683.

- Li, J.; Hong, Y. Crackles detection method based on time-frequency features analysis and SVM. In Proceedings of the 2016 IEEE 13th International Conference on Signal Processing (ICSP), Chengdu, China, 6–10 November 2016; pp. 1412–1416.

- Grønnesby, M.; Solis, J.C.A.; Holsbø, E.; Melbye, H.; Bongo, L.A. Feature extraction for machine learning based crackle detection in lung sounds from a health survey. arXiv 2017, arXiv:1706.00005.

- Pramudita, B.A.; Istiqomah, I.; Rizal, A. Crackle detection in lung sound using statistical feature of variogram. In AIP Conference Proceedings; AIP Publishing LLC: Melville, NY, USA, 2020; Volume 2296, p. 020014.

- García, M.R.; Villalobos, S.C.; Villa, N.C.; González, A.J.; Camarena, R.G.; Corrales, T.A. Automated extraction of fine and coarse crackles by independent component analysis. Health Technol. 2020, 10, 459–463.

- Liu, Y.X.; Yang, Y.; Chen, Y.H. Lung sound classification based on Hilbert-Huang transform features and multilayer perceptron network. In Proceedings of the 2017 Asia-Pacific Signal and Information Processing Association Annual Summit and Conference (APSIPA ASC), Kuala Lumpur, Malaysia, 12–15 December 2017; pp. 765–768.

- Hong, K.J.; Essid, S.; Ser, W.; Foo, D.G. A robust audio classification system for detecting pulmonary edema. Biomed. Signal Process. Control 2018, 46, 94–103.

- Bardou, D.; Zhang, K.; Ahmad, S.M. Lung sounds classification using convolutional neural networks. Artif. Intell. Med. 2018, 88, 58–69.

- Nguyen, T.; Pernkopf, F. Lung sound classification using snapshot ensemble of convolutional neural networks. In Proceedings of the 2020 42nd Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), Montreal, QC, Canada, 20–24 July 2020; pp. 760–763.

- Messner, E.; Fediuk, M.; Swatek, P.; Scheidl, S.; Smolle-Jüttner, F.M.; Olschewski, H.; Pernkopf, F. Crackle and breathing phase detection in lung sounds with deep bidirectional gated recurrent neural networks. In Proceedings of the 2018 40th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Osaka, Japan, 3–7 July 2018; pp. 356–359.

- Perna, D.; Tagarelli, A. Deep auscultation: Predicting respiratory anomalies and diseases via recurrent neural networks. In Proceedings of the 2019 IEEE 32nd International Symposium on Computer-Based Medical Systems (CBMS), Cordoba, Spain, 5–7 June 2019; pp. 50–55.

- Acharya, J.; Basu, A. Deep neural network for respiratory sound classification in wearable devices enabled by patient specific model tuning. IEEE Trans. Biomed. Circuits Syst. 2020, 14, 535–544.

- Messner, E.; Fediuk, M.; Swatek, P.; Scheidl, S.; Smolle-Jüttner, F.M.; Olschewski, H.; Pernkopf, F. Multi-channel lung sound classification with convolutional recurrent neural networks. Comput. Biol. Med. 2020, 122, 103831.

- Hadjileontiadis, L.J.; Rekanos, I.T. Detection of explosive lung and bowel sounds by means of fractal dimension. IEEE Signal Process. Lett. 2003, 10, 311–314.

- Sankur, B.; Kahya, Y.P.; Güler, E.Ç.; Engin, T. Comparison of AR-based algorithms for respiratory sounds classification. Comput. Biol. Med. 1994, 24, 67–76.

- Kahya, Y.P.; Yeginer, M.; Bilgic, B. Classifying respiratory sounds with different feature sets. In Proceedings of the 2006 International Conference of the IEEE Engineering in Medicine and Biology Society, New York, NY, USA, 30 August–3 September 2006; pp. 2856–2859.

- Sen, I.; Saraclar, M.; Kahya, Y.P. A comparison of SVM and GMM-based classifier configurations for diagnostic classification of pulmonary sounds. IEEE Trans. Biomed. Eng. 2015, 62, 1768–1776.

- Dorantes-Mendez, G.; Charleston-Villalobos, S.; Gonzalez-Camarena, R.; Chi-Lem, G.; Carrillo, J.; Aljama-Corrales, T. Crackles detection using a time-variant autoregressive model. In Proceedings of the 2008 30th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Vancouver, BC, Canada, 20–24 August 2008; pp. 1894–1897.

- Henry, B.; Royston, T.J. Localization of adventitious respiratory sounds. J. Acoust. Soc. Am. 2018, 143, 1297–1307.

- Kompis, M.; Pasterkamp, H.; Wodicka, G.R. Acoustic imaging of the human chest. Chest 2001, 120, 1309–1321.

- Rao, A.; Huynh, E.; Royston, T.J.; Kornblith, A.; Roy, S. Acoustic methods for pulmonary diagnosis. IEEE Rev. Biomed. Eng. 2018, 12, 221–239.

- Rocha, B.M.; Pessoa, D.; Marques, A.; Carvalho, P.; Paiva, R.P. Automatic classification of adventitious respiratory sounds: A (un)solved problem? Sensors 2020, 21, 57.

- Pal, R.; Barney, A. A dataset for systematic testing of crackle separation techniques. In Proceedings of the 2019 41st Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Berlin, Germany, 23–27 July 2019; pp. 4690–4693.

- Charbonneau, G. Basic techniques for respiratory sound analysis. Eur. Respir. Rev. 2000, 10, 625–635.

- Kiyokawa, H.; Greenberg, M.; Shirota, K.; Pasterkamp, H. Auditory detection of simulated crackles in breath sounds. Chest 2001, 119, 1886–1892.

- Earis, J.; Cheetham, B. Current methods used for computerized respiratory sound analysis. Eur. Respir. Rev. 2000, 10, 586–590.

- Benesty, J.; Sondhi, M.M.; Huang, Y. (Eds.) Springer Handbook of Speech Processing; Springer: Berlin, Germany, 2008; Volume 1.

- Vapnik, V.; Guyon, I.; Hastie, T. Support Vector Machines.

- Aykanat, M.; Kılıç, Ö.; Kurt, B.; Saryal, S. Classification of lung sounds using convolutional neural networks. EURASIP J. Image Video Process. 2017, 2017, 1–9.

- Kochetov, K.; Putin, E.; Balashov, M.; Filchenkov, A.; Shalyto, A. Noise masking recurrent neural network for respiratory sound classification. In Proceedings of the International Conference on Artificial Neural Networks, Rhodes, Greece, 4–7 October 2018; pp. 208–217.

- Liu, R.; Cai, S.; Zhang, K.; Hu, N. Detection of adventitious respiratory sounds based on convolutional neural network. In Proceedings of the 2019 International Conference on Intelligent Informatics and Biomedical Sciences (ICIIBMS), Bangkok, Thailand, 21–24 October 2019; pp. 298–303.

- Minami, K.; Lu, H.; Kim, H.; Mabu, S.; Hirano, Y.; Kido, S. Automatic classification of large-scale respiratory sound dataset based on convolutional neural network. In Proceedings of the 2019 19th International Conference on Control, Automation and Systems (ICCAS), Jeju, Republic of Korea, 15–18 October 2019; pp. 804–807.

- Ma, Y.; Xu, X.; Yu, Q.; Zhang, Y.; Li, Y.; Zhao, J.; Wang, G. LungBRN: A smart digital stethoscope for detecting respiratory disease using bi-resnet deep learning algorithm. In Proceedings of the 2019 IEEE Biomedical Circuits and Systems Conference (BioCAS), Nara, Japan, 17–19 October 2019; pp. 1–4.

- Ngo, D.; Pham, L.; Nguyen, A.; Phan, B.; Tran, K.; Nguyen, T. Deep learning framework applied for predicting anomaly of respiratory sounds. In Proceedings of the 2021 International Symposium on Electrical and Electronics Engineering (ISEE), Ho Chi Minh City, Vietnam, 15–16 April 2021; pp. 42–47.

- Demir, F.; Ismael, A.M.; Sengur, A. Classification of lung sounds with CNN model using parallel pooling structure. IEEE Access 2020, 8, 105376–105383.

| Scenario | Type | Model | Noise | | Diagnosis | SNR | |
|---|---|---|---|---|---|---|---|
| Simulated | FCS [57] | ATS | 10 | 15 | - | [−10 dB, 10 dB] | 315 |
| Simulated | FCS [11] | Hoevers | 10 | 15 | - | [−10 dB, 10 dB] | 315 |
| Simulated | FCS [12] | Cohen | 10 | 15 | - | [−10 dB, 10 dB] | 315 |
| Simulated | CCS [57] | ATS | 10 | 15 | - | [−10 dB, 10 dB] | 315 |
| Simulated | CCS [11] | Hoevers | 10 | 15 | - | [−10 dB, 10 dB] | 315 |
| Simulated | CCS [12] | Cohen | 10 | 15 | - | [−10 dB, 10 dB] | 315 |
| Real | FCS [28,56] | - | 10 | 15 | IPF | [−10 dB, 10 dB] | 315 |
| Real | CCS [28,56] | - | 10 | 15 | BE | [−10 dB, 10 dB] | 315 |
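
The SNR column above suggests that each crackle signal is combined with background noise at a prescribed signal-to-noise ratio. The following minimal sketch shows one common way to do this, assuming a power-based SNR definition and equal-length sample lists; `signal_power`, `mix_at_snr`, and `measured_snr_db` are hypothetical helpers, not the authors' mixing procedure.

```python
import math


def signal_power(x):
    """Mean squared amplitude of a sequence of samples."""
    return sum(v * v for v in x) / len(x)


def mix_at_snr(signal, noise, snr_db):
    """Return signal + g * noise, with g chosen so the mixture has snr_db.

    SNR is defined here as 10 * log10(P_signal / P_scaled_noise).
    """
    p_noise = signal_power(noise)
    if p_noise == 0.0:
        raise ValueError("noise must have non-zero power")
    gain = math.sqrt(signal_power(signal) / (p_noise * 10.0 ** (snr_db / 10.0)))
    return [s + gain * n for s, n in zip(signal, noise)]


def measured_snr_db(signal, mixture):
    """SNR in dB of a mixture, given the clean signal it contains."""
    residual = [m - s for s, m in zip(signal, mixture)]
    return 10.0 * math.log10(signal_power(signal) / signal_power(residual))


# Illustrative samples only: at 0 dB the noise is scaled to the signal's power.
clean = [1.0, -1.0, 1.0, -1.0]
background = [2.0, 2.0, -2.0, -2.0]
mixture = mix_at_snr(clean, background, snr_db=0.0)
# mixture == [2.0, 0.0, 0.0, -2.0]
```

At −10 dB the scaled noise carries ten times the power of the crackle signal, which is the hardest end of the listed range.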

| Scenario | Type | Noise | | | Diagnosis | SNR | Accuracy (IEM-FD) | Accuracy (TVAR) | Accuracy (Proposed) | Sensitivity (IEM-FD) | Sensitivity (TVAR) | Sensitivity (Proposed) | Precision (IEM-FD) | Precision (TVAR) | Precision (Proposed) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Simulated 1 | FCS [57] | 10 | 15 | NR | - | [−10 dB, 10 dB] | 46.94% | 69.23% | 98.36% | 97.46% | 73.02% | 99.94% | 46.94% | 93.33% | 98.42% |
| Simulated 2 | FCS [11] | 10 | 15 | NR | - | [−10 dB, 10 dB] | 33.21% | 81.36% | 95.85% | 100% | 92.60% | 97.81% | 33.21% | 87.27% | 97.93% |
| Simulated 3 | FCS [12] | 10 | 15 | NR | - | [−10 dB, 10 dB] | 47.72% | 76.21% | 98.03% | 97.14% | 81.56% | 99.94% | 47.72% | 91.98% | 98.08% |
| Simulated 4 | CCS [57] | 10 | 15 | NR | - | [−10 dB, 10 dB] | 41.86% | 46.00% | 96.57% | 93.17% | 48.54% | 98.25% | 42.15% | 82.64% | 98.27% |
| Simulated 5 | CCS [11] | 10 | 15 | NR | - | [−10 dB, 10 dB] | 47.63% | 71.86% | 98.63% | 97.46% | 76.73% | 99.90% | 47.63% | 91.68% | 98.71% |
| Simulated 6 | CCS [12] | 10 | 15 | NR | - | [−10 dB, 10 dB] | 41.12% | 38.68% | 95.47% | 91.49% | 40.76% | 96.86% | 41.46% | 76.70% | 98.54% |
| Real 1 | FCS [28,56] | 10 | 15 | NR | IPF | [−10 dB, 10 dB] | 30.70% | 92.04% | 94.59% | 79.96% | 97.30% | 96.10% | 33.26% | 94.39% | 98.32% |
| Real 2 | CCS [28,56] | 10 | 15 | NR | BE | [−10 dB, 10 dB] | 30.64% | 22.70% | 74.78% | 46.54% | 23.87% | 76.03% | 53.86% | 66.33% | 97.72% |

| Scenario | Type | Noise | | | Diagnosis | SNR | Accuracy (%), SVM | Accuracy (%), CNN | Sensitivity (%), SVM | Sensitivity (%), CNN | Precision (%), SVM | Precision (%), CNN |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Simulated 1 | FCS [57] | 10 | 15 | NR | - | [−10 dB, 10 dB] | 98.36 | 97.61 | 99.94 | 97.94 | 98.42 | 99.49 |
| Simulated 2 | FCS [11] | 10 | 15 | NR | - | [−10 dB, 10 dB] | 95.85 | 97.93 | 97.81 | 98.28 | 97.93 | 99.53 |
| Simulated 3 | FCS [12] | 10 | 15 | NR | - | [−10 dB, 10 dB] | 98.03 | 97.44 | 99.94 | 97.72 | 98.08 | 99.50 |
| Simulated 4 | CCS [57] | 10 | 15 | NR | - | [−10 dB, 10 dB] | 96.57 | 92.98 | 98.25 | 92.73 | 98.27 | 99.60 |
| Simulated 5 | CCS [11] | 10 | 15 | NR | - | [−10 dB, 10 dB] | 98.63 | 98.25 | 99.90 | 98.54 | 98.71 | 99.55 |
| Simulated 6 | CCS [12] | 10 | 15 | NR | - | [−10 dB, 10 dB] | 95.47 | 94.34 | 96.86 | 94.21 | 98.54 | 99.58 |
| Real 1 | FCS [28,56] | 10 | 15 | NR | IPF | [−10 dB, 10 dB] | 94.58 | 91.42 | 96.10 | 93.11 | 98.32 | 99.34 |
| Real 2 | CCS [28,56] | 10 | 15 | NR | BE | [−10 dB, 10 dB] | 74.78 | 72.34 | 76.03 | 74.00 | 97.72 | 98.12 |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Mang, L.D.; Carabias-Orti, J.J.; Canadas-Quesada, F.J.; de la Torre-Cruz, J.; Muñoz-Montoro, A.; Revuelta-Sanz, P.; Combarro, E.F. Automatic Robust Crackle Detection and Localization Approach Using AR-Based Spectral Estimation and Support Vector Machine. Appl. Sci. 2023, 13, 10683. https://doi.org/10.3390/app131910683
