Detection and Classification of Buildings by Height from Single Urban High-Resolution Remote Sensing Images

Abstract

1. Introduction

- The method is designed for urban images that contain large shadowed areas, and it makes full use of shadow information to detect buildings and classify them by height.

- Image-quality requirements are low: only the RGB bands are used to extract buildings and classify them by height level, and neither reference heights nor related angle information is required.

- Seed blocks allow the approach to detect buildings with high precision and a low omission rate.

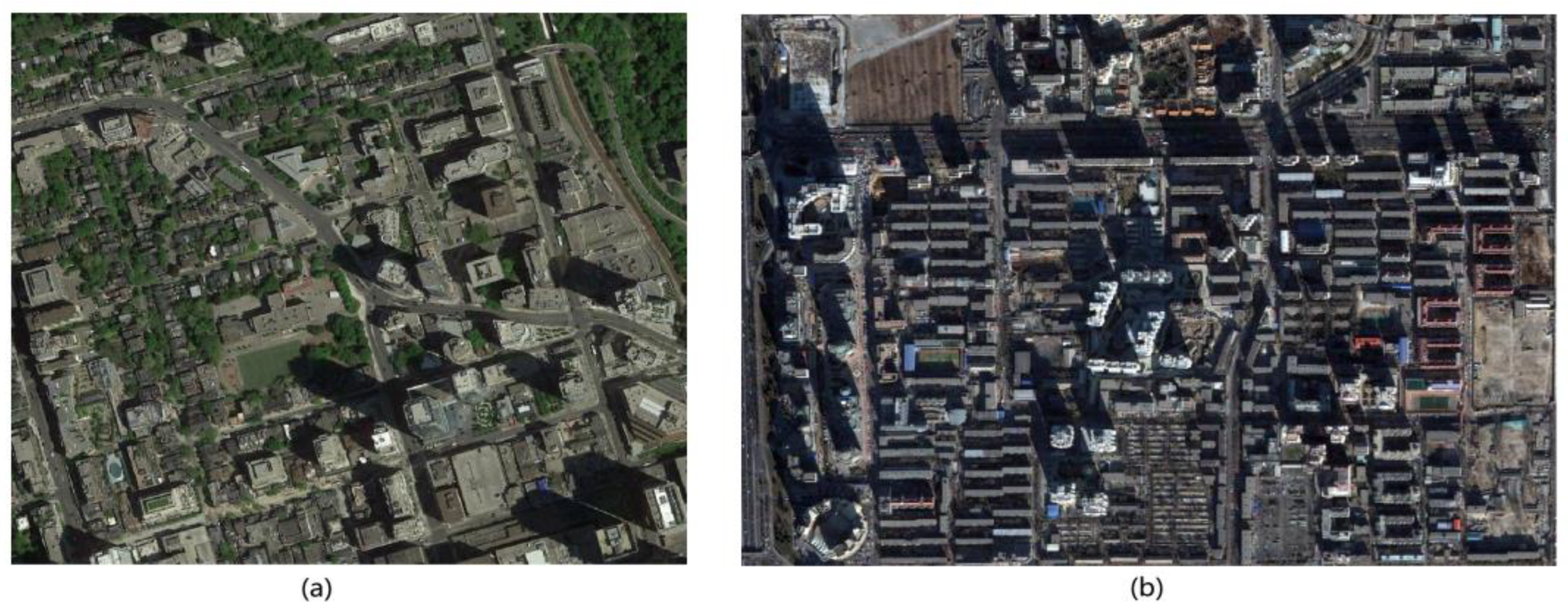

2. Experimental Data and Study Areas

3. Methodology

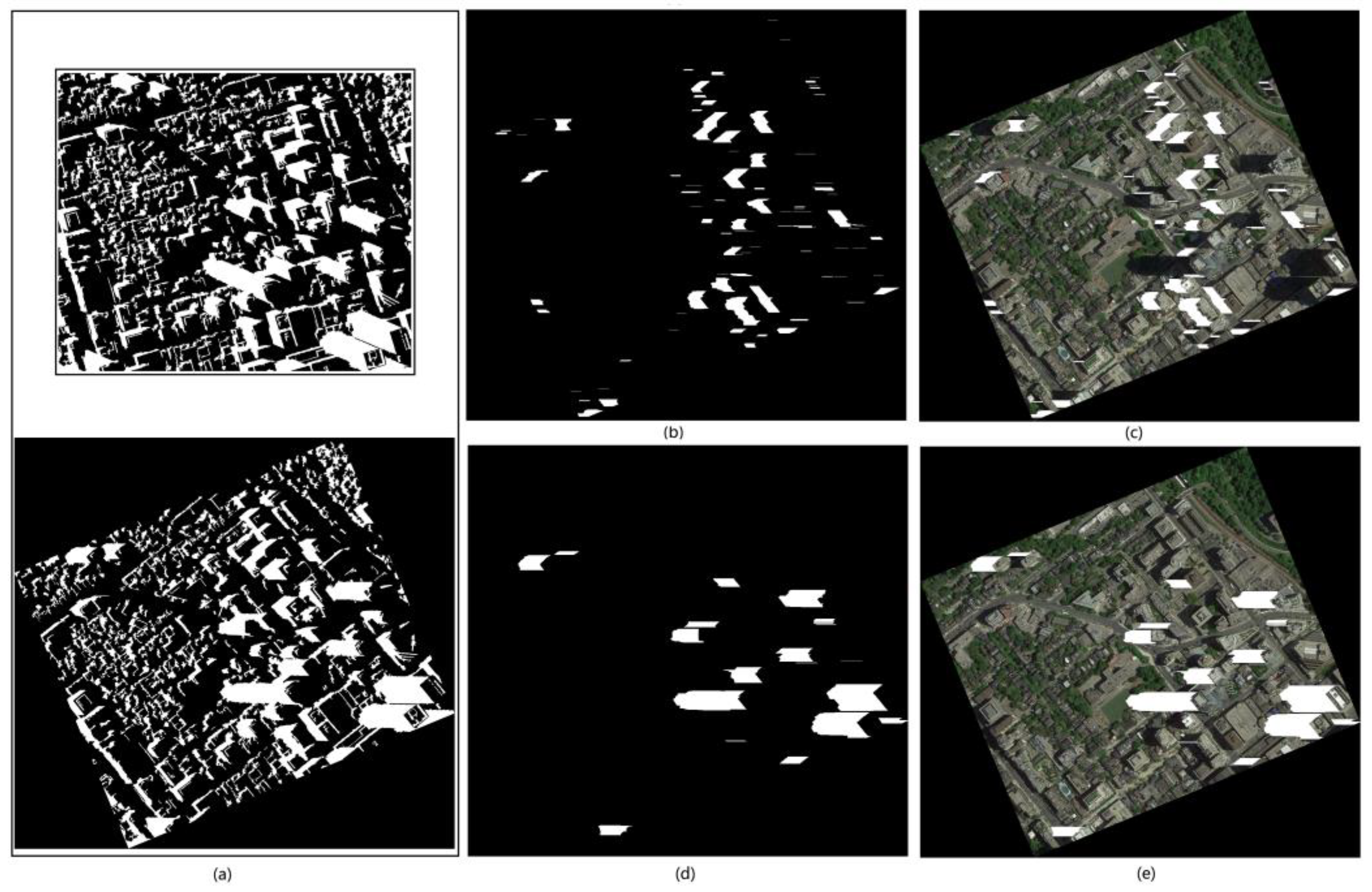

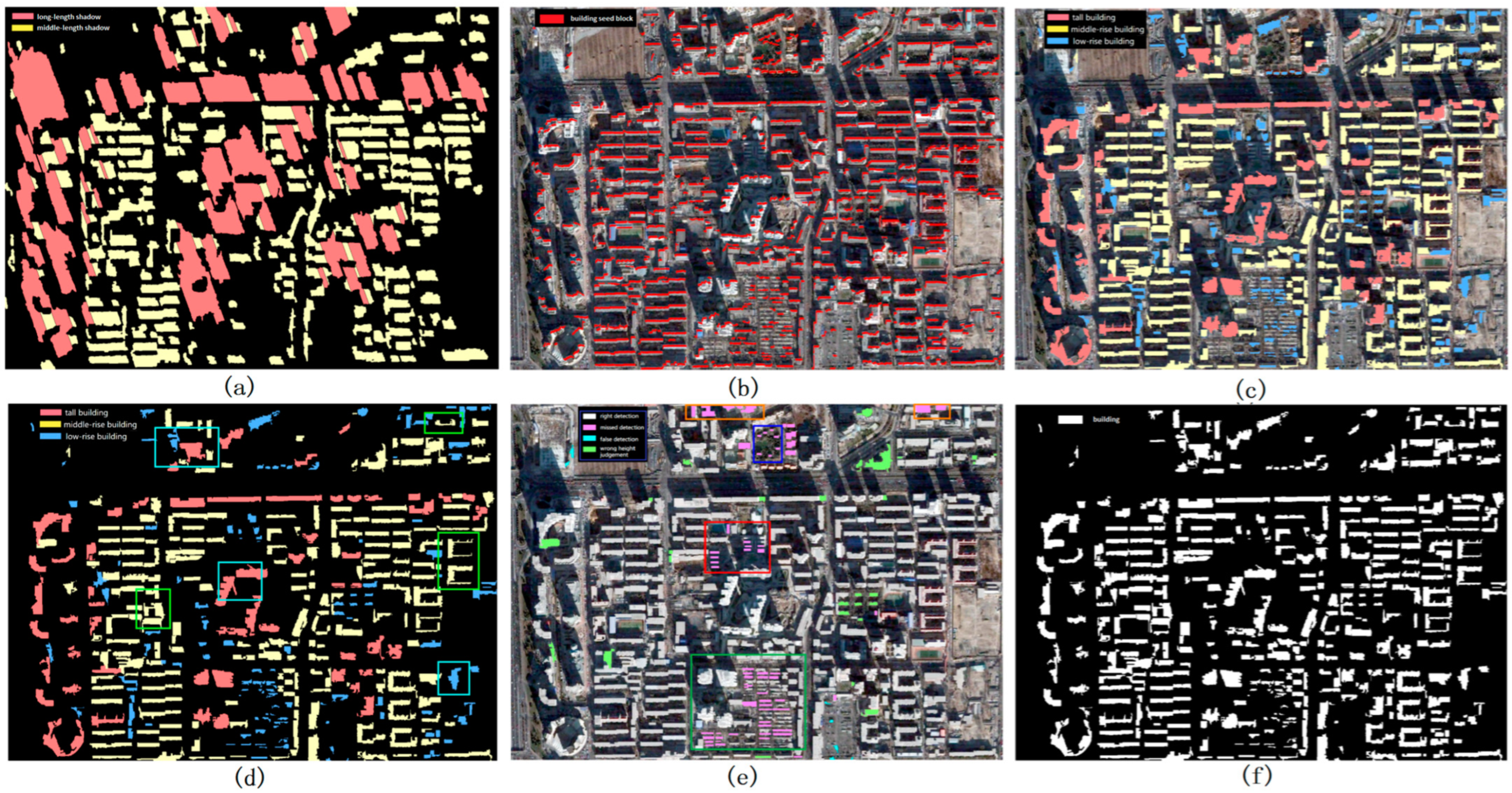

3.1. Shadow Detection
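Because the method relies only on the RGB bands, shadow detection has to work from color alone. As one illustration, and not necessarily the detector used in this paper, the sketch below flags pixels that are both dark and blue-shifted according to the c3 invariant, c3 = arctan(B / max(R, G)), from the c1c2c3 color model of Salvador, Cavallaro and Ebrahimi (2001). Shadowed surfaces are lit mainly by diffuse blue skylight, so their c3 tends to be high. The thresholds `c3_min` and `brightness_max` and the function names are hypothetical placeholders that would need tuning for each image.

```python
import math


def c3_invariant(r: float, g: float, b: float) -> float:
    """c3 = arctan(B / max(R, G)) from the c1c2c3 invariant color model."""
    denom = max(r, g)
    if denom == 0:
        # Pure blue maps to pi/2; a black pixel has no defined ratio, so return 0.
        return math.pi / 2 if b > 0 else 0.0
    return math.atan(b / denom)


def shadow_mask(image, c3_min=0.8, brightness_max=80.0):
    """Flag pixels that are both dark and blue-shifted as shadow candidates.

    image: rows of (R, G, B) tuples with values in 0-255.
    c3_min, brightness_max: hypothetical thresholds, to be tuned per scene.
    """
    return [
        [
            c3_invariant(r, g, b) >= c3_min and (r + g + b) / 3.0 <= brightness_max
            for (r, g, b) in row
        ]
        for row in image
    ]
```

Dark but neutral surfaces such as asphalt fail the c3 test, which is the point of combining the two criteria.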

3.2. Shadow Direction Acquisition

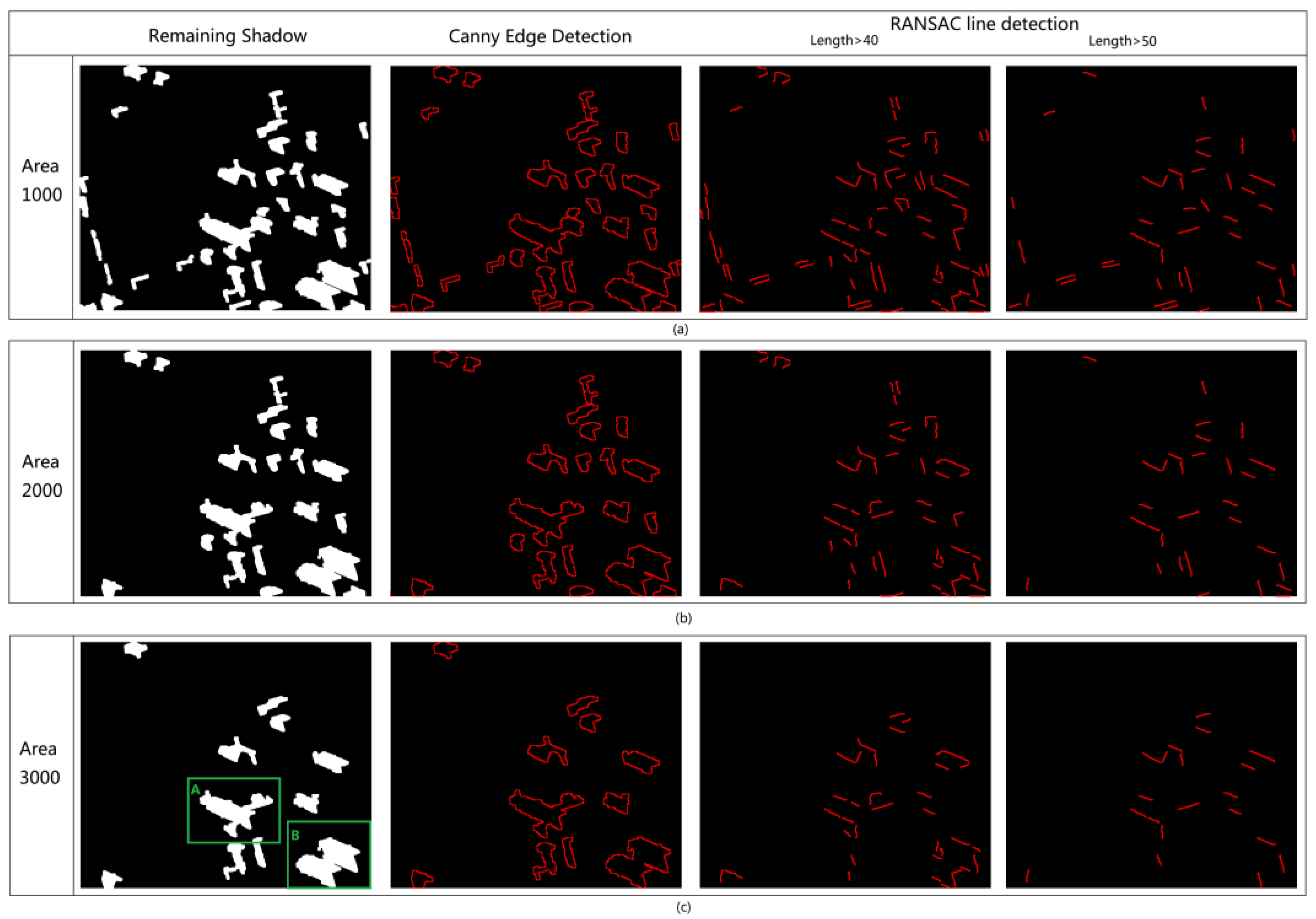

3.2.1. Shadow Filtered by Area
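A common way to filter shadow candidates by area is to label connected regions and discard those below a minimum pixel count, which removes small dark objects and noise before any direction is estimated. The sketch assumes 4-connectivity and a hypothetical `min_area` threshold; the criterion actually used in the paper may differ.

```python
from collections import deque


def filter_by_area(mask, min_area):
    """Keep only 4-connected True regions with at least `min_area` pixels.

    mask: list of equal-length rows of booleans (True = shadow candidate).
    min_area: hypothetical minimum region size, in pixels.
    """
    rows = len(mask)
    cols = len(mask[0]) if rows else 0
    seen = [[False] * cols for _ in range(rows)]
    kept = [[False] * cols for _ in range(rows)]
    for r0 in range(rows):
        for c0 in range(cols):
            if not mask[r0][c0] or seen[r0][c0]:
                continue
            # Breadth-first flood fill collects one connected region.
            region = []
            queue = deque([(r0, c0)])
            seen[r0][c0] = True
            while queue:
                r, c = queue.popleft()
                region.append((r, c))
                for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    nr, nc = r + dr, c + dc
                    if (0 <= nr < rows and 0 <= nc < cols
                            and mask[nr][nc] and not seen[nr][nc]):
                        seen[nr][nc] = True
                        queue.append((nr, nc))
            # Regions below the area threshold are treated as noise.
            if len(region) >= min_area:
                for r, c in region:
                    kept[r][c] = True
    return kept
```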

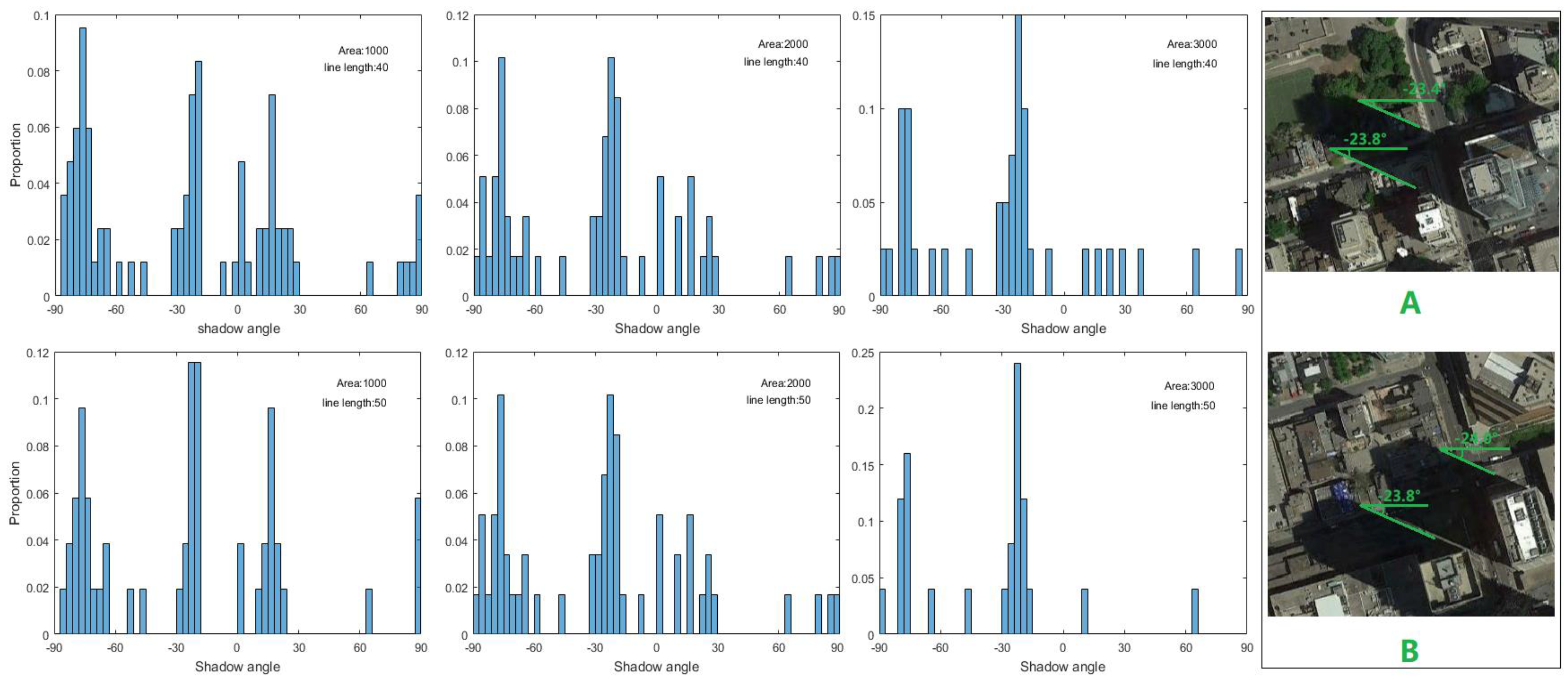

3.2.2. Estimating the Shadow Angle
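One standard way to estimate the dominant orientation of an elongated shadow region is from its second-order central moments, θ = ½·atan2(2μ11, μ20 − μ02). This is a swapped-in illustration, not necessarily how the shadow angle is estimated in this paper, and the function name is hypothetical. The result is an axis defined modulo 180°, so the sense of the shadow (for example, north versus south) still has to be resolved separately.

```python
import math


def principal_orientation(pixels):
    """Major-axis orientation of a region, in degrees within [0, 180).

    pixels: (x, y) coordinates with x = column and y = row (pointing down),
    so angles run from the +x axis toward the +y axis.
    """
    n = len(pixels)
    if n < 2:
        raise ValueError("need at least two pixels")
    mean_x = sum(x for x, _ in pixels) / n
    mean_y = sum(y for _, y in pixels) / n
    # Second-order central moments of the pixel coordinates.
    mu20 = sum((x - mean_x) ** 2 for x, _ in pixels) / n
    mu02 = sum((y - mean_y) ** 2 for _, y in pixels) / n
    mu11 = sum((x - mean_x) * (y - mean_y) for x, y in pixels) / n
    theta = 0.5 * math.atan2(2.0 * mu11, mu20 - mu02)
    # Fold into [0, 180): the moments give an axis, not a direction.
    return math.degrees(theta) % 180.0
```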

3.2.3. Shadow Direction Confirmation

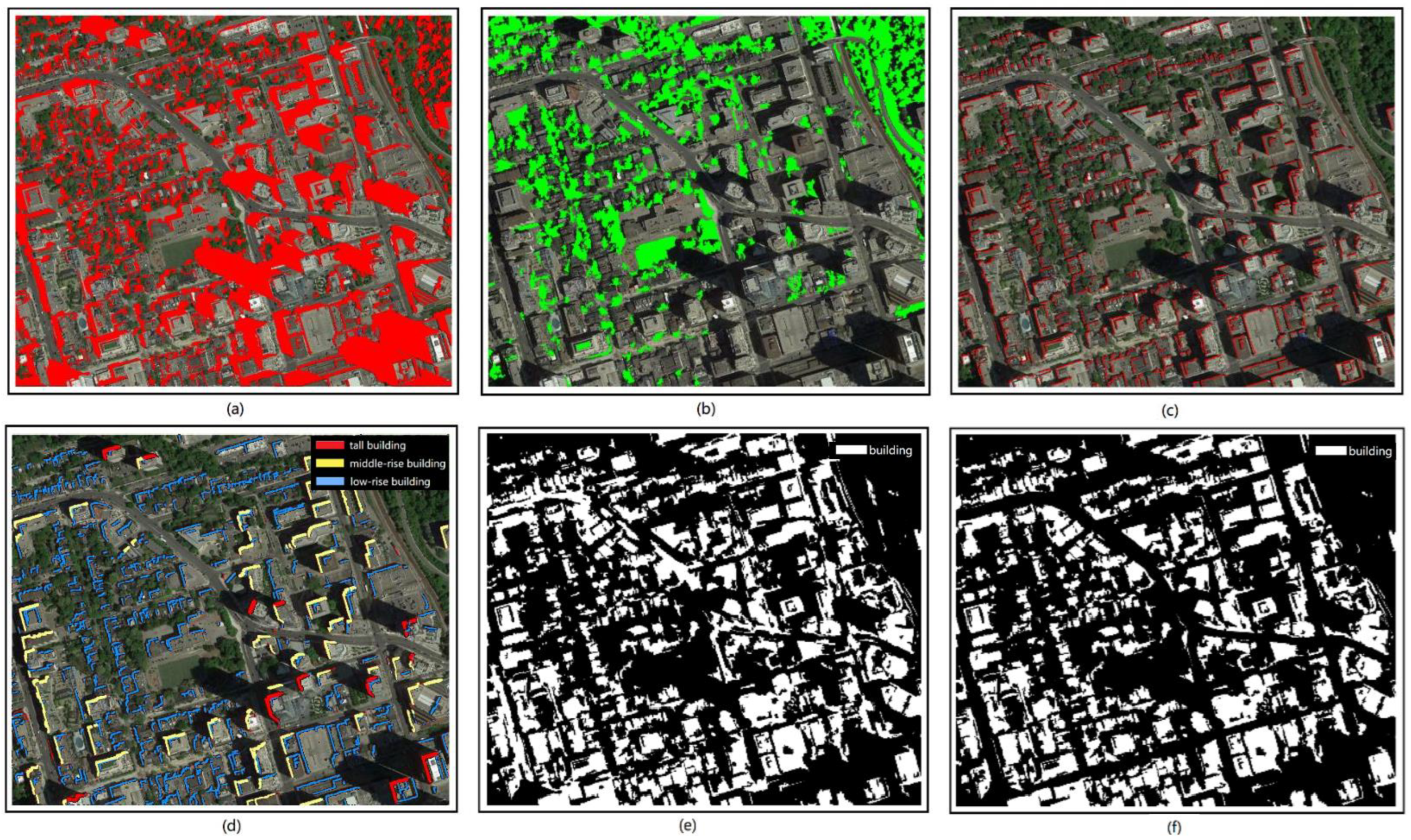

3.3. Building Detection

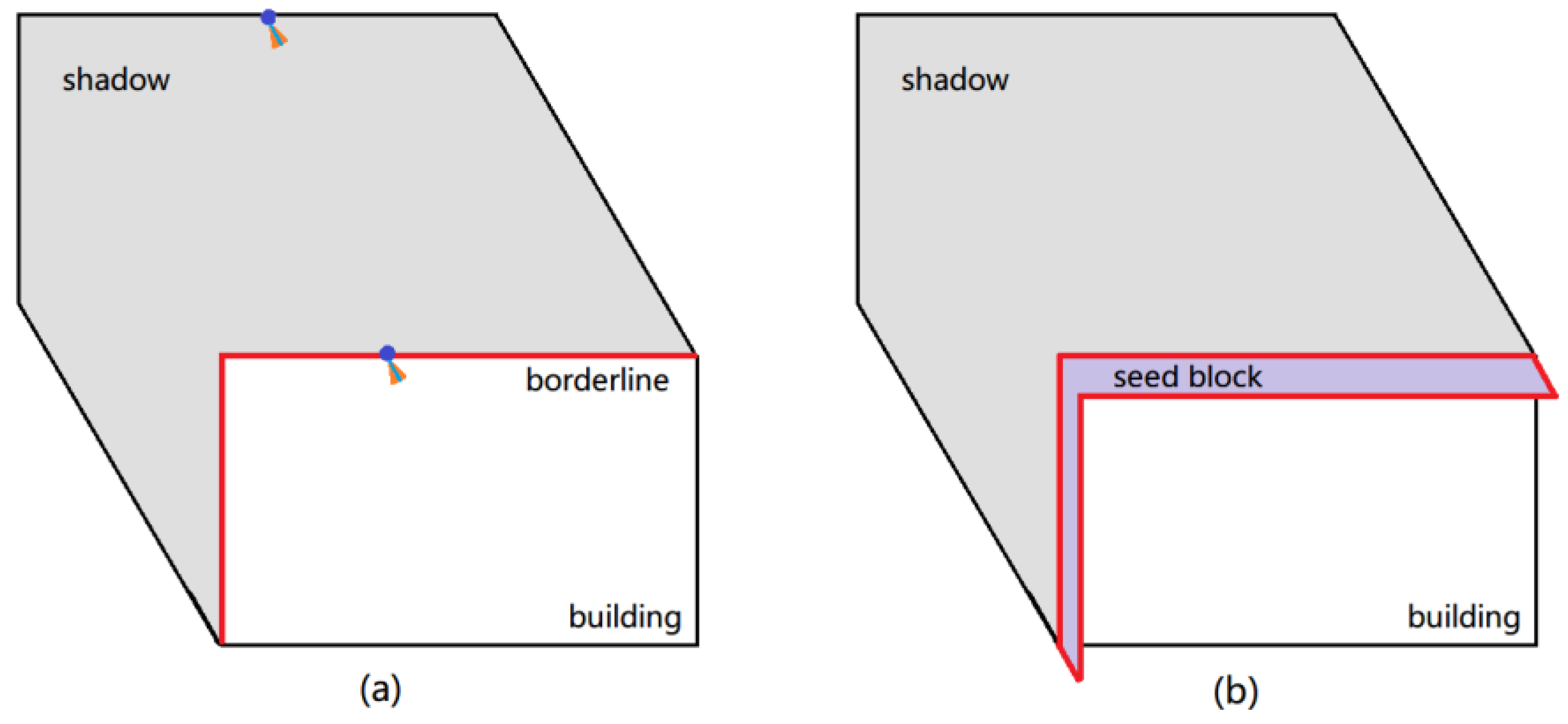

3.3.1. Seed-Block Generation

3.3.2. Set Reliable Areas for Buildings

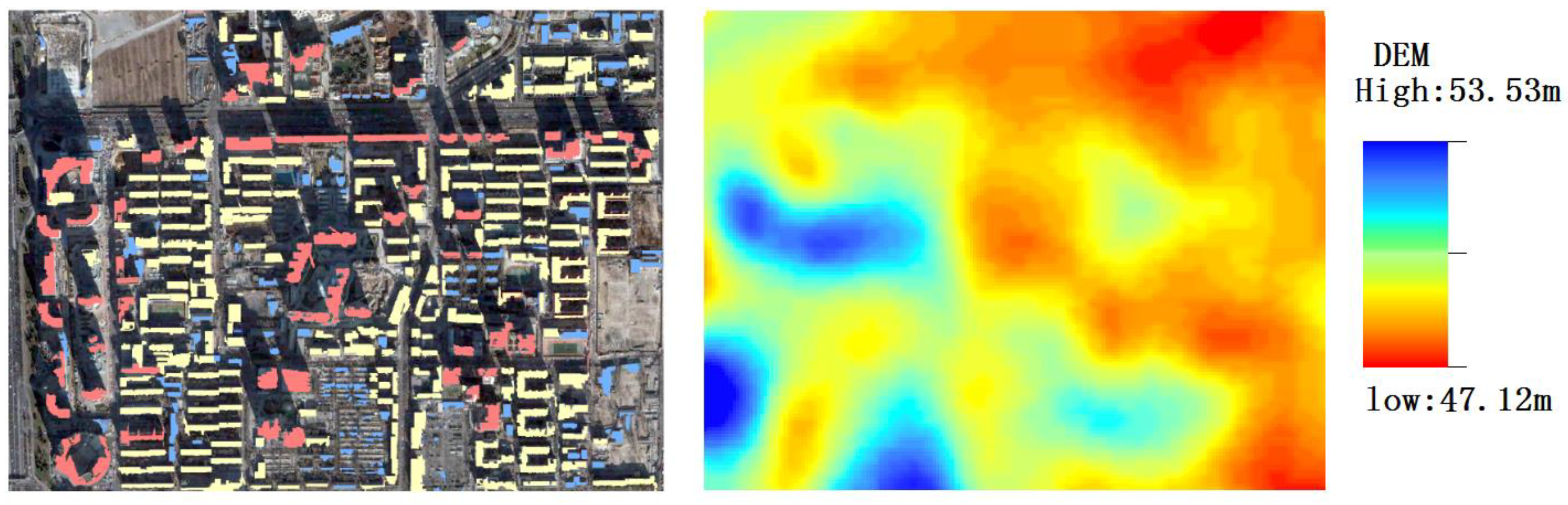

3.4. Building Height-Classification
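Within a single image all buildings are lit at essentially the same solar elevation, so on flat, unobstructed ground a building's shadow length grows in proportion to its height (length = height / tan(elevation)). Ordering or binning shadow lengths therefore ranks buildings by height without the elevation angle itself being known. The sketch below measures a shadow's extent along a given direction by projecting its pixels onto that direction, converts it to meters with the image resolution, and bins it into low, middle and high levels. It is not necessarily the authors' procedure; the helper names and the thresholds are hypothetical.

```python
import math


def shadow_length(pixels, angle_deg):
    """Extent of a shadow region along a direction, in pixel units.

    pixels: (x, y) coordinates of the region; angle_deg: direction measured
    from the +x (column) axis toward the +y (row) axis.
    """
    if not pixels:
        raise ValueError("empty region")
    ux = math.cos(math.radians(angle_deg))
    uy = math.sin(math.radians(angle_deg))
    # Project every pixel onto the unit direction and take the spread.
    projections = [x * ux + y * uy for x, y in pixels]
    return max(projections) - min(projections)


def height_level(length_m, low_max_m, middle_max_m):
    """Bin a shadow length in meters into a height level (hypothetical thresholds)."""
    if length_m <= low_max_m:
        return "low"
    if length_m <= middle_max_m:
        return "middle"
    return "high"
```

For example, a shadow 20 pixels long in a 0.7 m image is 14 m long; occlusion by neighboring buildings shortens visible shadows, so such lengths are lower bounds.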

4. Results and Analysis

4.1. The Toronto Urban Scene

4.2. The Beijing Urban Scene

5. Discussion

5.1. Discussion regarding Errors

5.2. Potential Application

5.3. Discussion of Application

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Site | Location | Source | Date | Resolution | Size (pixels) | Center Latitude | Center Longitude |
|---|---|---|---|---|---|---|---|
| 1 | Yorkville, Toronto, Canada | Google Earth | 26 May 2015 | 0.7 m | 1078 × 912 | 43°40′23.07″ N | 79°23′27.20″ W |
| 2 | Chaoyang District, Beijing, China | Google Earth | 12 December 2003 | 1.21 m | 1480 × 1087 | 39°56′11.69″ N | 116°26′08.85″ E |

| Date / Latitude (°) | −90 to −23.5 | −23.5 to 0 | 0 to 23.5 | 23.5 to 90 |
|---|---|---|---|---|
| 21 March/22 March to 21 June/22 June | south | south | uncertain | north |
| 21 June/22 June to 22 September/23 September | south | south | uncertain | north |
| 22 September/23 September to 22 December/23 December | south | uncertain | north | north |
| 22 December/23 December to 21 March/22 March | south | uncertain | north | north |
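Read this way, the shadow direction depends only on the latitude band and on which half of the year the date falls in: outside the tropics shadows always point poleward, while inside the tropics the direction becomes uncertain during the half-year when the subsolar point lies in the same hemisphere. The sketch encodes that rule, splitting the year at the equinoxes (taken as about 21 March and 23 September, when the subsolar point crosses the equator). The function name and the fixed calendar dates are assumptions; actual equinox dates shift by a day or so between years.

```python
from datetime import date

TROPIC_DEG = 23.5  # approximate latitude of the Tropics of Cancer and Capricorn


def expected_shadow_direction(latitude_deg: float, day: date) -> str:
    """Return 'north', 'south' or 'uncertain' for building shadows.

    Poleward outside the tropics; 'uncertain' inside the tropics while the
    subsolar point is in the same hemisphere as the site.
    """
    if not -90.0 <= latitude_deg <= 90.0:
        raise ValueError("latitude must lie in [-90, 90] degrees")
    month_day = (day.month, day.day)
    # Subsolar point north of the equator between the two equinoxes.
    sun_in_north = (3, 21) <= month_day < (9, 23)
    if latitude_deg > TROPIC_DEG:
        return "north"
    if latitude_deg < -TROPIC_DEG:
        return "south"
    if latitude_deg >= 0.0:
        return "uncertain" if sun_in_north else "north"
    return "south" if sun_in_north else "uncertain"
```

For site 1 (Toronto, about 43.67° N, 26 May 2015) and site 2 (Beijing, about 39.94° N, 12 December 2003) this returns `"north"`.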

| Method | Target | Precision | Recall | Omission | False Alarm | WH¹ |
|---|---|---|---|---|---|---|
| Random Forest (sampling ratio 35%) | buildings | 72.5% | 73.4% | 26.6% | 27.5% | - |
| Random Forest (sampling ratio 35%) | buildings (roads removed) | 78.2% | 73.4% | 26.6% | 21.8% | - |
| Proposed method | buildings | 98.6% | 89.8% | 10.2% | 1.7% | 3% |
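The Random Forest rows satisfy omission = 100% − recall and false alarm = 100% − precision, which are the usual definitions in terms of true-positive (TP), false-positive (FP) and false-negative (FN) counts. The sketch computes those four rates from counts and checks a percentage row against the two identities. The function names and the tolerance are illustrative, and the counting unit (objects or pixels) would need to match whatever the evaluation used.

```python
def detection_metrics(tp: int, fp: int, fn: int) -> dict:
    """Precision, recall and their complements from detection counts.

    tp: correctly detected buildings, fp: false detections, fn: missed buildings.
    """
    if tp + fp == 0 or tp + fn == 0:
        raise ValueError("need at least one detection and one reference building")
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return {
        "precision": precision,
        "recall": recall,
        "omission": 1.0 - recall,        # share of reference buildings missed
        "false_alarm": 1.0 - precision,  # share of detections that are wrong
    }


def row_is_consistent(precision, recall, omission, false_alarm, tol=0.05):
    """Check a row in percent: omission = 100 - recall, false alarm = 100 - precision."""
    return (abs(100.0 - recall - omission) <= tol
            and abs(100.0 - precision - false_alarm) <= tol)
```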

Results of the proposed method by building class:

| Building Class | Ground Truth | Correct | Omission | False Alarm | WWH¹ | PWH² |
|---|---|---|---|---|---|---|
| high | 63 | 60 | 2 | 0 | 2 | 4 |
| middle | 192 | 183 | 2 | 1 | 8 | 2 |
| low | 180 | 111 | 57 | 6 | 8 | 1 |
| total | 435 | 354 | 61 | 7 | 18 | 7 |
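The totals row of the per-class table can be checked against the class rows, and dividing Correct by Ground Truth gives a simple per-class ratio. That ratio is an illustration computed here, not a metric defined in the paper; the WWH and PWH columns are carried along without interpretation, and the names below are hypothetical.

```python
# Per-class counts from the table:
# (ground truth, correct, omission, false alarm, WWH, PWH).
COUNTS = {
    "high": (63, 60, 2, 0, 2, 4),
    "middle": (192, 183, 2, 1, 8, 2),
    "low": (180, 111, 57, 6, 8, 1),
}
TOTAL = (435, 354, 61, 7, 18, 7)


def column_totals(counts: dict) -> tuple:
    """Sum each column over all classes."""
    return tuple(sum(column) for column in zip(*counts.values()))


def correct_share(counts: dict) -> dict:
    """Fraction of ground-truth buildings in each class counted as correct."""
    return {name: row[1] / row[0] for name, row in counts.items()}


if __name__ == "__main__":
    assert column_totals(COUNTS) == TOTAL
    for name, share in correct_share(COUNTS).items():
        print(f"{name}: {share:.1%}")
    print(f"total: {TOTAL[1] / TOTAL[0]:.1%}")
```

Run as a script, this prints 95.2% for high, 95.3% for middle, 61.7% for low and 81.4% overall, which makes the larger share of omissions among low buildings easy to see.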

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhang, H.; Xu, C.; Fan, Z.; Li, W.; Sun, K.; Li, D. Detection and Classification of Buildings by Height from Single Urban High-Resolution Remote Sensing Images. Appl. Sci. 2023, 13, 10729. https://doi.org/10.3390/app131910729

Zhang H, Xu C, Fan Z, Li W, Sun K, Li D. Detection and Classification of Buildings by Height from Single Urban High-Resolution Remote Sensing Images. Applied Sciences. 2023; 13(19):10729. https://doi.org/10.3390/app131910729

Chicago/Turabian Style: Zhang, Hongya, Chi Xu, Zhongjie Fan, Wenzhuo Li, Kaimin Sun, and Deren Li. 2023. "Detection and Classification of Buildings by Height from Single Urban High-Resolution Remote Sensing Images" Applied Sciences 13, no. 19: 10729. https://doi.org/10.3390/app131910729

APA Style: Zhang, H., Xu, C., Fan, Z., Li, W., Sun, K., & Li, D. (2023). Detection and Classification of Buildings by Height from Single Urban High-Resolution Remote Sensing Images. Applied Sciences, 13(19), 10729. https://doi.org/10.3390/app131910729