The Application Status and Trends of Machine Vision in Tea Production

Abstract

1. Introduction

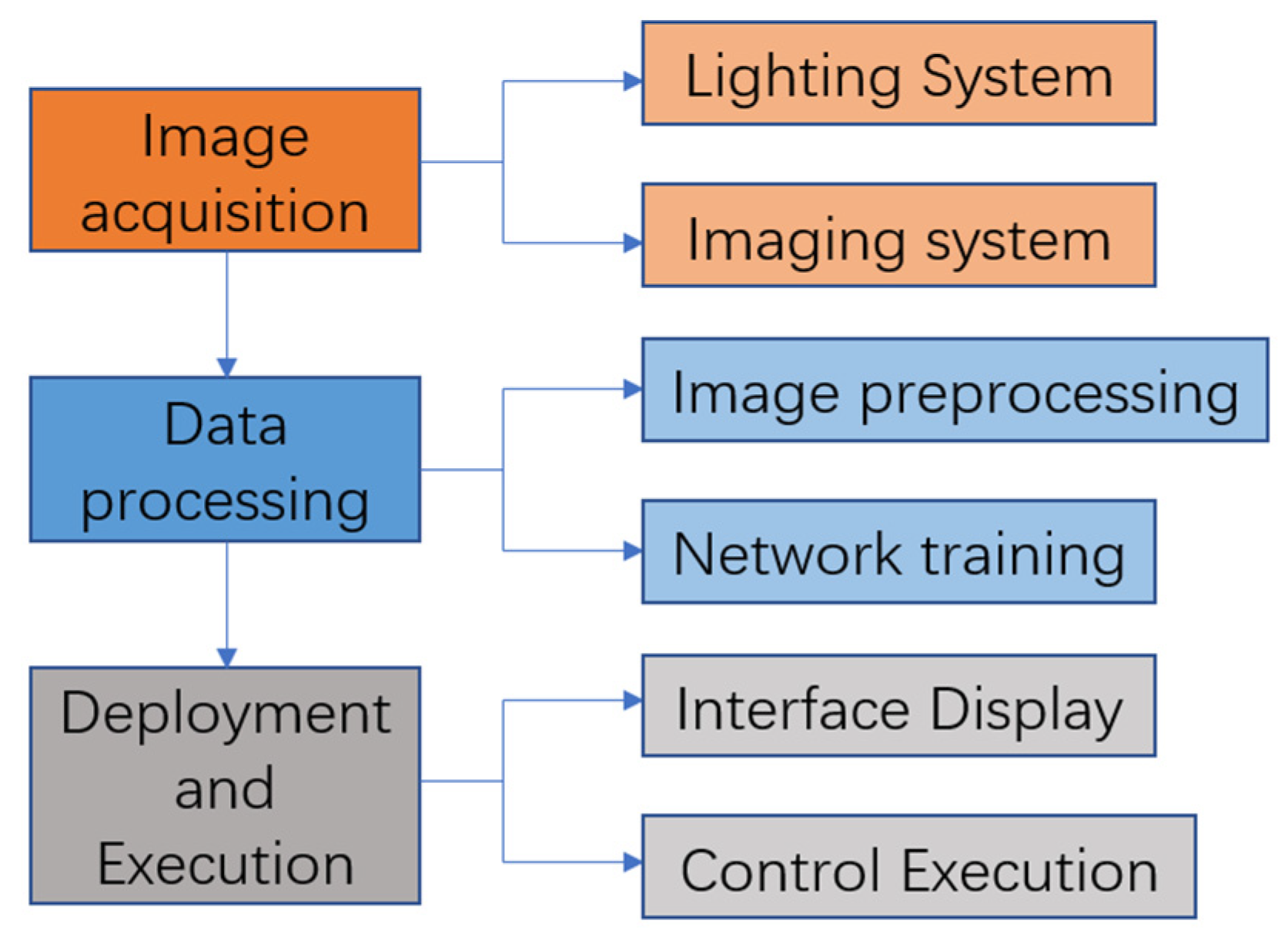

2. Pest and Disease Detection

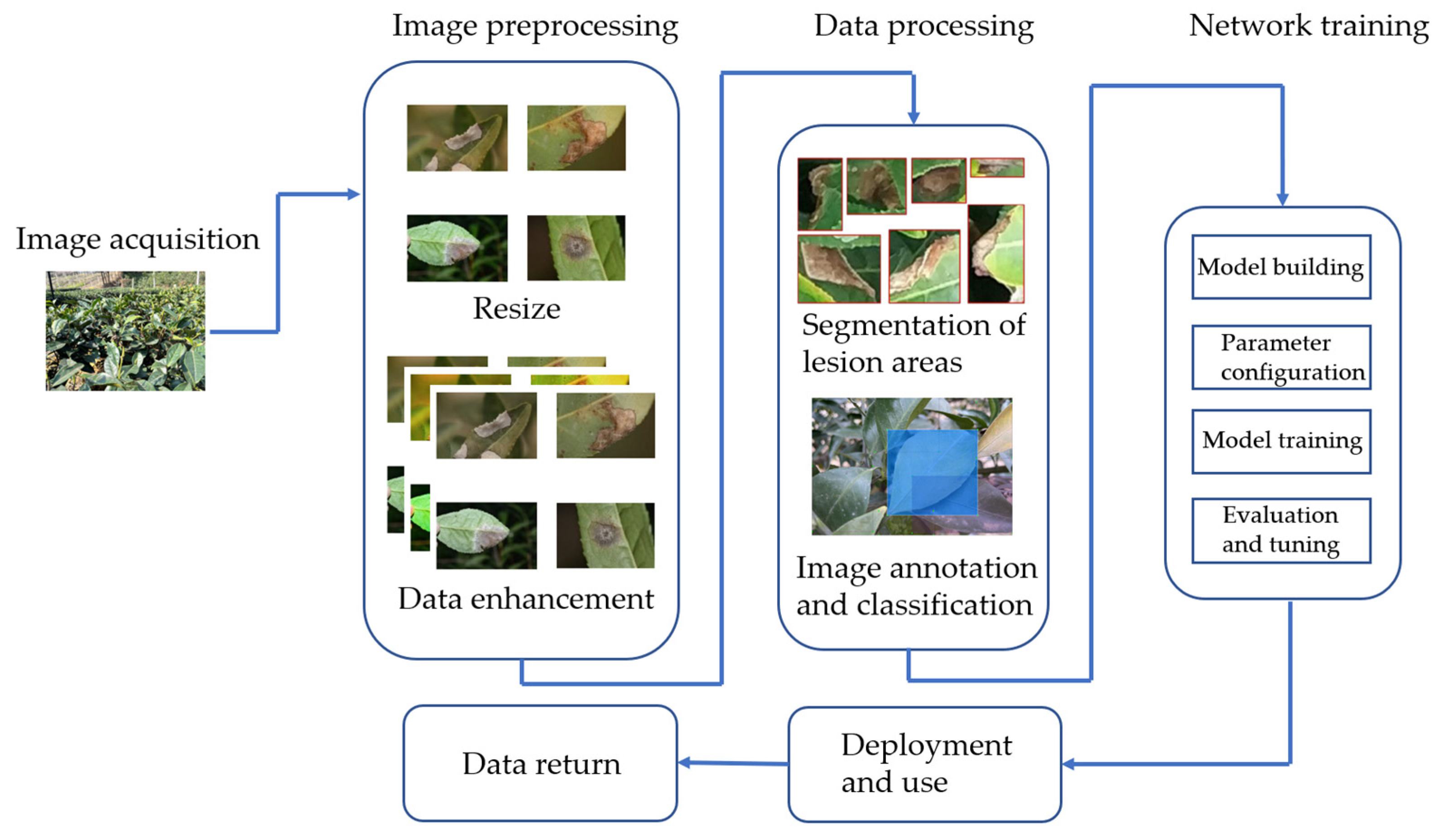

2.1. Disease Detection

| Ref. | Year | Disease Type | Task | Color Model | Method | Advantage | Disadvantage |
|---|---|---|---|---|---|---|---|
| Wang [14] | 2017 | Tea white spot, tea brown leaf spot, tea cloud leaf blight | Identification and classification of three diseases | HSV | The original image is filtered and segmented with the Otsu method, 34 components are extracted, and the optimal scheme is obtained by training a BP neural network | Applicable to recognition against complex backgrounds | Each of the three algorithms has its own strengths, but they are not effectively combined |
| Lin et al. [15] | 2019 | Tea red leaf spot, tea white spot, tea round red spot | Identification and classification of three diseases | HSV | Iterative threshold algorithm, maximum inter-class variance (Otsu) method, K-nearest neighbor algorithm | Recognition rate of 93.33% | The influence of color features needs further study |
| Sun et al. [16] | 2019 | Five diseases, including tea anthracnose, tea brown leaf blight, and tea net bubble blight | Segmentation of the diseased area | None | The image is over-segmented into superpixels by simple linear iterative clustering (SLIC), and an SVM is then trained to segment the diseased regions | Good segmentation; the background is removed quickly and effectively | The SVM parameters leave room for improvement |
| Sun et al. [17] | 2019 | Tea ring spot, tea anthracnose, tea cloud leaf blight | Identification and classification of three diseases | L\*a\*b\* | Seven preprocessing methods are applied, followed by training of an AlexNet network | Accuracy reaches 93.3% with the best preprocessing combination | The sample size is too small |
| Hu et al. [18] | 2019 | Tea red scab, tea red spot, tea blight | Identification and classification of three diseases | RGB (2R-G-B) | Training samples are augmented with C-DCGAN, and a VGG16 network is then trained | Suitable for small-sample cases | Only support vector machines are used |
| Mukhopadhyay et al. [19] | 2020 | Five diseases, including red rust, red spider disease, and thrips disease | Automatic detection and identification of diseases | HIS | Clustering-based segmentation of tea disease regions using the non-dominated sorting genetic algorithm (NSGA-II) | Good detection performance; a cloud-based system is provided | The clustering algorithm leaves room for improvement |
| Hu et al. [20] | 2021 | Tea blight | Detection, identification, and severity estimation of the disease | None | The original image is enhanced with the Retinex algorithm, and a VGG16 network is then applied | Compared with classical machine learning methods, average detection accuracy and severity classification accuracy improve by more than 6% and 9%, respectively | Only tea blight was studied |
| Li et al. [21] | 2022 | Five diseases, including tea white spot and tea ring spot | Recognition and classification with small and imbalanced samples | None | A model is pretrained on the PlantVillage dataset and then trained in an improved DenseNet model | Effectively reduces the impact of imbalanced sample distribution on model performance and improves accuracy | Disease severity grading was not studied |
| Zhang et al. [22] | 2017 | Anthracnose, red leaf spot, tea white spot | Search for the optimal spectral features for disease recognition | None | Image features are extracted from color moments and the gray-level co-occurrence matrix (GLCM), and a BP neural network optimized by a genetic algorithm is trained | Spectral features built from the relative reflectance at 560, 640, and 780 nm contribute significantly to classifying tea diseases | Recognition efficiency is low under natural lighting and background conditions |
| Lu et al. [23] | 2019 | Red leaf disease | Prediction of tea red leaf disease using fluorescence transmission spectroscopy | None | Prediction models combining characteristic spectra with GLCM texture and local binary pattern (LBP) texture are built with an extreme learning machine (ELM) | Improved recognition of the disease at different stages | Experiments were conducted only in the laboratory |
| Liu et al. [24] | 2021 | Tea algal spot | Building a recognition model that combines chlorophyll fluorescence spectra with chemometrics | None | Principal component analysis (PCA) combined with linear discriminant analysis (LDA) | Fast recognition with accuracy up to 98.9% | Experiments were conducted only in the laboratory |
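Several of the studies above segment lesions by thresholding a color feature, using the Otsu (maximum inter-class variance) method [14,15] or the 2R-G-B color index [18]. The sketch below shows how these two pieces fit together on a flat list of RGB pixels. It is an illustration under stated assumptions, not any cited author's pipeline: pairing 2R-G-B with Otsu, clipping the index to 0..255, and the function names `excess_red`, `otsu_threshold`, and `segment_lesions` are all hypothetical choices made here, and it assumes lesions appear redder than healthy green tissue.

```python
from typing import List, Sequence, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B), each channel in 0..255


def excess_red(pixels: Sequence[Pixel]) -> List[int]:
    """Map each RGB pixel to the 2R - G - B index, clipped to 0..255 (assumption).

    Green leaf tissue gives low values; reddish or brown regions give high ones.
    """
    return [max(0, min(255, 2 * r - g - b)) for r, g, b in pixels]


def otsu_threshold(values: Sequence[int]) -> int:
    """Otsu's (maximum inter-class variance) threshold for 8-bit values.

    Returns the level t that maximizes the between-class variance when the
    data are split into {v <= t} and {v > t}.
    """
    hist = [0] * 256
    for v in values:
        hist[v] += 1
    total = len(values)
    sum_all = sum(level * count for level, count in enumerate(hist))

    weight_bg = 0  # number of values <= t
    sum_bg = 0     # sum of values <= t
    best_t, best_var = 0, -1.0
    for t in range(256):
        weight_bg += hist[t]
        if weight_bg == 0:
            continue  # no values at or below t yet
        weight_fg = total - weight_bg
        if weight_fg == 0:
            break  # every value is at or below t; no further split possible
        sum_bg += t * hist[t]
        mean_bg = sum_bg / weight_bg
        mean_fg = (sum_all - sum_bg) / weight_fg
        # Between-class variance up to the constant factor 1 / total**2,
        # which does not change where the maximum lies.
        var_between = weight_bg * weight_fg * (mean_bg - mean_fg) ** 2
        if var_between > best_var:
            best_var, best_t = var_between, t
    return best_t


def segment_lesions(pixels: Sequence[Pixel]) -> List[int]:
    """Binary mask: 1 where the 2R - G - B index lies above the Otsu threshold."""
    index = excess_red(pixels)
    t = otsu_threshold(index)
    return [1 if v > t else 0 for v in index]
```

For instance, three green pixels `(60, 160, 50)` and two reddish pixels `(180, 90, 60)` map to index values 0 and 210, and `segment_lesions` returns `[0, 0, 0, 1, 1]`. In practice an image library would do the thresholding step; OpenCV's `cv2.threshold` with the `cv2.THRESH_OTSU` flag implements the same criterion on 8-bit images.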

2.2. Pest Detection

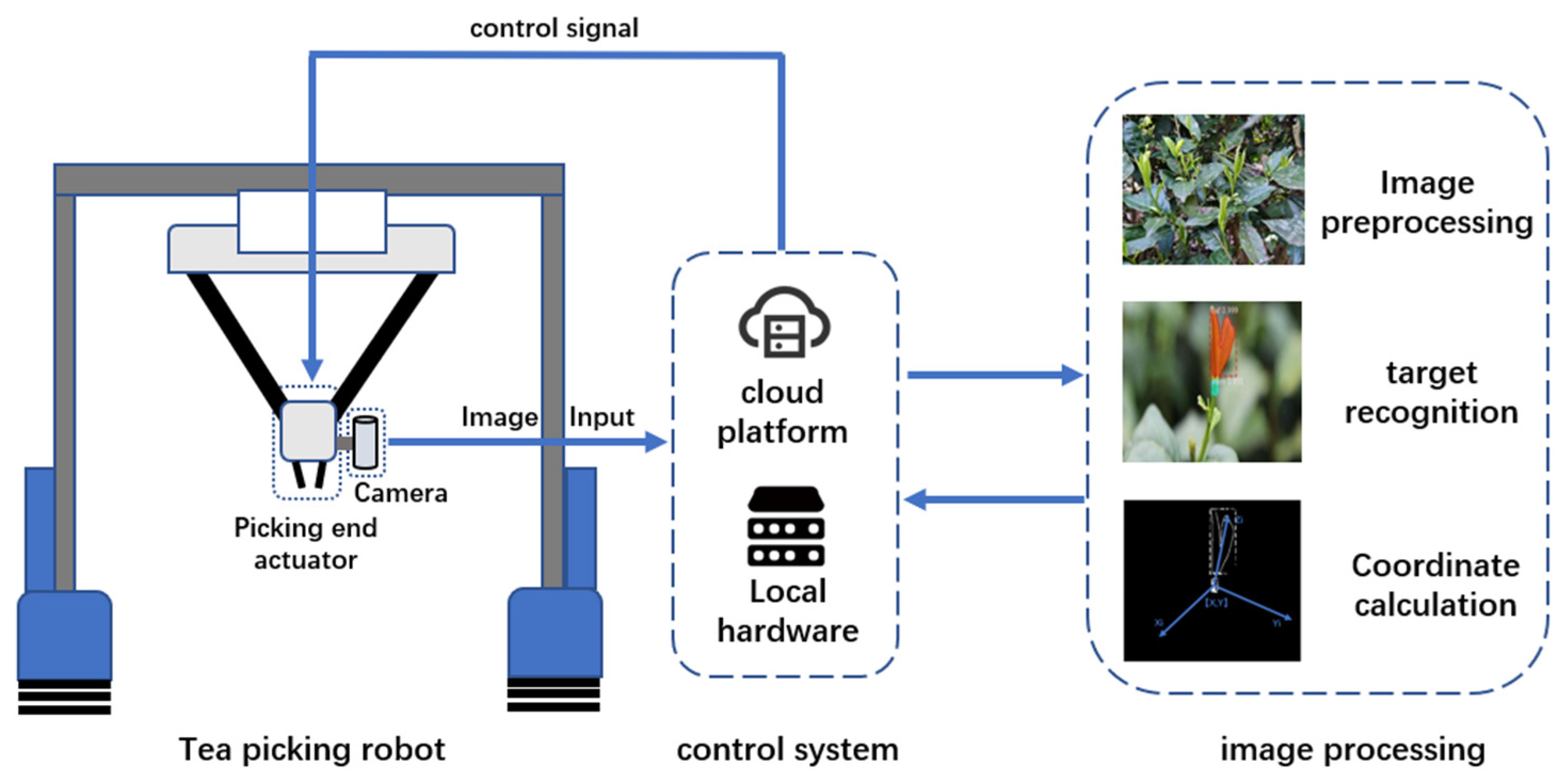

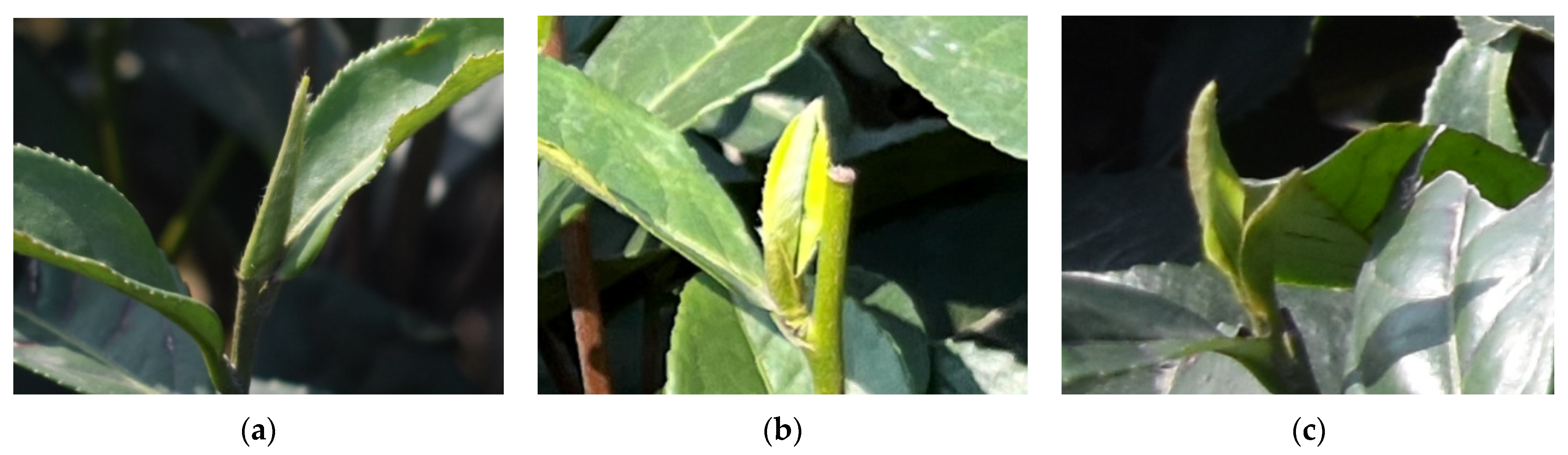

3. Intelligent Tea Picking

3.1. Target Recognition and Localization

3.2. Tea Production Estimation

4. Production Management of Tea

4.1. Quality Evaluation and Grading of Tea

4.2. Farm Management Information System

5. Application Challenges and Trends

1. Combination of multiple types of sensors.

2. Establishment of multilevel standard datasets.

3. Research on identification and location strategies.

4. Research on joint application of multiple systems.

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Food and Agriculture Organization of the United Nations. International Tea Market: Market Situation, Prospects and Emerging Issues; Food and Agriculture Organization of the United Nations: Rome, Italy, 2022.

2. Pan, R.; Zhao, X.; Du, J.; Tong, J.; Huang, P.; Shang, H.; Leng, Y. A Brief Analysis on Tea Import and Export Trade in China during 2021. China Tea 2022, 44, 6.

3. Parida, B.R.; Mahato, T.; Ghosh, S. Monitoring tea plantations during 1990–2022 using multi-temporal satellite data in Assam (India). Trop. Ecol. 2023; Online ahead of print.

4. Clarke, C.; Richter, B.S.; Rathinasabapathi, B. Genetic and morphological characterization of United States tea (Camellia sinensis): Insights into crop history, breeding strategies, and regional adaptability. Front. Plant Sci. 2023, 14, 1149682.

5. Carloni, P.; Albacete, A.; Martínez-Melgarejo, P.A.; Girolametti, F.; Truzzi, C.; Damiani, E. Comparative Analysis of Hot and Cold Brews from Single-Estate Teas (Camellia sinensis) Grown across Europe: An Emerging Specialty Product. Antioxidants 2023, 12, 1306.

6. Pan, S.Y.; Nie, Q.; Tai, H.C.; Song, X.L.; Tong, Y.F.; Zhang, L.J.F.; Wu, X.W.; Lin, Z.H.; Zhang, Y.Y.; Ye, D.Y.; et al. Tea and tea drinking: China’s outstanding contributions to the mankind. Chin. Med. 2022, 17, 27.

7. Da Silva Pinto, M. Tea: A new perspective on health benefits. Food Res. Int. 2013, 53, 558–567.

8. Beringer, T.; Kulak, M.; Müller, C.; Schaphoff, S.; Jans, Y. First process-based simulations of climate change impacts on global tea production indicate large effects in the World’s major producer countries. Environ. Res. Lett. 2020, 15, 034023.

9. Zhen, D.; Qian, Y.; Zhou, W.; Xu, S.; Sun, B. Discussion on the shortage of Tea picking and Labor in Songyang. China Tea 2017, 39, 2.

10. Wang, H.; Gu, J.; Wang, M. A review on the application of computer vision and machine learning in the tea industry. Front. Sustain. Food Syst. 2023, 7, 1172543.

11. Zhu, Y.; Ling, Z.; Zhang, Y. Research progress and prospect of machine vision technology. J. Graph. 2020, 41, 20.

12. Chen, X. The Occurrence Trend and Green Control of Tea Diseases in China. China Tea 2022, 44, 7–14.

13. Datta, S.; Gupta, N. A Novel Approach for the Detection of Tea Leaf Disease Using Deep Neural Network. Procedia Comput. Sci. 2023, 218, 2273–2286.

14. Wang, J. Research on The Identification Methods of Tea Leaf Disease Based on Image Characteristics. Master’s Thesis, Nanjing Agricultural University, Nanjing, China, 2017.

15. Lin, B.; Qiu, X.; He, Y.; Zhu, X.; Zhang, Y. Research on Intelligent Diagnosis and Recognition Algorithms for Tea Tree Diseases. Jiangsu Agric. Sci. 2019, 47, 7.

16. Sun, Y.; Jiang, Z.; Zhang, L.; Dong, W.; Rao, Y. SLIC_SVM based leaf diseases saliency map extraction of tea plant. Comput. Electron. Agric. 2019, 157, 102–109.

17. Sun, Y.; Jiang, Z.; Dong, W.; Zhang, L.; Rao, Y.; Li, S. Image recognition of tea plant disease based on convolutional neural network and small samples. Jiangsu J. Agric. Sci. 2019, 25, 48–55.

18. Hu, G.; Wu, H.; Zhang, Y.; Wan, M. A low shot learning method for tea leaf’s disease identification. Comput. Electron. Agric. 2019, 163, 104852.

19. Mukhopadhyay, S.; Paul, M.; Pal, R.; De, D. Tea leaf disease detection using multi-objective image segmentation. Multimed. Tools Appl. 2020, 80, 753–771.

20. Hu, G.; Wu, H.; Zhang, Y.; Wan, M. Detection and severity analysis of tea leaf blight based on deep learning. Comput. Electr. Eng. 2021, 90, 107023.

21. Li, Z.; Xu, J.; Zheng, L.; Tie, J.; Tie, J. Small sample recognition method of tea disease based on improved DenseNet. Trans. Chin. Soc. Agric. Eng. 2022, 38, 182–190.

22. Zhang, S.; Wang, Z.; Zou, X.; Qian, Y.; Yu, L. Recognition of tea disease spot based on hyperspectral image and genetic optimization neural network. Trans. Chin. Soc. Agric. Eng. 2017, 33, 8.

23. Lu, B.; Sun, J.; Yang, N.; Wu, X.; Zhou, X. Prediction of Tea Diseases Based on Fluorescence Transmission Spectrum and Texture of Hyperspectral Image. Spectrosc. Spectr. Anal. 2019, 39, 7.

24. Liu, Y.; Lin, X.; Gao, H.; Wang, S.; Gao, X. Research on Tea Cephaleuros Virescens Kunze Model Based on Chlorophyll Fluorescence Spectroscopy. Spectrosc. Spectr. Anal. 2021, 41, 6.

25. Sun, Y. Study of Pest Information for Tea Plant Based on Electronic Nose. Ph.D. Thesis, Zhejiang University, Hangzhou, China, 2018.

26. Zhang, H.; Mao, H.; Qiu, D. Feature extraction for the stored-grain insect detection system based on image recognition technology. Trans. Chin. Soc. Agric. Eng. 2009, 25, 126–130.

27. Liang, W.; Cao, H. Rice Pest Identification Based on Convolutional Neural Network. Jiangsu Agric. Sci. 2017, 45, 4.

28. Yan, Z. Research on Identification Technology of Tea pests Based on Deep Learning. Master’s Thesis, Chongqing University of Technology, Chongqing, China, 2022.

29. Xu, R.; Jin, Z.; Luo, L.; Feng, H.; Fang, H.; Wang, X. Population Monitoring of the Empoasca onukii and Its Control with Audio-optical Emitting Technology. China Tea Process. 2022, 3, 28–33.

30. Wang, X.; Tang, D. Research Progress on Mechanical Tea Plucking. Acta Tea Sin. 2022, 63, 275–282.

31. Li, Y.T. Research on the Visual Detection and Localization Technology of Tea Harvesting Robot. Ph.D. Thesis, Zhejiang Sci-Tech University, Hangzhou, China, 2022.

32. Lu, D.; Yi, J. The Significance and Implementation Path of Mechanized Picking of Famous Green Tea in China. China Tea 2018, 40, 1–4.

33. Yang, F.; Yang, L.; Tian, Y.; Yang, Q. Recognition of the Tea Sprout Based on Color and Shape Features. Trans. Chin. Soc. Agric. Mach. 2009, 40, 119–123.

34. Wang, J. Segmentation Algorithm of Tea Combined with the Color and Region Growing. J. Tea Sci. 2011, 31, 72–77.

35. Wu, X.; Tang, X.; Zhang, F.; Gu, J. Tea buds image identification based on lab color model and K-means clustering. J. Chin. Agric. Mech. 2015, 36, 161–164, 179.

36. Sun, X. The Research of Tea Buds Detection and Leaf Diseases Image Recognition Based on Deep Learning. Master’s Thesis, Shandong Agricultural University, Taian, China, 2019.

37. Guo, S.; Yoon, S.-C.; Li, L.; Wang, W.; Zhuang, H.; Wei, C.; Liu, Y.; Li, Y. Recognition and Positioning of Fresh Tea Buds Using YOLOv4-lighted + ICBAM Model and RGB-D Sensing. Agriculture 2023, 13, 518.

38. Lyu, J.; Fang, M.; Yao, Q.; Wu, C.; He, Y.; Bian, L.; Zhong, X. Detection model for tea buds based on region brightness adaptive correction. Trans. Chin. Soc. Agric. Eng. 2021, 37, 278–285.

39. Gong, T.; Wang, Z.L. A tea tip detection method suitable for tea pickers based on YOLOv4 network. In Proceedings of the 2021 3rd International Symposium on Robotics & Intelligent Manufacturing Technology (ISRIMT), Online, 25–26 September 2021.

40. Chen, M. Recognition and Location of High-Quality Tea Buds Based on Computer Vision. Master’s Thesis, Qingdao University of Science and Technology, Qingdao, China, 2019.

41. Xu, F.; Zhang, K.; Zhang, W.; Wang, R.; Wang, T.; Wan, S.; Liu, B.; Rao, Y. Identification and Localization Method of Tea Bud Leaf Picking Point Based on Improved YOLOv4 Algorithm. J. Fudan Univ. Nat. Sci. 2022, 61, 460–471.

42. Zhang, K. Three-Dimensional Localization of the Picking Point of Houkui Tea in the Natural Environment Based on YOLOv4. Master’s Thesis, Anhui Agricultural University, Hefei, China, 2022.

43. Chen, Y.-T.; Chen, S.-F. Localizing plucking points of tea leaves using deep convolutional neural networks. Comput. Electron. Agric. 2020, 171, 105298.

44. Wang, T.; Zhang, K.; Zhang, W.; Wang, R.; Wan, S.; Rao, Y.; Jiang, Z.; Gu, L. Tea picking point detection and location based on Mask-RCNN. Inf. Process. Agric. 2023, 10, 267–275.

45. Yan, L.; Wu, K.; Lin, J.; Xu, X.; Zhang, J.; Zhao, X.; Tayor, J.; Chen, D. Identification and picking point positioning of tender tea shoots based on MR3P-TS model. Front. Plant Sci. 2022, 13, 962391.

46. Yan, C.; Chen, Z.; Li, Z.; Liu, R.; Li, Y.; Xiao, H.; Lu, P.; Xie, B. Tea Sprout Picking Point Identification Based on Improved DeepLabV3+. Agriculture 2022, 12, 1594.

47. Zhang, X. Research on Tea Recognition Method Based on Machine Vision Features for Intelligent Tea Picking Robot. Master’s Thesis, Shanghai Jiaotong University, Shanghai, China, 2020.

48. Wang, F.; Cui, D.; Li, L. Target recognition and positioning algorithm of picking robot based on deep learning. Electron. Meas. Technol. 2021, 44, 162–167.

49. Yang, S.; Wang, Y.; Guo, H.; Wang, X.; Liu, N. Binocular camera multi-pose calibration method based on radial alignment constraint algorithm. J. Comput. Appl. 2018, 38, 2655–2659.

50. Li, Y.; He, L.; Jia, J.; Lv, J.; Chen, J.; Qiao, X.; Wu, C. In-field tea shoot detection and 3D localization using an RGB-D camera. Comput. Electron. Agric. 2021, 185, 106149.

51. Chen, Z.; Chen, J.; Li, Y.; Gui, Z.; Yu, T. Tea Bud Detection and 3D Pose Estimation in the Field with a Depth Camera Based on Improved YOLOv5 and the Optimal Pose-Vertices Search Method. Agriculture 2023, 13, 1405.

52. Chen, D.; Han, W.; Zhou, X.; Wu, K.; Zhang, J. Tea Yield Prediction in Zhejiang Province Based on Adaboost BP Model. J. Tea Sci. 2021, 41, 564–576.

53. Wang, Y.; Chen, H.; Chen, J.; Wang, H.; Xing, Z.; Zhang, Z. Comparation of rice yield estimation model combining spectral index screening method and statistical regression algorithm. Trans. Chin. Soc. Agric. Eng. 2021, 37, 208–216.

54. Huang, Z. Study on Wheat Yield Estimation Based on Deep Learning Ear Recognition. Master’s Thesis, Shandong Agricultural University, Taian, China, 2022.

55. Li, T. Research on Recognition and Counting Method of Tea Buds Based on Deep Learning. Master’s Thesis, Anhui University, Hefei, China, 2021.

56. Xu, H.; Ma, W.; Tan, Y.; Liu, X.; Zheng, Y.; Tian, Z. Yield estimation method for tea based on YOLOv5 deep learning. J. China Agric. Univ. 2022, 27, 213–220.

57. Li, S. Application of Computer Vision in Tea Grade Testing. J. Agric. Mech. Res. 2019, 41, 219–222.

58. Borah, S.; Bhuyan, M. Quality indexing by machine vision during fermentation in black tea manufacturing. In Proceedings of the Sixth International Conference on Quality Control by Artificial Vision, Gatlinburg, TN, USA, 19–22 May 2003.

59. Gao, D. Research on the Tea Sorting Based on Characteristic of Color and Shape. Master’s Thesis, University of Science and Technology of China, Hefei, China, 2016.

60. Kim, S.; Kwak, J.-Y.; Ko, B. Automatic Classification Algorithm for Raw Materials using Mean Shift Clustering and Stepwise Region Merging in Color. J. Broadcast Eng. 2016, 21, 425–435.

61. Song, Y.; Xie, H.; Ning, J.; Zhang, Z. Grading Keemun black tea based on shape feature parameters of machine vision. Trans. Chin. Soc. Agric. Eng. 2018, 34, 279–286.

62. Aboulwafa, M.M.; Youssef, F.S.; Gad, H.A.; Sarker, S.D.; Ashour, M.L. Authentication and Discrimination of Green Tea Samples Using UV-Visible, FTIR and HPLC Techniques Coupled with Chemometrics Analysis. J. Pharm. Biomed. Anal. 2018, 164, 653–658.

63. Chen, L.; Xu, B.; Zhao, C.; Duan, D.; Cao, Q.; Wang, F. Application of Multispectral Camera in Monitoring the Quality Parameters of Fresh Tea Leaves. Remote Sens. 2021, 13, 3719.

64. Wickramasinghe, N.; Ekanayake, E.M.S.L.B.; Wijedasa, M.A.C.S.; Wijesinghe, A.; Madhujith, T.; Ekanayake, M.P.; Godaliyadda, G.M.R.; Herath, V. Validation of Multispectral Imaging for The Detection of Sugar Adulteration in Black Tea. In Proceedings of the 2021 10th International Conference on Information and Automation for Sustainability (ICIAfS), Negombo, Sri Lanka, 11–13 August 2021; pp. 494–499.

65. Huang, H.; Chen, X.; Han, Z.; Fan, Q.; Zhu, Y.; Hu, P. Tea Buds Grading Method Based on Multiscale Attention Mechanism and Knowledge Distillation. Trans. Chin. Soc. Agric. Mach. 2022, 53, 399–407, 458.

66. Cao, Q.; Yang, G.; Wang, F.; Chen, L.; Xu, B.; Zhao, C.; Duan, D.; Jiang, P.; Xu, Z.; Yang, H. Discrimination of tea plant variety using in-situ multispectral imaging system and multi-feature analysis. Comput. Electron. Agric. 2022, 202, 107360.

67. Zhang, J.; Li, Z. Intelligent Tea-Picking System Based on Active Computer Vision and Internet of Things. Secur. Commun. Netw. 2021, 2021, 5302783.

68. Lu, J.; Yang, Z.; Sun, Q.; Gao, Z.; Ma, W. A Machine Vision-Based Method for Tea Buds Segmentation and Picking Point Location Used on a Cloud Platform. Agronomy 2023, 13, 1537.

69. Villa-Henriksen, A.; Edwards, G.T.C.; Pesonen, L.A.; Green, O.; Sørensen, C.A.G. Internet of Things in arable farming: Implementation, applications, challenges and potential. Biosyst. Eng. 2020, 191, 60–84.

| Camera Type | RGB Binocular Camera | Structured Light | TOF |
|---|---|---|---|
| Mainstream brands | Dajiang, Oni | Kinect V1, RealSense | Kinect V2, V3 |
| Working principle | Passive: triangulation based on matched features in the RGB images | Active: projects a known coded light pattern to improve feature matching | Active: directly measures the time of flight of infrared laser light |
| Measuring range | 0.1–20 m (accuracy decreases with distance) | 0.1–20 m (accuracy decreases with distance) | 0.1–20 m (accuracy decreases with distance) |
| Measurement accuracy | 0.01–1 mm | 0.01–1 mm | Up to centimeter level |
| Environmental limitations | Sensitive to changes in light intensity and object texture; cannot be used at night | Performs well indoors but is affected to some extent by strong outdoor light | Not affected by changes in lighting or object texture, but affected by reflective objects |
| Resolution | Up to 2K | Up to 1280 × 720 | Typically 512 × 424 |
| Frame rate | 1–90 FPS | 1–30 FPS | Up to hundreds of FPS |
| Software complexity | Higher | Medium | Higher |
| Power consumption | Lower | Medium | High |
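The "working principle" and "measuring range" rows rest on two textbook relations: a rectified stereo pair recovers depth as Z = f·B/d (focal length f in pixels, baseline B, disparity d), and time-of-flight ranging uses d = c·t/2 for a round trip of duration t. The sketch below is an idealized illustration only. It assumes a calibrated, rectified pinhole stereo pair and a direct (pulsed) time-of-flight measurement, whereas many commercial ToF sensors infer travel time from the phase shift of modulated light. The function names and the 700 px / 12 cm rig are hypothetical. The first-order error term shows why stereo accuracy falls as distance grows.

```python
"""Idealized depth-sensing relations (illustrative sketch, not a sensor SDK).

Assumptions: a rectified pinhole stereo pair with focal length in pixels,
and a direct (pulsed) time-of-flight measurement.
"""

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def stereo_depth(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Triangulated depth Z = f * B / d for a rectified stereo pair."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a finite depth")
    return focal_px * baseline_m / disparity_px


def stereo_depth_error(focal_px: float, baseline_m: float, depth_m: float,
                       disparity_err_px: float = 1.0) -> float:
    """First-order depth uncertainty dZ ~= Z**2 / (f * B) * dd.

    The error grows with the square of the depth, so accuracy drops quickly
    as the target moves away from the camera.
    """
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_err_px


def tof_distance(round_trip_s: float) -> float:
    """Distance from the round-trip travel time of light: d = c * t / 2."""
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


if __name__ == "__main__":
    # Hypothetical rig: 700 px focal length, 12 cm baseline.
    f_px, b_m = 700.0, 0.12
    for d_px in (84.0, 42.0, 21.0):
        z = stereo_depth(f_px, b_m, d_px)
        err = stereo_depth_error(f_px, b_m, z)
        print(f"disparity {d_px:>4} px -> depth {z:.2f} m, +/- {err:.3f} m per px of disparity error")
    print(f"20 ns round trip -> {tof_distance(20e-9):.3f} m")
```

With these hypothetical values, halving the disparity doubles the depth but quadruples the per-pixel depth uncertainty, which is the stereo behavior the "measuring range" row summarizes. Structured-light cameras also triangulate, with the projector taking the place of the second camera.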

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yang, Z.; Ma, W.; Lu, J.; Tian, Z.; Peng, K. The Application Status and Trends of Machine Vision in Tea Production. Appl. Sci. 2023, 13, 10744. https://doi.org/10.3390/app131910744

Yang Z, Ma W, Lu J, Tian Z, Peng K. The Application Status and Trends of Machine Vision in Tea Production. Applied Sciences. 2023; 13(19):10744. https://doi.org/10.3390/app131910744

Chicago/Turabian Style: Yang, Zhiming, Wei Ma, Jinzhu Lu, Zhiwei Tian, and Kaiqian Peng. 2023. "The Application Status and Trends of Machine Vision in Tea Production" Applied Sciences 13, no. 19: 10744. https://doi.org/10.3390/app131910744

APA Style: Yang, Z., Ma, W., Lu, J., Tian, Z., & Peng, K. (2023). The Application Status and Trends of Machine Vision in Tea Production. Applied Sciences, 13(19), 10744. https://doi.org/10.3390/app131910744