Deep Learning-Based Estimation of Muckpile Fragmentation Using Simulated 3D Point Cloud Data

Abstract

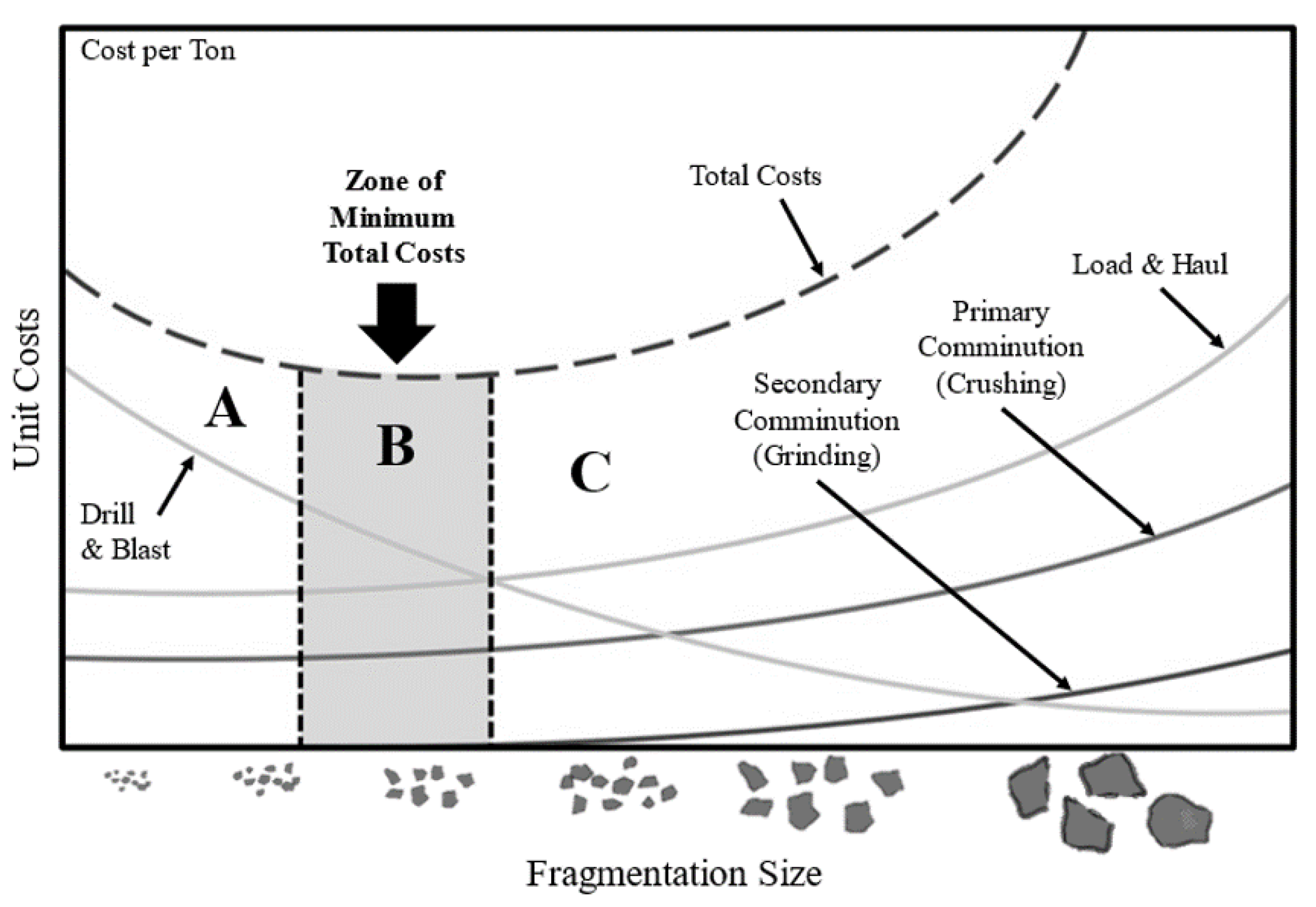

1. Introduction

2. Related Work

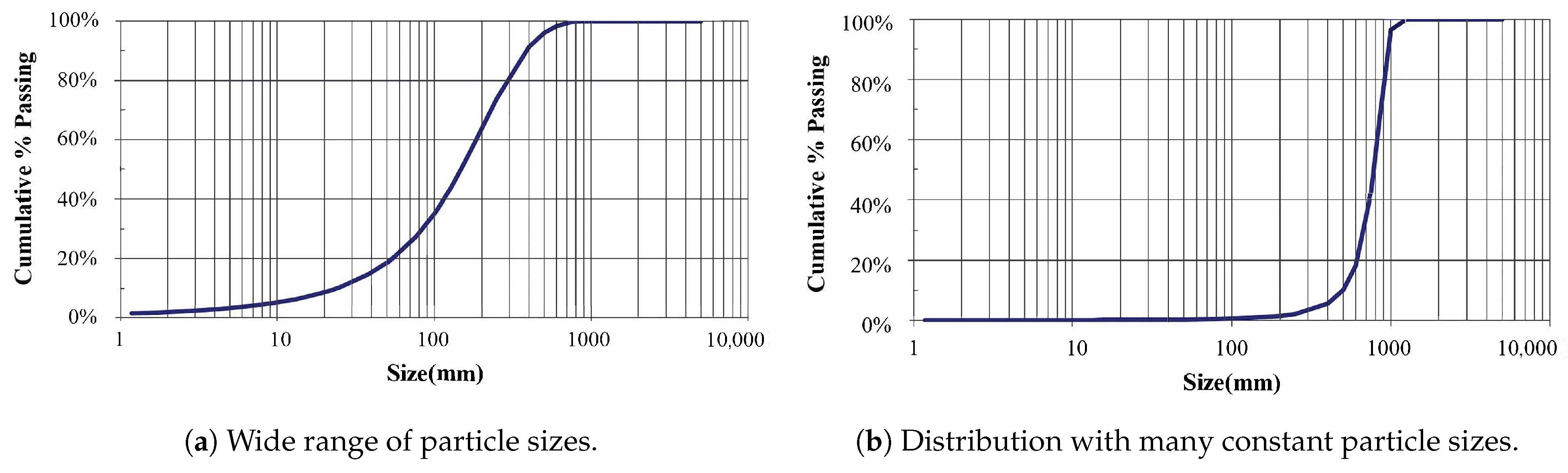

2.1. Estimation for the Particle Size Distribution

2.2. Estimation Methods Using 2D Images

2.3. Estimation Using 3D Images

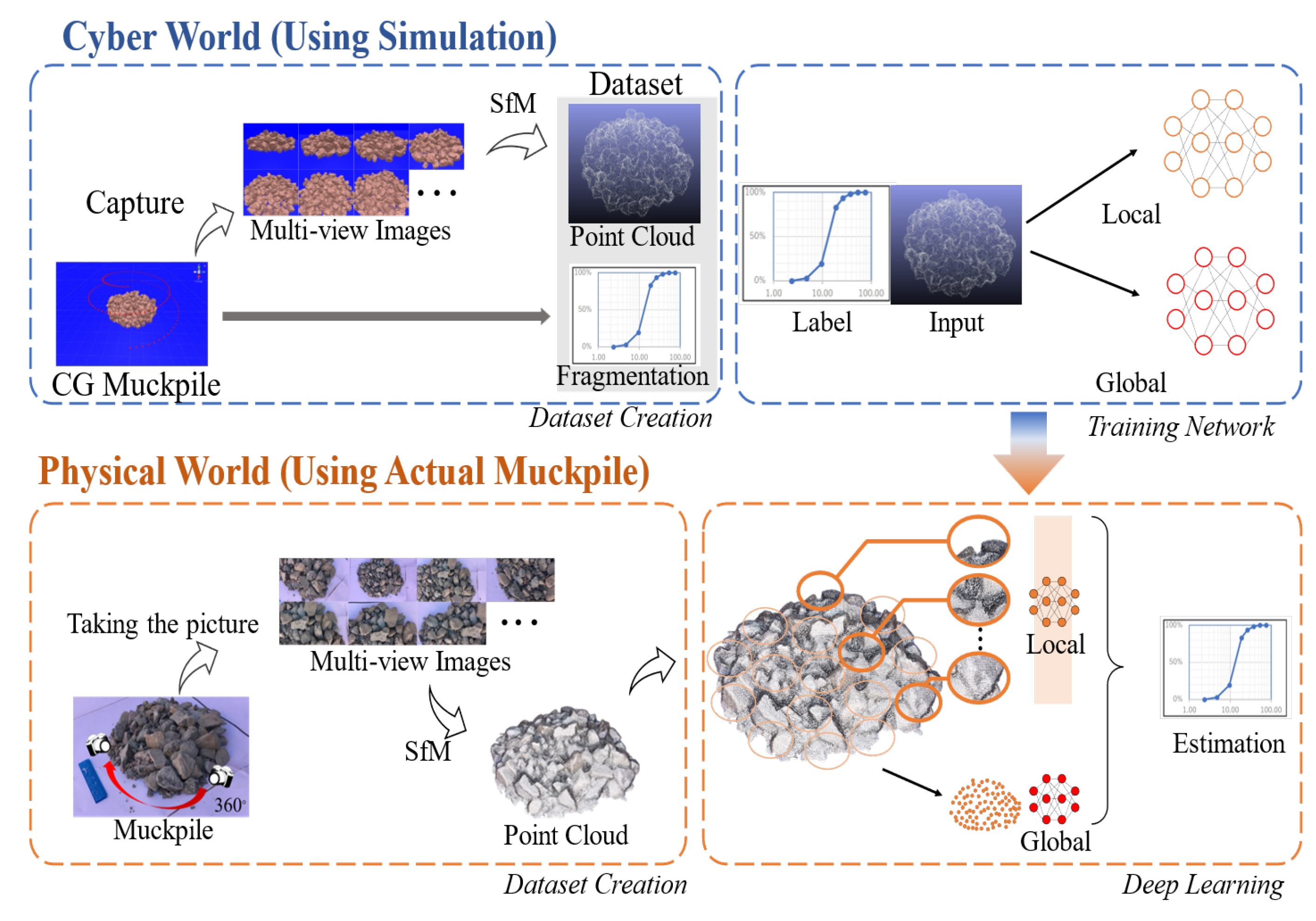

3. Methodology

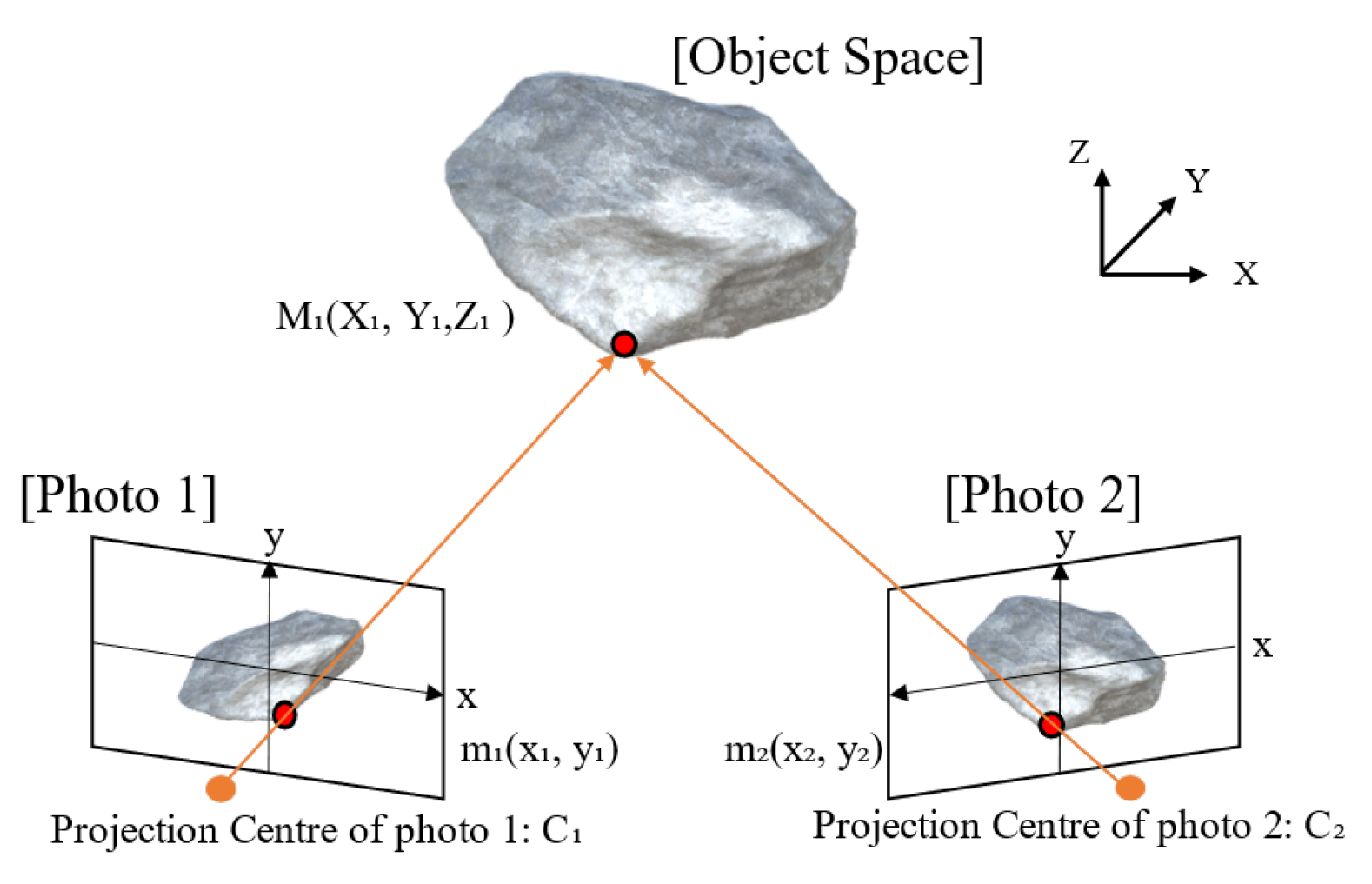

3.1. Photogrammetry

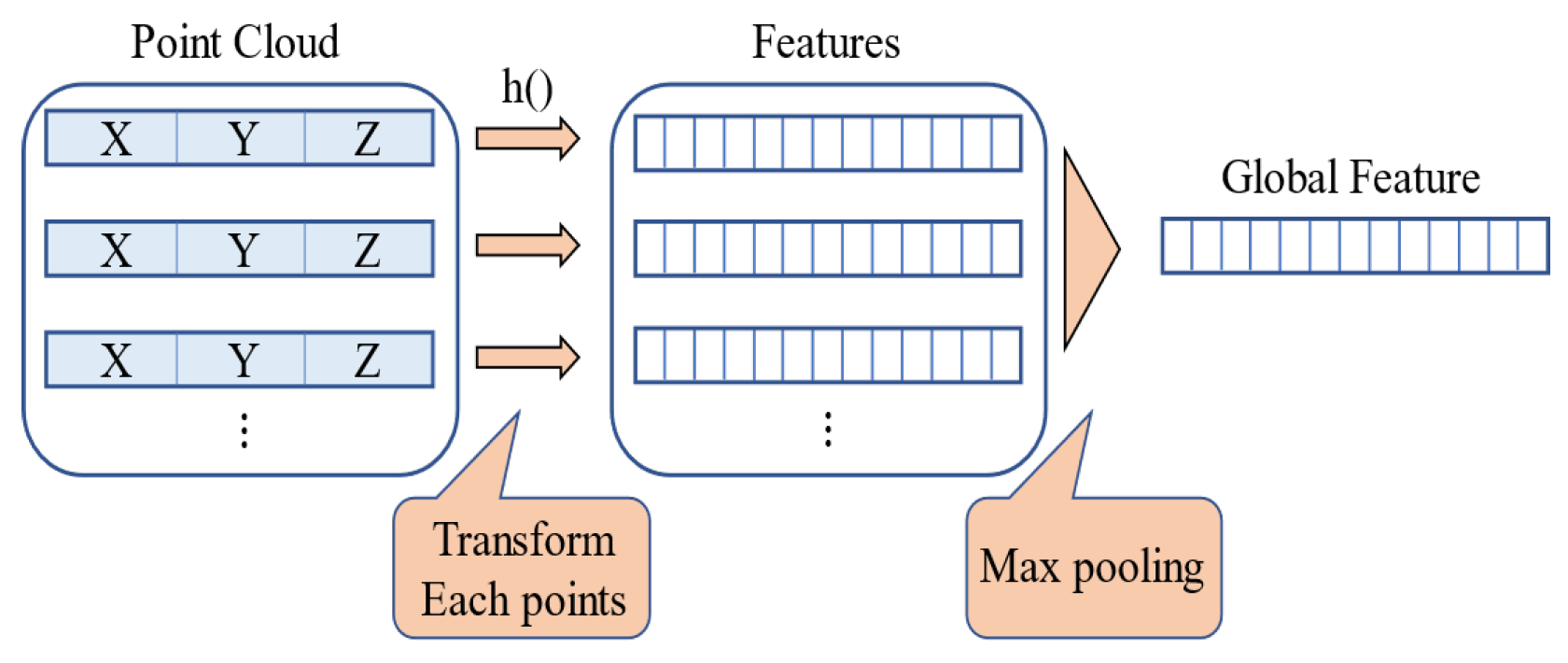

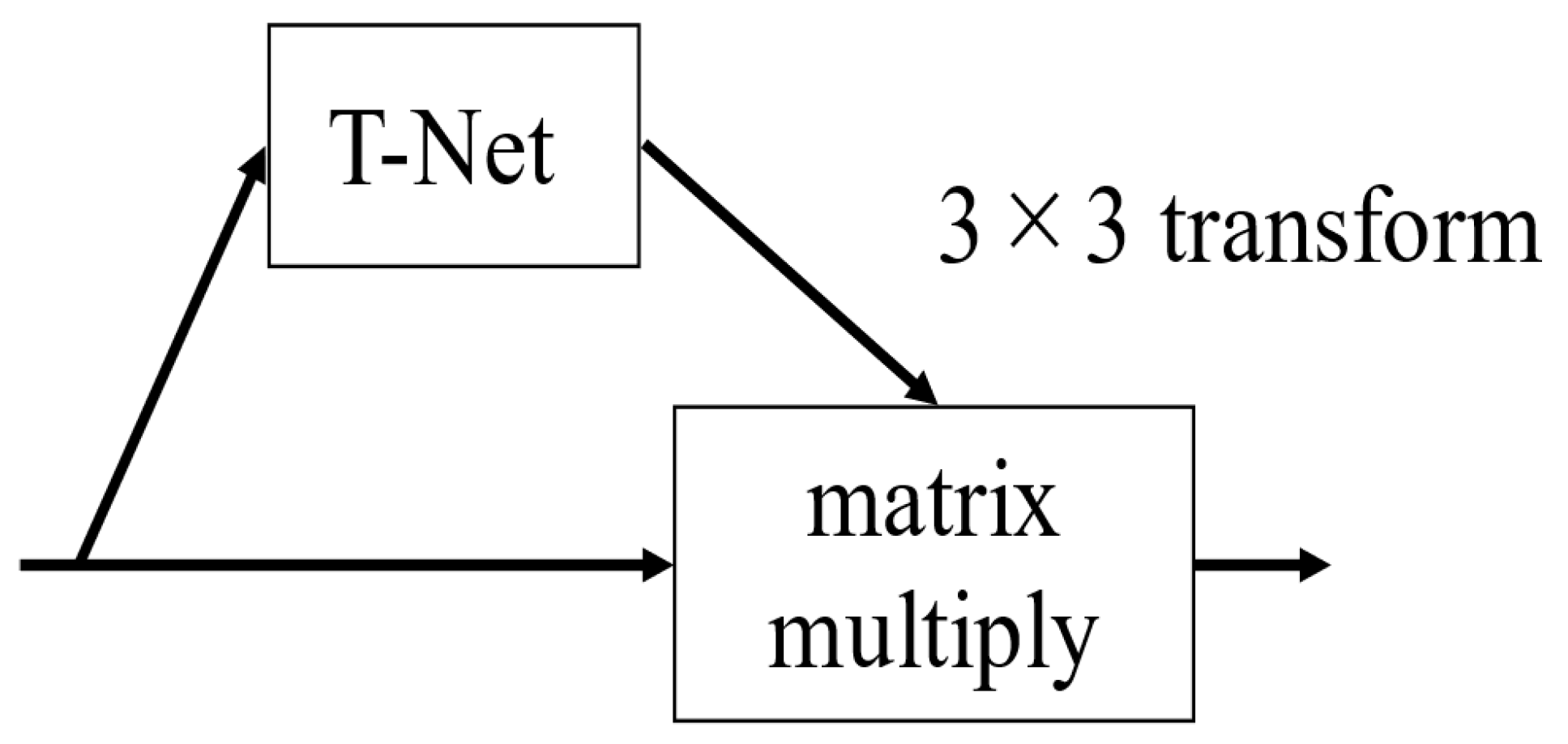

3.2. Deep Learning

3.3. Experimental Procedures

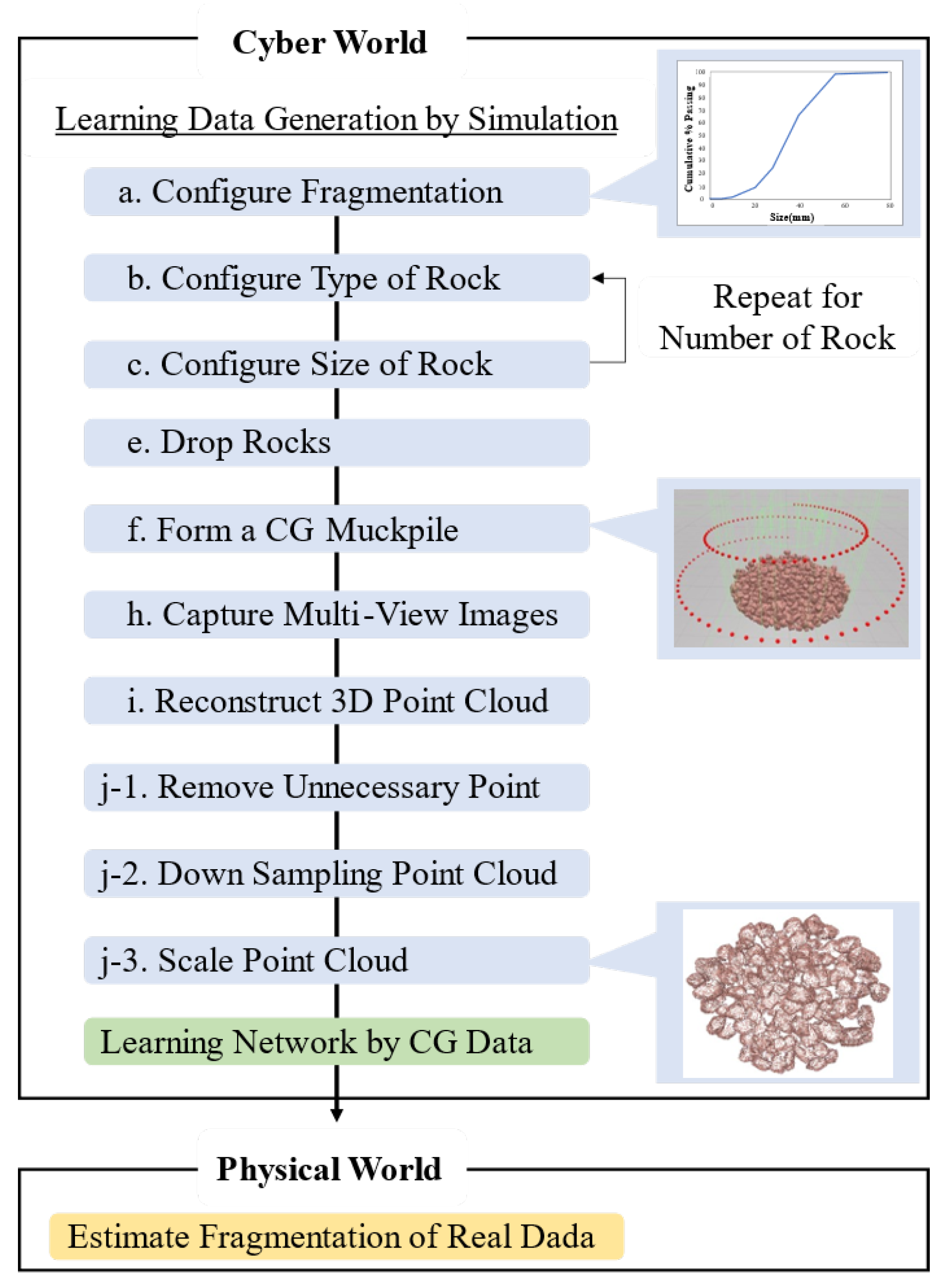

4. Simulation-Based Learning Data Generation

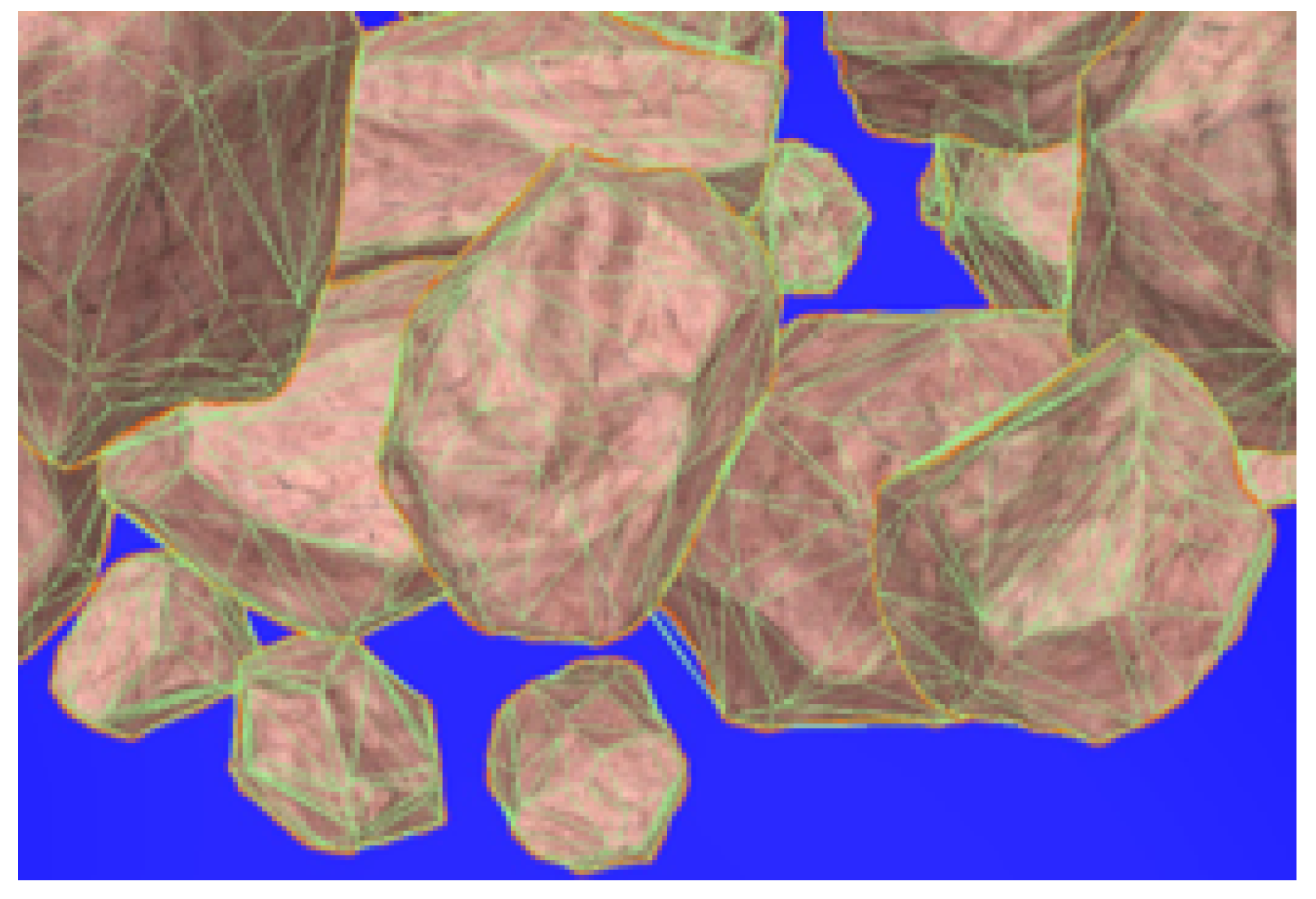

4.1. Creation of Muckpile CG Model

- (a)

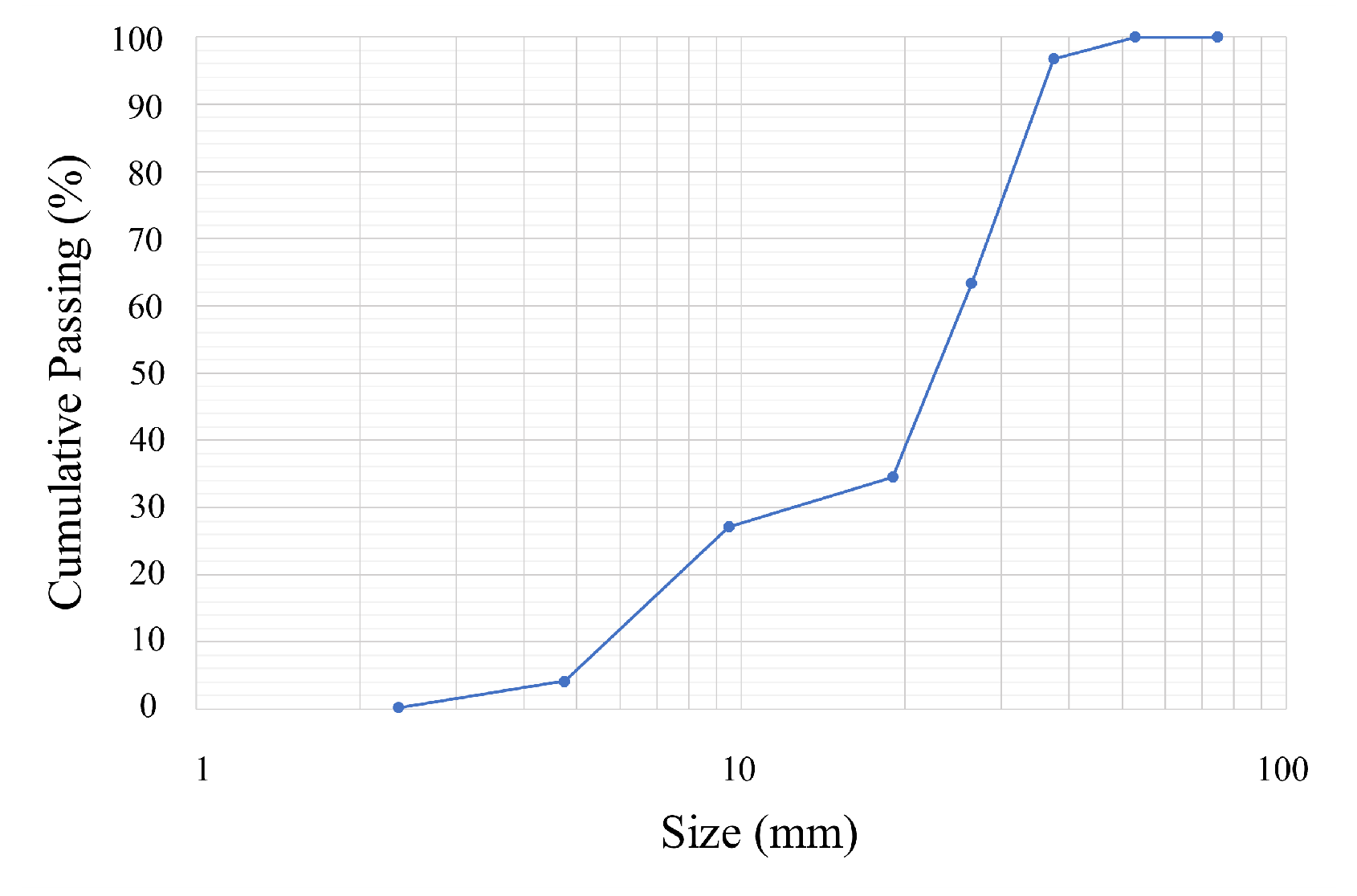

- Configure Fragmentation

- (b)

- Configure Type of Rock

- (c)

- Configure Size of Rock

- (d)

- Placing the rocks in mid-air in the simulation

- (e)

- Drop Rocks

- (f)

- Form a CG Muckpile
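Steps (a) to (d) can be illustrated with a minimal sketch. Nothing below is taken from the paper: the Rosin-Rammler (Weibull) size law, the parameter values `x_c` and `shape_n`, the drop area, and the function names are all assumptions chosen for illustration. Sampling rock counts directly from a law that normally describes cumulative mass passing is also a simplification. Steps (e) and (f) would be handled by a physics engine and are not shown.

```python
import numpy as np

def sample_rock_sizes(n, x_c=0.3, shape_n=1.5, rng=None):
    """Draw n rock sizes in metres from a Weibull (Rosin-Rammler form) law.

    x_c (characteristic size) and shape_n (uniformity index) are
    hypothetical values, not parameters reported in the source.
    """
    rng = np.random.default_rng() if rng is None else rng
    return x_c * rng.weibull(shape_n, size=n)

def place_rocks_in_midair(n, area=5.0, min_height=2.0, max_height=6.0, rng=None):
    """Return an (n, 3) array of start positions above the ground plane.

    Rocks are scattered uniformly over a square of side `area` and
    between two heights, so they can then be dropped by a physics engine.
    All dimensions are assumed for illustration.
    """
    rng = np.random.default_rng() if rng is None else rng
    xy = rng.uniform(-area / 2, area / 2, size=(n, 2))
    z = rng.uniform(min_height, max_height, size=(n, 1))
    return np.hstack([xy, z])

rng = np.random.default_rng(0)
sizes = sample_rock_sizes(100, rng=rng)
positions = place_rocks_in_midair(len(sizes), rng=rng)
```

Varying `x_c` and `shape_n` between runs would be one way to produce muckpiles with different fragmentation, with the sampled sizes serving as the ground-truth distribution for each pile.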

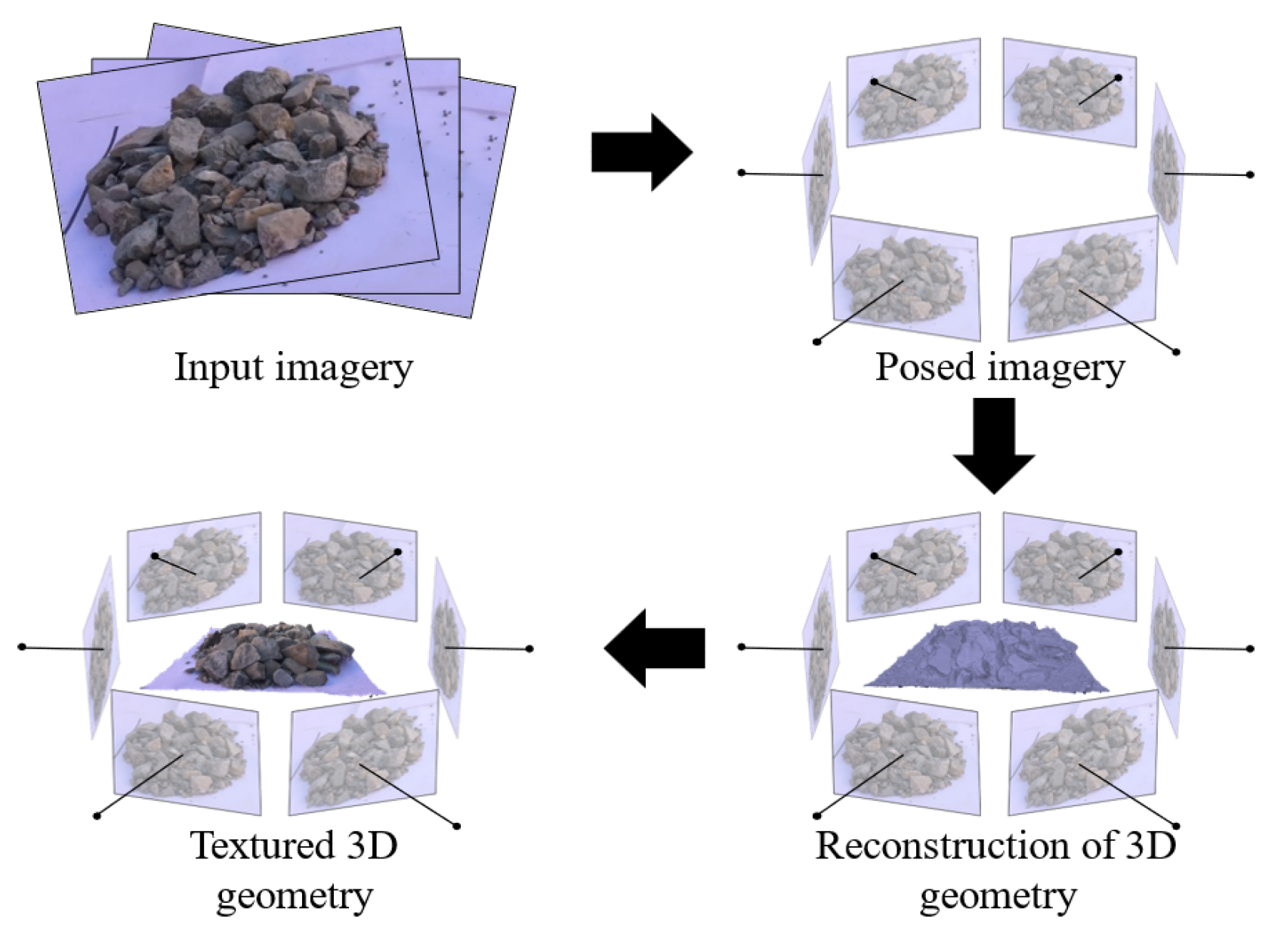

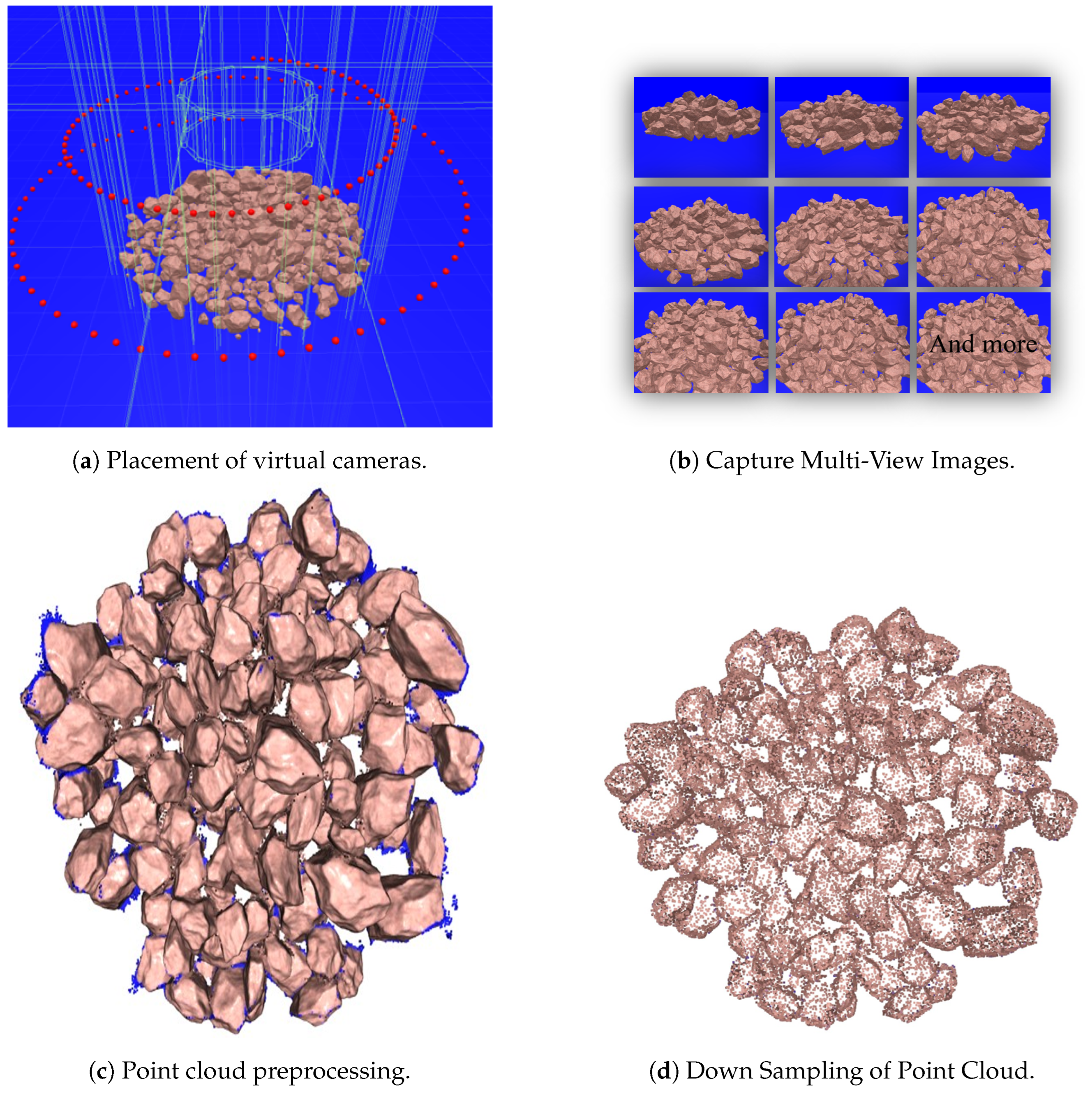

4.2. Creating Point Cloud Data

- (g)

- Placement of Virtual Cameras Around the Muckpile CG Model

- (h)

- Capture Multi-View Images

- (i)

- Reconstruct 3D Point Cloud

- (j)

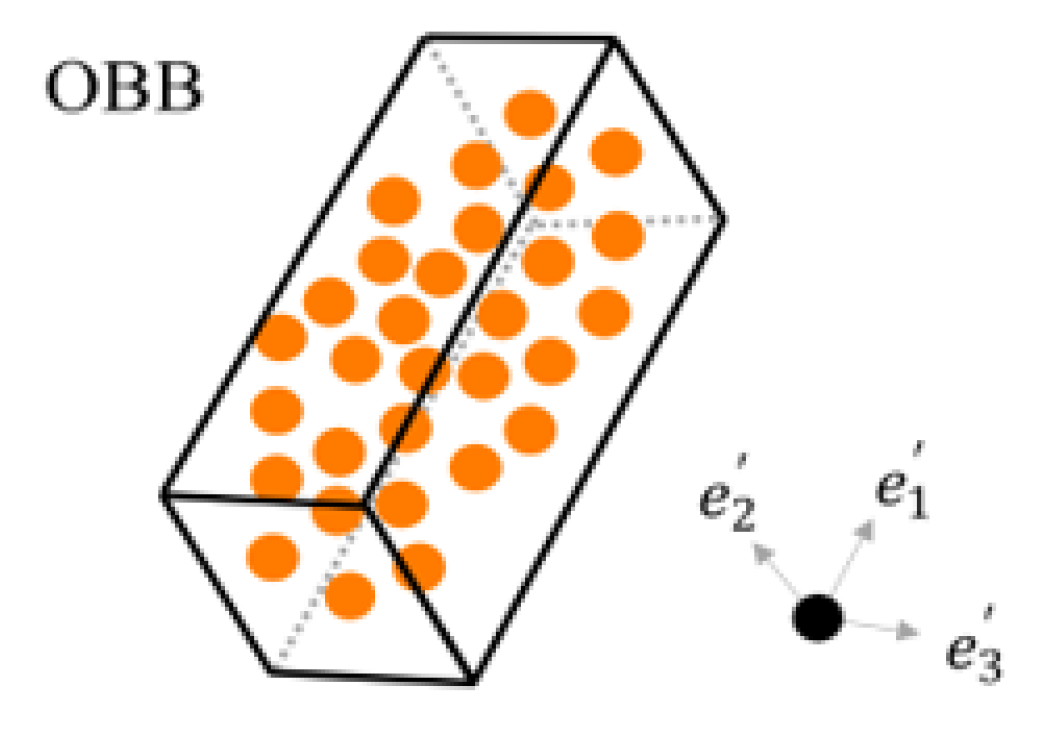

- Point Cloud Preprocessing:

- (j-1)

- Remove Unnecessary Point

- (j-2)

- Down Sampling of Point Cloud

- (j-3)

- Scale Point Cloud
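The three preprocessing steps (j-1) to (j-3) can be sketched as follows. This is only one plausible reading: the source names the steps but not the methods, so the height cut-off for removing points, random subsampling to a fixed count, and scaling to a unit sphere are assumptions, as are all function names and default values.

```python
import numpy as np

def remove_unnecessary_points(points, z_min):
    """(j-1) Keep only points at or above height z_min (assumed ground cut)."""
    return points[points[:, 2] >= z_min]

def downsample(points, n_out, rng=None):
    """(j-2) Randomly keep n_out points; return the input if it is already smaller."""
    rng = np.random.default_rng() if rng is None else rng
    if len(points) <= n_out:
        return points
    idx = rng.choice(len(points), size=n_out, replace=False)
    return points[idx]

def scale_to_unit_sphere(points):
    """(j-3) Centre the cloud on its mean and scale so the farthest point has norm 1."""
    centered = points - points.mean(axis=0)
    radius = np.linalg.norm(centered, axis=1).max()
    return centered / radius if radius > 0 else centered

def preprocess(points, z_min=0.0, n_out=1024, rng=None):
    """Apply the three steps in order to an (N, 3) array of XYZ coordinates."""
    points = remove_unnecessary_points(points, z_min)
    points = downsample(points, n_out, rng=rng)
    return scale_to_unit_sphere(points)

# Usage on a synthetic cloud (random points, not real muckpile data).
rng = np.random.default_rng(0)
cloud = rng.uniform([-5.0, -5.0, -1.0], [5.0, 5.0, 3.0], size=(5000, 3))
processed = preprocess(cloud, z_min=0.0, n_out=1024, rng=rng)
```

A fixed point count and a normalized scale are common requirements for point-based networks, which is why the sketch ends with those two steps. Note that scaling to a unit sphere discards absolute size, so a real pipeline would need to keep the scale factor if physical dimensions matter.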

5. Verification of Particle Size Distribution Estimation

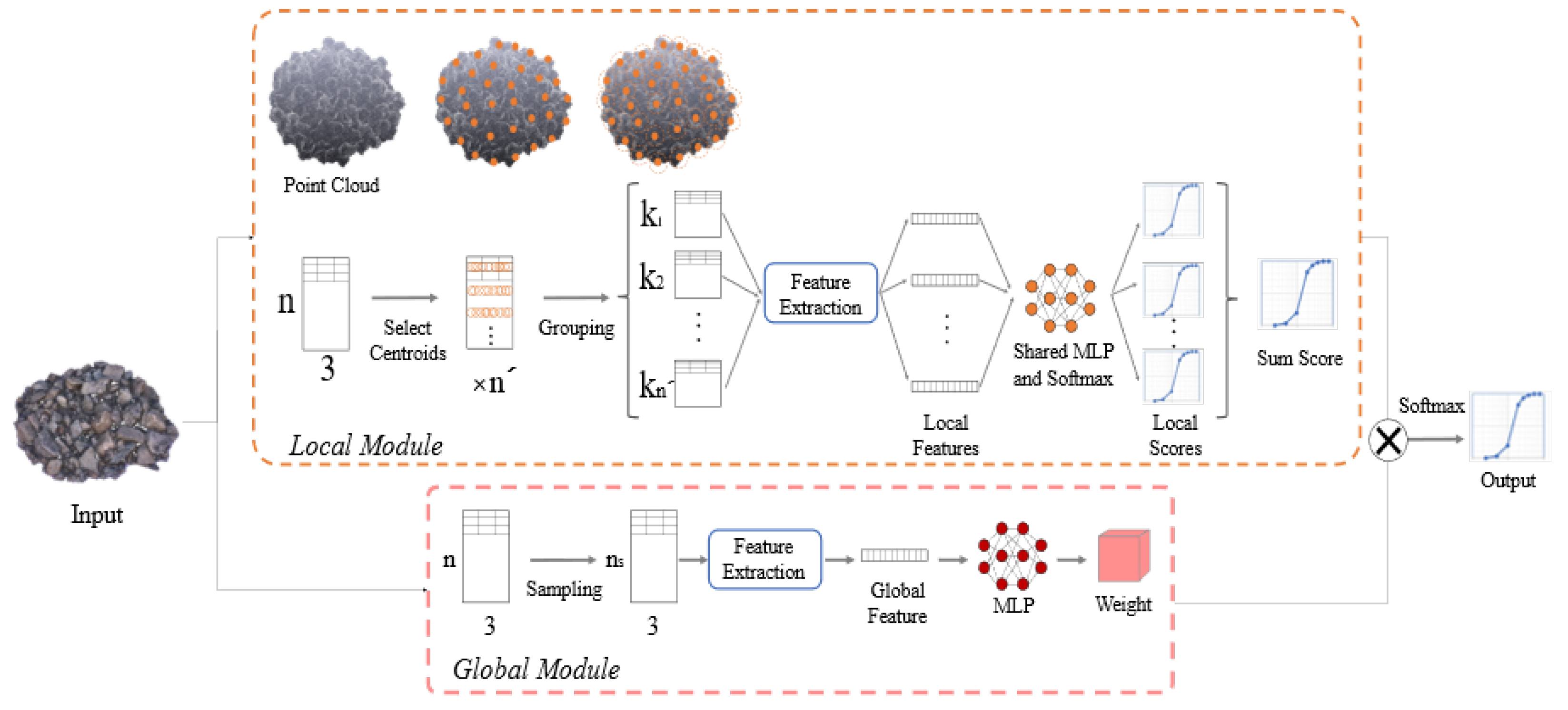

5.1. Particle Size Distribution Estimation Network

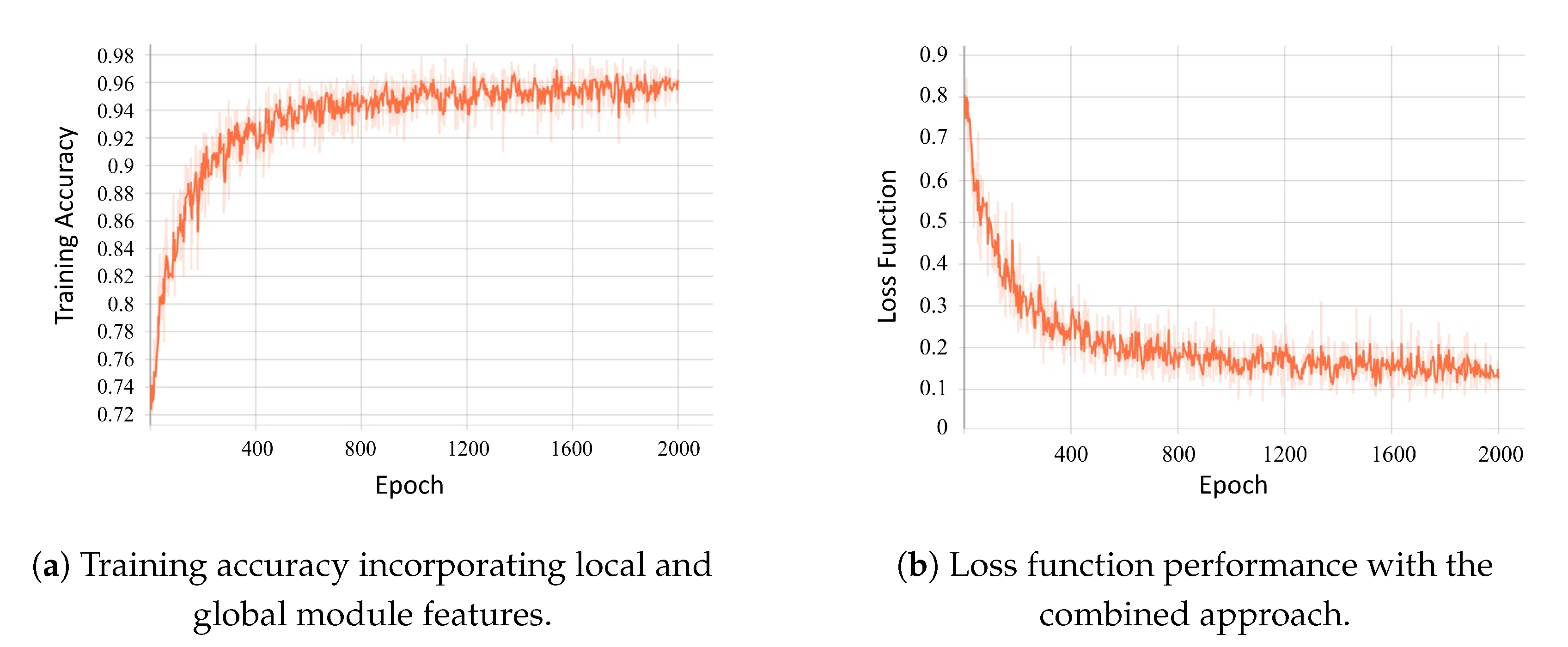

5.2. Particle Size Distribution Estimation Using CG Data

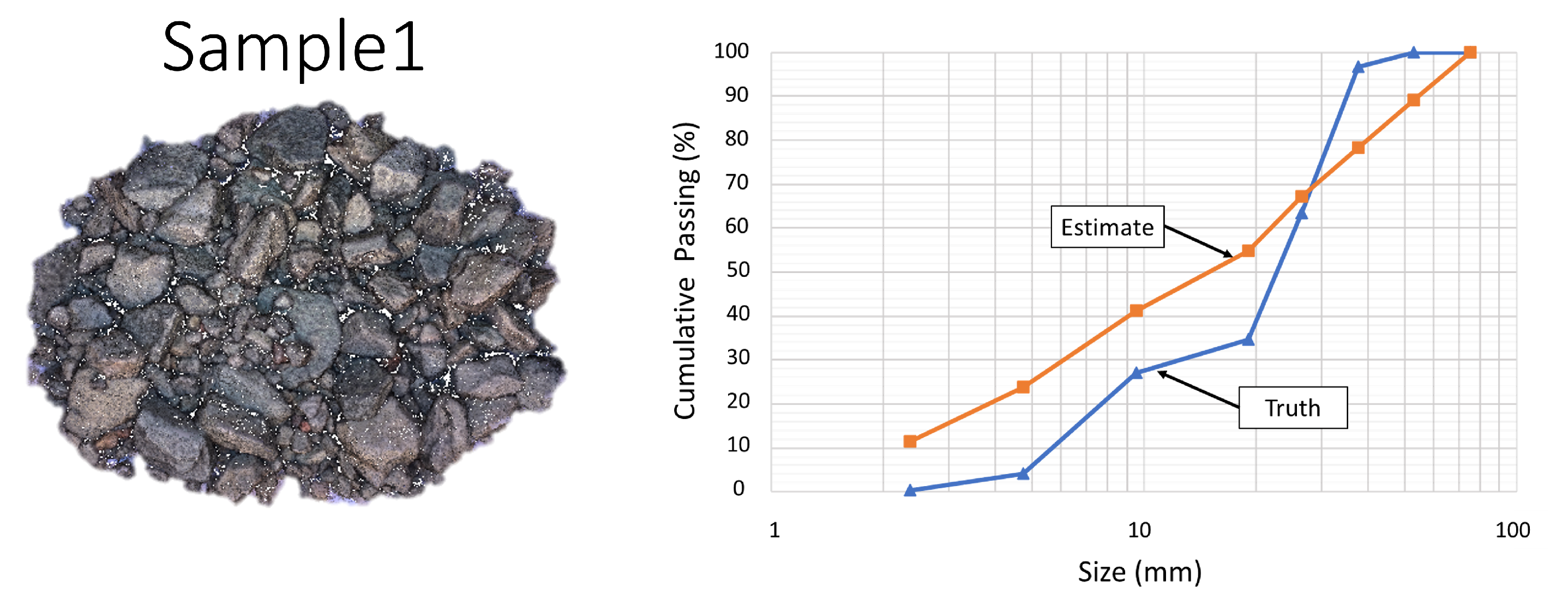

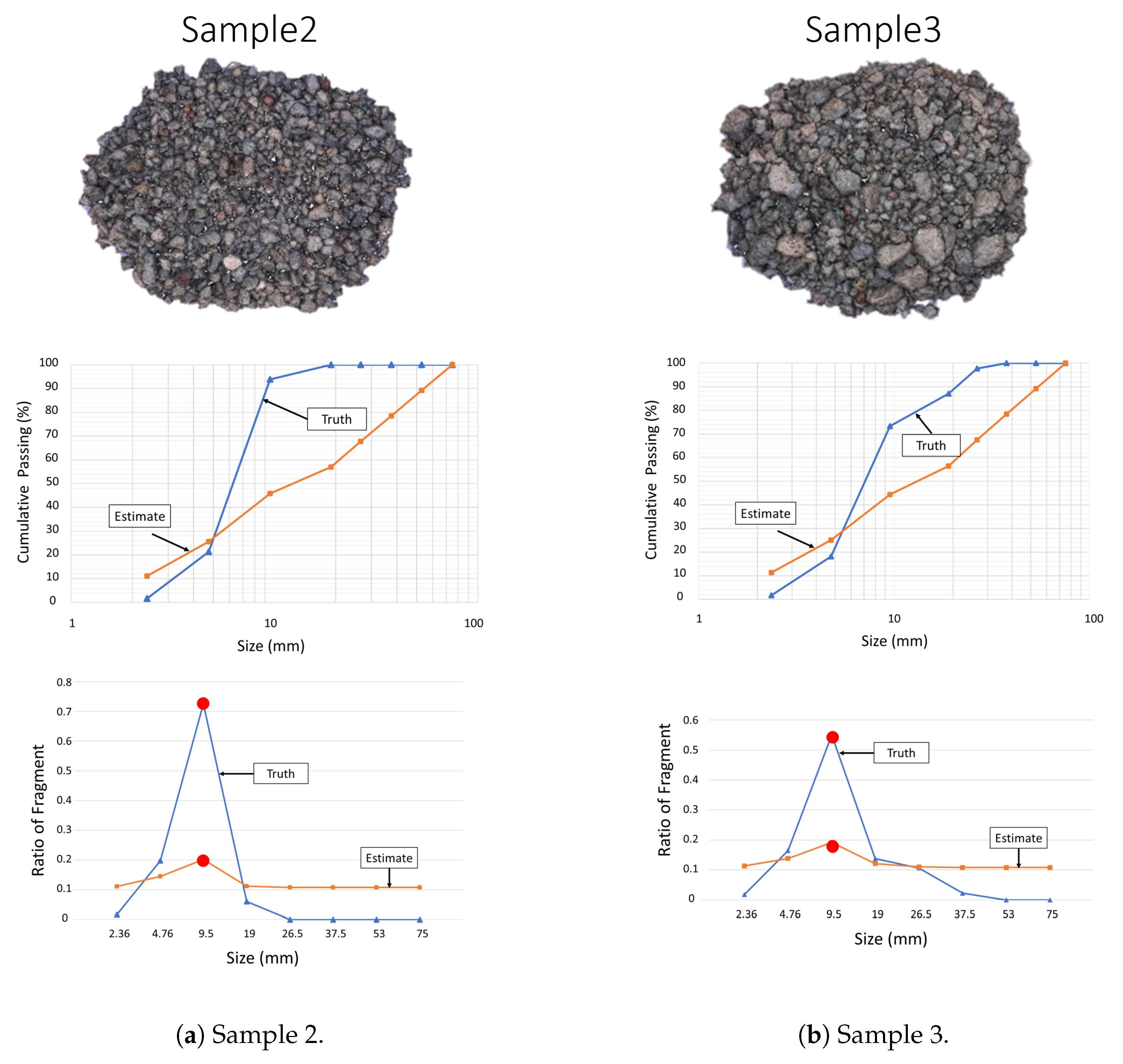

5.3. Particle Size Distribution Estimation Using Actual Data

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Rock Properties | Value |
|---|---|
| Dynamic Friction | 0.9 |
| Static Friction | 0.9 |
| Bounciness | 0.1 |
| Friction Combine | Average |
| Bounciness Combine | Average |
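To show what an "Average" combine setting implies for two rocks in contact, here is a small sketch. It assumes that "Average" means the arithmetic mean of the two contacting materials' values, as is common in game-engine physics materials. The source does not name the engine, and the dictionary keys and function name are hypothetical.

```python
def combine_average(a, b):
    """Assumed 'Average' combine mode: arithmetic mean of two material values."""
    return (a + b) / 2.0

# Values from the rock properties table.
rock = {"dynamic_friction": 0.9, "static_friction": 0.9, "bounciness": 0.1}

# Two rocks with identical materials in contact: each effective
# value equals the per-rock value.
effective = {name: combine_average(value, value) for name, value in rock.items()}
```

Under this assumption, rock-on-rock contacts keep the tabulated values unchanged. A rock touching a surface with different settings, such as the ground, would get the mean of the two.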

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ikeda, H.; Sato, T.; Yoshino, K.; Toriya, H.; Jang, H.; Adachi, T.; Kitahara, I.; Kawamura, Y. Deep Learning-Based Estimation of Muckpile Fragmentation Using Simulated 3D Point Cloud Data. Appl. Sci. 2023, 13, 10985. https://doi.org/10.3390/app131910985
