CREBAS: Computer-Based REBA Evaluation System for Wood Manufacturers Using MediaPipe

Abstract

1. Introduction

2. Background

2.1. Musculoskeletal Risk Posture Analysis Technique

2.2. Posture Estimation Techniques

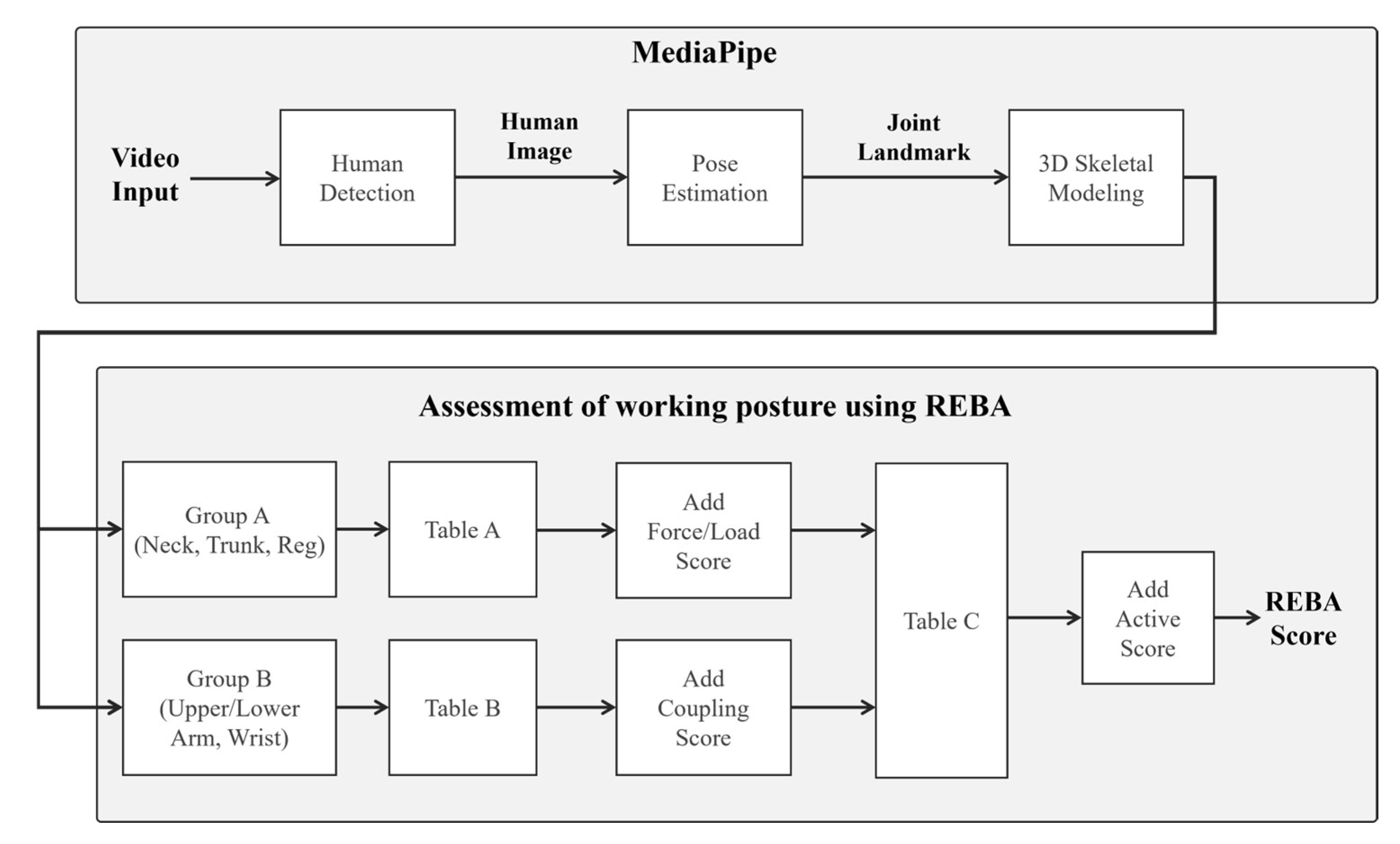

3. REBA Evaluation System Using MediaPipe

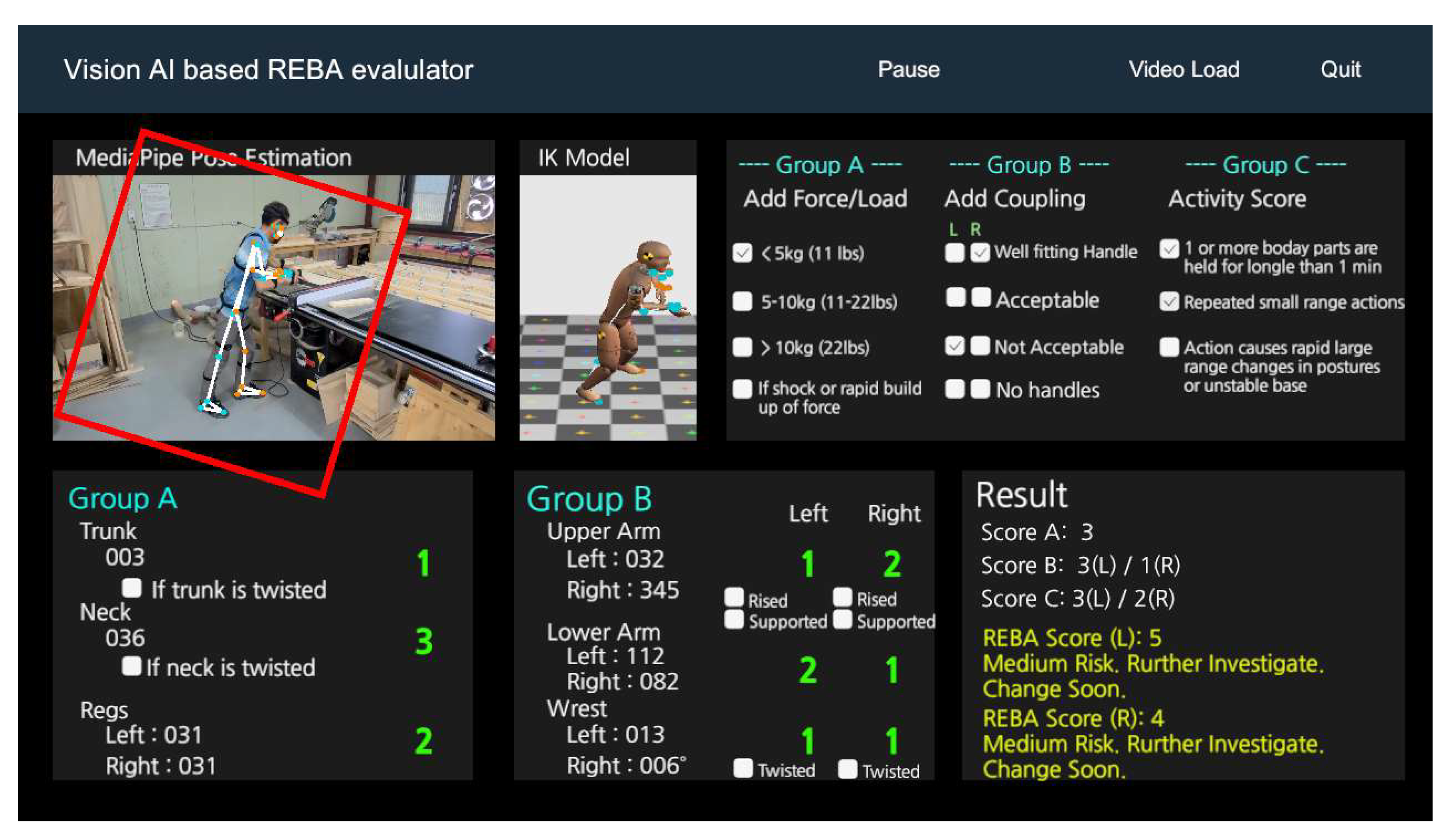

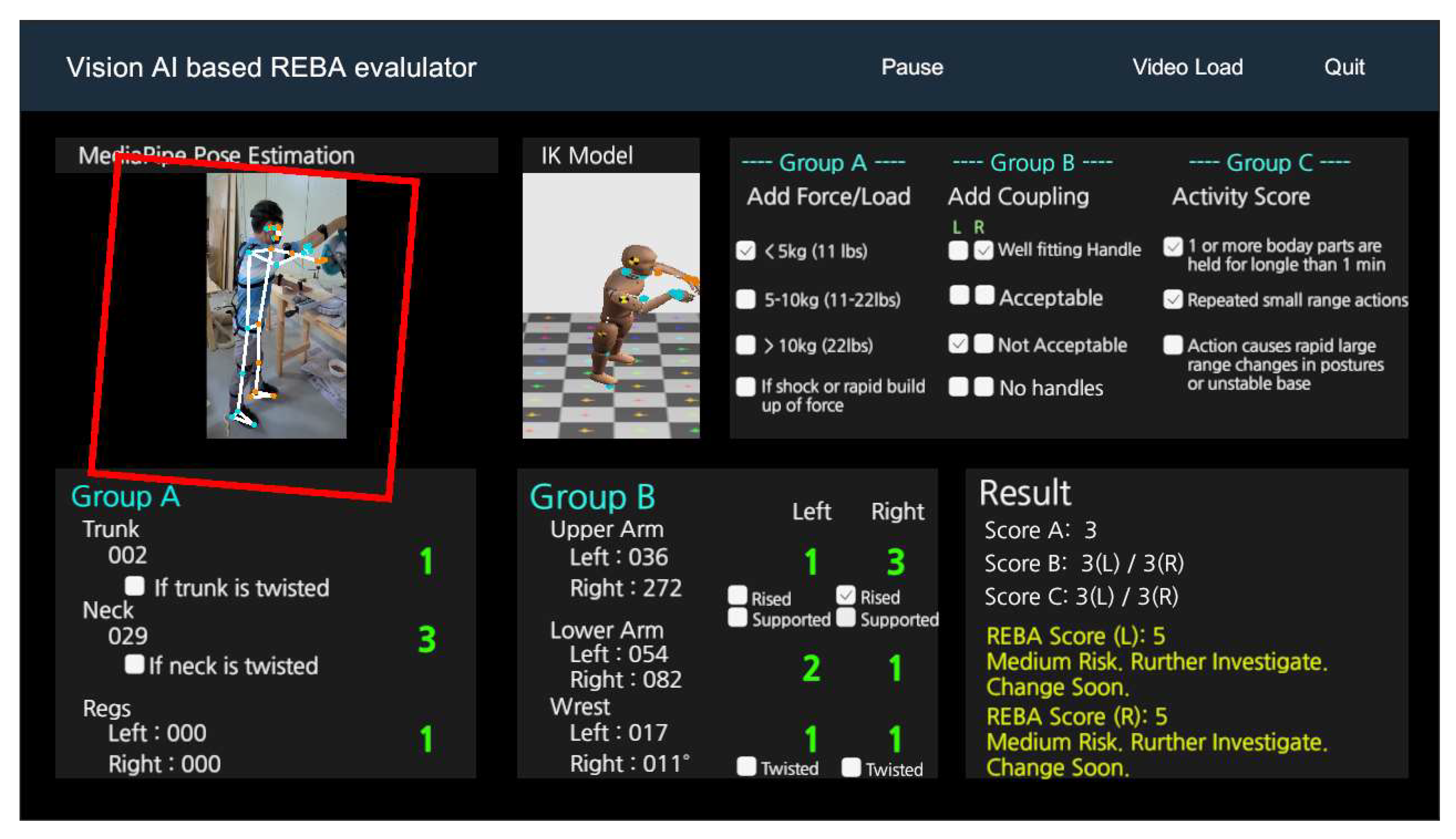

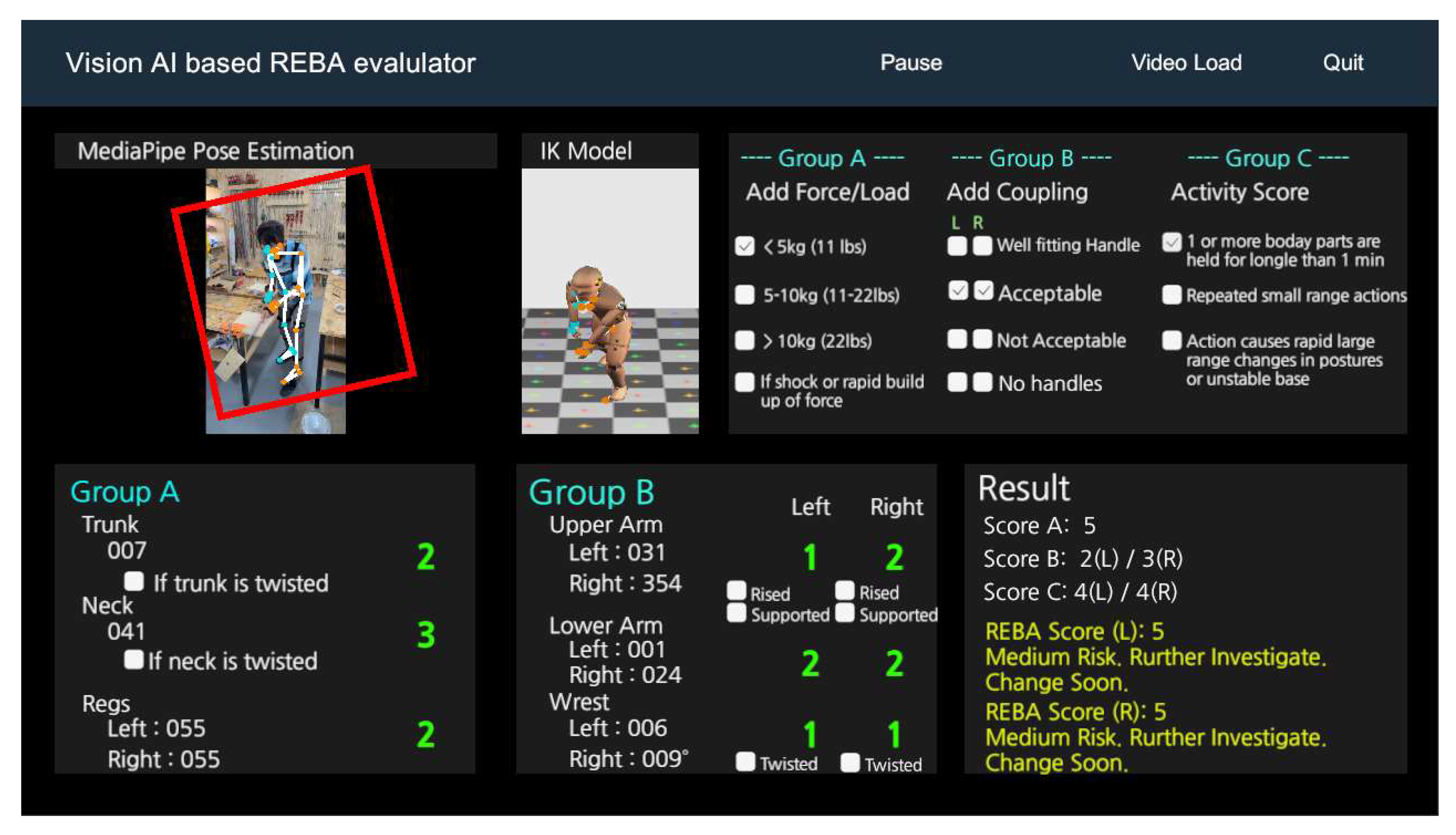

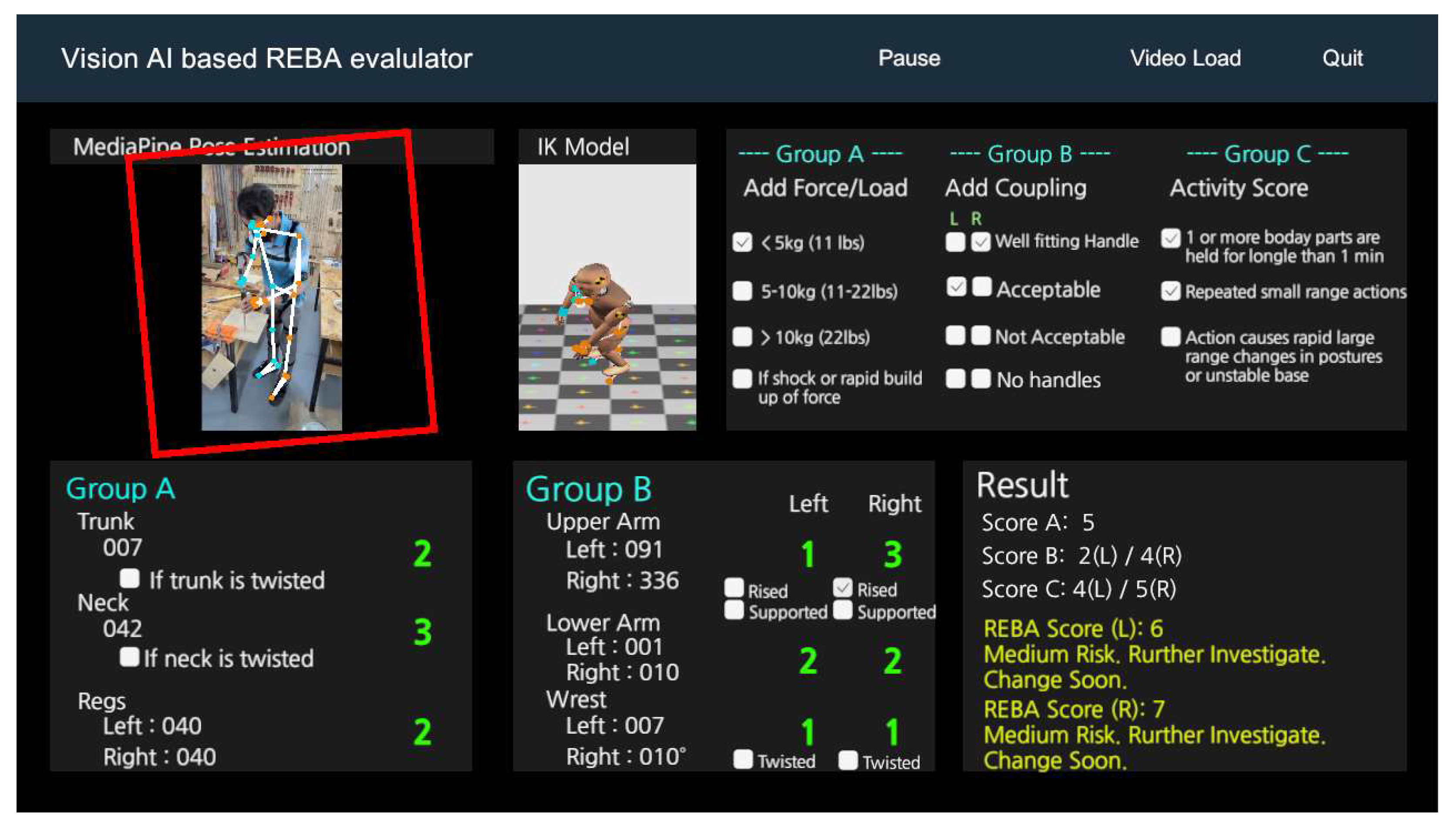

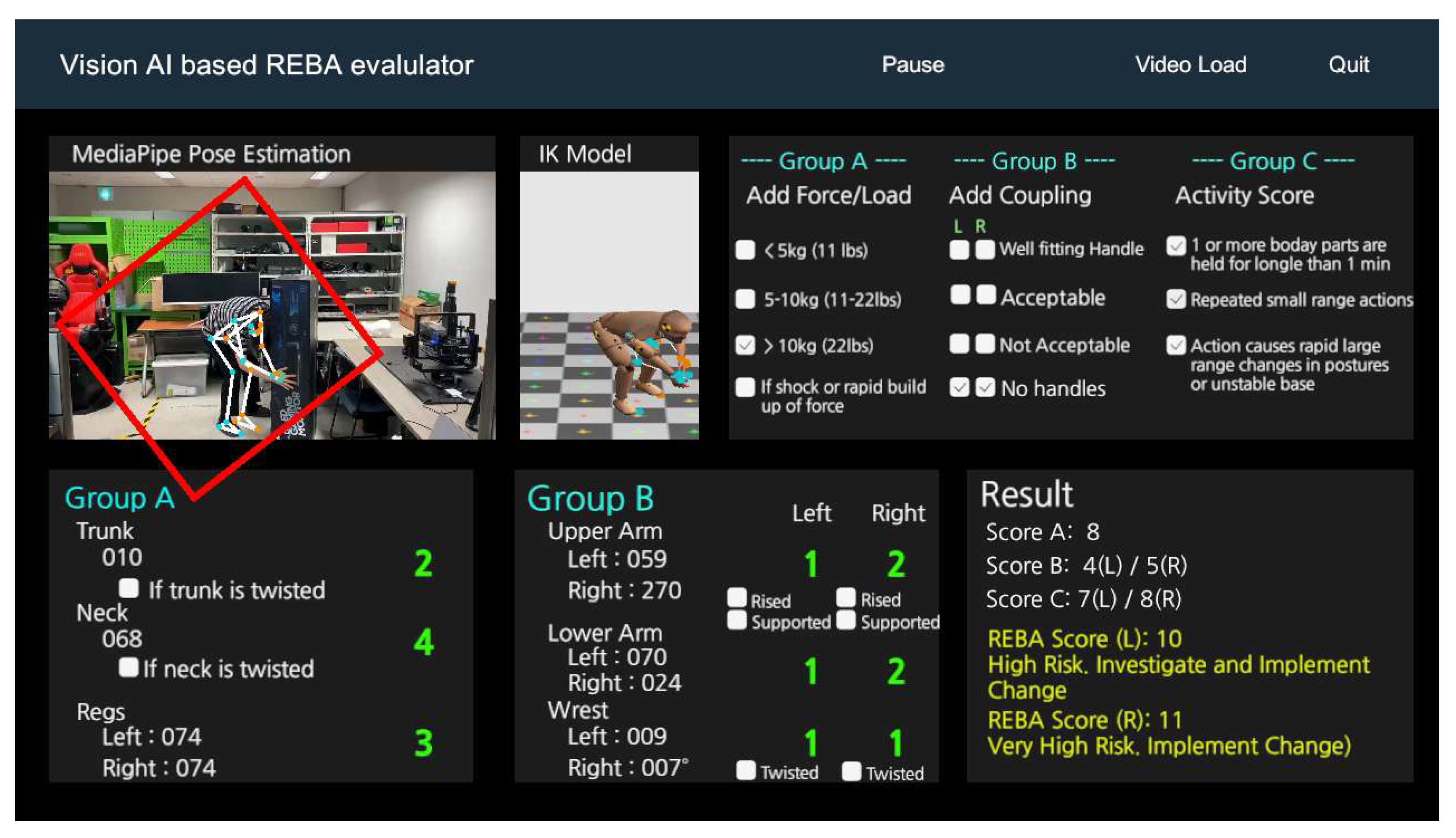

3.1. System Design

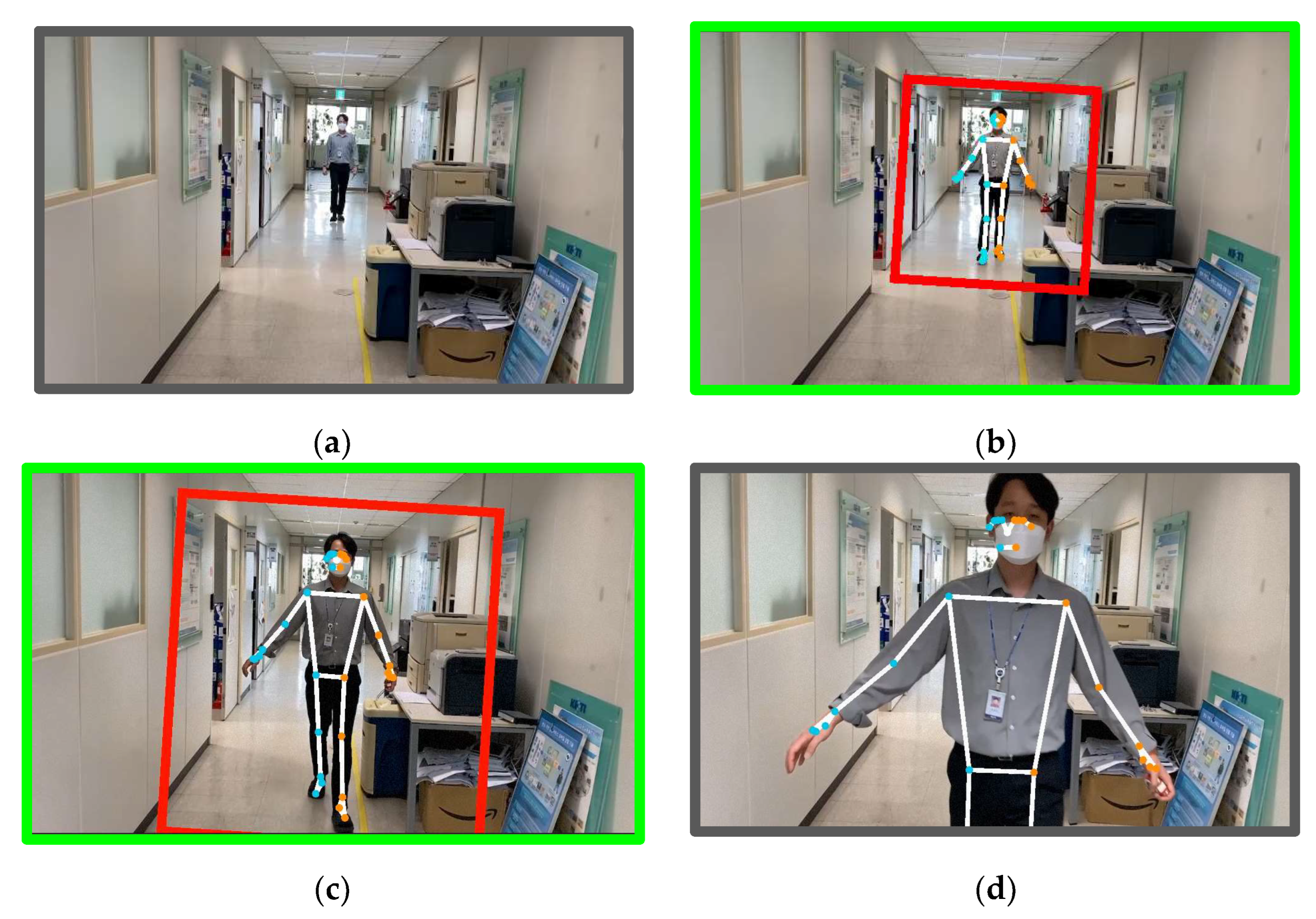

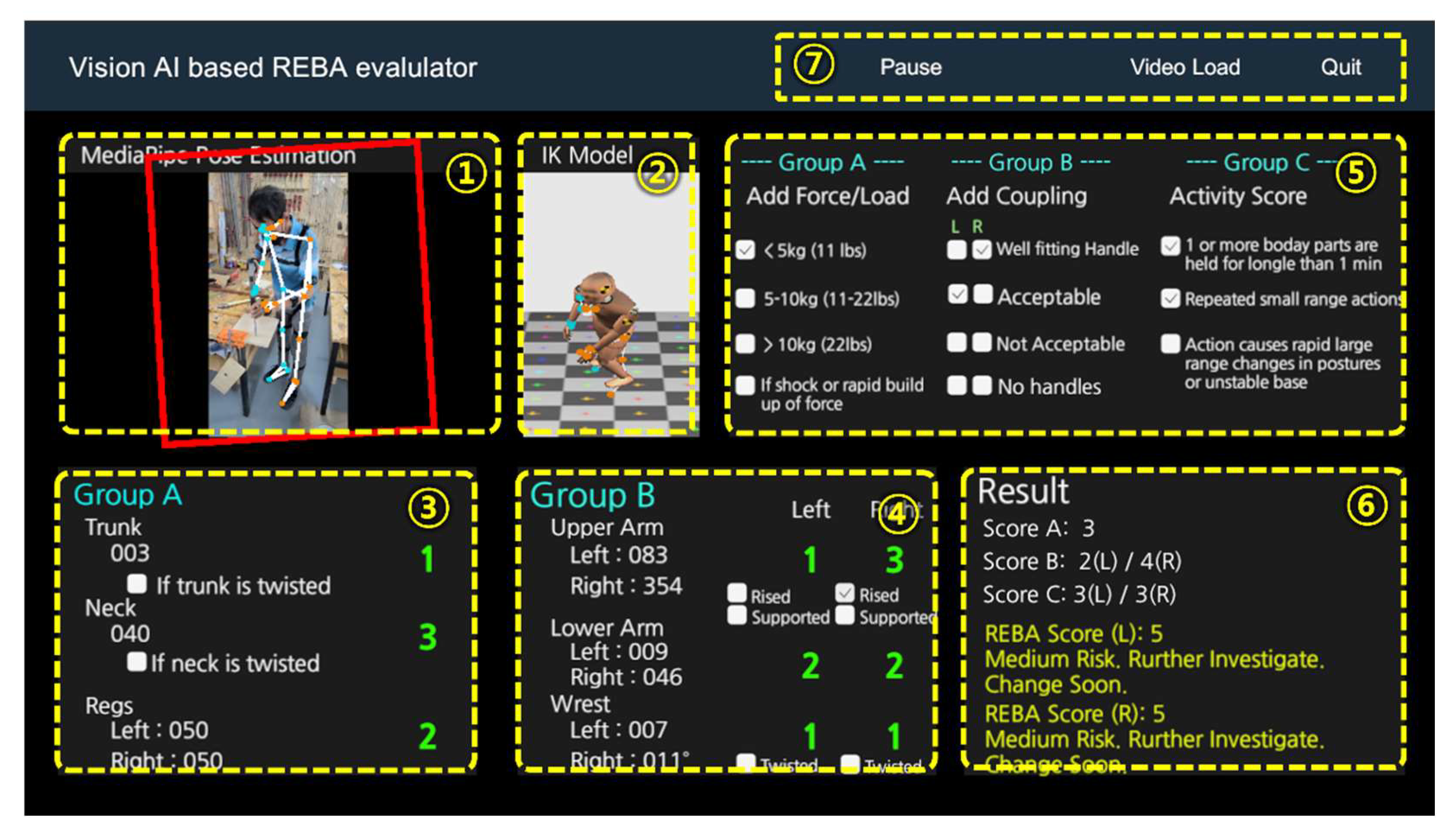

- Visualization of Human Pose Estimation results: The working posture image is encoded in RGB32 format at 640 × 480 resolution and output through the Image UI. The bounding box of the body area obtained by MediaPipe’s Human Detector and the joints obtained by Pose Tracking are overlaid on the image.

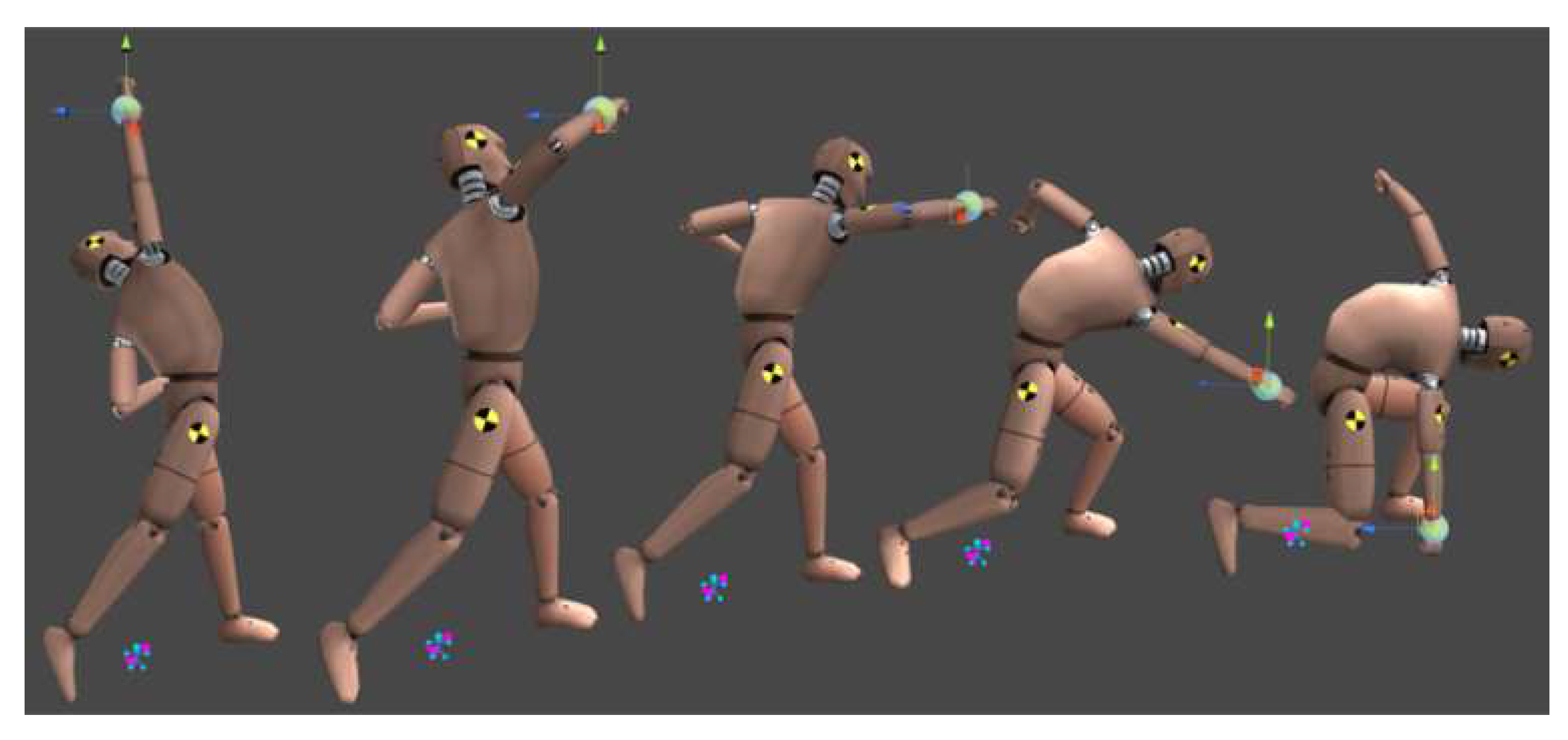

- Visualization of Inverse Kinematics (IK) modeling: The position coordinates of each joint calculated through Human Pose Estimation are passed as targets to the inverse kinematics (IK) model, and the resulting pose is displayed.

- Details of Group A (waist, neck, legs): The bending angles of the waist, neck, and leg joints in group A are displayed in white, and the score for each part, assigned according to the REBA rules, is displayed in green.

- Details of Group B (upper arm, forearm, wrist): The bending angles of the shoulder, elbow, and wrist joints in group B are displayed in white, and the score for each part, assigned according to the REBA rules, is displayed in green.

- Selection of additional risk factors: Additional score factors are selected here: the weight/force classification for group A, the grip type for group B, and the behavior score for group C.

- Final results (score A, score B, score C, REBA score): The result of group A is score A, the result of group B is score B, and score C is obtained by combining score A and score B. The final REBA score is displayed in yellow, separately for the left and right sides of the body.

- Top menu (pause, image selection, end): The pause button stops the video being played, the image selection button loads another image, and the end button closes the program.
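The bending angles shown for groups A and B are derived from joint positions. As a rough illustration only, the sketch below computes a bending angle directly from three 3D joint positions with plain vector geometry. This is an assumption made for clarity and is not the IK-based calculation the system uses; the function names and the sample coordinates are hypothetical.

```python
import math

def joint_angle_deg(a, b, c):
    """Return the angle at joint b, in degrees, between segments b->a and b->c."""
    v1 = [p - q for p, q in zip(a, b)]
    v2 = [p - q for p, q in zip(c, b)]
    dot = sum(x * y for x, y in zip(v1, v2))
    n1 = math.sqrt(sum(x * x for x in v1))
    n2 = math.sqrt(sum(x * x for x in v2))
    if n1 == 0.0 or n2 == 0.0:
        raise ValueError("two joints coincide; the angle is undefined")
    # Clamp to guard against rounding slightly outside [-1, 1].
    cos_theta = max(-1.0, min(1.0, dot / (n1 * n2)))
    return math.degrees(math.acos(cos_theta))

def bending_angle_deg(a, b, c):
    """Bending relative to a straight limb: 0 when a, b, and c are collinear."""
    return 180.0 - joint_angle_deg(a, b, c)

# Hypothetical shoulder, elbow, and wrist positions (arbitrary units).
shoulder, elbow, wrist = (0.0, 1.0, 0.0), (0.0, 0.0, 0.0), (1.0, 0.0, 0.0)
print(bending_angle_deg(shoulder, elbow, wrist))
```

A signed angle (for example, forward bending versus reclining) would additionally need a reference direction, which this sketch leaves out.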

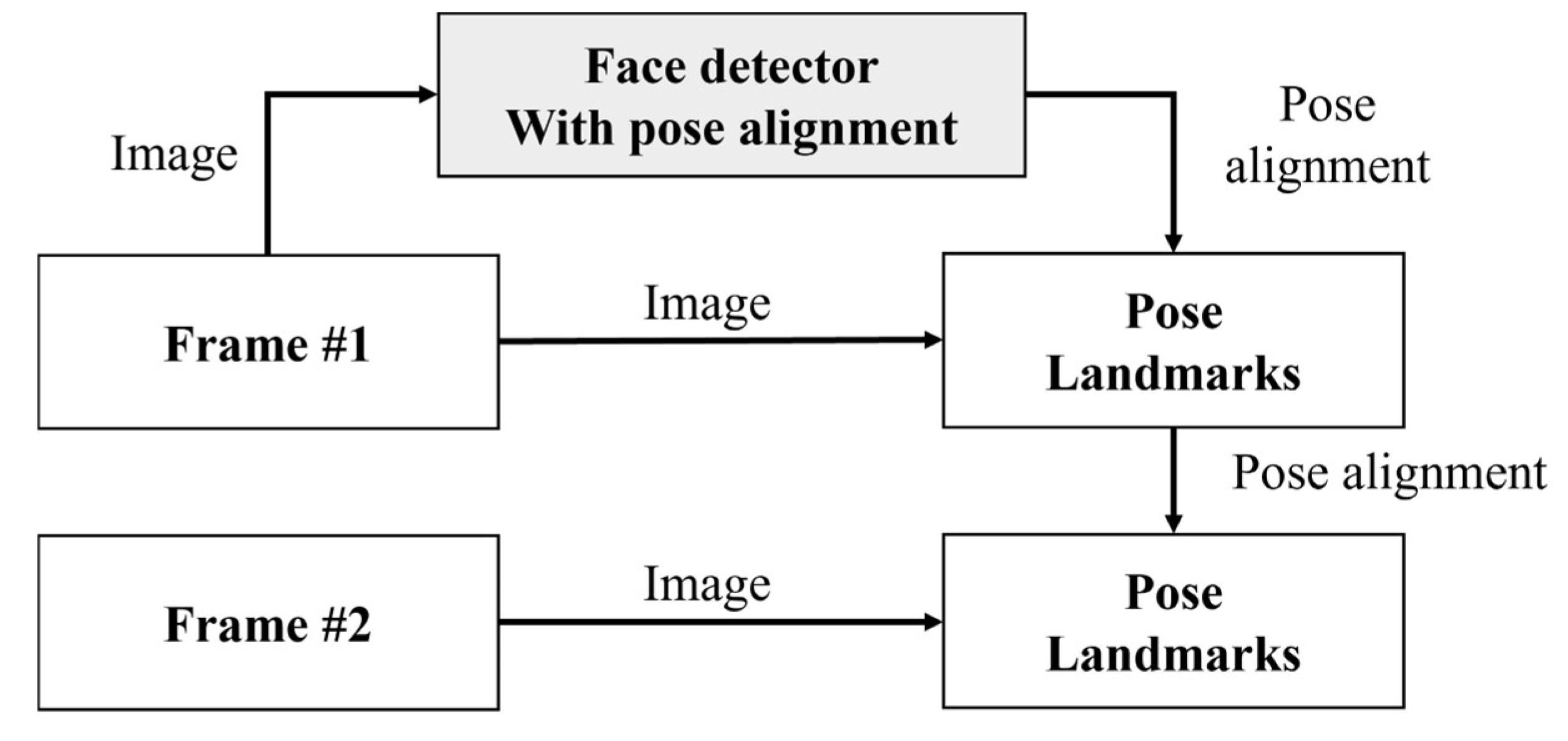

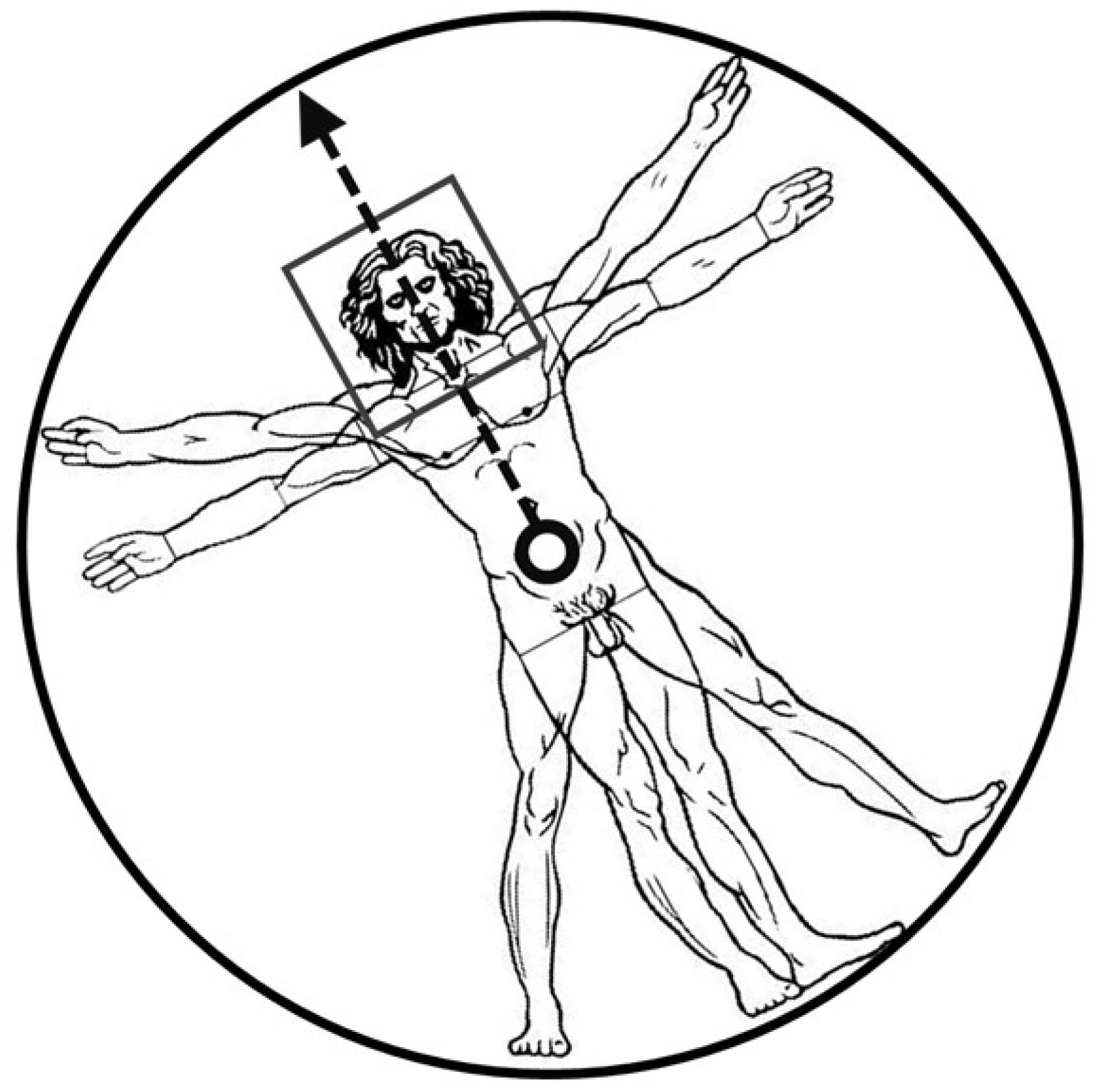

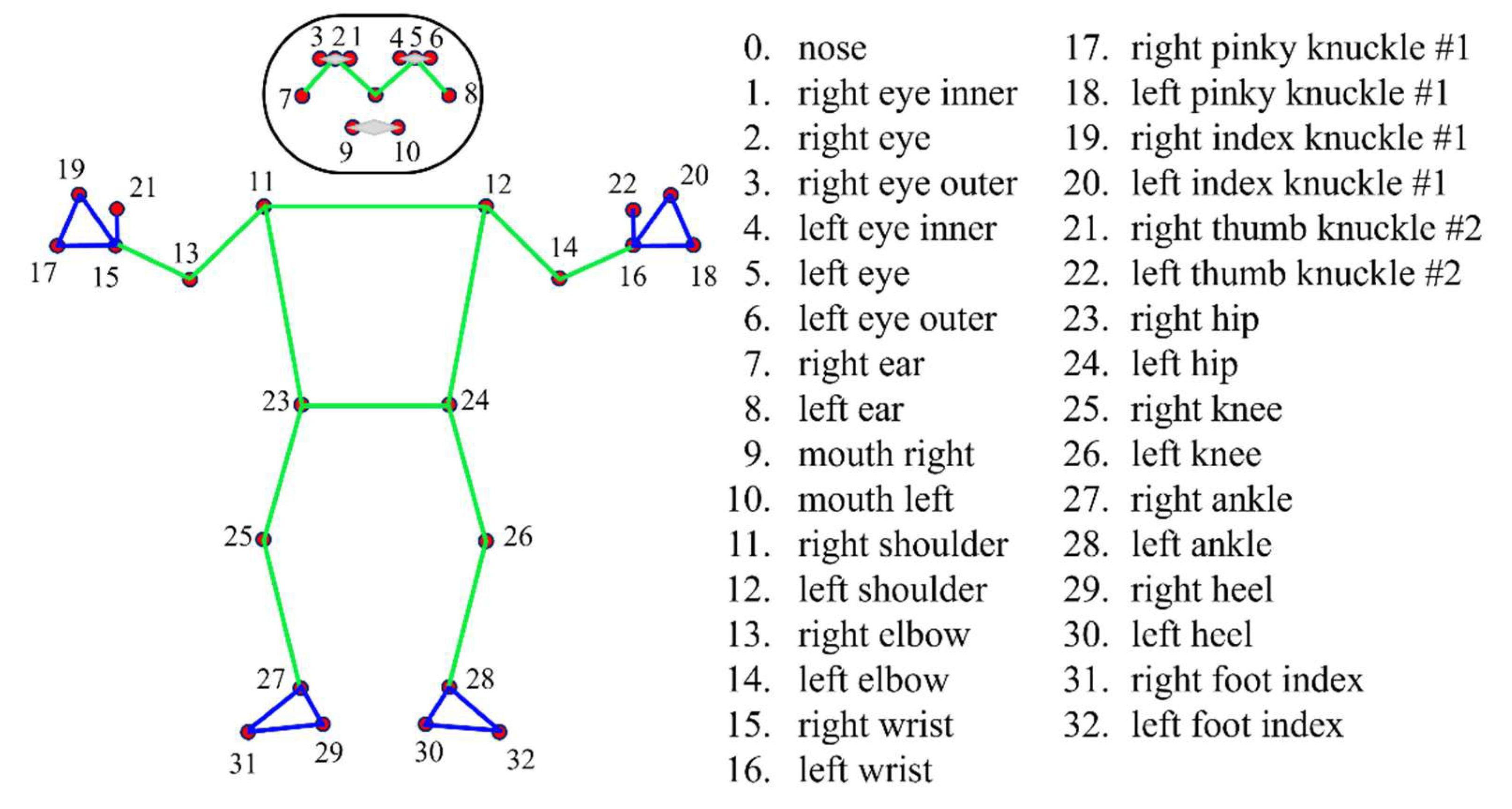

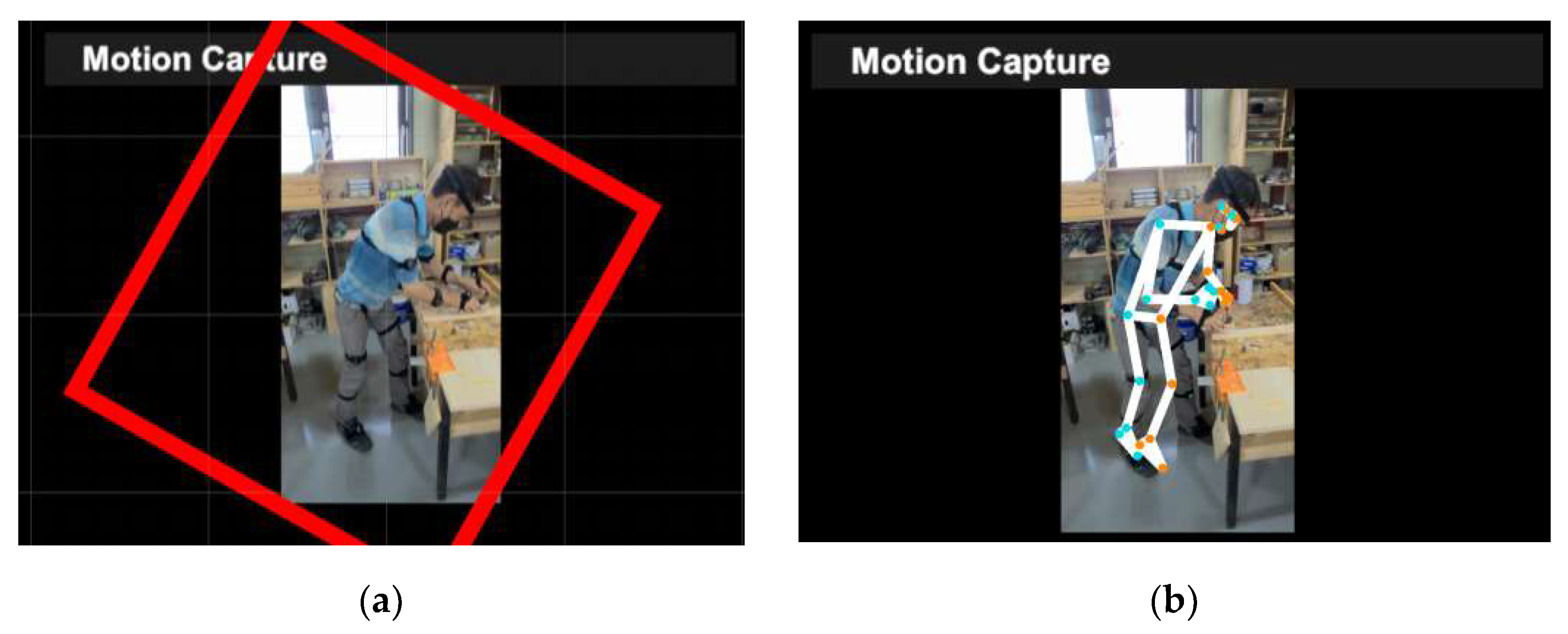

3.2. Joint Position Estimation

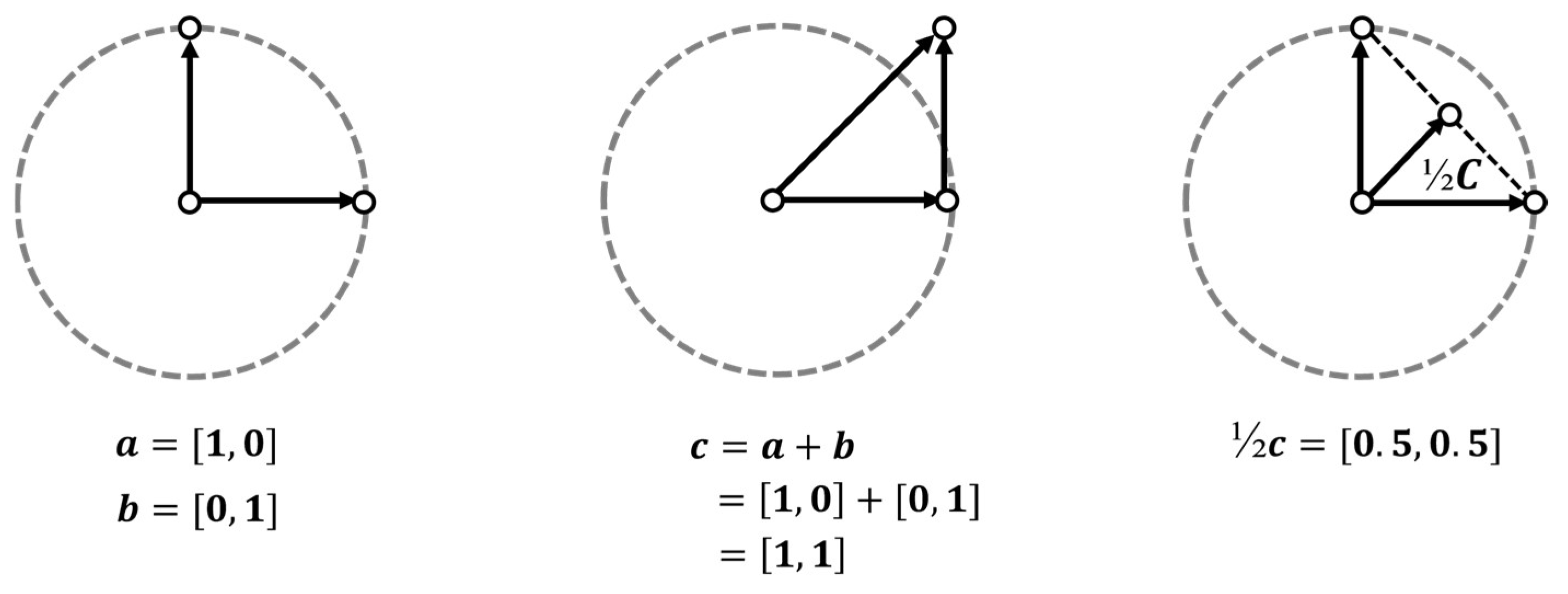

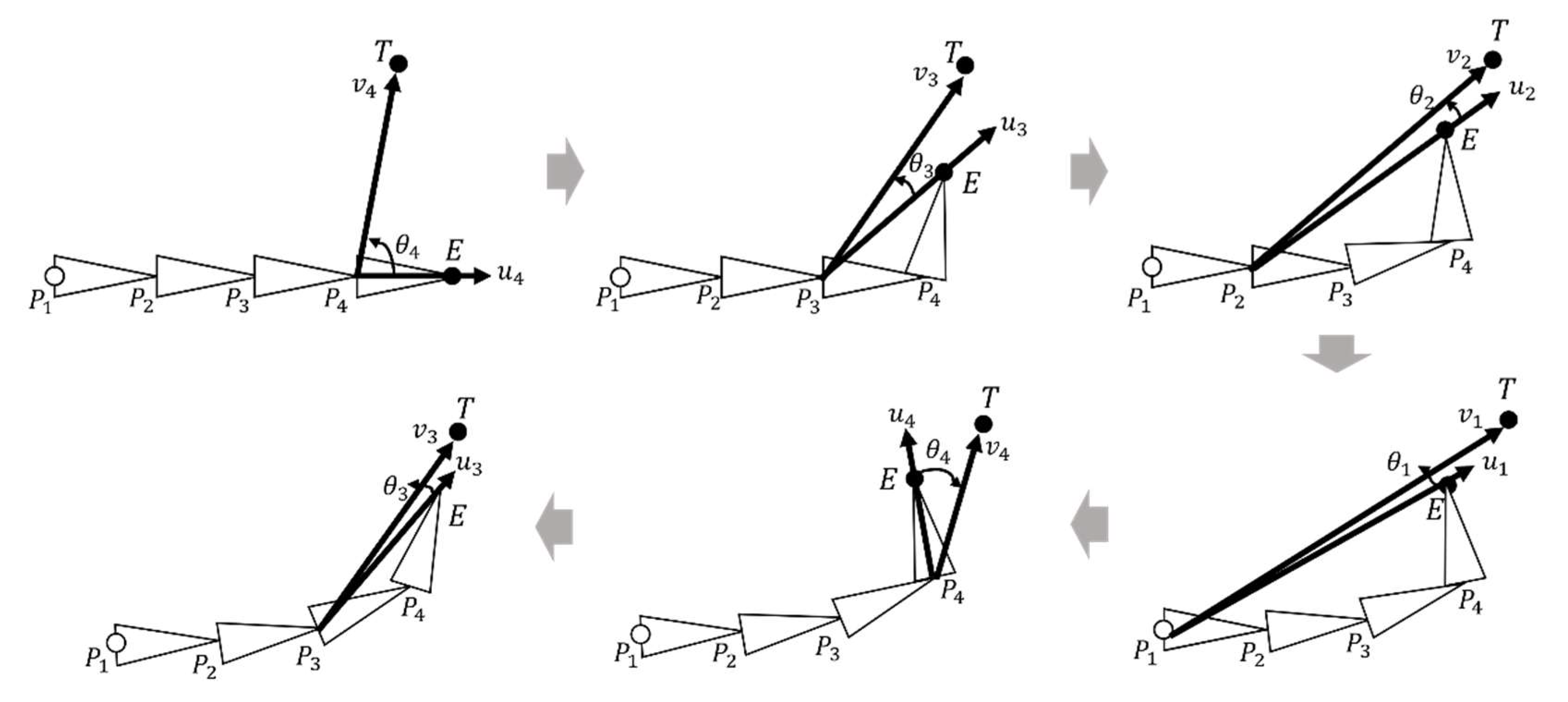

3.3. Calculation of the Angles of Joints Using Inverse Kinematics (IK)

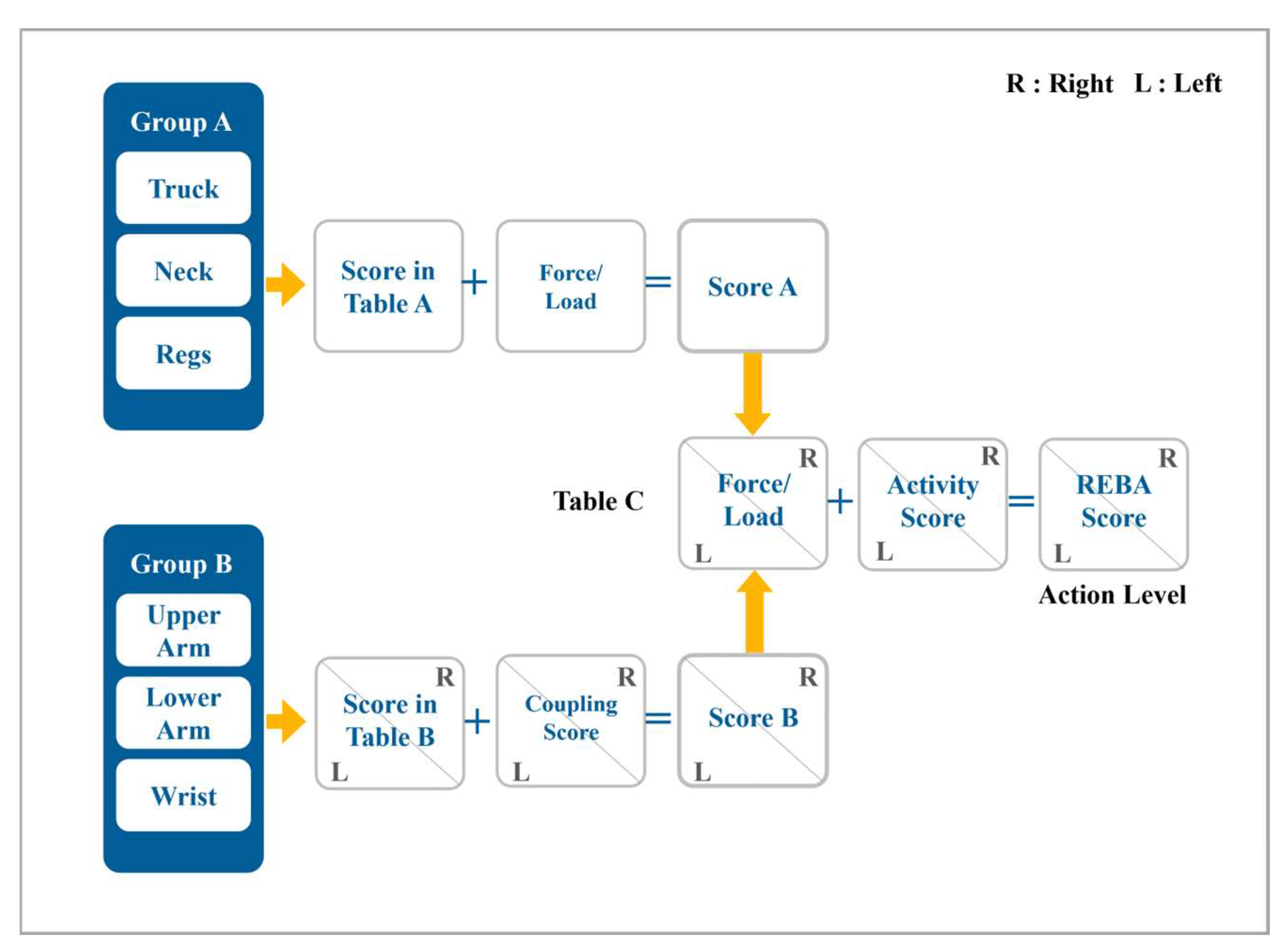

3.4. REBA Posture Evaluation Algorithm

3.4.1. Group A Evaluation

3.4.2. Group B Evaluation

3.4.3. Determination of the Overall Workload

4. Experiments and Results

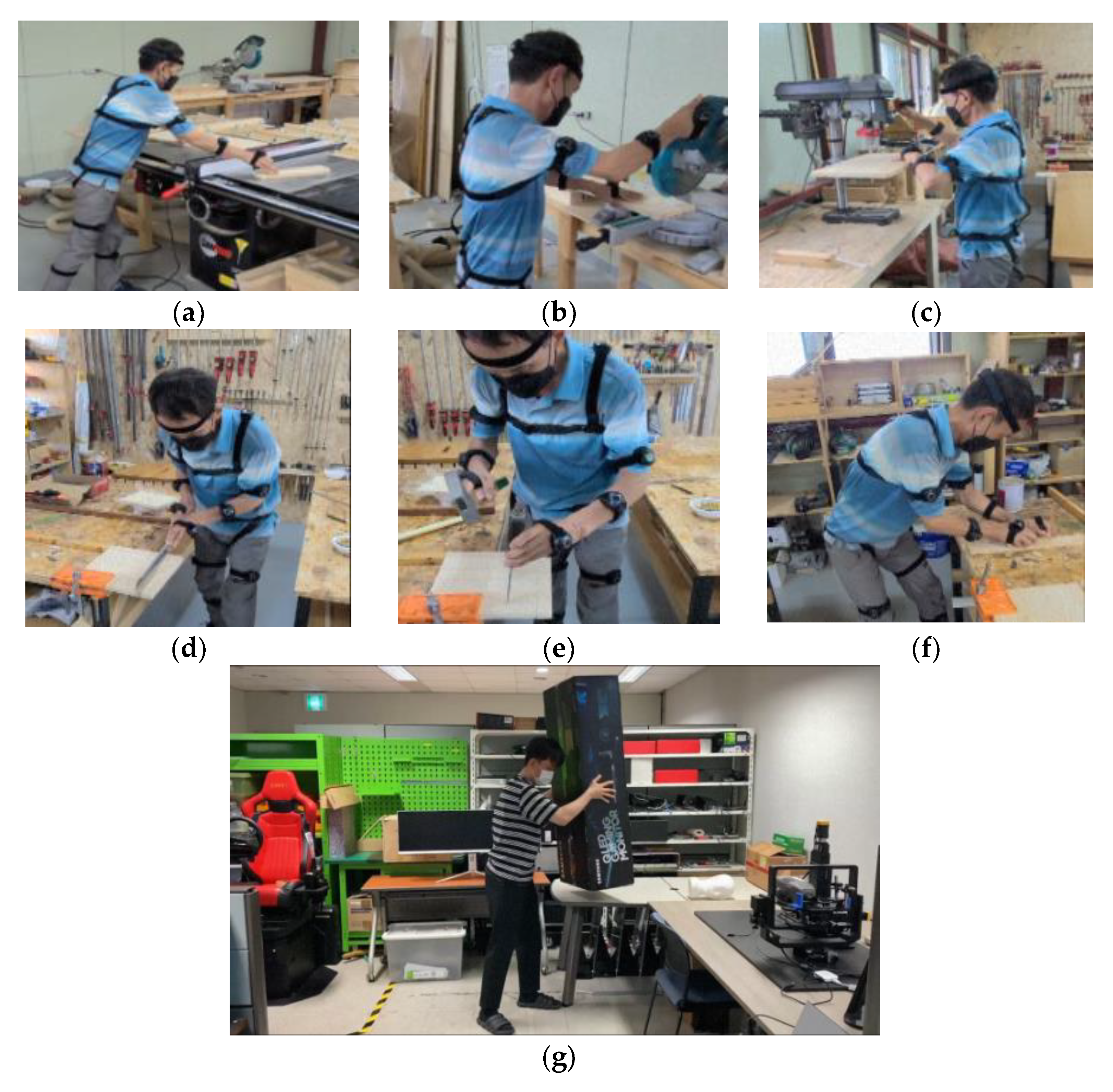

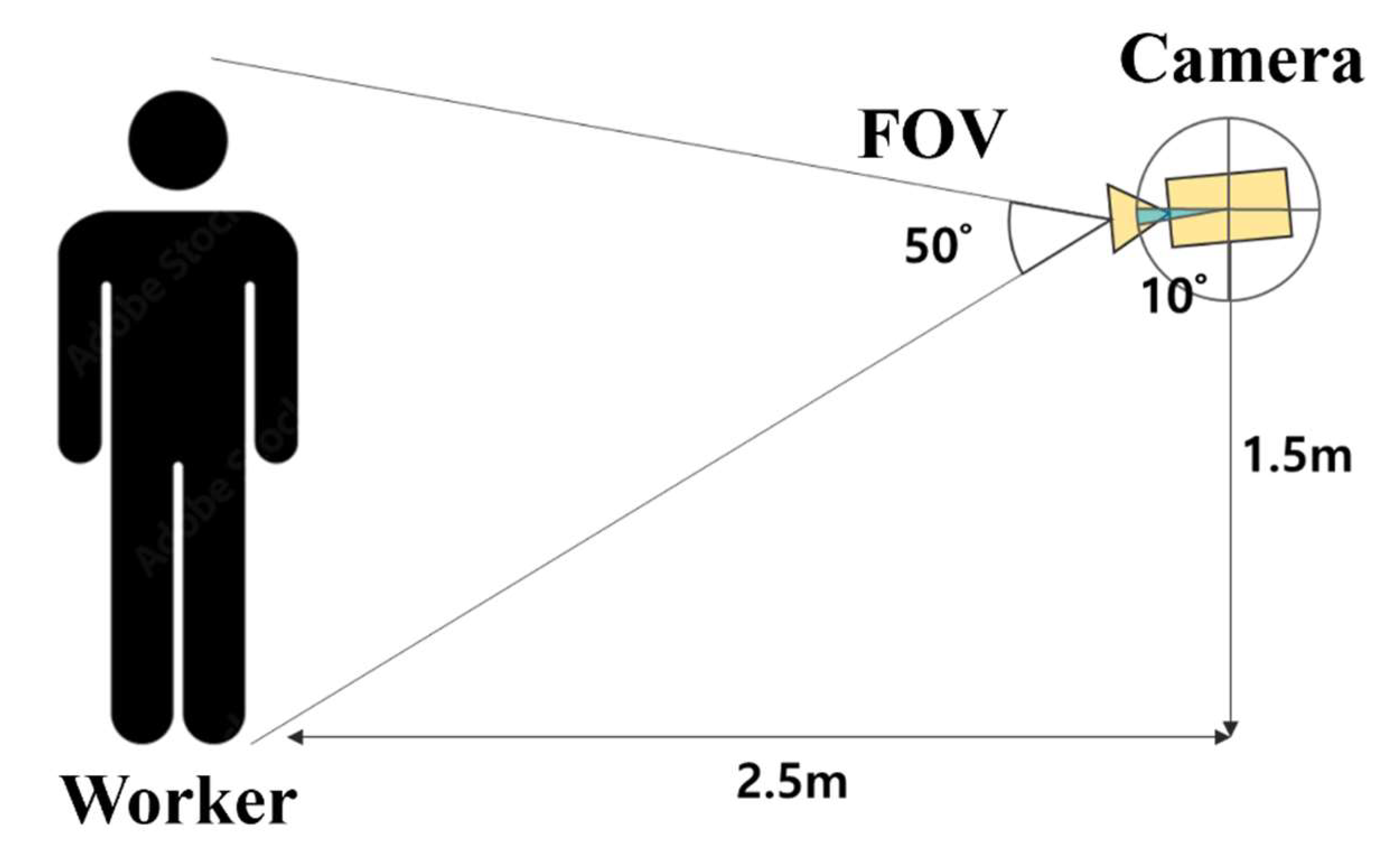

4.1. Experimental Environments

4.2. Evaluation Results by REBA Experts

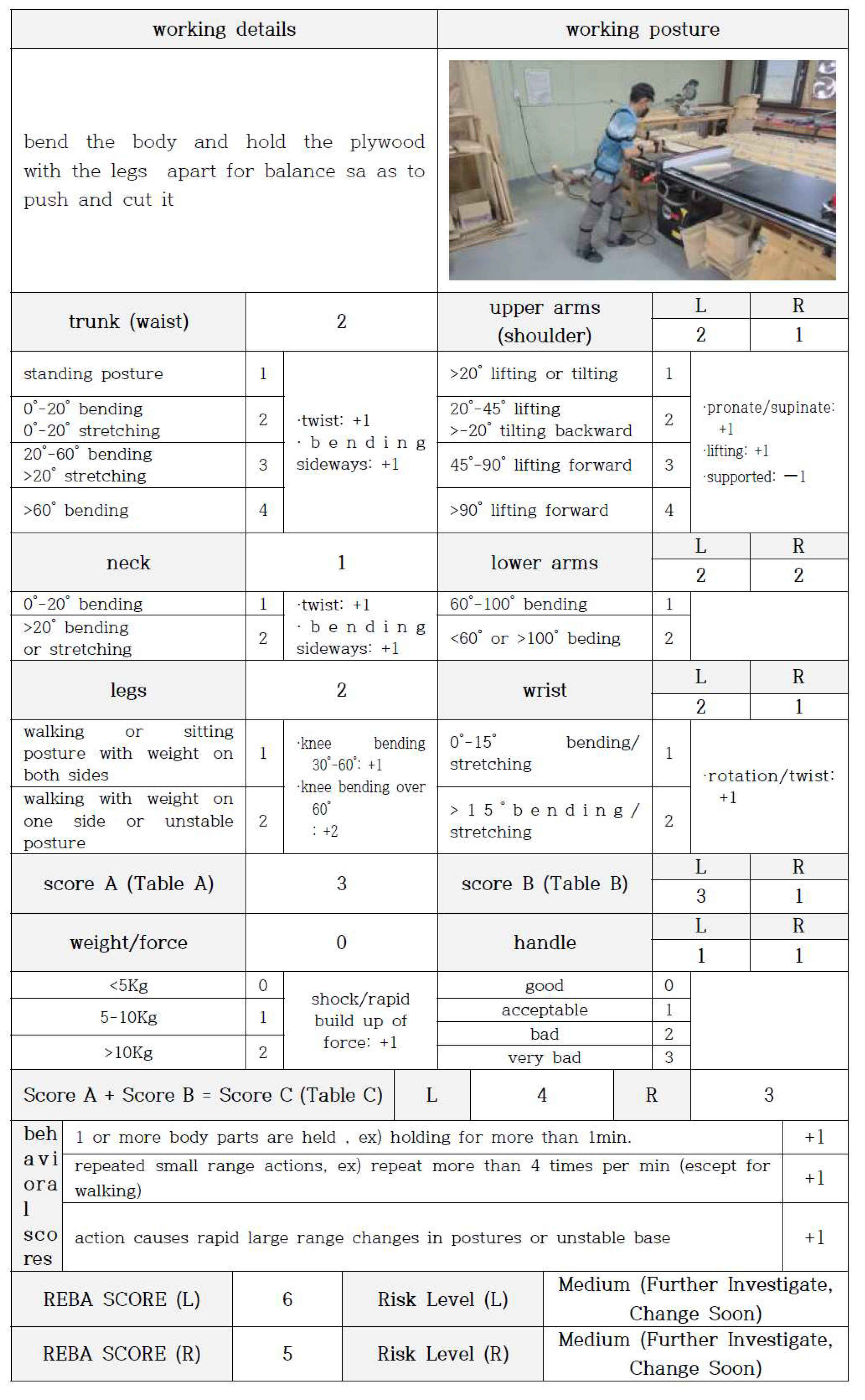

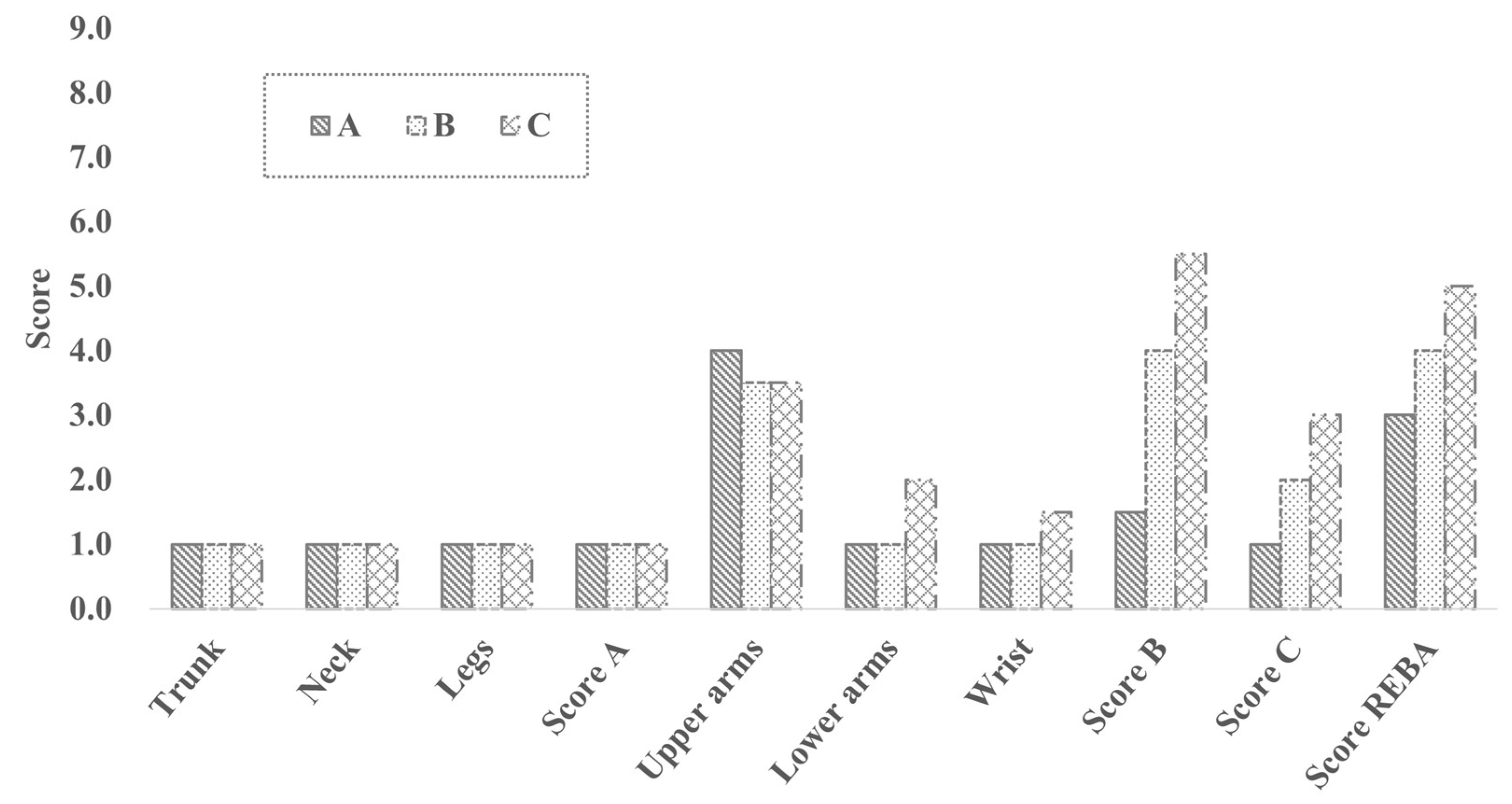

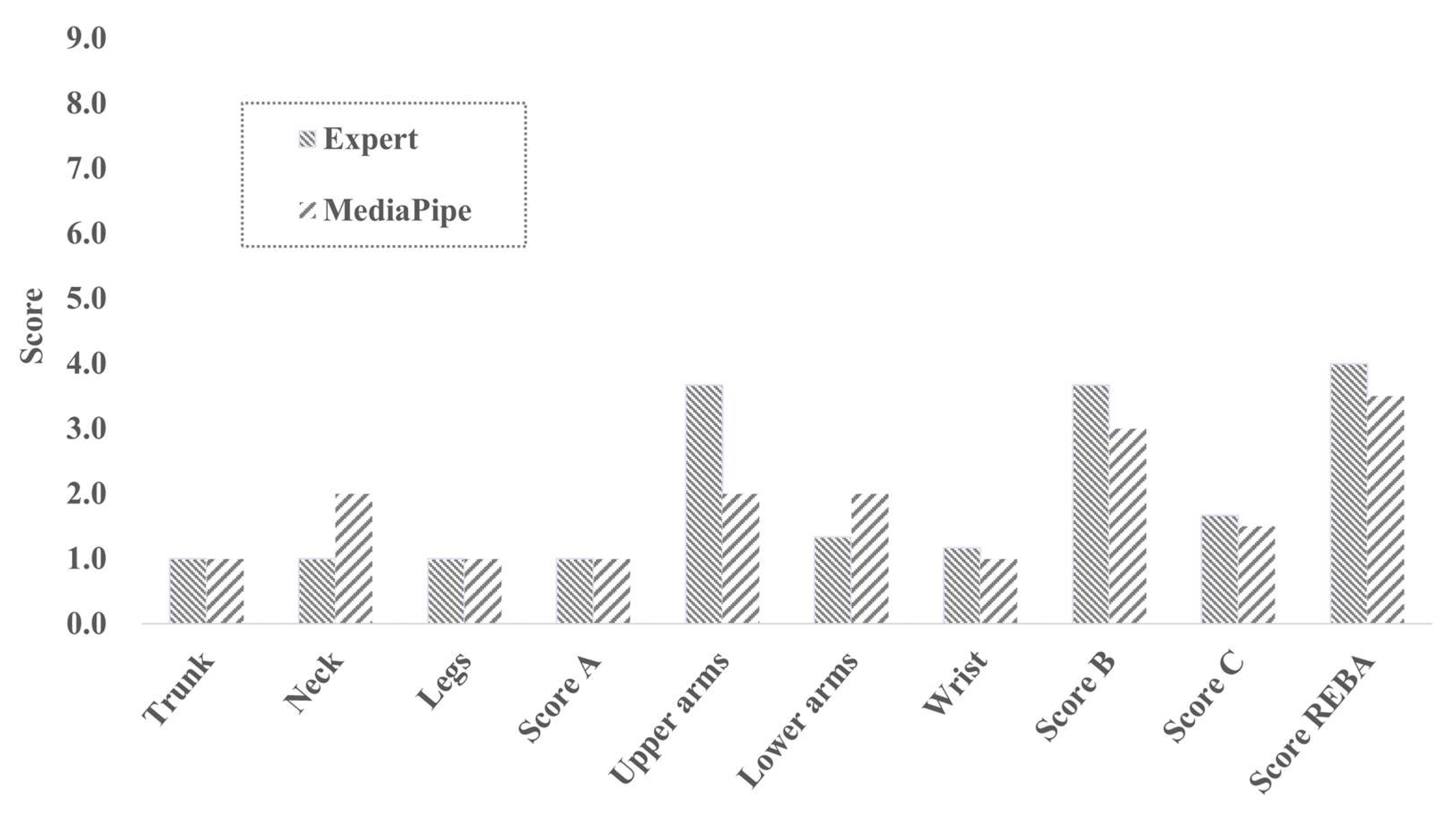

4.2.1. Evaluation of Cutting Machine Working Posture

4.2.2. Evaluation of Circular Saw Working Posture

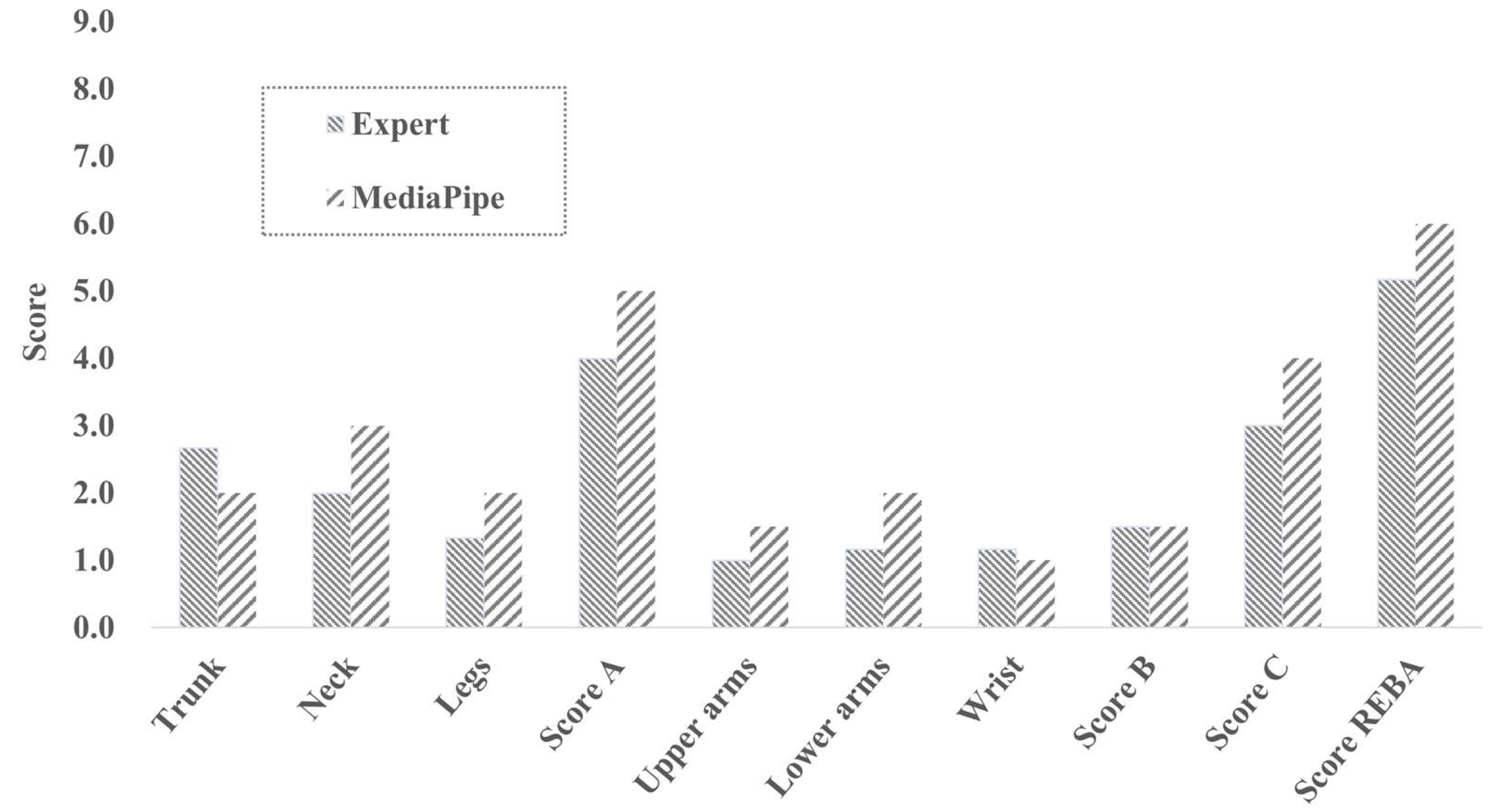

4.2.3. Evaluation of Drilling Machine Working Posture

4.2.4. Evaluation of Saw Working Posture

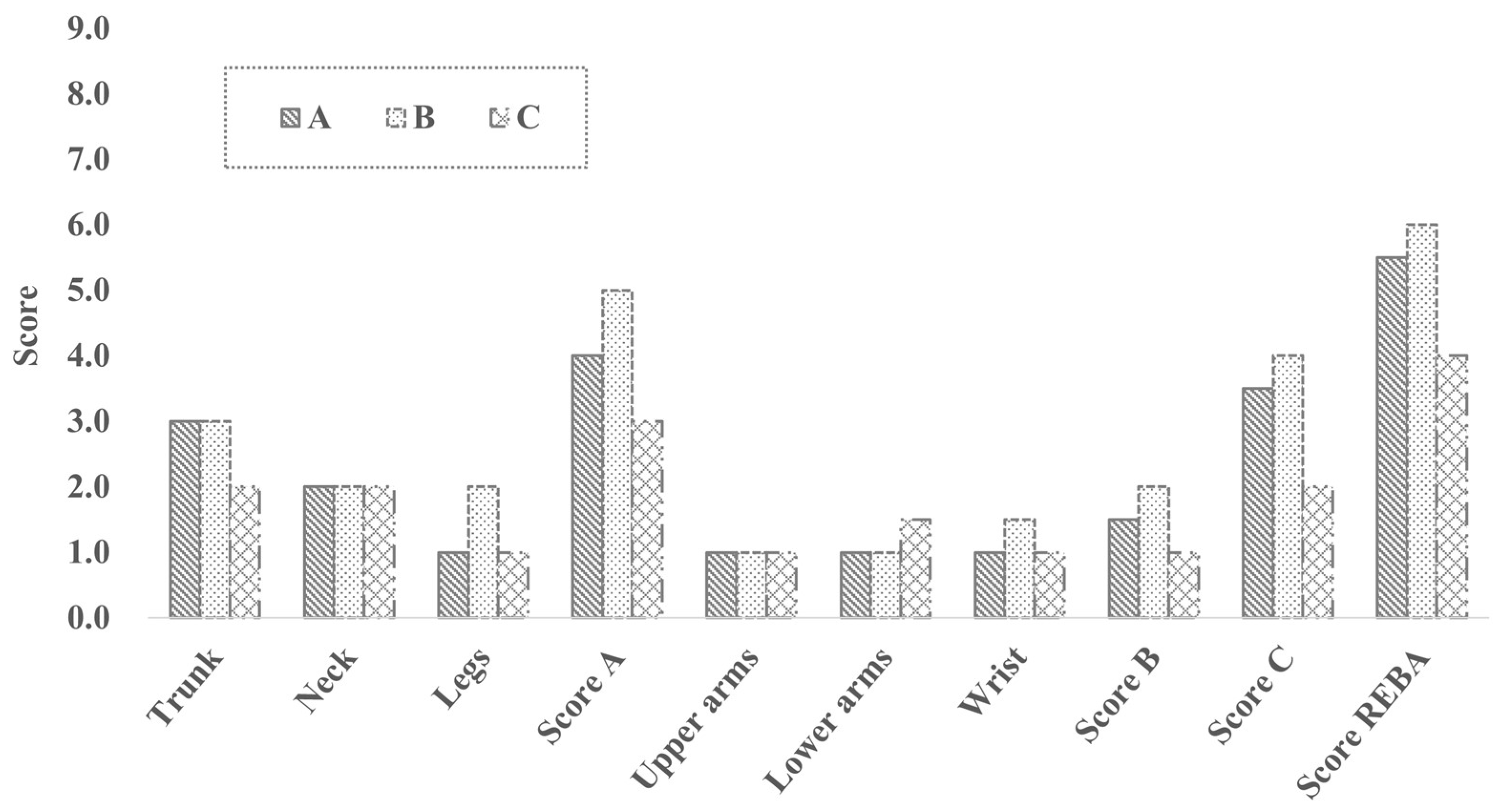

4.2.5. Evaluation of Chisel Working Posture

4.2.6. Evaluation of Planer Working Posture

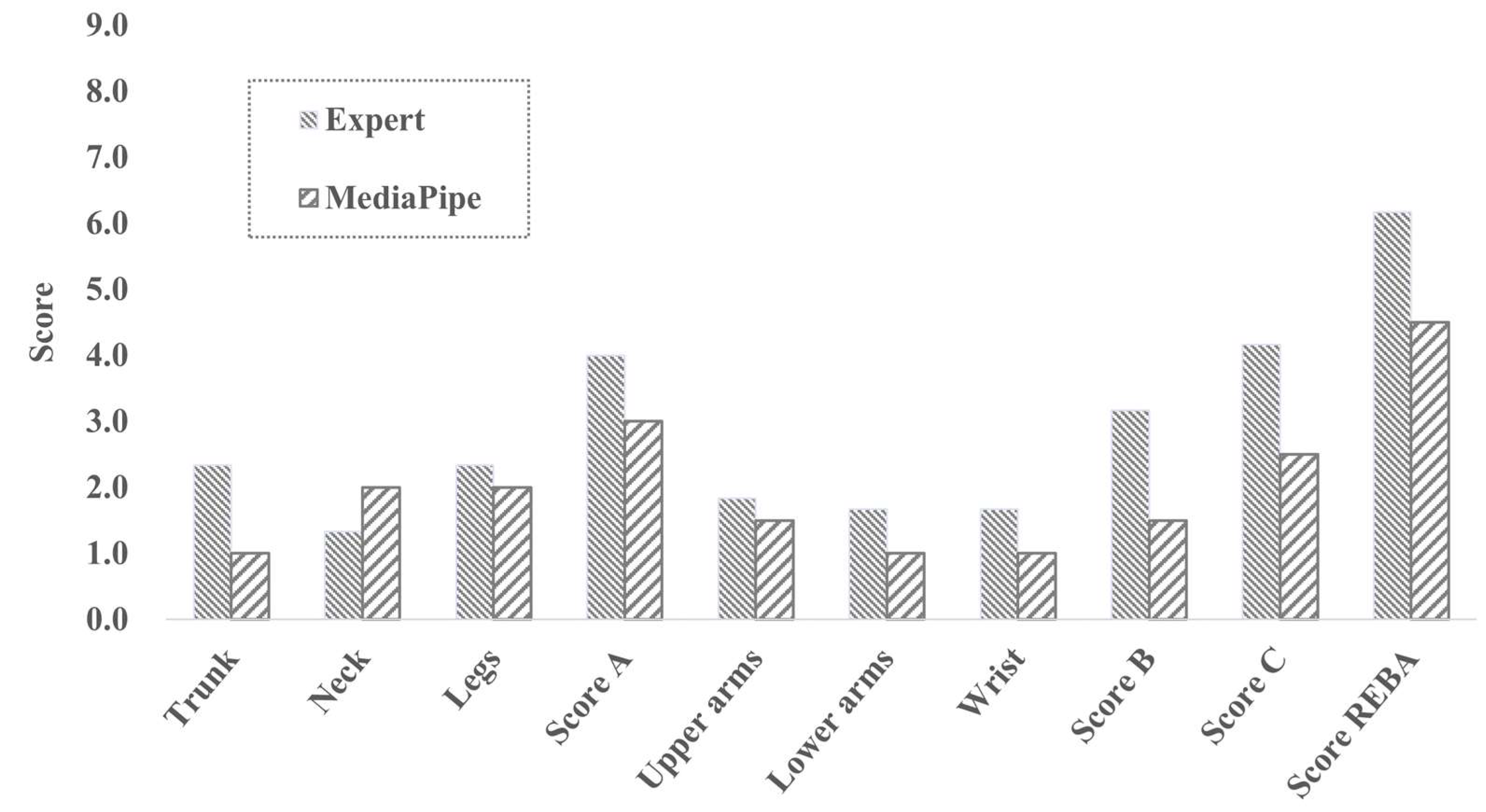

4.2.7. Evaluation of the Posture for Heavy Load Work

4.3. Evaluation Results by Computer-Based REBA

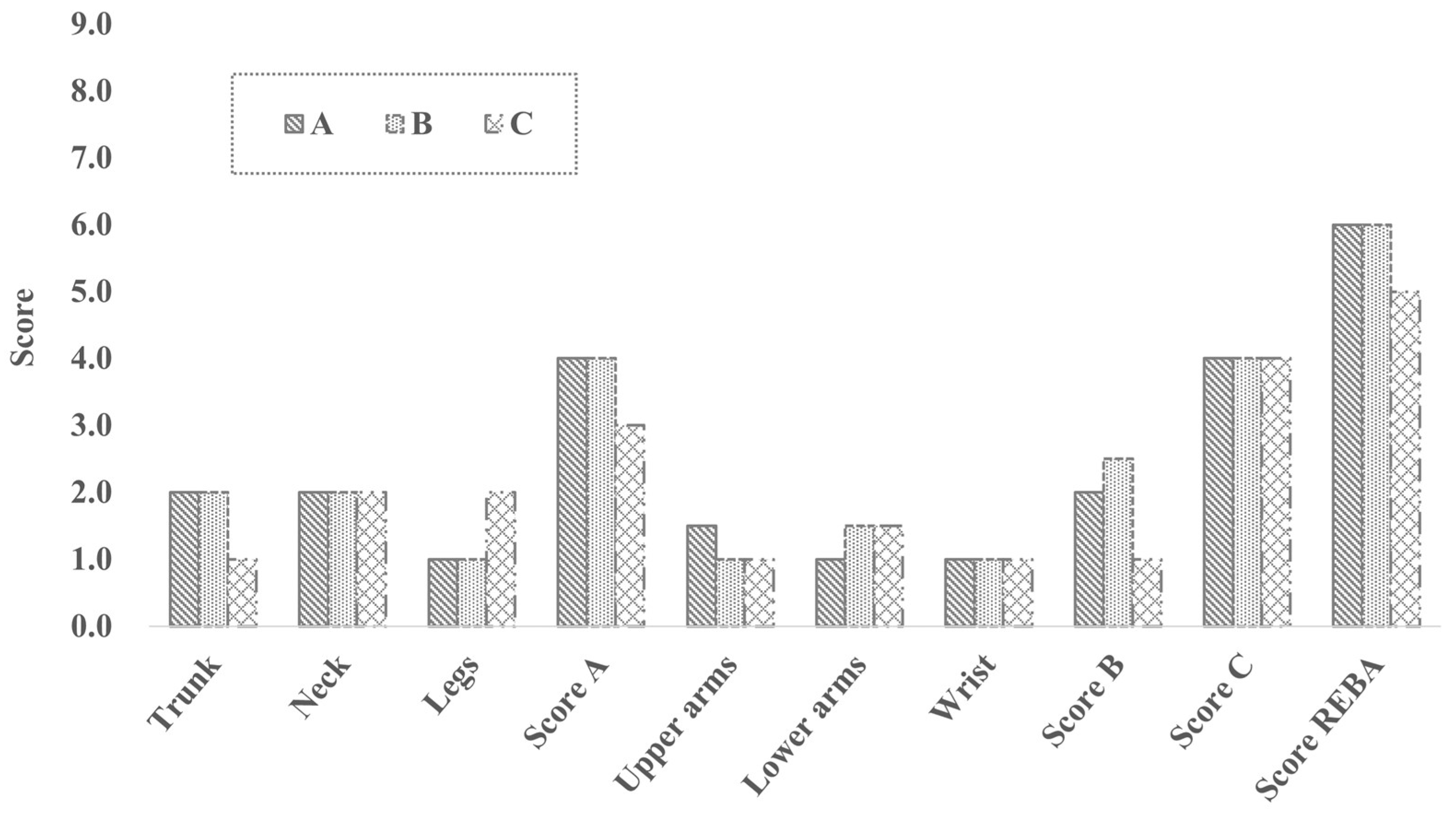

4.3.1. Evaluation of Cutting Machine Working Posture

4.3.2. Evaluation of Circular Saw Working Posture

4.3.3. Evaluation of Drilling Machine Working Posture

4.3.4. Evaluation of Saw Working Posture

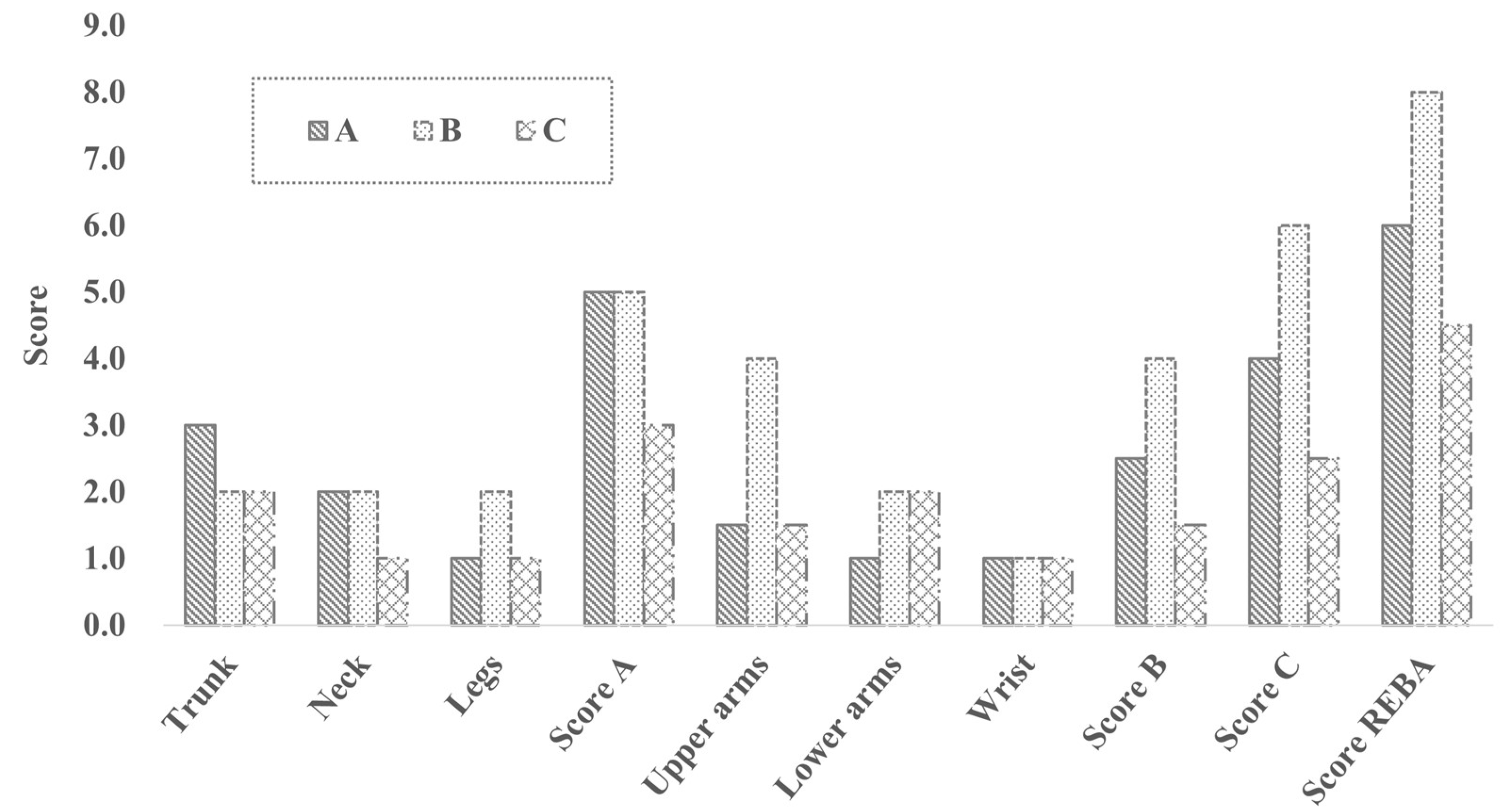

4.3.5. Evaluation of Chisel Working Posture

4.3.6. Evaluation of Planer Working Posture

4.3.7. Evaluation of the Posture for Heavy Load Work

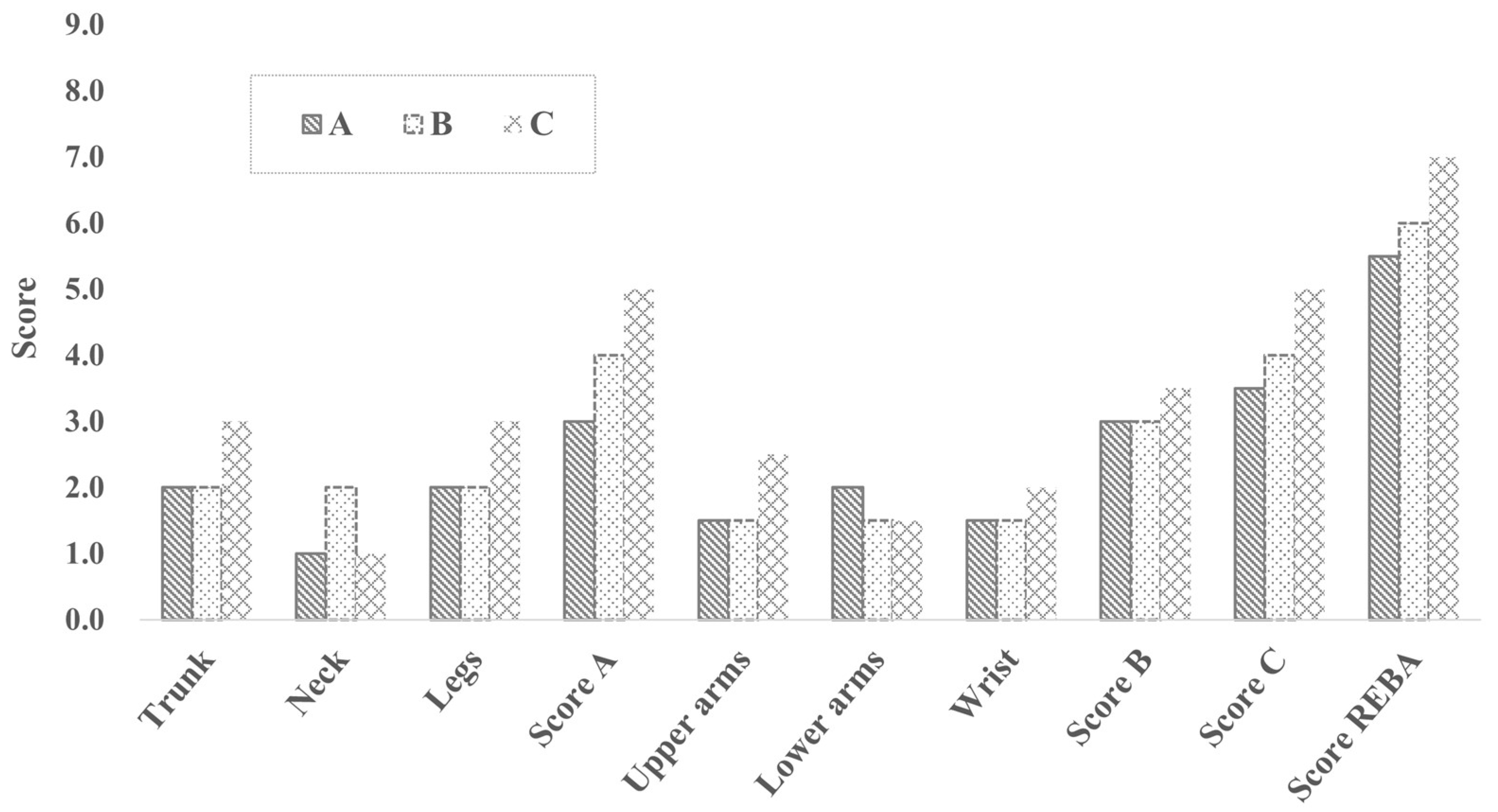

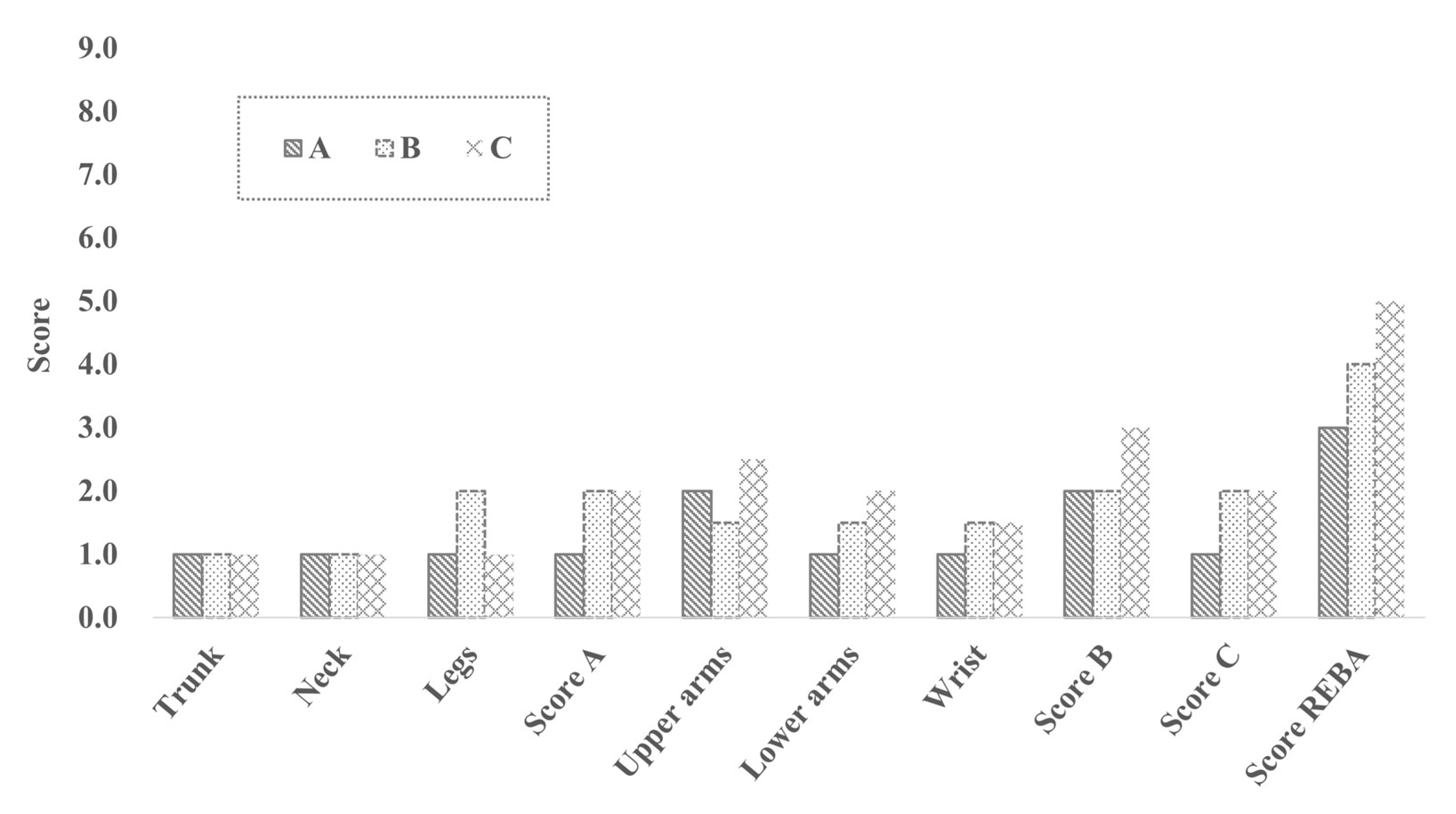

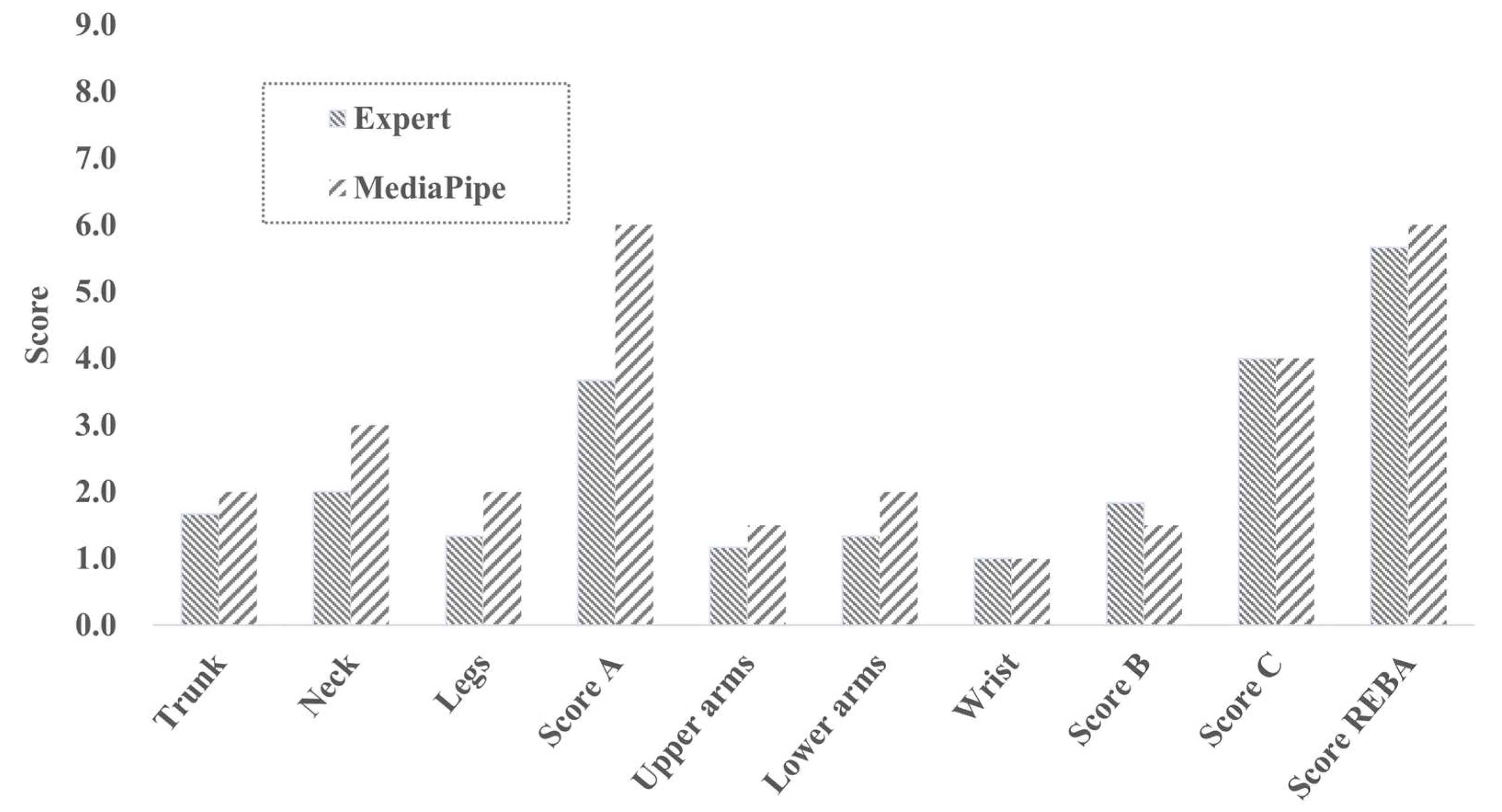

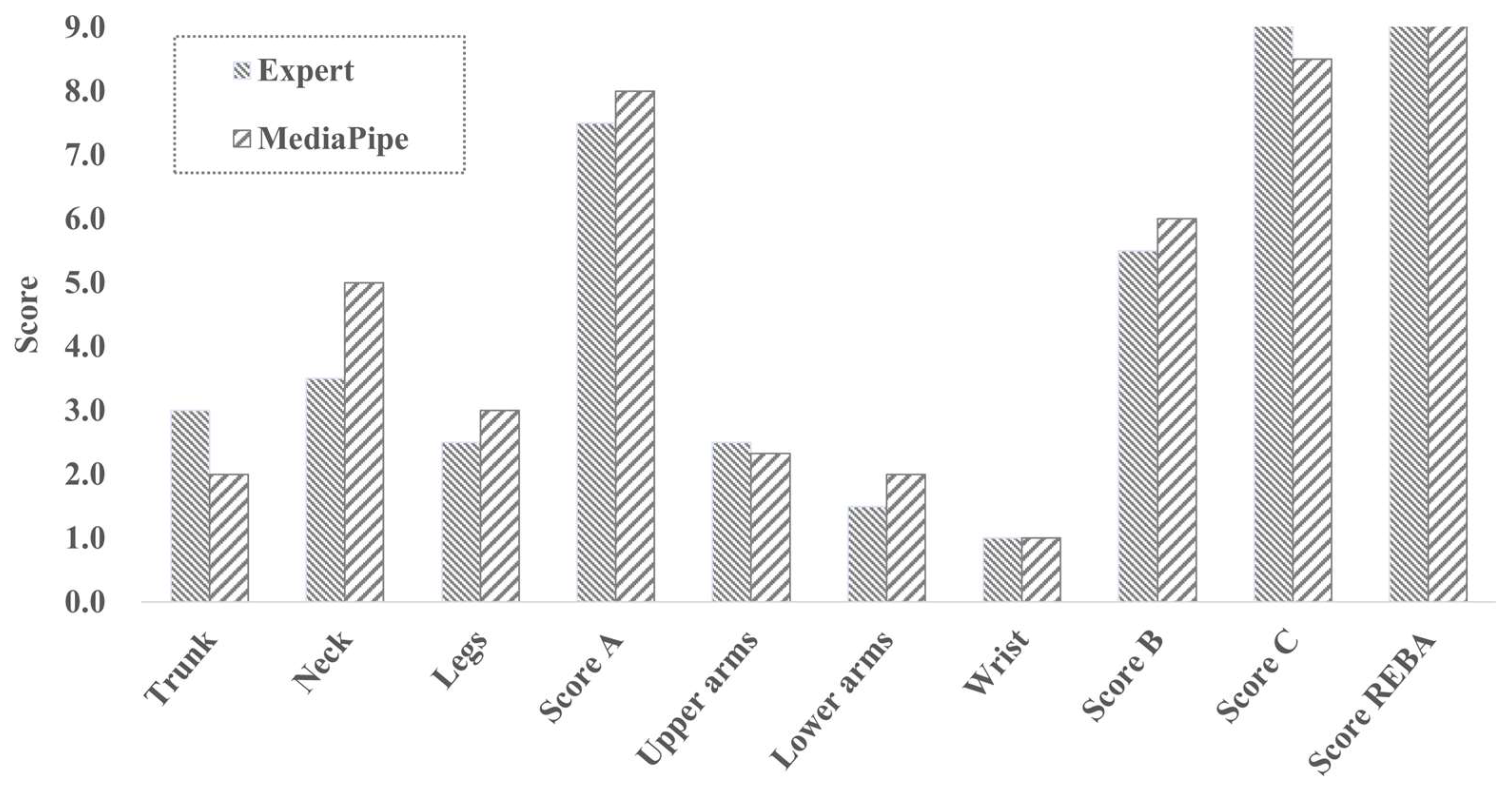

4.4. Comparison of Evaluation Results

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Evaluation Tool | Characteristics | Evaluation Reliability Standard Deviation t (160) |
|---|---|---|
| OWAS | Easy and simple to apply to the field quickly; difficulty in detailed analysis due to its oversimplification | trunk: 0.96; lower arms: 0.95; upper arms: 0.17; weight: 0.14 |
| RULA | Measures the overall workload, but evaluation is focused on the upper limbs; evaluation accuracy has the highest reliability among the three | Score A: 1.13; Score B: 1.28; trunk: 1.35; neck: 1.35; legs: 0.49; upper arms: 0.86; forearm: 0.73; wrist: 0.90; wrist twist: 0.26 |
| REBA | Compensates for the shortcomings of RULA, which is confined to the upper limbs; improves the body load measurement | Score A: 1.44; Score B: 1.71; trunk: 0.73; neck: 0.70; lower arms: 0.83; upper arms: 0.82; forearm: 0.50; wrist: 0.65 |

| Model | FPS | AR Dataset, PCK@0.2 | Yoga Dataset, PCK@0.2 |
|---|---|---|---|
| OpenPose (CPU) | 0.4 | 87.8 | 83.4 |
| BlazePose Full | 10 | 84.1 | 84.5 |
| BlazePose Lite | 31 | 79.6 | 77.6 |

| MediaPipe (CPU) | OpenPose (CPU) | OpenPose (GPU) |
|---|---|---|
| 30.0 fps | 0.3 fps | 10.0 fps |

| Index | Working Posture |
|---|---|
| 1 | Upright posture |
| 2 | 0~20° bending or 0~20° reclining |
| 3 | 20~60° bending or more than 20° reclining |
| 4 | More than 60° bending |
| +1 | Trunk is twisted or bent sideways |

| Index | Working Posture |
|---|---|
| 1 | 0~20° bending |
| 2 | More than 20° bending |
| +1 | Neck is twisted or bent sideways |

| Index | Working Posture |
|---|---|
| 1 | Both legs kept side by side or walking/sitting |
| 2 | Only one foot is supported on the ground |
| +1 | Knee bent 30° to 60° |
| +2 | Knee bent more than 60° |
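The three tables above (trunk, neck, legs) map a posture to a part score. A minimal sketch of that mapping follows, under several assumptions that the tables do not state: angles are in degrees, trunk angles are signed (positive for bending forward, negative for reclining), range boundaries are treated as inclusive on the lower score, and `upright_tol_deg` is a hypothetical tolerance for deciding what counts as upright. The function and parameter names are illustrative.

```python
def trunk_score(flexion_deg, twisted_or_side_bent=False, upright_tol_deg=0.0):
    """Trunk (waist) score; positive = bending forward, negative = reclining."""
    magnitude = abs(flexion_deg)
    if magnitude <= upright_tol_deg:
        score = 1                      # upright posture
    elif magnitude <= 20:
        score = 2                      # 0~20 deg bending or reclining
    elif flexion_deg < 0 or flexion_deg <= 60:
        score = 3                      # >20 deg reclining, or 20~60 deg bending
    else:
        score = 4                      # more than 60 deg bending
    return score + (1 if twisted_or_side_bent else 0)

def neck_score(bending_deg, twisted_or_side_bent=False):
    """Neck score for a non-negative bending angle, as listed in the table."""
    if bending_deg < 0:
        raise ValueError("the table above only lists neck bending of 0 deg or more")
    score = 1 if bending_deg <= 20 else 2
    return score + (1 if twisted_or_side_bent else 0)

def leg_score(one_foot_supported, knee_bend_deg=0.0):
    """Leg score: base 1 or 2 for support, plus the knee-bend adjustment."""
    score = 2 if one_foot_supported else 1
    if knee_bend_deg > 60:
        score += 2
    elif knee_bend_deg >= 30:
        score += 1
    return score

print(trunk_score(45.0), neck_score(25.0), leg_score(False, 40.0))
```

In practice the upright tolerance would need to be chosen to absorb pose-estimation noise; the default of zero is only a placeholder.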

| Neck | Legs | Waist 1 | Waist 2 | Waist 3 | Waist 4 | Waist 5 |
|---|---|---|---|---|---|---|
| 1 | 1 | 1 | 2 | 2 | 3 | 4 |
| 1 | 2 | 2 | 3 | 4 | 5 | 6 |
| 1 | 3 | 3 | 4 | 5 | 6 | 7 |
| 1 | 4 | 4 | 5 | 6 | 7 | 8 |
| 2 | 1 | 1 | 3 | 4 | 5 | 6 |
| 2 | 2 | 2 | 4 | 5 | 6 | 7 |
| 2 | 3 | 3 | 5 | 6 | 7 | 8 |
| 2 | 4 | 4 | 6 | 7 | 8 | 9 |
| 3 | 1 | 3 | 4 | 5 | 6 | 7 |
| 3 | 2 | 3 | 5 | 6 | 7 | 8 |
| 3 | 3 | 5 | 6 | 7 | 8 | 9 |
| 3 | 4 | 6 | 7 | 8 | 9 | 9 |

| <5 kg | 5–10 kg | >10 kg | Shock or Sudden Force |
|---|---|---|---|
| 0 | 1 | 2 | +1 |
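Score A can be read off the neck/legs/waist table and then adjusted by the load table. The sketch below transcribes the values from the two tables above into a lookup. The names `TABLE_A`, `load_score`, and `score_a` are hypothetical, and the handling of a load of exactly 5 kg or 10 kg (both placed in the 5–10 kg class) is an assumption.

```python
# TABLE_A[neck][legs - 1][waist - 1], transcribed from the table above.
TABLE_A = {
    1: [[1, 2, 2, 3, 4], [2, 3, 4, 5, 6], [3, 4, 5, 6, 7], [4, 5, 6, 7, 8]],
    2: [[1, 3, 4, 5, 6], [2, 4, 5, 6, 7], [3, 5, 6, 7, 8], [4, 6, 7, 8, 9]],
    3: [[3, 4, 5, 6, 7], [3, 5, 6, 7, 8], [5, 6, 7, 8, 9], [6, 7, 8, 9, 9]],
}

def load_score(weight_kg, shock_or_sudden_force=False):
    """Load/force adjustment: 0, 1, or 2 by weight, plus 1 for shock."""
    if weight_kg < 5:
        score = 0
    elif weight_kg <= 10:
        score = 1
    else:
        score = 2
    return score + (1 if shock_or_sudden_force else 0)

def score_a(waist, neck, legs, weight_kg=0.0, shock_or_sudden_force=False):
    """Look up the posture score for group A and add the load adjustment."""
    if neck not in TABLE_A or not 1 <= legs <= 4 or not 1 <= waist <= 5:
        raise ValueError("part score outside the range covered by the table")
    return TABLE_A[neck][legs - 1][waist - 1] + load_score(weight_kg, shock_or_sudden_force)

print(score_a(waist=3, neck=2, legs=2, weight_kg=12.0, shock_or_sudden_force=True))
```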

| Index | Working Posture |
|---|---|
| 1 | Up to 20° reclining or up to 20° lifting forward |
| 2 | Reclining more than 20° or 20~45° lifting forward |
| 3 | 45~90° lifting forward |
| 4 | More than 90° lifting forward |
| +1 | Upper arm is stretched or rotated |
| +1 | Shoulders lifted |
| -1 | Arm is supported or leaned on something |

| Index | Working Posture |
|---|---|
| 1 | 60~100° lifting |
| 2 | More than 100° lifting or 0~60° lifting |

| Index | Working Posture |
|---|---|
| 1 | 0~15° bending or lifting |
| 2 | More than 15° bending or lifting |
| +1 | Wrist is twisted |
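The upper arm, lower arm, and wrist tables above can be expressed the same way. The sketch below is illustrative only and assumes signed upper-arm angles (positive for lifting forward, negative for reclining), degrees as the unit, and inclusive boundaries on the lower score; none of these conventions are stated in the tables. All function and parameter names are hypothetical.

```python
def upper_arm_score(lift_deg, stretched_or_rotated=False,
                    shoulder_lifted=False, supported=False):
    """Upper arm score; positive = lifting forward, negative = reclining."""
    if abs(lift_deg) <= 20:
        score = 1
    elif lift_deg < 0 or lift_deg <= 45:
        score = 2                      # >20 deg reclining, or 20~45 deg forward
    elif lift_deg <= 90:
        score = 3
    else:
        score = 4
    score += 1 if stretched_or_rotated else 0
    score += 1 if shoulder_lifted else 0
    score -= 1 if supported else 0
    return score

def lower_arm_score(lift_deg):
    """Lower arm score: 1 inside the 60~100 deg range, otherwise 2."""
    return 1 if 60 <= lift_deg <= 100 else 2

def wrist_score(bend_deg, twisted=False):
    """Wrist score from the magnitude of bending or lifting, plus twist."""
    score = 1 if abs(bend_deg) <= 15 else 2
    return score + (1 if twisted else 0)

print(upper_arm_score(60.0), lower_arm_score(80.0), wrist_score(-20.0, twisted=True))
```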

| Lower Arm | Wrist | Upper Arm (Shoulder) 1 | Upper Arm (Shoulder) 2 | Upper Arm (Shoulder) 3 | Upper Arm (Shoulder) 4 | Upper Arm (Shoulder) 5 |
|---|---|---|---|---|---|---|
| 1 | 1 | 1 | 2 | 2 | 3 | 4 |
| 1 | 2 | 2 | 3 | 4 | 5 | 6 |
| 1 | 3 | 3 | 4 | 5 | 6 | 7 |
| 2 | 1 | 1 | 3 | 4 | 5 | 6 |
| 2 | 2 | 2 | 4 | 5 | 6 | 7 |
| 2 | 3 | 3 | 5 | 6 | 7 | 8 |

| Good | Acceptable | Bad | Very Bad |
|---|---|---|---|
| With a strong and well-fixed handle located in the center of gravity | With acceptable handle or if a part of the object can be used like a handle | Not suitable to hold by hand even though it can be lifted or with an inappropriate handle | No handle or with a dangerous type of handle |
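Score B follows the same pattern as score A: a lookup in the lower arm/wrist/upper arm table, followed by a grip adjustment. The sketch below transcribes the lookup values exactly as printed in the table above; they should be checked against the original REBA worksheet before being relied on. The grip table lists categories without numbers, so the mapping of good, acceptable, bad, and very bad to 0, 1, 2, and 3 is an assumption. The names `TABLE_B`, `GRIP_SCORE`, and `score_b` are hypothetical.

```python
# TABLE_B[lower_arm][wrist - 1][upper_arm - 1], as printed in the table above.
TABLE_B = {
    1: [[1, 2, 2, 3, 4], [2, 3, 4, 5, 6], [3, 4, 5, 6, 7]],
    2: [[1, 3, 4, 5, 6], [2, 4, 5, 6, 7], [3, 5, 6, 7, 8]],
}

# Assumed numeric values for the four grip categories.
GRIP_SCORE = {"good": 0, "acceptable": 1, "bad": 2, "very bad": 3}

def score_b(upper_arm, lower_arm, wrist, grip="good"):
    """Look up the posture score for group B and add the grip adjustment."""
    if lower_arm not in TABLE_B or not 1 <= wrist <= 3 or not 1 <= upper_arm <= 5:
        raise ValueError("part score outside the range covered by the table")
    if grip not in GRIP_SCORE:
        raise ValueError(f"unknown grip category: {grip!r}")
    return TABLE_B[lower_arm][wrist - 1][upper_arm - 1] + GRIP_SCORE[grip]

print(score_b(upper_arm=3, lower_arm=1, wrist=2, grip="acceptable"))
```

The table as printed stops at an upper arm score of 5, so an upper arm score pushed higher by the +1 adjustments is rejected here rather than guessed.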

| Posture Score B (rows) / Posture Score A (columns) | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 1 | 1 | 2 | 3 | 4 | 6 | 7 | 8 | 9 | 10 | 11 | 12 |
| 2 | 1 | 2 | 3 | 4 | 4 | 6 | 7 | 8 | 9 | 10 | 11 | 12 |
| 3 | 1 | 2 | 3 | 4 | 4 | 6 | 7 | 8 | 9 | 10 | 11 | 12 |
| 4 | 2 | 3 | 3 | 4 | 5 | 7 | 8 | 9 | 10 | 11 | 11 | 12 |
| 5 | 3 | 4 | 4 | 5 | 6 | 8 | 9 | 10 | 10 | 11 | 12 | 12 |
| 6 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 10 | 11 | 12 | 12 |
| 7 | 4 | 5 | 6 | 7 | 8 | 9 | 9 | 10 | 11 | 11 | 12 | 12 |
| 8 | 5 | 6 | 7 | 8 | 8 | 9 | 10 | 10 | 11 | 12 | 12 | 12 |
| 9 | 6 | 6 | 7 | 8 | 9 | 10 | 10 | 10 | 11 | 12 | 12 | 12 |
| 10 | 7 | 7 | 8 | 9 | 9 | 10 | 11 | 11 | 12 | 12 | 12 | 12 |
| 11 | 7 | 7 | 8 | 9 | 9 | 10 | 11 | 11 | 12 | 12 | 12 | 12 |
| 12 | 7 | 8 | 8 | 9 | 9 | 10 | 11 | 11 | 12 | 12 | 12 | 12 |

| Index | Behavior Scores |
|---|---|
| +1 | If one or more body parts are held static (e.g., held for more than a minute) |
| +1 | Repetitive tasks in a narrow range (e.g., repeated more than 4 times per minute, except for walking) |
| +1 | Rapidly changing behavior over a wide range or unstable lower body posture |
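The score A/score B table and the behavior scores above combine into the final REBA score. A minimal sketch, assuming that score C is the table lookup and that each applicable behavior item adds one point; the names `TABLE_C` and `reba_score` and the three flag names are hypothetical.

```python
# TABLE_C[score_b - 1][score_a - 1], transcribed from the table above.
TABLE_C = [
    [1, 1, 2, 3, 4, 6, 7, 8, 9, 10, 11, 12],
    [1, 2, 3, 4, 4, 6, 7, 8, 9, 10, 11, 12],
    [1, 2, 3, 4, 4, 6, 7, 8, 9, 10, 11, 12],
    [2, 3, 3, 4, 5, 7, 8, 9, 10, 11, 11, 12],
    [3, 4, 4, 5, 6, 8, 9, 10, 10, 11, 12, 12],
    [3, 4, 5, 6, 7, 8, 9, 10, 10, 11, 12, 12],
    [4, 5, 6, 7, 8, 9, 9, 10, 11, 11, 12, 12],
    [5, 6, 7, 8, 8, 9, 10, 10, 11, 12, 12, 12],
    [6, 6, 7, 8, 9, 10, 10, 10, 11, 12, 12, 12],
    [7, 7, 8, 9, 9, 10, 11, 11, 12, 12, 12, 12],
    [7, 7, 8, 9, 9, 10, 11, 11, 12, 12, 12, 12],
    [7, 8, 8, 9, 9, 10, 11, 11, 12, 12, 12, 12],
]

def reba_score(score_a, score_b, held_static=False,
               repetitive=False, rapid_or_unstable=False):
    """Score C from the lookup table plus one point per behavior item."""
    if not 1 <= score_a <= 12 or not 1 <= score_b <= 12:
        raise ValueError("score A and score B must be between 1 and 12")
    score_c = TABLE_C[score_b - 1][score_a - 1]
    behavior = sum([held_static, repetitive, rapid_or_unstable])
    return score_c + behavior

print(reba_score(score_a=5, score_b=4, repetitive=True))
```

Because a supported upper arm can lower a part score, a caller may need to decide how to treat a score B below 1; this sketch simply rejects it.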

| Level | REBA Score | Risk Level | Measurement (Further Investigate) |
|---|---|---|---|
| 0 | 1 | Very low | No change |
| 1 | 2–3 | Low | Change may be needed |
| 2 | 4–7 | Medium | Change soon |
| 3 | 8–10 | High | Investigate and change soon |
| 4 | 11–15 | Very high | Implement change |
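The final step maps the REBA score to an action level. The sketch below encodes the table above as a list of ranges; the names `RISK_LEVELS` and `risk_level` are hypothetical, and only integer scores from 1 to 15 are handled.

```python
# (lowest score, highest score, level, risk level, measure), from the table above.
RISK_LEVELS = [
    (1, 1, 0, "Very low", "No change"),
    (2, 3, 1, "Low", "Change may be needed"),
    (4, 7, 2, "Medium", "Change soon"),
    (8, 10, 3, "High", "Investigate and change soon"),
    (11, 15, 4, "Very high", "Implement change"),
]

def risk_level(reba_score):
    """Return (level, risk level, measure) for an integer REBA score."""
    for low, high, level, risk, measure in RISK_LEVELS:
        if low <= reba_score <= high:
            return level, risk, measure
    raise ValueError("REBA score must be an integer between 1 and 15")

level, risk, measure = risk_level(9)
print(level, risk, measure)
```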

| Type | Data Size (MB) 1 | Minimum | Maximum | Average |
|---|---|---|---|---|
| Cutter | 6.51 | 1 | 1 | 2.6 |
| Circular saw | 4.98 | 1 | 1 | 2 |
| Drilling machine | 5.11 | 1.3 | 1 | 1.3 |
| Saw | 6.76 | 0.3 | 0 | 0 |
| Chisel | 6.16 | 1.6 | 1 | 4 |
| Planer | 4.93 | 1 | 2.6 | 1 |
| Heavy load | 8.68 | 1.3 | 1.3 | 1.3 |

| Group | Body Part | Cutter | Circular Saw | Drilling Machine | Saw | Chisel | Planer | Heavy Load |
|---|---|---|---|---|---|---|---|---|
| A | Waist | 2.3 | 1 | 1 | 2.6 | 1.6 | 2.3 | 3 |
| A | Neck | 1.3 | 1 | 1 | 2 | 2 | 1.6 | 3.6 |
| A | Legs | 2.3 | 1.3 | 1 | 1.3 | 1 | 1.3 | 2.3 |
| A | Weight | 0 | 0.3 | 0 | 0 | 1 | 1 | 2 |
| A | Score A | 4 | 1.6 | 1 | 4 | 3.3 | 3.6 | 7.3 |
| B (Left) | Upper Arms | 2 | 1 | 2.6 | 1 | 0.6 | 2.6 | 3 |
| B (Left) | Lower Arms | 1.6 | 1.3 | 1.3 | 1.3 | 1 | 1.6 | 1 |
| B (Left) | Wrist | 2 | 1 | 1 | 1.3 | 1 | 1 | 1 |
| B (Left) | Handle | 1.3 | 1 | 1 | 1.3 | 0.6 | 1.3 | 3 |
| B (Left) | Score B (Left) | 4 | 2 | 3.6 | 0.6 | 1.6 | 4 | 3 |
| B (Right) | Upper Arms | 1.6 | 3 | 3.3 | 1 | 1.6 | 2.3 | 3 |
| B (Right) | Lower Arms | 1.6 | 1.6 | 1.3 | 1 | 1.6 | 1.6 | 1 |
| B (Right) | Wrist | 2 | 1.6 | 1.3 | 1 | 1 | 1 | 1 |
| B (Right) | Handle | 0.6 | 0 | 0 | 0 | 0.6 | 1 | 3 |
| B (Right) | Score B (Right) | 2.3 | 4 | 3.6 | 1 | 2 | 3.3 | 3 |
| REBA Score | Left | 6.6 | 3.6 | 3.6 | 5.3 | 5 | 7 | 13 |
| REBA Score | Right | 5.6 | 4.3 | 4 | 5 | 5 | 6.6 | 13 |

| Group | Body Part | Cutter | Circular Saw | Drilling Machine | Saw | Chisel | Planer | Heavy Load |
|---|---|---|---|---|---|---|---|---|
| A | Waist | 1 | 1 | 1 | 2 | 2 | 2 | 2 |
| A | Neck | 3 | 2 | 2 | 3 | 3 | 3 | 5 |
| A | Legs | 2 | 1 | 1 | 2 | 2 | 2 | 3 |
| A | Weight | 0 | 1 | 0 | 0 | 1 | 0 | 2 |
| A | Score A | 3 | 2 | 1 | 5 | 6 | 5 | 8 |
| B (Left) | Upper Arms | 1 | 1 | 1 | 1 | 1 | 1 | 2 |
| B (Left) | Lower Arms | 1 | 1 | 2 | 2 | 2 | 1 | 2 |
| B (Left) | Wrist | 1 | 1 | 1 | 1 | 1 | 1 | 1 |
| B (Left) | Handle | 1 | 1 | 1 | 0 | 0 | 1 | 2 |
| B (Left) | Score B (Left) | 2 | 2 | 2 | 1 | 1 | 2 | 5 |
| B (Right) | Upper Arms | 2 | 3 | 3 | 2 | 2 | 2 | 3 |
| B (Right) | Lower Arms | 1 | 1 | 2 | 2 | 2 | 1 | 2 |
| B (Right) | Wrist | 1 | 1 | 1 | 1 | 1 | 1 | 1 |
| B (Right) | Handle | 0 | 0 | 0 | 0 | 0 | 1 | 2 |
| B (Right) | Score B (Right) | 1 | 3 | 4 | 2 | 2 | 2 | 7 |
| REBA Score | Left | 5 | 3 | 3 | 6 | 6 | 6 | 10 |
| REBA Score | Right | 4 | 3 | 4 | 6 | 6 | 6 | 11 |
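One simple way to compare the two result tables above is the absolute difference between their REBA scores for each task. The sketch below copies the REBA Score rows from both tables. It assumes that the first table (fractional values) holds the averaged expert evaluation and the second (integer values) holds the computer-based evaluation, which the tables themselves do not state. The variable and function names are hypothetical, and the paper may use a different comparison metric.

```python
tasks = ["Cutter", "Circular saw", "Drilling machine", "Saw",
         "Chisel", "Planer", "Heavy load"]

# REBA Score rows copied from the two tables above (assumed assignment).
expert = {"left": [6.6, 3.6, 3.6, 5.3, 5, 7, 13],
          "right": [5.6, 4.3, 4, 5, 5, 6.6, 13]}
system = {"left": [5, 3, 3, 6, 6, 6, 10],
          "right": [4, 3, 4, 6, 6, 6, 11]}

def abs_differences(reference, measured):
    """Element-wise absolute difference between two equally long score lists."""
    if len(reference) != len(measured):
        raise ValueError("score lists must have the same length")
    return [abs(r - m) for r, m in zip(reference, measured)]

def mean(values):
    """Arithmetic mean of a non-empty list."""
    return sum(values) / len(values)

for side in ("left", "right"):
    diffs = abs_differences(expert[side], system[side])
    for task, diff in zip(tasks, diffs):
        print(f"{side:5s} {task:16s} |difference| = {diff:.1f}")
    print(f"{side:5s} mean absolute difference = {mean(diffs):.2f}")
```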

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Jeong, S.-o.; Kook, J. CREBAS: Computer-Based REBA Evaluation System for Wood Manufacturers Using MediaPipe. Appl. Sci. 2023, 13, 938. https://doi.org/10.3390/app13020938
