1. Introduction

Spatial language understanding (SLU) involves recognizing and reasoning about spatial semantics, e.g., spatial objects, relations, and transformations [1], in natural language descriptions, and it has drawn much attention in recent years. Conventional SLU tasks include spatial role labeling [2,3], spatial question answering [4], and spatial reasoning [5]. SLU is an essential function of natural language processing systems and is necessary for many applications, such as robotics [6,7,8], navigation [9,10,11], traffic management [12,13], and query answering systems [4].

In the realm of SLU, extant research exhibits a pronounced concentration on the context of the English language, while there is a noticeable lack of investigations on non-English settings. Extending in this direction, Zhan et al. [

14] proposed Spatial Cognition Evaluation (SpaCE) in order to benchmark Chinese spatial language understanding via abnormal spatial semantics recognition, spatial role labeling, and spatial scene matching. Unlike in the context of English, Chinese SLU has some specific challenges due to its ambiguous characteristics and complex grammar. For example,

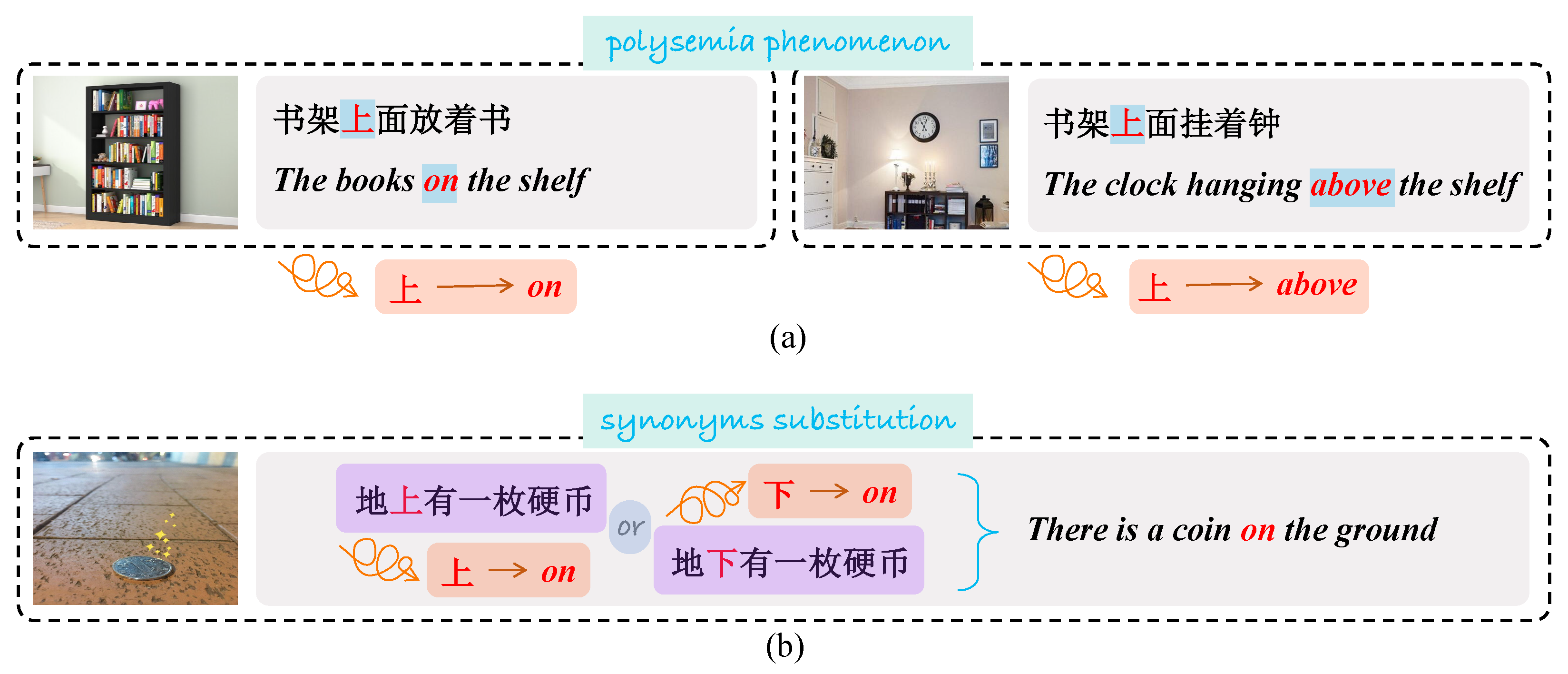

Figure 1a shows the phenomenon of polysemia among Chinese spatial prepositions. The word “上” in the sentence “书架上面放着书” (the books on the shelf) means “on”, while the “上” in the sentence ‘书架上面挂着钟”’ (the clock hanging above the shelf) means “above”. With the same word “上” or phrase “上面”, the sentences may express different spatial relationships, which is a common phenomenon in Chinese. Meanwhile, some conflicting prepositions may express the same meaning, which is also known as the substitution of synonyms. In

Figure 1b, the “上” (which usually denotes “on”) in “地上有一枚硬币” and the “下” (which usually denotes “under”) in “地下有一枚硬币” describe the same thing, i.e., “there is a coin on the ground”. The substitution of synonyms occurs in many languages but does so more frequently in Chinese due to the lax usage of syntax. In these situations, traditional methods that extract spatial information based on spatial prepositions impair performance. The solution to this issue is to reason spatial relationships with the holistic semantics of a sentence and the common linguistic knowledge of the Chinese language.

Recently, the integration of large language models (LLMs) into NLP tasks has attracted increasing attention and has enabled remarkable zero-shot and few-shot performance. Researchers have devised mechanisms such as in-context learning (ICL) that enable LLMs to generate results for an input question directly from just a few demonstrations. However, straightforward prompts fall short of eliciting the deep language comprehension and knowledge manipulation capabilities of LLMs, and consequently they struggle with intricate spatial reasoning. In this study, we explore the recently introduced chain-of-thought (CoT) mechanism, in which LLMs produce not only the most probable answer but also the clues and rationale underpinning the inference. This allows the reasoning to draw on the holistic spatial information in the input description as well as the extensive common knowledge of LLMs. Vanilla CoT simply concatenates the target problem statement with “Let us think step by step” as the input prompt. Because it does not explicitly focus on the key steps of spatial reasoning, its results are unstable. We therefore propose the Spatial-CoT template, which manually decomposes SLU problems into a three-step process that targets the specific issues of spatial reasoning.

Concretely, we investigate CoT reasoning for three Chinese spatial understanding tasks: abnormal spatial semantics recognition (ASpSR), spatial role labeling (SpRL), and spatial scene matching (SpSM). For each task, we design a CoT prompt template, namely, Spatial-CoT, that proceeds from shallow entities to holistic semantics. Technically, Spatial-CoT reasoning comprises three pivotal steps, each carried out by a dedicated analyzer: an entity analyzer (EA), a context analyzer (CA), and a common knowledge analyzer (CKA). The overall framework is shown in Figure 2. First, the EA recognizes all related entities in the spatial scene of a given description, providing entity-level clues for deeper reasoning. Next, the CA uses the entity clues and the provided context to make a preliminary decision for the task. Finally, the CKA refines the decision based on common knowledge and language conventions. Spatial-CoT thus unfolds as a sequence of logical steps, each building on the insights of the preceding one, leading to the final outcome.
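The three-step chain can be sketched as a simple loop in which each analyzer's prompt carries the outputs of all earlier analyzers, so the CKA sees both the entity clues and the preliminary decision. This is a minimal illustration under stated assumptions: `llm` is a hypothetical callable mapping a prompt string to a reply string, and the instruction wording in `STEPS` is illustrative (loosely modeled on the role instructions quoted in the prompt analysis), not the exact Spatial-CoT templates.

```python
from typing import Callable, Dict, List

# Hypothetical LLM interface: prompt string in, reply string out
# (e.g., a thin wrapper around a chat-completion API).
LLM = Callable[[str], str]

# Illustrative analyzer instructions; NOT the exact Spatial-CoT templates.
STEPS: List[Dict[str, str]] = [
    {"name": "EA",
     "instruction": "You are acting as an entity analyzer. "
                    "List all entities in the spatial scene of the input."},
    {"name": "CA",
     "instruction": "You are acting as a context analyzer. Based on the previous "
                    "results, make a preliminary decision from the context."},
    {"name": "CKA",
     "instruction": "You are acting as a common knowledge analyzer. Based on the "
                    "previous results, refine the decision using common sense "
                    "and Chinese language usage."},
]


def spatial_cot(llm: LLM, task: str, text: str) -> Dict[str, str]:
    """Run EA -> CA -> CKA in sequence; each prompt includes all earlier outputs."""
    history: List[str] = []
    outputs: Dict[str, str] = {}
    for step in STEPS:
        prompt = "\n".join([f"Task: {task}", f"Input: {text}", *history,
                            step["instruction"]])
        reply = llm(prompt)
        outputs[step["name"]] = reply
        history.append(f"{step['name']} result: {reply}")
    return outputs  # outputs["CKA"] holds the refined, final decision
```

Keeping the chain as data (a list of steps) rather than hard-coded calls makes it easy to drop or reorder analyzers, which is what step-ablation experiments require.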

We evaluated our method on the SpaCE dataset, which includes an abnormal spatial semantics recognition task, spatial role labeling task, and spatial scene matching task. The results underscore the efficacy of our proposed Spatial-CoT reasoning framework in LLM-based Chinese SLU. The Spatial-CoT method outperformed vanilla prompts and surpassed the current state-of-the-art supervised baseline.

The rest of this study is organized as follows. Section 2 describes related work. Section 3 details the design of the Spatial-CoT templates for the three subtasks. Section 4 compares the performance of our approach with that of other models. Section 5 concludes the study.

2. Related Work

Spatial language understanding is a fundamental task in natural language understanding, since one of the essential functions of natural language is to express spatial relationships between objects. An early approach to spatial information extraction was spatial role labeling (SpRL), introduced by Kordjamshidi et al. [2]. SemEval-2012 then introduced the SpRL task, focusing mainly on static spatial relations, and SemEval-2013 expanded the static spatial relations to capture fine-grained semantics. SemEval-2015 [15] was the first to evaluate systems implementing the SpaceEval annotation scheme, the current annotation scheme for spatial information, and many spatial information extraction systems have been built on it [16,17,18]. Multimodal approaches to spatial understanding have also been proposed recently. In CLEF 2017, Kordjamshidi et al. [19] proposed multimodal spatial role labeling (mSpRL). Zhao et al. [20,21] proposed the task of visual spatial description, extending the line of spatial language understanding. Unlike most efforts, which focused on English, CCL2021 (http://ccl.pku.edu.cn:8084/SpaCE2021/task, accessed on 1 September 2023) first proposed the SpaCE task, which addresses spatial language understanding in a non-English language. In this study, we follow this work and propose an LLM-based framework for better spatial reasoning.

Prior research on spatial language understanding relied heavily on supervised learning, fine-tuning pre-trained models on specific datasets with extensive labeled data [2,22,23], and achieved remarkable performance. However, traditional supervised frameworks require extensive labeled data and generalize poorly, and recent LLMs have emerged as a solution to these issues. LLMs (e.g., ChatGPT [24], LLaMA [25], and Vicuna [26]) have attracted unprecedented attention and exhibit human-level language understanding, having acquired rich linguistic and commonsense knowledge. LLMs equipped with prompt learning and the ICL paradigm [27,28,29,30,31,32] have demonstrated robust performance with little or even no supervision. Initial LLM-based research constructed prompts that queried the target directly. To meet the need for in-depth reasoning, researchers have turned to the recent CoT strategy [33,34,35,36] to enhance the inference capabilities of LLMs. CoT enables LLMs to engage in human-like reasoning, providing not only answers but also the thought process behind those answers.

However, conventional CoT simply uses a single prompt template such as “Let us think step by step” to trigger reasoning in the LLM [37,38,39]. These methods improve reasoning performance on larger LLMs but do not specify explicit reasoning steps for the given task. Going beyond single-prompt CoT, Wei et al. [40] encourage internal dialogue by prompting the LLM to generate a sequence of intermediate steps for reasoning problems. Zhou et al. [41] go a step further by automatically breaking a complex problem into simpler sub-problems and then solving them in sequence. Previous multi-step CoT methods primarily focus on arithmetic or logical problems, which are relatively easy to symbolize and generalize. However, these strategies may struggle to control intermediate reasoning steps when confronted with diverse tasks, which makes them less applicable to spatial understanding. Therefore, in this study, we focus on spatial understanding and design the Spatial-CoT framework to address the challenges posed by polysemy and synonym substitution in Chinese, which previous methods have failed to address.

4. Experiment

4.1. Experimental Setting

Dataset. We evaluated our proposed method on the SpaCE [14] dataset, a Chinese spatial language understanding corpus comprising more than 10,000 examples. SpaCE covers a wide range of domains, such as news, literature, textbooks, sports corpora, and encyclopedias. The dataset provides annotations for abnormal spatial semantics recognition, spatial role labeling, and spatial scene matching. Detailed dataset statistics are listed in Table 2.

Table 2. Statistics of the SpaCE dataset. “#SENT” is the number of sentences, “#SPAN” is the number of spatial role spans, and “#ROLE” is the number of role types.


| Split | ASpSR #SENT | SpRL #SENT | SpRL #SPAN | SpRL #ROLE | SpSM #SENT |
|---|---|---|---|---|---|
| Train | 10,993 | 1529 | 25,108 | 15 | - |
| Test | 1602 | 700 | 3815 | 15 | 10 |

In this work, we used the official dev split as our test set and ran zero-shot and one-shot CoT. We also reproduced several supervised models for comparison, splitting the official training set at a 7:1 ratio into training and validation sets. The SpSM task has no training set; only 10 examples are provided for evaluation (https://2030nlp.github.io/SpaCE2023/index.html, accessed on 1 September 2023), so we report SpSM results only for the LLM-based methods.
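A 7:1 training/validation split can be reproduced with a seeded shuffle. The sketch below is an assumption about how such a split might be done (the shuffling procedure and the seed `42` are not specified in the text); applied to the 1529 SpRL training sentences in Table 2, it yields 1337 training and 192 validation sentences.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def split_train_val(examples: Sequence[T], seed: int = 42) -> Tuple[List[T], List[T]]:
    """Shuffle a copy of the data and split it 7:1 (7/8 train, 1/8 validation)."""
    items = list(examples)
    random.Random(seed).shuffle(items)  # seeded so the split is reproducible
    n_train = len(items) * 7 // 8
    return items[:n_train], items[n_train:]


# Example: the 1529 SpRL training sentences from Table 2.
train, val = split_train_val(range(1529))
print(len(train), len(val))  # 1337 192
```

Using a private `random.Random` instance rather than the global generator keeps the split independent of any other random calls in the training script.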

Baselines. We compare our method with a range of baseline methods. We first report results for conventional supervised methods based on BERT-like language models. For the SpRL task, we adopted the state-of-the-art MRC-SRL framework [42], which treats semantic role labeling as a multiple-choice machine reading comprehension (MRC) problem [43]. For ASpSR, we used a conventional BERT-based text classification framework [44], in which the pooled output of the BERT backbone is fed to a binary classification layer that predicts whether the given sentence contains abnormal expressions.
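The multiple-choice MRC view of role labeling can be illustrated with a small, hypothetical sketch: the sentence (with its predicate marked) serves as the reading passage, the question asks about a candidate argument span, and the role labels serve as answer options for the reader model to score. This is not MRC-SRL's actual input format; the question wording, the role labels (`trajector`, `landmark`), and the function names are our assumptions. Only the `<p>`/`</p>` predicate markers follow the implementation details given for the supervised SpRL model.

```python
from typing import Dict, List, Sequence, Tuple


def mark_predicate(tokens: Sequence[str], start: int, end: int) -> List[str]:
    """Wrap tokens[start:end] (end exclusive) in <p> ... </p> predicate markers."""
    return [*tokens[:start], "<p>", *tokens[start:end], "</p>", *tokens[end:]]


def build_choice_instance(tokens: Sequence[str], pred: Tuple[int, int],
                          arg: Tuple[int, int],
                          roles: Sequence[str]) -> Dict[str, object]:
    """Cast one (predicate, candidate argument) pair as a multiple-choice MRC item:
    a reader model would pick the option (role label) that best answers it."""
    # Chinese text is tokenized per character here, so join without spaces.
    context = "".join(mark_predicate(tokens, *pred))
    argument = "".join(tokens[arg[0]:arg[1]])
    question = f"Which spatial role does '{argument}' play for the marked predicate?"
    return {"context": context, "question": question, "options": list(roles)}


item = build_choice_instance(list("书架上面放着书"), pred=(4, 5), arg=(0, 2),
                             roles=["trajector", "landmark"])  # hypothetical labels
print(item["context"])  # 书架上面<p>放</p>着书
```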

We also include results for the prompt-learning paradigm with the LLM. We report four types of prompting baselines:

(1) Vanilla Prompt (zero-shot). We include vanilla prompt learning [45], where only the description of the task's target is fed to the LLM as input.

(2) In-Context Learning (ICL). In-context learning [27] adds demonstrations to the prompt template, strongly guiding the generation of output answers for the desired task.

(3) Vanilla CoT (zero-shot). Following [37], vanilla CoT appends “Let us think step by step” to the prompt, guiding the LLM to decompose a problem into steps; in the zero-shot setting, no demonstrations are given.

(4) Vanilla CoT-ICL. Building on zero-shot CoT, CoT-ICL adds demonstrations to further guide generation in an ICL paradigm.

The input templates of these prompting methods are listed in Table 3.
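The four prompting baselines differ only in whether demonstrations are included and whether the CoT trigger is appended, so one builder can produce all of them. This is a minimal sketch; the `Input:`/`Answer:` layout and the function name are assumptions, and the actual templates are those in Table 3.

```python
from typing import List, Optional, Tuple

COT_TRIGGER = "Let us think step by step."


def build_prompt(task_desc: str, query: str,
                 demos: Optional[List[Tuple[str, str]]] = None,
                 cot: bool = False) -> str:
    """Vanilla prompt: no demos, cot=False.   ICL: demos, cot=False.
    Vanilla CoT: no demos, cot=True.          CoT-ICL: demos, cot=True."""
    parts = [task_desc]
    for demo_input, demo_answer in demos or []:
        # For CoT-ICL, demo_answer would normally include a worked rationale.
        parts.append(f"Input: {demo_input}\nAnswer: {demo_answer}")
    parts.append(f"Input: {query}")
    # The CoT trigger opens the answer so the model continues with its reasoning.
    parts.append(f"Answer: {COT_TRIGGER}" if cot else "Answer:")
    return "\n\n".join(parts)
```

For example, `build_prompt(task, sentence)` gives the vanilla prompt, and `build_prompt(task, sentence, demos=[(demo_text, demo_answer)], cot=True)` gives the one-shot CoT-ICL variant.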

Implementation Details. For supervised ASpSR, we used a BERT-base backbone with a binary linear classifier. For supervised SpRL, we followed [42] and used a BERT-base model as the encoder, with two special symbols, <p> and </p>, marking the predicate of the input sentence. We used the Adam optimizer with a warmup rate of 0.05 and an initial learning rate of 1 × 10⁻⁵. For the LLM-based methods, we selected ChatGPT3.5 (https://openai.com/blog/chatgpt, accessed on 1 September 2023), one of the top-performing large language models, which is accessed through an API rather than released as open source. The temperature of ChatGPT was set to 0.2. Detailed parameters are presented in Appendix B.
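A warmup rate of 0.05 means the learning rate ramps up over the first 5% of optimizer steps. The sketch below is an assumption about the full schedule: it adds a linear decay to zero after warmup, following the same formula as the linear warmup schedule commonly used for BERT fine-tuning (e.g., `get_linear_schedule_with_warmup` in Hugging Face Transformers); the text specifies only the warmup rate and the initial learning rate.

```python
def learning_rate(step: int, total_steps: int, peak_lr: float = 1e-5,
                  warmup_rate: float = 0.05) -> float:
    """Linear warmup to peak_lr over warmup_rate * total_steps steps, then an
    (assumed) linear decay to zero over the remaining steps."""
    warmup_steps = int(total_steps * warmup_rate)
    if step < warmup_steps:
        return peak_lr * step / max(1, warmup_steps)
    return peak_lr * max(0.0, (total_steps - step) / max(1, total_steps - warmup_steps))
```

With 1000 optimizer steps, for instance, the rate grows from 0 to 1 × 10⁻⁵ over the first 50 steps and then decreases linearly to 0 at step 1000.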

Evaluation. For the ASpSR and SpSM tasks, we use accuracy as the evaluation metric. For the SpRL task, we use the F1 score to evaluate sequence labeling performance, denoted RL-F1, and the F1 score of the extra label classification, denoted Ext-F1. We also follow the SpaCE human evaluation at CCL2023 to evaluate the rationales generated for the SpSM task. Concretely, two evaluators scored the generated explanation for each SpSM question. A higher score indicates a clearer explanation of the reasoning behind identifying similarities and differences between spatial scenes. The scores are divided into six levels, from 0 to 5:

5: The explanation uses external knowledge and an understanding of the world to restate the spatial scenes.

4: The explanation rewrites the differences between the two descriptions and restates the spatial scenes.

3: When the two descriptions are judged to be identical, the differing string is used directly as the reason, without being rewritten and without restating the spatial scene.

2: (a) The explanation is related to spatial meaning but not specific to the spatial scene in which the differing string is located; (b) when the two descriptions are judged NOT to be identical, the differing string is used directly as the reason, without being rewritten and without restating the spatial scene.

1: The explanation is NOT related to spatial meaning.

0: (a) No explanation is given, or the explanation is unrelated to the question; (b) the differing string is wrong.

We report the average score of the two evaluators as the final score.
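The three kinds of scores above can be computed as follows. This is a minimal sketch under assumptions: SpRL spans are compared by exact match on (sentence id, start, end, role label) with micro-averaged F1, which may differ from the official SpaCE scorer; Ext-F1 is not covered; and the function names are hypothetical.

```python
from typing import Hashable, Sequence, Set, Tuple

# A labeled span: (sentence id, start, end, role label); exact match required.
Span = Tuple[int, int, int, str]


def accuracy(preds: Sequence[Hashable], golds: Sequence[Hashable]) -> float:
    """Fraction of items (ASpSR/SpSM answers) predicted exactly right."""
    if len(preds) != len(golds) or not golds:
        raise ValueError("need equally long, non-empty prediction/gold lists")
    return sum(p == g for p, g in zip(preds, golds)) / len(golds)


def span_f1(pred: Set[Span], gold: Set[Span]) -> float:
    """Micro-averaged F1 over exactly matching labeled spans (RL-F1 style)."""
    tp = len(pred & gold)
    if tp == 0:
        return 0.0
    precision, recall = tp / len(pred), tp / len(gold)
    return 2 * precision * recall / (precision + recall)


def human_score(evaluator_a: Sequence[int], evaluator_b: Sequence[int]) -> float:
    """Mean of the two evaluators' 0-5 rubric scores over all SpSM questions."""
    scores = [*evaluator_a, *evaluator_b]
    if not scores or len(evaluator_a) != len(evaluator_b):
        raise ValueError("expected two equally long, non-empty score lists")
    if not all(0 <= s <= 5 for s in scores):
        raise ValueError("rubric scores must lie in the range 0-5")
    return sum(scores) / len(scores)
```

Because both evaluators score every question, the pooled mean equals the average of the two evaluators' individual means.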

4.2. Main Results

Table 4 details the performance of the baselines and our CoT method. Vanilla prompts for LLMs were inferior to existing supervised methods, but ICL and CoT significantly boosted performance, especially when at least one demonstration was used. Our Spatial-CoT method further outperformed the other methods on all three spatial language understanding tasks. Overall, SpRL was more difficult than ASpSR and SpSM.

Under the one-shot setting, the vanilla prompt and vanilla CoT methods become ICL-based methods, denoted ICL and vanilla CoT-ICL, respectively. The demonstration was randomly selected from the training set. ICL substantially improved the LLM when one demonstration was provided.

Specifically, in the zero-shot setting, the LLM-based methods were comparable to the fully supervised method and even outperformed it on the SpRL task, demonstrating the generalization capabilities of LLMs. Moreover, compared with the vanilla prompt methods, Spatial-CoT achieved absolute improvements of 8.84 ASpSR Acc, 2.96 RL-F1, 3.01 Ext-F1, and 8.02 SpSM F1 on the SpaCE dataset. ICL further enhanced both the prompt-based and CoT methods by providing a demonstration to guide the LLM, yielding gains of 9.11 ASpSR Acc, 0.06 RL-F1, and 2.19 SpSM F1 for the vanilla prompt, and 1.39 ASpSR Acc, 2.42 RL-F1, 2.42 Ext-F1, and 2.64 SpSM F1 for Spatial-CoT.

For the SpSM task, there is no training set; thus, we only report the zero-shot and few-shot performance of the LLM-based methods. We also report human evaluations of the generated rationales on a scale from 0 to 5, where 5 is the highest. Two students scored the generated rationales, and we report the average values. Compared with the vanilla prompt and vanilla CoT methods, Spatial-CoT also generated more explicit rationales.

4.3. Analysis

4.3.1. Few-Shot Analysis

To explore the impact of the size of the training set and of the number of demonstrations, we compared the few-shot performance of each method. For the few-shot supervised methods, i.e., BERT and MRC-SRL, we randomly selected 1, 2, 4, 8, and 16 samples from the original training set for fine-tuning. For the few-shot LLM-ICL methods, the selected samples and their ground-truth labels were used as demonstrations.
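The k-shot subsets can be drawn as sketched below. One design choice here is an assumption not stated in the text: a single random pool of 16 examples is drawn and the smaller sets are taken as its prefixes, so every k-shot set is contained in the larger ones. This keeps the curves in a few-shot plot from mixing the effect of set size with the effect of which examples happened to be drawn. The seed and function name are hypothetical.

```python
import random
from typing import Dict, List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def sample_few_shot(train: Sequence[T], sizes: Tuple[int, ...] = (1, 2, 4, 8, 16),
                    seed: int = 0) -> Dict[int, List[T]]:
    """Draw one random pool of max(sizes) distinct examples and return nested
    prefixes, so the k-shot set is always contained in the 2k-shot set."""
    pool = random.Random(seed).sample(list(train), max(sizes))
    return {k: pool[:k] for k in sizes}
```

The same subsets can serve both purposes described above: as fine-tuning data for the supervised models and as demonstrations for ICL.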

The results are shown in Figure 3. For the BERT-based methods, the x-axis denotes the number of training samples, while for the ICL-based methods, it denotes the number of demonstrations. In the few-shot setting, the LLM-based methods outperformed the supervised methods, although the gap gradually narrowed as the number of training samples increased. With few samples, the supervised model may not be adequately trained, whereas LLM-based ICL can leverage rich common knowledge for reasoning. The CoT method further outperformed the others, which we attribute to its fine-grained reasoning steps. The curve of the supervised method was steeper than those of the LLM-based methods, especially near the 0 point, indicating that the supervised model was sensitive to the size of the training set. In contrast, the ICL-based methods were almost unaffected by the number of demonstrations, as long as at least one was provided.

4.3.2. Step Ablation

To illustrate the necessity of each reasoning step, we conducted a step ablation study. Table 5 quantifies the contribution of each reasoning step of Spatial-CoT. The results reveal that the entity analyzer, context analyzer, and common knowledge analyzer all contributed to the tasks. The entity analyzer contributed the most, presumably because it provides the entity clues that are most important for spatial reasoning. The CA and CKA also contributed to the reasoning, but their relative order did not affect the final performance much.

Nevertheless, the order of the full chain still matters. On a sampled subset of SpaCE, we compared different chain orders and found that the EA-CA-CKA sequence performed better than any other order.
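The chain configurations compared in the step ablation and the order study can be enumerated as follows: the full chain plus the three single-step removals, and all 3! = 6 orderings of the analyzers. This is a minimal sketch; the function names are hypothetical, and each tuple would be used to assemble the corresponding sequence of analyzer prompts.

```python
from itertools import permutations
from typing import List, Tuple

STEPS: Tuple[str, ...] = ("EA", "CA", "CKA")


def ablation_chains(steps: Tuple[str, ...] = STEPS) -> List[Tuple[str, ...]]:
    """Full chain followed by each chain with exactly one analyzer removed."""
    return [steps] + [tuple(s for s in steps if s != dropped) for dropped in steps]


def all_chain_orders(steps: Tuple[str, ...] = STEPS) -> List[Tuple[str, ...]]:
    """Every ordering of the analyzers: 3! = 6 chains for three steps."""
    return list(permutations(steps))
```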

4.3.3. Prompt Analysis

We also explored the performance of different prompt templates by testing prompts that contained different instruction keywords. The results are shown in Figure 4. The label “w/o Role Instructions” denotes a prompt without role instructions such as “You are acting as an entity analyzer”, and “w/o Step Instructions” denotes a prompt without instructions such as “based on the previous results…”. We found that suitable instructions in the CoT prompt are useful: they guide the LLM with a presupposed role and force it to reason with the clues generated earlier in the chain. As shown in Figure 4, these instructions improved each task by at least 1–2 points. We also examined whether different expressions of the same instruction affect the final results, replacing the instruction “You are acting as an entity analyzer” with phrases such as “You are trying to extract entities from…” and “You are now an entity analyzer.”. The wording of these instructions hardly affected the results, whereas their presence mattered.
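The prompt variants in this analysis can be generated by toggling the role instruction and the step instruction independently. The sketch below assumes a hypothetical `step_prompt` helper; the role phrases follow the instruction quoted above, while the exact composition of the full prompt is an assumption.

```python
from typing import Dict, List

# Role phrases per analyzer (articles included so the sentences read correctly).
ROLE_PHRASES: Dict[str, str] = {
    "EA": "an entity analyzer",
    "CA": "a context analyzer",
    "CKA": "a common knowledge analyzer",
}


def step_prompt(step: str, task_instruction: str, use_role: bool = True,
                use_step: bool = True, is_first: bool = False) -> str:
    """Compose one analyzer's instruction. use_role=False gives the
    'w/o Role Instructions' variant and use_step=False the 'w/o Step
    Instructions' variant. The first step has no previous results to cite."""
    parts: List[str] = []
    if use_role:
        parts.append(f"You are acting as {ROLE_PHRASES[step]}.")
    if use_step and not is_first:
        parts.append("Based on the previous results,")
    parts.append(task_instruction)
    return " ".join(parts)
```

Since the experiments found that the wording of these instructions hardly mattered while their presence did, swapping `ROLE_PHRASES` for alternative phrasings should leave the two ablation switches as the main variables.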

4.3.4. Case Study

We qualitatively assessed the effectiveness of our CoT method for polysemy and synonym substitution in Chinese through case studies. As shown in Figure 5, we chose several typical cases from the SpaCE dataset. Consider the case “她不会匍匍前进,也不能快跑。她干脆直着身子,一摇一摆,慢慢地向方场上走去。一段还没有炸断的铁栏杆拦在她里面,她也不打算跨过去。她太衰老了,跨不过去,因此慢慢地绕过了那段铁栏杆,走进了方场。” (“She couldn’t crawl forward, nor could she run quickly. She simply stood upright, swaying gently, and slowly walked toward the open field. A segment of iron railing, which had not yet been blown apart, blocked her in. She had no intention of crossing it either. She was too old to do so. Therefore, she slowly went around that section of iron railing and entered the open field.”). It contains an abnormal expression, “一段还没有炸断的铁栏杆拦在她里面”, where “里面” (inside [her]) should be “前面” (in front [of her]). The vanilla prompt could not recognize this mistake, as shown in the top-left panel of Figure 5. Spatial-CoT also failed to recognize the mistake using only the entity and context clues. Only when prompted with the “common sense” and “language usage” instructions did the LLM refine its decision and identify the abnormal words.

For the pair “乒乓球先是旋转了几下,接着从台面滚落到地上” and “乒乓球先是旋转了几下,接着从台面滚落到地下”, the two sentences have the same meaning: “The ping pong ball first spun a few times, and then rolled off the table onto the ground”. In Chinese usage, the only difference between the two sentences, “落到地上” versus “落到地下”, expresses the same action: “fall to the ground”. However, with the vanilla prompt, the LLM stated that the two descriptions did not describe the same spatial scene, as shown in the top-right panel of Figure 5. When given the instruction “based on common sense and language usage”, Spatial-CoT successfully guided the LLM to the correct decision.
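Both cases hinge on a short differing string between two otherwise identical texts, which is also what the SpSM rationale rubric refers to. Locating that string is mechanical; the sketch below uses Python's standard `difflib.SequenceMatcher` for it. It is an illustrative helper with a hypothetical name, not part of Spatial-CoT: it finds *where* the descriptions differ, while deciding whether “地上” and “地下” mean the same thing still requires the common-knowledge reasoning described above.

```python
from difflib import SequenceMatcher
from typing import List, Tuple


def differing_strings(a: str, b: str) -> List[Tuple[str, str]]:
    """Return (segment in a, segment in b) for every non-matching region."""
    # autojunk=False disables difflib's popular-element heuristic so that
    # frequent characters in long texts are never silently ignored.
    matcher = SequenceMatcher(None, a, b, autojunk=False)
    return [(a[i1:i2], b[j1:j2])
            for tag, i1, i2, j1, j2 in matcher.get_opcodes()
            if tag != "equal"]


s1 = "乒乓球先是旋转了几下,接着从台面滚落到地上"
s2 = "乒乓球先是旋转了几下,接着从台面滚落到地下"
print(differing_strings(s1, s2))  # [('上', '下')]
```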

5. Conclusions and Future Work

In this study, we investigated Chinese spatial language understanding with LLMs. We proposed the Spatial-CoT framework, which divides the reasoning process into three steps, namely, entity extraction, context analysis, and common knowledge analysis, and we designed corresponding prompt templates for Chinese SLU. The entity extraction step provides entity-level clues for spatial reasoning. Next, the context analyzer uses the entity clues to make a preliminary prediction based on the input context. Finally, the common knowledge analyzer refines the output based on common sense and Chinese language usage. With Spatial-CoT, an LLM can reason from a holistic perspective, effectively addressing polysemy and synonym substitution in Chinese spatial language understanding. On the SpaCE dataset, our strategy outperformed vanilla prompt-learning methods and performed competitively with fully trained supervised models.

Finally, we discuss the major limitations of this work. First, our method requires a pre-trained large language model, and pre-training is expensive and time-consuming. Accessing API-based LLMs, such as ChatGPT4.0, also incurs considerable cost. In future work, we will study how open-source large language models can be used to solve spatial language understanding problems. Second, the generalization of our method remains to be verified through experiments on other spatial understanding tasks, which we leave to future work. We also plan to explore the following directions. An in-depth exploration of spatial language understanding in other languages requires more high-quality multilingual data. In addition, this task could be extended to multimodal settings, which apply to many real-life scenarios, such as vision-and-language navigation, remote manipulation, and robotics.