A Novel CA-RegNet Model for Macau Wetlands Auto Segmentation Based on GF-2 Remote Sensing Images

Abstract

1. Introduction

2. Study Region Overview

3. Data and Methodology

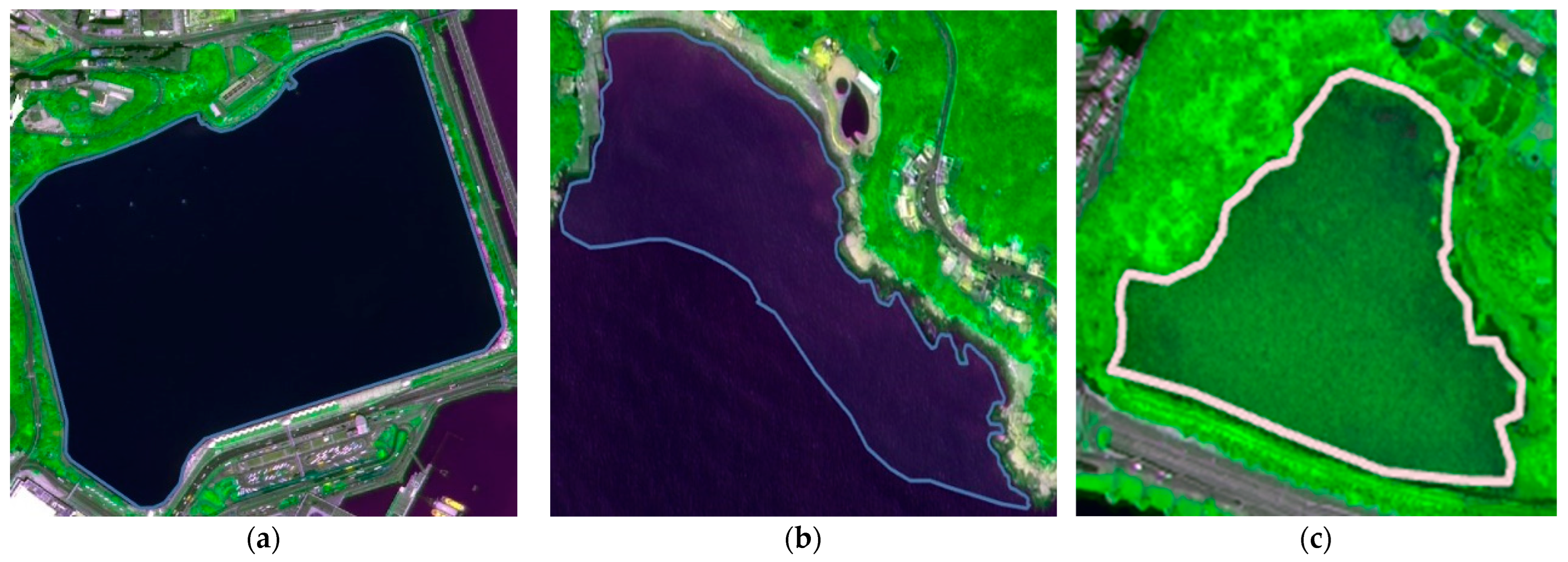

3.1. Dataset

3.2. Deep Learning Models

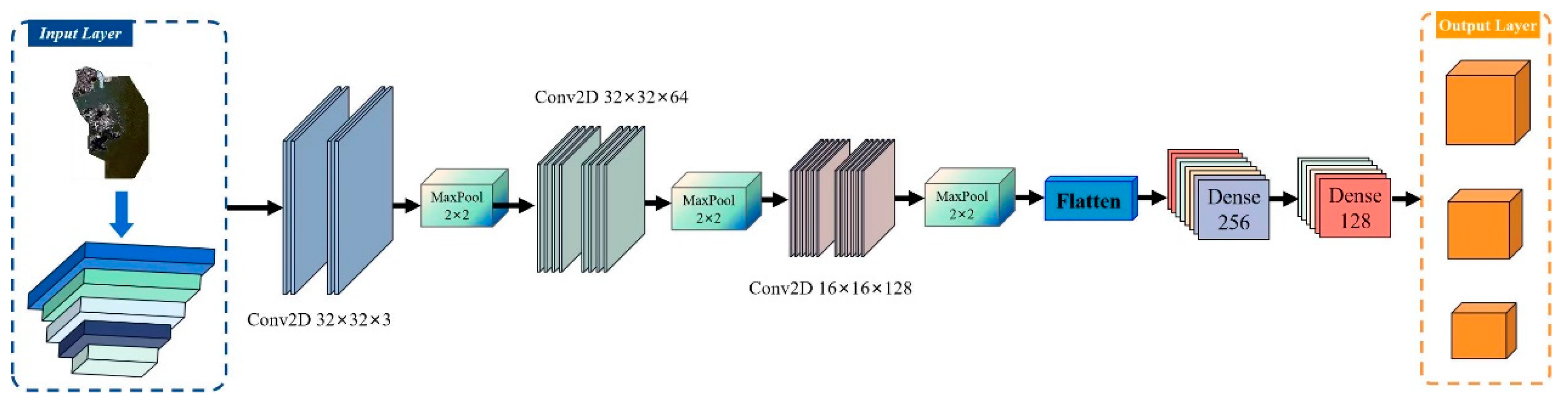

3.2.1. ResNet Model

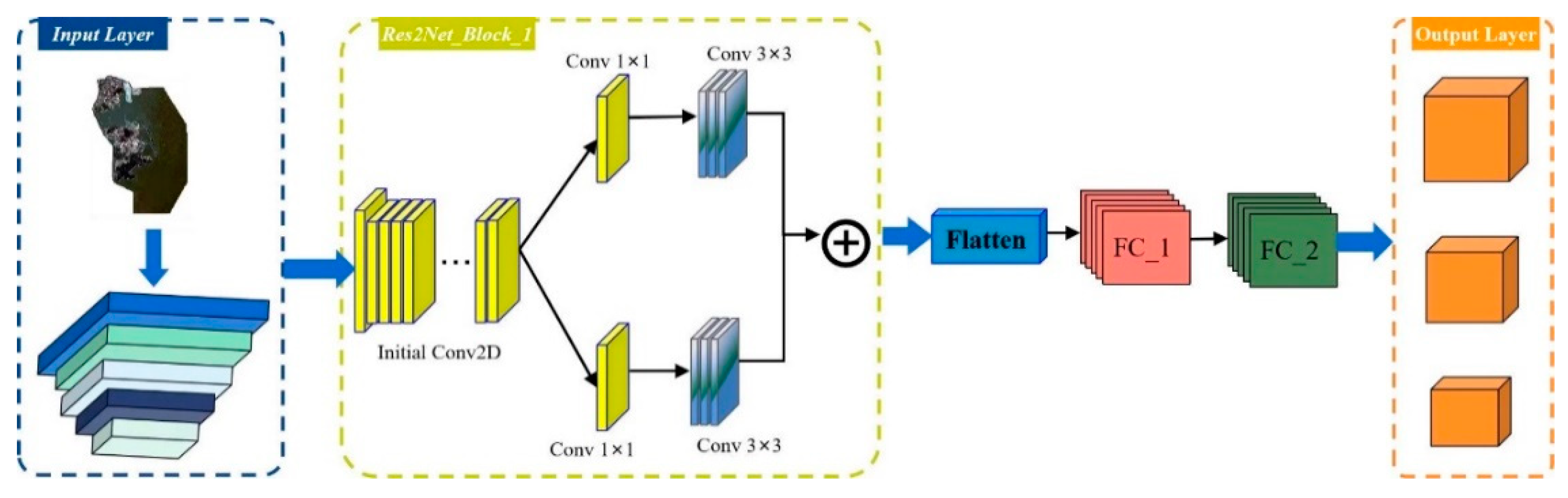

3.2.2. The Res2Net Model

3.2.3. RegNet Model

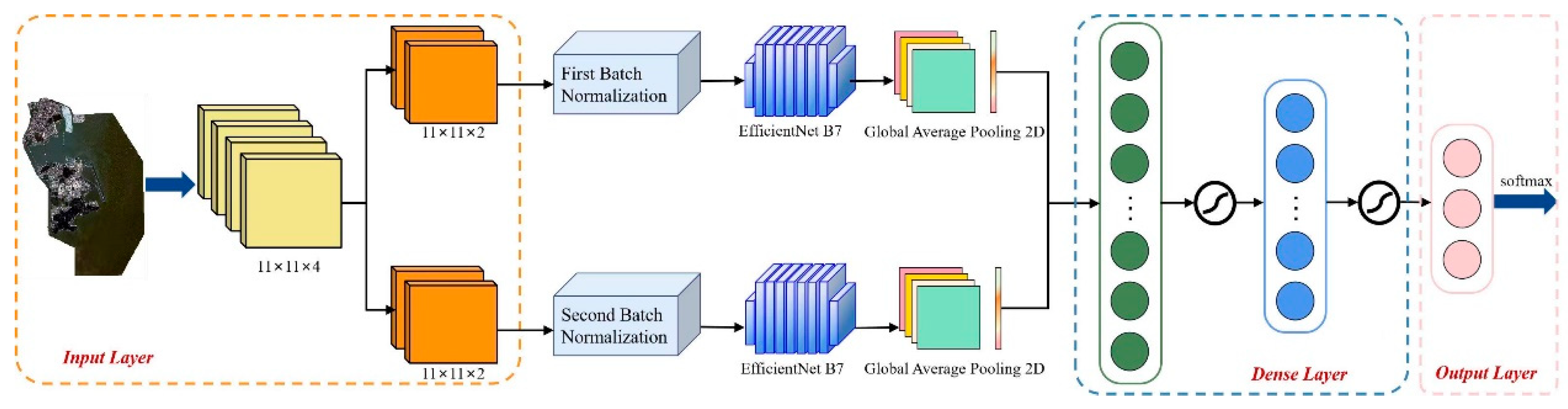

3.2.4. EfficientNet B7 Model

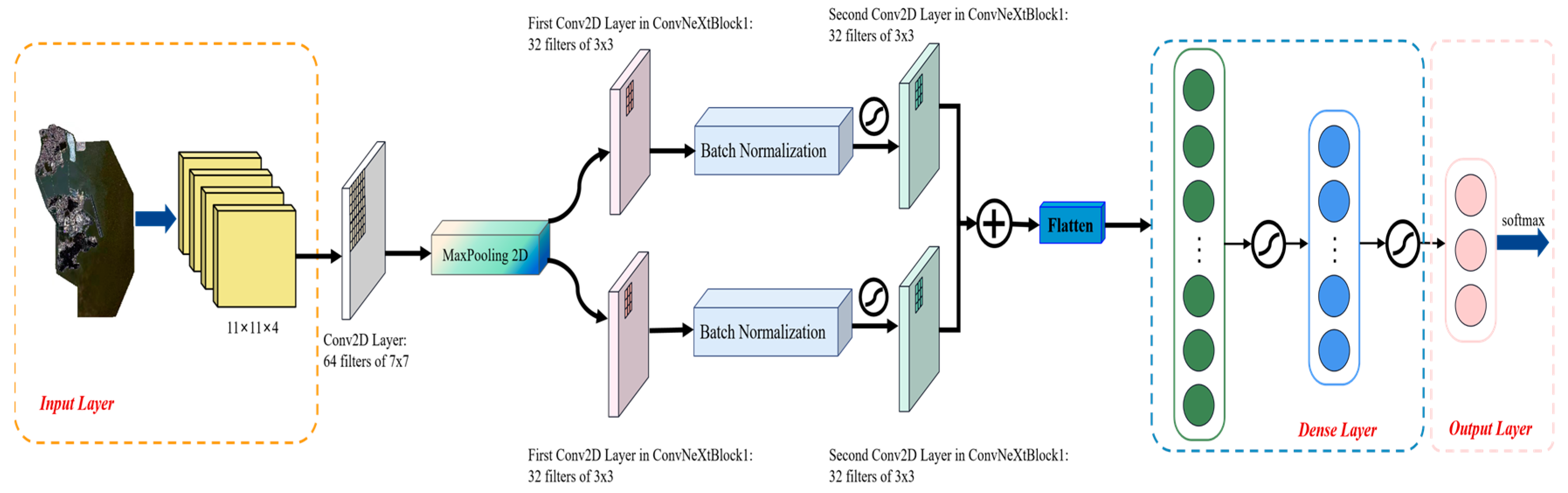

3.2.5. ConvNeXt Model

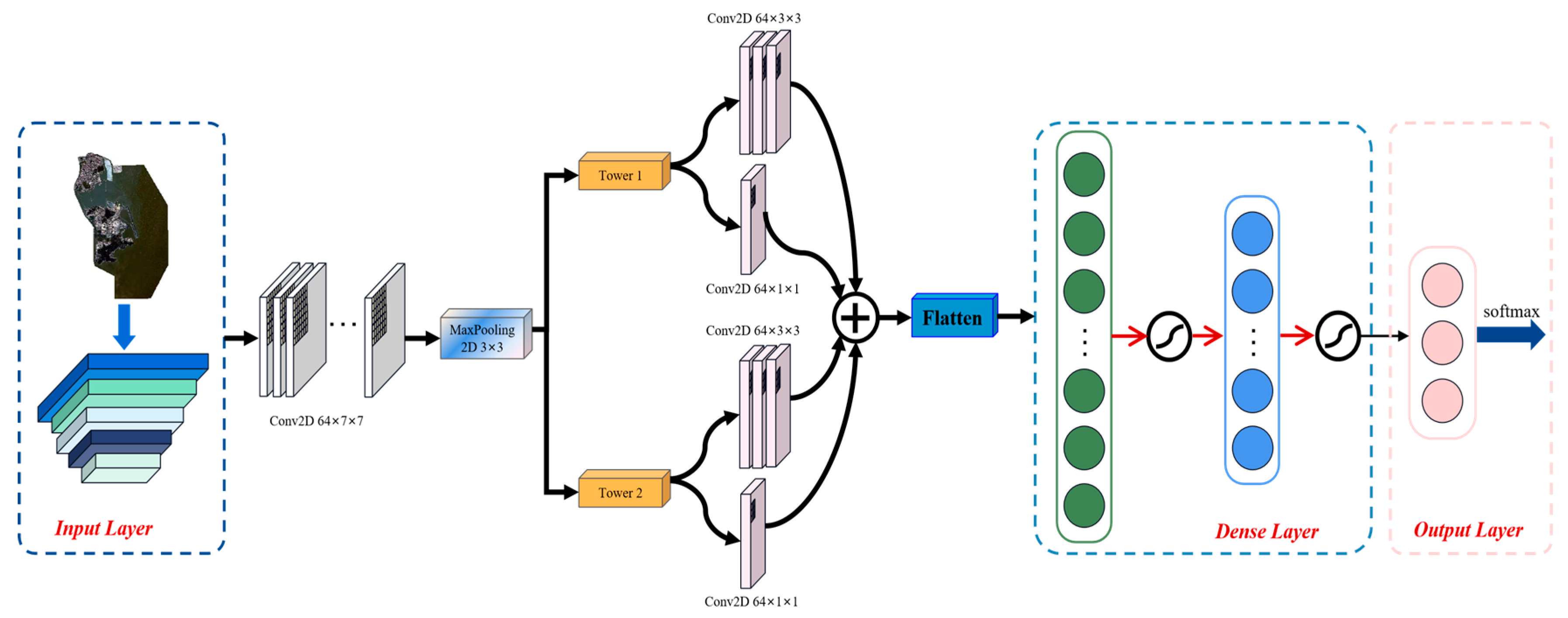

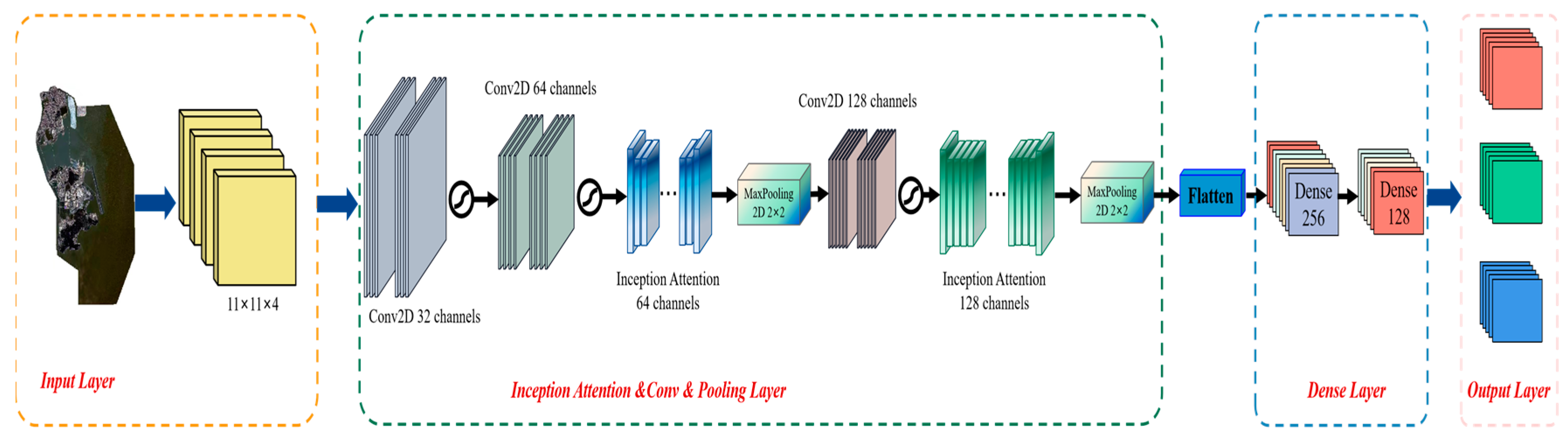

3.2.6. Inception-RegNet Model

3.2.7. SE-RegNet Model

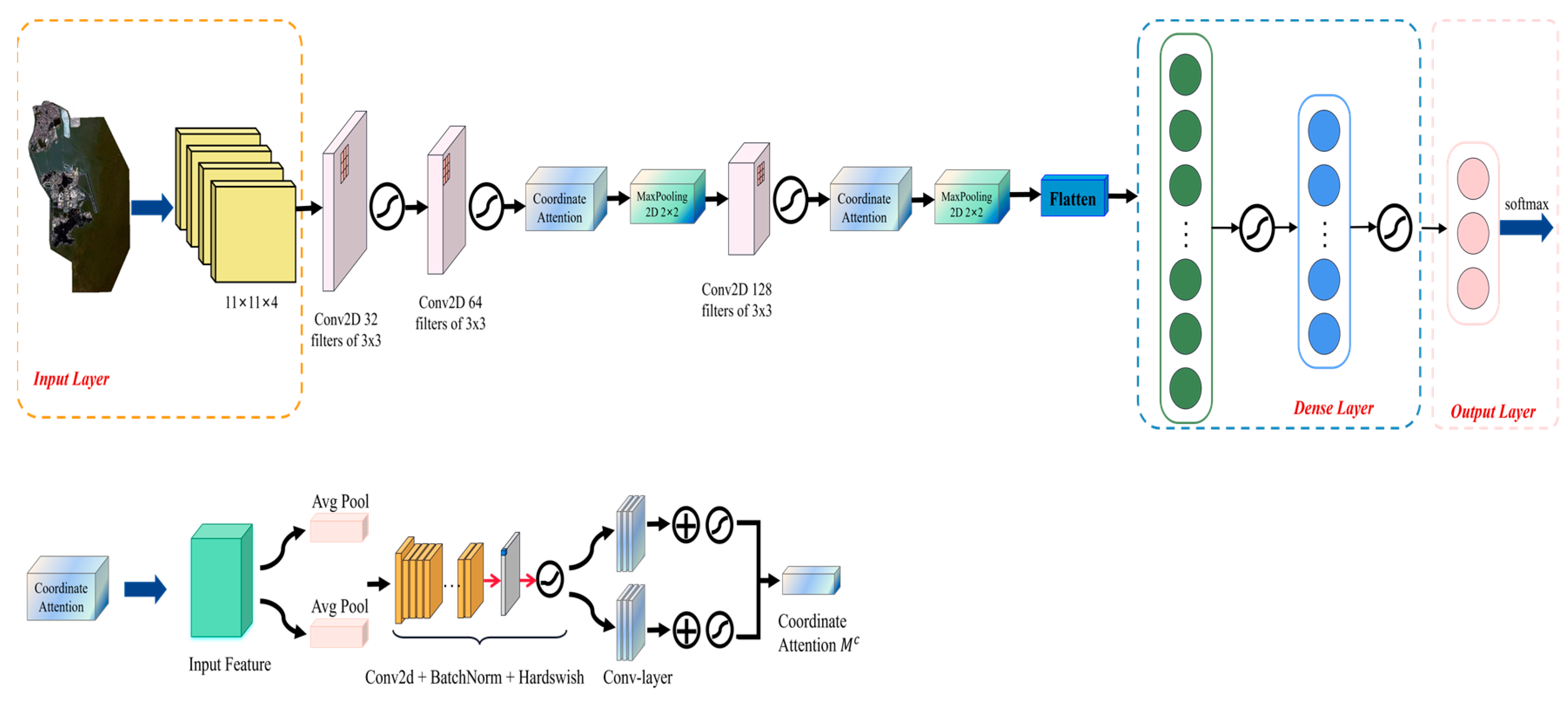

3.2.8. CA-RegNet Model

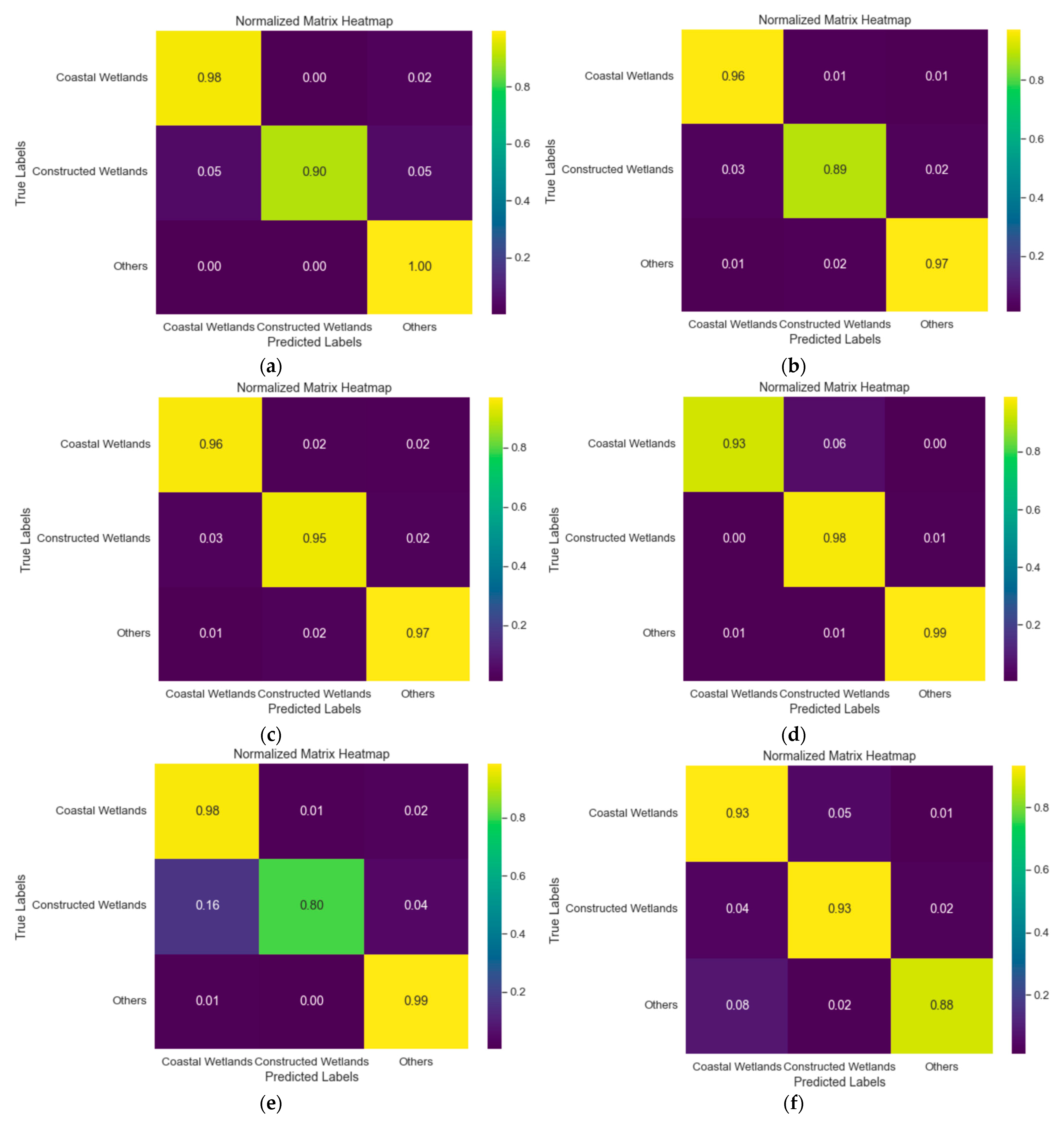

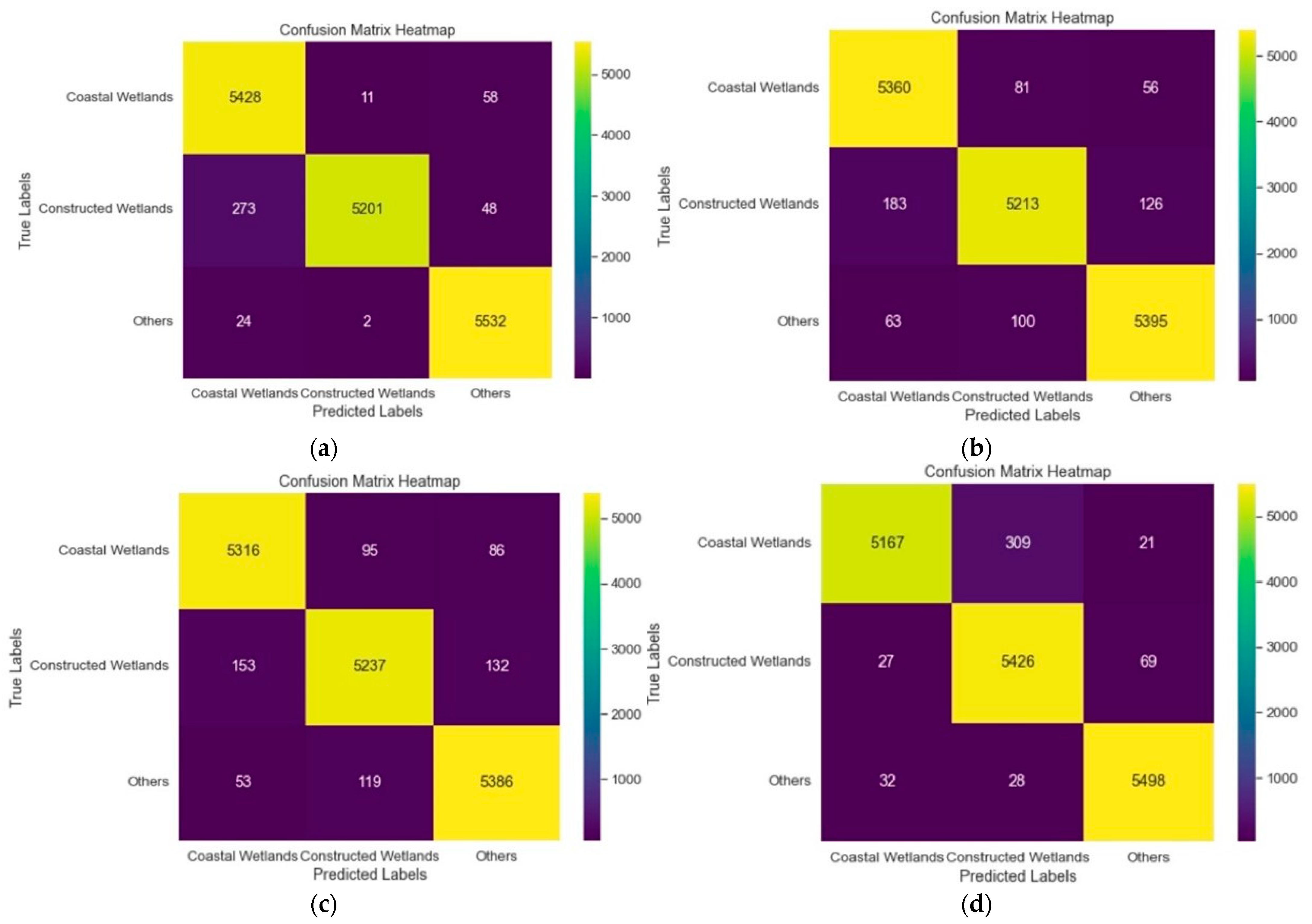

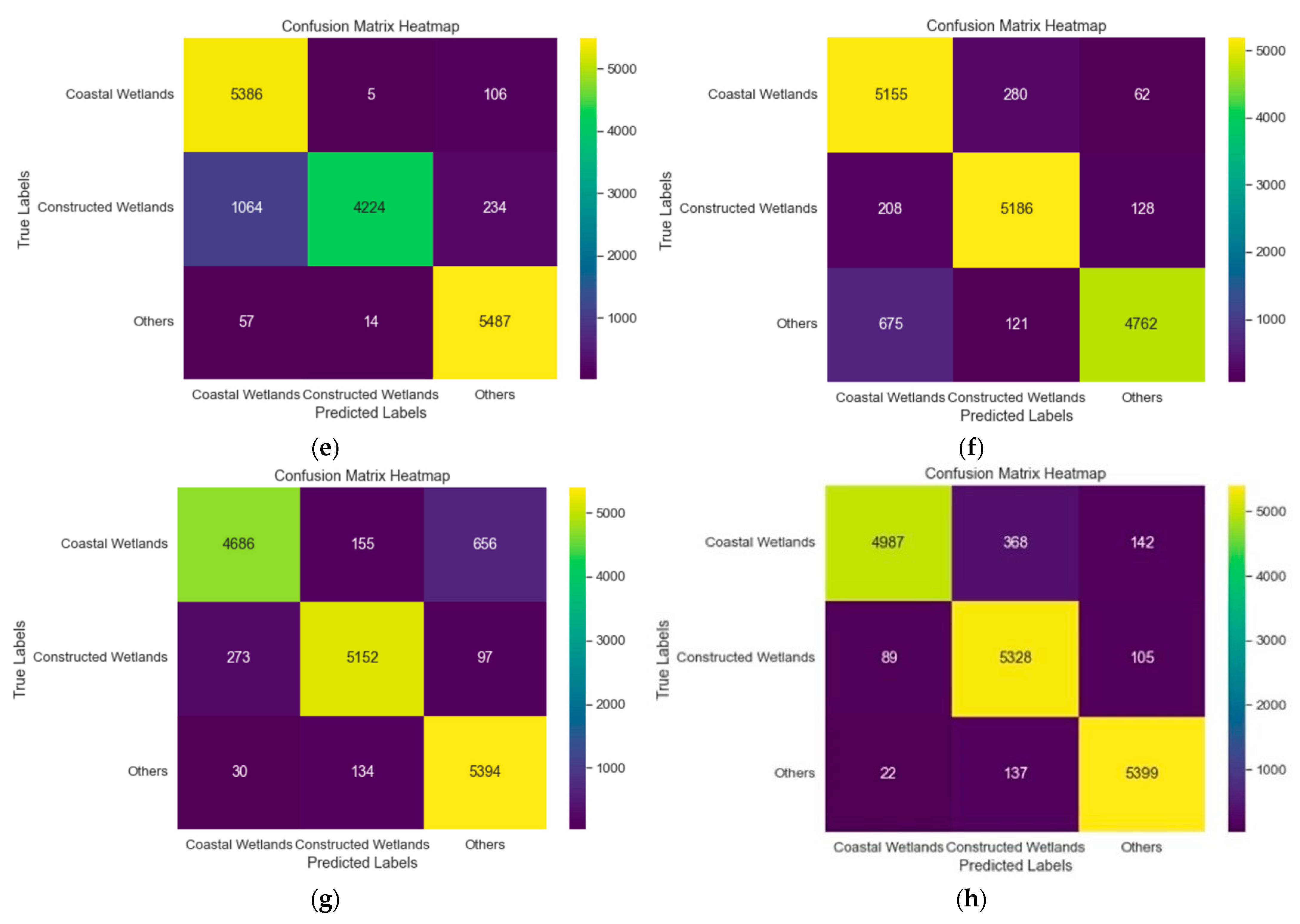

4. Results Show

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

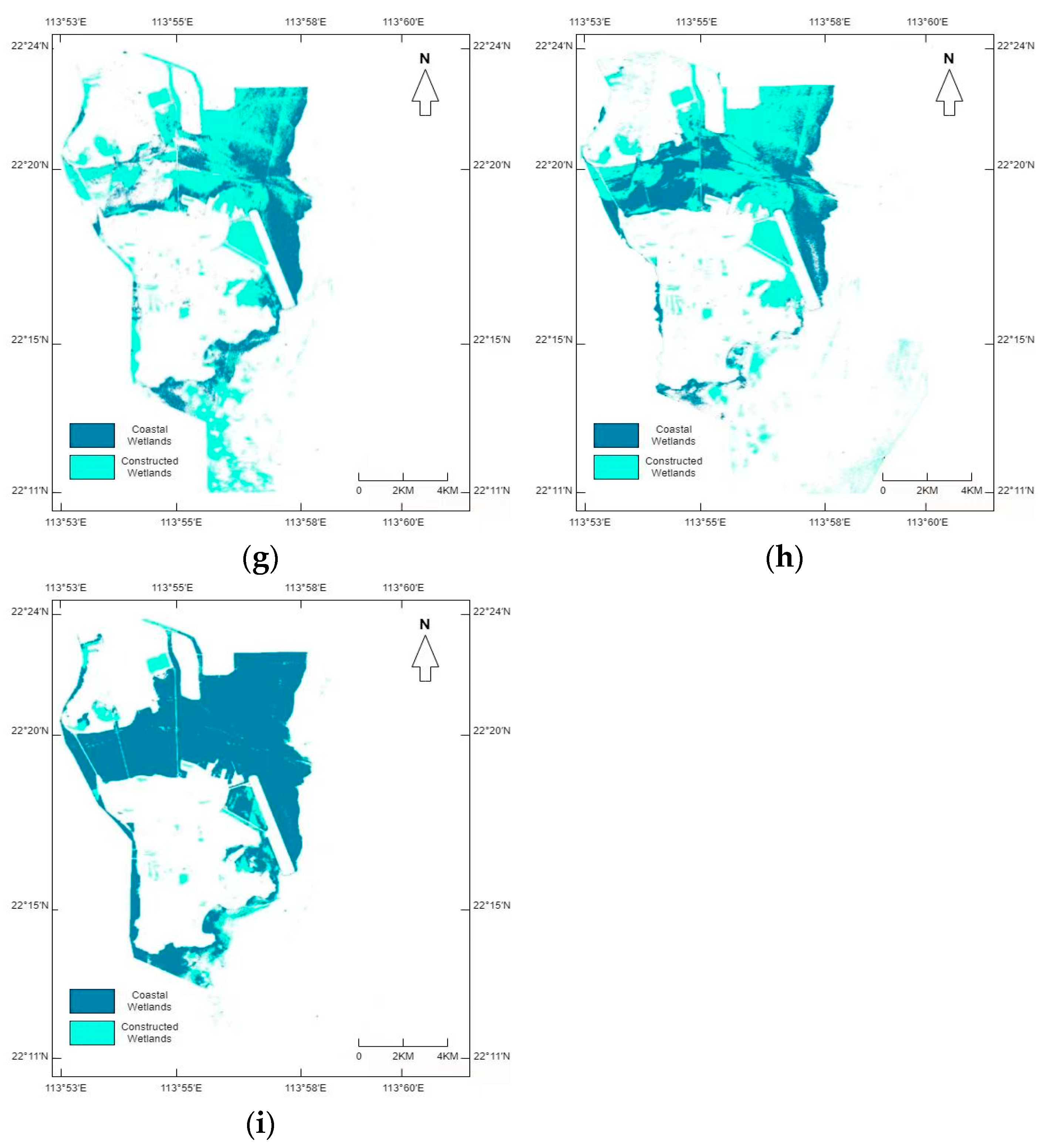

| Model | Coastal Wetlands UA | Coastal Wetlands PA | Coastal Wetlands F1-Score | Constructed Wetlands UA | Constructed Wetlands PA | Constructed Wetlands F1-Score | Others UA | Others PA | Others F1-Score |
|---|---|---|---|---|---|---|---|---|---|
| ResNet | 0.9782 | 0.9072 | 0.9447 | 0.9134 | 0.9649 | 0.9587 | 0.9563 | 0.9714 | 0.9809 |
| Res2Net | 0.8542 | 0.9305 | 0.8909 | 0.9247 | 0.9481 | 0.9324 | 0.9707 | 0.8964 | 0.9420 |
| RegNet | 0.9887 | 0.9400 | 0.9277 | 0.9415 | 0.9826 | 0.9473 | 0.9839 | 0.9892 | 0.9649 |
| EfficientNet B7 | 0.9767 | 0.8444 | 0.9053 | 0.8099 | 0.9943 | 0.8901 | 0.9851 | 0.9422 | 0.9632 |
| ConvNeXt | 0.9325 | 0.8669 | 0.8988 | 0.9279 | 0.9295 | 0.9287 | 0.8794 | 0.8568 | 0.9167 |
| Inception-RegNet | 0.9627 | 0.9671 | 0.9637 | 0.9607 | 0.9484 | 0.9465 | 0.9611 | 0.9691 | 0.9673 |
| SE-RegNet | 0.9561 | 0.9751 | 0.9709 | 0.9664 | 0.9440 | 0.9443 | 0.9674 | 0.9707 | 0.9704 |
| CA-RegNet | 0.9481 | 0.9874 | 0.9674 | 0.9975 | 0.9419 | 0.9488 | 0.9812 | 0.9953 | 0.9891 |
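The table reports user's accuracy (UA), producer's accuracy (PA), and F1-score for each class. The text available here does not state how these metrics were computed, so the following is only a minimal sketch under the conventional remote sensing definitions: UA as per-class precision, PA as per-class recall, and F1 as their harmonic mean. The function names and the example pixel counts are hypothetical and are not taken from the paper.

```python
from typing import Tuple


def ua_pa_from_counts(tp: int, fp: int, fn: int) -> Tuple[float, float]:
    """Return (UA, PA) for one class from pixel counts.

    Assumed definitions (not confirmed by the source):
    UA = TP / (TP + FP)  (precision), PA = TP / (TP + FN)  (recall).
    """
    ua = tp / (tp + fp) if (tp + fp) > 0 else 0.0
    pa = tp / (tp + fn) if (tp + fn) > 0 else 0.0
    return ua, pa


def f1_score(ua: float, pa: float) -> float:
    """Harmonic mean of UA and PA; returns 0.0 when both are zero."""
    if ua + pa == 0:
        return 0.0
    return 2 * ua * pa / (ua + pa)


# Hypothetical counts for a single class, for illustration only.
ua, pa = ua_pa_from_counts(tp=90, fp=10, fn=30)
f1 = f1_score(ua, pa)
print(f"UA={ua:.4f} PA={pa:.4f} F1={f1:.4f}")
```

Because the harmonic mean always lies between its two inputs, a value computed this way can serve as a rough consistency check on a reported UA/PA/F1 triple, provided the paper uses the same definitions.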

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Li, C.; Cui, H.; Tian, X. A Novel CA-RegNet Model for Macau Wetlands Auto Segmentation Based on GF-2 Remote Sensing Images. Appl. Sci. 2023, 13, 12178. https://doi.org/10.3390/app132212178
