RS Transformer: A Two-Stage Region Proposal Using Swin Transformer for Few-Shot Pest Detection in Automated Agricultural Monitoring Systems

Abstract

:1. Introduction

- (1)

- RS Transformer: a novel model based on the region proposal network (RPN), Swin Transformer, and ROI Align, for few-shot detection of pests at different scales.

- (2)

- RGSDD: a new training strategy method named the Randomly Generate Stable Diffusion Dataset is introduced to expand small pest images to effectively classify and detect pests in a few-shot learning scenario.

- (3)

2. Materials and Methods

2.1. Pest Dataset

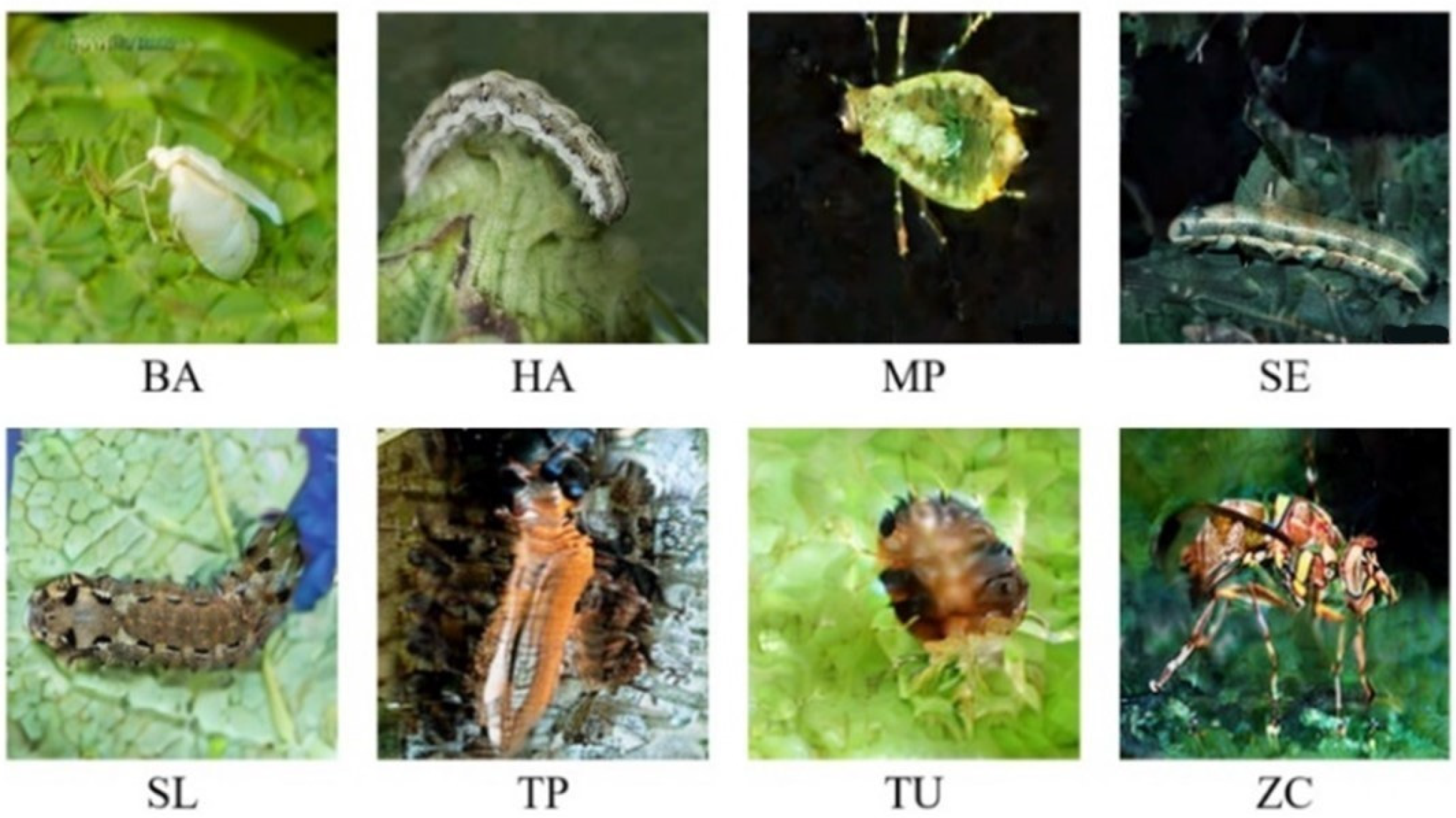

2.1.1. Real Pest Image Dataset

2.1.2. Dataset Generation

2.1.3. Dataset Enhancement

2.2. Framework of the Proposed Method

2.3. RS Transformer

2.3.1. Swin Transformer Backbone

2.3.2. RS Transformer Neck: FPN

2.3.3. RS Transformer Head: RPN, ROI Align

ROI Align

2.4. Experimental Setup

2.5. Evaluation Indicators

2.6. Experimental Baselines

3. Results and Discussion

3.1. Experimental Results and Analysis

3.2. Comparison Results Summary

3.3. Discussion

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Merle, I.; Hipólito, J.; Requier, F. Towards integrated pest and pollinator management in tropical crops. Curr. Opin. Insect Sci. 2022, 50, 100866. [Google Scholar] [CrossRef] [PubMed]

- Kannan, M.; Bojan, N.; Swaminathan, J.; Zicarelli, G.; Hemalatha, D.; Zhang, Y.; Ramesh, M.; Faggio, C. Nanopesticides in agricultural pest management and their environmental risks: A review. Int. J. Environ. Sci. Technol. 2023, 20, 10507–10532. [Google Scholar] [CrossRef]

- Bras, A.; Roy, A.; Heckel, D.G.; Anderson, P.; Karlsson Green, K. Pesticide resistance in arthropods: Ecology matters too. Ecol. Lett. 2022, 25, 1746–1759. [Google Scholar] [CrossRef]

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. Int. J. Comput. Vis. 2018, 127, 74–91. [Google Scholar] [CrossRef]

- Bochkovskiy, A.; Wang, C.-Y.; Liao, H.-Y.M. YOLOv4: Optimal Speed and Accuracy of Object Detection. Electronics 2020, 9, 1719. [Google Scholar] [CrossRef]

- Wang, J.; Chen, Y.; Dong, Z.; Gao, M. Improved YOLOv5 network for real-time multi-scale traffic sign detection. Neural. Comput. Appl. 2023, 35, 7853–7865. [Google Scholar] [CrossRef]

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In Proceedings of the European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 8–16 October 2016; Springer: Cham, Switzerland, 2016; pp. 21–37. [Google Scholar] [CrossRef]

- Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 1440–1448. [Google Scholar] [CrossRef]

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. In Proceedings of the Advances in Neural Information Processing Systems (NeurIPS), Montreal, QC, Canada, 7–12 December 2015; Curran Associates, Inc.: Red Hook, NY, USA, 2015; pp. 91–99. [Google Scholar]

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention Is All You Need. In Proceedings of the Advances in Neural Information Processing Systems (NeurIPS), Long Beach, CA, USA, 4–9 December 2017; Curran Associates, Inc.: Red Hook, NY, USA, 2017; pp. 5998–6008. [Google Scholar]

- Brasoveanu, A.M.P.; Andonie, R. Visualizing Transformers for NLP: A Brief Survey. In Proceedings of the 2020 24th International Conference Information Visualisation (IV), Melbourne, Australia, 7–11 September 2020; IEEE: Melbourne, Australia, 2020; pp. 270–279. [Google Scholar]

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image Is Worth 16x16 Words: Transformers for Image Recognition at Scale 2021. arXiv 2021, arXiv:2010.11929. [Google Scholar] [CrossRef]

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical Vision Transformer Using Shifted Windows 2021. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 17–21 October 2021. [Google Scholar] [CrossRef]

- Li, W.; Zheng, T.; Yang, Z.; Li, M.; Sun, C.; Yang, X. Classification and Detection of Insects from Field Images Using Deep Learning for Smart Pest Management: A Systematic Review. Ecological. Inform. 2021, 66, 101460. [Google Scholar] [CrossRef]

- Sohl-Dickstein, J.; Weiss, E.A.; Maheswaranathan, N.; Ganguli, S. Deep Unsupervised Learning Using Nonequilibrium Thermodynamics 2015. In Proceedings of the International Conference on Machine Learning, Lille, France, 6–11 July 2015. [Google Scholar] [CrossRef]

- Yang, L.; Zhang, Z.; Song, Y.; Hong, S.; Xu, R.; Zhao, Y.; Zhang, W.; Cui, B.; Yang, M.-H. Diffusion Models: A Comprehensive Survey of Methods and Applications. ACM Comput. Surv. 2022, 10, 123–145. [Google Scholar] [CrossRef]

- Aggarwal, A.; Mittal, M.; Battineni, G. Generative Adversarial Network: An Overview of Theory and Applications. Int. J. Inf. Manag. Data Insights 2021, 1, 100004. [Google Scholar] [CrossRef]

- Ho, J.; Jain, A.; Abbeel, P. Denoising Diffusion Probabilistic Models 2020. arXiv 2020, arXiv:2006.11239. [Google Scholar] [CrossRef]

- Dhariwal, P.; Nichol, A. Diffusion Models Beat GANs on Image Synthesis. Adv. Neural Inf. Process. Syst. 2021, 34, 8780–8794. [Google Scholar] [CrossRef]

- Ramesh, A.; Dhariwal, P.; Nichol, A.; Chu, C.; Chen, M. Hierarchical Text-Conditional Image Generation with CLIP Latents. arXiv 2022, arXiv:2204.06125. [Google Scholar]

- Liu, L.; Wang, R.; Xie, C.; Yang, P.; Wang, F.; Sudirman, S.; Liu, W. PestNet: An End-to-End Deep Learning Approach for Large-Scale Multi-Class Pest Detection and Classification. IEEE Access 2019, 7, 45301–45312. [Google Scholar] [CrossRef]

- Jiao, L.; Dong, S.; Zhang, S.; Xie, C.; Wang, H. AF-RCNN: An Anchor-Free Convolutional Neural Network for Multi-Categories Agricultural Pest Detection. Comput. Electron. Agric. 2020, 174, 105522. [Google Scholar] [CrossRef]

- Pattnaik, G.; Shrivastava, V.K.; Parvathi, K. Transfer Learning-Based Framework for Classification of Pest in Tomato Plants. Appl. Artif. Intell. 2020, 34, 981–993. [Google Scholar] [CrossRef]

- Lee, S.; Lin, S.; Chen, S. Identification of Tea Foliar Diseases and Pest Damage under Practical Field Conditions Using a Convolutional Neural Network. Plant Pathol. 2020, 69, 1731–1739. [Google Scholar] [CrossRef]

- Chen, C.-J.; Huang, Y.-Y.; Li, Y.-S.; Chen, Y.-C.; Chang, C.-Y.; Huang, Y.-M. Identification of Fruit Tree Pests with Deep Learning on Embedded Drone to Achieve Accurate Pesticide Spraying. IEEE Access 2021, 9, 21986–21997. [Google Scholar] [CrossRef]

- Wang, R.; Jiao, L.; Xie, C.; Chen, P.; Du, J.; Li, R. S-RPN: Sampling-Balanced Region Proposal Network for Small Crop Pest Detection. Comput. Electron. Agric. 2021, 187, 106290. [Google Scholar] [CrossRef]

- Peng, Y.; Wang, Y. CNN and Transformer Framework for Insect Pest Classification. Ecol. Inform. 2022, 72, 101846. [Google Scholar] [CrossRef]

- Ullah, N.; Khan, J.A.; Alharbi, L.A.; Raza, A.; Khan, W.; Ahmad, I. An Efficient Approach for Crops Pests Recognition and Classification Based on Novel DeepPestNet Deep Learning Model. IEEE Access 2022, 10, 73019–73032. [Google Scholar] [CrossRef]

- Zhu, X.; Su, W.; Lu, L.; Li, B.; Wang, X.; Dai, J. Deformable DETR: Deformable Transformers for End-to-End Object Detection 2021. arXiv 2020, arXiv:2010.04159. [Google Scholar] [CrossRef]

- Letourneau, D.K.; Goldstein, B. Pest Damage and Arthropod Community Structure in Organic vs. Conventional Tomato Production in California. Arthropod. Community Struct. J. Appl. Ecol. 2001, 38, 557–570. [Google Scholar] [CrossRef]

- Cubuk, E.D.; Zoph, B.; Mane, D.; Vasudevan, V.; Le, Q.V. AutoAugment: Learning Augmentation Strategies from Data. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 5 June 2019; IEEE: Long Beach, CA, USA, 2019; pp. 113–123. [Google Scholar]

- Thenmozhi, K.; Srinivasulu Reddy, U. Crop Pest Classification Based on Deep Convolutional Neural Network and Transfer Learning. Comput. Electron. Agric. 2019, 164, 104906. [Google Scholar] [CrossRef]

- Gong, T.; Chen, K.; Wang, X.; Chu, Q.; Zhu, F.; Lin, D.; Yu, N.; Feng, H. Temporal ROI Align for Video Object Recognition. AAAI 2021, 35, 1442–1450. [Google Scholar] [CrossRef]

- Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-End Object Detection with Transformers. In Computer Vision–ECCV 2020; Vedaldi, A., Bischof, H., Brox, T., Frahm, J.-M., Eds.; Lecture Notes in Computer Science; Springer International Publishing: Cham, Switzerland, 2020; Volume 12346, pp. 213–229. ISBN 978-3-030-58451-1. [Google Scholar]

- Setiawan, A.; Yudistira, N.; Wihandika, R.C. Large Scale Pest Classification Using Efficient Convolutional Neural Network with Augmentation and Regularizers. Comput. Electron. Agric. 2022, 200, 107204. [Google Scholar] [CrossRef]

- Liu, H.; Zhan, Y.; Xia, H.; Mao, Q.; Tan, Y. Self-Supervised Transformer-Based Pre-Training Method Using Latent Semantic Masking Auto-Encoder for Pest and Disease Classification. Comput. Electron. Agric. 2022, 203, 107448. [Google Scholar] [CrossRef]

- Huang, J.; Fang, Y.; Wu, Y.; Wu, H.; Gao, Z.; Li, Y.; Ser, J.D.; Xia, J.; Yang, G. Swin Transformer for Fast MRI. Neurocomputing 2022, 493, 281–304. [Google Scholar] [CrossRef]

- Lin, A.; Chen, B.; Xu, J.; Zhang, Z.; Lu, G.; Zhang, D. DS-TransUNet: Dual Swin Transformer U-Net for Medical Image Segmentation. IEEE Trans. Instrum. Meas. 2022, 71, 1–15. [Google Scholar] [CrossRef]

- He, X.; Zhou, Y.; Zhao, J.; Zhang, D.; Yao, R.; Xue, Y. Swin Transformer Embedding UNet for Remote Sensing Image Semantic Segmentation. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–15. [Google Scholar] [CrossRef]

- Dong, S.; Wang, R.; Liu, K.; Jiao, L.; Li, R.; Du, J.; Teng, Y.; Wang, F. CRA-Net: A Channel Recalibration Feature Pyramid Network for Detecting Small Pests. Comput. Electron. Agric. 2021, 191, 106518. [Google Scholar] [CrossRef]

| Year | Author Reference | Pest | Module | Performance | Generated Dataset |

|---|---|---|---|---|---|

| 2019 | Liu et al. [21] | 16 butterfly species | CNN | mAP (75.46%) | × |

| 2020 | Jiao et al. [22] | 24 agricultural pests | AF-RCNN | mAP (56.4%) | × |

| 2020 | Pattnaik et al. [23] | 10 pest species | Deep CNN | Accuracy (88.83%) | × |

| 2020 | Lee et al. [24] | Leaf miner, tea thrip, tea leaf roller, and tea mosquito bug (TMB) | Faster RCNN | mAP (66.02%) | × |

| 2021 | Chen et al. [25] | T. papillosa | YOLOv3 | mAP (0.93%) | × |

| 2021 | Wang et al. [26] | Agricultural pests | RPN | mAP (78.7%) | × |

| 2022 | Peng et al. [27] | 102 pests | CNN, Transformer | Accuracy (74.90%) | × |

| 2022 | ULLAH et al. [28] | 9 crop pests | CNN | Accuracy (100%) | × |

| 2023 | Our method | 8 agricultural pests | RS Transformer | mAP (90.18%) | √ |

| Dataset | Number of Images |

|---|---|

| Captured images | 400 |

| Images from other datasets | 609 |

| Generated images | 512 |

| Enhanced images | 36,504 |

| Dataset | Real Images | Generated Images |

|---|---|---|

| Primary | 24,216 | 0 |

| 10% RGSDD | 24,216 | 1229 |

| 20% RGSDD | 24,216 | 2458 |

| 30% RGSDD | 24,216 | 3686 |

| 40% RGSDD | 24,216 | 4915 |

| 50% RGSDD | 24,216 | 12,288 |

| Model | Backbone | Parameters (M) |

|---|---|---|

| SSD | VGG16 | 28.32 |

| Faster R-CNN | VGG16 | 138 |

| YOLOv3 | Darknet-53 | 64.46 |

| YOLOv4 | CSPDarknet53 | 5.55 |

| YOLOv5m | CSPDarknet53 | 20.66 |

| YOLOv8 | C2f | 30.13 |

| DETR | ResNet-50 | 40.34 |

| RS Transformer | Swin Transformer | 30.17 |

| Model | mAP (%) | F1 Score (%) | Recall (%) | Precision (%) | Accuracy (%) | mDT (ms) |

|---|---|---|---|---|---|---|

| SSD | 76.91 | 67.62 | 70.12 | 66.23 | 77.11 | 22.9 |

| Faster R-CNN | 72.65 | 65.57 | 69.31 | 67.10 | 73.52 | 24.5 |

| YOLOv3 | 60.38 | 52.38 | 57.78 | 53.64 | 60.32 | 17.7 |

| YOLOv4 | 76.31 | 69.55 | 74.97 | 68.91 | 76.99 | 10.7 |

| YOLOv5m | 80.29 | 75.58 | 79.14 | 77.33 | 79.35 | 13.6 |

| YOLOv8 | 84.72 | 80.32 | 82.11 | 79.59 | 83.49 | 9.8 |

| DETR | 85.56 | 81.18 | 82.82 | 80.43 | 86.12 | 19.2 |

| RS Transformer | 90.18 | 85.89 | 87.31 | 89.91 | 90.08 | 20.1 |

| Model | BA | HA | MP | SE | SL | TP | TU | ZC |

|---|---|---|---|---|---|---|---|---|

| SSD | 77.29 | 73.12 | 77.48 | 73.88 | 79.91 | 80.21 | 78.26 | 74.08 |

| Faster R-CNN | 75.89 | 69.26 | 69.76 | 73.81 | 71.33 | 74.75 | 70.10 | 73.02 |

| YOLOv3 | 57.20 | 63.69 | 61.51 | 60.66 | 62.63 | 58.93 | 58.00 | 64.05 |

| YOLOv4 | 72.55 | 74.47 | 75.40 | 79.11 | 74.24 | 76.13 | 80.05 | 78.51 |

| YOLOv5m | 84.22 | 79.51 | 77.17 | 79.57 | 80.79 | 79.73 | 83.06 | 81.16 |

| YOLOv8 | 81.53 | 88.45 | 82.18 | 84.44 | 85.56 | 84.73 | 83.95 | 83.21 |

| DETR | 83.53 | 82.07 | 87.33 | 85.61 | 87.62 | 83.23 | 88.52 | 85.52 |

| RS Transformer | 91.33 | 91.46 | 88.83 | 86.21 | 92.63 | 89.44 | 87.74 | 91.92 |

| Model | Percentage | mAP (%) | F1 Score (%) | Recall (%) | mDT (ms) |

|---|---|---|---|---|---|

| RS Transformer | 0% | 90.18 | 85.89 | 87.31 | 20.1 |

| 10% | 90.98 | 85.13 | 83.53 | 20.1 | |

| 20% | 93.64 | 86.75 | 90.42 | 20.1 | |

| 30% | 95.71 | 94.82 | 92.47 | 20.2 | |

| 40% | 95.56 | 90.67 | 93.10 | 20.2 | |

| 50% | 94.98 | 91.03 | 93.06 | 20.2 |

| Model | Percentage | mAP (%) | F1 Score (%) | Recall (%) | mDT (ms) |

|---|---|---|---|---|---|

| Faster R-CNN | 0% | 72.65 | 65.57 | 69.31 | 24 |

| 10% | 75.07 | 68.83 | 69.73 | 24 | |

| 20% | 73.47 | 67.26 | 70.62 | 24 | |

| 30% | 73.72 | 67.37 | 74.84 | 24 | |

| 40% | 71.80 | 69.78 | 72.39 | 24.1 | |

| 50% | 73.13 | 68.29 | 70.47 | 24.1 |

| Model | Percentage | mAP (%) | F1 Score (%) | Recall (%) | mDT (ms) |

|---|---|---|---|---|---|

| YOLOv5m | 0% | 80.29 | 75.58 | 76.14 | 13.6 |

| 10% | 83.96 | 74.72 | 76.48 | 13.6 | |

| 20% | 85.43 | 75.90 | 81.91 | 13.6 | |

| 30% | 82.31 | 76.24 | 78.38 | 13.6 | |

| 40% | 84.37 | 76.12 | 79.82 | 13.7 | |

| 50% | 75.53 | 70.41 | 73.76 | 13.7 |

| Model | Percentage | mAP (%) | F1 Score (%) | Recall (%) | mDT (ms) |

|---|---|---|---|---|---|

| YOLOv8 | 0% | 84.72 | 80.32 | 82.11 | 9.8 |

| 10% | 87.38 | 75.77 | 72.31 | 9.8 | |

| 20% | 88.42 | 85.17 | 84.78 | 9.8 | |

| 30% | 88.51 | 85.89 | 85.31 | 9.8 | |

| 40% | 82.32 | 81.76 | 80.11 | 9.9 | |

| 50% | 75.35 | 70.32 | 71.58 | 9.9 |

| Model | Percentage | mAP (%) | F1 Score (%) | Recall (%) | mDT (ms) |

|---|---|---|---|---|---|

| DETR | 0% | 85.56 | 81.18 | 82.82 | 20.1 |

| 10% | 85.94 | 83.10 | 80.62 | 20.1 | |

| 20% | 86.37 | 82.99 | 84.67 | 20.1 | |

| 30% | 87.71 | 86.75 | 85.72 | 20.2 | |

| 40% | 89.92 | 85.02 | 87.89 | 20.2 | |

| 50% | 88.90 | 87.19 | 85.97 | 20.2 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wu, T.; Shi, L.; Zhang, L.; Wen, X.; Lu, J.; Li, Z. RS Transformer: A Two-Stage Region Proposal Using Swin Transformer for Few-Shot Pest Detection in Automated Agricultural Monitoring Systems. Appl. Sci. 2023, 13, 12206. https://doi.org/10.3390/app132212206

Wu T, Shi L, Zhang L, Wen X, Lu J, Li Z. RS Transformer: A Two-Stage Region Proposal Using Swin Transformer for Few-Shot Pest Detection in Automated Agricultural Monitoring Systems. Applied Sciences. 2023; 13(22):12206. https://doi.org/10.3390/app132212206

Chicago/Turabian StyleWu, Tengyue, Liantao Shi, Lei Zhang, Xingkai Wen, Jianjun Lu, and Zhengguo Li. 2023. "RS Transformer: A Two-Stage Region Proposal Using Swin Transformer for Few-Shot Pest Detection in Automated Agricultural Monitoring Systems" Applied Sciences 13, no. 22: 12206. https://doi.org/10.3390/app132212206