Proposal and Evaluation of a Robot to Improve the Cognitive Abilities of Novice Baseball Spectators Using a Method for Selecting Utterances Based on the Game Situation

Abstract

1. Introduction

2. Related Work

2.1. Baseball Spectator Assistance

2.2. Cognitive Spectating Ability

- Individual game intelligence cognitive ability: The ability to focus on individual skills, understand the meaning of individual skills and movements, and analyze and evaluate the game.

- Team-play intelligence cognitive ability: The ability to analyze and evaluate the tactical aspects of the movements of all team members.

- Psychological empathy: The ability to sense and empathize with players’ feelings, such as joy, anger, sadness, and other emotions.

- Physical empathy: The ability to understand and empathize with the bodily sensations a player experiences while moving.

- Esthetic intuition: The ability to appreciate the excellence of a play, such as the beauty of individual skill and form.

- Emphasis on fair play: The ability to respect the values associated with fair play.

3. Baseball Spectator Assistance Robot

3.1. Definition of Utterance Categories and Rules

- Confirmation: Utterances that confirm the name of the player currently in action or the details of that player’s play.

- Information: Utterances about the abilities or strengths of the player currently in action.

- Prediction: Utterances that predict future moves of the player.

- Emotion: Utterances that express emotion toward players’ plays.

- Evaluation: Utterances that evaluate players’ plays.

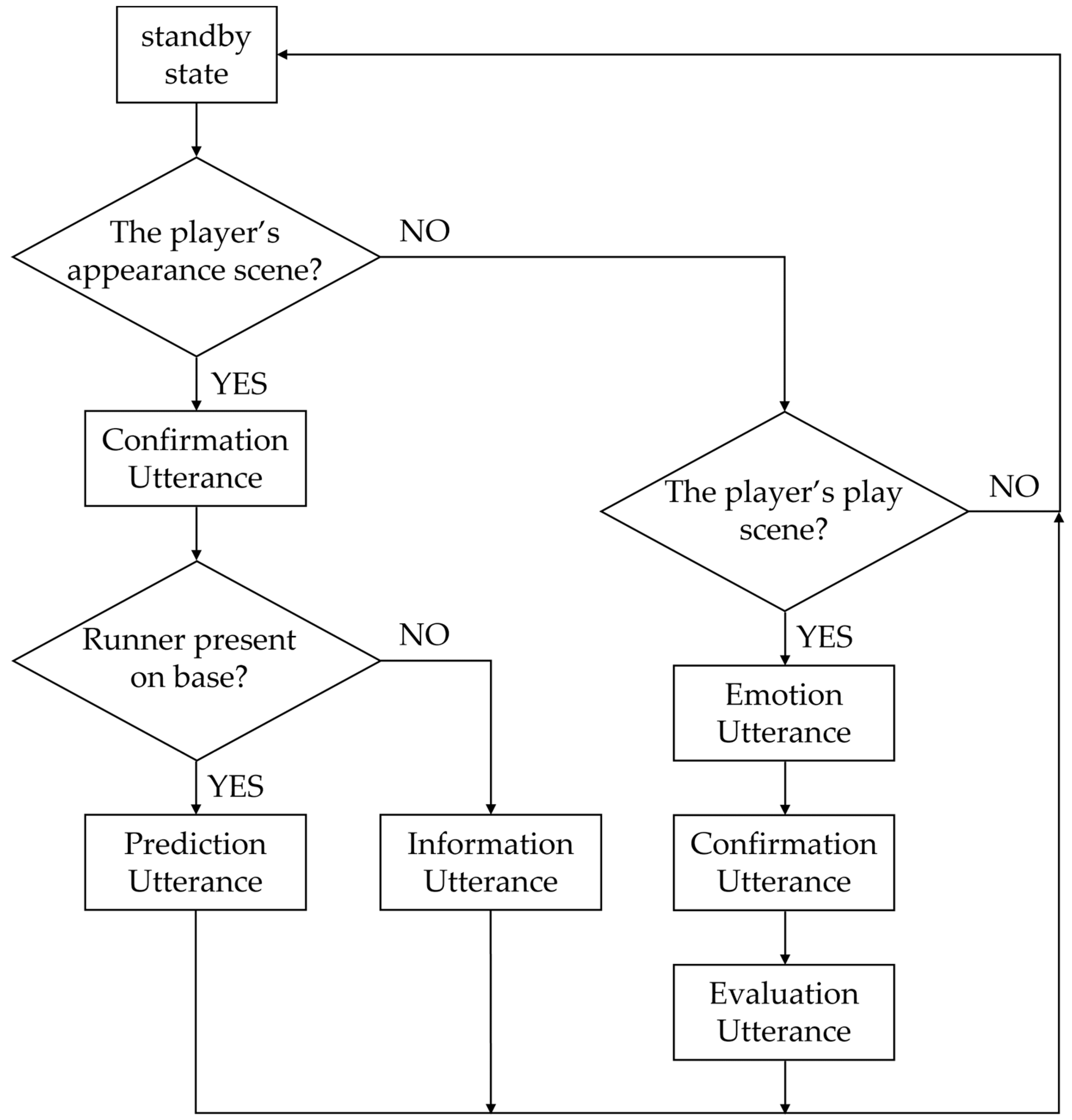

- If there are no runners on base in the player’s appearance scene, the robot will make confirmation and information utterances.

- If there are runners on base in the player’s appearance scene, the robot will make confirmation and prediction utterances.

- In the player’s play scene, the robot will make emotion, confirmation, and evaluation utterances, in that order.
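The three rules above amount to a small lookup from game situation to utterance categories. The Python sketch below encodes them. The `Scene` enum and `select_categories` function are hypothetical names. The speaking order within the appearance scene (confirmation first) is an assumption, because the rules name the categories there but not their order.

```python
from enum import Enum


class Scene(Enum):
    APPEARANCE = "appearance"  # a pitcher takes the mound or a batter steps in
    PLAY = "play"              # the player has just made a play


def select_categories(scene: Scene, runners_on_base: bool) -> list[str]:
    """Return utterance categories in speaking order (hypothetical helper)."""
    if scene is Scene.APPEARANCE:
        # Assumed order: confirmation first, then a category chosen by the runner situation.
        return ["Confirmation", "Prediction" if runners_on_base else "Information"]
    # Play scene: runners do not matter; react first, then confirm, then evaluate.
    return ["Emotion", "Confirmation", "Evaluation"]


print(select_categories(Scene.APPEARANCE, runners_on_base=False))
# ['Confirmation', 'Information']
```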

3.2. Utterance Generation Methods for Each Category

3.2.1. Confirmation

3.2.2. Information

3.2.3. Prediction and Evaluation

3.2.4. Emotion

- Procedure 1: When the game reaches the player’s play scene, capture the tweets posted by NPB spectators within 10 s of the play.

- Procedure 2: Classify the tweets as “Emotional” or “Not_Emotional.”

- Procedure 3: Further classify the “Emotional” tweets as “Positive” or “Negative.”

- Procedure 4: If the player’s play benefits the team the robot is cheering for, select the utterance from the tweets classified as “Positive”; if the play is detrimental to that team, select it from the tweets classified as “Negative.”
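Procedures 1 to 4 form a filter-then-select pipeline. The Python sketch below is a hypothetical illustration, not the authors' implementation, and it rests on several assumptions. Tweets arrive with timestamps on the same clock as the play. "Within 10 s" is read as posted 0 to 10 s after the play. Each classifier is a callable returning a probability, and toy stand-ins replace the fine-tuned models. The threshold is 0.5. The procedure does not say how to choose among several candidates, so the sketch speaks the one with the most confident polarity label.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Tweet:
    text: str
    posted_at: float  # seconds, on the same clock as the play timestamp


def select_emotion_utterance(
    tweets: list[Tweet],
    play_time: float,
    favorable: bool,
    p_emotional: Callable[[str], float],
    p_positive: Callable[[str], float],
    window_s: float = 10.0,
) -> Optional[str]:
    """Pick an emotion utterance from live tweets (hypothetical helper)."""
    # Procedure 1: keep tweets posted within window_s seconds after the play.
    recent = [t for t in tweets if 0.0 <= t.posted_at - play_time <= window_s]
    # Procedure 2: keep tweets the first classifier labels "Emotional".
    emotional = [t for t in recent if p_emotional(t.text) >= 0.5]
    # Procedures 3-4: label each tweet Positive/Negative and keep the polarity
    # that matches how the play affects the robot's team.
    candidates = []
    for t in emotional:
        p_pos = p_positive(t.text)
        if favorable and p_pos >= 0.5:
            candidates.append((p_pos, t.text))
        elif not favorable and p_pos < 0.5:
            candidates.append((1.0 - p_pos, t.text))
    if not candidates:
        return None
    # Assumption: speak the tweet whose polarity label is most confident.
    return max(candidates)[1]


# Toy stand-ins for the two fine-tuned classifiers (hypothetical).
def toy_emotional(text: str) -> float:
    return 0.1 if text.startswith("Lineup") else 0.9


def toy_positive(text: str) -> float:
    return 0.9 if "Nice" in text else 0.1


tweets = [
    Tweet("Nice batting!", 3.0),
    Tweet("Lineup posted", 4.0),
    Tweet("Noooo", 5.0),
    Tweet("Nice catch earlier", 30.0),  # outside the 10 s window
]
print(select_emotion_utterance(tweets, 0.0, True, toy_emotional, toy_positive))
# Nice batting!
```

With `favorable=False` the same call returns "Noooo", the only emotional tweet labeled Negative inside the window.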

- Remove hashtags (#baystars, #DeNA, etc.).

- Remove URLs (http://~).

- Remove emoticons and emojis.

- Remove auxiliary symbols other than exclamation points, commas, periods, and hyphens.

- Convert all English letters and numbers to half-width.

- Collapse each run of multiple consecutive identical characters other than Hiragana or Katakana into a single character.
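The preprocessing rules above can be implemented with a few regular expressions and Unicode category checks. The Python sketch below uses hypothetical function and constant names and makes several assumptions:

- Emoji and "auxiliary symbols" are both identified by Unicode general category (P* or S*). Text-based emoticons therefore lose their punctuation but keep any letters they contain.
- The Japanese comma and period (、。) are kept along with ! , . -.
- The last rule is read as collapsing runs of the same character.
- ASCII digits are exempted from that rule, as an extra assumption, so that numbers such as "100" survive.

```python
import re
import unicodedata

HASHTAG_RE = re.compile(r"[#＃]\S+")  # e.g. #baystars, #DeNA
URL_RE = re.compile(r"https?://\S+")
# Punctuation kept on purpose: ! , . - and their full-width/Japanese forms.
KEEP = set("!,.-！，．－、。")
# Emoji building blocks outside the symbol categories:
# zero-width joiner, variation selector-16, combining keycap.
EMOJI_GLUE = {"\u200d", "\ufe0f", "\u20e3"}
# A run of the same character, unless it is Hiragana/Katakana (U+3040-U+30FF)
# or an ASCII digit (assumption: keeps numbers such as "100" intact).
REPEAT_RE = re.compile(r"([^\u3040-\u30ff0-9])\1+")


def to_half_width_alnum(text: str) -> str:
    """Map full-width 0-9, A-Z, a-z (U+FF10-FF19, FF21-FF3A, FF41-FF5A) to ASCII."""
    out = []
    for ch in text:
        code = ord(ch)
        if 0xFF10 <= code <= 0xFF19 or 0xFF21 <= code <= 0xFF3A or 0xFF41 <= code <= 0xFF5A:
            out.append(chr(code - 0xFEE0))
        else:
            out.append(ch)
    return "".join(out)


def preprocess(tweet: str) -> str:
    text = HASHTAG_RE.sub("", tweet)
    text = URL_RE.sub("", text)
    # Drop emoji and every punctuation (P*) or symbol (S*) character not in KEEP.
    text = "".join(
        ch
        for ch in text
        if ch not in EMOJI_GLUE and (ch in KEEP or unicodedata.category(ch)[0] not in "PS")
    )
    text = to_half_width_alnum(text)
    text = REPEAT_RE.sub(r"\1", text)
    return text.strip()


print(preprocess("ナイスバッティング！！！ #baystars https://t.co/abc 😀"))
print(preprocess("ＳＯＴＯ１００号ｗｗｗ"))
```

The first call yields "ナイスバッティング！" and the second "SOTO100号w". Hiragana runs such as "あああ" are left untouched.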

- Batch size: 16

- Dropout rate: 1.93 × 10⁻¹

- Learning rate: 3.98 × 10⁻⁵

- Number of epochs: 10
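The reference list cites BERT and the Tohoku University Japanese BERT repository, but the training code is not described. If the classifiers are Japanese BERT models fine-tuned with Hugging Face transformers, the four settings above could be carried as the small config below. Mapping the dropout rate to `hidden_dropout_prob` is an assumption. `per_device_train_batch_size`, `learning_rate`, and `num_train_epochs` are the corresponding `TrainingArguments` fields. The sketch only builds keyword dictionaries and does not import transformers.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FineTuneConfig:
    batch_size: int = 16
    dropout_rate: float = 1.93e-1  # 0.193
    learning_rate: float = 3.98e-5
    num_epochs: int = 10


def to_hf_kwargs(cfg: FineTuneConfig) -> tuple[dict, dict]:
    """Split the settings into keyword arguments for Hugging Face transformers.

    Assumption: dropout goes to the BERT config (hidden_dropout_prob) and the
    remaining settings go to TrainingArguments. Nothing here imports transformers.
    """
    model_kwargs = {"hidden_dropout_prob": cfg.dropout_rate}
    training_kwargs = {
        "per_device_train_batch_size": cfg.batch_size,
        "learning_rate": cfg.learning_rate,
        "num_train_epochs": cfg.num_epochs,
    }
    return model_kwargs, training_kwargs


model_kwargs, training_kwargs = to_hf_kwargs(FineTuneConfig())
print(model_kwargs)
print(training_kwargs)
```

With transformers installed, the dictionaries could be passed as `BertForSequenceClassification.from_pretrained(<checkpoint>, num_labels=2, **model_kwargs)` and `TrainingArguments(output_dir=..., **training_kwargs)`. The checkpoint name is left open because the text does not state it.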

- Procedure 1: Compare the play results associated with previously collected tweets with the current play result, and extract the tweets whose play results match.

- Procedure 2: Classify the tweets as “Emotional” or “Not_Emotional.”

- Procedure 3: Further classify the “Emotional” tweets as “Positive” or “Negative.”

- Procedure 4: Compare the game situation at the time each tweet was posted with the current game situation, and add 1 to the classification model’s output value for each matching situation item.

- Procedure 5: If the player’s play benefits the team the robot is cheering for, select the utterance from the tweets classified as “Positive”; if the play is detrimental to that team, select it from the tweets classified as “Negative.”
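Procedure 4 is what distinguishes this method from the live-tweet method. The Python sketch below is one possible reading, not the authors' code. It assumes that:

- the "+1" is added to the Positive/Negative classifier's probability for the tweet's label;
- the game situation is a dictionary of items (the keys `inning`, `outs`, and `runners` are hypothetical);
- the highest-scoring tweet is spoken.

The polarity confidence lies between 0.5 and 1, while each match adds a full point. As a result, situation similarity always dominates and classifier confidence only breaks ties.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class PastTweet:
    text: str
    play_result: str  # e.g. "strikeout" (hypothetical label set)
    situation: dict = field(default_factory=dict)  # e.g. {"inning": 9, "outs": 2}


def select_past_tweet(
    past: list[PastTweet],
    play_result: str,
    situation: dict,
    favorable: bool,
    p_emotional: Callable[[str], float],
    p_positive: Callable[[str], float],
) -> Optional[str]:
    best: Optional[tuple[float, str]] = None
    for t in past:
        # Procedure 1: keep tweets about the same play result.
        if t.play_result != play_result:
            continue
        # Procedure 2: drop tweets classified as Not_Emotional.
        if p_emotional(t.text) < 0.5:
            continue
        # Procedure 3: Positive/Negative label and its confidence.
        p_pos = p_positive(t.text)
        is_positive = p_pos >= 0.5
        # Procedure 5 filter: Positive if the play helps the robot's team, else Negative.
        if is_positive != favorable:
            continue
        confidence = p_pos if is_positive else 1.0 - p_pos
        # Procedure 4: +1 for every situation item equal to the current one.
        matches = sum(1 for key, value in situation.items() if t.situation.get(key) == value)
        score = confidence + matches
        if best is None or score > best[0]:
            best = (score, t.text)
    return None if best is None else best[1]


# Toy stand-ins for the fine-tuned classifiers (hypothetical).
def toy_emotional(text: str) -> float:
    return 0.9


def toy_positive(text: str) -> float:
    return 0.95 if "Love" in text else 0.7


past = [
    PastTweet("Strikeout! Huge!", "strikeout", {"inning": 9, "outs": 2, "runners": "third"}),
    PastTweet("K! Love it", "strikeout", {"inning": 1, "outs": 0, "runners": "none"}),
    PastTweet("What a homer", "home run", {"inning": 9, "outs": 2, "runners": "third"}),
]
now = {"inning": 9, "outs": 2, "runners": "third"}
print(select_past_tweet(past, "strikeout", now, True, toy_emotional, toy_positive))
# Strikeout! Huge!
```

"K! Love it" has the higher classifier confidence (0.95 versus 0.7) but matches none of the three situation items. It therefore scores 0.95 against 3.7.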

4. Experiment

4.1. Condition

4.2. Procedure

4.3. Results

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Saito, T. A conceptual work-frame of spectator behavior: From a management point of view of cultural creativity of spectator sports. Bull. Jpn. Women’s Coll. Phys. Educ. 2013, 43, 117–128.

- Saito, T.; Daigo, E.; Deguchi, J.; Takaoka, A.; Nakaji, K.; Shimazaki, M.; Sano, M.; Kawasaki, T.; Nagano, F.; Yanagisawa, K. Explication of cognitive capability on sports spectating. Jpn. J. Manag. Phys. Educ. Sports 2020, 33, 1–19.

- Mahmood, Z.; Ali, T.; Muhammad, N.; Bibi, N.; Shahzad, I.; Azmat, S. EAR: Enhanced augmented reality system for sports entertainment applications. KSII Trans. Internet Inf. Syst. 2017, 11, 6069–6091.

- Kim, D.; Ko, Y.J. The impact of virtual reality (VR) technology on sport spectators’ flow experience and satisfaction. Comput. Hum. Behav. 2019, 93, 346–356.

- Hagio, Y.; Kamimura, M.; Hoshi, Y.; Kaneko, Y.; Yamamoto, M. TV-watching robot: Toward enriching media experience and activating human communication. SMPTE Mot. Imaging J. 2022, 131, 50–58.

- Nishimura, S.; Kanbara, M.; Hagita, N. Atmosphere Sharing with TV Chat Agents for Increase of User’s Motivation for Conversation. In Human Aspects of IT for the Aged Population. Design for the Elderly and Technology Acceptance; Zhou, J., Salvendy, G., Eds.; Springer International Publishing: Cham, Switzerland, 2019; pp. 482–492.

- Yamamoto, F.; Ayedoun, E.; Tokumaru, M. Human-robot interaction environment to enhance the sense of presence in remote sports watching. In Proceedings of the 2022 Joint 12th International Conference on Soft Computing and Intelligent Systems and 23rd International Symposium on Advanced Intelligent Systems (SCIS & ISIS), Ise, Japan, 29 November 2022; pp. 1–5.

- Sumino, M.; Yamazaki, T.; Takeshita, S.; Shiokawa, K. How do sports viewers watch a game? A trial mainly on conversation analysis. Jpn. J. Sports Ind. 2010, 20, 243–252.

- Bebie, T.; Bieri, H. A video-based 3D-reconstruction of soccer games. Comput. Graph. Forum 2000, 19, 391–400.

- Koyama, T.; Kitahara, I.; Ohta, Y. Live mixed-reality 3D video in soccer stadium. In Proceedings of the 2nd IEEE and ACM International Symposium on Mixed and Augmented Reality, Tokyo, Japan, 7–10 October 2003; pp. 178–186.

- Inamoto, N.; Saito, H. Free viewpoint video synthesis and presentation of sporting events for mixed reality entertainment. In Proceedings of the 2004 ACM SIGCHI International Conference on Advances in Computer Entertainment Technology, Singapore, 3–5 June 2004; pp. 42–50.

- Matsui, K.; Iwase, M.; Agata, M.; Tanaka, T.; Ohnishi, N. Soccer image sequence computed by a virtual camera. In Proceedings of the 1998 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Santa Barbara, CA, USA, 25 June 1998.

- Liang, D.; Liu, Y.; Huang, Q.; Zhu, G.; Jiang, S.; Zhang, Z.; Gao, W. Video2Cartoon: Generating 3D cartoon from broadcast soccer video. In Proceedings of the 13th ACM International Conference on Multimedia, Singapore, 6–11 November 2005.

- Kammann, T.D.; Olaizola, I.G.; Barandiaran, I. Interactive augmented reality in digital broadcasting environments. J. Comp. Inf. Syst. 2006, 2, 881.

- Han, J.; Farin, D.; de With, P. A mixed-reality system for broadcasting sports video to mobile devices. IEEE Multimed. 2011, 18, 72–84.

- Lee, S.-O.; Ahn, S.C.; Hwang, J.-I.; Kim, H.-G. A vision-based mobile augmented reality system for baseball games. In Proceedings of the Virtual and Mixed Reality - New Trends: International Conference, Virtual and Mixed Reality, Orlando, FL, USA, 9–14 July 2011; pp. 61–68.

- Bielli, S.; Harris, C.G. A mobile augmented reality system to enhance live sporting events. In Proceedings of the 6th Augmented Human International Conference, Singapore, 9–11 March 2015; pp. 141–144.

- Mizushina, Y.; Fujiwara, W.; Sudou, T.; Fernando, C.L.; Minamizawa, K.; Tachi, S. Interactive instant replay: Sharing sports experience using 360-degrees spherical images and haptic sensation based on the coupled body motion. In Proceedings of the 6th Augmented Human International Conference, Singapore, 9–11 March 2015; pp. 227–228.

- Weed, M. Exploring the sport spectator experience: Virtual football spectatorship in the pub. Soccer Soc. 2008, 9, 189–197.

- Tanaka, K.; Nakashima, H.; Noda, I.; Hasida, K. MIKE: An automatic commentary system for soccer. In Proceedings of the International Conference on Multi Agent Systems, Paris, France, 3–7 July 1998.

- Tanaka-Ishii, K.; Hasida, K.; Noda, I. Reactive content selection in the generation of real-time soccer commentary. In Proceedings of the 36th Annual Meeting of the Association for Computational Linguistics and 17th International Conference on Computational Linguistics, Volume 2, Montreal, QC, Canada, 10 August 1998.

- Chen, D.L.; Mooney, R.J. Learning to sportscast: A test of grounded language acquisition. In Proceedings of the 25th International Conference on Machine Learning, Helsinki, Finland, 5–9 July 2008; pp. 128–135.

- Zheng, M.; Kudenko, D. Automated event recognition for football commentary generation. Int. J. Gaming Comput. Mediat. Simul. 2010, 2, 67–84.

- Taniguchi, Y.; Feng, Y.; Takamura, H.; Okumura, M. Generating live soccer-match commentary from play data. Proc. AAAI Conf. Artif. Intell. 2019, 33, 7096–7103.

- Kumano, T.; Ichiki, M.; Kurihara, K.; Kaneko, H.; Komori, T.; Shimizu, T.; Seiyama, N.; Imai, A.; Sumiyoshi, H.; Takagi, T. Generation of automated sports commentary from live sports data. In Proceedings of the 2019 IEEE International Symposium on Broadband Multimedia Systems and Broadcasting (BMSB), Jeju, Republic of Korea, 5–7 June 2019; pp. 1–4.

- Kim, B.J.; Choi, Y.S. Automatic baseball commentary generation using deep learning. In Proceedings of the 35th Annual ACM Symposium on Applied Computing, Brno, Czech Republic, 30 March 2020; pp. 1056–1065.

- Chan, C.-Y.; Hui, C.-C.; Siu, W.-C.; Chan, S.-W.; Chan, H.A. To start automatic commentary of soccer game with mixed spatial and temporal attention. In Proceedings of the TENCON 2022 IEEE Region 10 Conference (TENCON), Hong Kong, China, 1–4 November 2022.

- Hayashi, Y.; Matsubara, S. Sentence-style conversion for text-to-speech application. IPSJ SIG Tech. Rep. 2007, 47, 49–54.

- Takami, Y. [Multiview Tactical Diagrams: How to Win in Baseball by Understanding the Right Theories and Controlling the Pitcher-Batter Duel] Maruchi Anguru Senjutsu Zukai Yakyuu no Tatakai Kata Tadashii Seorii Wo Rikai Shite “Toushu Tai Dasha” Wo Seisuru; Baseball Magazine Sha: Tokyo, Japan, 2020. (In Japanese)

- Thakur, N. Social Media Mining and Analysis: A Brief Review of Recent Challenges. Information 2023, 14, 484.

- Zubiaga, A. Mining social media for newsgathering: A Review. Online Soc. Netw. Media 2019, 13, 100049.

- Sugimori, M.; Mizuno, M.; Sasahara, K. A social media analysis of collective enthusiasm in Japanese professional baseball. In Proceedings of the 33rd Annual Conference of the Japanese Society for Artificial Intelligence, Niigata, Japan, 4 April 2019.

- Devlin, J.; Chang, M.-W.; Lee, K.; Toutanova, K. BERT: Pretraining of deep bidirectional transformers for language understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Minneapolis, MN, USA, 2–7 June 2019; pp. 4171–4186.

- GitHub - cl-tohoku/bert-japanese: BERT Models for Japanese Text. Available online: https://github.com/cl-tohoku/bert-japanese (accessed on 1 March 2023).

- Fraser, N.M.; Gilbert, G.N. Simulating speech systems. Comput. Speech Lang. 1991, 5, 81–99.

- Sumino, M.; Harada, M. Emotion in sport spectator behavior: Development of a measurement scale and its application to models. Jpn. J. Sports Ind. 2005, 15, 21–36.

- Yoshida, M.; Nakazawa, M.; Inoue, T.; Katagami, T.; Iwamura, S. Repurchase behavior at sporting events: Beyond repurchase intentions. Jpn. J. Sports Manag. 2013, 5, 3–18.

- Mann, H.B.; Whitney, D.R. On a test of whether one of two random variables is stochastically larger than the other. Ann. Math. Stat. 1947, 18, 50–60.

- Wilcoxon, F. Individual comparisons by ranking methods. Biom. Bull. 1945, 1, 80–83.

| Items | Utterance Category |
|---|---|
| 1. Spectating while analyzing the player’s abilities | Information |
| 2. Spectating while knowing the player’s strengths | Information |
| 3. Spectating while predicting the tactics | Prediction |
| 4. Spectating while understanding the meaning of the players’ movements | Evaluation |
| 5. Spectating and distinguishing between technical errors and judgment errors | Evaluation |
| 6. Spectating while paying attention to the player’s play choices | Prediction |

| Items | Utterance Category |
|---|---|
| 1. Spectating while empathizing with the player’s frustration | Emotion |
| 2. Spectating while being moved by the sadness of the player | Emotion |
| 3. Spectating while empathizing with the player’s psychological state | Emotion |
| 4. Spectating while being moved by the sight of players being happy | Emotion |
| 5. Spectating while empathizing with the player’s anger | Emotion |

| Game Situation | Generated Utterance |
|---|---|
| The player’s appearance scene (when the pitcher appears on the mound for the first time in the game). | {player’s name} + “sensyu ga maundo ni agatta ne” (in Japanese); {player’s name} + “has taken the mound, you see” |
| The player’s appearance scene (when a batter enters the batter’s box). | {player’s name} + “sensyu ga daseki ni haitta ne” (in Japanese); {player’s name} + “has stepped up to the plate, you see” |
| The player’s play scene (when the player’s play is advantageous to the robot’s cheering team). | {play content} + “dane” (in Japanese); {play content} + “you see” |
| The player’s play scene (when the player’s play is disadvantageous to the robot’s cheering team). | {play content} + “ka” (in Japanese); {play content} + “huh” |
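The confirmation utterances in the table are fixed templates with a single slot. The minimal Python sketch below shows how they could be filled. The situation keys are hypothetical, and the romanized templates are copied from the table. Joining the slot and the template with a space follows the romanized example in the results table (“Soto sensyu ga daseki ni haitta ne”).

```python
# Hypothetical situation keys; templates are the romanized ones from the table.
TEMPLATES = {
    "pitcher_first_appearance": "{name} sensyu ga maundo ni agatta ne",
    "batter_enters_box": "{name} sensyu ga daseki ni haitta ne",
    "play_advantageous": "{play} dane",
    "play_disadvantageous": "{play} ka",
}


def confirmation_utterance(situation: str, name: str = "", play: str = "") -> str:
    """Fill the confirmation template for the given game situation."""
    return TEMPLATES[situation].format(name=name, play=play)


print(confirmation_utterance("batter_enters_box", name="Soto"))
# Soto sensyu ga daseki ni haitta ne
print(confirmation_utterance("play_disadvantageous", play="sanshin"))
# sanshin ka
```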

| Part of Speech at the End of the Sentence | Phrase Appended to the End of the Sentence | Example of the Appended Phrase |
|---|---|---|
| Particle “ga” + noun or particle | no senshu dayo (He is the player who) | pawā afureru shoubu tsuyoi dageki ga mochiaji + no senshu dayo (in Japanese); He is a player whose strength is powerful, clutch hitting. |
| Sahen-noun (when the sentence includes a word indicating tense) | shita senshu dayo (He is the player who did) | shudou keisoku nagara, koukou jidai ni wa, entou de 115 mētoru, honrui kara nirui e no soukyuu taimu de 1.81 byou o kiroku + shita senshu dayo (in Japanese); He is the player who, by manual measurement in his high school days, recorded 115 m in the long throw and 1.81 s in throwing time from home to second base. |
| Sahen-noun (when the sentence does not include a word indicating tense) | suru senshu dayo (He is the player who does) | daseki de wa hidari ashi o agete kara no furusuingu de, migi houkou o chuuin ni hiroi kakudo ni watatte choudaryoku o hakki + suru senshu dayo (in Japanese); He is a player who, in the batter’s box, lifts his left foot and takes a full swing, hitting for power over a wide range of angles centered on right field. |
| Noun | dayo (He is) | sou kou shu de yakudoukan ni afureru purē ga miryoku no gaiyoushu + dayo (in Japanese); He is an outfielder whose dynamic play is full of excitement in running, hitting, and fielding. |
| Other | senshu dayo (He is the player) | dageki de wa, hikume no dakyuu mo chouda ni dekiru pawā o motsu + senshu dayo (in Japanese); He is a player who has the power to hit low pitches for long hits. |
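The sentence-ending rules depend only on the part of speech of the last one or two words and on whether the sentence contains a word indicating tense. The Python sketch below assumes the sentence has already been tokenized by a Japanese morphological analyzer (for example MeCab). It also assumes the analyzer's tags have been mapped to simplified, hypothetical tags such as `noun`, `sahen_noun`, `particle`, and `verb`. Tense detection is passed in as a flag rather than guessed. The first row is read as "a noun preceded by the particle ga, or a sentence-final particle".

```python
# Simplified, hypothetical POS tags: "noun", "sahen_noun", "particle", "verb", ...
Token = tuple[str, str]  # (surface form, POS tag)


def ending_suffix(tokens: list[Token], has_tense_word: bool) -> str:
    """Choose the phrase appended to a profile sentence, following the table's rows."""
    _, last_pos = tokens[-1]
    prev = tokens[-2] if len(tokens) >= 2 else None
    # Row 1: particle "ga" followed by a noun, or a sentence ending in a particle.
    if last_pos == "particle" or (last_pos == "noun" and prev == ("ga", "particle")):
        return "no senshu dayo"
    # Rows 2-3: sahen-noun; a tense word selects "shita" (did) over "suru" (does).
    if last_pos == "sahen_noun":
        return "shita senshu dayo" if has_tense_word else "suru senshu dayo"
    # Row 4: any other noun.
    if last_pos == "noun":
        return "dayo"
    # Row 5: everything else, e.g. a verb such as "motsu".
    return "senshu dayo"


def complete_sentence(tokens: list[Token], has_tense_word: bool = False) -> str:
    words = [surface for surface, _ in tokens]
    return " ".join(words + [ending_suffix(tokens, has_tense_word)])


print(complete_sentence([("pawā", "noun"), ("o", "particle"), ("motsu", "verb")]))
# pawā o motsu senshu dayo
```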

| Label | Tweet Content | Example |
|---|---|---|
| Emotional | Includes interjections | Oh, Ah |
| Emotional | Contains emotional words | Happy, Sad |
| Emotional | Shouting | It’s goooooooone! |
| Emotional | Refers to player’s play | Nice batting |
| Not_Emotional | Other | |

| Label | Training Data | Validation Data | Test Data | Total Number of Data |
|---|---|---|---|---|
| Emotional | 2753 | 344 | 344 | 3441 |
| Not_Emotional | 3341 | 418 | 418 | 4177 |

| Label | Training Data | Validation Data | Test Data | Total Number of Data |
|---|---|---|---|---|
| Positive | 2017 | 252 | 253 | 2522 |
| Negative | 735 | 92 | 92 | 919 |
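The Python snippet below is a quick consistency check on the two data tables. It recomputes each row total and the share of each split. Every row comes out at roughly 80/10/10. Positive plus Negative equals the Emotional total (3441), which is consistent with the sentiment classifier being trained only on tweets labeled Emotional. The snippet also makes the class imbalance visible: Positive tweets outnumber Negative ones by roughly 2.7 to 1.

```python
# Counts copied from the two tables above: (training, validation, test).
splits = {
    "Emotional": (2753, 344, 344),
    "Not_Emotional": (3341, 418, 418),
    "Positive": (2017, 252, 253),
    "Negative": (735, 92, 92),
}

totals = {label: sum(parts) for label, parts in splits.items()}
for label, (train, val, test) in splits.items():
    n = totals[label]
    print(f"{label:<13} total={n:>4}  train={train / n:.1%}  val={val / n:.1%}  test={test / n:.1%}")

# Positive + Negative should account for every Emotional tweet.
print(totals["Positive"] + totals["Negative"] == totals["Emotional"])  # True
```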

| Game Situation | Utterance Category | Utterance |
|---|---|---|
| Player’s appearance scene | Prediction | (This is a situation where we want to aim for a strikeout, because even a ground ball or fly ball could let a run score.) goro ya hurai demo tokuten ga haitte simau kara sanshin wo neratte ikitaine (in Japanese) |
| Player’s play scene | Evaluation | (His pitch came in high and hittable.) bōru ga takame ni amaku haitte simattane (in Japanese) |

| Game Situation | Utterance Category | Utterance |
|---|---|---|
| Player’s play scene | Emotion | (Why on earth was that called an out? If we got a call like that after using up our replay requests late in the game, there would be a riot.) nande auto hantei sitanyaro karini syuuban rikuesuto tukai kitte anna hantei saretara boudou okiruzo (in Japanese) |
| Player’s appearance scene | Confirmation | (Soto has stepped up to the plate, you see.) Soto sensyu ga daseki ni haitta ne (in Japanese) |
| Player’s appearance scene | Information | (He is a slugger who hits hard to all fields from a compact stance, holding the bat behind his right shoulder and rocking it up and down. He swings aggressively from the first pitch, and his natural power gives him a very high slugging percentage.) migi ushiro ni kamaeta batto wo jyouge ni yurasu konpakuto na fōmu kara koukaku ni kyouda wo utsu suraggā deari, syokyuu kara sekkyokuteki ni uti ni iku sutairu de, motimae no pawā wo ikashita tyouda ritu ga hijyou ni takai sensyu dayo (in Japanese) |
| Player’s play scene | Evaluation | (He ended up chasing a pitch outside the strike zone.) bo-ru dama ni te ga dete shimatta ne (in Japanese) |

| Items for Anger | Items for Enjoyment | Items for Sadness |
|---|---|---|
| 1. Anger | 1. Best feeling | 1. Dark mood |
| 2. Frustration | 2. Happy feeling | 2. Despair |
| 3. Getting angry | 3. Joy | 3. Sadness |
| 4. Irritation | 4. Delight | 4. Feeling heavy |

| Items |
|---|
| 1. Interested in the continuation and progress of the game. |
| 2. Looked forward to the game. |
| 3. Acquired knowledge of baseball. |
| 4. The time and effort spent on watching Yokohama DeNA BayStars’ game was worth it [37]. |
| 5. Considering the time and effort spent on today’s game, what you can acquire is significant [37]. |
| 6. Overall, the value of the Yokohama DeNA BayStars’ game is very high [37]. |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Mamada, K.; Miyamoto, T.; Katagami, D. Proposal and Evaluation of a Robot to Improve the Cognitive Abilities of Novice Baseball Spectators Using a Method for Selecting Utterances Based on the Game Situation. Appl. Sci. 2023, 13, 12723. https://doi.org/10.3390/app132312723


Chicago/Turabian Style: Mamada, Keita, Tomoki Miyamoto, and Daisuke Katagami. 2023. "Proposal and Evaluation of a Robot to Improve the Cognitive Abilities of Novice Baseball Spectators Using a Method for Selecting Utterances Based on the Game Situation" Applied Sciences 13, no. 23: 12723. https://doi.org/10.3390/app132312723