Robot Operating System 2 (ROS2)-Based Frameworks for Increasing Robot Autonomy: A Survey

Abstract

Featured Application

1. Introduction

2. Related Work

2.1. ROS: Origin, Potential, and Limits

2.2. ROS2: Origin and Performance Analysis

2.3. ROS2: Industrial Robotics Applications

2.4. ROS2: Other Applications

3. General Architecture for Enhancing Cobot Autonomy

3.1. Motivation

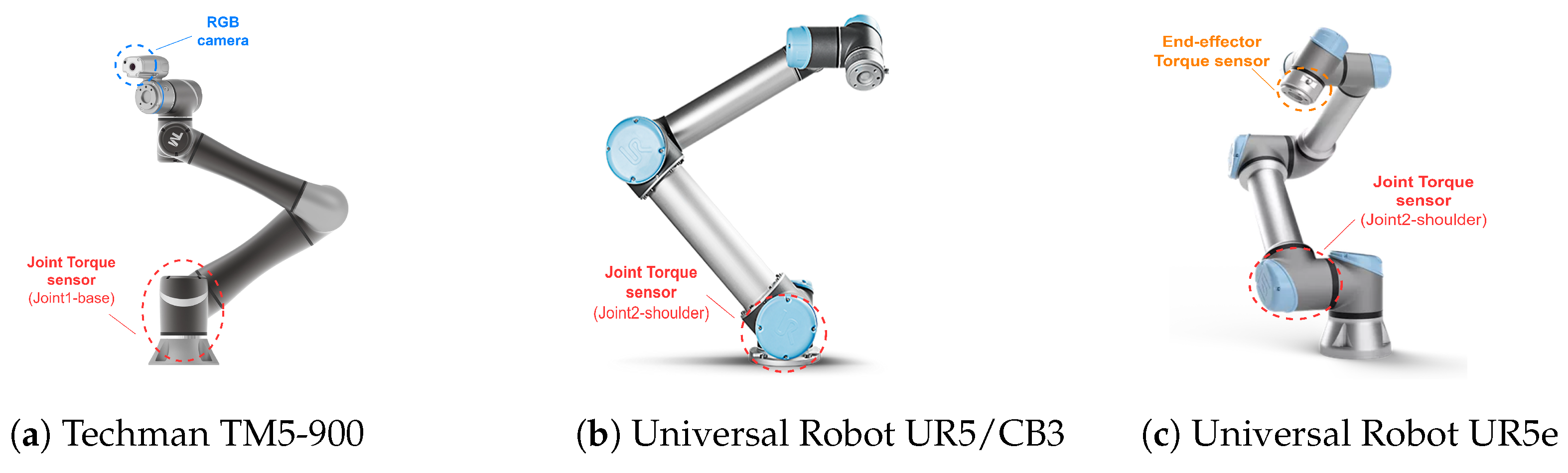

- Torque and force sensors: These sensors enable collision detection and the subsequent emergency stop of the robot. Typically, the GUI provided by commercial cobot manufacturers lets users set safety stop criteria as maximum values of parameters such as TCP (tool center point) force and joint torques. Together with speed limits, this approach does not anticipate or avoid collisions; it only makes them harmless. Handling collisions differently (e.g., with trajectory re-planning) requires other sensor types, such as vision systems or distance sensors.

- RGB camera: Most collaborative robots are also available in models equipped with an RGB camera mounted on the end of the manipulator (i.e., an eye-in-hand arrangement). This vision system makes it possible to teach the robotic arm to recognize and grasp specific, identical objects lying on the same plane. Since depth information is not available, the robot must be manually reprogrammed whenever the objects to be grasped or the plane on which they lie change. Again, different types of sensors (e.g., depth cameras) could overcome these limitations.
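The force/torque-based safety stop described in the first item can be illustrated with a minimal sketch. The `SafetyLimits` class, the `should_emergency_stop` function, and the numeric limits used in the usage note are hypothetical names and values chosen for illustration; they are not taken from any manufacturer's interface.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class SafetyLimits:
    """Hypothetical safety stop criteria, as a user might set them in a cobot GUI."""
    max_tcp_force_n: float                # maximum admissible TCP force [N]
    max_joint_torque_nm: Sequence[float]  # one torque limit per joint [N*m]


def should_emergency_stop(tcp_force_n: float,
                          joint_torques_nm: Sequence[float],
                          limits: SafetyLimits) -> bool:
    """Return True when any measured value exceeds its configured maximum."""
    if len(joint_torques_nm) != len(limits.max_joint_torque_nm):
        raise ValueError("one torque reading per joint limit is required")
    # A collision shows up as an excessive TCP force ...
    if abs(tcp_force_n) > limits.max_tcp_force_n:
        return True
    # ... or as an excessive torque on at least one joint.
    return any(abs(tau) > lim
               for tau, lim in zip(joint_torques_nm, limits.max_joint_torque_nm))
```

For instance, with an assumed limit of 100 N on the TCP force and 50 N·m on each of six joints, a reading of 150 N at the TCP would trigger the stop. The check is purely reactive: it fires only after contact has occurred, which is why anticipating collisions requires the additional sensors mentioned above.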

3.2. Design and Requirements

- Perception: The overall architecture needs sensors (exteroceptive or proprioceptive) to gather the information required to become aware of the state of both the cobot and the surrounding environment. This task is responsible for acquiring and pre-processing these data. The robot state is usually available without additional sensors in all commercial cobots, which provide access to variables such as joint positions, speeds, and torques, or the pose of the TCP. Environment perception, by contrast, is often limited and can be enhanced with vision, distance, and/or proximity sensors, such as depth cameras, LIDAR, and sonar. Moreover, this task can rely either on a single sensor or on several, even heterogeneous, sensors whose data are integrated by sensor fusion algorithms to obtain a better single model of the surrounding environment.

- Recognition: This task goes beyond mere perception, involving higher-level processing and interpretation of the raw data acquired by the sensors. Examples include scene understanding, environment reconstruction, and real-time object recognition/classification.

- Behavior planning: This task is responsible for identifying the type of behavior and/or the sequence of actions to be performed. It is potentially a highly advanced task, but it can be approached at different levels depending on the desired level of autonomy. At a lower level, it amounts to a fairly standard sequence of actions required to perform a specific, predefined task, with minimal variations to handle unexpected situations such as obstacles. At the highest level, it allows the robot to autonomously understand the task to be executed based on its surroundings.

- Trajectory planning: Another essential part of the robotic system is the path-planning algorithm that, based on the desired behavior of the cobot, generates a 3D path for the entire kinematic chain to move the end-effector from the initial pose to the desired final pose. The planned trajectory must be feasible, satisfying both the physical constraints of the robot and the constraints imposed by static obstacles in the environment (i.e., obstacles that are fixed and already present at the time of planning).

- Trajectory re-planning: This is a further fundamental planning module for increasing the robot’s autonomy in the presence of dynamic obstacles (i.e., moving obstacles that can appear within the scene at any time). It has two subtasks: detecting collisions with dynamic obstacles and avoiding them. Specifically, the first performs continuous collision checking between the cobot and the surrounding environment along the path, while the second re-plans a new path to avoid the detected collisions. The latter can be handled either by a global planner, which plans a new path from the current state to the goal taking the updated environment into account, or, more efficiently, by a local planner, which updates the plan only around the potential detected collision.

- Motion control: This is the low-level task responsible for executing, on the real cobot, the trajectory planned by the high-level tasks, by controlling the robot’s actuators so that they follow it. Alternatively, it can execute the plan in simulation.

- Manipulation: This refers to the ways robots physically interact with and modify the environment and the objects around them through a wide range of actions, such as grasping, packaging, assembling, or cutting, depending on the end-effector attached to the robotic arm. All these actions require appropriate control of the robot’s end-effector. This task is difficult to generalize because it depends heavily on the specific manipulation action required and on the type of end-effector used to accomplish it. As an example, the same grasping action for a pick-and-place task can be performed either with suction cups or with a two-finger gripper. This choice, which should be based on the type of object to be grasped, determines a different tool control interface (e.g., suction/release commands instead of open/close) and a different grasp planning (e.g., suction cups require as a contact point a flat facet near the object’s center of mass to maintain stability, while a two-finger gripper requires two parallel facets at an appropriate distance).
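The two subtasks of trajectory re-planning listed above (continuous collision checking along the path and local repair around the detected collision) can be sketched as follows. This is a toy 2D illustration under strong assumptions, not the method of any specific planner: the path is a list of waypoints, obstacles are circles, only waypoints (not the segments between them) are checked, and the hypothetical `repair_locally` function simply pushes colliding waypoints out of the obstacle instead of running a real local planner.

```python
import math
from typing import List, Optional, Tuple

Point = Tuple[float, float]
Circle = Tuple[float, float, float]  # (center_x, center_y, radius)


def in_collision(p: Point, obstacles: List[Circle]) -> bool:
    """True if the waypoint lies strictly inside any circular obstacle."""
    return any(math.hypot(p[0] - cx, p[1] - cy) < r for cx, cy, r in obstacles)


def first_collision(path: List[Point], current: int,
                    obstacles: List[Circle]) -> Optional[int]:
    """Detection subtask: check only the part of the path not yet executed."""
    for i in range(current, len(path)):
        if in_collision(path[i], obstacles):
            return i
    return None


def repair_locally(path: List[Point], current: int,
                   obstacles: List[Circle], margin: float = 0.05) -> List[Point]:
    """Avoidance subtask (toy local repair): move only the colliding waypoints.

    Each colliding waypoint is pushed radially to the obstacle boundary plus
    a margin. With overlapping or very close obstacles a waypoint may still
    collide afterwards, so the result should be re-checked.
    """
    repaired = list(path)
    for i in range(current, len(repaired)):
        x, y = repaired[i]
        for cx, cy, r in obstacles:
            d = math.hypot(x - cx, y - cy)
            if d < r:
                # Direction away from the obstacle center (arbitrary if d == 0).
                ux, uy = ((x - cx) / d, (y - cy) / d) if d > 0 else (0.0, 1.0)
                x, y = cx + ux * (r + margin), cy + uy * (r + margin)
        repaired[i] = (x, y)
    return repaired
```

A global re-planner would instead discard the remaining path and plan again from the current state to the goal. The local variant leaves every collision-free waypoint unchanged, which is what makes it cheaper when an obstacle affects only a short portion of the path.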
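The point made in the manipulation item, that the same grasping action maps to different tool commands depending on the end-effector, can be illustrated with a small sketch. All class and function names here are hypothetical, and the `sent` list merely records command strings in place of real hardware I/O.

```python
from abc import ABC, abstractmethod
from typing import List


class EndEffector(ABC):
    """Hypothetical common interface hiding the tool-specific commands."""

    def __init__(self) -> None:
        self.sent: List[str] = []  # stand-in for commands sent to the tool

    @abstractmethod
    def grasp(self) -> None:
        """Take hold of the object."""

    @abstractmethod
    def release(self) -> None:
        """Let go of the object."""


class SuctionCup(EndEffector):
    def grasp(self) -> None:
        self.sent.append("suction")

    def release(self) -> None:
        self.sent.append("release")


class TwoFingerGripper(EndEffector):
    def grasp(self) -> None:
        self.sent.append("close")

    def release(self) -> None:
        self.sent.append("open")


def pick_and_place(tool: EndEffector) -> List[str]:
    """Same high-level action sequence regardless of the attached tool."""
    tool.grasp()
    # (the motion from the pick pose to the place pose would happen here)
    tool.release()
    return tool.sent
```

Only the tool control interface is abstracted in this sketch. Grasp planning (a flat facet near the center of mass for suction cups, two parallel facets for a two-finger gripper) would still have to be handled separately for each tool.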

3.3. Industrial Application Overview

4. ROS2-Based Architecture for Enhancing Cobot Autonomy: Current Capabilities and Limitations

4.1. Perception

4.2. Recognition

- Cobot state;

- Environment reconstruction;

- Object recognition/classification.

4.3. Trajectory Planning

4.4. Trajectory Re-Planning

4.5. Motion Control

5. Example of Using the Framework to Increase Cobot Autonomy: A Proof of Concept

5.1. Hardware and Software Setup

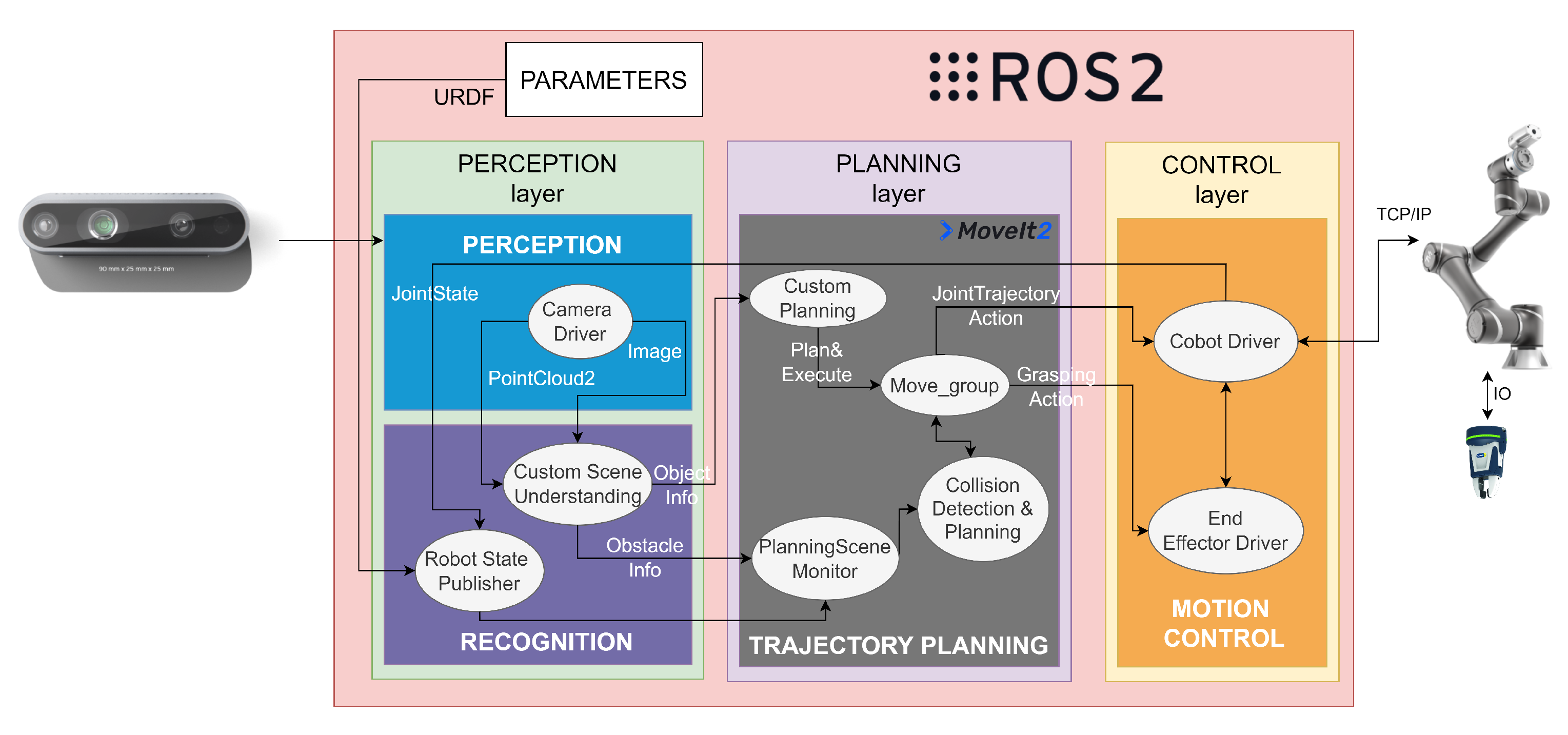

5.2. Software Architecture

5.3. Perception Layer Implementation

5.4. Planning and Control Layer Implementation

6. Conclusions and Future Works

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Bonci, A.; Gaudeni, F.; Giannini, M.C.; Longhi, S. Robot Operating System 2 (ROS2)-Based Frameworks for Increasing Robot Autonomy: A Survey. Appl. Sci. 2023, 13, 12796. https://doi.org/10.3390/app132312796
