An In-Depth Analysis of Domain Adaptation in Computer and Robotic Vision

Abstract

1. Introduction

1.1. Background and Scope

1.2. Objectives and Research Questions

- RQ1: What are the primary causes of domain shift in robotic and computer vision, and what problems does it create?

- RQ2: How do traditional domain adaptation techniques and deep learning-based approaches differ from one another, and what distinctive benefits does each category provide?

- RQ3: What conclusions can be drawn from a cross-domain analysis of domain adaptation methodologies applied to a variety of source and target domains?

- RQ4: How do the performance and generalization capabilities of computer and robotic vision systems that incorporate domain adaptation techniques compare with those of baseline models?

- RQ5: What challenges are encountered, and what insights are gained, during domain adaptation for robotic and computer vision?

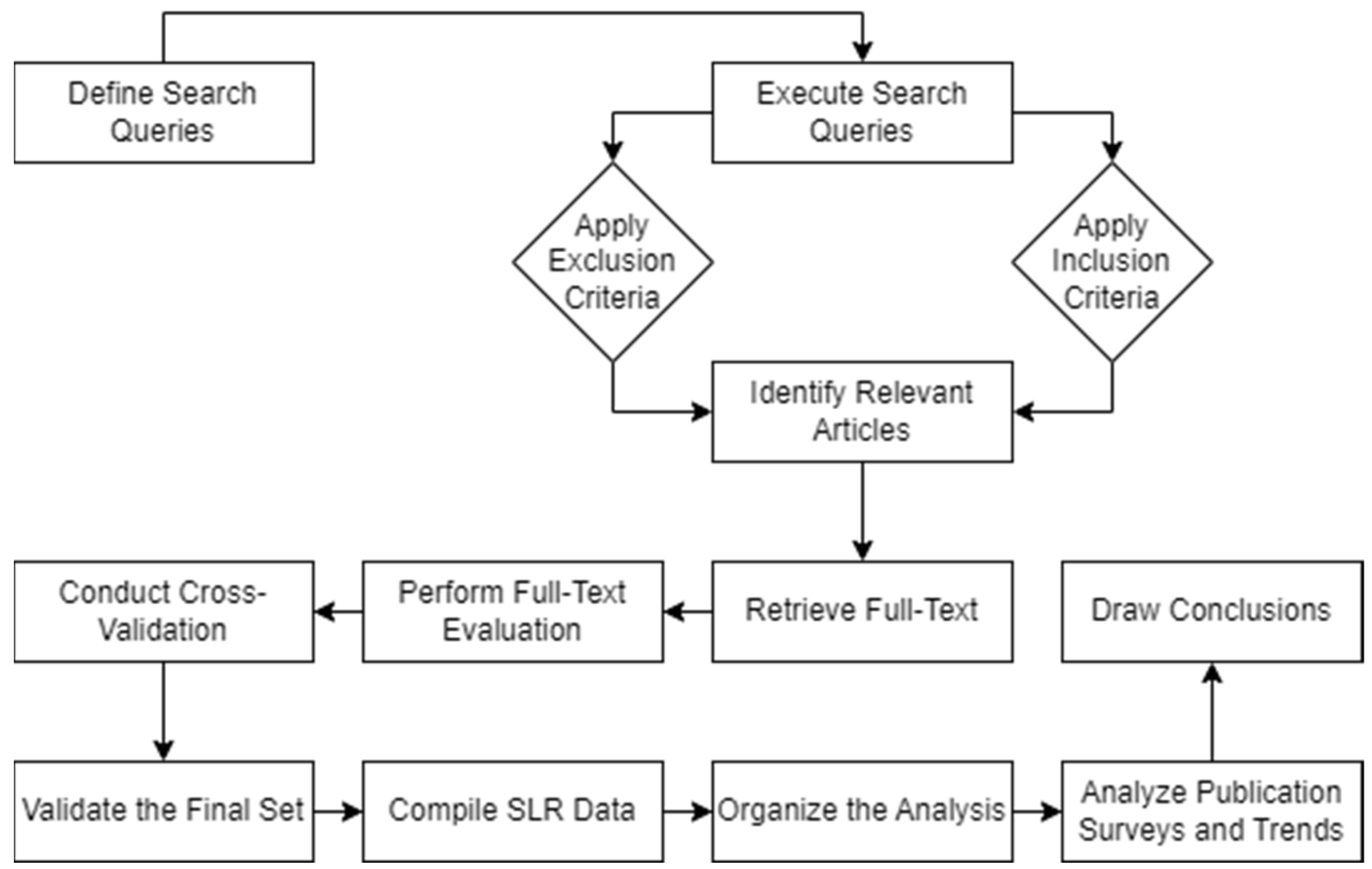

1.3. Methodology and Articles Selection

1.3.1. Data Collection and Preprocessing

Search queries:

- “Domain adaptation” AND “computer vision”;

- “Domain adaptation” AND “robotic vision”;

- “Domain adaptation” AND “visual domain transfer”;

- “Domain shift” AND “computer vision”;

- “Domain shift” AND “robotic vision”.

Inclusion criteria:

- Articles that explicitly discuss domain adaptation strategies in relation to computer and robotic vision;

- English-language articles, to ensure accessibility and comprehension;

- Publications that are accessible in full text as preprints, journal articles, or conference papers.

Exclusion criteria:

- Articles unrelated to visual domain transfer or to domain adaptation in computer or robotic vision;

- Articles written in languages other than English;

- Articles with insufficient information or without full-text access.
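The search and screening procedure above can be sketched as a small filter over bibliographic records. This is a minimal illustration under stated assumptions, not the authors' actual tooling: the `Record` fields (`title`, `abstract`, `language`, `full_text_available`) and the rule "both terms of an AND query appear in the title or abstract" are hypothetical choices made for the example.

```python
from dataclasses import dataclass

# The five search strings listed above, as (term A, term B) pairs joined by AND.
QUERIES = [
    ("domain adaptation", "computer vision"),
    ("domain adaptation", "robotic vision"),
    ("domain adaptation", "visual domain transfer"),
    ("domain shift", "computer vision"),
    ("domain shift", "robotic vision"),
]


@dataclass
class Record:
    # Hypothetical bibliographic record; these fields are assumptions.
    title: str
    abstract: str
    language: str
    full_text_available: bool


def matches_any_query(record: Record) -> bool:
    """True if title + abstract contain both terms of at least one AND query."""
    text = f"{record.title} {record.abstract}".lower()
    return any(a in text and b in text for a, b in QUERIES)


def passes_screening(record: Record) -> bool:
    """Apply the topic, English-language, and full-text criteria."""
    return (
        matches_any_query(record)
        and record.language.lower() == "english"
        and record.full_text_available
    )


records = [
    Record("Domain adaptation for robotic vision", "Sim-to-real transfer", "English", True),
    Record("Domain shift in computer vision", "A study of shift", "German", True),
    Record("Speech recognition survey", "Audio models", "English", True),
]
selected = [r.title for r in records if passes_screening(r)]
print(selected)  # ['Domain adaptation for robotic vision']
```

Keyword matching is only a proxy for the criterion that an article "explicitly discusses" domain adaptation; in practice that judgment requires reading the article.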

1.3.2. Evaluation Metrics

1.3.3. Geographical Attribution of Research Articles

- Primary author affiliation: when the primary author of a research article had affiliations in multiple countries or regions, we attributed the article to the country or region of that author’s main institutional affiliation;

- Collaborative research: for articles involving collaboration among multiple countries or regions, we counted the article once for each collaborating country or region, so that collaborative efforts are appropriately acknowledged in the regional analysis;

- Inclusive approach: we recognized the contributions of all regions involved in collaborative research, in line with our goal of providing a comprehensive overview of the geographical distribution of domain adaptation research;

- Limitations: although we made every effort to attribute geographical affiliations accurately, certain complexities, such as dual affiliations or author mobility, may not have been fully addressed. Author information may also change over time, so our analysis represents a snapshot based on the data available.
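The attribution rules above can be sketched as a short counting routine. This is a hedged illustration, not the authors' procedure: it assumes each author is represented by a list of affiliation countries whose first entry is the main affiliation, and it extends the main-affiliation rule (stated in the text only for the primary author) to every author. The function names are hypothetical.

```python
from collections import Counter


def article_countries(authors):
    """Return the set of countries credited for one article.

    `authors` is a list of per-author affiliation lists (country strings).
    Assumption: the first listed affiliation is the author's main one, so an
    author with several affiliations contributes only that country.
    """
    return {affiliations[0] for affiliations in authors if affiliations}


def count_by_country(articles):
    """Count each article once per distinct collaborating country."""
    counts = Counter()
    for authors in articles:
        # A set, so a country appearing for several authors is counted once.
        counts.update(article_countries(authors))
    return counts


articles = [
    [["China", "Singapore"]],               # one author, dual affiliation: China only
    [["Germany"], ["Italy"], ["Germany"]],  # collaboration: Germany and Italy once each
    [["China"], ["USA"]],                   # collaboration: China and USA once each
]
counts = count_by_country(articles)
print(counts["China"], counts["Germany"], counts["Italy"], counts["USA"])  # 2 1 1 1
```

Because collaborative articles are credited to every participating country, the per-country totals can sum to more than the number of articles.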

2. Domain Adaptation Techniques

2.1. Overview of Domain Adaptation Techniques

2.1.1. Scholarly Landscape Visualization

Domain Adaptation for Image Segmentation

Domain Adaptation for Object Identification

Domain Adaptation for Object Classification

2.1.2. Traditional Domain Adaptation Methods

2.1.3. Deep Learning-Based Methods

2.1.4. Hybrid Methods

2.2. Evaluation of Domain Adaptation Techniques

| Paper | Contribution | Advantages |
|---|---|---|
| [161] | Introduces a new multi-task learning framework for domain adaptation | Can improve performance on multiple tasks |
| [162] | Presents a model that builds on adversarial conditional image synthesis | Can improve performance by generating realistic target-domain images |
| [167] | Presents a model that builds on adversarial conditional variational autoencoders | Can improve performance by using adversarial conditional variational autoencoders to learn representations that are invariant to domain shift |
| [163] | Introduces a new adversarial training framework for domain adaptation that uses an ensemble of discriminators | Can improve performance by combining multiple discriminators |
| [164] | Presents a model that builds on adversarial training and meta-learning | Can improve performance by using adversarial training and meta-learning to learn a model that can generalize to new domains |
| [165] | Introduces a new method for domain adaptation that combines adversarial training and self-supervised learning | Can improve performance by learning features that are invariant to domain shift and using self-supervised learning to learn representations that are transferable to new domains |
| [166] | Introduces a new conditional generative adversarial network framework for domain adaptation | Can improve performance by using conditional generative models |
| [168] | Presents a model that builds on conditional generative adversarial networks | Can improve performance by using conditional generative adversarial networks to generate realistic target-domain images |
| [169] | Presents a model that builds on conditional Wasserstein generative adversarial networks | Can improve performance by generating realistic target-domain images |
| [170] | Presents a model that builds on domain-invariant feature extraction | Can improve performance by using domain-invariant feature extraction to learn features that are invariant to domain shift |
| [171] | Introduces a new method for domain adaptation that combines ensemble learning and self-supervised learning | Can improve performance by combining multiple models and using self-supervised learning to learn features that are transferable to new domains |
| [172] | Presents a model that builds on ensemble learning and transfer learning | Can improve performance by using ensemble learning and transfer learning to learn a model that can generalize to new domains |
| [173] | Presents a model that builds on few-shot learning and meta-learning | Can improve performance by using few-shot learning to learn a model that can generalize to new domains and by using meta-learning to adapt to new domains more quickly |
| [174] | Presents a model that builds on few-shot learning and meta-learning | Can improve performance by using few-shot learning and meta-learning to learn a model that can generalize to new domains |
| [175] | Presents a model that builds on generative adversarial networks for semi-supervised learning | Can improve performance by learning from both labeled and unlabeled data |
| [176] | Introduces a new method for learning invariant features for domain adaptation | Can improve performance by learning representations that are invariant to domain shift |
| [177] | Introduces a new method for domain adaptation that combines invariant representations and self-supervised learning | Can improve performance by learning representations that are invariant to domain shift and using self-supervised learning to learn features that are transferable to new domains |
| [178] | Presents a model that builds on meta-learning for transferable features | Can improve performance by learning features that are transferable to new domains |
| [179] | Presents a model that builds on multi-task learning and attention | Can improve performance by using multi-task learning and attention to learn representations that are invariant to domain shift |
| [180] | Presents a model that builds on multi-task learning for few-shot image classification | Can improve performance by learning multiple tasks with few examples |
| [181] | Presents a model that builds on patch-level self-supervised learning | Can improve performance by using patch-level self-supervised learning to learn features that are invariant to domain shift |
| [182] | Presents a model that builds on self-supervised contrastive learning | Can improve performance by learning representations that are invariant to domain shift |
| [183] | Presents a model that builds on self-supervised contrastive learning | Can improve performance by learning representations that are invariant to domain shift |
| [184] | Presents a model that builds on self-supervised learning and synthetic data | Can improve performance by using self-supervised learning to learn features that are invariant to domain shift and by generating synthetic data that resemble the target domain |
| [185] | Presents a model that builds on synthetic data and domain-invariant feature aggregation | Can improve performance by generating synthetic data that resemble the target domain and by aggregating features from multiple domains |
| [186] | Presents a model that builds on synthetic data and self-supervised learning | Can improve performance by using synthetic data and self-supervised learning to learn features that are invariant to domain shift |
| [187] | Introduces a new Wasserstein adversarial training framework for domain adaptation | Can improve performance by using the Wasserstein distance |
| [188] | Presents a model that builds on adversarial learning and meta-learning | Can improve performance by using adversarial learning and meta-learning to learn a model that can generalize to new domains |
| [189] | Presents a model that builds on adversarial learning and meta-learning | Can improve performance by using adversarial learning and meta-learning to learn a model that can generalize to new domains |
| [190] | Presents a model that builds on adversarial learning and self-supervised learning | Can improve performance by using adversarial learning and self-supervised learning to learn a model that can generalize to new domains |
| [191] | Presents a model that builds on adversarial learning and transfer learning | Can improve performance by using adversarial learning and transfer learning to learn a model that can generalize to new domains |
| [192] | Presents a model that builds on adversarial multi-agent reinforcement learning | Can improve performance by using adversarial multi-agent reinforcement learning to learn a model that can generalize to new domains |
| [193] | Presents a model that builds on adversarial training and distillation | Can improve performance by using adversarial training and distillation to learn a model that can generalize to new domains |
| [194] | Presents a model that builds on adversarial training and self-supervised learning | Can improve performance by using adversarial training and self-supervised learning to learn a model that can generalize to new domains |
| [195] | Presents a model that builds on attention and normalization | Can improve performance by using attention and normalization to learn representations that are invariant to domain shift |
| [196] | Presents a model that builds on conditional generative adversarial networks and CycleGAN | Can improve performance by using conditional generative adversarial networks and CycleGAN to generate realistic target-domain images |
| [197] | Presents a model that builds on ensemble learning and domain adaptation | Can improve performance by using ensemble learning and domain adaptation to learn a model that can generalize to new domains |
| [198] | Presents a model that builds on few-shot learning and data augmentation | Can improve performance by using few-shot learning and data augmentation to learn a model that can generalize to new domains |
| [199] | Presents a model that builds on few-shot learning and interpolation | Can improve performance by using few-shot learning and interpolation to learn a model that can generalize to new domains |
| [200] | Presents a model that builds on few-shot learning and self-supervised learning | Can improve performance by using few-shot learning and self-supervised learning to learn a model that can generalize to new domains |
| [201] | Presents a model that builds on generative adversarial networks and self-supervised learning | Can improve performance by using generative adversarial networks and self-supervised learning to learn features that are invariant to domain shift |
| [202] | Presents a model that builds on graph neural networks | Can improve performance by using graph neural networks to learn representations that are invariant to domain shift |
| [203] | Presents a model that builds on invariant feature extraction and distillation | Can improve performance by using invariant feature extraction and distillation to learn a model that can generalize to new domains |
| [204] | Presents a model that builds on invariant feature extraction and interpolation | Can improve performance by using invariant feature extraction and interpolation to learn features that are invariant to domain shift |
| [205] | Presents a model that builds on meta-learning and importance reweighting | Can improve performance by using meta-learning and importance reweighting to learn a model that can generalize to new domains |
| [206] | Presents a model that builds on multi-task learning and cross-domain augmentation | Can improve performance by using multi-task learning and cross-domain augmentation to learn a model that can generalize to new domains |
| [207] | Presents a model that builds on multi-task learning and domain-invariant feature extraction | Can improve performance by using multi-task learning and domain-invariant feature extraction to learn features that are invariant to domain shift |
| [208] | Presents a model that builds on self-attention and conditional generative adversarial networks | Can improve performance by using self-attention and conditional generative adversarial networks to learn representations that are invariant to domain shift |
| [209] | Presents a model that builds on self-supervised contrastive learning | Can improve performance by using self-supervised contrastive learning to learn features that are invariant to domain shift |
| [210] | Presents a model that builds on self-supervised contrastive learning | Can improve performance by using self-supervised contrastive learning to learn representations that are invariant to domain shift |
| [211] | Presents a model that builds on self-supervised learning and transfer learning | Can improve performance by using self-supervised learning and transfer learning to learn a model that can generalize to new domains |
| [212] | Presents a model that builds on synthetic data and transfer learning | Can improve performance by using synthetic data and transfer learning to learn features that are invariant to domain shift |
| [213] | Presents a model that builds on temporal ensembling | Can improve performance by using temporal ensembling to learn a model that can generalize to new domains |
| [214] | Presents a model that builds on Wasserstein autoencoders | Can improve performance by using Wasserstein autoencoders to learn representations that are invariant to domain shift |
| [215] | Presents a model that builds on data augmentation and self-supervised learning | Can improve performance by using data augmentation and self-supervised learning to learn features that are invariant to domain shift |
| [216] | Presents a model that builds on adversarial learning and contrastive learning | Can improve performance by using adversarial learning and contrastive learning to learn features that are invariant to domain shift |
| [217] | Presents a model that builds on invariant feature extraction and distillation | Can improve performance by using invariant feature extraction and distillation to learn features that are invariant to domain shift |
| [218] | Presents a model that builds on semi-supervised learning and transfer learning | Can improve performance by using semi-supervised learning and transfer learning to learn a model that can generalize to new domains |
| [219] | Presents a model that builds on few-shot learning and invariant feature extraction | Can improve performance by using few-shot learning and invariant feature extraction to learn features that are invariant to domain shift |
| [220] | Presents a model that builds on adversarial learning and meta-learning | Can improve performance by using adversarial learning and meta-learning to learn a model that can generalize to new domains |
| [221] | Presents a model that builds on self-supervised learning and generative adversarial networks | Can improve performance by using self-supervised learning and generative adversarial networks to learn features that are invariant to domain shift |
| [222] | Presents a model that builds on adversarial learning and transfer learning | Can improve performance by using adversarial learning and transfer learning to learn a model that can generalize to new domains |
| [223] | Presents a model that builds on ensemble learning and meta-learning | Can improve performance by using ensemble learning and meta-learning to learn a model that can generalize to new domains |
| [224] | Presents a model that builds on adversarial learning and invariant representations | Can improve performance by using adversarial learning and invariant representations to learn features that are invariant to domain shift |
| [225] | Presents a model that builds on attention and self-supervised learning | Can improve performance by using attention and self-supervised learning to learn features that are invariant to domain shift |
| [226] | Presents a model that builds on conditional domain adversarial networks and self-supervised learning | Can improve performance by using conditional domain adversarial networks and self-supervised learning to learn features that are invariant to domain shift |
| [227] | Presents a model that builds on meta-learning and transfer learning | Can improve performance by using meta-learning and transfer learning to learn a model that can generalize to new domains |
| [228] | Presents a model that builds on few-shot learning and adversarial learning | Can improve performance by using few-shot learning and adversarial learning to learn a model that can generalize to new domains |
| [229] | Presents a model that builds on synthetic data and meta-learning | Can improve performance by using synthetic data and meta-learning to learn a model that can generalize to new domains |
| [230] | Presents a model that builds on adversarial learning and multi-task learning | Can improve performance by using adversarial learning and multi-task learning to learn a model that can generalize to new domains |
| [231] | Presents a model that builds on conditional generative adversarial networks and adversarial learning | Can improve performance by using conditional generative adversarial networks and adversarial learning to learn features that are invariant to domain shift |
| [232] | Presents a model that builds on ensemble learning and self-supervised learning | Can improve performance by using ensemble learning and self-supervised learning to learn a model that can generalize to new domains |
| [233] | Introduces a new method for domain adaptation that learns multi-domain invariant representations | Can improve performance by learning representations that are invariant to multiple domains |
| [234] | Presents a model that builds on self-supervised learning and conditional adversarial networks | Can improve performance by using self-supervised learning and conditional adversarial networks to learn features that are invariant to domain shift |
| [235] | Presents a model that builds on adversarial learning and few-shot learning | Can improve performance by using adversarial learning and few-shot learning to learn a model that can generalize to new domains |
| [236] | Presents a model that builds on domain-invariant feature aggregation and self-supervised learning | Can improve performance by using domain-invariant feature aggregation and self-supervised learning to learn features that are invariant to domain shift |
| [237] | Presents a model that builds on generative adversarial networks and meta-learning | Can improve performance by using generative adversarial networks and meta-learning to learn features that are invariant to domain shift |
| [238] | Presents a model that builds on adversarial learning and synthetic data | Can improve performance by using adversarial learning and synthetic data to learn features that are invariant to domain shift |
| [239] | Presents a model that builds on multi-task learning and meta-learning | Can improve performance by using multi-task learning and meta-learning to learn a model that can generalize to new domains |
| [240] | Presents a model that builds on ensemble learning and transfer learning | Can improve performance by using ensemble learning and transfer learning to learn a model that can generalize to new domains |
| [241] | Presents a model that builds on adversarial learning and self-supervised learning | Can improve performance by using adversarial learning and self-supervised learning to learn a model that can generalize to new domains |
| [242] | Uses conditional generative adversarial networks for domain adaptation | Can generate realistic target-domain images |
| [243] | Combines distillation and semi-supervised learning for domain adaptation | Can improve performance by combining both techniques |
| [244] | Uses graph neural networks for domain adaptation | Can better handle complex relationships between features |
| [245] | Combines meta-learning and adversarial training for domain adaptation | Can improve performance by learning to adapt to new domains |
| [246] | Combines multi-task learning and distillation for domain adaptation | Can improve performance by combining both techniques |
| [247] | Uses reinforcement learning for domain adaptation | Can improve performance by learning to adapt to new domains |
| [248] | Uses self-supervised learning for domain adaptation | Can improve performance by using unlabeled data from the target domain |
| [249] | Introduces a method for adversarial learning with the Wasserstein distance for domain adaptation | Can improve performance by using a more robust distance metric |
| [250] | Introduces a co-training framework for domain adaptation | Can improve performance by using multiple learners |
| [251] | Introduces a method for learning to correct feature distributions for domain adaptation | Can improve performance by explicitly modeling the domain shift |
| [252] | Combines adversarial training and data augmentation for domain adaptation | Can improve performance by combining both techniques |
| [253] | Introduces a new adversarial training framework for domain adaptation | Can improve performance by using virtual adversarial examples |
| [254] | Introduces a Bayesian deep learning framework for domain adaptation | Can better handle uncertainty in the data |
| [255] | Introduces a new conditional Wasserstein GAN framework for domain adaptation | Can improve performance by using conditional generative models |
| [256] | Presents a model that builds on domain-invariant feature aggregation | Can improve performance by aggregating features from multiple domains |
| [257] | Presents a model that builds on few-shot learning | Can improve performance by using few-shot learning to learn a model that can generalize to new domains |
| [258] | Introduces a generative adversarial network for domain adaptation in person re-identification | Can generate realistic target-domain images |
| [259] | Introduces a graph neural network for domain adaptation | Can better handle complex relationships between data points |
| [260] | Introduces a hierarchical self-attention mechanism for domain adaptation | Can better handle complex relationships between features |
| [261] | Introduces a new method for learning invariant representations for domain adaptation | Can improve performance by learning representations that are invariant to domain shift |
| [262] | Introduces a reinforcement learning framework for domain adaptation | Can learn to adapt to new domains in an online setting |
| [263] | Presents a model that builds on self-supervised learning | Can improve performance by using self-supervised learning to learn features that are invariant to domain shift |
| [264] | Presents a model that builds on synthetic data | Can improve performance by generating synthetic data that resemble the target domain |
| [265] | Introduces a variational autoencoder for domain adaptation | Can generate realistic target-domain images |
| [266] | Introduces a Wasserstein distance-based method for domain adaptation | Can better handle data with large domain shifts |
| [267] | Introduces an ensemble domain adaptation framework | Can improve performance by combining multiple models |
| [268] | Introduces a joint distribution adaptation framework for domain adaptation | Can improve performance by jointly adapting the source and target distributions |
| [269] | Introduces a meta-learning framework for domain adaptation | Can improve performance by learning to adapt to new domains |
| [270] | Introduces a transfer learning framework for domain adaptation | Can improve performance by using a pre-trained model |
| [271] | Introduces a deep generative model for domain adaptation | Can generate realistic target-domain images |
| [272] | Introduces an importance reweighting mechanism for domain adaptation | Can better handle imbalanced datasets |
| [273] | Introduces a domain adaptation method based on meta-learning | Can adapt to new domains quickly |
| [274] | Introduces a multi-task learning framework for domain adaptation | Can improve performance on multiple tasks |
| [275] | Introduces a gradient reversal technique for domain adaptation | Can better handle data with large domain shifts |
| [276] | Introduces a domain adaptation method based on self-supervised learning | Can be used with unlabeled data from the target domain |
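Several entries above ([169], [187], [214], [249], [255], [266]) rely on the Wasserstein distance. To make the quantity concrete, the sketch below computes the empirical Wasserstein-1 distance between two one-dimensional samples of equal size, where it has a closed form: the mean absolute difference between the sorted samples. This is an illustration under those assumptions only; the cited methods work with high-dimensional feature distributions, where the distance is typically estimated with a learned critic network rather than by sorting. The function name `wasserstein_1d` and the toy data are hypothetical.

```python
def wasserstein_1d(source, target):
    """Empirical Wasserstein-1 distance between two equally sized 1-D samples.

    For equal sample sizes, the optimal transport plan matches the i-th
    smallest source value with the i-th smallest target value, so W1 is the
    mean absolute difference of the sorted samples.
    """
    if len(source) != len(target) or not source:
        raise ValueError("expects two non-empty samples of equal size")
    xs, ys = sorted(source), sorted(target)
    return sum(abs(x - y) for x, y in zip(xs, ys)) / len(xs)


# Toy 1-D "features": the target sample is the source sample shifted by +2.0.
source = [0.0, 1.0, 2.0, 3.0]
target = [2.0, 3.0, 4.0, 5.0]
print(wasserstein_1d(source, target))  # 2.0
```

Unlike a divergence that saturates when two distributions do not overlap, the Wasserstein distance here grows smoothly with the size of the shift, which is one reason it is used as an alignment objective.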

| Paper | Year | Scope | Methods | Contributions |
|---|---|---|---|---|
| [278] | 2019 | A comprehensive survey of transfer learning, domain adaptation, and multi-source learning methods | Transfer learning, domain adaptation, and multi-source learning methods | |
| [279] | 2020 | A comprehensive survey of domain adaptation methods for computer vision | Instance-based, feature-based, decision-based, generative, and meta-learning methods | |
| [280] | 2021 | A survey of recent advances in domain adaptation for visual recognition | Instance-based, feature-based, decision-based, generative, and meta-learning methods | |
| [299] | 2022 | A survey of recent advances in domain adaptation for computer vision | Instance-based, feature-based, decision-based, generative, and meta-learning methods | |
| [281] | 2022 | A systematic literature review of domain adaptation methods for computer vision | Instance-based, feature-based, decision-based, generative, and meta-learning methods | |
| [300] | 2022 | A review of domain adaptation methods for computer vision | Instance-based, feature-based, decision-based, generative, and meta-learning methods | |
| [284] | 2019 | A study of domain adaptation for object detection in video | Instance-based, feature-based, decision-based, generative, and meta-learning methods | |
| [285] | 2020 | A study of domain adaptation for face recognition | Instance-based, feature-based, decision-based, generative, and meta-learning methods | |
| [286] | 2021 | A study of domain adaptation for medical image analysis | Instance-based, feature-based, decision-based, generative, and meta-learning methods | |
| [287] | 2022 | A study of domain adaptation for natural language processing | Instance-based, feature-based, decision-based, generative, and meta-learning methods | |
| [288] | 2022 | A study of domain adaptation for robotics | Instance-based, feature-based, decision-based, generative, and meta-learning methods | |
| [289] | 2022 | A study of domain adaptation for 3D vision | Instance-based, feature-based, decision-based, generative, and meta-learning methods | |
| [301] | 2019 | A study of multi-task learning for domain adaptation | Multi-task learning | |
| [295] | 2020 | A study of transfer learning for domain adaptation | Transfer learning | |
| [296] | 2019 | A study of self-supervised learning for domain adaptation | Self-supervised learning | |
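Most surveys listed above group methods into instance-based, feature-based, decision-based, generative, and meta-learning families. As one concrete illustration of the feature-based family, the sketch below computes a biased estimate of the squared maximum mean discrepancy (MMD), a discrepancy that feature-alignment methods commonly minimize between source and target features. It is an assumption-laden sketch, not a method taken from any of the surveyed works: the Gaussian kernel, the bandwidth `sigma=1.0`, the function names, and the toy 2-D features are all illustrative choices.

```python
import math


def gaussian_kernel(x, y, sigma=1.0):
    """RBF kernel k(x, y) = exp(-||x - y||^2 / (2 * sigma^2)) for equal-length vectors."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-sq_dist / (2.0 * sigma ** 2))


def mmd2_biased(source, target, sigma=1.0):
    """Biased (V-statistic) estimate of the squared MMD between two samples.

    MMD^2 = E[k(s, s')] + E[k(t, t')] - 2 E[k(s, t)], with each expectation
    replaced by the mean over all pairs (self-pairs included).
    """
    def mean_k(a, b):
        return sum(gaussian_kernel(x, y, sigma) for x in a for y in b) / (len(a) * len(b))

    return mean_k(source, source) + mean_k(target, target) - 2.0 * mean_k(source, target)


# Hypothetical 2-D features from a source domain and two candidate target domains.
src = [[0.0, 0.0], [0.1, -0.1], [-0.1, 0.1]]
tgt_near = [[0.05, 0.0], [0.0, 0.05], [-0.05, -0.05]]
tgt_far = [[3.0, 3.0], [3.1, 2.9], [2.9, 3.1]]
print(mmd2_biased(src, src))  # 0.0
print(mmd2_biased(src, tgt_near) < mmd2_biased(src, tgt_far))  # True
```

A value near zero means the two feature samples are hard to distinguish under the chosen kernel. In a training loop, such a term would be added to the supervised source-domain loss so that the feature extractor is pushed toward domain-invariant features; that loop is not shown here.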

2.3. Performance Metrics Comparison

2.4. Challenges and Insights from Cross-Domain Analysis

3. Applications and Real-World Scenarios

3.1. Domain Adaptation in Computer Vision: Real-World Applications

3.1.1. Autonomous Driving Systems

3.1.2. Medical Imaging and Diagnosis

3.1.3. Surveillance and Security

3.2. Domain Adaptation in Robotic Vision: Real-World Applications

3.2.1. Industrial Automation

3.2.2. Agriculture and Farming

3.2.3. Search and Rescue Missions

4. Conclusions

4.1. Summary of Findings

4.2. Contributions and Significance of Cross-Domain Analysis

4.3. Prospects and Open Challenges

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Wang, Q.; Meng, F.; Breckon, T.P. Data augmentation with norm-AE and selective pseudo-labelling for unsupervised domain adaptation. Neural Netw. 2023, 161, 614–625.

- Yu, Y.; Chen, W.; Chen, F.; Jia, W.; Lu, Q. Night-time vehicle model recognition based on domain adaptation. Multimed. Tools Appl. 2023, 1–20.

- Han, K.; Kim, Y.; Han, D.; Lee, H.; Hong, S. TL-ADA: Transferable Loss-based Active Domain Adaptation. Neural Netw. 2023, 161, 670–681.

- Gojić, G.; Vincan, V.; Kundačina, O.; Mišković, D.; Dragan, D. Non-adversarial Robustness of Deep Learning Methods for Computer Vision. arXiv 2023, arXiv:2305.14986.

- Yu, Z.; Li, J.; Zhu, L.; Lu, K.; Shen, H.T. Classification Certainty Maximization for Unsupervised Domain Adaptation. IEEE Trans. Circuits Syst. Video Technol. 2023, 33, 4232–4243.

- Ghaffari, R.; Helfroush, M.S.; Khosravi, A.; Kazemi, K.; Danyali, H.; Rutkowski, L. Towards domain adaptation with open-set target data: Review of theory and computer vision applications R1# C1. In Information Fusion; Elsevier: Amsterdam, The Netherlands, 2023; p. 101912.

- Xu, J.; Xiao, L.; López, A.M. Self-supervised domain adaptation for computer vision tasks. IEEE Access 2019, 7, 156694–156706.

- Venkateswara, H.; Panchanathan, S. Domain Adaptation in Computer Vision with Deep Learning; Springer: Berlin/Heidelberg, Germany, 2020.

- Petersen, K.; Vakkalanka, S.; Kuzniarz, L. Guidelines for conducting systematic mapping studies in software engineering: An update. Inf. Softw. Technol. 2015, 64, 1–18.

- Chen, W.; Hu, H. Generative attention adversarial classification network for unsupervised domain adaptation. Pattern Recognit. 2020, 107, 107440.

- Yang, J.; Zou, H.; Zhou, Y.; Xie, L. Robust adversarial discriminative domain adaptation for real-world cross-domain visual recognition. Neurocomputing 2021, 433, 28–36.

- Rahman, M.M.; Fookes, C.; Baktashmotlagh, M.; Sridharan, S. On minimum discrepancy estimation for deep domain adaptation. In Domain Adaptation for Visual Understanding; Springer: Berlin/Heidelberg, Germany, 2020; pp. 81–94.

- Dunnhofer, M.; Martinel, N.; Micheloni, C. Weakly-supervised domain adaptation of deep regression trackers via reinforced knowledge distillation. IEEE Robot. Autom. Lett. 2021, 6, 5016–5023.

- Yang, G.; Ding, M.; Zhang, Y. Bi-directional class-wise adversaries for unsupervised domain adaptation. In Applied Intelligence; Springer: Berlin/Heidelberg, Germany, 2022; pp. 1–17.

- Oza, P.; Sindagi, V.A.; Sharmini, V.V.; Patel, V.M. Unsupervised domain adaptation of object detectors: A survey. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 2022, 3217046.

- Csurka, G. Deep visual domain adaptation. In Proceedings of the 2020 22nd International Symposium on Symbolic and Numeric Algorithms for Scientific Computing (SYNASC), Timisoara, Romania, 1–4 September 2020; pp. 1–8.

- Chen, C.; Chen, Z.; Jiang, B.; Jin, X. Joint domain alignment and discriminative feature learning for unsupervised deep domain adaptation. Proc. AAAI Conf. Artif. Intell. 2019, 33, 3296–3303.

- Loghmani, M.R.; Robbiano, L.; Planamente, M.; Park, K.; Caputo, B.; Vincze, M. Unsupervised domain adaptation through inter-modal rotation for rgb-d object recognition. IEEE Robot. Autom. Lett. 2020, 5, 6631–6638. [Google Scholar] [CrossRef]

- Li, C.; Du, D.; Zhang, L.; Wen, L.; Luo, T.; Wu, Y.; Zhu, P. Spatial attention pyramid network for unsupervised domain adaptation. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; pp. 481–497. [Google Scholar]

- Dourado, A.; Guth, F.; de Campos, T.; Weigang, L. Domain adaptation for holistic skin detection. In Proceedings of the 2021 34th SIBGRAPI Conference on Graphics, Patterns and Images (SIBGRAPI), Gramado, Brazil, 18–22 October 2021; pp. 362–369. [Google Scholar]

- Venkateswara, H.; Chakraborty, S.; Panchanathan, S. Deep-Learning Systems for Domain Adaptation in Computer Vision: Learning Transferable Feature Representations. IEEE Signal Process. Mag. 2017, 34, 117–129. [Google Scholar] [CrossRef]

- Peng, X.; Li, Y.; Saenko, K. Domain2vec: Domain embedding for unsupervised domain adaptation. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; pp. 756–774. [Google Scholar]

- Bozorgtabar, B.; Mahapatra, D.; Thiran, J.-P. ExprADA: Adversarial domain adaptation for facial expression analysis. Pattern Recognit. 2020, 100, 107111. [Google Scholar] [CrossRef]

- Bateson, M.; Kervadec, H.; Dolz, J.; Lombaert, H.; Ben Ayed, I. Source-relaxed domain adaptation for image segmentation. In Proceedings of the Medical Image Computing and Computer Assisted Intervention–MICCAI 2020: 23rd International Conference, Lima, Peru, 4–8 October 2020; Springer International Publishing: Berlin/Heidelberg, Germany, 2020; pp. 490–499. [Google Scholar]

- Zhang, C.; Zhao, Q. Attention guided for partial domain adaptation. Inf. Sci. N. Y. 2021, 547, 860–869. [Google Scholar] [CrossRef]

- Han, C.; Zhou, D.; Xie, Y.; Gong, M.; Lei, Y.; Shi, J. Collaborative representation with curriculum classifier boosting for unsupervised domain adaptation. Pattern Recognit. 2021, 113, 107802. [Google Scholar] [CrossRef]

- Wittich, D.; Rottensteiner, F. Appearance based deep domain adaptation for the classification of aerial images. ISPRS J. Photogramm. Remote Sens. 2021, 180, 82–102. [Google Scholar] [CrossRef]

- Sahoo, A.; Panda, R.; Feris, R.; Saenko, K.; Das, A. Select, label, and mix: Learning discriminative invariant feature representations for partial domain adaptation. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 3–7 January 2023; pp. 4210–4219. [Google Scholar]

- Thota, M.; Leontidis, G. Contrastive domain adaptation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 24 June 2021; pp. 2209–2218. [Google Scholar]

- Mahyari, A.G.; Locker, T. Domain adaptation for robot predictive maintenance systems. arXiv 2018, arXiv:1809.08626. [Google Scholar]

- Hoffman, J.; Tzeng, E.; Park, T.; Zhu, J.; Isola, P.; Saenko, K.; Efros, A.A.; Darrell, T. CyCADA: Cycle-Consistent Adversarial Domain Adaptation. In Proceedings of the 35th International Conference on Machine Learning, Stockholm, Sweden, 10–15 July 2018. [Google Scholar]

- Tzeng, E.; Hoffman, J.; Saenko, K.; Darrell, T. Adversarial Discriminative Domain Adaptation. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 2962–2971. [Google Scholar]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778. [Google Scholar]

- Chen, L.-C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A. DeepLab: Semantic Image Segmentation with Deep Convolutional Nets, Atrous Convolution, and Fully Connected CRFs. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 834–848. [Google Scholar] [CrossRef] [PubMed]

- Ros, G.; Sellart, L.; Materzynska, J.; Vázquez, D.; López, A.M. The SYNTHIA Dataset: A Large Collection of Synthetic Images for Semantic Segmentation of Urban Scenes. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 3234–3243. [Google Scholar]

- Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. arXiv 2014, arXiv:1412.6980. [Google Scholar] [CrossRef]

- Richter, S.R.; Vineet, V.; Roth, S.; Koltun, V. Playing for Data: Ground Truth from Computer Games. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Springer International Publishing: Berlin/Heidelberg, Germany, 2016. [Google Scholar] [CrossRef]

- Cordts, M.; Omran, M.; Ramos, S.; Rehfeld, T.; Enzweiler, M.; Benenson, R.; Franke, U.; Roth, S.; Schiele, B. The Cityscapes Dataset for Semantic Urban Scene Understanding. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 3213–3223. [Google Scholar]

- Long, M.; Cao, Y.; Wang, J.; Jordan, M.I. Learning Transferable Features with Deep Adaptation Networks. In Proceedings of the International Conference on Machine Learning, Lille, France, 7–9 July 2015. [Google Scholar]

- Zhu, J.-Y.; Park, T.; Isola, P.; Efros, A.A. Unpaired Image-to-Image Translation Using Cycle-Consistent Adversarial Networks. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2242–2251. [Google Scholar]

- Kumari, S.; Singh, P. Deep learning for unsupervised domain adaptation in medical imaging: Recent advancements and future perspectives. arXiv 2023, arXiv:2308.01265. [Google Scholar]

- Liang, J.; He, R.; Tan, T.P. A Comprehensive Survey on Test-Time Adaptation under Distribution Shifts. arXiv 2023, arXiv:2303.15361. [Google Scholar]

- Zhao, D.; Wang, S.; Zang, Q.; Quan, D.; Ye, X.; Jiao, L. Towards Better Stability and Adaptability: Improve Online Self-Training for Model Adaptation in Semantic Segmentation. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 18–22 June 2023. [Google Scholar]

- Fang, Y.; Yap, P.; Lin, W.; Zhu, H.; Liu, M. Source-Free Unsupervised Domain Adaptation: A Survey. arXiv 2022, arXiv:2301.00265. [Google Scholar]

- Wang, Y.; Liang, J.; Zhang, Z. Source Data-Free Cross-Domain Semantic Segmentation: Align, Teach and Propagate. arXiv 2021, arXiv:2106.11653. [Google Scholar]

- Paul, S.; Khurana, A.; Aggarwal, G. Unsupervised Adaptation of Semantic Segmentation Models without Source Data. arXiv 2021, arXiv:2112.02359. [Google Scholar]

- Wang, Y.; Liang, J.; Zhang, Z.; Xiao, J.; Mei, S.; Zhang, Z. Domain Adaptive Semantic Segmentation without Source Data: Align, Teach and Propagate. arXiv 2021, arXiv:2110.06484. [Google Scholar]

- Csurka, G.; Volpi, R.; Chidlovskii, B. Unsupervised Domain Adaptation for Semantic Image Segmentation: A Comprehensive Survey. arXiv 2021, arXiv:2112.03241. [Google Scholar]

- Akkaya, I.B.; Halici, U. Self-training via Metric Learning for Source-Free Domain Adaptation of Semantic Segmentation. arXiv 2022, arXiv:2212.04227. [Google Scholar]

- Csurka, G.; Volpi, R.; Chidlovskii, B. Semantic Image Segmentation: Two Decades of Research. Found. Trends Comput. Graph. Vis. 2023, 14, 1–162. [Google Scholar] [CrossRef]

- Redmon, J.; Divvala, S.; Girshick, R.B.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788. [Google Scholar]

- Lin, T.Y.; Goyal, P.; Girshick, R.B.; He, K.; Dollár, P. Focal Loss for Dense Object Detection. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2999–3007. [Google Scholar]

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.E.; Fu, C.-Y.; Berg, A. SSD: Single Shot MultiBox Detector. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Springer International Publishing: Berlin/Heidelberg, Germany, 2016; pp. 21–37. [Google Scholar]

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767. [Google Scholar]

- Biswas, D.; Tešić, J. Progressive Domain Adaptation with Contrastive Learning for Object Detection in the Satellite Imagery. arXiv 2022, arXiv:2209.02564. [Google Scholar] [CrossRef]

- Ren, S.; He, K.; Girshick, R.B.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149. [Google Scholar] [CrossRef]

- Lin, T.Y.; Maire, M.; Belongie, S.J.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common Objects in Context. In Proceedings of the Computer Vision–ECCV 2014: 13th European Conference, Zurich, Switzerland, 6–12 September 2014; Springer International Publishing: Berlin/Heidelberg, Germany, 2014; pp. 740–755. [Google Scholar]

- Lin, T.Y.; Dollár, P.; Girshick, R.B.; He, K.; Hariharan, B.; Belongie, S.J. Feature Pyramid Networks for Object Detection. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 936–944. [Google Scholar]

- Bochkovskiy, A.; Wang, C.-Y.; Liao, H. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934. [Google Scholar]

- Cheng, G.; Yuan, X.; Yao, X.; Yan, K.; Zeng, Q.; Han, J. Towards Large-Scale Small Object Detection: Survey and Benchmarks. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 13467–13488. [Google Scholar] [CrossRef] [PubMed]

- Zhang, X.; Feng, Y.; Zhang, S.; Wang, N.; Mei, S.; He, M. Semi-Supervised Person Detection in Aerial Images with Instance Segmentation and Maximum Mean Discrepancy Distance. Remote Sens. 2023, 15, 2928. [Google Scholar] [CrossRef]

- Xiong, Z.; Song, T.; He, S.; Yao, Z.; Wu, X. A unified and costless approach for improving small and long-tail object detection in aerial images of traffic scenarios. Appl. Intell. 2022, 53, 14426–14447. [Google Scholar] [CrossRef]

- Leng, J.; Mo, M.; Zhou, Y.; Gao, C.; Li, W.; Gao, X. Pareto Refocusing for Drone-View Object Detection. IEEE Trans. Circuits Syst. Video Technol. 2023, 33, 1320–1334. [Google Scholar] [CrossRef]

- Xu, C.; Wang, J.; Yang, W.; Yu, H.; Yu, L.; Xia, G. RFLA: Gaussian Receptive Field based Label Assignment for Tiny Object Detection. In European Conference on Computer Vision; Springer Nature: Cham, Switzerland, 2022; pp. 526–543. [Google Scholar]

- Liu, Y.; Li, W.; Tan, L.; Huang, X.; Zhang, H.; Jiang, X. DB-YOLOv5: A UAV Object Detection Model Based on Dual Backbone Network for Security Surveillance. Electronics 2023, 12, 3296. [Google Scholar] [CrossRef]

- Wan, Y.; Liao, Z.; Liu, J.; Song, W.; Ji, H.; Gao, Z. Small object detection leveraging density-aware scale adaptation. Photogramm. Rec. 2023, 38, 160–175. [Google Scholar] [CrossRef]

- Zhang, Y.; Wu, C.; Guo, W.; Zhang, T.; Li, W. CFANet: Efficient Detection of UAV Image Based on Cross-Layer Feature Aggregation. IEEE Trans. Geosci. Remote Sens. 2023, 61, 1–11. [Google Scholar] [CrossRef]

- Li, X.; Diao, W.; Mao, Y.; Gao, P.; Mao, X.; Li, X.; Sun, X. OGMN: Occlusion-guided Multi-task Network for Object Detection in UAV Images. arXiv 2023, arXiv:2304.11805. [Google Scholar] [CrossRef]

- Lu, S.; Lu, H.; Dong, J.; Wu, S. Object Detection for UAV Aerial Scenarios Based on Vectorized IOU. Sensors 2023, 23, 3061. [Google Scholar] [CrossRef]

- Zhou, B.; Khosla, A.; Lapedriza, À.; Oliva, A.; Torralba, A. Learning Deep Features for Discriminative Localization. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 2921–2929. [Google Scholar]

- Singh, K.; Lee, Y.J. Hide-and-Seek: Forcing a Network to be Meticulous for Weakly-Supervised Object and Action Localization. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 3544–3553. [Google Scholar]

- Yun, S.; Han, D.; Oh, S.; Chun, S.; Choe, J.; Yoo, Y.J. CutMix: Regularization Strategy to Train Strong Classifiers With Localizable Features. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 6022–6031. [Google Scholar]

- Choe, J.; Shim, H. Attention-Based Dropout Layer for Weakly Supervised Object Localization. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 2214–2223. [Google Scholar]

- Xue, H.; Liu, C.; Wan, F.; Jiao, J.; Ji, X.; Ye, Q. DANet: Divergent Activation for Weakly Supervised Object Localization. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 6588–6597. [Google Scholar]

- Zhang, X.; Wei, Y.; Feng, J.; Yang, Y.; Huang, T.S. Adversarial Complementary Learning for Weakly Supervised Object Localization. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 1325–1334. [Google Scholar]

- Zhang, X.; Wei, Y.; Kang, G.; Yang, Y.; Huang, T. Self-produced Guidance for Weakly-supervised Object Localization. arXiv 2018, arXiv:1807.08902. [Google Scholar]

- Wah, C.; Branson, S.; Welinder, P.; Perona, P.; Belongie, S. The Caltech-UCSD Birds-200-2011 Dataset. 2011. Available online: https://authors.library.caltech.edu/records/cvm3y-5hh21 (accessed on 11 September 2023).

- Zhao, Y.; Ye, Q.; Wu, W.; Shen, C.; Wan, F. Generative Prompt Model for Weakly Supervised Object Localization. arXiv 2023, arXiv:2307.09756. [Google Scholar]

- Xie, J.; Luo, Z.; Li, Y.; Liu, H.; Shen, L.; Shou, M.Z. Open-World Weakly-Supervised Object Localization. arXiv 2023, arXiv:2304.08271. [Google Scholar]

- Shao, F.; Luo, Y.; Wu, S.; Li, Q.; Gao, F.; Yang, Y.; Xiao, J. Further Improving Weakly-supervised Object Localization via Causal Knowledge Distillation. arXiv 2023, arXiv:2301.01060. [Google Scholar]

- Shaharabany, T.; Tewel, Y.; Wolf, L. What is Where by Looking: Weakly-Supervised Open-World Phrase-Grounding without Text Inputs. arXiv 2022, arXiv:2206.09358. [Google Scholar]

- Xu, L.; Ouyang, W.; Bennamoun, M.; Boussaid, F.; Xu, D. Learning Multi-Modal Class-Specific Tokens for Weakly Supervised Dense Object Localization. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 19596–19605. [Google Scholar]

- Shao, F.; Chen, L.; Shao, J.; Ji, W.; Xiao, S.; Ye, L.; Zhuang, Y.; Xiao, J. Deep Learning for Weakly-Supervised Object Detection and Localization: A Survey. Neurocomputing 2022, 496, 192–207. [Google Scholar] [CrossRef]

- Xu, R.; Luo, Y.; Hu, H.; Du, B.; Shen, J.; Wen, Y. Rethinking the Localization in Weakly Supervised Object Localization. arXiv 2023, arXiv:2308.06161. [Google Scholar]

- Planamente, M.; Plizzari, C.; Cannici, M.; Ciccone, M.; Strada, F.; Bottino, A.; Matteucci, M.; Caputo, B. DA4Event: Towards bridging the sim-to-real gap for event cameras using domain adaptation. IEEE Robot. Autom. Lett. 2021, 6, 6616–6623. [Google Scholar] [CrossRef]

- Chen, C.; Xie, W.; Wen, Y.; Huang, Y.; Ding, X. Multiple-source domain adaptation with generative adversarial nets. Knowl. Based Syst. 2020, 199, 105962. [Google Scholar] [CrossRef]

- Bucci, S.; Loghmani, M.R.; Tommasi, T. On the effectiveness of image rotation for open set domain adaptation. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; pp. 422–438. [Google Scholar]

- Athanasiadis, C.; Hortal, E.; Asteriadis, S. Audio–visual domain adaptation using conditional semi-supervised generative adversarial networks. Neurocomputing 2020, 397, 331–344. [Google Scholar] [CrossRef]

- Scalbert, M.; Vakalopoulou, M.; Couzinié-Devy, F. Multi-source domain adaptation via supervised contrastive learning and confident consistency regularization. arXiv 2021, arXiv:2106.16093. [Google Scholar]

- Roy, S.; Trapp, M.; Pilzer, A.; Kannala, J.; Sebe, N.; Ricci, E.; Solin, A. Uncertainty-guided source-free domain adaptation. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; pp. 537–555. [Google Scholar]

- Wang, Y.; Nie, L.; Li, Y.; Chen, S. Soft large margin clustering for unsupervised domain adaptation. Knowl. Based Syst. 2020, 192, 105344. [Google Scholar] [CrossRef]

- Zhang, C.; Zhao, Q.; Wang, Y. Transferable attention networks for adversarial domain adaptation. Inf. Sci. N. Y. 2020, 539, 422–433. [Google Scholar] [CrossRef]

- Hou, J.; Ding, X.; Deng, J.D.; Cranefield, S. Unsupervised domain adaptation using deep networks with cross-grafted stacks. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019. [Google Scholar]

- Huang, J.; Guan, D.; Xiao, A.; Lu, S. RDA: Robust domain adaptation via Fourier adversarial attacking. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Virtual, 11–17 October 2021; pp. 8988–8999. [Google Scholar]

- Kim, T.; Kim, C. Attract, perturb, and explore: Learning a feature alignment network for semi-supervised domain adaptation. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; pp. 591–607. [Google Scholar]

- Kieu, M.; Bagdanov, A.D.; Bertini, M.; Del Bimbo, A. Domain adaptation for privacy-preserving pedestrian detection in thermal imagery. In Proceedings of the Image Analysis and Processing–ICIAP 2019: 20th International Conference, Trento, Italy, 9–13 September 2019; pp. 203–213. [Google Scholar]

- Porav, H.; Bruls, T.; Newman, P. Don’t worry about the weather: Unsupervised condition-dependent domain adaptation. In Proceedings of the 2019 IEEE Intelligent Transportation Systems Conference (ITSC), Auckland, New Zealand, 27–30 October 2019; pp. 33–40. [Google Scholar]

- Xu, Y.; Chen, L.; Duan, L.; Tsang, I.W.; Luo, J. Open Set Domain Adaptation With Soft Unknown-Class Rejection. IEEE Trans. Neural Netw. Learn. Syst. 2023, 34, 1601–1612. [Google Scholar] [CrossRef]

- Braytee, A.; Naji, M.; Kennedy, P.J. Unsupervised domain-adaptation-based tensor feature learning with structure preservation. IEEE Trans. Artif. Intell. 2022, 3, 370–380. [Google Scholar] [CrossRef]

- Fujii, K.; Kawamoto, K. Generative and self-supervised domain adaptation for one-stage object detection. Array 2021, 11, 100071. [Google Scholar] [CrossRef]

- Kurmi, V.K.; Kumar, S.; Namboodiri, V.P. Attending to discriminative certainty for domain adaptation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 491–500. [Google Scholar]

- Li, R.; Jia, X.; He, J.; Chen, S.; Hu, Q. T-SVDNet: Exploring high-order prototypical correlations for multi-source domain adaptation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Virtual, 11–17 October 2021; pp. 9991–10000. [Google Scholar]

- Zhao, S.; Fu, H.; Gong, M.; Tao, D. Geometry-aware symmetric domain adaptation for monocular depth estimation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 9788–9798. [Google Scholar]

- Wen, J.; Yuan, J.; Zheng, Q.; Liu, R.; Gong, Z.; Zheng, N. Hierarchical domain adaptation with local feature patterns. Pattern Recognit. 2022, 124, 108445. [Google Scholar] [CrossRef]

- Jiang, Q.; Zhang, Y.; Bao, F.; Zhao, X.; Zhang, C.; Liu, P. Two-step domain adaptation for underwater image enhancement. Pattern Recognit. 2022, 122, 108324. [Google Scholar] [CrossRef]

- Zhang, L.; Fu, J.; Wang, S.; Zhang, D.; Dong, Z.; Chen, C.L.P. Guide subspace learning for unsupervised domain adaptation. IEEE Trans. Neural Netw. Learn. Syst. 2019, 31, 3374–3388. [Google Scholar] [CrossRef]

- Wang, J.; Chen, J.; Lin, J.; Sigal, L.; de Silva, C.W. Discriminative feature alignment: Improving transferability of unsupervised domain adaptation by Gaussian-guided latent alignment. Pattern Recognit. 2021, 116, 107943. [Google Scholar] [CrossRef]

- Delussu, R.; Putzu, L.; Fumera, G.; Roli, F. Online domain adaptation for person re-identification with a human in the loop. In Proceedings of the 2020 25th International Conference on Pattern Recognition (ICPR), Milan, Italy, 10–15 January 2021; pp. 3829–3836. [Google Scholar]

- Kang, Q.; Yao, S.; Zhou, M.; Zhang, K.; Abusorrah, A. Effective visual domain adaptation via generative adversarial distribution matching. IEEE Trans. Neural Netw. Learn. Syst. 2020, 32, 3919–3929. [Google Scholar] [CrossRef] [PubMed]

- Klingner, M.; Termöhlen, J.-A.; Ritterbach, J.; Fingscheidt, T. Unsupervised batchnorm adaptation (UBNA): A domain adaptation method for semantic segmentation without using source domain representations. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 4–8 January 2022; pp. 210–220. [Google Scholar]

- Sun, T.; Segu, M.; Postels, J.; Wang, Y.; Van Gool, L.; Schiele, B.; Tombari, F.; Yu, F. SHIFT: A synthetic driving dataset for continuous multi-task domain adaptation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 21371–21382. [Google Scholar]

- Zhang, Y.; Wang, N.; Cai, S. Adversarial sliced Wasserstein domain adaptation networks. Image Vis. Comput. 2020, 102, 103974. [Google Scholar] [CrossRef]

- Guizilini, V.; Li, J.; Ambruș, R.; Gaidon, A. Geometric unsupervised domain adaptation for semantic segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Virtual, 11–17 October 2021; pp. 8537–8547. [Google Scholar]

- Han, C.; Zhou, D.; Xie, Y.; Lei, Y.; Shi, J. Label propagation with multi-stage inference for visual domain adaptation. Knowl. Based Syst. 2021, 216, 106809. [Google Scholar] [CrossRef]

- Sun, Y.; Tzeng, E.; Darrell, T.; Efros, A.A. Unsupervised domain adaptation through self-supervision. arXiv 2019, arXiv:1909.11825. [Google Scholar]

- Shirdel, G.; Ghanbari, A. A survey on self-supervised learning methods for domain adaptation in deep neural networks focusing on the optimization problems. AUT J. Math. Comput. 2022, 3, 217–235. [Google Scholar]

- Yue, X.; Zheng, Z.; Zhang, S.; Gao, Y.; Darrell, T.; Keutzer, K.; Sangiovanni-Vincentelli, A. Prototypical cross-domain self-supervised learning for few-shot unsupervised domain adaptation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 19–25 June 2021; pp. 13834–13844. [Google Scholar]

- Sanabria, R.; Zambonelli, F.; Dobson, S.; Ye, J. ContrasGAN: Unsupervised domain adaptation in Human Activity Recognition via adversarial and contrastive learning. Pervasive Mob. Comput. 2021, 78, 101477. [Google Scholar] [CrossRef]

- Yazdanpanah, M.; Moradi, P. Visual Domain Bridge: A source-free domain adaptation for cross-domain few-shot learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 2868–2877. [Google Scholar]

- Liang, J.; Hu, D.; Wang, Y.; He, R.; Feng, J. Source data-absent unsupervised domain adaptation through hypothesis transfer and labeling transfer. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 8602–8617. [Google Scholar] [CrossRef]

- Shin, I.; Woo, S.; Pan, F.; Kweon, I.S. Two-phase pseudo label densification for self-training based domain adaptation. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; pp. 532–548. [Google Scholar]

- Chen, L.; Lou, Y.; He, J.; Bai, T.; Deng, M. Geometric anchor correspondence mining with uncertainty modeling for universal domain adaptation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 16134–16143. [Google Scholar]

- Gabourie, J.; Rostami, M.; Pope, P.E.; Kolouri, S.; Kim, K. Learning a domain-invariant embedding for unsupervised domain adaptation using class-conditioned distribution alignment. In Proceedings of the 2019 57th Annual Allerton Conference on Communication, Control, and Computing (Allerton), Monticello, IL, USA, 24–27 September 2019; pp. 352–359. [Google Scholar]

- Han, C.; Lei, Y.; Xie, Y.; Zhou, D.; Gong, M. Visual domain adaptation based on modified A-distance and sparse filtering. Pattern Recognit. 2020, 104, 107254. [Google Scholar]

- Li, H.; Wang, X.; Shen, F.; Li, Y.; Porikli, F.; Wang, M. Real-time deep tracking via corrective domain adaptation. IEEE Trans. Circuits Syst. Video Technol. 2019, 29, 2600–2612. [Google Scholar] [CrossRef]

- Chen, Z.; Zhuang, J.; Liang, X.; Lin, L. Blending-target domain adaptation by adversarial meta-adaptation networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 2248–2257. [Google Scholar]

- Tang, H.; Zhao, Y.; Lu, H. Unsupervised person re-identification with iterative self-supervised domain adaptation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Long Beach, CA, USA, 15–20 June 2019. [Google Scholar]

- Xu, R.; Liu, P.; Wang, L.; Chen, C.; Wang, J. Reliable weighted optimal transport for unsupervised domain adaptation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 14–19 June 2020; pp. 4394–4403. [Google Scholar]

- de CG Pereira, T.; de Campos, T.E. Domain Adaptation for Person Re-identification on New Unlabeled Data. In Proceedings of the 15th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications, Valletta, Malta, 27–29 February 2020; pp. 695–703. [Google Scholar]

- Yue, Z.; Kratzwald, B.; Feuerriegel, S. Contrastive domain adaptation for question answering using limited text corpora. arXiv 2021, arXiv:2108.13854. [Google Scholar]

- Liu, H.; Cao, Z.; Long, M.; Wang, J.; Yang, Q. Separate to adapt: Open set domain adaptation via progressive separation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 2927–2936. [Google Scholar]

- Ning, M.; Lu, D.; Wei, D.; Bian, C.; Yuan, C.; Yu, S.; Ma, K.; Zheng, Y. Multi-anchor active domain adaptation for semantic segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Virtual, 11–17 October 2021; pp. 9112–9122. [Google Scholar]

- Zhao, S.; Li, B.; Xu, P.; Keutzer, K. Multi-source domain adaptation in the deep learning era: A systematic survey. arXiv 2020, arXiv:2002.12169. [Google Scholar]

- Huang, J.; Lu, S.; Guan, D.; Zhang, X. Contextual-relation consistent domain adaptation for semantic segmentation. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; pp. 705–722. [Google Scholar]

- Tang, G.; Gao, X.; Chen, Z.; Zhong, H. Unsupervised adversarial domain adaptation with similarity diffusion for person re-identification. Neurocomputing 2021, 442, 337–347. [Google Scholar] [CrossRef]

- Feng, C.; He, Z.; Wang, J.; Lin, Q.; Zhu, Z.; Lu, J.; Xie, S. Domain adaptation with SBADA-GAN and Mean Teacher. Neurocomputing 2020, 396, 577–586. [Google Scholar] [CrossRef]

- Batanina, E.; Bekkouch, I.E.I.; Youssry, Y.; Khan, A.; Khattak, A.M.; Bortnikov, M. Domain adaptation for car accident detection in videos. In Proceedings of the 2019 Ninth International Conference on Image Processing Theory, Tools and Applications (IPTA), Istanbul, Turkey, 6–9 November 2019; pp. 1–6. [Google Scholar]

- Morerio, P.; Volpi, R.; Ragonesi, R.; Murino, V. Generative pseudo-label refinement for unsupervised domain adaptation. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Snowmass Village, CO, USA, 1–5 March 2020; pp. 3130–3139. [Google Scholar]

- Ayalew, T.W.; Ubbens, J.R.; Stavness, I. Unsupervised domain adaptation for plant organ counting. In Proceedings of the Computer Vision–ECCV 2020 Workshops, Glasgow, UK, 23–28 August 2020; pp. 330–346. [Google Scholar]

- Wang, S.; Wang, B.; Zhang, Z.; Heidari, A.A.; Chen, H. Class-aware sample reweighting optimal transport for multi-source domain adaptation. Neurocomputing 2023, 523, 213–223. [Google Scholar] [CrossRef]

- da Costa, P.R.D.O.; Akçay, A.; Zhang, Y.; Kaymak, U. Remaining useful lifetime prediction via deep domain adaptation. Reliab. Eng. Syst. Saf. 2020, 195, 106682. [Google Scholar] [CrossRef]

- Yang, S.; Wang, Y.; Van De Weijer, J.; Herranz, L.; Jui, S. Generalized source-free domain adaptation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Virtual, 11–17 October 2021; pp. 8978–8987. [Google Scholar]

- Ahmed, W.; Morerio, P.; Murino, V. Cleaning noisy labels by negative ensemble learning for source-free unsupervised domain adaptation. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 4–8 January 2022; pp. 1616–1625. [Google Scholar]

- Rahman, M.M.; Fookes, C.; Baktashmotlagh, M.; Sridharan, S. Correlation-aware adversarial domain adaptation and generalization. Pattern Recognit. 2020, 100, 107124. [Google Scholar] [CrossRef]

- Kurmi, V.K.; Namboodiri, V.P. Looking back at labels: A class based domain adaptation technique. In Proceedings of the 2019 International Joint Conference on Neural Networks (IJCNN), Budapest, Hungary, 14–19 July 2019; pp. 1–8. [Google Scholar]

- Truong, T.-D.; Duong, C.N.; Le, N.; Phung, S.L.; Rainwater, C.; Luu, K. BiMaL: Bijective maximum likelihood approach to domain adaptation in semantic scene segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Virtual, 11–17 October 2021; pp. 8548–8557. [Google Scholar]

- Tian, Y.; Zhu, S. Partial domain adaptation on semantic segmentation. IEEE Trans. Circuits Syst. Video Technol. 2021, 32, 3798–3809. [Google Scholar] [CrossRef]

- Lin, C.; Li, Y.; Liu, Y.; Wang, X.; Geng, S. Building damage assessment from post-hurricane imageries using unsupervised domain adaptation with enhanced feature discrimination. IEEE Trans. Geosci. Remote Sens. 2021, 60, 1–10. [Google Scholar] [CrossRef]

- Xu, R.; Li, G.; Yang, J.; Lin, L. Larger norm more transferable: An adaptive feature norm approach for unsupervised domain adaptation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1426–1435. [Google Scholar]

- Hartley, Z.K.J.; French, A.P. Domain adaptation of synthetic images for wheat head detection. Plants 2021, 10, 2633.

- Csurka, G.; Hospedales, T.M.; Salzmann, M.; Tommasi, T. Visual Domain Adaptation in the Deep Learning Era; Morgan & Claypool Publishers: Kentfield, CA, USA, 2022; ISBN 9781636393421.

- Kurmi, V.K.; Subramanian, V.K.; Namboodiri, V.P. Domain impression: A source data free domain adaptation method. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Virtual, 5–9 January 2021; pp. 615–625.

- Jhoo, W.Y.; Heo, J.-P. Collaborative learning with disentangled features for zero-shot domain adaptation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Virtual, 11–17 October 2021; pp. 8896–8905.

- Yang, L.; Balaji, Y.; Lim, S.-N.; Shrivastava, A. Curriculum manager for source selection in multi-source domain adaptation. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; pp. 608–624.

- Luo, X.; Liu, S.; Fu, K.; Wang, M.; Song, Z. A learnable self-supervised task for unsupervised domain adaptation on point clouds. arXiv 2021, arXiv:2104.05164.

- Subhani, N.; Ali, M. Learning from scale-invariant examples for domain adaptation in semantic segmentation. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; pp. 290–306.

- Kieu, M.; Bagdanov, A.D.; Bertini, M.; Del Bimbo, A. Task-conditioned domain adaptation for pedestrian detection in thermal imagery. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; pp. 546–562.

- Azuma, C.; Ito, T.; Shimobaba, T. Adversarial domain adaptation using contrastive learning. Eng. Appl. Artif. Intell. 2023, 123, 106394.

- Zheng, L.; Ma, W.; Cai, Y.; Lu, T.; Wang, S. GPDAN: Grasp Pose Domain Adaptation Network for Sim-to-Real 6-DoF Object Grasping. IEEE Robot. Autom. Lett. 2023, 2023, 3286816.

- Sun, H.; Li, M. Enhancing unsupervised domain adaptation by exploiting the conceptual consistency of multiple self-supervised tasks. Sci. China Inf. Sci. 2023, 66, 142101.

- Huang, X.; Choi, K.-S.; Zhou, N.; Zhang, Y.; Chen, B.; Pedrycz, W. Shallow Inception Domain Adaptation Network for EEG-based Motor Imagery Classification. IEEE Trans. Cogn. Dev. Syst. 2023, 2023, 3279262.

- Zuo, Y.; Yao, H.; Zhuang, L.; Xu, C. Dual Structural Knowledge Interaction for Domain Adaptation. IEEE Trans. Multimed. 2023, 99, 1–15.

- Chen, J.; He, P.; Zhu, J.; Guo, Y.; Sun, G.; Deng, M.; Li, H. Memory-Contrastive Unsupervised Domain Adaptation for Building Extraction of High-Resolution Remote Sensing Imagery. IEEE Trans. Geosci. Remote Sens. 2023, 61, 1–15.

- Rizzoli, G.; Shenaj, D.; Zanuttigh, P. Source-Free Domain Adaptation for RGB-D Semantic Segmentation with Vision Transformers. arXiv 2023, arXiv:2305.14269.

- Wu, Z.; Li, Z.; Wei, D.; Shang, H.; Guo, J.; Chen, X.; Rao, Z.; Yu, Z.; Yang, J.; Li, S.; et al. Improving Neural Machine Translation Formality Control with Domain Adaptation and Reranking-based Transductive Learning. In Proceedings of the 20th International Conference on Spoken Language Translation (IWSLT 2023), Toronto, ON, Canada, 13–14 July 2023; pp. 180–186.

- Zhou, J.; Tian, Q.; Lu, Z. Progressive decoupled target-into-source multi-target domain adaptation. Inf. Sci. N. Y. 2023, 634, 140–156.

- Ma, X.; Zhang, X.; Wang, Z.; Pun, M.-O. Unsupervised domain adaptation augmented by mutually boosted attention for semantic segmentation of VHR remote sensing images. IEEE Trans. Geosci. Remote Sens. 2023, 61, 1–15.

- Westfechtel, T.; Yeh, H.-W.; Meng, Q.; Mukuta, Y.; Harada, T. Backprop Induced Feature Weighting for Adversarial Domain Adaptation with Iterative Label Distribution Alignment. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 2–7 January 2023; pp. 392–401.

- Park, J.; Barnard, F.; Hossain, S.; Rambhatla, S.; Fieguth, P. Is Generative Modeling-based Stylization Necessary for Domain Adaptation in Regression Tasks? arXiv 2023, arXiv:2306.01706.

- Liu, X.; Zhou, S.; Lei, T.; Jiang, P.; Chen, Z.; Lu, H. First-Person Video Domain Adaptation with Multi-Scene Cross-Site Datasets and Attention-Based Methods. IEEE Trans. Circuits Syst. Video Technol. 2023, 2023, 3281671.

- Mullick, K.; Jain, H.; Gupta, S.; Kale, A.A. Domain Adaptation of Synthetic Driving Datasets for Real-World Autonomous Driving. arXiv 2023, arXiv:2302.04149.

- Carrazco, J.I.D.; Kadam, S.K.; Morerio, P.; Del Bue, A.; Murino, V. Target-driven One-Shot Unsupervised Domain Adaptation. arXiv 2023, arXiv:2305.04628.

- Yu, Q.; Xi, N.; Yuan, J.; Zhou, Z.; Dang, K.; Ding, X. Source-Free Domain Adaptation for Medical Image Segmentation via Prototype-Anchored Feature Alignment and Contrastive Learning. arXiv 2023, arXiv:2307.09769.

- Goel, P.; Ganatra, A. Unsupervised Domain Adaptation for Image Classification and Object Detection Using Guided Transfer Learning Approach and JS Divergence. Sensors 2023, 23, 4436.

- Liang, Y.; Wu, W.; Li, H.; Han, F.; Liu, Z.; Xu, P.; Lian, X.; Chen, X. WiAi-ID: Wi-Fi-Based Domain Adaptation for Appearance-independent Passive Person Identification. IEEE Internet Things J. 2023, 2023, 3288767.

- Zhou, H.; Chang, Y.; Yan, W.; Yan, L. Unsupervised Cumulative Domain Adaptation for Foggy Scene Optical Flow. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 18–22 June 2023; pp. 9569–9578.

- Niu, Z.; Wang, H.; Sun, H.; Ouyang, S.; Chen, Y.; Lin, L. MCKD: Mutually Collaborative Knowledge Distillation for Federated Domain Adaptation And Generalization. In Proceedings of the ICASSP 2023-2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Rhodes Island, Greece, 4–10 June 2023; pp. 1–5.

- Houben, T.; Huisman, T.; Pisarenco, M.; van der Sommen, F.; de With, P. Training procedure for scanning electron microscope 3D surface reconstruction using unsupervised domain adaptation with simulated data. J. Micro/Nanopatterning Mater. Metrol. 2023, 22, 31208.

- Capliez, E.; Ienco, D.; Gaetano, R.; Baghdadi, N.; Salah, A.H. Temporal-Domain Adaptation for Satellite Image Time-Series Land-Cover Mapping With Adversarial Learning and Spatially Aware Self-Training. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 3645–3675.

- Zhao, R.; Zhu, Y.; Li, Y. CLA: A self-supervised contrastive learning method for leaf disease identification with domain adaptation. Comput. Electron. Agric. 2023, 211, 107967.

- Neff, C.; Pazho, A.D.; Tabkhi, H. Real-Time Online Unsupervised Domain Adaptation for Real-World Person Re-identification. arXiv 2023, arXiv:2306.03993.

- Taufique, M.N.; Jahan, C.S.; Savakis, A. Continual Unsupervised Domain Adaptation in Data-Constrained Environments. IEEE Trans. Artif. Intell. 2023, 2022, 323379.

- Wu, W. Tea Leaf Disease Classification using Domain Adaptation Method. Front. Comput. Intell. Syst. 2023, 3, 48–50.

- Wang, Y.; Luo, F. A Survey of Crowd Counting Algorithm Based on Domain Adaptation. Acad. J. Sci. Technol. 2023, 5, 35–37.

- Hu, X.; Huang, Y.; Li, B.; Lu, T. Inclusive FinTech Lending via Contrastive Learning and Domain Adaptation. arXiv 2023, arXiv:2305.05827.

- Liu, Z.; Shi, K.; Niu, D.; Huo, H.; Zhang, K. Dynamic classifier approximation for unsupervised domain adaptation. Signal Process. 2023, 206, 108915.

- Chen, D.; Zhu, H.; Yang, S. UC-SFDA: Source-free domain adaptation via uncertainty prediction and evidence-based contrastive learning. Knowl. Based Syst. 2023, 2023, 110728.

- Hu, X.; Zhu, Y. Dual Frame-Level and Region-Level Alignment for Unsupervised Video Domain Adaptation. Neurocomputing 2023, 2023, 126454.

- Zhang, Y.; Ji, J.; Ren, Z.; Ni, Q.; Gu, F.; Feng, K.; Yu, K.; Ge, J.; Lei, Z.; Liu, Z. Digital twin-driven partial domain adaptation network for intelligent fault diagnosis of rolling bearing. Reliab. Eng. Syst. Saf. 2023, 234, 109186.

- Fu, S.; Chen, J.; Chen, D.; He, C. CNNs/ViTs-CNNs/ViTs: Mutual distillation for unsupervised domain adaptation. Inf. Sci. N. Y. 2023, 622, 83–97.

- Du, Y.; Zhou, Y.; Xie, Y.; Zhou, D.; Shi, J.; Lei, Y. Unsupervised domain adaptation via progressive positioning of target-class prototypes. Knowl. Based Syst. 2023, 273, 110586.

- Zhou, X.; Tian, Y.; Wang, X. MEC-DA: Memory-Efficient Collaborative Domain Adaptation for Mobile Edge Devices. IEEE Trans. Mob. Comput. 2023, 2023, 3282941.

- Feng, Y.; Luo, Y.; Yang, J. Cross-platform privacy-preserving CT image COVID-19 diagnosis based on source-free domain adaptation. Knowl. Based Syst. 2023, 264, 110324.

- Zhou, Q.; Gu, Q.; Pang, J.; Lu, X.; Ma, L. Self-adversarial disentangling for specific domain adaptation. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 2023, 3238727.

- Wang, A.; Islam, M.; Xu, M.; Ren, H. Curriculum-Based Augmented Fourier Domain Adaptation for Robust Medical Image Segmentation. arXiv 2023, arXiv:2306.03511.

- Wang, J.; Wu, Z. Driver distraction detection via multi-scale domain adaptation network. IET Intell. Transp. Syst. 2023.

- Hur, S.; Shin, I.; Park, K.; Woo, S.; Kweon, I.S. Learning Classifiers of Prototypes and Reciprocal Points for Universal Domain Adaptation. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 3–7 January 2023; pp. 531–540.

- Fan, C.; Jin, Y.; Liu, P.; Zhao, W. Transferable visual pattern memory network for domain adaptation in anomaly detection. Eng. Appl. Artif. Intell. 2023, 121, 106013.

- Fu, Z.; Wang, S.; Zhao, X.; Long, S.; Wang, B. Causal view mechanism for adversarial domain adaptation. In Multimedia Tools and Applications; Springer: Berlin/Heidelberg, Germany, 2023; pp. 1–20.

- Ding, F.; Li, J.; Tian, W.; Zhang, S.; Yuan, W. Unsupervised Domain Adaptation Via Risk-Consistent Estimators. IEEE Trans. Multimed. 2023, 2023, 3277275.

- Gao, K.; Yu, A.; You, X.; Guo, W.; Li, K.; Huang, N. Integrating Multiple Sources Knowledge for Class Asymmetry Domain Adaptation Segmentation of Remote Sensing Images. arXiv 2023, arXiv:2305.09893.

- Han, Z.; Zhang, Z.; Wang, F.; He, R.; Su, W.; Xi, X.; Yin, Y. Discriminability and Transferability Estimation: A Bayesian Source Importance Estimation Approach for Multi-Source-Free Domain Adaptation. In Proceedings of the AAAI Conference on Artificial Intelligence, Washington, DC, USA, 7–14 February 2023; pp. 7811–7820.

- Zhang, Y.; Ren, Z.; Feng, K.; Yu, K.; Beer, M.; Liu, Z. Universal source-free domain adaptation method for cross-domain fault diagnosis of machines. Mech. Syst. Signal Process. 2023, 191, 110159.

- Ding, Y.; Jia, M.; Zhuang, J.; Cao, Y.; Zhao, X.; Lee, C.-G. Deep imbalanced domain adaptation for transfer learning fault diagnosis of bearings under multiple working conditions. Reliab. Eng. Syst. Saf. 2023, 230, 108890.

- Hong, Y.; Chern, W.-C.; Nguyen, T.V.; Cai, H.; Kim, H. Semi-supervised domain adaptation for segmentation models on different monitoring settings. Autom. Constr. 2023, 149, 104773.

- Liu, S.; Zhu, C.; Li, Y.; Tang, W. WUDA: Unsupervised Domain Adaptation Based on Weak Source Domain Labels. In Proceedings of the ICASSP 2023-2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Rhodes Island, Greece, 4–10 June 2023; pp. 1–5.

- Cai, Z.; Song, J.; Zhang, T.; Hu, C.; Jing, X.-Y. Local weight coupled network: Multi-modal unequal semi-supervised domain adaptation. In Multimedia Tools and Applications; Springer: Berlin/Heidelberg, Germany, 2023; pp. 1–27.

- Hernandez-Diaz, K.; Alonso-Fernandez, F.; Bigun, J. One-Shot Learning for Periocular Recognition: Exploring the Effect of Domain Adaptation and Data Bias on Deep Representations. arXiv 2023, arXiv:2307.05128.

- Zhu, Y.; Rahman, M.M.; Alam, M.A.U. Augmenting Deep Learning Adaptation for Wearable Sensor Data through Combined Temporal-Frequency Image Encoding. arXiv 2023, arXiv:2307.00883.

- Zhang, Z.; Xu, Y.; Song, J.; Zhou, Q.; Rasol, J.; Ma, L. Planet craters detection based on unsupervised domain adaptation. IEEE Trans. Aerosp. Electron. Syst. 2023, 2023, 3285512.

- Chen, Y.; Fang, X.; Liu, Y.; Zheng, W.; Kang, P.; Han, N.; Xie, S. Two-Step Strategy for Domain Adaptation Retrieval. IEEE Trans. Knowl. Data Eng. 2023, 2023, 3289882.

- Lee, J.; Lee, G. Feature Alignment by Uncertainty and Self-Training for Source-Free Unsupervised Domain Adaptation. Neural Netw. 2023, 161, 682–692.