Design of an Immersive Virtual Reality Framework to Enhance the Sense of Agency Using Affective Computing Technologies

Abstract

1. Introduction

2. The Relevance of the Sense of Agency in Virtual Reality

2.1. Sense of Agency in Health

2.2. Sense of Agency in the Learning Process

2.3. Sense of Agency in Human–Computer Interaction

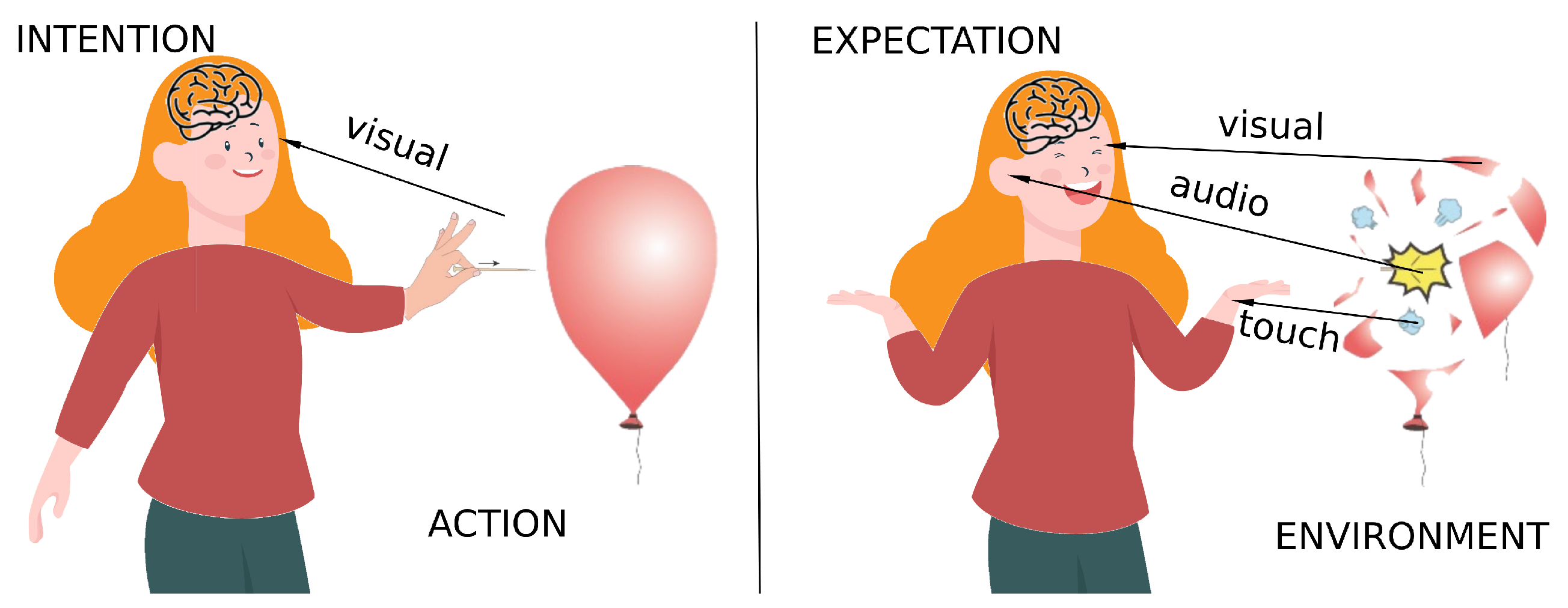

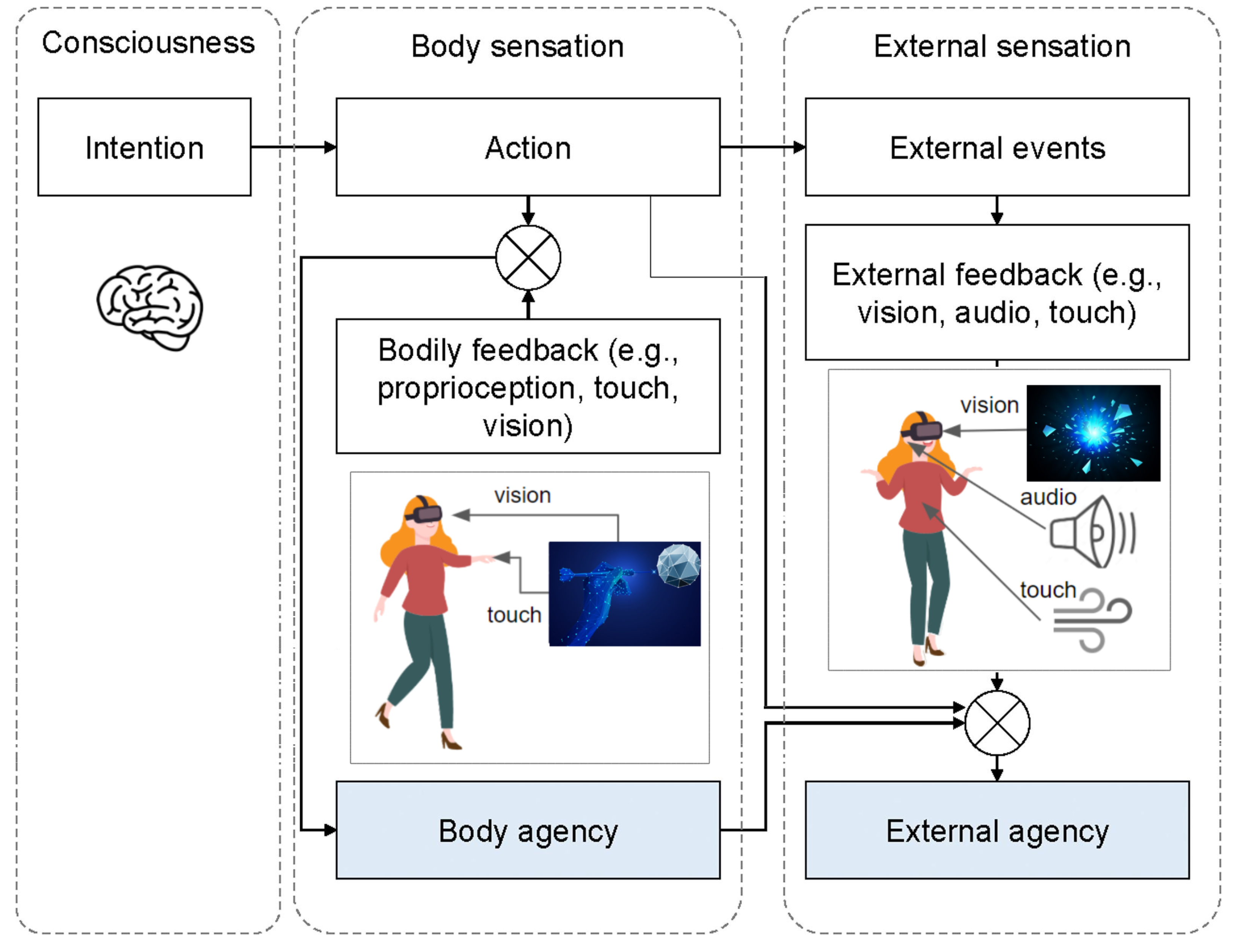

2.4. Sense of Agency: Theoretical Models

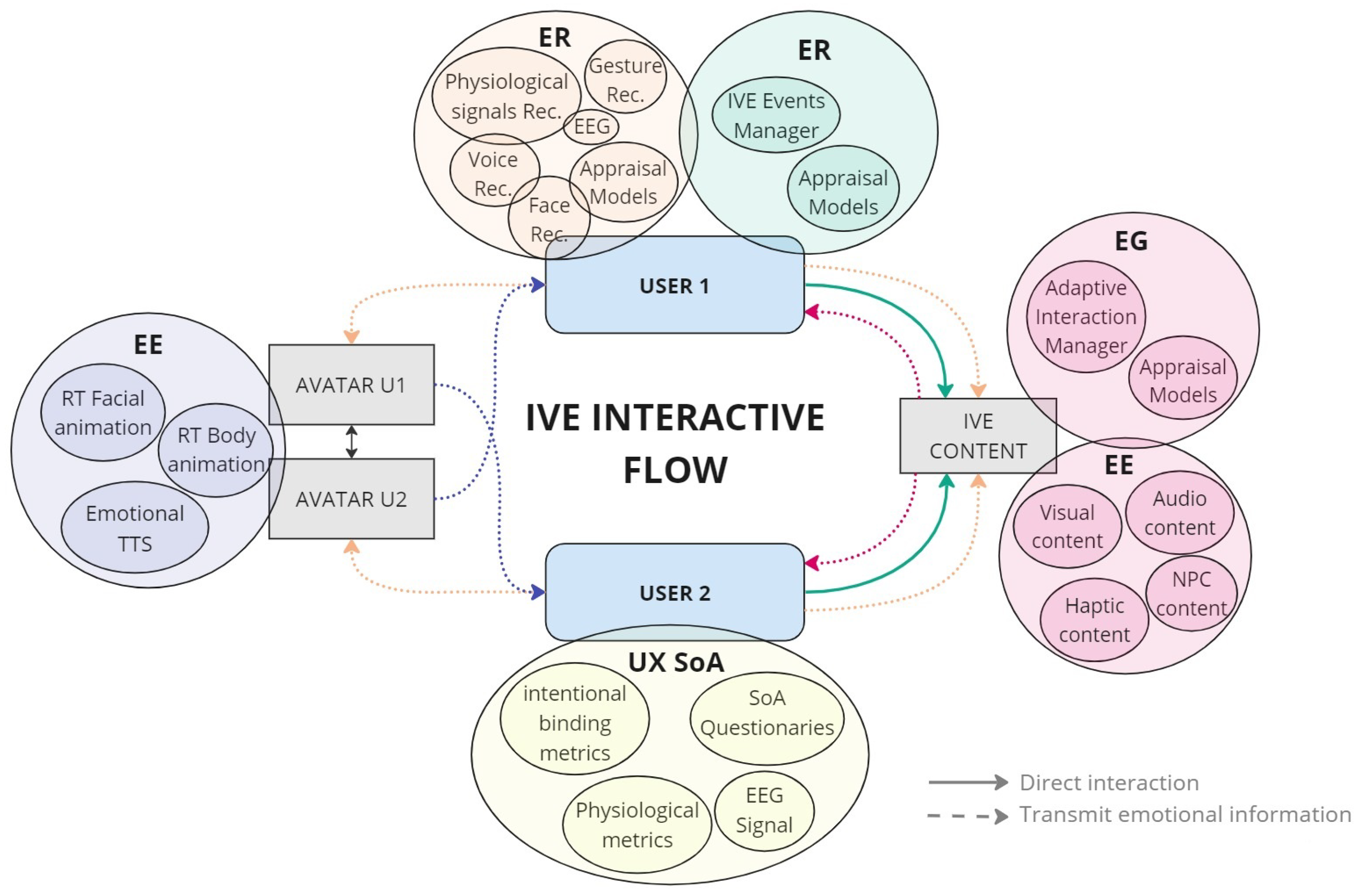

3. State-of-the-Art Technologies for Emotional Management

3.1. Affective Computing

- Emotion classification: models that allow defining a set of emotions that the system is able to recognise and express.

- Emotion recognition: study of the techniques to be used for recognising the emotions felt by users while they interact with the system.

- Generation of emotional responses: emotional models and technologies that allow the system to decide which emotion to respond with and how best to show that emotional response.

- Emotional expression: definition of the audiovisual techniques to be used by the system to show emotional responses.

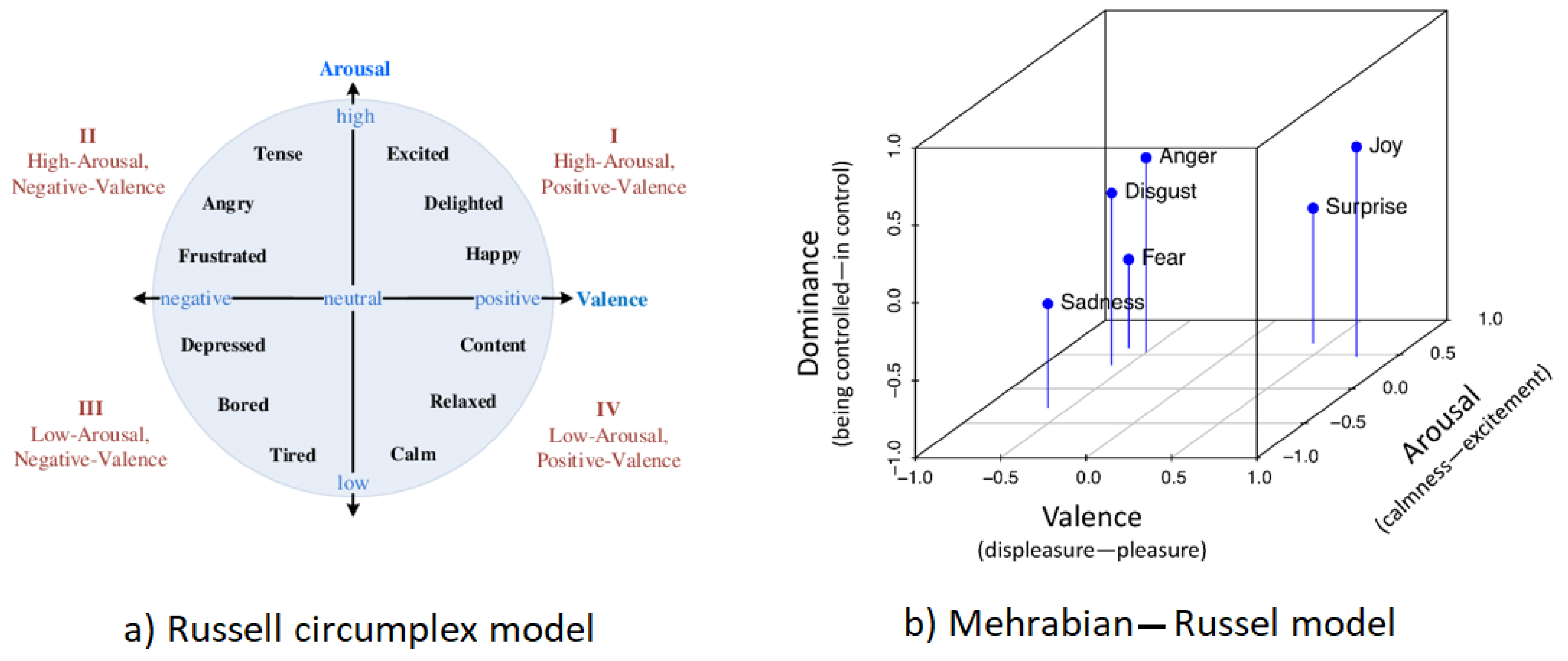

3.2. Classification of Emotions

3.3. Emotion Recognition

- Analysis of electrodermal activity (EDA): it is related to the sympathetic nervous system. Humans react to behavioural, cognitive and affective phenomena by activating sweat glands to prepare the body for action. The sensors measure this secretion of sweat [38].

- Analysis of the electrocardiogram (ECG): ECG is a reliable source of information and it has considerable potential to recognise and predict human emotions such as anger, joy, trust, sadness, anticipation and surprise. More specifically, to detect these emotions, it is required to extract the Heart Rate Variability (HRV) from ECG measures [39].

- Analysis of the electroencephalogram (EEG): EEG is an electrophysiological monitoring method used to register the electrical activity of the brain by the use of electrodes. EEG signals can reveal relevant characteristics of emotional states. Recently, several BCI emotion recognition techniques have been developed based on EEG [40]. Knowing that ECG, EDA and EEG are the main methods to detect emotions without users’ voluntary control over them, some authors prefer EEG-based systems for emotion recognition [41]. This is because ECG measurements experience a large delay between stimuli and emotional response, and EDA-based systems cannot report the valence dimension when used on their own.

- Analysis of the respiratory rate (RR): emotional states can be identified by means of their respiratory pattern. For instance, happiness and other related positive emotions produce significant respiratory variations, which include an increase in the pattern variability and a decrease in the volume of inspiration and breathing time. The effect of positive emotions on the respiratory flow depends on how exciting they are; i.e., the more exciting ones increase the respiratory rate. On the other hand, feeling disgust suppresses or halts respiration, probably as a natural reaction to avoid inhaling noxious elements [42].
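The ECG item above notes that Heart Rate Variability (HRV) has to be extracted from the ECG before emotions can be recognised. As a minimal sketch of that step, the following Python computes two widely used time-domain HRV features, SDNN and RMSSD, from a list of RR intervals. The function names and the assumption that R-peaks have already been detected are illustrative and are not taken from [39].

```python
import math


def rr_from_r_peaks(peak_times_s):
    """Convert R-peak timestamps (seconds) into RR intervals (milliseconds)."""
    return [(b - a) * 1000.0 for a, b in zip(peak_times_s, peak_times_s[1:])]


def hrv_features(rr_ms):
    """Return mean heart rate (bpm), SDNN (ms) and RMSSD (ms).

    rr_ms: RR intervals in milliseconds (assumed already cleaned of artefacts).
    """
    n = len(rr_ms)
    if n < 3:
        raise ValueError("need at least three RR intervals")
    mean_rr = sum(rr_ms) / n
    # SDNN: sample standard deviation of the RR intervals.
    sdnn = math.sqrt(sum((rr - mean_rr) ** 2 for rr in rr_ms) / (n - 1))
    # RMSSD: root mean square of successive RR differences.
    diffs = [b - a for a, b in zip(rr_ms, rr_ms[1:])]
    rmssd = math.sqrt(sum(d * d for d in diffs) / len(diffs))
    return {
        "mean_hr_bpm": 60000.0 / mean_rr,
        "sdnn_ms": sdnn,
        "rmssd_ms": rmssd,
    }


if __name__ == "__main__":
    print(hrv_features([800.0, 810.0, 790.0, 800.0]))
```

Features such as these would then feed a classifier or a valence/arousal estimator; which features and which model work best is an empirical question that this sketch does not address.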

3.4. Generating Emotional Responses

3.5. Expressing Emotions

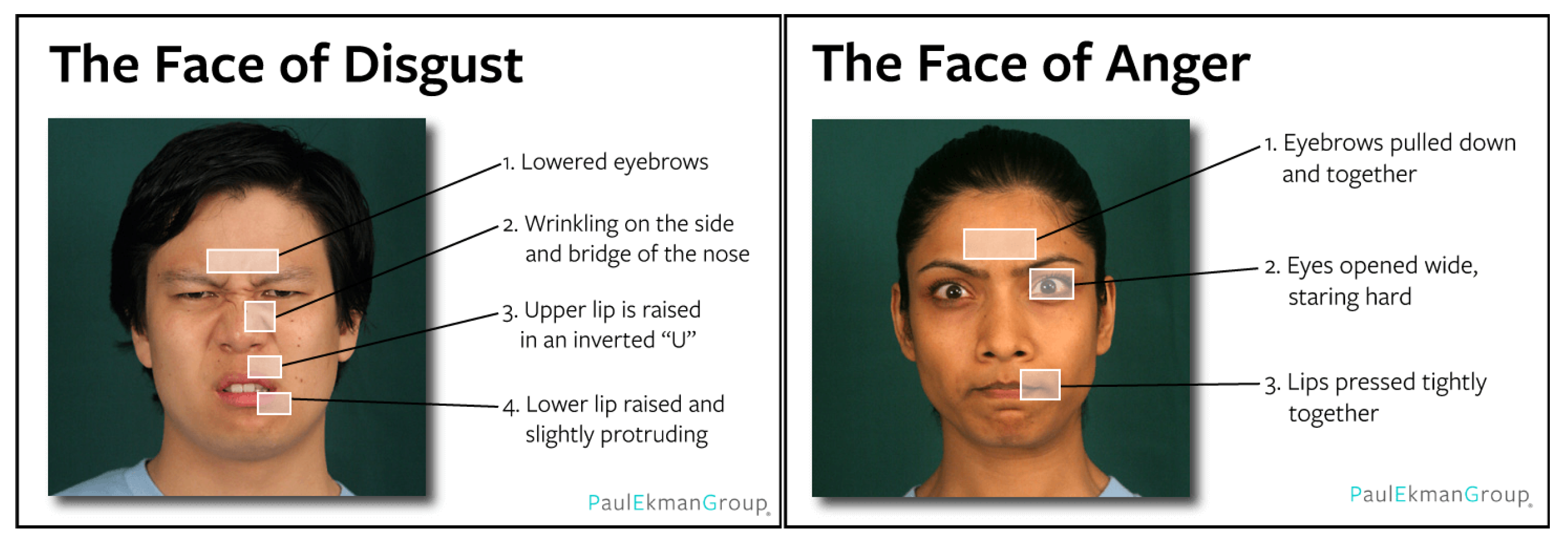

- Non-verbal language: Mehrabian [64] concluded that, in the case of face-to-face communication, the three parts of a message (words, tone of voice and body language) should be coherently supportive of one another. In fact, 7% of our acceptance and empathy for a message emitted face to face depends on our acceptance of the choice of words, 38% depends on the use of voice (tone, volume) and 55% depends on body language (gestures and facial expression). Because of this, when expressing emotions, non-verbal language has an important impact. Virtual Reality environments allow both non-verbal communication, due to the use of avatars with full-body movements, and verbal communication in real time, although they usually lack a complete system for non-verbal signals [65]. In [66], the authors highlight the lack of interaction paradigms with facial expression and the almost nonexistence of significant control over environmental aspects of non-verbal communication, such as posture, pose and social status.

  Several research works have focused on defining how human beings express emotions with their body and face. Although studies such as Giddens’ [67] state that one of the main aspects of non-verbal communication when communicating emotions is facial emotional expression, body movements are also considered essential to accompany words [68]. To generate believable avatars, it is imperative that an animation engine is developed based on these studies.

  On the one hand, in the area of facial animation, there is diverse research. Many of these studies are based on [43]. Ekman and Friesen developed the Facial Action Coding System (FACS), an exhaustive system based on human anatomy that captures all visually differentiable facial movements. FACS describes this facial activity by means of 44 unique actions called Action Units (AUs), which categorise all possible head movements and eye positions.

  On the other hand, body animation in the field of Affective Computing has been less researched than facial expression [69], although some studies focus on body positions and the movement of the hands in non-verbal expressiveness [70,71]. Nowadays, most of the work related to body animation is based on data collected by motion capture (mocap) systems. Mocap is a popular technique for representing human motion based on tracking markers corresponding to different regions or joints of the human body [72].

  Nevertheless, studies of this kind apply only when the avatar has an anthropomorphic appearance. In the case of other types of emotional agents with no human shape, the way to provide them with some tools for non-verbal communication could be to modify their shape [73] or colour [74], or to apply some changes to the way they move. Following this last approach, there is research that analyses the effect that amplitude, acceleration and duration of movements have on different emotions [75]. Other studies analyse the variation of heart rate [76] and respiratory rate [42] to link each rhythm variation to its corresponding emotion.

- Verbal Language: Dialog Systems and Digital Storytelling: Bickmore and Cassell [77] confirmed that non-verbal channels are important not only for transmitting more information and complementing the voice channel but also for regulating the conversational flow. Because of this, when the IVE is expressing an emotional response, it is important to provide it with mechanisms that can adapt the conversational flow to the emotional states of the interlocutors. Several authors work with Digital Storytelling techniques to adapt the IVE’s narrative flow to the emotions of participants and offer a more natural and adaptive verbal response. For instance, in [78], players’ physiological signals were mapped into valence and arousal, and they were used as interactive input to adapt a video game narrative.

- Audiovisual Mechanisms: Beyond expressing an emotional response through an avatar’s verbal or non-verbal language, the way in which content is shown inside the IVE is also an option to emotionally respond to the user. For instance, augmenting verbal communication by adding sounds that are not related to talking, such as special effects and narrative music, can not only offer more information about the content, but also affect the atmosphere and the mood. This happens because sound is capable of achieving emotional engagement, of improving the learning process and of augmenting the overall immersion [79]. In studies such as [78], sounds such as music are not the only additions to the IVE. Visual elements are also included to accompany the avatar, like a flying ball that changes its colour depending on the users’ emotions detected by physiological measures. Results show that participants are in favour of narrative, musical and visual adaptations of video games based on real-time analysis of their emotions.

  In the literature, several emotion-based multimedia databases can be found to elicit emotions. The content to be shown can range from a static image (such as the IAPS database [80], which is labelled according to the affective dimensions of valence, arousal and dominance/control) to a single sound (such as the IADS database [81], which is labelled according to the same three dimensions) or a video sequence (such as the CAAV database [82], which provides a wide range of standardised stimuli based on two emotional dimensions: valence and arousal). Virtual Reality can be an interesting medium due to its complexity and flexibility, as it offers numerous possibilities to transmit and elicit human emotions. An example is the set of ten scenarios developed in [83], two for each of the five basic emotions (rage, fear, disgust, sadness and joy), which contain design elements mapped in valence and arousal dimensions.
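The audiovisual item above mentions a visual element whose colour follows the user's detected emotion. As a minimal sketch of how such a cue might be driven, the following Python maps a valence/arousal estimate to an RGB colour. The mapping itself (hue from valence, brightness from arousal), the value ranges and the function name `cue_colour` are assumptions made for illustration; they are not the mapping used in [78].

```python
import colorsys


def _clamp(x, lo, hi):
    """Restrict x to the closed interval [lo, hi]."""
    return max(lo, min(hi, x))


def cue_colour(valence, arousal):
    """Map valence and arousal, each in [-1, 1], to an (R, G, B) tuple of 0-255 ints.

    Assumed mapping, for illustration only:
      - valence -1 .. +1 moves the hue from red to green;
      - arousal -1 .. +1 moves the brightness from 50% to 100%.
    """
    v = _clamp(valence, -1.0, 1.0)
    a = _clamp(arousal, -1.0, 1.0)
    hue = (v + 1.0) / 2.0 * (1.0 / 3.0)      # 0.0 = red, 1/3 = green
    brightness = 0.5 + 0.25 * (a + 1.0)      # 0.5 .. 1.0
    r, g, b = colorsys.hsv_to_rgb(hue, 1.0, brightness)
    return (round(r * 255), round(g * 255), round(b * 255))


if __name__ == "__main__":
    print(cue_colour(0.8, 0.6))    # pleasant and excited: bright, greenish
    print(cue_colour(-0.8, -0.6))  # unpleasant and calm: dim, reddish
```

A real IVE would probably smooth the estimate over time before updating the colour, so that noisy physiological readings do not make the cue flicker.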

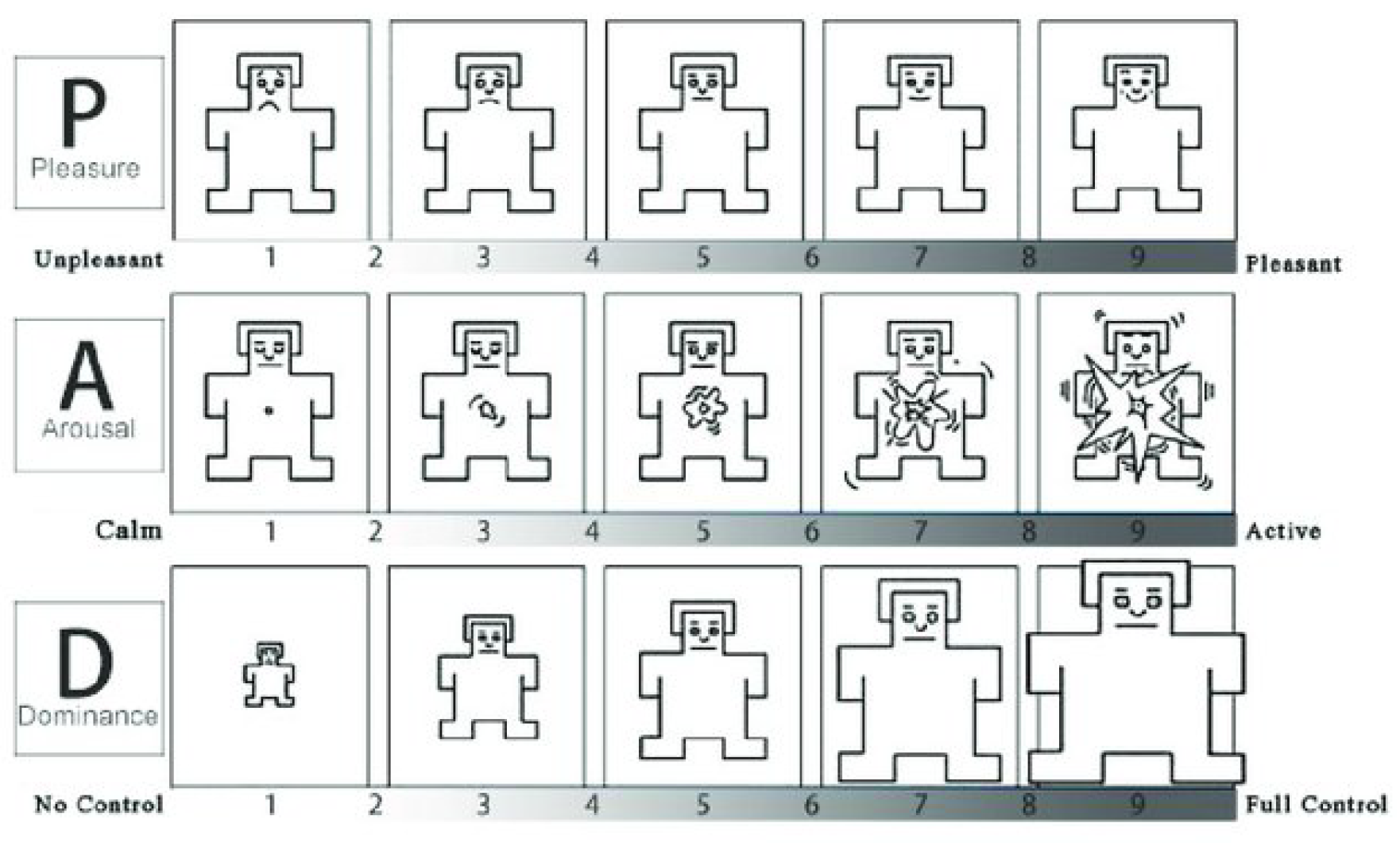

3.6. Methods for User Evaluation

3.6.1. Subjective Measures

3.6.2. Objective Measures

3.7. Frameworks Related to Immersion and Sense of Agency in Immersive Virtual Environments

4. Proposed Framework to Improve the Sense of Agency in Immersive Virtual Environments

5. Discussion and Future Work

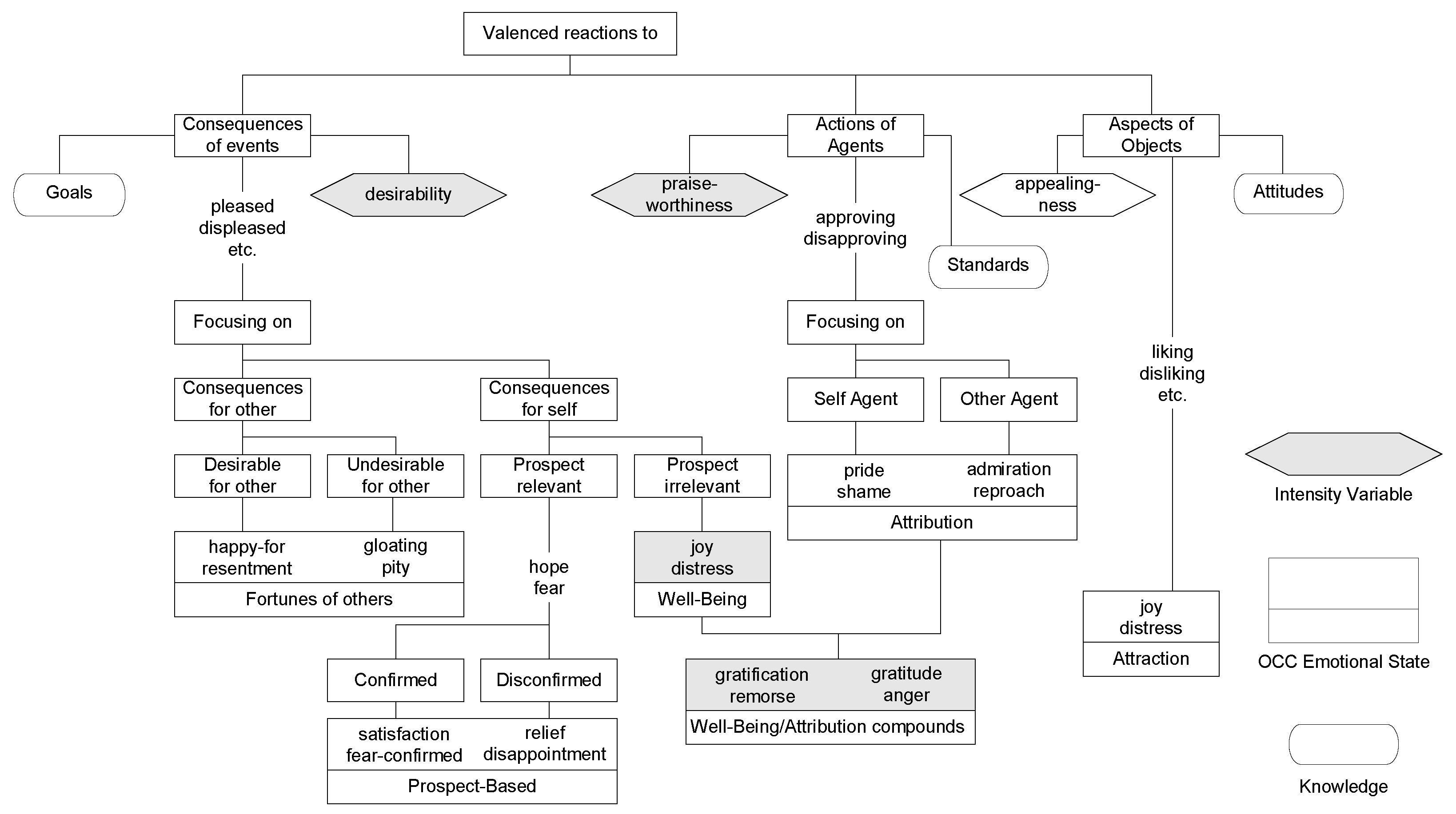

- Develop new techniques for adjusting emotion recognition. In the literature, recognition can be achieved by analysing expressive behaviour and by measuring physiological changes. The first approach has significantly improved in recent years with the advancement of Artificial Intelligence; however, recognising emotions by analysing expressive behaviour from the data sources required by AI may involve gender and cultural biases. When recognising emotions by means of physiological signals, the preferred models are the dimensional ones. In the literature, we found that most researchers use the two-dimensional model, since there are sensors that can help to predict the level of valence and arousal reached by the user. However, in order to work with agency and the sense of agency, it is important to acknowledge the third dimension, dominance, although objective measurement of dominance has not been achieved yet. Schachter and Singer [56] established that the origin of emotions comes, on the one hand, from our interpretation of the peripheral physiological responses of the organism, and on the other hand from the cognitive evaluation of the situation that originates those physiological responses. Following this theory, in this work, we propose as a future challenge to develop a system capable of recognising emotions in real time through the combination of physiological metrics and emotional cognitive models. These cognitive models allow modelling users’ objectives, standards and attitudes, as well as triggering one emotion or another based on the agency of an event. We believe that the system could first use physiological measurements to situate a user’s emotional state in the correct region of the dimensional space. Then, with cognitive models such as Roseman [53] or OCC [28], which allow the emotion to be predicted in context (influenced by objectives, norms and attitudes), the system could refine emotional recognition. In this way, the system would emulate the two-step process described by Schachter and Singer [56]: first the physiological response of the organism, then the cognitive evaluation of the situation.

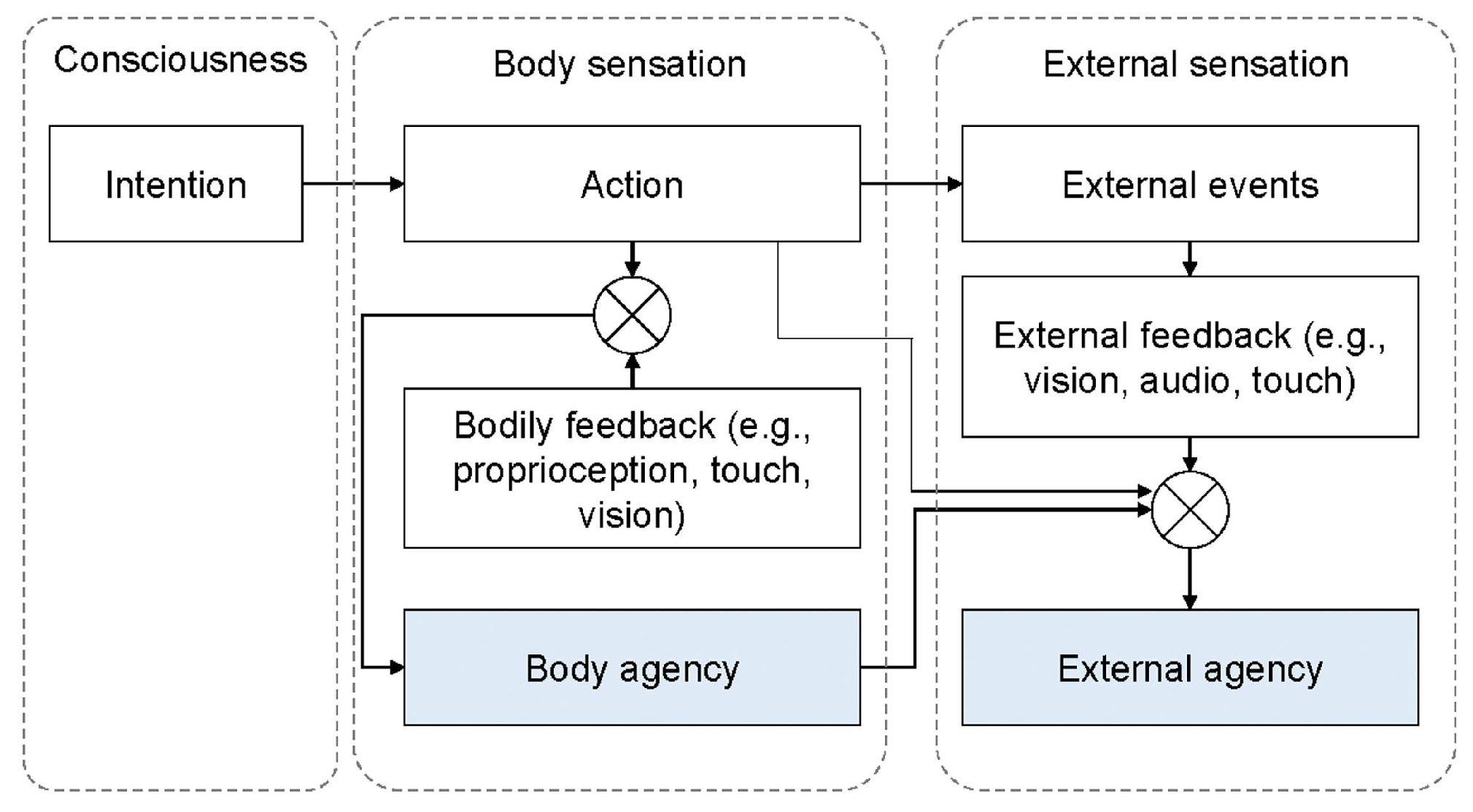

- Improve the external agency of the two-layer model [14] by adapting audio, visual and tactile content to users’ emotions recognised through the combination of physiological metrics and cognitive theories. Adaptation of IVE content has been implemented at the visual level by means of interactive storytelling techniques that employ user events as inputs. However, these techniques rarely introduce physiological metrics as input to the system or use touch as part of the system response, which is essential when working with the SoA.

- Improve the body agency of the two-layer model [14] by integrating the physiological metrics into the avatar’s body animation. The development of techniques that adapt the avatar animation to the user’s physiological metrics is less widespread than the expression of emotions through facial and body animation techniques. As physiological signals may involve external body changes, such as chest movement (from respiratory rate) or skin colour (from temperature), in this work, achieving a high body agency is considered essential.

- Establish objective metrics for the assessment of the SoA in IVEs. In the literature review, several subjective questionnaires have been found for the assessment of the SoA. However, these subjective questionnaires should be accompanied by the analysis of objective metrics for a less biased assessment. Techniques based on Libet’s clock [108] measure intentional binding, but they are not applicable to IVEs, as they require high visual attention and do not allow natural interaction with the immersive environment. Therefore, it is necessary to investigate the creation of tools that allow objective measurement of agency.
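The first challenge in the list above proposes locating the user's state in the dimensional space from physiological measurements and then refining it with a cognitive model. A minimal sketch of that two-step idea follows, under strong assumptions: the valence/arousal coordinates in `PROTOTYPES` are illustrative placeholders rather than published values, the list of candidate emotions is assumed to come from an appraisal model such as Roseman [53] or OCC [28], and dominance is left out because, as noted above, it cannot yet be measured objectively.

```python
import math

# Illustrative valence/arousal coordinates in [-1, 1].
# These numbers are assumptions for the sketch, not values from the literature.
PROTOTYPES = {
    "joy": (0.8, 0.5),
    "anger": (-0.6, 0.8),
    "fear": (-0.7, 0.7),
    "sadness": (-0.7, -0.5),
    "relief": (0.5, -0.4),
}


def refine_emotion(valence, arousal, candidates):
    """Pick the candidate emotion closest to the physiological estimate.

    valence, arousal: estimate derived from physiological signals.
    candidates: emotions the cognitive (appraisal) model considers plausible
                in the current context.
    """
    known = [e for e in candidates if e in PROTOTYPES]
    if not known:
        raise ValueError("no candidate emotion has a known prototype")
    point = (valence, arousal)
    return min(known, key=lambda e: math.dist(point, PROTOTYPES[e]))


if __name__ == "__main__":
    # Same physiological estimate, different contexts from the cognitive model.
    print(refine_emotion(-0.6, 0.9, ["anger", "fear"]))    # anger
    print(refine_emotion(-0.6, 0.9, ["fear", "sadness"]))  # fear
```

The point of the design is that the physiological estimate alone cannot separate emotions that sit close together in valence/arousal space; restricting the search to the candidates allowed by the context is what disambiguates them.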

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
| --- | --- |
| SoA | Sense of Agency |
| AC | Affective Computing |
| IVE | Immersive Virtual Environments |
| HCI | Human–Computer Interaction |
| VR | Virtual Reality |

References

- Haggard, P.; Chambon, V. Sense of agency. Curr. Biol. 2012, 22, R390–R392.

- Haggard, P.; Tsakiris, M. The experience of agency: Feelings, judgments, and responsibility. Curr. Dir. Psychol. Sci. 2009, 18, 242–246.

- Patnaik, M.; Thirugnanasambandam, N. Neuroscience of Sense of Agency. Front. Young Minds 2022, 10, 683749.

- Gallagher, S.; Trigg, D. Agency and Anxiety: Delusions of Control and Loss of Control in Schizophrenia and Agoraphobia. Front. Hum. Neurosci. 2016, 10, 459.

- Stern, Y.; Koren, D.; Moebus, R.; Panishev, G.; Salomon, R. Assessing the Relationship between Sense of Agency, the Bodily-Self and Stress: Four Virtual-Reality Experiments in Healthy Individuals. J. Clin. Med. 2020, 9, 2931.

- Lallart, E.; Lallart, X.; Jouvent, R. Agency, the Sense of Presence, and Schizophrenia. Cyberpsychol. Behav. 2009, 12, 139–145.

- Moore, J.W. What Is the Sense of Agency and Why Does it Matter? Front. Psychol. 2016, 7, 1272.

- Code, J. Agency for Learning: Intention, Motivation, Self-Efficacy and Self-Regulation. Front. Educ. 2020, 5, 19.

- Petersen, G.B.; Petkakis, G.; Makransky, G. A study of how immersion and interactivity drive VR learning. Comput. Educ. 2022, 179, 104429.

- Fribourg, R.; Ogawa, N.; Hoyet, L.; Argelaguet, F.; Narumi, T.; Hirose, M.; Lecuyer, A. Virtual Co-Embodiment: Evaluation of the Sense of Agency while Sharing the Control of a Virtual Body among Two Individuals. IEEE Trans. Vis. Comput. Graph. 2021, 27, 4023–4038.

- Limerick, H.; Coyle, D.; Moore, J.W. The experience of agency in human-computer interactions: A review. Front. Hum. Neurosci. 2014, 8, 643.

- Kilteni, K.; Groten, R.; Slater, M. The Sense of Embodiment in Virtual Reality. Presence Teleoperators Virtual Environ. 2012, 21, 373–387.

- Cavazzana, A. Sense of Agency and Intentional Binding: How Does the Brain Link Voluntary Actions with Their Consequences? Università degli Studi di Padova: Padova, Italy, 2016.

- Wen, W. Does delay in feedback diminish sense of agency? A review. Conscious. Cogn. 2019, 73, 102759.

- Butz, M.; Hepperle, D.; Wölfel, M. Influence of Visual Appearance of Agents on Presence, Attractiveness, and Agency in Virtual Reality. In ArtsIT, Interactivity and Game Creation, Proceedings of the Creative Heritage, New Perspectives from Media Arts and Artificial Intelligence, 10th EAI International Conference, ArtsIT 2021, Virtual, 2–3 December 2021; Lecture Notes of the Institute for Computer Sciences, Social-Informatics and Telecommunications Engineering, LNICST; Springer: Cham, Switzerland, 2022; Volume 422, pp. 44–60.

- Gallese, V.; Sinigaglia, C. The bodily self as power for action. Neuropsychologia 2010, 48, 746–755.

- Di Plinio, S.; Scalabrini, A.; Ebisch, S.J. An integrative perspective on the role of touch in the development of intersubjectivity. Brain Cogn. 2022, 163, 105915.

- Gibbs, J.K.; Gillies, M.; Pan, X. A comparison of the effects of haptic and visual feedback on presence in virtual reality. Int. J. Hum.-Comput. Stud. 2022, 157, 102717.

- Brave, S.; Nass, C. Emotion in Human-Computer Interaction. In The Human-Computer Interaction Handbook: Fundamentals, Evolving Technologies and Emerging Applications; CRC Press: Boca Raton, FL, USA, 2002.

- Sekhavat, Y.A.; Sisi, M.J.; Roohi, S. Affective interaction: Using emotions as a user interface in games. Multimed. Tools Appl. 2021, 80, 5225–5253.

- Picard, R.W. Affective Computing; MIT Press: Cambridge, MA, USA, 1997.

- Picard, R.W. Affective computing: Challenges. Int. J. Hum.-Comput. Stud. 2003, 59, 55–64.

- Schuller, B.W.; Picard, R.; Andre, E.; Gratch, J.; Tao, J. Intelligent Signal Processing for Affective Computing [From the Guest Editors]. IEEE Signal Process. Mag. 2021, 38, 9–11.

- Harmon-Jones, E.; Harmon-Jones, C.; Summerell, E. On the Importance of Both Dimensional and Discrete Models of Emotion. Behav. Sci. 2017, 7, 66.

- Ekman, P. Facial expression and emotion. Am. Psychol. 1993, 48, 384–392.

- Plutchik, R. The measurement of emotions. Acta Neuropsychiatr. 1997, 9, 58–60.

- Roseman, I.J. Appraisal determinants of emotions: Constructing a more accurate and comprehensive theory. Cogn. Emot. 1996, 10, 241–278.

- Ortony, A.; Clore, G.; Collins, A. The Cognitive Structure of Emotion; American Sociological Association: Washington, DC, USA, 1988; Volume 18.

- EkmanGroupWeb. Universal Emotions|What Are Emotions?|Paul Ekman Group. 2023. Available online: https://www.paulekman.com/ (accessed on 16 December 2023).

- Russell, J.A. A circumplex model of affect. J. Personal. Soc. Psychol. 1980, 39, 1161–1178.

- Mehrabian, A.; Russell, J.A. An Approach to Environmental Psychology; MIT Press: Cambridge, MA, USA, 1974.

- Kshirsagar, A.; Gupta, N.; Verma, B. Real Time Facial Emotions Detection of Multiple Faces Using Deep Learning. In Pervasive Computing and Social Networking; Lecture Notes in Networks and Systems; Springer: Singapore, 2023; Volume 475, pp. 363–376.

- Avola, D.; Cinque, L.; Fagioli, A.; Foresti, G.L.; Massaroni, C. Deep Temporal Analysis for Non-Acted Body Affect Recognition. IEEE Trans. Affect. Comput. 2022, 13, 1366–1377.

- Van, L.T.; Le, T.D.T.; Xuan, T.L.; Castelli, E. Emotional Speech Recognition Using Deep Neural Networks. Sensors 2022, 22, 1414.

- Li, W.; Zhang, Z.; Song, A. Physiological-signal-based emotion recognition: An odyssey from methodology to philosophy. Measurement 2021, 172, 108747.

- Maria, E.; Matthias, L.; Sten, H. Emotion Recognition from Physiological Signal Analysis: A Review. Electron. Notes Theor. Comput. Sci. 2019, 343, 35–55.

- Aranha, R.V.; Correa, C.G.; Nunes, F.L. Adapting Software with Affective Computing: A Systematic Review. IEEE Trans. Affect. Comput. 2021, 12, 883–899.

- Matsumoto, R.R.; Walker, B.B.; Walker, J.M.; Hughes, H.C. Fundamentals of neuroscience. In Principles of Psychophysiology: Physical, Social, and Inferential Elements; Cambridge University Press: Cambridge, UK, 1990; pp. 58–113.

- Nita, S.; Bitam, S.; Heidet, M.; Mellouk, A. A new data augmentation convolutional neural network for human emotion recognition based on ECG signals. Biomed. Signal Process. Control 2022, 75, 103580.

- Houssein, E.H.; Hammad, A.; Ali, A.A. Human emotion recognition from EEG-based brain–computer interface using machine learning: A comprehensive review. Neural Comput. Appl. 2022, 34, 12527–12557.

- Garcia, O.D.R.; Fernandez, J.F.; Saldana, R.A.B.; Witkowski, O. Emotion-Driven Interactive Storytelling: Let Me Tell You How to Feel. In Artificial Intelligence in Music, Sound, Art and Design, Proceedings of the 11th International Conference, EvoMUSART 2022, Madrid, Spain, 20–22 April 2022; Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Cham, Switzerland, 2022; Volume 13221, pp. 259–274.

- Jerath, R.; Beveridge, C. Respiratory Rhythm, Autonomic Modulation, and the Spectrum of Emotions: The Future of Emotion Recognition and Modulation. Front. Psychol. 2020, 11, 1980.

- Ekman, P.; Friesen, W.V. Facial Action Coding System: A Technique for the Measurement of Facial Movement; Consulting Psychologists Press: Washington, DC, USA, 1978.

- Nardelli, M.; Valenza, G.; Greco, A.; Lanata, A.; Scilingo, E.P. Recognizing emotions induced by affective sounds through heart rate variability. IEEE Trans. Affect. Comput. 2015, 6, 385–394.

- Liu, Z.; Xu, A.; Guo, Y.; Mahmud, J.; Liu, H.; Akkiraju, R. Seemo: A Computational Approach to See Emotions. In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems, Montreal, QC, Canada, 21–26 April 2018; pp. 1–12.

- Mitruț, O.; Moise, G.; Petrescu, L.; Moldoveanu, A.; Leordeanu, M.; Moldoveanu, F. Emotion Classification Based on Biophysical Signals and Machine Learning Techniques. Symmetry 2019, 12, 21.

- Calvo, R.A.; D’Mello, S. Affect detection: An interdisciplinary review of models, methods, and their applications. IEEE Trans. Affect. Comput. 2010, 1, 18–37.

- Darwin, C.R. The Expression of the Emotions in Man and Animals, 1st ed.; Penguin Books: London, UK, 1872.

- James, W. What is an emotion? Mind 1884, os-IX, 188–205.

- Marg, E. Descartes’ error: Emotion, reason, and the human brain. Optom. Vis. Sci. 1995, 72, 847–848.

- Brinkmann, S. Damasio on mind and emotions: A conceptual critique. Nord. Psychol. 2006, 58, 366–380.

- Cannon, W.B. The James-Lange Theory of Emotions: A Critical Examination and an Alternative Theory. Am. J. Psychol. 1927, 39, 106.

- Roseman, I.J.; Spindel, M.S.; Jose, P.E. Appraisals of emotion-eliciting events: Testing a theory of discrete emotions. J. Personal. Soc. Psychol. 1990, 59, 899.

- Scherer, K.R. Emotion as a multicomponent process: A model and some cross-cultural data. Rev. Personal. Soc. Psychol. 1984, 5, 37–63.

- Argente, E.; Val, E.D.; Perez-Garcia, D.; Botti, V. Normative Emotional Agents: A Viewpoint Paper. IEEE Trans. Affect. Comput. 2022, 13, 1254–1273.

- Schachter, S.; Singer, J. Cognitive, social, and physiological determinants of emotional state. Psychol. Rev. 1962, 69, 379.

- Bartneck, C.; Lyons, M.J.; Saerbeck, M. The Relationship Between Emotion Models and Artificial Intelligence. arXiv 2017, arXiv:1706.09554.

- Ren, D.; Wang, P.; Qiao, H.; Zheng, S. A biologically inspired model of emotion eliciting from visual stimuli. Neurocomputing 2013, 121, 328–336.

- López, Y.S. Abc-Ebdi: A Cognitive-Affective Framework to Support the Modeling of Believable Intelligent Agents. Ph.D. Thesis, Universidad de Zaragoza, Zaragoza, Spain, 2021; p. 1.

- Laird, J.E.; Newell, A.; Rosenbloom, P.S. SOAR: An architecture for general intelligence. Artif. Intell. 1987, 33, 1–64.

- Anderson, J.R. Rules of the Mind; Erlbaum: Hillsdale, NJ, USA, 1993; p. 320.

- Rao, A.S.; Georgeff, M.P. BDI Agents: From Theory to Practice. In Proceedings of the First International Conference on Multiagent Systems, San Francisco, CA, USA, 12–14 June 1995.

- Sanchez, Y.; Coma, T.; Aguelo, A.; Cerezo, E. Applying a psychotherapeutic theory to the modeling of affective intelligent agents. IEEE Trans. Cogn. Dev. Syst. 2020, 12, 285–299.

- Mehrabian, A. Communication without words. In Communication Theory, 2nd ed.; Routledge: London, UK, 1968; pp. 193–200.

- Kasapakis, V.; Dzardanova, E.; Nikolakopoulou, V.; Vosinakis, S.; Xenakis, I.; Gavalas, D. Social Virtual Reality: Implementing Non-verbal Cues in Remote Synchronous Communication. In Virtual Reality and Mixed Reality, Proceedings of the 18th EuroXR International Conference, EuroXR 2021, Milan, Italy, 24–26 November 2021; Springer: Cham, Switzerland, 2021; pp. 152–157.

- Tanenbaum, T.J.; Hartoonian, N.; Bryan, J. “How do I make this thing smile?”. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 25–30 April 2020; ACM: New York, NY, USA, 2020; pp. 1–13.

- Giddens, A.; Birdsall, K.; Menezo, J.C. Sociologia; Alianza Editorial: Madrid, Spain, 2002.

- Graf, H.P.; Cosatto, E.; Strom, V.; Huang, F.J. Visual prosody: Facial movements accompanying speech. In Proceedings of the 5th IEEE International Conference on Automatic Face Gesture Recognition, FGR 2002, Washington, DC, USA, 21 May 2002; pp. 396–401.

- Kleinsmith, A.; Bianchi-Berthouze, N. Affective body expression perception and recognition: A survey. IEEE Trans. Affect. Comput. 2013, 4, 15–33.

- Davis, F. La Comunicación no Verbal; Google Libros: Online, 1998; Volume 3600.

- Alberts, D. The Expressive Body: Physical Characterization for the Actor; Heinemann Educational Publishers: Portsmouth, NH, USA, 1997; 196p.

- Ribet, S.; Wannous, H.; Vandeborre, J.P. Survey on Style in 3D Human Body Motion: Taxonomy, Data, Recognition and Its Applications. IEEE Trans. Affect. Comput. 2021, 12, 928–948.

- Aoki, T.; Chujo, R.; Matsui, K.; Choi, S.; Hautasaari, A. EmoBalloon—Conveying Emotional Arousal in Text Chats with Speech Balloons. In Proceedings of the Conference on Human Factors in Computing Systems, New Orleans, LA, USA, 29 April–5 May 2022; p. 16.

- Lin, P.C.; Hung, P.C.; Jiang, Y.; Velasco, C.P.; Cano, M.A.M. An experimental design for facial and color emotion expression of a social robot. J. Supercomput. 2023, 79, 1980–2009.

- Nilay, O. Modeling Empathy in Embodied Conversational Agents. In Proceedings of the 20th ACM International Conference on Multimodal Interaction, Boulder, CO, USA, 16–20 October 2018.

- Shu, L.; Yu, Y.; Chen, W.; Hua, H.; Li, Q.; Jin, J.; Xu, X. Wearable Emotion Recognition Using Heart Rate Data from a Smart Bracelet. Sensors 2020, 20, 718.

- Bickmore, T.; Cassell, J. Relational agents: A model and implementation of building user trust. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Seattle, WA, USA, 31 March–5 April 2001; pp. 396–403.

- Frachi, Y.; Takahashi, T.; Wang, F.; Barthet, M. Design of Emotion-Driven Game Interaction Using Biosignals. In HCI in Games, Proceedings of the 4th International Conference, HCI-Games 2022, Virtual, 26 June–1 July 2022; Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Cham, Switzerland, 2022; Volume 13334, pp. 160–179.

- Steinhaeusser, S.C.; Schaper, P.; Lugrin, B. Comparing a Robotic Storyteller versus Audio Book with Integration of Sound Effects and Background Music. In Proceedings of the HRI ’21 Companion: Companion of the 2021 ACM/IEEE International Conference on Human-Robot Interaction, Boulder, CO, USA, 8–11 March 2021.

- Bradley, M.M.; Lang, P.J. International Affective Picture System. In Encyclopedia of Personality and Individual Differences; Springer: Cham, Switzerland, 2017; pp. 1–4.

- Yang, W.; Makita, K.; Nakao, T.; Kanayama, N.; Machizawa, M.G.; Sasaoka, T.; Sugata, A.; Kobayashi, R.; Hiramoto, R.; Yamawaki, S.; et al. Affective auditory stimulus database: An expanded version of the International Affective Digitized Sounds (IADS-E). Behav. Res. Methods 2018, 50, 1415–1429.

- Crosta, A.D.; Malva, P.L.; Manna, C.; Marin, A.; Palumbo, R.; Verrocchio, M.C.; Cortini, M.; Mammarella, N.; Domenico, A.D. The Chieti Affective Action Videos database, a resource for the study of emotions in psychology. Sci. Data 2020, 7, 32.

- Dozio, N.; Marcolin, F.; Scurati, G.W.; Ulrich, L.; Nonis, F.; Vezzetti, E.; Marsocci, G.; Rosa, A.L.; Ferrise, F. A design methodology for affective Virtual Reality. Int. J. Hum.-Comput. Stud. 2022, 162, 102791.

- Hou, G.; Dong, H.; Yang, Y. Developing a Virtual Reality Game User Experience Test Method Based on EEG Signals. In Proceedings of the 2017 5th International Conference on Enterprise Systems: Industrial Digitalization by Enterprise Systems, ES 2017, Beijing, China, 22–24 September 2017; pp. 227–231.

- Chandra, A.N.R.; Jamiy, F.E.; Reza, H. A review on usability and performance evaluation in virtual reality systems. In Proceedings of the 6th Annual Conference on Computational Science and Computational Intelligence, CSCI 2019, Las Vegas, NV, USA, 5–7 December 2019; pp. 1107–1114.

- Thompson, E.R. Development and Validation of an Internationally Reliable Short-Form of the Positive and Negative Affect Schedule (PANAS). J. Cross-Cult. Psychol. 2016, 38, 227–242.

- Bradley, M.M.; Lang, P.J. Measuring emotion: The self-assessment manikin and the semantic differential. J. Behav. Ther. Exp. Psychiatry 1994, 25, 49–59.

- Liao, D.; Shu, L.; Liang, G.; Li, Y.; Zhang, Y.; Zhang, W.; Xu, X. Design and Evaluation of Affective Virtual Reality System Based on Multimodal Physiological Signals and Self-Assessment Manikin. IEEE J. Electromagn. Microw. Med. Biol. 2020, 4, 216–224.

- Tian, F.; Hou, X.; Hua, M. Emotional Response Increments Induced by Equivalent Enhancement of Different Valence Films. In Proceedings of the 2020 5th International Conference on Electromechanical Control Technology and Transportation (ICECTT), Nanchang, China, 15–17 May 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 213–218.

- Li, B.J.; Bailenson, J.N.; Pines, A.; Greenleaf, W.J.; Williams, L.M. A Public Database of Immersive VR Videos with Corresponding Ratings of Arousal, Valence, and Correlations between Head Movements and Self Report Measures. Front. Psychol. 2017, 8, 2116.

- Rivu, R.; Jiang, R.; Mäkelä, V.; Hassib, M.; Alt, F. Emotion Elicitation Techniques in Virtual Reality. In Human-Computer Interaction—INTERACT 2021, Proceedings of the 18th IFIP TC 13 International Conference, Bari, Italy, 30 August–3 September 2021; Springer: Cham, Switzerland, 2021; pp. 93–114. [Google Scholar] [CrossRef]

- Xie, T.; Cao, M.; Pan, Z. Applying Self-Assessment Manikin (SAM) to Evaluate the Affective Arousal Effects of VR Games. In Proceedings of the 2020 3rd International Conference on Image and Graphics Processing, Singapore, 8–10 February 2020; ACM: New York, NY, USA, 2020; pp. 134–138. [Google Scholar] [CrossRef]

- Wang, J.; Wang, Y.; Liu, Y.; Yue, T.; Wang, C.; Yang, W.; Hansen, P.; You, F. Experimental study on abstract expression of human-robot emotional communication. Symmetry 2021, 13, 1693. [Google Scholar] [CrossRef]

- Mütterlein, J. The Three Pillars of Virtual Reality? Investigating the Roles of Immersion, Presence, and Interactivity. In Proceedings of the 51st Hawaii International Conference on System Sciences, Hilton Waikoloa Village, HI, USA, 3–6 January 2018. [Google Scholar]

- Hudson, S.; Matson-Barkat, S.; Pallamin, N.; Jegou, G. With or without you? Interaction and immersion in a virtual reality experience. J. Bus. Res. 2019, 100, 459–468.

- Jennett, C.; Cox, A.L.; Cairns, P.; Dhoparee, S.; Epps, A.; Tijs, T.; Walton, A. Measuring and defining the experience of immersion in games. Int. J. Hum.-Comput. Stud. 2008, 66, 641–661.

- Aoyagi, K.; Wen, W.; An, Q.; Hamasaki, S.; Yamakawa, H.; Tamura, Y.; Yamashita, A.; Asama, H. Modified sensory feedback enhances the sense of agency during continuous body movements in virtual reality. Sci. Rep. 2021, 11, 2553.

- Tapal, A.; Oren, E.; Dar, R.; Eitam, B. The sense of agency scale: A measure of consciously perceived control over one’s mind, body, and the immediate environment. Front. Psychol. 2017, 8, 1552.

- Polito, V.; Barnier, A.J.; Woody, E.Z. Developing the Sense of Agency Rating Scale (SOARS): An empirical measure of agency disruption in hypnosis. Conscious. Cogn. 2013, 22, 684–696.

- Longo, M.R.; Haggard, P. Sense of agency primes manual motor responses. Perception 2009, 38, 69–78.

- Magdin, M.; Balogh, Z.; Reichel, J.; Francisti, J.; Koprda, Š.; György, M. Automatic detection and classification of emotional states in virtual reality and standard environments (LCD): Comparing valence and arousal of induced emotions. Virtual Real. 2021, 25, 1029–1041.

- Voigt-Antons, J.N.; Spang, R.; Kojic, T.; Meier, L.; Vergari, M.; Muller, S. Don’t Worry be Happy—Using virtual environments to induce emotional states measured by subjective scales and heart rate parameters. In Proceedings of the 2021 IEEE Virtual Reality and 3D User Interfaces (VR), Lisboa, Portugal, 27 March–1 April 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 679–686.

- Li, M.; Pan, J.; Gao, Y.; Shen, Y.; Luo, F.; Dai, J.; Hao, A.; Qin, H. Neurophysiological and Subjective Analysis of VR Emotion Induction Paradigm. IEEE Trans. Vis. Comput. Graph. 2022, 28, 3832–3842.

- Dubovi, I. Cognitive and emotional engagement while learning with VR: The perspective of multimodal methodology. Comput. Educ. 2022, 183, 104495.

- Bergström, J.; Knibbe, J.; Pohl, H.; Hornbæk, K. Sense of Agency and User Experience: Is There a Link? ACM Trans. Comput.-Hum. Interact. 2022, 29, 1–22.

- Zanatto, D.; Chattington, M.; Noyes, J. Human-machine sense of agency. Int. J. Hum.-Comput. Stud. 2021, 156, 102716.

- Haggard, P.; Clark, S.; Kalogeras, J. Voluntary action and conscious awareness. Nat. Neurosci. 2002, 5, 382–385.

- Ivanof, B.E.; Terhune, D.B.; Coyle, D.; Gottero, M.; Moore, J.W. Examining the effect of Libet clock stimulus parameters on temporal binding. Psychol. Res. 2021, 86, 937–951.

- Kong, G.; He, K.; Wei, K. Sensorimotor experience in virtual reality enhances sense of agency associated with an avatar. Conscious. Cogn. 2017, 52, 115–124.

- Cornelio Martinez, P.I.; Maggioni, E.; Hornbæk, K.; Obrist, M.; Subramanian, S. Beyond the Libet clock: Modality variants for agency measurements. In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems, Montreal, QC, Canada, 21–26 April 2018; pp. 1–14.

- Bowman, D.A.; McMahan, R.P. Virtual reality: How much immersion is enough? Computer 2007, 40, 36–43.

- Andreoletti, D.; Paoliello, M.; Luceri, L.; Leidi, T.; Peternier, A.; Giordano, S. A framework for emotion-driven product design through virtual reality. In Proceedings of the Special Sessions in the Advances in Information Systems and Technologies Track of the Conference on Computer Science and Intelligence Systems, Virtual, 2–5 September 2021; Springer: Cham, Switzerland, 2021; pp. 42–61.

- Andreoletti, D.; Luceri, L.; Peternier, A.; Leidi, T.; Giordano, S. The virtual emotion loop: Towards emotion-driven product design via virtual reality. In Proceedings of the 2021 16th Conference on Computer Science and Intelligence Systems (FedCSIS), Sofia, Bulgaria, 2–5 September 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 371–378.

- Hernandez-Melgarejo, G.; Luviano-Juarez, A.; Fuentes-Aguilar, R.Q. A framework to model and control the state of presence in virtual reality systems. IEEE Trans. Affect. Comput. 2022, 13, 1854–1867.

- Al-Jundi, H.A.; Tanbour, E.Y. A framework for fidelity evaluation of immersive virtual reality systems. Virtual Real. 2022, 26, 1103–1122.

- Wang, Y.; Ijaz, K.; Calvo, R.A. A software application framework for developing immersive virtual reality experiences in health domain. In Proceedings of the 2017 IEEE Life Sciences Conference (LSC), Sydney, Australia, 13–15 December 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 30–37.

- Aranha, R.V.; Chaim, M.L.; Monteiro, C.B.; Silva, T.D.; Guerreiro, F.A.; Silva, W.S.; Nunes, F.L. EasyAffecta: A framework to develop serious games for virtual rehabilitation with affective adaptation. Multimed. Tools Appl. 2023, 82, 2303–2328.

- Angelini, L.; Mecella, M.; Liang, H.N.; Caon, M.; Mugellini, E.; Khaled, O.A.; Bernardini, D. Towards an Emotionally Augmented Metaverse: A Framework for Recording and Analysing Physiological Data and User Behaviour. In Proceedings of the 13th Augmented Human International Conference, Winnipeg, MB, Canada, 26–27 May 2022. ACM International Conference Proceeding Series.

- Roohi, S.; Skarbez, R. The Design and Development of a Goal-Oriented Framework for Emotional Virtual Humans. In Proceedings of the 2022 IEEE International Conference on Artificial Intelligence and Virtual Reality (AIVR), Virtual, 12–14 December 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 135–139.

- Lafleur, A.; Soulières, I.; d’Arc, B.F. Sense of agency: Sensorimotor signals and social context are differentially weighed at implicit and explicit levels. Conscious. Cogn. 2020, 84, 103004.

- Ohata, W.; Tani, J. Investigation of the sense of agency in social cognition, based on frameworks of predictive coding and active inference: A simulation study on multimodal imitative interaction. Front. Neurorobotics 2020, 14, 61.

- Anders, D.; Berisha, A.; Selaskowski, B.; Asché, L.; Thorne, J.D.; Philipsen, A.; Braun, N. Experimental induction of micro-and macrosomatognosia: A virtual hand illusion study. Front. Virtual Real. 2021, 2, 656788.

- Shibuya, S.; Unenaka, S.; Ohki, Y. The relationship between the virtual hand illusion and motor performance. Front. Psychol. 2018, 9, 2242.

- Levac, D.E.; Huber, M.E.; Sternad, D. Learning and transfer of complex motor skills in virtual reality: A perspective review. J. Neuroeng. Rehabil. 2019, 16, 121.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ortiz, A.; Elizondo, S. Design of an Immersive Virtual Reality Framework to Enhance the Sense of Agency Using Affective Computing Technologies. Appl. Sci. 2023, 13, 13322. https://doi.org/10.3390/app132413322
