End-to-End Object Detection with Enhanced Positive Sample Filter

Abstract

1. Introduction

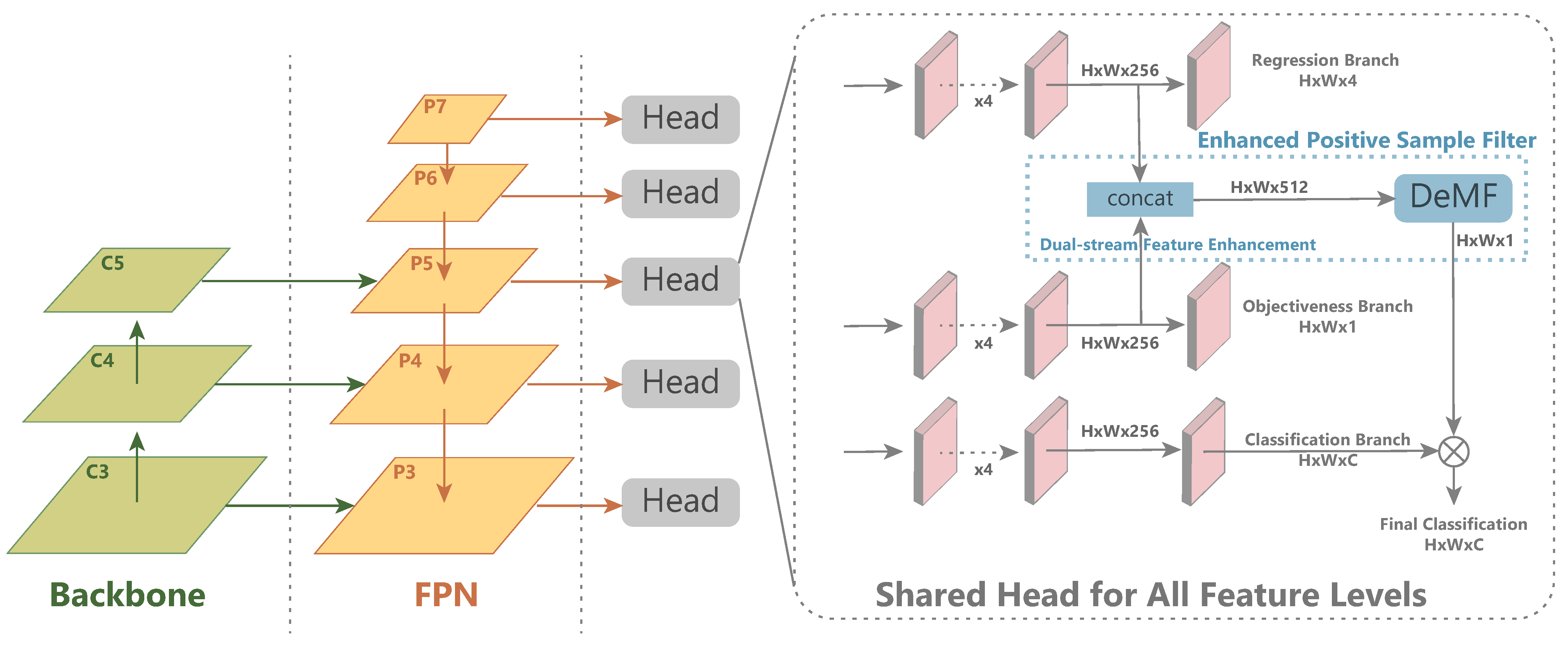

- We propose an object detector that eliminates NMS post-processing and is therefore fully end-to-end. At its core is an Enhanced Positive Sample Filter (EPSF) that adaptively selects positive samples in local areas; it consists of two components, DsFE and DeMF;

- We design a Dual-stream Feature Enhancement module (DsFE) that extracts rich information from features learned with different targets and uses it for one-to-one classification;

- We design a Disentangled Max Pooling Filter (DeMF) that enhances feature discriminability in potential foreground areas via disentangled max pooling.
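To make the role of max pooling in such a filter more concrete, the following is a minimal sketch of a plain local-maximum filter on a score map. It is not the authors' DeMF (which is disentangled and operates on learned features); it only illustrates the general idea that max pooling can suppress non-peak responses so that a single location survives per local area. The function names, the 3 × 3 window, the 0.5 threshold and the toy score map are all assumptions made for illustration.

```python
def max_pool_2d(scores, kernel=3):
    """Same-size 2D max pooling with stride 1; the window is clipped at the borders."""
    h, w = len(scores), len(scores[0])
    r = kernel // 2
    pooled = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            pooled[y][x] = max(
                scores[j][i]
                for j in range(max(0, y - r), min(h, y + r + 1))
                for i in range(max(0, x - r), min(w, x + r + 1))
            )
    return pooled


def local_peaks(scores, kernel=3, threshold=0.5):
    """Return (row, col) positions that equal their window maximum and pass the threshold."""
    pooled = max_pool_2d(scores, kernel)
    return [
        (y, x)
        for y, row in enumerate(scores)
        for x, s in enumerate(row)
        if s >= threshold and s == pooled[y][x]
    ]


# Hypothetical classification scores: one strong cluster and one isolated response.
score_map = [
    [0.1, 0.2, 0.1, 0.0, 0.0],
    [0.2, 0.9, 0.8, 0.1, 0.0],
    [0.1, 0.7, 0.6, 0.1, 0.6],
    [0.0, 0.1, 0.1, 0.2, 0.3],
]
peaks = local_peaks(score_map)
print(peaks)
```

In this toy map, the four high scores in the cluster collapse to the single strongest location, while the isolated response is kept because nothing in its window exceeds it.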

2. Related Work

2.1. Object Detection

2.2. End-to-End Object Detector

3. Method

3.1. Overall Pipeline

3.2. Label Assignment

3.3. Enhanced Positive Sample Filter

3.3.1. Dual-Stream Feature Enhancement Module

3.3.2. Disentangled Max Pooling Filter

4. Experiments

4.1. Datasets

4.2. Implementation Detail

4.3. Comparisons with State-of-the-Arts

- The proposed detector outperforms other NMS-free detectors and many mainstream NMS-based detectors. We therefore consider it a strong alternative to current mainstream detectors;

- The proposed detector performs consistently well on these mainstream detection datasets, which cover different scenes, demonstrating its robustness across varied conditions;

- The proposed end-to-end detector is a one-stage, anchor-free design that involves neither a heuristic attention mechanism nor an NMS post-processing step. The entire detection pipeline is simple and efficient and can be easily deployed in real-world industrial applications.
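For context on what an NMS-free pipeline leaves out, the sketch below shows standard greedy non-maximum suppression, the post-processing step that NMS-based detectors typically apply to remove duplicate boxes. It is a generic textbook version written for illustration, not code from the compared detectors; the box format `(x1, y1, x2, y2)`, the 0.5 IoU threshold and the example boxes are assumptions.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def greedy_nms(boxes, scores, iou_threshold=0.5):
    """Keep boxes in descending score order, dropping any that overlap a kept box too much."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep


# Hypothetical detections: two near-duplicate boxes and one separate box.
boxes = [(10, 10, 50, 50), (12, 12, 52, 52), (100, 100, 140, 140)]
scores = [0.9, 0.8, 0.7]
kept = greedy_nms(boxes, scores)
print(kept)
```

The hand-set IoU threshold is the kind of heuristic an end-to-end detector avoids: if each object already yields a single confident prediction, this loop is unnecessary.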

4.4. Ablation Study

4.5. Qualitative Results

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 7–12 December 2015.

- Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2980–2988.

- Tian, Z.; Shen, C.; Chen, H.; He, T. FCOS: Fully convolutional one-stage object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 9627–9636.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Zhao, H.; Shi, J.; Qi, X.; Wang, X.; Jia, J. Pyramid scene parsing network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2881–2890.

- Bodla, N.; Singh, B.; Chellappa, R.; Davis, L.S. Soft-NMS–improving object detection with one line of code. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 5561–5569.

- Hosang, J.; Benenson, R.; Schiele, B. Learning non-maximum suppression. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4507–4515.

- He, Y.; Zhu, C.; Wang, J.; Savvides, M.; Zhang, X. Bounding box regression with uncertainty for accurate object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 2888–2897.

- Liu, S.; Huang, D.; Wang, Y. Adaptive NMS: Refining pedestrian detection in a crowd. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 6459–6468.

- Huang, X.; Ge, Z.; Jie, Z.; Yoshie, O. NMS by representative region: Towards crowded pedestrian detection by proposal pairing. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 10750–10759.

- Sun, P.; Jiang, Y.; Xie, E.; Shao, W.; Yuan, Z.; Wang, C.; Luo, P. What makes for end-to-end object detection? In Proceedings of the International Conference on Machine Learning, Virtual, 18–24 July 2021; pp. 9934–9944.

- Wang, J.; Song, L.; Li, Z.; Sun, H.; Sun, J.; Zheng, N. End-to-end object detection with fully convolutional network. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 19–25 June 2021; pp. 15849–15858.

- Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-end object detection with transformers. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; pp. 213–229.

- Sun, P.; Zhang, R.; Jiang, Y.; Kong, T.; Xu, C.; Zhan, W.; Tomizuka, M.; Li, L.; Yuan, Z.; Wang, C.; et al. Sparse R-CNN: End-to-end object detection with learnable proposals. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 19–25 June 2021; pp. 14454–14463.

- Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common objects in context. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; pp. 740–755.

- Everingham, M.; Van Gool, L.; Williams, C.K.I.; Winn, J.; Zisserman, A. The PASCAL Visual Object Classes Challenge 2007 (VOC2007) Results. Available online: http://www.pascal-network.org/challenges/VOC/voc2007/workshop/index.html (accessed on 7 April 2007).

- Shao, S.; Zhao, Z.; Li, B.; Xiao, T.; Yu, G.; Zhang, X.; Sun, J. CrowdHuman: A benchmark for detecting human in a crowd. arXiv 2018, arXiv:1805.00123.

- Dollar, P.; Wojek, C.; Schiele, B.; Perona, P. Pedestrian detection: An evaluation of the state of the art. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 34, 743–761.

- Yu, H.; Gong, J.; Chen, D. Object Detection Using Multi-Scale Balanced Sampling. Appl. Sci. 2020, 10, 6053.

- Zhang, Y.; Kong, J.; Qi, M.; Liu, Y.; Wang, J.; Lu, Y. Object Detection Based on Multiple Information Fusion Net. Appl. Sci. 2020, 10, 418.

- Jiang, J.; Xu, H.; Zhang, S.; Fang, Y. Object Detection Algorithm Based on Multiheaded Attention. Appl. Sci. 2019, 9, 1829.

- Wang, H.; Li, D.; Song, Y.; Gao, Q.; Wang, Z.; Liu, C. Single-Shot Object Detection with Split and Combine Blocks. Appl. Sci. 2020, 10, 6382.

- Liu, X.; Chen, H.X.; Liu, B.Y. Dynamic Anchor: A Feature-Guided Anchor Strategy for Object Detection. Appl. Sci. 2022, 12, 4897.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single shot multibox detector. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; pp. 21–37.

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2961–2969.

- Cai, Z.; Vasconcelos, N. Cascade R-CNN: Delving into high quality object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 6154–6162.

- Duan, K.; Bai, S.; Xie, L.; Qi, H.; Huang, Q.; Tian, Q. CenterNet: Keypoint triplets for object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 6569–6578.

- Law, H.; Deng, J. CornerNet: Detecting objects as paired keypoints. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 734–750.

- Kong, T.; Sun, F.; Liu, H.; Jiang, Y.; Li, L.; Shi, J. FoveaBox: Beyound anchor-based object detection. IEEE Trans. Image Process. 2020, 29, 7389–7398.

- Zhu, X.; Su, W.; Lu, L.; Li, B.; Wang, X.; Dai, J. Deformable DETR: Deformable Transformers for End-to-End Object Detection. In Proceedings of the International Conference on Learning Representations, Virtual Event, 3–7 May 2021.

- Sun, Z.; Cao, S.; Yang, Y.; Kitani, K.M. Rethinking transformer-based set prediction for object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Virtually, 11–17 October 2021; pp. 3611–3620.

- Dai, Z.; Cai, B.; Lin, Y.; Chen, J. UP-DETR: Unsupervised Pre-Training for Object Detection With Transformers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 1601–1610.

- Zheng, M.; Gao, P.; Zhang, R.; Li, K.; Wang, X.; Li, H.; Dong, H. End-to-end object detection with adaptive clustering transformer. arXiv 2020, arXiv:2011.09315.

- Gao, P.; Zheng, M.; Wang, X.; Dai, J.; Li, H. Fast convergence of DETR with spatially modulated co-attention. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Virtually, 11–17 October 2021; pp. 3621–3630.

- Zheng, A.; Zhang, Y.; Zhang, X.; Qi, X.; Sun, J. Progressive End-to-End Object Detection in Crowded Scenes. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 19–24 June 2022; pp. 857–866.

- Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Yu, J.; Jiang, Y.; Wang, Z.; Cao, Z.; Huang, T. UnitBox: An advanced object detection network. In Proceedings of the 24th ACM International Conference on Multimedia, Amsterdam, The Netherlands, 15–19 October 2016; pp. 516–520.

- Wu, Y.; He, K. Group normalization. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

- Zhu, B.; Wang, F.; Wang, J.; Yang, S.; Chen, J.; Li, Z. CVPODS: All-in-One Toolbox for Computer Vision Research. 2020. Available online: https://github.com/Megvii-BaseDetection/cvpods (accessed on 3 December 2022).

- Zhang, S.; Chi, C.; Yao, Y.; Lei, Z.; Li, S.Z. Bridging the gap between anchor-based and anchor-free detection via adaptive training sample selection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 9759–9768.

- Zhang, S.; Yang, J.; Schiele, B. Occluded Pedestrian Detection Through Guided Attention in CNNs. In Proceedings of the CVPR, Salt Lake City, UT, USA, 18–22 June 2018.

- Cai, Z.; Fan, Q.; Feris, R.; Vasconcelos, N. A Unified Multi-scale Deep Convolutional Neural Network for Fast Object Detection. In Proceedings of the ECCV, Amsterdam, The Netherlands, 11–14 October 2016.

- Zhang, L.; Lin, L.; Liang, X.; He, K. Is Faster R-CNN Doing Well for Pedestrian Detection? In Proceedings of the ECCV, Amsterdam, The Netherlands, 11–14 October 2016.

- Zhang, S.; Benenson, R.; Schiele, B. CityPersons: A Diverse Dataset for Pedestrian Detection. In Proceedings of the CVPR, Honolulu, HI, USA, 21–26 July 2017.

- Pang, Y.; Xie, J.; Khan, M.H.; Anwer, R.M.; Khan, F.S.; Shao, L. Mask-Guided Attention Network for Occluded Pedestrian Detection. In Proceedings of the ICCV, Seoul, Republic of Korea, 27 October–2 November 2019.

| Methods | Epochs | AP | AP50 | AP75 | APS | APM | APL |
|---|---|---|---|---|---|---|---|
| NMS-based Detectors: | | | | | | | |
| Faster RCNN [1] | 36 | 40.2 | 61.0 | 43.8 | 24.2 | 43.5 | 52.0 |
| RetinaNet [2] | 36 | 38.7 | 58.0 | 41.5 | 23.3 | 42.3 | 50.3 |
| FCOS [3] | 36 | 41.4 | 59.9 | 44.8 | 26.1 | 44.9 | 52.7 |
| NMS-free Detectors: | | | | | | | |
| OneNet [11] | 36 | 38.9 | 57.3 | 42.3 | 23.9 | 41.9 | 49.5 |
| DeFCN [12] | 36 | 41.4 | 59.5 | 45.7 | 26.1 | 44.9 | 52.0 |
| Ours | 36 | 42.0 | 60.0 | 46.3 | 26.3 | 45.2 | 52.8 |

| Methods | NMS | mAP |
|---|---|---|
| Faster RCNN [1] | ✓ | 73.1 |
| RetinaNet [2] | ✓ | 74.0 |
| SSD [24] | ✓ | 71.6 |
| FCOS [3] | ✓ | 73.0 |
| DeFCN [12] | × | 73.1 |
| Ours | × | 74.8 |

| Methods | Epochs | mMR↓ | AP | Recall |
|---|---|---|---|---|
| NMS-based Detectors: | | | | |
| Faster RCNN [1] | - | 50.4 | 85.0 | 90.2 |
| RetinaNet [2] | 32 | 57.6 | 81.7 | 88.6 |
| FCOS [3] | 32 | 54.9 | 86.1 | 94.2 |
| ATSS [41] | 32 | 49.7 | 87.2 | 94.0 |
| AdaptiveNMS [9] | - | 49.7 | 84.7 | 91.3 |
| NMS-free Detectors: | | | | |
| DETR [13] | 300 | 80.1 | 72.8 | 82.7 |
| Deformable DETR [30] | 32 | 54.0 | 86.7 | 92.5 |
| OneNet [11] | 50 | 48.2 | 90.7 | 97.6 |
| DeFCN [12] | 32 | 48.9 | 89.1 | 96.5 |
| Ours | 32 | 46.0 | 90.8 | 97.7 |

| Method | NMS | R↓ | HO↓ | R + HO↓ |
|---|---|---|---|---|
| FasterRCNN + ATT [42] | ✓ | 10.3 | 45.2 | 18.2 |
| MS-CNN [43] | ✓ | 10.0 | 59.9 | 21.5 |
| RPN + BF [44] | ✓ | 9.6 | 74.4 | 24.0 |
| FasterRCNN [45] | ✓ | 9.2 | 57.6 | 20.0 |
| MGAN [46] | ✓ | 6.8 | 38.2 | 13.8 |
| FCOS [3] | ✓ | 6.9 | 34.1 | 14.2 |
| DeFCN [12] | × | 7.1 | 34.4 | 14.3 |
| Ours | × | 5.8 | 31.6 | 12.6 |

| DsFE | DeMF | AP | AP50 | AP75 | APS | APM | APL |
|---|---|---|---|---|---|---|---|
| × | × | 39.1 | 56.2 | 42.9 | 24.4 | 42.5 | 49.6 |
| × | ✓ | 41.5 | 59.6 | 45.7 | 25.1 | 44.9 | 52.0 |
| ✓ | ✓ | 42.0 | 60.0 | 46.3 | 26.3 | 45.2 | 52.8 |
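
As a worked reading of the component ablation above, the short sketch below computes the AP gain attributable to each component from the first AP column of the table. It assumes that this first column is the overall AP and that the three rows correspond to the baseline, DeMF only, and DsFE plus DeMF; the variable names are illustrative.

```python
# Overall AP taken from the ablation table, keyed by (DsFE enabled, DeMF enabled).
ablation_ap = {
    (False, False): 39.1,  # neither component
    (False, True): 41.5,   # DeMF only
    (True, True): 42.0,    # DsFE and DeMF
}

# Differences are rounded to one decimal to avoid floating-point noise.
demf_gain = round(ablation_ap[(False, True)] - ablation_ap[(False, False)], 1)
dsfe_gain = round(ablation_ap[(True, True)] - ablation_ap[(False, True)], 1)
total_gain = round(ablation_ap[(True, True)] - ablation_ap[(False, False)], 1)

print(f"DeMF over baseline: +{demf_gain} AP")
print(f"DsFE on top of DeMF: +{dsfe_gain} AP")
print(f"Full EPSF over baseline: +{total_gain} AP")
```

Under these assumptions, most of the 2.9 AP improvement comes from DeMF (2.4 AP), with DsFE adding a further 0.5 AP.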

| Methods | AP | AP50 | AP75 | APS | APM | APL |
|---|---|---|---|---|---|---|
| (a) | 41.5 | 59.6 | 45.7 | 25.1 | 44.9 | 52.0 |
| (b) | 41.6 | 59.7 | 45.7 | 25.5 | 44.8 | 51.6 |
| (c) | 41.3 | 59.4 | 45.2 | 26.2 | 44.6 | 51.5 |
| (d) | 41.4 | 59.5 | 45.3 | 24.3 | 44.5 | 52.2 |
| (e) | 42.0 | 60.0 | 46.3 | 26.3 | 45.2 | 52.8 |

| Methods | AP | AP50 | AP75 | APS | APM | APL |
|---|---|---|---|---|---|---|
| (a) | 41.4 | 59.6 | 45.7 | 25.9 | 44.6 | 52.7 |
| (b) | 42.0 | 60.0 | 46.3 | 26.3 | 45.2 | 52.8 |

| Label Assignment | AP | AP50 | AP75 | APS | APM | APL |
|---|---|---|---|---|---|---|
| one-to-9 | 41.8 | 59.7 | 46.0 | 25.6 | 45.1 | 53.2 |
| one-to-16 | 42.0 | 60.0 | 46.3 | 26.3 | 45.2 | 52.8 |
| one-to-25 | 41.7 | 59.7 | 45.9 | 25.5 | 45.0 | 52.8 |

| Methods | AP | AP50 | AP75 | APS | APM | APL |
|---|---|---|---|---|---|---|
| DeMF | 42.0 | 60.0 | 46.3 | 26.3 | 45.2 | 52.8 |
| DeMF-3MP | 41.2 | 59.2 | 45.2 | 26.5 | 44.4 | 52.8 |
| DeMF-2nd2dMP | 41.6 | 59.8 | 45.8 | 25.7 | 44.7 | 52.8 |

| Kernel Size | AP | AP50 | AP75 | APS | APM | APL |
|---|---|---|---|---|---|---|
| 1 × 1 | 41.5 | 59.3 | 45.3 | 25.2 | 45.1 | 52.2 |
| 3 × 3 | 42.0 | 60.0 | 46.3 | 26.3 | 45.2 | 52.8 |
| 5 × 5 | 41.5 | 59.7 | 45.5 | 25.0 | 45.0 | 52.5 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Song, X.; Chen, B.; Li, P.; Wang, B.; Zhang, H. End-to-End Object Detection with Enhanced Positive Sample Filter. Appl. Sci. 2023, 13, 1232. https://doi.org/10.3390/app13031232
