A Deep-Learning Approach for Identifying a Drunk Person Using Gait Recognition

Abstract

1. Introduction

- (1)

- Detection of drunkenness with image-based gait pattern recognition is attempted;

- (2)

- Time, length, and velocity of stride are proposed as the features to detect drunken gaits;

- (3)

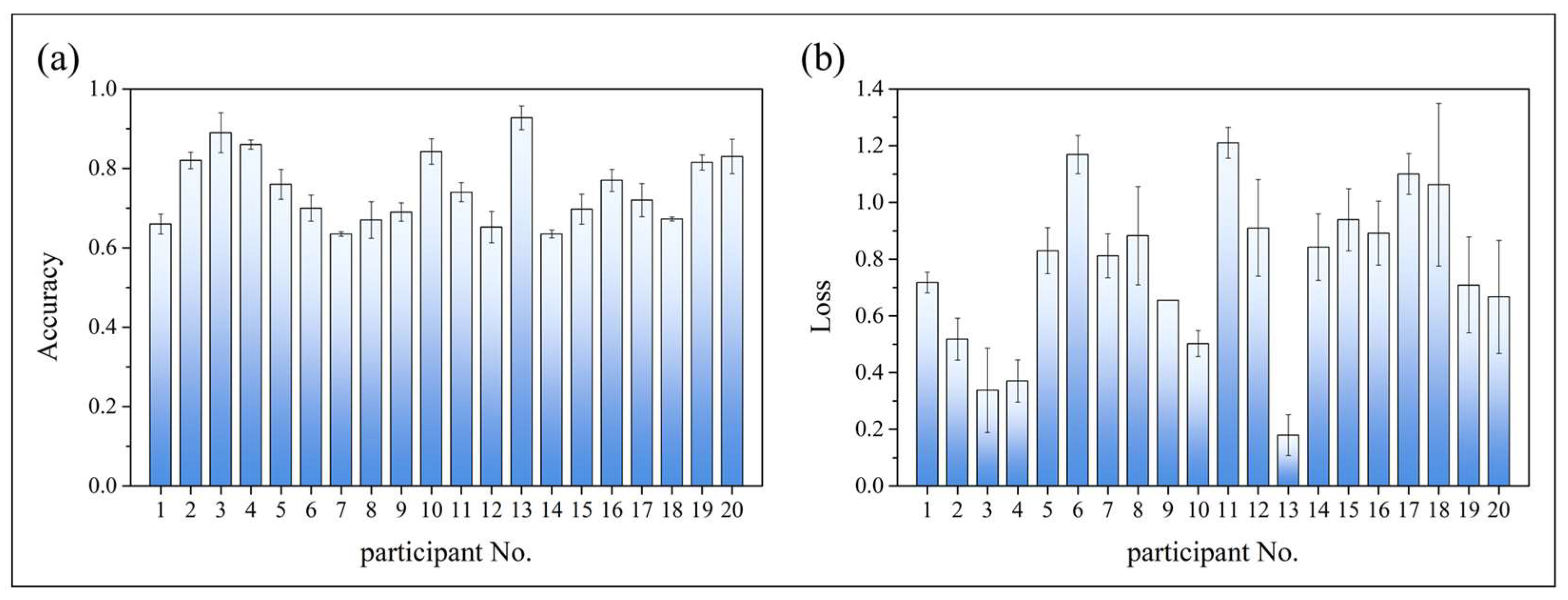

- The accuracy of the proposed algorithm shows that the average and standard deviation are 73.94% and 2.81, respectively.
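The three stride features named in the list above can be illustrated with a minimal sketch. It assumes that heel-strike events of one foot are already available as (time, position) pairs; the `HeelStrike` type, the `stride_features` helper, and the sample values are hypothetical and are not taken from the paper's pipeline.

```python
from dataclasses import dataclass


@dataclass
class HeelStrike:
    """One ground contact of the same foot (hypothetical input format)."""
    time_s: float      # timestamp of the contact, in seconds
    position_m: float  # position along the walking direction, in metres


def stride_features(strikes):
    """Return (stride time, stride length, stride velocity) per stride.

    A stride is taken here as the interval between two consecutive
    heel strikes of the same foot.
    """
    features = []
    for prev, curr in zip(strikes, strikes[1:]):
        stride_time = curr.time_s - prev.time_s
        if stride_time <= 0:
            raise ValueError("heel strikes must be in increasing time order")
        stride_length = curr.position_m - prev.position_m
        features.append((stride_time, stride_length, stride_length / stride_time))
    return features


# Made-up example values, chosen only to keep the arithmetic easy to follow.
strikes = [HeelStrike(0.0, 0.0), HeelStrike(1.0, 0.5), HeelStrike(2.5, 2.0)]
features = stride_features(strikes)
print(features)
```

In an image-based setting, the heel-strike times and positions would have to be estimated from the video frames first; that step is outside this sketch.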

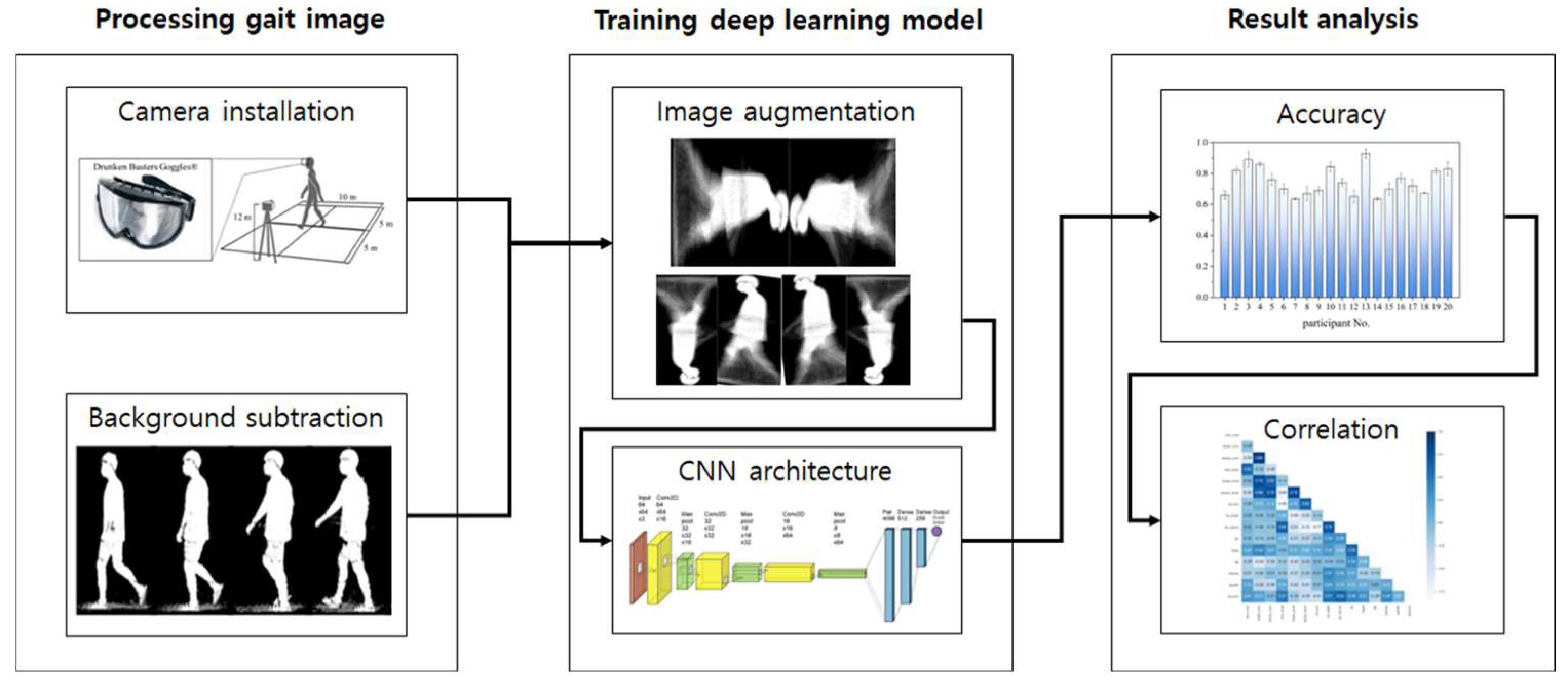

2. Materials and Methods

2.1. Experimental Design

2.1.1. Subjects

2.1.2. Setup

2.2. Gait Analysis

2.2.1. Background Subtraction

2.2.2. Gait Energy Image (GEI)
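As a rough illustration of the general GEI idea, the sketch below averages a stack of size-normalized binary silhouettes pixel by pixel, so that each output value is the fraction of frames in which that pixel belongs to the walker. It follows the commonly used definition of a gait energy image, not necessarily the exact procedure of this study; the function name and the tiny 2 × 2 frames are assumptions made for the example.

```python
def gait_energy_image(silhouettes):
    """Average binary silhouettes (lists of rows of 0/1) pixel by pixel."""
    if not silhouettes:
        raise ValueError("need at least one silhouette")
    height = len(silhouettes[0])
    width = len(silhouettes[0][0])
    totals = [[0] * width for _ in range(height)]
    for frame in silhouettes:
        # All frames are assumed to be aligned and of identical size.
        if len(frame) != height or any(len(row) != width for row in frame):
            raise ValueError("all silhouettes must have the same shape")
        for y in range(height):
            for x in range(width):
                totals[y][x] += frame[y][x]
    n = len(silhouettes)
    return [[value / n for value in row] for row in totals]


# Four toy frames standing in for one gait cycle (made-up data).
cycle = [
    [[1, 0], [1, 1]],
    [[1, 0], [0, 1]],
    [[1, 1], [0, 0]],
    [[1, 0], [0, 0]],
]
gei = gait_energy_image(cycle)
print(gei)
```

Pixels that stay inside the silhouette for the whole cycle end up near 1, while pixels swept by the moving limbs take intermediate values.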

2.2.3. Image Augmentation (IA)

3. Results

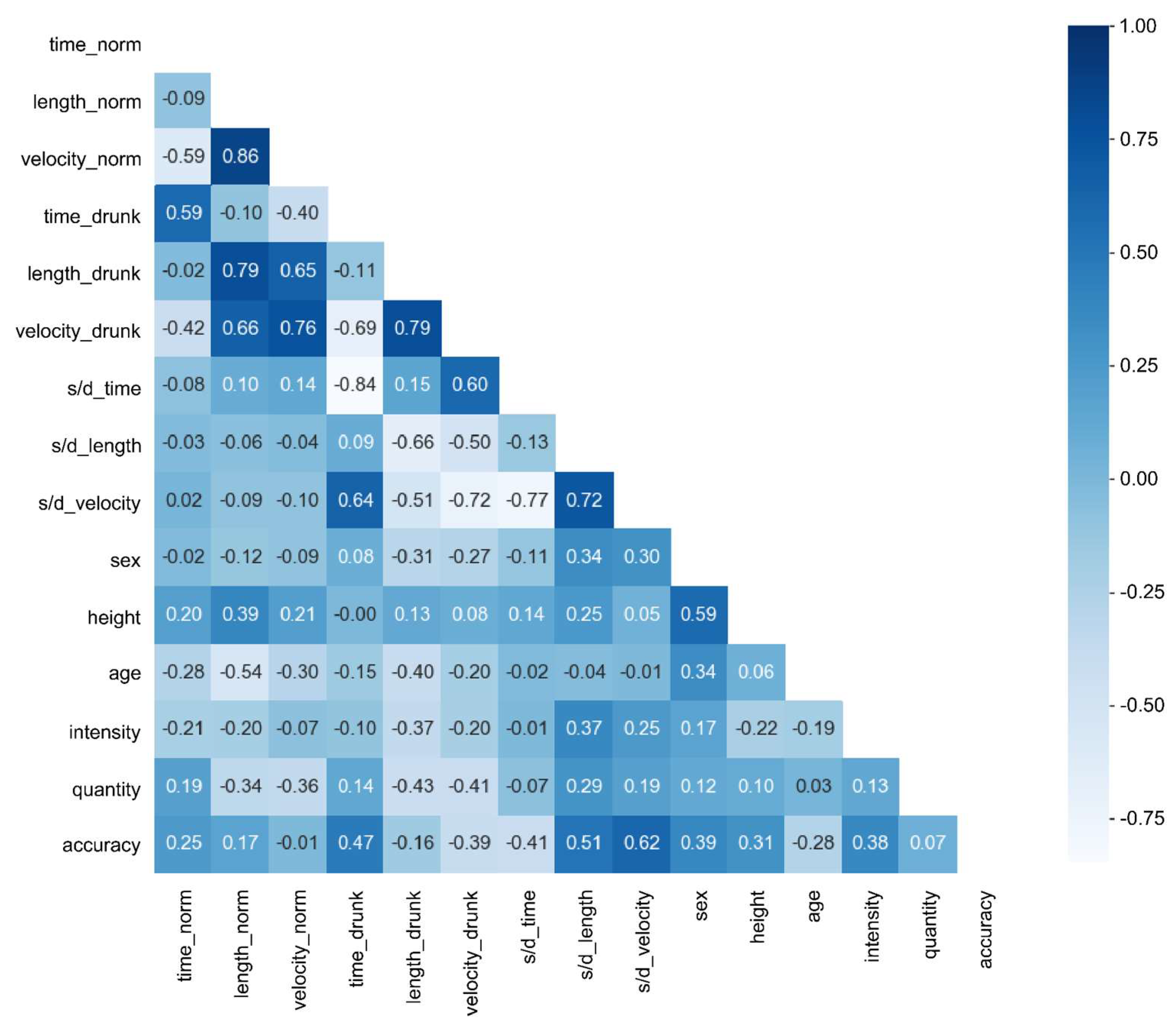

3.1. Gait Parameter

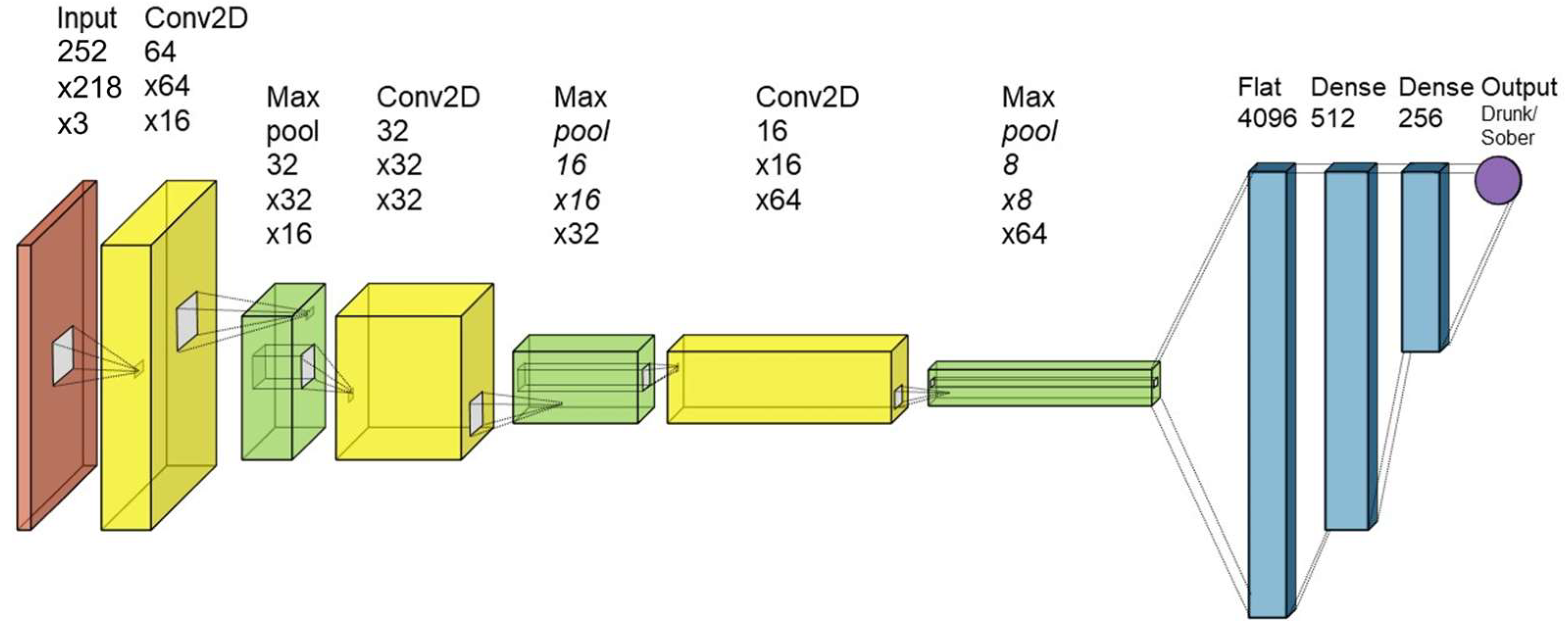

3.2. Identification

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest


| Drunk/Sober | Stride Time | Stride Length | Stride Velocity |
|---|---|---|---|
| Average | 1.13 ± 0.09 | 0.873 ± 0.06 | 0.780 ± 0.08 |
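The averages in the table can be cross-checked against the usual relation stride velocity = stride length / stride time. The sketch below is only a plausibility check: the table does not state units, so it assumes the three columns use mutually consistent units, and the mean of per-stride velocities need not equal the ratio of the mean length to the mean time.

```python
# Averages and the velocity spread as reported in the table above.
stride_time = 1.13
stride_length = 0.873
reported_velocity = 0.780
velocity_sd = 0.08

# Velocity implied by the average length and average time.
derived_velocity = stride_length / stride_time
gap = abs(derived_velocity - reported_velocity)

print(f"derived velocity: {derived_velocity:.3f}")
print(f"gap to reported:  {gap:.3f}")
print("within one reported SD:", gap < velocity_sd)
```

The derived value sits well inside one reported standard deviation of the tabulated velocity, so the three averages are mutually consistent under the stated assumption.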

| Method Category | Method | Speed | Raw Data | Attached to a Person | Usage |
|---|---|---|---|---|---|
| Sensor-based method | Accelerometer on a hand | Fast | Indirect | Required | Personal health management |
| Sensor-based method | Accelerometer on a foot | Fast | Direct | Required | Personal health management |
| Sensor-based method | IMU on a foot | Fast | Direct | Required | Personal health management |
| Vision-based method | Model-based detection | Slow | Direct | Not required | Passenger analysis |
| Vision-based method | Appearance-based detection | Fast | Direct | Not required | Passenger analysis |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Citation

Park, S.; Bae, B.; Kang, K.; Kim, H.; Nam, M.S.; Um, J.; Heo, Y.J. A Deep-Learning Approach for Identifying a Drunk Person Using Gait Recognition. Appl. Sci. 2023, 13, 1390. https://doi.org/10.3390/app13031390